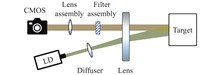

Objective: Laser atmospheric propagation is affected by the combined effects of turbulence, thermal blooming, atmospheric inhomogeneity, and other perturbations. Key beam quality metrics, such as the target spot expansion ratio, spot radius growth, centroid displacement, and encircled energy ratio, quantify beam distortion and attenuation during propagation and thus allow laser propagation performance to be evaluated systematically. Existing models fall into three categories: wave-optics models, empirical scaling-law models, and statistical analysis models. Wave-optics models are highly accurate but too computationally expensive for real-time applications. Empirical models simplify the calculation but fail under extreme conditions (e.g., strong turbulence or thermal blooming). Statistical models give rapid predictions, but their ensemble-averaged results are insensitive to transient or local variations; they also require high-quality data and lack interpretability. This study introduces a Lasso regression framework that addresses these limitations and provides real-time, accurate, and interpretable assessment of laser atmospheric propagation.

Methods: The Lasso regression modeling workflow is outlined in Fig.1. Simulation data were generated with a four-dimensional code for high-energy laser atmospheric propagation and adaptive optics compensation developed by the Anhui Institute of Optics and Fine Mechanics, Chinese Academy of Sciences (Tab.1). The code implements a multi-phase-screen propagation model, and the datasets comprise laser parameters (wavelength, power), atmospheric parameters (turbulence strength, thermal blooming distortion), and beam quality metrics. Lasso regression with L1 regularization was used to model the mechanisms of beam quality degradation; the L1 penalty drives the coefficients of weak features to zero, so dominant features are selected automatically from high-dimensional data while noise is suppressed.
Hyperparameters (regularization strength and convergence tolerance) were optimized by grid search (Fig.2, Tab.2).

Results and Discussions: The Lasso regression model overcomes the main limitations of conventional methods in real-time performance, accuracy, and feature interpretability (Tab.3). Thanks to Lasso's feature selection mechanism, the model predicts beam quality metrics accurately while remaining computationally efficient and interpretable. Compared with traditional statistical models, it is both more accurate and faster, meeting the requirements for real-time evaluation in practical engineering scenarios. Simulation analyses show robust performance under complex atmospheric conditions, including strong turbulence and thermal blooming (Fig.3-Fig.7).

Conclusions: The proposed Lasso regression model enables rapid and accurate evaluation of laser atmospheric propagation under extreme conditions, easing the trade-off between computational cost and physical fidelity. Its embedded feature selection is consistent with laser propagation physics (e.g., turbulence-driven beam wander versus thermal-blooming-induced defocus), which improves interpretability for field deployment. Future work will extend the framework to multi-wavelength and pulsed regimes and to hybrid machine learning architectures (e.g., physics-informed neural networks) to improve generalizability.
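The Lasso workflow described above (L1-penalized linear regression tuned by a grid search over the regularization strength and the convergence tolerance) can be sketched in a few dozen lines. The example below is a minimal pure-Python illustration using cyclic coordinate descent, one standard Lasso solver. The solver choice, the synthetic data, and the helper names (`lasso_cd`, `grid_search`) are assumptions for illustration, not the authors' code; the five features merely stand in for inputs such as turbulence strength or thermal blooming distortion.

```python
"""Lasso regression by cyclic coordinate descent with a grid search over the
regularization strength (alpha) and convergence tolerance (tol).
Pure-Python sketch on synthetic data; not the authors' implementation."""
import random


def soft_threshold(z, gamma):
    """Proximal operator of the L1 norm: shrink z toward zero by gamma."""
    if z > gamma:
        return z - gamma
    if z < -gamma:
        return z + gamma
    return 0.0


def lasso_cd(X, y, alpha, tol=1e-6, max_iter=1000):
    """Minimize (1/2n)*||y - Xw - b||^2 + alpha*||w||_1; returns (w, b)."""
    n, p = len(X), len(X[0])
    # Center features and target so the intercept b can be recovered afterwards.
    x_mean = [sum(row[j] for row in X) / n for j in range(p)]
    y_mean = sum(y) / n
    Xc = [[row[j] - x_mean[j] for j in range(p)] for row in X]
    resid = [v - y_mean for v in y]            # residual for w = 0
    col_sq = [sum(r[j] ** 2 for r in Xc) / n for j in range(p)]
    w = [0.0] * p
    for _ in range(max_iter):
        max_step = 0.0
        for j in range(p):
            if col_sq[j] == 0.0:
                continue                        # constant feature: keep w_j = 0
            # Correlation of feature j with the partial residual (feature j removed).
            rho = sum(Xc[i][j] * (resid[i] + Xc[i][j] * w[j]) for i in range(n)) / n
            new_wj = soft_threshold(rho, alpha) / col_sq[j]
            delta = new_wj - w[j]
            if delta != 0.0:
                for i in range(n):
                    resid[i] -= Xc[i][j] * delta
                w[j] = new_wj
            max_step = max(max_step, abs(delta))
        if max_step < tol:                      # converged: largest update below tol
            break
    b = y_mean - sum(wj * mj for wj, mj in zip(w, x_mean))
    return w, b


def mse(X, y, w, b):
    """Mean squared prediction error of the linear model (w, b)."""
    preds = [b + sum(wj * xj for wj, xj in zip(w, row)) for row in X]
    return sum((yi - pi) ** 2 for yi, pi in zip(y, preds)) / len(y)


def grid_search(X_tr, y_tr, X_val, y_val, alphas, tols):
    """Return (val_mse, alpha, tol, w, b) with the lowest validation MSE."""
    best = None
    for a in alphas:
        for t in tols:
            w, b = lasso_cd(X_tr, y_tr, a, tol=t)
            score = mse(X_val, y_val, w, b)
            if best is None or score < best[0]:
                best = (score, a, t, w, b)
    return best


# Synthetic example: only features 0 and 1 drive the target; 2-4 are noise.
random.seed(0)
X = [[random.gauss(0.0, 1.0) for _ in range(5)] for _ in range(200)]
y = [2.0 * r[0] + 0.5 * r[1] + random.gauss(0.0, 0.1) for r in X]
score, alpha, tol, w, b = grid_search(X[:150], y[:150], X[150:], y[150:],
                                      alphas=[0.001, 0.01, 0.1], tols=[1e-4, 1e-6])
w_sparse, _ = lasso_cd(X, y, alpha=0.1)   # stronger penalty zeroes the noise features
```

With the larger penalty (`alpha=0.1`), the coefficients of the three noise features are driven exactly to zero while the two informative ones are only shrunk, which is the feature-selection behaviour the abstract relies on. In practice a library implementation (for example scikit-learn's `Lasso` combined with `GridSearchCV`) would normally replace this hand-written solver.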

Objective: Underwater fluorescence imaging is widely used in environmental monitoring, marine biological research, and marine energy exploration. However, absorption and scattering of light in water, together with other complex optical factors, often leave underwater fluorescence images with low contrast and noise interference. Because these effects seriously degrade image quality and subsequent analysis, techniques for enhancing and restoring underwater fluorescence images are urgently needed.

Methods: The underwater fluorescence imaging detector consists mainly of an optical detection unit, a power supply and drive unit, and a control and data processing unit (Fig.3). The effectiveness of the calibration method was evaluated by comparing the fluorescence signals and image signal-to-noise ratios (SNR) obtained with and without calibration (Fig.5). The detection limit of the device was also measured to evaluate its performance in aquatic environments (Fig.6).

Results and Discussions: After correction, the fluorescence signal intensity distribution becomes more uniform and the noise is significantly reduced (Fig.7), and the image SNR improves at the various exposure times tested (Fig.8). In the underwater test, the fluorescence signal of the Rhodamine B (RhB) solution is stronger in the corrected image than in the uncorrected one, and the contrast between target and background is markedly improved (Fig.9). After correction, the fluorescence signal map clearly shows a gradient distribution (Fig.10).
The underwater fluorescence imaging device developed in this work performs well, with a quantification range from 130 µg/L to 910 µg/L and a detection limit as low as 40 µg/L (Fig.11).

Conclusions: The experimental results show that the image correction method based on standardized coefficients not only eliminates fluorescence variations caused by non-uniform excitation intensity but also reduces the noise produced by light attenuation in water, thereby improving image SNR. In the underwater test, the corrected RhB fluorescence signal was enhanced, the contrast was improved, and the fluorescence images reflected changes in concentration. With a detection limit of 40 µg/L and a quantification range of 130-910 µg/L, the device provides high-quality images for underwater environmental monitoring and biological research. In future work, the signal processing algorithm could be further optimized using an artificial intelligence training set to lower the detection limit and widen the linear range. With an optimized correction algorithm, the detector is expected to find wide use in underwater imaging.
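The abstract does not spell out the correction formula, so the following is only a minimal sketch under stated assumptions: the standardized coefficient of each pixel is taken as the mean of a reference image of a uniform fluorescent standard divided by that pixel's value, SNR is taken as mean over standard deviation, and the detection limit uses the common convention of three times the blank standard deviation divided by the calibration slope. The function names and the small sample arrays are hypothetical.

```python
"""Flat-field style correction with standardized coefficients, image SNR, and a
3-sigma detection limit. Pure-Python sketch with hypothetical data; the exact
definitions used by the authors may differ."""
from statistics import mean, pstdev, stdev


def coefficient_map(reference):
    """Standardized coefficients k(x, y) = mean(ref) / ref(x, y).

    `reference` is an image (list of rows) of a spatially uniform fluorescent
    standard, so k compensates for non-uniform excitation intensity."""
    m = mean(v for row in reference for v in row)
    return [[m / v for v in row] for row in reference]


def apply_correction(raw, coeffs):
    """Multiply each raw pixel by its standardized coefficient."""
    return [[r * k for r, k in zip(r_row, k_row)] for r_row, k_row in zip(raw, coeffs)]


def image_snr(image):
    """SNR taken as mean / population standard deviation over all pixels."""
    flat = [v for row in image for v in row]
    return mean(flat) / pstdev(flat)


def calibration_slope(concentrations, signals):
    """Least-squares slope of signal versus concentration."""
    cx, cy = mean(concentrations), mean(signals)
    num = sum((x - cx) * (y - cy) for x, y in zip(concentrations, signals))
    den = sum((x - cx) ** 2 for x in concentrations)
    return num / den


def detection_limit(blank_signals, slope):
    """LOD = 3 * sigma_blank / slope (a common 3-sigma convention)."""
    return 3.0 * stdev(blank_signals) / slope


# Hypothetical 2x3 excitation profile: brighter in the centre column.
reference = [[0.6, 1.0, 0.7], [0.5, 0.9, 0.6]]
raw = [[2.0 * v for v in row] for row in reference]   # uniform target, uneven light
corrected = apply_correction(raw, coefficient_map(reference))
```

Because the raw image here is just the excitation profile scaled by a uniform concentration, the corrected image comes out flat, which is what the correction is meant to achieve for a uniform target.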

Objective: As the impact of rising atmospheric carbon dioxide (CO2) concentrations on the global climate system intensifies, effective CO2 monitoring has become critical. Satellite remote sensing is the prevailing approach to CO2 monitoring, and the key to successful retrieval is constructing the forward model. Traditional forward modeling software can simulate atmospheric radiative transfer but suffers from low resolution, poor computational efficiency, neglect of scattering effects, and an inability to incorporate real-time measurement data into simulations. To address these issues, this study uses the line-by-line (LBL) method and Mie theory to calculate the spectral properties of various atmospheric components and to select suitable bands for CO2 retrieval. A forward model for CO2 radiative transfer was then developed based on the Discrete Ordinate Radiative Transfer (DISORT) method. The model accounts for multiple scattering, achieves high resolution, and can incorporate real-time environmental observations into radiative transfer simulations. Because boundary conditions and physical parameters are uncertain and the sensitivity to environmental factors is unknown, the model was used to analyze how different environmental parameters affect the spectral radiance in CO2-sensitive bands. The findings provide a theoretical basis for developing atmospheric CO2 retrieval algorithms, selecting environmentally sensitive parameters, and analyzing retrieval errors.

Methods: Accurate gas absorption coefficients are first calculated with the LBL method, and aerosol spectral parameters are then computed with Mie theory.
High-resolution solar spectra, underlying surface types, and atmospheric models are selected, and their results are incorporated into the atmospheric radiative transfer equation. The equation is then solved with DISORT to obtain radiance for arbitrary solar zenith and azimuth angles. The forward simulation results are convolved with the instrument response function to produce the final forward model output. After the CO2-sensitive bands are identified, the simulated results are compared with GOSAT-2 satellite observations to validate the model. Finally, the model is used to analyze how environmental parameters, such as surface type, solar zenith angle, aerosol type, and aerosol optical depth (AOD), affect the spectral radiance in CO2-sensitive bands.

Results and Discussions: CO2 absorption in the 6300–6400 cm-1 band is moderate and minimally affected by other gases, making this band highly suitable for CO2 retrieval. Within this band, the normalized simulation results follow the same wavelength-dependent trend as the normalized observations in the GOSAT-2 L1B product (Fig.5), which validates the model. Sensitivity analysis shows that higher surface albedo increases reflected radiance, so radiance varies markedly among surface types (Fig.6). For a surface albedo difference of 0.14, the average rate of relative radiance change differs by 123.29% (Tab.2). As the solar zenith angle increases, the optical path length grows and radiance decays (Fig.7); the relative radiance change shows a bimodal pattern (Fig.8). Aerosols significantly affect radiance, and the effect depends on their composition.
Urban aerosols, which contain strongly absorbing components, cause substantial radiance attenuation (Fig.9), with an average relative radiance change of -37.66% (Tab.3). Increasing AOD affects radiance differently for different aerosol types (Fig.10). Radiance is highly sensitive to urban aerosols and drops rapidly as AOD increases. In contrast, maritime aerosols, which are strongly scattering, slightly enhance radiance. The average rate of relative radiance change for maritime and rural aerosols stays within ±5% (Fig.11).

Conclusions: A high-resolution forward model was developed to simulate the spectral radiance of CO2-sensitive bands, incorporating scattering effects and real-time environmental observations. The results show that the choice of environmental parameters strongly affects forward modeling for satellite-based regional retrieval of atmospheric CO2. For retrieval, surface albedo data from MODIS observations that are spatiotemporally matched with the carbon-monitoring satellite are recommended. In addition, high signal-to-noise ratio observations at smaller solar zenith angles should be used to obtain more accurate retrievals. Multiple scattering and absorption by aerosols cannot be neglected, particularly when retrieving CO2 concentrations over urban areas; to minimize aerosol-induced uncertainty, data with lower AOD should be prioritized. These findings provide a theoretical foundation and a model basis for developing atmospheric CO2 retrieval algorithms, selecting environmentally sensitive parameters, and analyzing retrieval errors.
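Two steps of the forward-model pipeline lend themselves to a short illustration: convolving the high-resolution radiance spectrum with the instrument response, and computing the average relative radiance change used in the sensitivity analysis. The sketch below assumes a Gaussian instrument line shape and a simple per-channel definition of relative change; both are stand-ins (GOSAT-2 has its own measured instrument response, and the paper may average differently), and the synthetic spectrum is hypothetical rather than LBL/DISORT output.

```python
"""Gaussian instrument line shape (ILS) convolution of a high-resolution
spectrum, plus the mean relative radiance change. A Gaussian ILS is assumed as
a stand-in; a real instrument has its own measured ILS."""
import math


def gaussian_ils(fwhm, step):
    """Gaussian kernel sampled every `step` (same units as fwhm), truncated at
    +/- 3 FWHM and normalized to unit sum."""
    sigma = fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))
    half = int(math.ceil(3.0 * fwhm / step))
    kernel = [math.exp(-0.5 * (k * step / sigma) ** 2) for k in range(-half, half + 1)]
    total = sum(kernel)
    return [v / total for v in kernel]


def convolve_spectrum(radiance, kernel):
    """Convolve a uniformly sampled spectrum with a symmetric kernel; near the
    edges the kernel is renormalized over the samples that exist."""
    half = len(kernel) // 2
    n = len(radiance)
    out = []
    for i in range(n):
        acc = wsum = 0.0
        for k, w in enumerate(kernel):
            j = i + k - half
            if 0 <= j < n:
                acc += w * radiance[j]
                wsum += w
        out.append(acc / wsum)
    return out


def mean_relative_change(perturbed, baseline):
    """Average over channels of (perturbed - baseline) / baseline, in percent."""
    return 100.0 * sum((p - b) / b for p, b in zip(perturbed, baseline)) / len(baseline)


# Hypothetical high-resolution spectrum on a 0.01 cm-1 grid near 6350 cm-1:
# unit continuum with one Lorentzian-shaped absorption line (Beer-Lambert).
step = 0.01
grid = [6349.0 + step * i for i in range(201)]
tau = [1.5 * 0.05 ** 2 / ((nu - 6350.0) ** 2 + 0.05 ** 2) for nu in grid]
high_res = [math.exp(-t) for t in tau]
low_res = convolve_spectrum(high_res, gaussian_ils(fwhm=0.2, step=step))
```

Convolution leaves a flat continuum unchanged but makes the absorption line shallower and broader, which is why simulated spectra must pass through the instrument response before they are compared with satellite radiances.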

Objective: Molybdenum telluride (MoTe2), a transition metal dichalcogenide, has a narrow band gap (0.9 eV) and high electron mobility, giving it good application prospects in infrared optoelectronics. MoTe2 has a two-dimensional layered crystal structure whose layers are held together by van der Waals forces, and liquid phase exfoliation is a reliable way to prepare MoTe2 quantum dots and nanosheets. The solvent used in liquid phase exfoliation plays an important role in the process, yet studies of how the solvent affects exfoliation are rare. It is therefore worthwhile to identify the best liquid phase exfoliation solvent for preparing MoTe2 quantum dots.

Methods: MoTe2 powder was exfoliated by ultrasonic-assisted liquid phase exfoliation in six different solvents (1-methyl-2-pyrrolidone, 1-vinyl-2-pyrrolidone, acetone, ethanol, dimethylformamide, and isopropanol). Each mixture was sonicated at 210 W for 6 h and centrifuged at 3000 r/min for 10 min, and the supernatant was collected for comparison. The size and morphology of the six samples were observed by transmission electron microscopy. The absorbance of the quantum dot solutions was measured with a UV-Vis-NIR absorption spectrometer, and their turbidity with a turbidity meter. The suitable exfoliation solvent for MoTe2 quantum dots was determined using Hansen solubility theory.

Results and Discussions: Transmission electron microscopy (Fig.2) shows that the quantum dots prepared in 1-vinyl-2-pyrrolidone are the smallest and most uniform in size. The FTIR spectra and XRD patterns (Fig.3) show that the composition of the quantum dots does not change during sonication.
The Hansen parameter diagram (Fig.6) shows that 1-vinyl-2-pyrrolidone has the smallest Ra value (Hansen distance to MoTe2) and should therefore, in theory, be the most suitable exfoliation solvent. The statistical data and calculation results in Tab.1 and Tab.2 confirm that 1-vinyl-2-pyrrolidone is indeed the suitable exfoliation solvent for MoTe2.

Conclusions: A comparison of the liquid phase exfoliation of MoTe2 in six solvents shows that the choice of solvent directly affects the exfoliation. Combining the Hansen solubility calculations with the turbidity measurements, 1-vinyl-2-pyrrolidone was found to be the most suitable solvent for liquid phase exfoliation of MoTe2.
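The Hansen distance Ra used to rank the solvents is the standard weighted distance between Hansen solubility parameters, Ra² = 4(δD1 − δD2)² + (δP1 − δP2)² + (δH1 − δH2)², where a smaller Ra indicates a better solvent match. A minimal sketch of the ranking step follows; the parameter values are placeholders, not the MoTe2 or solvent values used in the paper.

```python
"""Hansen distance Ra between a solvent and a solute from their Hansen
solubility parameters (dD, dP, dH) in MPa^0.5. The solvent and solute values
below are placeholders, not measured data."""
import math


def hansen_distance(solvent, solute):
    """Ra = sqrt(4*(dD1 - dD2)^2 + (dP1 - dP2)^2 + (dH1 - dH2)^2)."""
    dd1, dp1, dh1 = solvent
    dd2, dp2, dh2 = solute
    return math.sqrt(4.0 * (dd1 - dd2) ** 2 + (dp1 - dp2) ** 2 + (dh1 - dh2) ** 2)


def rank_solvents(solvents, solute):
    """Solvent names sorted from smallest to largest Ra (best match first)."""
    return sorted(solvents, key=lambda name: hansen_distance(solvents[name], solute))


# Hypothetical parameters (dD, dP, dH) for illustration only.
solute = (18.0, 9.0, 7.0)
solvents = {"solvent A": (17.5, 9.5, 6.5),
            "solvent B": (15.5, 10.0, 7.0),
            "solvent C": (15.8, 8.8, 19.4)}
ranking = rank_solvents(solvents, solute)
```

The factor of 4 on the dispersion term is part of the Hansen definition; it makes a mismatch in δD count more heavily than an equal mismatch in δP or δH.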

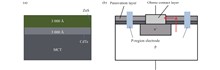

Objective: With the continuous development of mercury cadmium telluride (HgCdTe) infrared detectors, their operating wavelength has progressively shifted from mid-wave to long-wave and very long-wave, placing higher demands on device resolution, reliability, and sensitivity. Because HgCdTe is a narrow-bandgap semiconductor, the surface of HgCdTe infrared focal plane detector chips is susceptible to fixed charges introduced by contamination or dangling bonds, which can bend the bands by one to several times the bandgap. This causes accumulation, depletion, or inversion at the HgCdTe surface, which increases surface leakage current and severely degrades device performance. In addition, the high activity of Hg atoms in the material and the relatively low Hg-Te bond energy make Hg prone to escape, leaving a Te-rich surface that further degrades performance. Surface passivation is therefore a critical step in fabricating HgCdTe photovoltaic infrared focal plane detectors.

Methods: N-type Hg1-xCdxTe thin films were grown on cadmium zinc telluride (CdZnTe) substrates by horizontal liquid phase epitaxy. A CdTe/ZnS passivation layer was deposited by magnetron sputtering; the structure is shown schematically in Fig.1(a). After passivation film growth, the samples were diced with a wafer saw and subjected to interdiffusion annealing under different conditions. The entire annealing process was carried out in a nitrogen atmosphere, with temperature fluctuations kept within 4 ℃ after the set temperature was reached. After annealing, the samples were cooled to room temperature over 25–35 minutes.
Dry etching was then used to open contact holes, and metal electrodes were deposited to complete the long-wave HgCdTe devices, as illustrated in Fig.1(b).

Results and Discussions: The improved three-stage annealing process was applied to long-wave HgCdTe unit devices, and Fig.8 presents the corresponding I-V test results. Under reverse bias, the long-wave devices fabricated with the high-temperature three-stage annealing process outperform those prepared with the traditional annealing process, particularly at reverse bias voltages above 150 mV. This is mainly because the high-temperature three-stage annealing process forms a passivation layer with a higher-quality crystal structure and a thicker high-composition transition layer. As a result, the fixed charge density and defect state density at the material surface are significantly reduced, which lowers the leakage current of the long-wave devices.

Conclusions: The quality of the magnetron-sputtered surface passivation film and the thickness of the high-composition transition layer are decisive for the performance of HgCdTe infrared devices. To resolve the conflict in traditional annealing processes between the thickness of the high-composition transition layer and the minority carrier lifetime of the material, we propose a three-stage annealing process. This process yields high-quality CdTe passivation films and a thicker high-composition transition layer while significantly improving the minority carrier lifetime, thereby jointly optimizing passivation film quality, transition layer thickness, and minority carrier lifetime.
Experimental results show that long-wave infrared devices fabricated with the three-stage annealing process have significantly lower reverse leakage current in I-V tests, especially at high reverse bias, which improves device performance. In future work, we will further optimize the annealing parameters, explore finer temperature and time control strategies, and extend this process to long-wave HgCdTe infrared focal plane devices to verify its universality and scalability.
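One way to see why a higher-temperature stage thickens the interdiffusion (high-composition transition) layer is the textbook Fickian estimate: for a diffusivity D(T) = D0·exp(−Ea/kBT) that changes stepwise between stages, the characteristic diffusion length scales as √(Σ D(Ti)·ti). The sketch below assumes a composition-independent diffusivity and uses hypothetical D0, Ea and stage schedules; it does not model the minority carrier lifetime trade-off or reproduce the paper's actual annealing conditions.

```python
"""Characteristic interdiffusion length for a multi-stage anneal, assuming
Fickian diffusion with a composition-independent, Arrhenius diffusivity.
D0, Ea and the stage schedules below are hypothetical, not the paper's values."""
import math

K_B_EV = 8.617333262e-5  # Boltzmann constant in eV/K


def diffusivity(d0, ea_ev, temp_c):
    """Arrhenius diffusivity D = D0 * exp(-Ea / (k_B * T)), T in deg C."""
    return d0 * math.exp(-ea_ev / (K_B_EV * (temp_c + 273.15)))


def diffusion_length(stages, d0, ea_ev):
    """sqrt(sum_i D(T_i) * t_i) for stages given as (temp_c, time_s).

    For a spatially constant D that changes stepwise in time, the profile depends
    only on the accumulated D*t, so the stage contributions simply add."""
    dt = sum(diffusivity(d0, ea_ev, temp_c) * time_s for temp_c, time_s in stages)
    return math.sqrt(dt)


D0, EA = 1.0, 1.5  # hypothetical: D0 in cm^2/s, Ea in eV
single = [(250.0, 7200.0)]                              # one low-temperature stage
three_stage = [(250.0, 3600.0), (350.0, 1800.0), (250.0, 3600.0)]
l_single_um = diffusion_length(single, D0, EA) * 1e4    # cm -> um
l_three_um = diffusion_length(three_stage, D0, EA) * 1e4
```

Because D grows exponentially with temperature, even a short high-temperature stage can dominate the accumulated D·t, while the lower-temperature stages leave room to manage other material properties.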

Objective: Thermal protection is critical for keeping infrared cameras stable under complex operating conditions such as high temperature and high humidity. The elevated temperature and humidity in the drying process seriously threaten camera performance and lifespan, potentially deforming the imaging window, damaging electronic components, and degrading temperature measurement accuracy. To address these issues, a thermal shield combining thermal insulation with active cooling is needed to keep the camera within its acceptable operating temperature range (10 ℃ to 50 ℃). Such a device must provide efficient heat dissipation, reliable sealing, and a compact design. Conventional thermal protection that relies solely on insulation or basic cooling cannot meet these demands. This study therefore proposes a thermal protection device for an infrared thermal imager that integrates passive insulation with active cooling, enabling operation in high-temperature, high-humidity environments while improving the camera's temperature measurement performance and operational stability.

Methods: A thermal protection structure for an infrared thermal imager is proposed (Fig.3). The design combines passive thermal insulation, provided by a PTFE housing, with active heat transfer enhancement, provided by a diffuser at the cooling air inlet (Fig.4). Numerical simulations were performed to determine the optimal structural parameters of the diffuser (Fig.5). Flow and heat transfer within the structure were analyzed by computational fluid dynamics (CFD) using the Realizable k-ε turbulence model. A grid-independence study (Fig.6) was conducted to ensure that the numerical results were unaffected by grid density.
To verify the numerical results, experimental tests were carried out in the high-temperature drying section (Fig.14).

Results and Discussions: Increasing the guide vane angle from 35° to 55° significantly improved convective heat transfer and the temperature distribution (Fig.7). Cooling was best at 55°, where the average and maximum temperatures of the thermal imaging camera were 36.55 ℃ and 36.7 ℃, respectively (Fig.8). With a horizontal diffusion circle diameter D1 = 40 mm, the average temperature of the infrared thermal imager fell further to a minimum of 33.65 ℃ (Fig.10), and the convective heat transfer coefficient reached its maximum of 78.07 W·m-2·K-1 (Tab.5), giving the best airflow distribution and temperature uniformity. With the guide vane angle and diffusion circle diameter fixed, a guide vane length L = 10 mm gave the lowest imager temperatures, with an average of 33.65 ℃ and a maximum of 33.75 ℃ (Fig.12). At this length the convective heat transfer coefficient again reached its maximum of 78.07 W·m-2·K-1 (Tab.6), indicating optimal airflow disturbance and heat transfer efficiency. Longer guide vanes, however, reduced cooling performance and caused the temperature to rebound. Because the cooling air temperature varies with seasonal weather, a higher inlet velocity is needed in hot conditions to enhance convective heat transfer. At an inlet air temperature of 38 ℃, increasing the inlet velocity from 40 m/s to 140 m/s reduced the average camera temperature from nearly 50 ℃ to 42.35 ℃ (Fig.13), which meets the operating requirements. The effect of the thermal gradient on heat conduction was also analyzed (Tab.7): the maximum deformation was 0.11 mm and the peak thermal stress was 5.52 MPa, both within acceptable limits.
Numerical simulations were run under the same boundary conditions as the experiments, and the two sets of results were compared. Fig.15 compares the experimental and simulated results at different inlet air temperatures. The simulations agree closely with the measurements: the maximum relative error is 5.72% for peak temperatures at the measurement points and 5.92% for average temperatures.

Conclusions: A forced-convection thermal protection structure was designed that integrates passive heat insulation with active convective cooling. The passive design uses a shell of low-thermal-conductivity material to minimize heat transfer in high-temperature environments. For active cooling, high-pressure gas serves as the cooling source, and a circular diffuser with an optimized air inlet structure enhances convective heat transfer. Both numerical simulations and experimental tests confirm that the structure can reliably protect an infrared thermal imager at ambient temperatures up to 130 ℃. The optimized design distributes the cooling gas uniformly, lowering the maximum camera temperature from 42.6 ℃ to 33.75 ℃, a 20.78% reduction. At an extreme inlet air temperature of 38 ℃, increasing the inlet velocity to 140 m/s reduces the camera temperature to 42.35 ℃, meeting the operating requirements. The experimental results agree well with the numerical simulations, confirming the effectiveness of the thermal protection structure. This study provides a useful reference for the thermal protection of optical instruments in high-temperature industrial settings.
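The figures of merit quoted above (percentage temperature reduction, maximum relative error between simulation and experiment, convective heat transfer coefficient, grid independence) follow from simple formulas. The helpers below are a sketch under stated assumptions: h is taken from Newton's law of cooling, relative error is taken against the measured value, and the 1% grid-independence threshold and sample numbers are hypothetical rather than the criteria used in the study.

```python
"""Small helpers for post-processing thermal results: percentage reduction,
maximum relative error between simulation and experiment, a convective heat
transfer coefficient from Newton's law of cooling, and a grid-independence
check. The threshold and sample numbers are hypothetical."""


def percent_reduction(before, after):
    """Relative reduction from `before` to `after`, in percent."""
    return 100.0 * (before - after) / before


def max_relative_error(simulated, measured):
    """Largest |sim - exp| / |exp| over all measurement points, in percent."""
    return max(100.0 * abs(s - m) / abs(m) for s, m in zip(simulated, measured))


def convective_coefficient(heat_flux, wall_temp, fluid_temp):
    """h = q'' / (T_wall - T_fluid), in W m^-2 K^-1 for q'' in W/m^2."""
    return heat_flux / (wall_temp - fluid_temp)


def grid_independent(values, threshold_pct=1.0):
    """True if the monitored quantity changes by less than threshold_pct
    between the two finest meshes (values ordered coarse -> fine)."""
    coarse, fine = values[-2], values[-1]
    return 100.0 * abs(fine - coarse) / abs(fine) < threshold_pct


reduction = percent_reduction(42.6, 33.75)   # about 20.8 %
```

Temperature differences are the same in ℃ and K, so the helpers can take ℃ inputs directly.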

Objective: In service, the black high-emissivity coating on the surface of porous materials develops micron-scale surface cracks under thermal stress. To support later studies of how these crack defects evolve and propagate under thermal stress, this work studies visual inspection of the morphology of low-contrast cracks on the coating surface and proposes a detection method that combines optical optimization with deep learning. A light-source-excited monocular vision system is designed, and the illumination mode and incident angle are first optimized at the system design level to enhance the local contrast of the captured crack images. An algorithm for enhancing crack contrast in low-contrast images is then proposed. Finally, an improved U-Net is built that incorporates attention modules, depthwise over-parameterized convolution, and activation functions to strengthen the extraction of low-contrast crack features. Experimental results show that the local contrast of the acquired images is highest in high-illumination mode at an incident angle of 30°. Preprocessing raises the image contrast from 10.507 to 42.662, which effectively suppresses the influence of background noise on crack information in low-contrast images and better highlights crack morphology. For crack segmentation, the improved network achieves a Dice coefficient of 0.862, an SSIM of 0.892, and an accuracy (Acc) of 0.901.
Cracks wider than 9.6 μm are detected at a rate above 90%, and their shape and direction are clearly recognizable.

Methods: To identify the shape and direction of microcracks in low-contrast images and to define the minimum detectable width, a monocular vision acquisition platform was built, and high-quality images were collected by optimizing the lighting mode and the light incidence angle (Fig.1) to improve the contrast between microcracks and the substrate background. The images were then preprocessed to reduce the influence of the background on crack information and to further increase crack-background contrast (Fig.4). In the improved U-Net model (Fig.11), an attention mechanism is added at the skip connections between the down-sampling and up-sampling paths to reduce the influence of image noise and strengthen key feature extraction, and depthwise over-parameterized convolution is used to increase the number of convolution kernels so that more features can be extracted. This improves the representation ability and segmentation accuracy of the model, enabling segmentation and detection of cracks on the coating surface.

Results and Discussions: The vision system designed in this work yields high-quality images. Preprocessing raised the contrast from 10.507 in the original image to 42.662, roughly a fourfold increase, making the cracks stand out more clearly from the background. The Att-Do-U-net algorithm, which combines the attention mechanism with depthwise over-parameterized convolution, performs best on all indicators, reaching a Dice coefficient of 0.862, an SSIM of 0.892, and an accuracy of 0.901.
In terms of segmentation results, the segmented cracks have a more complete vein structure, and small crack branches are also well segmented (Fig.16). The segmented lines are continuous and smooth, whereas the other methods produce broken lines and breakpoints. Undetected cracks are analyzed in Fig.18. For widths between 4.8 and 9.6 μm the detection rate is below 25%, between 9.6 and 14.4 μm it exceeds 90%, and above 14.4 μm it reaches 100%; the minimum detectable crack width is therefore defined as 9.6 μm.

Conclusions: A light-source-excited monocular vision system is constructed in this paper. By optimizing the lighting mode and the image preprocessing algorithm and by improving the U-Net, accurate detection of low-contrast microcracks is achieved. The preprocessing method reduces noise interference and preserves complete crack information. The improved U-Net segments low-contrast crack images better than the original U-Net: the segmented cracks are more complete and their morphological boundaries are smoother, with an average SSIM of 0.892 and a Dice coefficient of 0.862. The minimum recognizable crack width is 9.6 μm, so the current method is limited for cracks narrower than 10 μm. In future work, super-resolution reconstruction could be introduced to recover the crack skeleton from low-resolution images and detect narrower cracks.
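The segmentation and detection metrics used above can be computed from binary masks as follows. This sketch assumes masks flattened to 0/1 lists and crack widths already measured; the function names, the 19.2 μm upper bin edge, and the sample data are hypothetical, and SSIM is omitted because it needs a windowed image implementation.

```python
"""Segmentation metrics for binary crack masks (flattened to 0/1 lists) and
detection rate per crack-width bin. SSIM is not reproduced here. Sample data
below are hypothetical."""


def dice(pred, truth):
    """Dice = 2|P & T| / (|P| + |T|); defined as 1.0 when both masks are empty."""
    inter = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(pred) + sum(truth)
    return 1.0 if total == 0 else 2.0 * inter / total


def pixel_accuracy(pred, truth):
    """Fraction of pixels whose predicted label matches the ground truth."""
    return sum(1 for p, t in zip(pred, truth) if p == t) / len(truth)


def detection_rate_by_width(cracks, edges):
    """cracks: list of (width_um, detected); edges: ascending bin edges in um.
    Returns {(lo, hi): rate}, with None for empty bins."""
    rates = {}
    for lo, hi in zip(edges, edges[1:]):
        hits = [det for width, det in cracks if lo <= width < hi]
        rates[(lo, hi)] = sum(hits) / len(hits) if hits else None
    return rates


pred = [1, 1, 0, 0, 1, 0]
truth = [1, 0, 1, 0, 1, 0]
cracks = [(5.0, False), (7.5, True), (10.2, True), (12.0, True), (16.0, True)]
rates = detection_rate_by_width(cracks, [4.8, 9.6, 14.4, 19.2])
```

Dice rewards overlap on the thin crack pixels only, whereas pixel accuracy is dominated by the large background area, which is why both are usually reported together for crack segmentation.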

Objective: As applications of infrared focal plane detectors develop, demand is growing for high-frame-rate imaging, for example in detecting and tracking fast small targets and in tracking and imaging unmanned aircraft swarms against complex backgrounds. The infrared dynamic vision sensor is a bio-inspired sensor that differs from a traditional infrared sensor: a traditional sensor integrates photocurrent at a fixed frame rate to form a grayscale image, whereas a dynamic vision sensor measures the brightness change of each pixel asynchronously and outputs the position, time, polarity, and other attributes of changing regions as an event stream. This removes background interference and keeps only information about moving objects, greatly reducing data redundancy and transmission load. Compared with a traditional infrared focal plane readout circuit, the readout circuit of an infrared dynamic vision sensor offers high temporal resolution, high dynamic range, and low power consumption. This paper therefore designs a digital processing circuit for infrared event detector devices and a corresponding visualization method.

Methods: The digital circuit uses round-robin arbitration instead of an arbitration tree, which reduces arbitration delay (Fig.4). In addition, only rows are arbitrated, and the information of the whole row is output through a compression algorithm, which avoids the delay of column arbitration and reduces the redundancy of timestamp information (Fig.5).
Two RAM IP cores accumulate the event data over time so that data can be written and read, and the result is finally transmitted over HDMI (Fig.7).

Results and Discussions: With round-robin arbitration, the arbitration result is output with a delay of 1 clock, a significant improvement over the 2-9 clock delay of the arbitration tree. With row-only arbitration and compressed output of whole-row information, the equivalent frame rate is FR = 1085 Hz and the data throughput is EPS = 0.36 G events/s, comparable to the parameters of visible-band products (Tab.1).

Conclusions: To meet the high-frame-rate readout requirements of infrared focal plane arrays, this paper proposes a digital processing method for differential infrared dynamic vision sensors. Round-robin arbitration replaces the arbitration tree to reduce arbitration delay, and row-only arbitration combined with compressed data output reduces the uncertainty and delay caused by column arbitration. The design is verified on a Xilinx FPGA development board, and a corresponding visualization method is proposed. The 40-bit timestamp is not used in visualization but is retained for subsequent digital image processing of the event stream. This work lays a technical foundation for the development, testing, and data processing of future infrared dynamic vision sensors.
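The round-robin principle can be illustrated with a small behavioral model: the next grant goes to the first requesting row after the previously granted one, so every row is served in turn without the multi-level comparisons of an arbitration tree. In hardware this search is typically built as a rotating priority encoder evaluated within one clock; the Python model below only reproduces the grant order, and its function names are hypothetical rather than taken from the paper's RTL.

```python
"""Behavioral model of a round-robin row arbiter: the row granted next is the
first requesting row after the previously granted one, wrapping around. This is
a software illustration, not the paper's RTL."""


def round_robin_grant(requests, last_grant):
    """requests: list of bools (one per row). Returns granted row or None."""
    n = len(requests)
    for offset in range(1, n + 1):
        idx = (last_grant + offset) % n
        if requests[idx]:
            return idx
    return None


def serve_all(requests, last_grant=-1):
    """Grant every pending request once, in round-robin order."""
    pending = list(requests)
    order = []
    grant = round_robin_grant(pending, last_grant)
    while grant is not None:
        order.append(grant)
        pending[grant] = False          # request cleared once the row is read out
        grant = round_robin_grant(pending, grant)
    return order
```

For example, with rows 0, 2 and 3 requesting and row 2 granted last, `serve_all([True, False, True, True], last_grant=2)` serves rows 3, 0, 2 in that order, so no row can be starved by a row with a lower index.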

Objective Compared with the infrared radiation of the aircraft skin, the infrared radiation of the tail flame gas exhibits stronger spectral selectivity and volumetric participation. The absorption of asymmetric polyatomic gas molecules is strongly spectrally selective. Consequently, before the infrared radiation characteristics of the tail flame can be analyzed, the fundamental physical properties of the gas medium, such as the spectral absorption coefficient, spectral scattering coefficient, and spectral transmittance, must be determined. The tail flame plume of an aeroengine typically consists of H2O, CO2, CO, and common atmospheric molecules. Since the infrared radiation of symmetric diatomic molecules can be neglected, only the absorption and emission of H2O, CO2, and CO need to be considered. Besides these molecules, the infrared radiation characteristics of the aircraft are also influenced by the high-temperature walls of the exhaust system, so both the radiation emitted by the hot walls and their diffuse reflection must be taken into account. Calculating the spectral physical properties of the tail flame medium is therefore the primary challenge in determining its infrared radiation characteristics. Methods A method for solving the radiative transfer equation (RTE) that combines the apparent ray method with the reverse Monte Carlo method is proposed. The combined method inherits the advantages of both and can quickly solve the RTE for absorbing and scattering media. Combined with the statistical narrow-band k-distribution model, it was used to calculate and analyze the influence of different gas physical property libraries on the infrared radiation characteristics of the exhaust system.
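The reverse Monte Carlo idea can be illustrated on a deliberately simple geometry. The sketch below is a toy under explicit assumptions, not the combined method of the paper: it omits the apparent ray method and the statistical narrow-band k-distribution model, and assumes a gray, isothermal, non-scattering gas slab of thickness `length` in front of an opaque, diffusely reflecting wall, viewed along the slab normal, with cold surroundings. Rays are traced backward from the detector: a ray absorbed in the gas scores the gas blackbody intensity, a ray absorbed by the wall scores the wall blackbody intensity, and a diffusely reflected ray is traced back out through the gas.

```python
import numpy as np


def reverse_mc_intensity(kappa: float, length: float, ib_gas: float,
                         ib_wall: float, eps_wall: float,
                         n_rays: int = 200_000, seed: int = 0) -> float:
    """Reverse Monte Carlo estimate of the normal intensity leaving a gray,
    isothermal, non-scattering gas slab backed by an opaque diffuse wall.

    Toy geometry (assumed): infinite slab of thickness `length`, absorption
    coefficient `kappa`, cold surroundings on the detector side.
    """
    rng = np.random.default_rng(seed)
    # Backward ray from the detector: sample the absorption distance from
    # Beer-Lambert statistics; 1 - U lies in (0, 1], which avoids log(0)
    s = -np.log(1.0 - rng.random(n_rays)) / kappa
    in_gas = s < length
    score = np.where(in_gas, float(ib_gas), 0.0)

    # Rays reaching the wall are absorbed there (emission point) with probability eps_wall
    u = rng.random(n_rays)
    wall_emits = ~in_gas & (u < eps_wall)
    score[wall_emits] = ib_wall

    # Remaining wall hits are diffusely reflected back through the gas:
    # cosine-weighted direction mu, path length through the slab = length / mu
    reflected = ~in_gas & (u >= eps_wall)
    m = int(reflected.sum())
    mu = np.sqrt(1.0 - rng.random(m))  # mu in (0, 1]
    s2 = -np.log(1.0 - rng.random(m)) / kappa
    # Absorbed on the way out -> gas emission; escaped -> cold surroundings (0)
    score[reflected] = np.where(s2 < length / mu, float(ib_gas), 0.0)
    return float(score.mean())
```

For a black wall (`eps_wall = 1`) the estimate should approach the analytic value I_b,gas(1 - e^(-κL)) + I_b,wall e^(-κL), which is a convenient sanity check before moving to spectral, non-gray cases.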
Considering that multiple versions of the HITRAN/HITEMP databases exist, the HITRAN and HITEMP databases were systematically compared for calculating the fundamental radiative properties of gases (Fig.3), and the influence of the database version on the calculated absorption coefficient and radiation intensity was analyzed. Results and Discussions The single-molecule Planck-mean absorption coefficients and spectral radiation intensities were calculated with each database, and the absolute errors of each physical property library relative to HITRAN2020 were computed (Fig.5-7). For CO2, the absolute errors occur near the three absorption peaks in the 2.7 μm, 4.3 μm, and 8-14 μm bands; for CO, they occur almost exclusively around the wavenumber of 2100 cm-1. For H2O, the maximum absolute error of the absorption coefficient, 0.024, occurs at a wavenumber of 150 cm-1 (Fig.11-13), which is attributed to this wavenumber lying far from the emission center of the Planck function. Finally, the accuracy of the proposed method was evaluated against experimental results (Fig.17-20). Conclusions Considering that multiple versions of the HITRAN/HITEMP databases exist, various versions of the HITRAN and HITEMP databases were systematically compared for calculating the fundamental radiative properties of gases, and the impact of the database version on the calculated absorption coefficients and radiation intensities was analyzed in detail. Because the HITRAN database lacks spectral line information for CO in the 8-14 μm band, the HITEMP database is recommended when the partial pressure of CO in the high-temperature gas is relatively high or the results depend strongly on CO.
The absolute errors of the single-molecule Planck-mean absorption coefficients and spectral radiation intensities calculated with the HITRAN2008, HITRAN2012, and HITRAN2016 databases relative to HITRAN2020 were evaluated and compared. For CO2, absolute errors occur near the three absorption peaks in the 2.7 μm, 4.3 μm, and 8-14 μm bands; for CO, they are observed predominantly near 2100 cm-1; and the spectral line information of H2O has changed substantially, affecting both the relative magnitude of the absorption coefficients and their distribution across wavenumbers. Because the infrared radiation characteristics of the exhaust system are influenced by both absorbing gases and diffusely reflecting solid boundaries, a new method for solving the RTE was developed by combining the apparent ray method with the reverse Monte Carlo method. Its performance was assessed experimentally, and the results confirm that the proposed method can predict and analyze radiative transfer in complex environments more accurately.
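The Planck-mean absorption coefficient compared across database versions is the spectral absorption coefficient weighted by the blackbody intensity, κ_P = ∫κ_η I_bη dη / ∫I_bη dη. The sketch below assumes that the absorption coefficients from two database versions are already tabulated on a common wavenumber grid (the line-by-line calculation from HITRAN/HITEMP is not shown), and uses a simple trapezoidal rule; the function names are hypothetical.

```python
import numpy as np

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
KB = 1.380649e-23    # Boltzmann constant, J/K


def planck_intensity(eta_cm: np.ndarray, temp_k: float) -> np.ndarray:
    """Blackbody spectral intensity per unit wavenumber; eta in cm^-1 (must be > 0).

    Absolute units do not matter here because only ratios are used.
    """
    eta = eta_cm * 100.0  # cm^-1 -> m^-1
    return 2.0 * H * C**2 * eta**3 / np.expm1(H * C * eta / (KB * temp_k))


def trapezoid(y: np.ndarray, x: np.ndarray) -> float:
    """Trapezoidal rule, written out to avoid NumPy version differences."""
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))


def planck_mean(eta_cm: np.ndarray, kappa: np.ndarray, temp_k: float) -> float:
    """Planck-mean absorption coefficient: kappa weighted by I_b(eta, T)."""
    ib = planck_intensity(eta_cm, temp_k)
    return trapezoid(kappa * ib, eta_cm) / trapezoid(ib, eta_cm)


def max_abs_error(eta_cm, kappa_test, kappa_ref):
    """Largest |kappa_test - kappa_ref| and the wavenumber where it occurs,
    e.g. an older HITRAN version against HITRAN2020 on a shared grid."""
    err = np.abs(np.asarray(kappa_test) - np.asarray(kappa_ref))
    i = int(np.argmax(err))
    return float(err[i]), float(eta_cm[i])
```

The Planck weighting explains why a large spectral error far from the Planck peak can matter little for the mean: the weight I_bη is small there.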

Objective Lidar detects targets by actively emitting laser signals and receiving their echoes, from which the target's distance, speed, and direction are obtained. FMCW lidar is a ranging technology based on frequency-modulated continuous-wave signals that combines the advantages of FMCW ranging and laser detection. It transmits a frequency-modulated continuous wave toward the target, mixes the signal light with the local oscillator (LO) light, and uses the beat frequency produced by their interference to calculate the distance and speed of the target, achieving high-precision ranging and velocimetry. It measures distance and speed simultaneously and has strong anti-interference capability. However, during laser propagation, atmospheric turbulence or reflection from the target surface can cause depolarization, resulting in a polarization mismatch between the echo light and the LO light. This mismatch can significantly reduce the heterodyne efficiency, signal-to-noise ratio, maximum detection distance, and detection accuracy of the system. Therefore, to achieve high-precision long-distance detection, research on the polarization characteristics of FMCW lidar echoes has important academic significance and application value. Methods In practical FMCW lidar detection, the degree of polarization matching between the signal light and the LO light directly determines the heterodyne efficiency of coherent detection, which in turn affects the signal-to-noise ratio, detection distance, and accuracy of the FMCW lidar system.
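For a triangular (up/down) chirp, range and radial velocity can be separated from the two beat frequencies. The sketch below assumes a linear chirp of bandwidth B over a ramp duration T and a sign convention in which an approaching target (v > 0) lowers the up-ramp beat and raises the down-ramp beat; conventions differ between systems, and the modulation waveform used in the paper is not stated here. All parameter values are hypothetical.

```python
C = 299_792_458.0  # speed of light, m/s


def beat_frequencies(range_m, velocity_mps, bandwidth_hz, ramp_s, wavelength_m):
    """Forward model: up- and down-ramp beat frequencies for a triangular chirp."""
    f_range = 2.0 * range_m * bandwidth_hz / (C * ramp_s)  # chirp slope x round-trip delay
    f_doppler = 2.0 * velocity_mps / wavelength_m          # v > 0: approaching (assumed)
    return f_range - f_doppler, f_range + f_doppler


def range_and_velocity(f_up, f_down, bandwidth_hz, ramp_s, wavelength_m):
    """Invert the two beat frequencies (valid while f_range > |f_doppler|)."""
    f_range = 0.5 * (f_up + f_down)
    f_doppler = 0.5 * (f_down - f_up)
    return C * ramp_s * f_range / (2.0 * bandwidth_hz), wavelength_m * f_doppler / 2.0


# Hypothetical parameters: 1 GHz chirp over 10 us, 1550 nm, target at 100 m closing at 10 m/s
f_up, f_down = beat_frequencies(100.0, 10.0, 1e9, 10e-6, 1.55e-6)
print(range_and_velocity(f_up, f_down, 1e9, 10e-6, 1.55e-6))
```

Averaging the two beats cancels the Doppler term and differencing them cancels the range term, which is why one triangular period yields both quantities at once.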
The polarization state of the LO light remains stable while it propagates in the polarization-maintaining fiber, whereas the polarization state of the signal light is altered by scattering at the target surface and by the complex atmospheric propagation path before it is received by the optical system. It is therefore important to analyze the polarization characteristics of the echo in order to understand their impact on the performance of the FMCW lidar system. Building on the traditional FMCW lidar detection principle, a new detection system based on polarization orthogonal demodulation is proposed. A quarter-wave plate is added at the input of the signal light, and different signal polarization states are simulated by rotating the fast axis of the wave plate. The intensity of the balanced detector output signal is then analyzed and verified. Results and Discussions Addressing the polarization mismatch between the echo light and the LO light caused by target surface scattering, this paper analyzes the detection performance of FMCW lidar based on polarization orthogonal demodulation from the perspective of target detection and recognition, effectively mitigating the reduced detection capability and limited detection range caused by polarization mismatch. The experimental results show that, under the same test conditions and compared with the typical detection method, the side mode suppression ratio for the six detection targets (reflector, quadcopter UAV, bird feather, pothos (green luo) plant, marble, and plastic bag) is improved by 11.85%, 11.98%, 13.46%, 15.39%, 17.12%, and 18.29%, respectively.
Further experiments with wave plate angles of 22.5°, 45°, and 60° show that at 45° the ranging standard deviation reaches its minimum of 0.012 m and the side mode suppression ratio increases to 16.931 dB. The study of the echo polarization state provides further theoretical support for applying FMCW lidar to target recognition. Conclusions This article combines polarization information with FMCW lidar technology, providing a theoretical basis for the polarization characteristics of echoes. The approach can extend FMCW lidar applications in military, autonomous driving, and industrial inspection fields; improve target recognition, low-reflectivity target detection, and environmental perception; overcome the recognition limitations of traditional lidar in complex environments; and provide theoretical support for high-precision target recognition and system optimization design.
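The effect of the quarter-wave plate on polarization matching can be sketched with Jones calculus. Assumptions: horizontally polarized signal and LO light, an ideal wave plate, and heterodyne power proportional to |⟨LO|signal⟩|². The "orthogonal" value simply sums the powers in two orthogonal polarization channels, a generic polarization-diversity picture rather than the paper's exact demodulation scheme.

```python
import numpy as np


def qwp_jones(theta_rad: float) -> np.ndarray:
    """Jones matrix of an ideal quarter-wave plate with its fast axis at theta
    (global phase dropped)."""
    c, s = np.cos(theta_rad), np.sin(theta_rad)
    rot = np.array([[c, -s], [s, c]])
    retarder = np.diag([1.0, 1j])  # fast axis along x in the plate frame
    return rot @ retarder @ rot.T


def mixing_powers(theta_deg: float):
    """Relative beat power for (a) one polarization channel aligned with a
    horizontal LO and (b) two orthogonal channels whose powers are summed."""
    lo = np.array([1.0, 0.0])          # horizontal LO (assumed)
    signal_in = np.array([1.0, 0.0])   # horizontal signal before the plate (assumed)
    sig = qwp_jones(np.radians(theta_deg)) @ signal_in
    single = abs(np.vdot(lo, sig)) ** 2            # only the LO-parallel part beats
    orthogonal_sum = abs(sig[0]) ** 2 + abs(sig[1]) ** 2
    return float(single), float(orthogonal_sum)


for angle in (0.0, 22.5, 45.0, 60.0):
    print(angle, mixing_powers(angle))
```

Under these assumptions the single-channel power follows cos⁴θ + sin⁴θ (about 1, 0.75, 0.5, and 0.625 at the four angles), while the summed orthogonal channels stay at 1, illustrating why demodulating both polarization components makes the beat signal insensitive to the echo polarization state.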

Objective The distributed Bragg reflector (DBR) is a crucial component of the vertical-cavity surface-emitting laser (VCSEL); it relies on a multilayer stack of alternating high- and low-refractive-index materials to achieve high reflectivity through constructive interference of the reflected light. The DBR of traditional VCSELs typically uses the AlGaAs material system. However, because the refractive index difference between layers with different Al compositions is small, the reflection bandwidth of the DBR is narrow, which affects the mode stability of the VCSEL. The critical absorption wavelength of the AlGaAs system is also relatively long, causing some absorption loss. Moreover, the multilayer growth of AlGaAs DBRs requires extremely high precision, increasing manufacturing difficulty and cost. Growing DBRs by metal-organic chemical vapor deposition (MOCVD) typically requires high temperatures, which is incompatible with the photolithographic lift-off process used for optoelectronic devices and hinders patterned growth. Therefore, this paper investigates a DBR structure with a wide reflection bandwidth, low absorption loss in the visible and near-infrared bands, and room-temperature deposition, making it well matched to the VCSEL fabrication process. Methods The reflection spectrum of a SiO2/ZnS DBR was simulated to determine the number of periods needed to achieve a wide reflection bandwidth and high reflectivity at the target wavelength (Fig.3). The thickness tolerances of the two materials in the DBR were also calculated. A process flow for fabricating intracavity-contact VCSEL devices was designed (Fig.5), and magnetron sputtering was used to deposit the DBR on VCSEL devices as well as on quartz glass and GaAs substrates.
The reflection spectrum of the deposited DBR was measured with a micro-area spectroscopic measurement system, and the P-I-V characteristics and spectral properties of the devices were tested with a laser testing system. Results and Discussions The reflection spectra of an 8-period DBR deposited on quartz glass and GaAs substrates were measured with the micro-area spectroscopic measurement system. The designed center wavelength was 850 nm, and the bandwidth over which the reflectivity exceeds 99% reached 209 nm (Fig.6). The VCSEL with an 8 μm oxide aperture exhibited a threshold current of 0.5 mA and a peak output power of 1.55 mW at an injection current of 3.45 mA (Fig.9). The central emission wavelength was 843 nm, and the far-field divergence angle was less than 20.6° (Fig.10). Devices whose DBR center wavelength was offset by 50 nm also lased stably, with no significant differences in P-I-V characteristics or spectral properties compared with devices matching the designed center wavelength. These results indicate that the center wavelength need not match the design value precisely, further validating the broadband advantage of this DBR structure. Conclusions This study investigated VCSELs with broadband mirrors based on the SiO2/ZnS material system. A broadband SiO2/ZnS DBR was designed through simulations, and the results showed a reflectivity exceeding 99% over a reflection bandwidth of 209 nm, meeting the requirements of broadband VCSEL applications. VCSEL devices with the broadband DBR were fabricated and characterized, exhibiting excellent P-I-V performance at room temperature, with a threshold current of 0.5 mA and a peak output power of 1.55 mW.
Additionally, the broadband DBR structure demonstrated a high tolerance to center wavelength variations, maintaining stable VCSEL output over a wide range of wavelength shifts. This characteristic significantly reduces the precision requirements for film deposition during fabrication, providing feasibility for large-scale, low-cost VCSEL production.
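The reflection spectrum of a quarter-wave DBR can be computed with the characteristic-matrix (transfer-matrix) method. The sketch assumes normal incidence, lossless and non-dispersive layers, approximate indices n(ZnS) ≈ 2.30 and n(SiO2) ≈ 1.45 near 850 nm (assumed values, not taken from the paper), air as the incidence medium, and a quartz-like substrate; in a finished VCSEL the incidence medium is the semiconductor cavity, which changes the numbers. At the design wavelength the result can be checked against the closed form R = ((1 - Y)/(1 + Y))² with Y = (n_H/n_L)^(2N) n_sub.

```python
import numpy as np


def dbr_reflectance(wl_nm, n_hi, n_lo, periods, wl0_nm, n_in=1.0, n_sub=1.45):
    """Normal-incidence reflectance of a quarter-wave (HL)^N stack.

    Characteristic-matrix method with lossless, non-dispersive layers.
    The H layer faces the incidence medium; the last L layer faces the substrate.
    """
    m = np.eye(2, dtype=complex)
    for _ in range(periods):
        for n in (n_hi, n_lo):
            d = wl0_nm / (4.0 * n)               # quarter-wave physical thickness at wl0
            delta = 2.0 * np.pi * n * d / wl_nm  # phase thickness at wl_nm
            layer = np.array([[np.cos(delta), 1j * np.sin(delta) / n],
                              [1j * n * np.sin(delta), np.cos(delta)]])
            m = m @ layer
    b, c = m @ np.array([1.0, n_sub])
    r = (n_in * b - c) / (n_in * b + c)
    return float(abs(r) ** 2)


def high_reflectance_band(wls_nm, refl, threshold=0.99):
    """Width (nm) of the range where reflectance exceeds threshold,
    assuming a single contiguous high-reflectance band."""
    above = wls_nm[refl > threshold]
    return float(above.max() - above.min()) if above.size else 0.0


wls = np.linspace(600.0, 1100.0, 2001)
refl = np.array([dbr_reflectance(w, 2.30, 1.45, 8, 850.0) for w in wls])
print(high_reflectance_band(wls, refl))
```

Sweeping `periods` or perturbing the layer thicknesses in this sketch is one way to explore the period count and thickness tolerance questions discussed above, within the limits of the assumed indices.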

Objective Satellite laser ranging (SLR) is a high-precision space geodetic technique that contributes significantly to determining the origin and scale factor of the International Terrestrial Reference Frame (ITRF). However, the system delay of SLR is one of the main factors affecting ranging accuracy, and the traditional ground target measurement method is limited in real-time performance: ground target measurements are performed separately before and after satellite observations or at fixed intervals, such as every 60 minutes. This approach cannot capture real-time changes in system delay, especially when the delay varies dynamically with time, environment, and operating status. For example, changes in ambient temperature and thermal drift of electronic equipment cause dynamic changes in system delay that fixed-interval measurements cannot reflect promptly, degrading the ranging accuracy as well as the timeliness and reliability of the observational data. To improve SLR data precision, a method that obtains SLR system delay values using the geodetic satellite LAGEOS-1 as a satellite target is proposed and verified with the SLR system at Changchun Observatory. Alternating measurements between the satellite target and the observation target improve the real-time performance of the method. Finally, the SLR observation data are corrected using the obtained system delay values, supporting improved SLR ranging precision. Methods LAGEOS-1 was selected as the satellite target because of its strong orbital stability, broad motion coverage, and even data distribution (Fig.2).
An SLR range model was established with precise ephemerides taken as the reference values, and the system delay was obtained by applying the least-squares method to the ranging residuals. Three satellites at different orbital altitudes, LAGEOS-2, AJISAI, and ETALON-1, were selected to validate the results. Results and Discussions The precision of the ranging data corrected with the satellite target improved by 13.5 mm to 100.7 mm, an average improvement of 50.2%. The range bias of the observation targets also decreased by 13.7 mm to 142.1 mm, an average improvement of 48.6% (Tab.5). Specifically, the RMS of the ranging residuals for LAGEOS-2 decreased by 57.2 mm and 14.9 mm, with relative change rates of 72.4% and 70.7%, respectively (Fig.4). For AJISAI, the RMS of the ranging residuals decreased by 70.2 mm, 44 mm, 13.5 mm, and 32.4 mm, with relative change rates of 31.9%, 74.2%, 12.8%, and 19.7%, respectively (Fig.5). For ETALON-1, the RMS of the ranging residuals decreased by 67.3 mm, 100.7 mm, 32.3 mm, 54.4 mm, and 65.3 mm, with relative change rates of 57.8%, 62.8%, 58.4%, 46.1%, and 45.8%, respectively (Fig.6). Compared with AJISAI, the SLR system delay values obtained from the satellite target corrected ETALON-1 more effectively: the average improvement rates of the ranging residual RMS and range bias were 34.6% and 45.8% for AJISAI, and 54.2% and 68.2% for ETALON-1. The higher orbital altitude of ETALON-1, together with its lower angular velocity and slower changes in system state, may explain the more significant correction obtained when LAGEOS-1 is used as the satellite target. Conclusions A method for calibrating SLR system delays using the geodetic satellite LAGEOS-1 as a calibration target is proposed.
Compared to ground targets, LAGEOS-1 provides a reliable reference for system delay calibration with an observation accuracy difference of only 6 picoseconds. The effectiveness of this method was validated through alternating observations of satellites with varying orbital altitudes (LAGEOS-2, AJISAI, and ETALON-1) using the SLR system at the Changchun Observatory. The results indicate that, in the case study presented in this paper, the SLR system delay values obtained using the satellite target can enhance the precision of SLR data by 13.5 mm to 100.7 mm and reduce range biases by 13.7 mm to 142.1 mm. Notably, the calibration using the satellite target exhibits a more pronounced correction effect on the higher-orbit ETALON-1 satellite, suggesting that this method is particularly advantageous for calibrating delays when dealing with high-orbit satellites.
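The least-squares step can be sketched as fitting a constant bias (optionally plus a linear drift) to the range residuals of the calibration satellite, observed minus computed from precise ephemerides. The function names, the optional drift term, the synthetic data, and the sign convention (a positive residual means the measured range is too long) are assumptions for illustration; the one-way range bias b is converted to an equivalent two-way time delay 2b/c.

```python
import numpy as np

C = 299_792_458.0  # speed of light, m/s


def estimate_system_delay(t_s, residuals_mm, fit_drift=False):
    """Least-squares fit of calibration-satellite residuals (observed - computed
    range, mm). Returns the constant bias (mm) and its two-way time equivalent
    (ps). The optional linear drift term is an assumption."""
    t_s = np.asarray(t_s, dtype=float)
    residuals_mm = np.asarray(residuals_mm, dtype=float)
    columns = [np.ones_like(t_s)]
    if fit_drift:
        columns.append(t_s - t_s.mean())  # centered so the bias is the mean level
    design = np.column_stack(columns)
    coef, *_ = np.linalg.lstsq(design, residuals_mm, rcond=None)
    bias_mm = float(coef[0])
    delay_ps = 2.0 * bias_mm * 1e-3 / C * 1e12  # one-way range -> two-way time
    return bias_mm, delay_ps


def rms(x_mm):
    """Root-mean-square of residuals (mm)."""
    x = np.asarray(x_mm, dtype=float)
    return float(np.sqrt(np.mean(x**2)))


# Hypothetical data: a 30 mm bias plus noise on the calibration satellite
rng = np.random.default_rng(0)
t = np.linspace(0.0, 600.0, 200)
bias, delay = estimate_system_delay(t, 30.0 + rng.normal(0.0, 5.0, t.size))
# Apply the same correction to another (observation) satellite's residuals
target = 30.0 + rng.normal(0.0, 8.0, t.size)
print(rms(target), rms(target - bias))
```

Since 1 mm of one-way range corresponds to about 6.7 ps of two-way time, the 6 ps accuracy difference quoted above, if read as a two-way time, corresponds to just under 1 mm of range.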

Objective The 1.5 μm laser lies both in a near-infrared atmospheric window and in the eye-safe band, so 1.5 μm lasers are in wide demand in fields such as optical communication, laser ranging, and lidar. Optical parametric oscillators (OPOs) based on nonlinear frequency conversion are currently a prominent approach for generating 1.5 μm lasers. KTiOAsO4 (KTA) is an ideal material for 1.5 μm OPOs because of its high nonlinear coefficient, broad transmission range, and high damage threshold. However, research has focused mainly on extracavity OPOs operating below 100 Hz and intracavity OPOs operating at kHz-level and higher repetition rates. While low-repetition-rate extracavity KTA-OPOs have achieved high-power 1.5 μm output, the output power of high-repetition-rate intracavity KTA-OPOs is generally lower because of limits on the intracavity power density. In view of this research status and the demand for high-repetition-rate, high-power 1.5 μm lasers, an extracavity KTA-OPO is used to increase the 1.5 μm output power at 10 kHz. Methods To obtain a pump beam with a repetition rate of 10 kHz and good beam quality, a master oscillator power amplifier (MOPA) system was built using LD end-pumped Nd:YVO4 crystals. The 1064 nm oscillator uses a BBO crystal for electro-optic Q-switching at 10 kHz, followed by three amplifier stages. To minimize thermal effects, the first two stages use single-end pumping and the third uses dual-end pumping. Lenses between stages ensure good mode matching between the pump spot and the beam being amplified. In the OPO stage, a plane-plane OPO cavity uses KTA as the nonlinear crystal, with type II non-critical phase matching, to generate the 1.5 μm parametric light.
The optical-to-optical conversion efficiency is enhanced by optimizing the pump spot size and the OPO cavity length (Fig.1). Results and Discussions With electro-optic Q-switching, the oscillator delivers 1.02 W at 1064 nm with a repetition rate of 10 kHz and a pulse width of 7.1 ns; the beam quality factors are M2x = 1.18 and M2y = 1.20 (Fig.2). Using 878 nm LDs as pump sources, the three amplifier stages raise the power to 6.26 W, 12.40 W, and 20.13 W, with beam quality factors of M2x = 1.20 and M2y = 1.26 for the first stage, M2x = 1.32 and M2y = 1.26 for the second, and M2x = 1.42 and M2y = 1.49 for the third (Fig.4). With a cavity length of 40 mm and a pump spot diameter of 430 μm, the KTA-OPO generates a maximum output power of 6.26 W at a central wavelength of 1535.8 nm (Fig.5), corresponding to an optical-to-optical conversion efficiency of 33%, with a pulse width of 7.2 ns and a linewidth of 0.26 nm. The beam quality factors are M2x = 2.75 and M2y = 3.81 (Fig.6). Conclusions A high-power 1.5 μm laser with a repetition rate of 10 kHz has been obtained using an extracavity KTA-OPO. To obtain a high-beam-quality pump, a MOPA was built with LD end-pumped Nd:YVO4 crystals. Combining single-end and dual-end pumping in three amplifier stages yielded 1064 nm pump light with an average power of 20.13 W, a beam quality factor better than 1.5, and a repetition rate of 10 kHz. For the KTA-OPO, the effects of cavity length and pump spot diameter on the pump threshold and conversion efficiency were compared. Optimizing the pump spot and resonator parameters improved the conversion efficiency of the KTA-OPO, yielding a 1.5 μm pulsed laser with an average power of 6.26 W and a pulse width below 10 ns, corresponding to an optical-to-optical conversion efficiency of up to 33%.
The extracavity KTA-OPO structure effectively increases the 1.5 μm output power at high repetition rates. Future work should focus on increasing the 1064 nm pump power while maintaining good beam quality. A ring cavity could further improve the beam quality of the KTA-OPO, and single-frequency seed injection could be used to narrow the output linewidth to meet practical application requirements.
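The reported figures can be cross-checked with simple arithmetic: energy conservation fixes the idler wavelength (1/λp = 1/λs + 1/λi), and average power, repetition rate, and pulse width give the pulse energy and an approximate peak power. The peak-power estimate below assumes a rectangular pulse, and the idler wavelength is not reported in the abstract; it is only implied by energy conservation.

```python
def idler_wavelength_nm(pump_nm: float, signal_nm: float) -> float:
    """Energy conservation in the OPO: 1/lambda_p = 1/lambda_s + 1/lambda_i."""
    return 1.0 / (1.0 / pump_nm - 1.0 / signal_nm)


def pulse_energy_and_peak(avg_power_w: float, rep_rate_hz: float, width_s: float):
    """Pulse energy (J) and peak power (W), rectangular-pulse approximation."""
    energy_j = avg_power_w / rep_rate_hz
    return energy_j, energy_j / width_s


print(idler_wavelength_nm(1064.0, 1535.8))
print(pulse_energy_and_peak(6.26, 10e3, 7.2e-9))
```

For a 1064 nm pump and the 1535.8 nm signal, the idler lies near 3.46 μm; 6.26 W at 10 kHz corresponds to 0.626 mJ per pulse, and with a 7.2 ns pulse width the rectangular-pulse estimate of the peak power is about 87 kW.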

Significance With the growing demands of big data technologies, there is an increasing need for improved data transmission speed, bandwidth, and energy efficiency. Photons, as a medium for information transmission, possess unique advantages, such as high bandwidth, rapid transmission speeds, low power consumption, and compatibility with CMOS technology. Micro-transfer printing has become a pivotal technique for wafer-scale heterogeneous integration, enabling the co-integration of various materials or devices detached from their substrates and transferred onto silicon-based optoelectronic target substrates. This technology offers remarkable versatility and integration potential. This paper delves into recent developments in micro-transfer printing (MTP), exploring its underlying mechanisms, transfer methodologies, and applications. Additionally, it evaluates yield rates, process optimization, and equipment reliability, providing insights into the commercial viability of this technology.Progress This review discusses various auxiliary methods used in micro-transfer printing. During device transfer from a stamp onto a target substrate, the adhesion force of the stamp must be less than the interaction force between the devices and the substrate. Adhesives are commonly used to strengthen the interaction force between the devices and the substrates. The performance of integrated devices is heavily influenced by the interface contact between metal electrodes and materials. Traditional metal deposition processes often introduce defects, strain, and metal diffusion, leading to high resistance at the contact interface. Two-dimensional materials, which lack surface dangling bonds, help mitigate these issues during the transfer process. In laser-assisted non-contact transfer, the laser absorption layer absorbs energy, heating the water in the hydrogel and inducing a localized liquid-to-vapor phase transition. 
This phase transition causes the adhesive layer's surface to bulge, effectively eliminating interfacial adhesion forces. In recent years, micro-transfer printing has shown significant advantages in heterogeneous integration, allowing for the high-density integration of diverse photonic components, including C-band tunable lasers on SOI and SiN platforms, InGaAsP-based photodetectors, and electro-optical modulators such as thin-film lithium niobate devices. The paper also explores the future commercialization prospects of micro-transfer printing technology.Conclusions and Prospects This work provides a thorough review of heterogeneous integration techniques based on micro-transfer printing. MTP technology is crucial for the fabrication of high-performance heterogeneous photonic integrated circuits. However, its commercialization in the photonics field faces several challenges. Achieving large-scale production requires addressing key factors such as batch production yield, which depends on the yield of devices from the source wafer, the release process, the pickup process, and the printing process itself. Process optimization and device performance are critical areas that need improvement. For instance, capillary forces can cause materials to collapse or fracture, but these issues can be mitigated through vapor-phase etching processes. Additionally, the strength and number of tethers supporting the devices play a vital role in the transfer process, necessitating the design of optimized tethers to improve transfer efficiency. The reliability of transfer printing equipment is another critical consideration. As micro-transfer printing technology matures, this heterogeneous integration method has become essential for fabricating high-performance photonic integrated circuits, and overcoming these challenges will be key to its widespread commercialization.

Objective With the advancement of science and technology, traditional optical systems can no longer meet growing application demands. A conformal optical dome is a protective optical structure whose shape closely matches that of the carrier, and demand for conformal domes is increasing with emerging high-technology applications. However, traditional conformal optical systems require an additional phase plate at the rear of the system, resulting in excessive mass and high cost. To reduce the weight and simplify the structure of the conformal optical system, a conformal optical system that needs no additional phase plate was designed using a joint optical-digital method. Methods A joint optical-digital method was studied to eliminate the influence of aero-optical effects on conformal optical systems, and image restoration over the whole scanning range based on different characteristic scanning angles was investigated (Fig.9). For image restoration, the sum of image gradients at different characteristic scanning angles was studied (Tab.2), and the Richardson-Lucy iterative algorithm was used to deconvolve the image with the synthesized PSF model to obtain the restored image (Fig.10). Combining optical and digital methods eliminates the influence of aero-optical effects on the conformal optical system. Results and Discussions A conformal optical system with a focal length of 50 mm, a working wavelength range of 3-5 μm, and a scanning field of view of ±15° is designed. First, Zernike polynomials are used to fit the wavefront aberration and construct a generalized pupil function, and a Fourier transform then yields the PSF model.
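The pupil-to-PSF step can be sketched as follows: the wavefront error W (in waves) defines the generalized pupil P = A exp(i2πW), and the incoherent PSF is |FFT(P)|². Only two unnormalized Zernike-type terms (defocus and astigmatism) are used here, and the grid size and zero-padding factor are arbitrary choices; the paper's fitted coefficients and its SVD-based full-field model are not reproduced.

```python
import numpy as np


def psf_from_wavefront(n=128, pad=4, defocus_waves=0.0, astig_waves=0.0):
    """Incoherent PSF from a generalized pupil P = A * exp(i * 2*pi * W).

    W (in waves) uses unnormalized defocus (2 rho^2 - 1) and astigmatism
    (x^2 - y^2 = rho^2 cos 2theta) terms on a unit circular aperture.
    """
    y, x = np.mgrid[-1:1:n * 1j, -1:1:n * 1j]
    rho2 = x**2 + y**2
    aperture = (rho2 <= 1.0).astype(float)
    w = defocus_waves * (2.0 * rho2 - 1.0) + astig_waves * (x**2 - y**2)
    pupil = aperture * np.exp(1j * 2.0 * np.pi * w)
    # Zero-padding to pad*n samples refines the sampling of the PSF
    field = np.fft.fftshift(np.fft.fft2(pupil, s=(pad * n, pad * n)))
    psf = np.abs(field) ** 2
    return psf / psf.sum()  # normalized to unit energy


ideal = psf_from_wavefront()
blurred = psf_from_wavefront(defocus_waves=0.5, astig_waves=0.25)
print(blurred.max() / ideal.max())  # Strehl-like peak ratio
```

Repeating this for the wavefront fitted at each characteristic scanning angle gives the set of field-dependent PSFs from which a full-field model can be assembled.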
Singular value decomposition is then performed, and the basis matrices Bi and coefficient matrices Mi are introduced to construct an asymmetric full-field PSF model, which is used for deconvolution-based image restoration. The sum of image gradients reflects the overall edge intensity and texture complexity of the image produced by the conformal optical system. The restored image improves the sum of image gradients by at least 1.4E+07 at every scanning angle and by up to 2.0E+07 in part of the scanning field of view. The method requires no additional correction components and significantly reduces the impact of aero-optical effects on imaging quality while keeping the system lightweight and low-cost. Conclusions To address the aberrations caused by aero-optical effects in conformal optical systems, a segmented full-field PSF model is constructed, and the image is restored by combining optical and digital methods. A conformal optical system with a focal length of 50 mm, a working wavelength range of 3-5 μm, and a scanning field of view of ±15° is designed. First, the wavefront aberration is fitted with Zernike polynomials to construct a generalized pupil function, and a Fourier transform yields the PSF model. Singular value decomposition is then performed, and the basis matrices Bi and coefficient matrices Mi are introduced to construct an asymmetric full-field PSF model. The sum of image gradients of the restored image is improved by at least 1.4E+07 at every scanning angle and by up to 2.0E+07 in part of the scanning field of view.
This method provides a new approach to eliminating the influence of aero-optical effects on conformal optical systems and has practical engineering application prospects.
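The restoration step can be sketched with the standard Richardson-Lucy update, x ← x · Kᵀ(y / Kx), using FFT-based circular convolution with a single, spatially invariant PSF. This is a simplification: the paper restores with an asymmetric full-field PSF model, and its exact definition of the "sum of image gradients" is not given, so `gradient_energy` below uses the sum of squared gradient magnitudes as an assumed stand-in.

```python
import numpy as np


def conv_fft(img: np.ndarray, otf: np.ndarray) -> np.ndarray:
    """Circular convolution via the FFT; otf = fft2 of a PSF centered at [0, 0]."""
    return np.real(np.fft.ifft2(np.fft.fft2(img) * otf))


def richardson_lucy(observed, psf, iterations=30, eps=1e-12):
    """Richardson-Lucy deconvolution with a single, spatially invariant PSF.

    `psf` has the image shape, sums to 1 and is centered at index [0, 0]
    (apply np.fft.ifftshift to a centered kernel).
    """
    otf = np.fft.fft2(psf)
    otf_adjoint = np.conj(otf)  # correlation = convolution with the flipped PSF
    estimate = observed.astype(float).copy()
    for _ in range(iterations):
        reblurred = conv_fft(estimate, otf)
        ratio = observed / (reblurred + eps)
        estimate = estimate * conv_fft(ratio, otf_adjoint)
    return estimate


def gradient_energy(img):
    """Sum of squared gradient magnitudes (assumed stand-in for the paper's metric)."""
    gy, gx = np.gradient(np.asarray(img, dtype=float))
    return float(np.sum(gx**2 + gy**2))
```

The multiplicative update keeps a non-negative estimate non-negative, one reason the algorithm suits intensity images; in practice the iteration count acts as the regularization parameter, since too many iterations amplify noise.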

Objective In-vehicle head-up display (HUD) systems let drivers see key data without turning their heads or looking down by virtually superimposing driving information on the real-world view of the road. A HUD needs at least two virtual image depth planes to achieve a true augmented reality (AR) display. Various methods have been proposed for AR-HUD systems with multiple depth planes. However, most of these designs offer an insufficient adjustment range for the imaging distance of the far optical path and therefore cannot effectively address the vergence-accommodation conflict caused by changes in vehicle speed, which affects driving experience and safety. Research has shown that holographic imaging can provide a realistic three-dimensional display with all the depth cues required by the human eye. Moreover, when holographic three-dimensional content is displayed with a spatial light modulator (SLM), the depth of the virtual image can be adjusted continuously, fully resolving the vergence-accommodation conflict and the associated vertigo and achieving a true augmented reality display. Methods Based on holographic imaging theory, the impulse response function and the modulation transfer function of a holographic display with an SLM are derived, and a dual-optical-path AR-HUD system capable of continuous depth adjustment is established (Fig.4). The optical system was constructed in Zemax by acquiring the windshield surface data and extracting its application area (Tab.1). An off-axis reflective design was adopted to solve the superposition of near-path and far-path information. The spot diagrams, MTF curves, grid distortion, and dynamic distortion of the near and far optical paths were optimized.
The variation of projection distance and image width for the far-path projection unit is also given (Fig.10). Results and Discussions After optimization, the maximum RMS spot radius of the dual-optical-path AR-HUD is 10.933 µm at a projection distance of 15 m, and the RMS spot radius is 23.304 µm at 3 m (Fig.6). All MTF curves exceed 0.5 at 6 lp/mm (Fig.7). The maximum grid distortion is below 2% at 15 m and below 3% at 3 m (Fig.8). During continuous adjustment of the projection distance, image quality is worst at 7 m (Fig.9). Even there, the RMS spot radius lies within the Airy radius, the MTF exceeds 0.5 at 6 lp/mm, and the grid distortion is below 2%, which is within the range acceptable to the human eye. The system can therefore meet practical driving requirements. Conclusions This work addresses two problems: the final image of a conventional AR-HUD cannot match the depth of the natural scene, and windshield irregularities degrade image quality in holographic HUDs. The theoretical feasibility of continuous depth adjustment is verified on the basis of holographic imaging theory, and a dual-optical-path AR-HUD with continuous depth adjustment is designed. The aberration-correcting capability of freeform optics is used to accurately correct the irregular aberrations introduced by the windshield, which significantly improves image quality. The resulting dual-optical-path AR-HUD has imaging distances of 3 m and 7-15 m with fields of view of 6°×1° and 12°×4°, respectively, and good image quality.
A dynamic distortion analysis of the designed dual-optical-path AR-HUD further demonstrates the stability of the system.
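The pass/fail checks quoted in the results can be expressed as a small helper. These checks are the RMS spot radius lying within the Airy radius, the MTF exceeding 0.5 at 6 lp/mm, and the grid distortion staying below 2%. This is a sketch only, not the authors' Zemax workflow. The function names and sample values are hypothetical. The RMS radius is referenced to the centroid of the ray intercepts, although Zemax also offers other references such as the chief ray. The Airy radius uses the standard 1.22 λ F# formula with an image-space F-number that the abstract does not give.

```python
import math


def airy_radius_um(wavelength_um: float, f_number: float) -> float:
    """Radius of the first dark ring of the Airy pattern: 1.22 * lambda * F#."""
    return 1.22 * wavelength_um * f_number


def rms_spot_radius_um(points: list[tuple[float, float]]) -> float:
    """RMS radius of ray intercepts (x, y) in um, referenced to their centroid."""
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    return math.sqrt(sum((x - cx) ** 2 + (y - cy) ** 2 for x, y in points) / n)


def grid_distortion_pct(real_height: float, paraxial_height: float) -> float:
    """Grid distortion in percent: (real - paraxial) / paraxial * 100."""
    return (real_height - paraxial_height) / paraxial_height * 100.0


def meets_hud_criteria(rms_um, airy_um, mtf_at_6_lpmm, distortion_pct, max_distortion_pct=2.0):
    """Worst-case criteria quoted in the abstract (7 m projection distance).

    Hypothetical helper: pass max_distortion_pct=3.0 for the 3 m near path.
    """
    return rms_um <= airy_um and mtf_at_6_lpmm > 0.5 and abs(distortion_pct) < max_distortion_pct


if __name__ == "__main__":
    # Hypothetical inputs: green light, an assumed image-space F#, made-up ray intercepts.
    airy = airy_radius_um(0.55, 20.0)
    rms = rms_spot_radius_um([(3.0, 0.0), (-3.0, 0.0), (0.0, 3.0), (0.0, -3.0)])
    dist = grid_distortion_pct(10.15, 10.0)
    print(airy, rms, dist, meets_hud_criteria(rms, airy, 0.55, dist))
```

The distortion threshold is a parameter because the abstract quotes different limits for the two paths (below 2% at 15 m, below 3% at 3 m).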

Objective Several developments have made single-band photoelectric detection insufficient. These include the rapid development of photoelectric detection technology, the widespread use of interference, camouflage, and stealth techniques, the growing diversity of targets to be detected and identified, and increasingly complex operating environments. Combining infrared and visible imaging improves the all-weather detection, anti-interference, and target acquisition capabilities of photoelectric imaging components. One option for a long-wave infrared (LWIR)/visible dual-band imaging component is a common-aperture beam-splitting structure with a separate optical train and detector for each channel. This is easier to implement, but the structure is more complex, the volume and mass are larger, and assembly is more difficult. The goal here was to miniaturize an LWIR/visible dual-band composite photoelectric imaging component while preserving its detection capability and meeting engineering requirements. Such a component was designed and fabricated using a modular design approach. Methods First, the optical system is designed according to the optical parameters in the technical requirements. Second, the electronic system is designed according to the electrical parameters. Then, the mechanical structure is designed and realized based on the dimensions of the optical and circuit systems. Throughout this process, each part is adjusted and optimized until the technical specifications are met.
Finally, the environmental adaptability design is completed according to the optical, mechanical, and electrical characteristics and the operating requirements of the composite component. Specifically, the optics adopt a coaxial catadioptric layout, and the detection part uses an integrated LWIR/visible imaging core. The mechanical structure avoids a bulky three-arm bracket, which would block more light. Instead, the visible lens group and the plane mirror are mounted as one integrated assembly at the center of the first infrared lens, and the visible detector is placed roughly parallel to the side wall of the infrared lens barrel. This saves as much space as possible, reduces the volume, and reduces obscuration of the infrared channel. Results and Discussions The LWIR signal is imaged onto an uncooled focal plane detector through three infrared lenses, including diffractive lenses. The visible signal is focused by the visible lens group nested at the center of the first infrared lens. The plane mirror at the aperture center then folds it toward the side of the infrared lens barrel, where a CMOS detector receives it (Fig.4, Fig.8). The performance of the optical system directly affects the detection range and target recognition accuracy of the composite component.
The optical design results are as follows. LWIR channel: 8-12 μm waveband, F# 0.95, focal length 44 mm, field of view 10°×8°, total optical length 53 mm. Visible channel: 0.45-0.75 μm waveband, F# 4.3, focal length 25 mm, field of view 7.9°×6.3°, total optical length 32 mm. Conclusions The whole optical-mechanical system is passively athermalized over -40 ℃ to +60 ℃. The composite photoelectric imaging component has a simple, compact structure with small size and low weight. Its volume (length × width × height) is 62 mm×40 mm×50 mm, and its total mass is (133±3) g. Experimental results show that the module has good imaging performance and fully meets the design specifications.
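The first-order quantities implied by the reported channel parameters can be checked directly. These are the entrance pupil diameter D = f/F#, the image-plane extent 2f·tan(FOV/2), and the diffraction-limited Airy radius. The sketch below assumes paraxial, distortion-free imaging and uses representative wavelengths of 10 µm and 0.6 µm, chosen for illustration. The obscuration figure is only a rough lower bound. It assumes that the IR entrance pupil lies at the first lens and that the nested visible optics are at least as wide as their own entrance pupil. None of these helper names come from the source.

```python
import math

# Design values from the abstract; the wavelengths are assumed band centres.
CHANNELS = {
    "LWIR": {"focal_mm": 44.0, "f_number": 0.95, "fov_deg": (10.0, 8.0), "wavelength_um": 10.0},
    "VIS": {"focal_mm": 25.0, "f_number": 4.3, "fov_deg": (7.9, 6.3), "wavelength_um": 0.6},
}


def entrance_pupil_mm(focal_mm, f_number):
    """Entrance pupil diameter D = f / F#."""
    return focal_mm / f_number


def image_extent_mm(focal_mm, full_fov_deg):
    """Image-plane extent for a distortion-free lens: 2 f tan(FOV / 2)."""
    return 2.0 * focal_mm * math.tan(math.radians(full_fov_deg / 2.0))


def airy_radius_um(wavelength_um, f_number):
    """Diffraction-limited Airy radius 1.22 * lambda * F#."""
    return 1.22 * wavelength_um * f_number


def obscuration_area_fraction(obscuration_mm, pupil_mm):
    """Fraction of a circular pupil blocked by a central circular obscuration."""
    return (obscuration_mm / pupil_mm) ** 2


if __name__ == "__main__":
    for name, ch in CHANNELS.items():
        d = entrance_pupil_mm(ch["focal_mm"], ch["f_number"])
        w, h = (image_extent_mm(ch["focal_mm"], a) for a in ch["fov_deg"])
        r = airy_radius_um(ch["wavelength_um"], ch["f_number"])
        print(f"{name}: D = {d:.2f} mm, image {w:.2f} x {h:.2f} mm, Airy radius {r:.2f} um")
    # Lower bound only: the nested visible optics are assumed no narrower than their own pupil.
    vis_d = entrance_pupil_mm(25.0, 4.3)
    ir_d = entrance_pupil_mm(44.0, 0.95)
    print(f"IR pupil area blocked >= {obscuration_area_fraction(vis_d, ir_d):.1%}")
```

For the LWIR channel this gives an entrance pupil of about 46.3 mm and an image extent of about 7.70 mm × 6.15 mm. The visible pupil is about 5.8 mm, so the central obscuration blocks at least roughly 1.6% of the IR pupil area under the stated assumptions.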

Objective Unmanned aerial vehicle (UAV) photogrammetry is a high-efficiency, flexible, and cost-effective complement to traditional aerial surveying. Payload limitations force UAVs to use non-metric cameras, whose interior orientation elements cannot be measured in real time and must be pre-calibrated. Temperature variations cause the focal length of the lens to drift, shifting the interior orientation elements. This introduces scale errors into the photogrammetric model that propagate directly into 3D ground coordinates, degrading overall measurement accuracy and reliability. Improving the thermal stability of the focal length is therefore critical for UAV mapping precision, and athermalization is essential to limit focal length variation. Current athermalization research (passive optical, passive mechanical, and active electromechanical) focuses primarily on maintaining image quality over a given temperature range, and far less attention has been paid to focal length stability under thermal load. Existing methods for focal length thermal stability often suffer from low material-matching efficiency or complex structures. Examples include distributing lens power and materials, mechanical compensation, and wavefront coding. These limitations make them ill-suited to UAV mapping lenses, which demand high precision, light weight, and low cost. Image-space telecentric lenses are vital for improving geospatial accuracy and resolution in mapping. They pose additional challenges, however, because their complex multi-element designs restrict traditional athermalization approaches. A novel focal length thermal stabilization method based on combinatorial selection of spacer materials is proposed. Methods The proposed method accounts for the influence of the assembly method on air-gap variation (Fig.1) and quantitatively analyzes the effects of temperature on lens refractive index, optical surface curvature, and thickness.
The Gaussian matrix allows the system focal length to be calculated rapidly without introducing image-space parameters. Based on matrix optics theory, a coupled mathematical model linking temperature, spacer coefficients of thermal expansion (CTE), and system focal length is established. The individual mechanical spacers in the lens barrel are treated together as a single combinatorial design unit. Using this model, the globally optimal material combination is found under the constraint of the available spacer materials, with the objective of minimizing focal length variation. This turns traditional manual spacer material matching into a quantifiable optimization process and significantly improves the efficiency of material selection (Fig.4). Results and Discussions A focal length thermal stability design was performed on an example airborne image-space telecentric lens with a focal length of 23.9719 mm. Mechanical structure design (Fig.7) and tolerance analysis (Fig.8) confirmed the soundness of the structural design. The range of candidate materials for the four spacer rings was analyzed (Tab.1), and combinatorial material selection was performed with the proposed model (Tab.5). After the thermal stability design, the focal length variation over (20±40) ℃ was reduced from [-12.2 μm, +12.4 μm] (Tab.3) to [-4.9 μm, +5.1 μm] (Tab.6). This is a 59.35% reduction in total variation, from 24.6 μm to 10.0 μm (Fig.9), and demonstrates the feasibility and effectiveness of the proposed method. Furthermore, the total focal length variation is 68% of the system's depth of focus. This leaves sufficient margin for adjustment errors, mechanical vibration, and other factors, which improves the stability of image quality under complex working conditions. Conclusions A novel thermal stability design method for the focal length of airborne mapping lenses is presented.
The method constructs a coupled model of temperature, material CTE, and system focal length and treats the spacer assembly as a single optimization unit. This achieves global matching of spacer materials and effectively reduces the effect of temperature on the system focal length. Applied to an airborne image-space telecentric lens, the method reduced focal length variation by 59.35% over (20±40) ℃. The total variation is smaller than the system's depth of focus, which meets the requirements of UAV-borne high-precision mapping for both focal length stability and image quality. Traditional passive optical athermalization becomes highly complex in multi-lens systems, and mechanical athermalization structures add volume and weight and reduce stability. By contrast, the proposed method uses parametric modeling to turn empirical spacer material selection into a quantifiable optimization process. Focal length thermal stability is improved solely by replacing spacer materials, without altering the initial structural parameters of the optical system, which gives low design complexity and high engineering practicality.
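The Gaussian-matrix focal length model and the combinatorial spacer search described above can be sketched as follows. This is a minimal illustration under loudly stated assumptions, not the authors' implementation. The three-lens prescription (`LENSES`), glass data (`GLASS`), and spacer lengths (`SPACERS`) are hypothetical, whereas the source lens is telecentric and has four spacers. The `MATERIALS` CTEs are approximate handbook values. Each spacer's length is taken to equal the on-axis air gap it sets, which ignores the assembly-dependent effects the authors model in Fig.1. Lens radii and thicknesses scale with the glass CTE, and the index shifts by dn/dT, which is treated as relative to air. The search is exhaustive via `itertools.product`, which is feasible for a handful of spacers and candidate materials.

```python
import itertools

# Approximate room-temperature CTEs (1/K); illustrative handbook values, check a datasheet.
MATERIALS = {"Al 6061": 23.6e-6, "SS 304": 17.3e-6, "Ti-6Al-4V": 8.6e-6, "Invar 36": 1.3e-6}

# Hypothetical glasses: (index at 20 C, dn/dT in 1/K, CTE in 1/K).
GLASS = {"G1": (1.60, 4.0e-6, 6.0e-6), "G2": (1.75, 2.0e-6, 8.0e-6)}

# Hypothetical prescription: (R1 mm, R2 mm, centre thickness mm, glass name).
LENSES = [(50.0, -200.0, 5.0, "G1"), (-40.0, 60.0, 2.0, "G2"), (40.0, -80.0, 4.0, "G1")]
SPACERS = [6.0, 8.0]  # spacer lengths = air gaps between consecutive lenses (mm), assumed
DTS = [-40.0, -20.0, 0.0, 20.0, 40.0]  # offsets from 20 C, i.e. the (20 +/- 40) C range


def matmul(a, b):
    return [[a[0][0] * b[0][0] + a[0][1] * b[1][0], a[0][0] * b[0][1] + a[0][1] * b[1][1]],
            [a[1][0] * b[0][0] + a[1][1] * b[1][0], a[1][0] * b[0][1] + a[1][1] * b[1][1]]]


def refraction(power):
    return [[1.0, 0.0], [-power, 1.0]]


def translation(reduced_thickness):
    return [[1.0, reduced_thickness], [0.0, 1.0]]


def system_matrix(lenses, gaps):
    """Gaussian (y, n*u) matrix of lenses in air; each lens is (R1, R2, t, n)."""
    m = [[1.0, 0.0], [0.0, 1.0]]
    for i, (r1, r2, t, n) in enumerate(lenses):
        for elem in (refraction((n - 1.0) / r1), translation(t / n), refraction((1.0 - n) / r2)):
            m = matmul(elem, m)  # later elements multiply on the left
        if i < len(gaps):
            m = matmul(translation(gaps[i]), m)  # air gap (n = 1)
    return m


def efl_at(dT, lens_data, spacer_lengths, spacer_ctes):
    """Effective focal length (mm) after a uniform temperature change dT (K)."""
    lenses = []
    for r1, r2, t, glass in lens_data:
        n0, dndt, cte = GLASS[glass]
        s = 1.0 + cte * dT  # radii and thickness scale with the glass CTE
        lenses.append((r1 * s, r2 * s, t * s, n0 + dndt * dT))
    gaps = [length * (1.0 + a * dT) for length, a in zip(spacer_lengths, spacer_ctes)]
    return -1.0 / system_matrix(lenses, gaps)[1][0]  # EFL = -1/C in air


def focal_span(lens_data, spacer_lengths, spacer_ctes, dts=DTS):
    """Total focal length variation (max - min) over the sampled temperatures."""
    fs = [efl_at(dT, lens_data, spacer_lengths, spacer_ctes) for dT in dts]
    return max(fs) - min(fs)


def best_spacer_combo(lens_data, spacer_lengths, materials=MATERIALS):
    """Exhaustive search over spacer material assignments, minimising the focal span."""
    best = None
    for combo in itertools.product(materials, repeat=len(spacer_lengths)):
        span = focal_span(lens_data, spacer_lengths, [materials[m] for m in combo])
        if best is None or span < best[0]:
            best = (span, combo)
    return best


def reduction_pct(before, after):
    return (before - after) / before * 100.0


if __name__ == "__main__":
    span, combo = best_spacer_combo(LENSES, SPACERS)
    print(f"best spacers: {combo}, focal span: {span * 1000:.2f} um")
    # Totals reported in the source: 12.2 + 12.4 um before, 4.9 + 5.1 um after.
    print(f"reported reduction: {reduction_pct(12.2 + 12.4, 4.9 + 5.1):.2f} %")
```

The last line reproduces the reported figure. The total variation falls from 24.6 µm to 10.0 µm, and (24.6 - 10.0)/24.6 ≈ 59.35%. Because 10.0 µm is stated to be 68% of the depth of focus, that depth of focus is roughly 14.7 µm. A real design would replace the toy prescription with the lens's actual surface data and model how each spacer seats against the lens surfaces.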