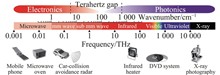

Significance Terahertz (THz) technology, owing to its non-contact measurement and non-destructive testing capabilities, has shown remarkable potential in a wide range of marine applications. The ability to detect pollutants, assess the condition of marine infrastructure, and monitor ecological health in real time offers significant advantages over traditional methods. As such, THz technology holds great promise for advancing marine environmental protection and resource management.

Progress The application of THz waves in marine environment monitoring is introduced first. In the THz waveband, the normalized radar cross section (NRCS) is used to measure variations in reflection intensity at different incident angles, which helps evaluate the characteristics of an oil film. NRCS reflects the interaction of THz waves with the oil film surface, providing insights into its thickness, distribution, and surface properties. Because THz waves propagate differently in the oil film and at the water surface, the thickness, composition, and surface condition of the oil film directly influence the reflection characteristics. Measuring the reflection intensity at different incident angles therefore yields detailed information about the oil film, allowing its thickness and distribution to be assessed (Fig.5). For water quality classification, THz waves are incident at a specific angle onto an attenuated total reflection (ATR) prism, generating evanescent waves that penetrate the sample under total internal reflection. A Fourier transform is applied to extract the reflection coefficient and calculate the complex permittivity. The optical parameters are then modeled and used to classify the water samples (Fig.6-Fig.7). Next, the application of THz technology in marine non-destructive testing is introduced.
This part is divided into three sections: non-destructive testing of ship hull fiberglass materials, of protective coatings and paint layers, and of polyethylene (PE) pipes. Non-destructive testing of ship hull fiberglass materials (Fig.8, Fig.10-Fig.11) and PE materials (Fig.16-Fig.17) is carried out using THz time-domain spectroscopy (THz-TDS). THz pulses are directed at the sample, and the transmitted and reflected signals are collected. These signals are sampled in the time domain and converted into the frequency domain by a Fourier transform, and the resulting data are used to image the internal structure and visualize defects, enabling non-destructive evaluation of internal features. For the non-destructive testing of ship protective coatings and paint layers (Fig.12-Fig.14), time-domain THz technology is used to record the time delay and amplitude variations of reflected signals. Deconvolution techniques are applied to calculate the coating thickness, and the stationary wavelet transform (SWT) is used to extract characteristic signals for internal defect identification. Finally, the application of THz technology in marine ecosystem monitoring is discussed. This includes the detection of microalgal and microbial metabolites (Fig.18-Fig.20) to assess the risk of ecological problems such as red tides, and the detection of radioactive cesium ions in seawater (Fig.22). THz waves thus offer a promising approach for the early detection of ecological disruption and contamination in aquatic environments. The results shown in Fig.18 and Fig.22 highlight the potential of THz technology for enhancing marine environmental monitoring and helping to ensure a safer and more sustainable marine ecosystem.

Conclusions and Prospects THz technology has demonstrated substantial potential in various marine applications, particularly in pollution detection, material integrity assessment, and ecological monitoring.
Its non-contact and non-destructive characteristics make it an ideal tool for safeguarding marine infrastructure and ecosystems. As THz technology continues to evolve, its applications in the marine field are expected to expand, offering more efficient and accurate methods for real-time monitoring and early warning systems. In the future, THz technology is poised to play a crucial role in marine resource protection, contributing to sustainable marine management and environmental conservation.
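
The coating-thickness measurement described in the Progress section rests on the delay between the echoes reflected from the top and bottom interfaces of a layer. The following is a minimal sketch of that relation only, assuming normal incidence, a single homogeneous layer, and a known refractive index; the function name and the example values are hypothetical, and the deconvolution step that the abstract uses to locate the echoes is not reproduced here.

```python
# Speed of light expressed in millimetres per picosecond.
C_MM_PER_PS = 0.299792458


def coating_thickness_mm(echo_delay_ps: float, refractive_index: float) -> float:
    """Layer thickness from the delay between two reflected THz echoes.

    Assumes normal incidence and a homogeneous layer, so the pulse crosses
    the layer twice: d = c * delay / (2 * n).
    """
    if echo_delay_ps < 0:
        raise ValueError("echo delay must be non-negative")
    if refractive_index <= 0:
        raise ValueError("refractive index must be positive")
    return C_MM_PER_PS * echo_delay_ps / (2.0 * refractive_index)
```

For example, `coating_thickness_mm(2.0, 1.5)` gives the thickness of a layer with an assumed refractive index of 1.5 whose two echoes are 2 ps apart. In practice the refractive index of the coating would have to be measured or taken from reference data.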

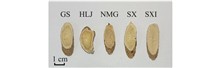

Objective Astragalus membranaceus, a widely recognized traditional Chinese medicinal herb, is extensively used for its immunomodulatory and health-enhancing properties. The quality and therapeutic efficacy of Astragalus are strongly influenced by its geographical origin, underscoring the need for reliable methods to authenticate its provenance, ensure product integrity, and mitigate adulteration risks. Conventional identification techniques, encompassing morphological, chemical, and DNA-based approaches, are often time-consuming, laborious, and costly, which limits their applicability in large-scale industrial contexts. Spectroscopic techniques, such as Laser-Induced Breakdown Spectroscopy (LIBS) and Near-Infrared Spectroscopy (NIR), have emerged as rapid, non-destructive, and efficient analytical tools for quality assessment and geographical origin determination. Nevertheless, the inherent complexity of Astragalus, with its diverse elemental and molecular profiles, often renders single-spectrum techniques inadequate for comprehensive characterization. Data fusion methodologies, which integrate complementary information from multiple sources, offer a promising way to enhance classification accuracy. By combining LIBS and NIR spectral data through data fusion strategies, the accuracy of geographical origin discrimination for Astragalus membranaceus can be substantially improved.

Methods Astragalus samples were collected from five geographical origins: Gansu, Heilongjiang, Inner Mongolia, Shanxi, and Shaanxi (Fig.1). Complementary elemental and compositional information was obtained using LIBS and NIR techniques (Fig.2).
Initially, Support Vector Machine (SVM), Logistic Regression (LR), and Linear Discriminant Analysis (LDA) models were developed on the individual LIBS and NIR spectral data. Based on these single-spectrum classification results (Tab.1), LDA was selected as the base model for the fusion experiments. To improve classification performance, lower-level and mid-level data fusion strategies were employed to integrate LIBS and NIR spectral information. Lower-level data fusion directly concatenates the LIBS and NIR spectral data to form a new lower-level fused spectral dataset for model classification (Fig.4). Mid-level data fusion, on the other hand, extracts the most representative features from the LIBS and NIR spectra separately and then concatenates these features to form a mid-level fused spectral dataset for model classification (Fig.4). Model performance was evaluated using classification accuracy (ACC), macro-precision (M-P), macro-recall (M-R), macro-F1 score (M-F1), and the Area Under the Curve (AUC), to assess the effectiveness of the spectral fusion strategies compared with single-spectrum approaches.

Results and Discussions In single-spectrum analysis, the LDA model for LIBS achieved the best classification accuracy, 88% on the test set (Tab.1). In comparison, the lower-level fusion LDA model attained an accuracy of 92.00% and an AUC value of 0.9964 on the test set (Tab.3). The most notable improvement, however, was observed with the mid-level fusion approach, which used the Successive Projections Algorithm (SPA) for feature selection on both the LIBS spectral lines and the NIR data. This mid-level fusion LDA model achieved a classification accuracy of 96.00% and an AUC value of 0.9998 on the test set (Tab.3), a substantial improvement in both precision and reliability. The mid-level fusion approach eliminated redundant data, enabling more efficient and accurate classification.
Finally, an importance analysis was conducted on the features in the mid-level fusion (Fig.9), and the key features were interpreted. The results indicate that integrating complementary spectral data from LIBS and NIR significantly outperforms single-spectrum analysis in terms of classification accuracy and robustness.

Conclusions The results demonstrate the efficacy of combining LIBS and NIR spectral data through data fusion for the accurate and efficient identification of the geographical origin of Astragalus membranaceus. The mid-level fusion model, which integrates feature selection techniques, provided the highest classification performance, indicating its potential for non-destructive and rapid origin authentication. The findings not only highlight the advantages of spectral fusion in enhancing classification accuracy but also offer a reliable and scalable solution for the quality control and traceability of medicinal herbs in the pharmaceutical industry. The successful application of LIBS-NIR spectral fusion paves the way for more comprehensive analytical approaches in the quality assessment of traditional Chinese medicinal materials.
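
The two fusion strategies and the macro-averaged metrics named in the Methods can be sketched as follows. This is an illustrative sketch, not the authors' pipeline: the function names are hypothetical, a simple highest-variance selector stands in for SPA, the LDA classifier itself is omitted, and M-F1 is assumed to be the mean of the per-class F1 scores (the abstract does not define it).

```python
import numpy as np


def low_level_fusion(libs, nir):
    """Lower-level fusion: concatenate the two spectra sample by sample."""
    return np.hstack([libs, nir])


def top_variance_features(x, k):
    """Stand-in feature selector (NOT SPA): indices of the k highest-variance columns."""
    order = np.argsort(x.var(axis=0))[::-1][:k]
    return np.sort(order)


def mid_level_fusion(libs, nir, k_libs, k_nir):
    """Mid-level fusion: select features per technique, then concatenate them."""
    libs_sel = libs[:, top_variance_features(libs, k_libs)]
    nir_sel = nir[:, top_variance_features(nir, k_nir)]
    return np.hstack([libs_sel, nir_sel])


def macro_metrics(y_true, y_pred, n_classes):
    """ACC, macro-precision, macro-recall and macro-F1 from a confusion matrix."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1  # rows: true class, columns: predicted class
    tp = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0)
    actual = cm.sum(axis=1)
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return {
        "ACC": tp.sum() / cm.sum(),
        "M-P": precision.mean(),
        "M-R": recall.mean(),
        "M-F1": f1.mean(),
    }
```

The fused matrices would be passed to a classifier such as LDA, and `macro_metrics` applied to its test-set predictions with `n_classes=5` for the five origins. Classes are assumed to be encoded as integers starting at 0.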

Objective Ice crystal particles develop complex mixed-state features as they melt, which affects their optical and radiative properties and has important implications for studies of global radiation effects and for weather forecasting. Early theoretical studies assumed ice crystal particles to be spherical. More recently, various standard nonspherical particle models, such as hexagonal, cylindrical, and bullet shapes, have been developed to study the optical properties of ice crystal particles. However, the morphology of real ice crystal particles is much more complex than these standard models. Melting, for example, is a very common process that strongly affects the microphysical and optical properties of ice crystal particles. During rainfall, ice crystal particles are transformed into raindrops in the melting layer, and microphysical properties such as their morphology and mixing state undergo complex changes. Both field observations and radar observations have advantages and shortcomings for studying the melting of ice crystal particles.
Therefore, it is important to construct an accurate model of melting ice crystal particles to study their optical properties during the melting process.

Methods In this paper, a non-spherical, non-uniform model is proposed to simulate the morphology and mixing state of ice crystal particles during melting, and the Discrete Dipole Approximation (DDA) method is used to systematically study the effects of frequency, aspect ratio, and Ice-to-Water Mixing Ratio (IWMR) on the optical properties of melting ice crystal particles.

Results and Discussions The results show that the optical properties of ice crystal particles (extinction efficiency factor, scattering efficiency factor, asymmetry factor, and scattering phase matrix) differ considerably between melting stages. Specifically, the larger the melting ice crystal particle, the larger the amplitude with which its extinction efficiency factor, scattering efficiency factor, and asymmetry factor oscillate as frequency changes, and the more strongly its scattering phase matrix elements oscillate as frequency increases. As the ice crystal particles melt, these optical parameters change regularly with decreasing IWMR, which implies that ignoring the melting process may lead to misestimation of the particles' optical properties. The results also show that the influence of particle morphology on the extinction efficiency factor, scattering efficiency factor, and asymmetry factor is mainly confined to the stage where the degree of melting is low. When the degree of melting is low, the aspect ratio of the ice crystal nucleus has a significant effect on all the optical property parameters.
When the degree of melting is high, the effect of the nucleus aspect ratio on the optical parameters other than the scattering matrix elements, namely the extinction efficiency, scattering efficiency, and asymmetry factor, is essentially negligible; as the particle size increases, however, the nucleus aspect ratio still has a significant effect on the scattering matrix elements. The results can serve as a reference for better understanding how the microphysical properties of ice clouds evolve and for improving the accuracy of ice-water content inversion and related studies.

Conclusions This paper presents a parameterized model of melting ice crystals that is based on the complex evolution of particle morphology and mixing state during melting. The model was developed to meet the practical needs of microwave remote sensing and inversion algorithm research for ice crystal particles. Using this particle model and the DDA method, the influence of particle size, frequency, morphology, and degree of melting on the optical characteristics of ice crystal particles is investigated comprehensively. These characteristics include the extinction efficiency factor, scattering efficiency factor, asymmetry factor, and scattering matrix elements.
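
For readers less familiar with the quantities named above, the sketch below shows how a size parameter and an efficiency factor are commonly defined for nonspherical particles. It assumes the convention, widespread in DDA work but not stated in the abstract, that the reference radius is that of a sphere with the same volume as the particle; the function names are hypothetical.

```python
import math

C_LIGHT = 299_792_458.0  # speed of light in m/s


def size_parameter(r_eff_m: float, frequency_hz: float) -> float:
    """Dimensionless size parameter x = 2*pi*r_eff / wavelength."""
    wavelength_m = C_LIGHT / frequency_hz
    return 2.0 * math.pi * r_eff_m / wavelength_m


def efficiency_factor(cross_section_m2: float, r_eff_m: float) -> float:
    """Efficiency factor Q = C / (pi * r_eff**2).

    Works for extinction or scattering, depending on which cross
    section C is passed in.
    """
    return cross_section_m2 / (math.pi * r_eff_m ** 2)
```

With these definitions, the dependence of the efficiency factors on frequency and particle size reported in the Results can be read as a dependence on the single size parameter `x`, combined with the shape and the mixing state of the particle.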

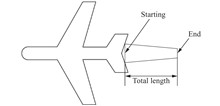

Objective This study aims to analyze the ability of space-based high-orbit infrared sensors, specifically the retired SBIRS-GEO and the upcoming Next-Gen OPIR, to detect the low-temperature exhaust plumes of aircraft. The research focuses on how these sensors perform under different operating states of aircraft engines and at various observation angles, and on identifying design measures that help aircraft evade space-based infrared detection.

Methods Using a GEO orbital detection model and a specified early-warning scenario, two generations of advanced U.S. space infrared sensors, carried by the SBIRS-GEO and Next-Gen OPIR satellites, are analyzed for their ability to detect aircraft exhaust plumes (tail flames). The research employs a high-orbit infrared detection model and constructs the corresponding space-based detection scenarios. The infrared radiation characteristics of aircraft exhaust plumes are modeled for different engine states (with and without afterburner) and observation angles. The analysis is conducted in two observation bands: 2.8-4.3 μm and 8.0-10.8 μm. The study also considers the atmospheric spectral transmittance and the geometric and thermodynamic parameters of the exhaust plumes. The performance of the SBIRS-GEO and Next-Gen OPIR sensors is compared in terms of their energy signal-to-noise ratios (SNR) and detection thresholds.

Results and Discussions The results show that, in the 2.8-4.3 μm and 8.0-10.8 μm observation bands, the infrared radiant intensity of the aircraft tail flame can reach 400-600 W/sr without afterburner and 2600-10000 W/sr with afterburner. Both can be detected by the infrared sensors carried by SBIRS-GEO and Next-Gen OPIR, but the energy SNR of SBIRS-GEO is only 4.0-12.37, significantly lower than the 18.92-41.72 of Next-Gen OPIR.
When the radiating area of the tail flame is enlarged by a factor of 1.5, the energy SNR of both infrared detectors improves significantly, with SBIRS-GEO showing the larger improvement and reaching 6.92-20.31, which significantly raises the probability of infrared detection and indicates that plume control is still necessary. Further analysis shows that, without afterburner, the SBIRS-GEO detector theoretically cannot detect the aircraft tail flame when its initial temperature is below 750 K and its final temperature is below 360 K. Effective measures to evade space-based infrared detection therefore include reducing the size of the tail flame, lowering its temperature, and flying at specified angles.

Conclusions Space-based infrared warning sensors deployed in geostationary orbit at 36000 km can effectively detect and image low-temperature aircraft exhaust plumes. The afterburner state of the aircraft engine and the observation angle of the satellite are critical factors influencing the performance of space-based infrared detection. The SBIRS-GEO infrared sensor cannot detect low-temperature exhaust plumes in non-afterburner states. While the SBIRS-GEO sensor can identify exhaust plumes in afterburner states, its low pixel radiance results in lower identification success rates. The Next-Gen OPIR system, equipped with a new generation of 4 K large-array infrared detectors, offers higher energy resolution and can accurately identify exhaust plumes in both afterburner and non-afterburner states. Reducing component temperature, optimizing plume control, increasing observation elevation angles, and decreasing azimuth angles can effectively reduce the infrared radiation energy of engine exhaust plumes, thereby lowering the probability of detection by space-based infrared sensors.
This study provides valuable theoretical references for the development of next-generation space-based infrared warning systems, emphasizing the importance of advanced sensor technology and optimized plume control strategies in enhancing detection capabilities.
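
To give a feel for why plume temperature matters so much in the 2.8-4.3 μm band, the sketch below integrates Planck's law over a wavelength band for an ideal blackbody. It is an illustration under strong assumptions: emissivity of 1, no atmospheric transmittance, and no plume geometry, all of which the study does account for. The function names are hypothetical; the temperatures of 750 K and 360 K are the thresholds quoted in the abstract.

```python
import numpy as np

H = 6.62607015e-34    # Planck constant, J s
C = 299_792_458.0     # speed of light, m/s
KB = 1.380649e-23     # Boltzmann constant, J/K


def planck_spectral_radiance(wavelength_m, temperature_k):
    """Blackbody spectral radiance in W m^-2 sr^-1 m^-1."""
    exponent = H * C / (wavelength_m * KB * temperature_k)
    return 2.0 * H * C ** 2 / wavelength_m ** 5 / np.expm1(exponent)


def band_radiance(temperature_k, lower_um, upper_um, n=2001):
    """Blackbody radiance integrated over a band, in W m^-2 sr^-1."""
    wavelengths = np.linspace(lower_um, upper_um, n) * 1.0e-6
    spectral = planck_spectral_radiance(wavelengths, temperature_k)
    # Trapezoidal rule written out explicitly.
    return float(np.sum(0.5 * (spectral[1:] + spectral[:-1]) * np.diff(wavelengths)))


if __name__ == "__main__":
    for temperature in (750.0, 360.0):
        print(temperature, band_radiance(temperature, 2.8, 4.3))
```

Comparing the two printed values shows how quickly the mid-wave band radiance of a blackbody falls as the temperature drops from 750 K to 360 K, which is consistent with the abstract's emphasis on lowering plume temperature.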

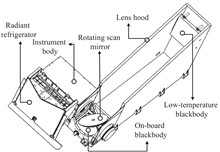

Objective This study evaluates how effectively a low-temperature blackbody improves calibration accuracy in the low-temperature range. As the accuracy of retrieving ground targets from remote sensing data improves, spaceborne instruments are expected to be increasingly quantitative. Typically, an on-board blackbody, a variable-temperature blackbody, or a reference blackbody is installed to handle on-orbit calibration and reference transfer measurements. Because the temperature field of an instrument on orbit differs from that in the ground test tank, the calibration coefficients obtained before launch cannot be applied directly to the on-orbit calibration equations. Consequently, the on-orbit calibration coefficients calculated with the two-point method based on "on-board blackbody + cold space" exhibit significant deviations, especially for targets below 200 K. Therefore, to enhance the inversion accuracy in the low-temperature infrared spectrum, a low-temperature blackbody is added and used for calibration together with the on-board blackbody.

Methods For center wavelengths of 10.8 μm and 12 μm, an on-orbit calibration method using dual blackbodies is studied for a spaceborne infrared spectral imager, and a fusion calibration combining "on-board blackbody+low-temperature blackbody", "low-temperature blackbody+cold space" and "on-board blackbody+cold space" is proposed. Based on the on-orbit calibration data and the laboratory calibration data, the calibration accuracy and response consistency of the fusion calibration method are analyzed. Firstly, the temperature deviations for different radiation targets are calculated using the two-point fitting method based on "low-temperature blackbody+on-board blackbody" (Fig.3-Fig.4).
The results show that, for fixed errors of the two blackbodies, a larger temperature difference between the low-temperature and on-board blackbodies leads to smaller inversion errors, whereas a smaller temperature difference leads to larger deviations in the extrapolated radiance. Similarly, temperature deviations for various radiation targets are calculated using the two-point fitting methods based on "cold space+on-board blackbody" and "low-temperature blackbody+cold space" (Fig.6, Fig.8). The results indicate that the "low-temperature blackbody+cold space" calibration method produces temperature deviations in the low-temperature section that are relatively consistent with those of "on-board blackbody+cold space", but larger deviations in the high-temperature section at 350 K. Additionally, the response consistency of the infrared detection system before and after launch is calculated (Tab.1); the responsivity ratios of the two blackbodies obtained from on-orbit and pre-launch calibration are consistent. Finally, based on 370 sample datasets, the on-orbit calibration coefficients of the infrared detection system are calculated using the two-point method with three calibration sources: low-temperature blackbody, on-board blackbody, and cold space.
The radiance of Earth's low-temperature targets is computed (Fig.11, Fig.13) to analyse the calibration effectiveness.

Results and Discussions Extensive experiments show the following. The brightness temperatures calculated using "on-board blackbody+low-temperature blackbody", "low-temperature blackbody+cold space" and "on-board blackbody+cold space" differ in the low-temperature region, while those calculated using "low-temperature blackbody+cold space" and "on-board blackbody+cold space" are close to each other. The calibration accuracy of "on-board blackbody+low-temperature blackbody" depends on the calibration accuracy of the two blackbodies, whereas the calibration accuracy of "low-temperature/on-board blackbody+cold space" depends on the calibration accuracy of the blackbody and of the cold space energy. To acquire a more accurate cold space radiance, the low-temperature blackbody should be controlled to 180 K. When the blackbody calibration error is 0.5 K, a calibration accuracy of 0.5 K can be achieved for radiation targets in the low-temperature section.

Conclusions According to the laboratory and on-orbit calibration data, the responses of the on-board blackbody and the low-temperature blackbody are consistent before and after orbit injection under the current calibration scheme. Based on the on-orbit calibration data and the fusion calibration method ("on-board blackbody+low-temperature blackbody", "on-board blackbody+cold space", and "low-temperature blackbody+cold space"), it is found that the brightness temperatures from "on-board blackbody+cold space" and "low-temperature blackbody+cold space" are similar, and that both show calibration differences in the low-temperature region. To obtain a more detailed low-temperature nonlinear curve, it is necessary to extend the dynamic range of the low-temperature blackbody so that its lower temperature limit is below 180 K.
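
The two-point method referred to throughout this abstract can be sketched as a straight line through two reference points, followed by inversion of Planck's law to a brightness temperature. This is a minimal sketch under stated assumptions: a perfectly linear detector, a monochromatic channel at the center wavelength (the instrument's spectral response is ignored), and hypothetical function names. The nonlinearity at low temperature that the study investigates is exactly what this idealized version leaves out.

```python
import math

H = 6.62607015e-34    # Planck constant, J s
C = 299_792_458.0     # speed of light, m/s
KB = 1.380649e-23     # Boltzmann constant, J/K


def planck_radiance(wavelength_m, temperature_k):
    """Blackbody spectral radiance at one wavelength, W m^-2 sr^-1 m^-1."""
    exponent = H * C / (wavelength_m * KB * temperature_k)
    return 2.0 * H * C ** 2 / wavelength_m ** 5 / math.expm1(exponent)


def brightness_temperature(wavelength_m, radiance):
    """Invert Planck's law: temperature of a blackbody with this radiance."""
    ratio = 2.0 * H * C ** 2 / (wavelength_m ** 5 * radiance)
    return H * C / (wavelength_m * KB) / math.log1p(ratio)


def two_point_calibration(dn_a, radiance_a, dn_b, radiance_b):
    """Gain and offset of the line radiance = gain * DN + offset."""
    if dn_a == dn_b:
        raise ValueError("the two reference counts must differ")
    gain = (radiance_b - radiance_a) / (dn_b - dn_a)
    offset = radiance_a - gain * dn_a
    return gain, offset


def counts_to_radiance(dn, gain, offset):
    """Apply the calibration line to a raw detector count."""
    return gain * dn + offset
```

The two reference points can be any of the pairs discussed above, for example the low-temperature blackbody and the on-board blackbody, with cold space treated as a near-zero radiance reference when it is one of the pair.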

Objective Since the 1960s, precision-guided weapons have demonstrated powerful strike capabilities on the battlefield with the development of opto-electronic technology. As one of the important passive jamming measures, smoke screens play an important role in countering modern opto-electronic guided weapons. Accurately and reliably evaluating their obscuring power has become an important research topic in the field of smoke screens. Some scholars have proposed using image correlation indexes to evaluate jamming effectiveness, but most analyses are based on changes in correlation before and after smoke screen jamming and do not consider whether the evaluation indexes are applicable to the matching and tracking performance of guidance systems. Therefore, a method for selecting image correlation indexes based on simulation and field experiments is proposed, with a comprehensive analysis of the changes in image correlation and matching performance before and after smoke jamming.

Methods Normalized Mean Squared Similarity (NMSS), Normalized Product Correlation Coefficient (NProd), Pearson's Correlation Coefficient (PLCC), and Structural Similarity Index Measure (SSIM) are proposed as functions for evaluating image correlation. The factors through which smoke jamming influences image correlation are analyzed (Fig.1), and a method for selecting image correlation indexes based on simulation and field experiments is established. The simulation dataset includes 70 images featuring various typical targets such as aircraft, ships, and vehicles (Fig.2). A simulated jamming dataset is constructed based on attenuation rates, target occlusion ratios, and overall image grayscale variations (Fig.3). The smoke-emitting equipment used in the field experiments, along with the targets and basic parameters, is detailed (Tab.1).
The layout of the experimental site and the data processing methods are depicted (Fig.5-Fig.6).

Results and Discussions The NMSS, NProd, PLCC, and SSIM indexes are used to evaluate the image correlation of target areas within the simulated jamming dataset and to analyze target matching performance. NMSS and NProd are significantly affected by attenuation rates, target area occlusion, and overall image grayscale changes. The PLCC and SSIM correlation values are sensitive to changes in attenuation rate, target area occlusion, and overall image grayscale, and their matching accuracy trends align with the variations of the correlation curves. This suggests that they can be preliminarily considered suitable as indexes for evaluating smoke jamming effectiveness (Fig.4). In the field experiments, the attenuation rate, occlusion ratio, and overall image grayscale change first rise and then decline as the frame number increases. The amplitudes of the attenuation rate and occlusion ratio changes are similar, while the overall image grayscale variation is less than 30% and is caused primarily by the flame produced by combustion in the smoke-emitting equipment (Fig.8). In the field experiments, the correlation values of PLCC and SSIM are consistent with the trend in matching accuracy, making them suitable as quantitative and graded evaluation indexes for smoke jamming effectiveness (Fig.9).

Conclusions Evaluation indexes for smoke jamming effectiveness based on image correlation are proposed according to the tracking mechanism of guidance systems, making the evaluation results more realistic for combat scenarios. However, current research mainly focuses on the changes in correlation before and after smoke jamming, with few studies analyzing the applicability of indexes in conjunction with target matching performance. The reliability of these evaluation results remains to be verified.
Therefore, a method for selecting image correlation indexes based on simulation and field experiments is proposed, which can effectively evaluate the applicability of the indexes. PLCC and SSIM are more sensitive to smoke jamming effectiveness and can be used as quantitative and graded evaluation indexes.
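
Two of the four indexes can be written in a few lines, which makes their different behavior under a grayscale change easy to see. The abstract does not give formulas, so the sketch below uses the commonly cited definitions and should be read as an assumption: NProd as the normalized inner product of the raw pixel values, and PLCC as the same quantity after subtracting each image's mean. NMSS and SSIM are not reproduced here.

```python
import numpy as np


def nprod(reference, jammed):
    """Normalized product correlation of two equally sized images."""
    a = np.asarray(reference, dtype=float).ravel()
    b = np.asarray(jammed, dtype=float).ravel()
    denom = np.sqrt(np.sum(a * a) * np.sum(b * b))
    return float(np.sum(a * b) / denom) if denom > 0 else 0.0


def plcc(reference, jammed):
    """Pearson correlation coefficient of two equally sized images."""
    a = np.asarray(reference, dtype=float).ravel()
    b = np.asarray(jammed, dtype=float).ravel()
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt(np.sum(a * a) * np.sum(b * b))
    return float(np.sum(a * b) / denom) if denom > 0 else 0.0
```

Because PLCC removes the mean before correlating, a uniform brightening of the whole image (for example `jammed = 2 * reference + 10`) leaves it at 1, whereas NProd drops below 1. Loss of target detail through attenuation or occlusion lowers both.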

Objective With the continuous advancement of infrared thermal imaging detection and guidance technologies, research on infrared radiation has become pivotal for mitigating threats to ships. The exhaust plume is one of the main sources of infrared radiation, so suppressing it is critical to the overall infrared stealth of a ship. Experimental investigations are the principal means of understanding the infrared radiative characteristics of ship exhaust plumes and are important for the design and optimization of exhaust systems. In practice, experimental systems are often scaled down to reduce testing costs. Consequently, examining the similarity in infrared radiative characteristics between scaled and full-scale systems provides a foundation for applying experimental results. Existing studies mainly consider the similarity of temperature and concentration fields as prerequisites for achieving radiative similarity. However, whether optical thickness should remain the same before and after scaling is unresolved. In practical scaled-model experiments, when the exhaust gas composition is the same in the scaled and full-scale systems, the change in geometric dimensions usually changes the optical thickness. Exploring the impact of optical thickness on radiative similarity is therefore of significant academic and practical importance for validating and applying the results of scaled-model experiments.

Methods Radiative similarity is first investigated in one-dimensional media. The analytical solution for radiative intensity is derived by solving the radiative transfer equation. Single-layer media containing only high-temperature gas and multi-layer media containing high-temperature gas and surrounding low-temperature gas are considered. Similarity conditions, including identical temperature and medium concentration distributions as well as equal optical thickness, are examined.
To check the applicability of the radiative similarity rules in a practical system, the plume of a ship exhaust system is investigated. Computational fluid dynamics (CFD) simulations are conducted to obtain the temperature field and the mole fraction fields of CO2 and H2O in the plume. The reverse Monte Carlo method is then employed to compute the radiative intensity of the exhaust system. This approach enables a detailed analysis of the infrared radiative similarity of different scaled models under actual exhaust conditions.

Results and Discussions For one-dimensional media with the same temperature and gas concentration distributions and equal optical thickness, the maximum deviation between scaled and full-scale systems is 1.37%, regardless of whether the medium consists of a single layer or multiple layers of gas. When the gas concentration remains unchanged (resulting in significant variations in optical thickness), the infrared radiative intensity differs somewhat between scaled and full-scale systems. For two-layer and three-layer media that include a low-temperature surrounding medium, the deviation is less than 10%. The spectral distributions are illustrated in Fig.2 and Fig.3, and the spectrally integrated radiative intensities are summarized in Tab.1. For the plume of a ship exhaust system, the infrared images are shown in Fig.9. The radiative intensity results in Tab.3 show that if the optical thickness is the same for the scaled and full-scale systems, the largest deviation is 2.15%. If the mole fractions of the gases are kept unchanged (so that the optical thickness changes), the largest deviation is 10.47%.

Conclusions When the temperature distribution is the same, the flow fields are similar, and the optical thickness is equal, the scaled and full-scale exhaust systems exhibit high similarity in their infrared radiation characteristics.
The integrated radiative intensity of the exhaust plume area is proportional to the square of the scaling ratio. When the scaled and full-scale systems have similar temperature and flow fields but the mole fraction of the gas medium is kept constant (so that the optical thickness changes), deviations in infrared radiation intensity are observed. Nevertheless, the results from the scaled model remain valuable for predicting the infrared radiation characteristics of the full-scale system. In scaled experiments on exhaust systems, ensuring similar temperature and flow fields is crucial for maintaining radiation similarity. Although changes in optical thickness affect radiative similarity to some extent, the deviations are relatively small and do not undermine the predictive value of the scaled-model results.
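
The role of optical thickness in the one-dimensional analysis can be illustrated with the standard solution of the radiative transfer equation for a non-scattering, isothermal, homogeneous layer. The sketch below is a gray (single-wavelength) illustration with hypothetical function names and arbitrary numbers; it is not the spectral model or the reverse Monte Carlo computation used in the study, and it describes a single line of sight rather than the area-integrated intensity.

```python
import math


def optical_thickness(absorption_coeff_per_m: float, path_length_m: float) -> float:
    """Optical thickness of a homogeneous layer: tau = kappa * L."""
    return absorption_coeff_per_m * path_length_m


def layer_intensity(incoming: float, blackbody: float, tau: float) -> float:
    """Intensity leaving an isothermal, non-scattering layer.

    I_out = I_in * exp(-tau) + I_b * (1 - exp(-tau))
    """
    transmittance = math.exp(-tau)
    return incoming * transmittance + blackbody * (1.0 - transmittance)


def multilayer_intensity(layers, incoming: float = 0.0) -> float:
    """Apply layer_intensity in sequence; layers is a list of (I_b, tau) pairs."""
    intensity = incoming
    for blackbody, tau in layers:
        intensity = layer_intensity(intensity, blackbody, tau)
    return intensity
```

If a model is scaled to one tenth of the path length and the absorption coefficient is raised tenfold, `tau` and therefore the line-of-sight intensity are unchanged. If the gas composition, and hence the absorption coefficient, is kept the same, `tau` shrinks with the geometry and the intensity changes, which is the situation the abstract examines.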

Objective The welded joints of small-diameter nozzles on pressure vessels usually have complex structures and groove types. During manufacturing, defects such as porosity, lack of penetration, and lack of fusion are prone to occur; these can easily cause stress concentration, resulting in fatigue cracks, leakage, and even explosions. Structural damage detection for small-diameter nozzles is therefore of great significance for the safe service of pressure vessels. However, the techniques currently applied to weld inspection of small-diameter nozzles are constrained by the special structure of the nozzles and by high requirements for surface quality, so their effectiveness is limited. Research on detection technology suited to the complex structure, poor surface quality, and narrow space of small-diameter nozzles is of great significance for effectively evaluating their safety status. Eddy current thermography can inspect objects over a large field of view from a distance and is especially suitable for complex structures such as the small-diameter nozzles of pressure vessels.

Methods Based on the principle of eddy current thermography (Fig.1), models of a small-diameter pressure vessel nozzle and of an excitation coil were constructed using SolidWorks (Fig.4-Fig.5). Using COMSOL finite element software for coupled electromagnetic-thermal simulation, the temperature distribution around the weld defect of the small-diameter nozzle under excitation by the arc-shaped double coil, and the adaptability of the coil, are studied. A thermography method that scans along the intersecting line of the nozzle is explored in simulation; it achieves intelligent detection of defect signals along the intersecting line and lays the foundation for the industrial application of this technology.
Meanwhile, an eddy current thermography experimental system was set up in the laboratory to simulate the welding defects of small-diameter nozzles of pressure vessels (Fig.13), and the experimental results were compared with the simulation for verification. Finally, MATLAB was used to extract grayscale values and pixel positions from the thermal images, completing the quantitative evaluation of defect length. Results and Discussions The COMSOL simulation shows that, under static inspection, the temperature difference between the defective and non-defective areas of the small-diameter nozzle weld seam can reach 10 ℃ or more (Fig.8), indicating a good detection effect. In dynamic detection with the intersecting line scanning mode, defects of different sizes showed significant temperature changes, and the highest heating temperature reached 283 ℃ (Fig.12). In the laboratory eddy current thermography system, operated under non-ideal conditions, the temperature in the defect area increased by about 5 ℃ (Fig.18). These results indicate that eddy current thermography can meet the demand for defect detection of small-diameter nozzle welds in pressure vessels. The quantitative evaluation of defect length was achieved through MATLAB and related formulas. The calculated defect lengths were 7.74 mm and 3.91 mm, with errors of 3.25% and 2.25%, respectively, relative to the actual lengths. Conclusions Based on eddy current thermography, a new type of excitation coil suitable for defect detection of small-diameter nozzle welds in pressure vessels was designed.
The COMSOL simulation showed that when the excitation coil heats the defect, the temperature change in the defect area is significant, and the defect disturbs the induced eddy current, mainly at the two ends of the defect, where high-temperature zones form. The intersecting line scanning mode of thermography along a spatial curve was also implemented for detecting small-diameter nozzle defects in pressure vessels, providing preliminary verification of the effectiveness of eddy current thermography for small-diameter nozzle welds. In the laboratory experiment, the excitation coil heated the weld defects of the small-diameter nozzle significantly, and the presence of defects could be detected effectively. This indicates that the arc-shaped double coil is well suited to the weld seam of a small-diameter nozzle, and it verifies the effectiveness of eddy current thermography for inspecting such weld seams in pressure vessels. By using MATLAB to extract grayscale values and pixel positions from the thermal images, quantitative evaluation of the length of small-diameter nozzle weld defects in pressure vessels was achieved.
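
The length evaluation described above can be illustrated with a minimal Python sketch. It is not the authors' MATLAB procedure: the thresholding rule, the synthetic image, the `mm_per_pixel` calibration, and the function names are all assumptions made for illustration. The actual defect lengths of 8 mm and 4 mm are also assumed; they are not stated in the abstract, but they reproduce the quoted errors of 3.25% and 2.25%.

```python
import numpy as np


def defect_length_mm(gray, threshold, mm_per_pixel):
    """Horizontal extent of the above-threshold region, in millimetres."""
    _, cols = np.nonzero(gray > threshold)
    if cols.size == 0:
        return 0.0  # no pixel exceeds the threshold
    return float((cols.max() - cols.min() + 1) * mm_per_pixel)


def relative_error_percent(measured_mm, actual_mm):
    """Relative error of a measured length against the actual length, in percent."""
    return abs(measured_mm - actual_mm) / actual_mm * 100.0


# Synthetic 8-bit "thermal image": a hot strip 40 pixels long on a cool background.
image = np.full((20, 100), 50, dtype=np.uint8)
image[10, 30:70] = 200

# Hypothetical calibration of 0.2 mm per pixel.
length = defect_length_mm(image, threshold=128, mm_per_pixel=0.2)

# Assumed actual lengths (8 mm and 4 mm) paired with the reported calculated lengths.
errors = [relative_error_percent(7.74, 8.0), relative_error_percent(3.91, 4.0)]
print(f"synthetic defect length: {length:.2f} mm")
print("relative errors (%):", [round(e, 2) for e in errors])
```

In practice the threshold and the pixel calibration would have to come from the camera setup and the measured thermal contrast, neither of which is given here.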

Objective The aerospike is considered a relatively simple and effective technique for reducing the drag of hypersonic vehicles. It restructures the flow field and reduces drag, and it also modifies the magnitude and distribution of the skin temperature, thus influencing the radiation signatures of the blunt body. To explore the impact of different aerospikes on the infrared radiation of a blunt body, this study focuses on aerospikes equipped with conical, hemispherical, and flat-faced aerodisks. The aerodynamic force, thermal properties, and infrared radiation of the blunt body under the influence of aerospikes with these three typical aerodisks are numerically simulated. This study provides a theoretical reference for the design of low-signature aerospikes and the infrared detection of related targets. Methods The Navier-Stokes equations were solved based on the Reynolds averaging method to obtain the flow field of the plume. The flow field parameters were computed through finite-rate chemical reactions with seven components. The skin temperature was calculated based on the thin-wall approximation and the radiative equilibrium wall. Using Planck's blackbody radiation law, the infrared radiation signatures of the blunt body, including the wall occlusion effect, were predicted with the ray tracing method. Two representative cases (H=15 km and H=40 km) were selected to analyze the effect of the aerospike on the blunt body, including the aerodynamic force, thermal properties, and infrared radiation signatures. Results and Discussions The drag reduction efficiency of the aerospike at 40 km decreases by about 1%–7% compared to that at 15 km. The aerospike with the flat-faced aerodisk maintains a drag reduction efficiency above 50%, exceeding the other two structures by 5%–13% (Tab.4). The aerospike reduces the temperature near the nose of the blunt body by 35%–50%, while the heat reduction effect downstream of the blunt body is not obvious (Fig.15).
For the flat-faced aerodisk, the peak radiation intensities decrease by 25.3% and 39.4% at the side-view and front-view observation angles, respectively (Fig.16). The maximum in-band radiance suppression rate reaches 19.3%, which is 2%–16% higher than that of the other aerodisks (Tab.5-Tab.6). Conclusions Aerodisk shape directly affects the aerodynamic force, thermal properties, and infrared radiation signatures of the blunt body. The aerospike has its best thermal suppression effect at the blunt nose. The aerospike with the flat-faced aerodisk features high drag reduction efficiency and is less susceptible to environmental impacts. Among the three aerospike structures, only the one with the flat-faced aerodisk demonstrates a heat reduction effect behind the blunt nose. The infrared radiation suppression capability of the aerospike is more effective in the MWIR band than in the LWIR band at 15 km, while the reverse holds at 40 km. At different observation angles and in different bands, the aerospike with the flat-faced aerodisk mainly suppresses infrared radiation intensity, while the other types of aerospike show such suppression only at the front-view observation angle.
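
The role of Planck's law in the band comparison above can be sketched in a few lines of Python. This is a blackbody-only illustration, not the ray tracing model of the study: emissivity, wall occlusion, and atmospheric effects are ignored, and the band limits (3–5 μm for MWIR, 8–12 μm for LWIR) and the example temperatures are assumptions, since the abstract does not define them.

```python
import numpy as np

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
KB = 1.380649e-23    # Boltzmann constant, J/K


def planck_spectral_radiance(wavelength_m, temperature_k):
    """Blackbody spectral radiance in W m^-2 sr^-1 m^-1."""
    x = H * C / (wavelength_m * KB * temperature_k)
    return 2.0 * H * C**2 / wavelength_m**5 / np.expm1(x)


def in_band_radiance(temperature_k, band_um, samples=2001):
    """Blackbody radiance integrated over a band (trapezoidal rule), W m^-2 sr^-1."""
    lam = np.linspace(band_um[0], band_um[1], samples) * 1e-6
    spectral = planck_spectral_radiance(lam, temperature_k)
    return float(np.sum(0.5 * (spectral[1:] + spectral[:-1]) * np.diff(lam)))


MWIR = (3.0, 5.0)    # assumed band limits in micrometres
LWIR = (8.0, 12.0)   # assumed band limits in micrometres

for t in (300.0, 1000.0):  # illustrative wall temperatures, K
    print(f"T = {t:6.0f} K  MWIR = {in_band_radiance(t, MWIR):10.2f}  "
          f"LWIR = {in_band_radiance(t, LWIR):10.2f}  W/(m^2 sr)")
```

Because the MWIR radiance rises much more steeply with temperature than the LWIR radiance, a given reduction in skin temperature changes the two bands by different amounts, which is one reason band-dependent suppression rates are plausible.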

Objective Carbon fiber composites are widely used in aircraft fuselages, wings, engine casings, and other key structures because of their high specific strength, excellent fatigue resistance, and light weight. Compared with mechanical fastening, adhesive bonding does not damage the joined material, effectively avoids stress concentration at the joint, and shows excellent fatigue resistance. Resin on the surface of the carbon fiber composite affects the strength of the bonded joint. To ensure the strength and durability of the bonded joint, the surface resin must be removed as completely as possible without damaging the carbon fiber substrate. Laser cleaning is environmentally friendly, effective, widely applicable, and non-contact, and it has been applied in related fields. Methods Laser cleaning tests on the surface resin of carbon fiber composite materials (CFRP) were carried out with a pulsed fiber laser. The study examined the effects of laser energy density and travel speed on the cleaning outcomes. The microstructure and elemental composition of the cleaned samples were analyzed, and the contact angle after cleaning was evaluated. A bonding experiment was performed, followed by tensile testing and tensile fatigue testing of the bonded parts. The fracture morphology was then assessed to verify the impact of laser cleaning on bonding properties. Results and Discussions The results showed that resin removal initially improved as the energy density increased, but excessive energy damaged the fibers. At a lower energy density (4.77 J/cm²), the resin began to fracture. At higher energy densities (above 6.37 J/cm²), fiber burn-out and breakage became apparent. The travel speed ranged from 2 mm/s to 6 mm/s.
A higher travel speed reduces the interaction time, resulting in incomplete resin removal. A lower speed gives better cleaning but risks damaging the fibers through heat accumulation. The elemental analysis showed that effective cleaning corresponded to higher carbon content and lower oxygen content, indicating successful resin removal. As can be seen from Fig.8 (b), the surface bonding strength after S3 treatment reaches 13.02 MPa, which is higher than that of the untreated and mechanically polished samples. According to the tensile fatigue analysis, the joint withstands 144000 load cycles after laser treatment, compared with 95374 after mechanical polishing. At a laser energy density of 6.37 J/cm² and a laser travel speed of 4 mm/s, the resin is thoroughly cleaned and the carbon fiber matrix is completely exposed, which favors penetration of the adhesive during bonding. Under these conditions, the surface contact angle is lower than that of the other laser-treated surfaces and the mechanically ground surface. Analysis of the tensile stress and the cross-section shows that the laser-cleaned sample adheres better to the metal. Conclusions Laser energy density and laser travel speed both affect the removal of the resin layer on the sample surface. The amount of resin removed increases gradually as the laser energy density increases and as the laser travel speed decreases. A reasonable choice of laser energy density and travel speed gives the desired cleaning effect. When the energy density is 6.37 J/cm² and the travel speed is 4 mm/s, the surface morphology is the cleanest and the surface contact angle is the smallest, at 63.29°.
At a laser energy density of 6.37 J/cm² and a laser travel speed of 4 mm/s, the interface failure mode is a mixed failure involving the carbon fiber, adhesive layer, and aluminum alloy, with a shear strength of up to 13.02 MPa, which is more than twice that of the untreated sample, together with good fatigue performance. Processing the surface of carbon fiber composite materials with appropriate laser process parameters can significantly improve the shear strength after bonding.

Objective Differential Absorption Lidar (DIAL) can detect temperature profiles in the troposphere by measuring the variation of the oxygen (O2) absorption coefficient with altitude or temperature. In practical applications, however, the detection accuracy of the O2 absorption coefficient, and thus of the temperature profile, is influenced by various factors. Currently, the temperature detection error of O2-DIAL is 3-10 K. It is therefore necessary to establish theoretical models and systematically analyze the factors influencing the O2-DIAL technique in order to improve the temperature detection accuracy. For this purpose, this paper focuses on the effects of noise (signal-to-noise ratio, SNR), Doppler broadening of molecular scattering, specific humidity, and laser wavelength stability on the temperature retrieval results of the O2-DIAL technique. The study provides theoretical support and guidance for the design and implementation of an O2-DIAL system and lays a foundation for optimizing the retrieval algorithm of the temperature profile. Methods Based on the O2 absorption spectrum (Fig.1) and the atmospheric model (Fig.2-3), a simulation model of the O2-DIAL technique operating at 770 nm has been developed. The on-resonance and off-resonance wavelengths are selected to be 769.7958 nm (λon) and 769.8156 nm (λoff), respectively. The impacts of noise (SNR), Doppler broadening of molecular scattering, specific humidity, and laser wavelength stability on the retrieved temperature profile have been investigated based on the Monte Carlo method and the O2-DIAL model. Lidar signals with added random noise at different SNRs are used to retrieve the O2 absorption coefficient, and thus the temperature profile, with an iterative approach.
The Doppler broadening effect of molecular scattering is included in the simulation model but neglected in the retrieval process, in order to evaluate its influence on the retrieved temperature profile. In addition, the temperature retrieval results of three atmospheric models with different aerosol distributions are compared to illustrate the influence of the aerosol gradient. The temperature retrieval results under different specific humidities are also simulated to show the influence of specific humidity. Finally, the influence of laser wavelength stability (including wavelength shift and fluctuation) is investigated based on the Monte Carlo method. Results and Discussions Simulation studies show that these factors have different impacts on the retrieval accuracy of the temperature profile. As the measurement altitude increases, the SNR decreases (Fig.4) and the temperature deviation increases significantly. In addition, the larger the segmented fitting distance, the smaller the retrieval error (Fig.5). Therefore, to retrieve the temperature profile accurately, it is crucial to improve the SNR; in low-SNR situations, the segmented fitting distance can be lengthened to reduce retrieval errors. If the Doppler broadening effect is neglected during the retrieval process, the temperature retrieval error (Fig.8) may increase significantly (up to 12 K), especially at altitudes with large gradients in aerosol load. Therefore, the Doppler broadening effect should be carefully considered during the retrieval process for high-accuracy retrieval of the temperature profile. When the deviation of specific humidity is less than 0.02, the temperature retrieval deviation is less than 1 K (Fig.11). Therefore, to retrieve the temperature profile accurately, it is necessary to obtain an accurate specific humidity profile.
If the frequency shift and fluctuation of the laser are controlled within 50-100 MHz, the retrieval deviation of the temperature profile is less than 1 K (Fig.15). Conclusions According to the above discussion, noise (SNR), Doppler broadening of molecular scattering, specific humidity, and laser wavelength stability are important factors affecting the retrieval accuracy of the temperature profile. In practical measurements, if the frequency shift and fluctuation of the laser source are controlled within 50-100 MHz, the corresponding temperature retrieval deviation is less than 1 K or even negligible. The influence of specific humidity on the retrieved temperature is relatively small; in actual measurements, using specific humidity profiles from radiosondes can effectively reduce the measurement error caused by the uncertainty of specific humidity. If the influence of these two factors is reduced to a negligible level, noise and the Doppler broadening effect become the main factors influencing the retrieval accuracy of the temperature profile and should be carefully considered in practical measurements.
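
For orientation, the first-order DIAL relation behind the absorption-coefficient retrieval can be sketched in Python. This is the textbook two-wavelength formula, which assumes identical backscatter and non-O2 extinction at λon and λoff; it deliberately omits the Doppler-broadening correction, the noise model, and the iterative temperature retrieval studied in the abstract. The synthetic signals, the range grid, and the absorption value are illustrative assumptions.

```python
import numpy as np


def dial_differential_absorption(p_on, p_off, ranges_m):
    """Differential absorption coefficient (m^-1) between adjacent range gates.

    Uses alpha = 1 / (2 * dR) * d[ln(P_off / P_on)], which follows from the
    lidar equation when backscatter and non-O2 extinction are equal at both
    wavelengths.
    """
    log_ratio = np.log(p_off / p_on)
    return 0.5 * np.diff(log_ratio) / np.diff(ranges_m)


# Synthetic, noise-free signals with a constant differential absorption.
ranges = np.arange(100.0, 3100.0, 100.0)        # range gates, m
true_alpha = 1.0e-4                             # assumed value, m^-1
p_off = 1.0 / ranges**2                         # arbitrary units
p_on = p_off * np.exp(-2.0 * true_alpha * ranges)

retrieved = dial_differential_absorption(p_on, p_off, ranges)
print("max retrieval error (m^-1):", np.max(np.abs(retrieved - true_alpha)))
```

With noisy signals, the derivative amplifies noise, which is consistent with the observation above that a longer segmented fitting distance reduces the retrieval error at low SNR.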

Objective Single-photon lidar is widely used as an active detection technology with high accuracy and high temporal resolution for high-precision 3D imaging in a variety of scenes. However, weak-echo scenarios, with limited signal photon counts, and low signal-to-noise ratio scenarios, with high background noise counts, make it very challenging to solve for depth efficiently and accurately. For single-point ranging with single-photon lidar in these challenging scenarios, this paper proposes a convolutional neural network based on a soft-threshold denoising module and a self-attention mechanism. Methods A convolutional neural network based on a soft-threshold denoising module and a self-attention mechanism is proposed. Initial feature extraction and data enhancement of the photon sequence histogram are carried out by a sliding time window module matched to the pulse width of the transmitted laser pulse. A self-attention module is introduced to capture the long-range correlation of the photon sequence histogram and to improve the accuracy and robustness of the distance solution. A soft-threshold denoising module is then introduced to generate the threshold adaptively and filter out noise photons; the echo waveform of the denoised signal is output and the depth is solved. In addition, the network is trained under a multi-loss-function constraint that combines the distribution characteristics of the photon sequence histogram with the demands of the task. Ablation experiments demonstrate its effectiveness.
Compared with other histogram techniques, comprehensive experiments on simulated and real datasets show that the proposed model achieves the best quantitative results, improves the quantitative metrics by at least three times, and has better distance resolution under different signal-to-noise ratio environments. Results and Discussions The comparison of quantitative results on the simulated dataset shows that the proposed method can identify the temporal correlation features of signal photons and solve for depth with high accuracy and robustness. In the first two signal-to-noise ratio scenarios (2∶10 and 2∶20), after a small amount of anomalous data is removed, the proposed method achieves high accuracy and stability with centimeter-level resolution. In the very low signal-to-noise ratio scenario (2∶50), the extractable data features, i.e., the temporal correlation, are affected by a large number of noise photons. The anomalous clustering of many noise photons throughout the detection window decreases the accuracy of the distance solution. Even so, the proposed method achieves the best quantitative results among the compared methods, which demonstrates the effectiveness and promise of the deep learning approach in weak-echo and low signal-to-noise ratio scenarios. Conclusions For single-point ranging with single-photon lidar in these challenging scenarios, this paper proposes a convolutional neural network based on a soft-threshold denoising module and a self-attention mechanism. With its sliding time window module, self-attention module, and soft-threshold denoising module, the proposed network achieves high accuracy and stability with centimeter-level resolution in multiple signal-to-noise ratio scenarios in comparison with other methods.
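
Two of the building blocks named above, the pulse-matched sliding time window and soft thresholding, can be illustrated without a neural network. The Python sketch below is only a hand-written stand-in: in the proposed network the threshold is generated adaptively by a learned module, whereas here `tau` is a fixed, assumed value, and the histogram, window width, and function names are illustrative.

```python
import numpy as np


def sliding_window_sum(hist, width):
    """Sum photon counts over a centred window of `width` bins."""
    return np.convolve(hist, np.ones(width), mode="same")


def soft_threshold(x, tau):
    """Soft-thresholding operator: sign(x) * max(|x| - tau, 0)."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)


# Synthetic histogram: uniform background of 1 count per bin plus a 3-bin echo.
hist = np.ones(100)
hist[40:43] += np.array([3.0, 6.0, 3.0])

windowed = sliding_window_sum(hist, width=3)   # window matched to the echo width
denoised = soft_threshold(windowed, tau=5.0)   # fixed threshold (assumption)
peak_bin = int(np.argmax(denoised))

print("peak bin:", peak_bin)
print("non-zero bins after denoising:", np.flatnonzero(denoised).tolist())
```

The windowed background stays below the threshold and is set to zero, while the echo survives with reduced amplitude; the depth then follows from the time of the peak bin.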

Objective During Airborne LiDAR Bathymetry (ALB) measurement, there are problems such as difficulty in setting control points and residual calibration errors. In addition, because the accuracy of underwater measurement points is inconsistent, elevations are inconsistent between ALB survey strips. Methods First, the overlapping area between strips is extracted with the eight-neighborhood method to limit the point-surface matching range. Then, a Triangulated Irregular Network (TIN) is constructed and points of adjacent strips are matched to it to determine approximate corresponding points, establishing the relationship between strips. The Random Sample Consensus (RANSAC) algorithm is used to optimize the matching, a regional network strip adjustment model is constructed, and the optimal transformation matrix of the strips is solved. Finally, a polynomial surface is used to represent the complex terrain, and the correction value of each point is calculated and applied according to the point-surface matching distance and the least-squares solution of the polynomial coefficients. Results and Discussions To verify the effectiveness of the proposed method, experiments were carried out using data collected by the ALB system Mapper 20KU, and the data accuracy before and after adjustment was evaluated against land RTK points and shipborne single-beam bathymetry points. After the ALB strip adjustment, the land and underwater measurement deviations decreased by 8.8 cm and 7.5 cm, respectively, and the bathymetric accuracy of the processed data was 24.0 cm. Conclusions This paper takes into account the data characteristics of the ALB system and the limitations of measurement operations.
Considering that the ALB point cloud data in coastal zones lacks obvious features, and the strip data is approximately planar with sparse point clouds, the eight-neighborhood overlap region extraction method is introduced to improve the data matching efficiency and avoid local optimal solutions caused by iteration. In view of the elevation discrepancies between strips that affect the representation of real terrain data, the regional network adjustment with point-to-surface matching, combined with the RANSAC iterative method, effectively improves the internal consistency accuracy of ALB. To address the uncertainty of external consistency accuracy caused by the lack of control point constraints in regional network adjustment, the intersection area of the adjusted survey lines and inspection lines is used as a control, and the Bursa model is employed for correction. Considering the inconsistent accuracy of ALB point clouds above and below the water, a nonlinear adjustment model is utilized to weaken the distortion within the strips.
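
The final correction step, a least-squares polynomial surface fitted to point-surface matching distances, can be sketched in Python as follows. The second-order polynomial, the normalized coordinates, the synthetic data, and the function names are assumptions for illustration; the abstract does not state the polynomial order or how the coordinates are scaled.

```python
import numpy as np


def design_matrix(x, y):
    """Columns of a second-order polynomial surface: 1, x, y, x^2, xy, y^2."""
    return np.column_stack([np.ones_like(x), x, y, x**2, x * y, y**2])


def fit_correction_surface(x, y, dz):
    """Least-squares polynomial coefficients for the elevation differences dz."""
    coeffs, *_ = np.linalg.lstsq(design_matrix(x, y), dz, rcond=None)
    return coeffs


def correction_at(coeffs, x, y):
    """Evaluate the fitted correction surface at the given points."""
    return design_matrix(x, y) @ coeffs


# Synthetic point-surface distances on coordinates normalized to [-1, 1].
rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, 200)
y = rng.uniform(-1.0, 1.0, 200)
true_coeffs = np.array([0.05, 0.02, -0.03, 0.01, 0.0, -0.01])  # metres, assumed
dz = design_matrix(x, y) @ true_coeffs

coeffs = fit_correction_surface(x, y, dz)
residual = dz - correction_at(coeffs, x, y)
print("largest residual after correction (m):", np.max(np.abs(residual)))
```

Normalizing the planimetric coordinates before fitting keeps the design matrix well conditioned; with raw projected coordinates in metres, the higher-order columns can otherwise dominate numerically.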

Objective A solar-pumped laser is a device that directly converts sunlight into laser light, with promising applications in fields such as space laser communication, space laser wireless energy transmission, chemical energy cycling, and material processing. A solar-pumped laser typically consists of three major components: a sunlight concentration device, a pumping cavity, and a laser gain medium. Existing research has advanced the design methods for the size and pumping structure of the gain medium in solar-pumped lasers. However, the intrinsic correlation between the size of the laser and its output power has not yet been fully explored. When lasers are designed for specific output power requirements, sizes are often selected empirically, which frequently leads to mismatches between laser size and desired output power. For example, lasers may be excessively bulky without a corresponding increase in output power, or they may be moderate in size but inefficient. Because a systematic theoretical framework to guide the size design of lasers is lacking, a model of a solar-pumped laser incorporating Fresnel lenses and a liquid optical waveguide structure is established on the basis of existing research. This model is used to explore the correlation between laser output power and laser size, providing a scientific basis for designing more efficient and compact solar-pumped lasers. Methods A simulation model of a solar-pumped solid-state laser with a liquid optical waveguide structure and Fresnel lenses is constructed, with materials assigned according to the structural characteristics. Simulations are conducted for Fresnel lenses with diameters of 400, 600, 800, 1000, 1200, 1500, and 1800 mm.
Optical ray tracing software is used to obtain the optimal sizes of the quartz tube and metal conical cavity for each Fresnel lens diameter. The optimal length range of the crystal rod is then calculated theoretically, and the precise optimal length is determined using the laser simulation software ASLD. Finally, based on the optimal sizes of the quartz tube, metal conical cavity, and crystal rod for the different Fresnel lens diameters, the theoretical laser output power is calculated using ASLD. Results and Discussions The solar-pumped laser system is simulated using optical ray tracing software, yielding fitting curves that relate the optimal dimensions of the quartz tube and the optimal input aperture of the metal conical cavity to the diameter of the Fresnel lens (Fig.3). The optimal length of the crystal rod as a function of the Fresnel lens diameter is determined using ASLD (Fig.5). Based on the optimal dimensions of each optical component, further simulations give the fitting curve between the laser output power and the Fresnel lens diameter (Fig.6). The results show that when the relative aperture of the Fresnel lens is 1, the optimal dimensions of the crystal rod, quartz tube, and metal conical cavity increase as the Fresnel lens diameter increases. This indicates that as the lens diameter increases, the optical flux incident on the system grows, so the dimensions of the optical components must be adjusted to maintain optimal optical performance. Furthermore, the relative aperture of the Fresnel lenses is varied, and simulations are conducted to calculate the relationship between laser output power and Fresnel lens diameter.
The results indicate that the laser output power increases with the relative aperture of the Fresnel lenses (Fig.7). Conclusions A model of a solar-pumped laser based on Fresnel lenses and a liquid optical waveguide structure is constructed to investigate the relationship between the sizes of the quartz tube, metal conical cavity, crystal rod, and Fresnel lens. Through simulations with Fresnel lenses of various sizes, a fitting curve is obtained that relates output power to the diameter of the Fresnel lens. When the relative aperture of the Fresnel lens is 1, the optimal sizes of the crystal rod, quartz tube, and metal conical cavity increase with the diameter of the Fresnel lens. Based on these optimal sizes, the simulated laser output power increases with the diameter of the Fresnel lens, following an upward-opening parabolic trend. This paper theoretically establishes the relationship between the size of the Fresnel lens and the output laser power, providing guidance for the design of solar-pumped lasers.

Objective Lasers have good directionality, high brightness, good monochromaticity, and strong coherence, which makes them significantly advantageous for ranging. Satellite laser ranging (SLR) is the most accurate satellite ranging technology, and the kilohertz picosecond laser is the characteristic light source of fourth-generation SLR. In kilohertz picosecond lasers, regenerative amplifiers are commonly used to amplify the mode-locked pulses, typically with Nd:YAG as the gain crystal, which has a gain bandwidth of 0.15 nm. In addition, the narrower the bandwidth of the narrowband filter used for satellite ranging, the smaller the influence of ambient light and the higher the signal-to-noise ratio. The narrowband filters used by several observatories we cooperate with have a bandwidth of 0.2 nm, so the laser spectrum width needs to be less than 0.2 nm. To match the gain bandwidth of the regenerative amplifier with the bandwidth of the narrowband filter, an output spectrum width of 0.15 nm was required of the oscillator. Semiconductor saturable absorber mirrors (SESAM) offer stable performance, a simple structure, a low mode-locking threshold, and the ability to achieve all-fiber integration. They have been widely used in mode-locked fiber lasers and have been commercialized. Most commercial picosecond lasers are based on SESAM passive mode locking. However, SESAM products on the market offer few options, and batch-to-batch consistency is poor. There may be unpredictable differences between the physical parameters of a SESAM and the design requirements, resulting in uncontrollable oscillator parameters. Therefore, this study improved the SESAM passive mode-locking model to guide the design of oscillator parameters. Methods By using the Split-Step Fourier Transform (SSFT) to solve the Ginzburg-Landau equation, a simulation model is established, and SESAM parameter requirements are proposed.
The simulation results meet the design requirements (Fig.1), and dual-peak, triple-peak, and quadruple-peak mode locking can be achieved by adjusting the cavity length (Fig.3). When this model is used to simulate oscillators with large-bandwidth chirped fiber Bragg gratings (CFBG) as output couplers, the results are distorted (Fig.9). The chirp dispersion introduced by the CFBG was therefore added to the Ginzburg-Landau equation. Results and Discussions The SESAM was prepared by low-pressure metal-organic chemical vapor deposition (LP-MOCVD), and its measured parameters were a modulation depth of 11.5%, a non-saturable loss of 7.6%, a saturation fluence of 39.3 μJ/cm², a relaxation time of 5.5 ps, and a damage threshold of 21.8 mJ. With a pump power of 150 mW, the linear cavity oscillator delivers laser output with an average power of 30.00 mW, a repetition rate of 44.57 MHz, a peak wavelength of 1064.07 nm, a spectrum width of 0.14 nm, and a pulse width of 31.50 ps (Fig.6), meeting the design requirements. It can also achieve dual-peak, triple-peak, and quadruple-peak mode locking by adjusting the cavity length. The chirp dispersion introduced by the CFBG was added to the Ginzburg-Landau equation. The improved model was used to guide parameter design and, after fiber pre-amplification, gave picosecond laser output with an average power of 55.70 mW, a repetition rate of 26.32 MHz, a peak wavelength of 1030.15 nm, a spectrum width of 0.58 nm, and a pulse width of 7.62 ps. Conclusions For SLR applications, we used the SSFT to solve the Ginzburg-Landau equation, established a simulation model, and designed a set of SESAM parameters. The parameters of the linear cavity oscillator built on this SESAM meet the design requirements, and its application in SLR systems will improve the ranging signal-to-noise ratio and achieve better SLR accuracy.
To resolve the distortion in the simulation results for oscillators that use a large-bandwidth CFBG as the output coupler, the chirp dispersion introduced by the CFBG was added to the Ginzburg-Landau equation, and an equation was established to describe the grating reflection spectrum. The improved model was used to guide parameter design, and the experimental results were consistent with the simulation results, verifying the validity of the simulation model.
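
The reported oscillator figures can be cross-checked with two standard relations: pulse energy equals average power divided by repetition rate, and the time-bandwidth product equals the spectral width in frequency multiplied by the pulse duration. The Python sketch below assumes that the quoted spectrum and pulse widths are full widths at half maximum, which the abstract does not state; the function names are illustrative.

```python
C = 299_792_458.0  # speed of light, m/s


def pulse_energy_nj(avg_power_mw, rep_rate_mhz):
    """Pulse energy in nJ from average power (mW) and repetition rate (MHz)."""
    return avg_power_mw * 1e-3 / (rep_rate_mhz * 1e6) * 1e9


def time_bandwidth_product(center_nm, width_nm, pulse_ps):
    """Product of spectral width (Hz) and pulse duration (s)."""
    delta_nu = C * width_nm * 1e-9 / (center_nm * 1e-9) ** 2
    return delta_nu * pulse_ps * 1e-12


# Values quoted in the abstract for the two oscillators.
e_1064 = pulse_energy_nj(30.00, 44.57)
e_1030 = pulse_energy_nj(55.70, 26.32)
tbp_1064 = time_bandwidth_product(1064.07, 0.14, 31.50)
tbp_1030 = time_bandwidth_product(1030.15, 0.58, 7.62)

print(f"1064 nm oscillator: {e_1064:.3f} nJ per pulse, TBP = {tbp_1064:.2f}")
print(f"1030 nm oscillator: {e_1030:.3f} nJ per pulse, TBP = {tbp_1030:.2f}")
```

For reference, transform-limited Gaussian and sech² pulses have time-bandwidth products of about 0.441 and 0.315, so values well above these would suggest chirped pulses under the FWHM assumption.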

Objective Optical imaging technology is widely used in military and civilian fields. As application requirements grow, users want more imaging detail, a larger imaging range, and target detection at longer distances. This requires an optical system with higher resolution and a wider field. For an imaging optical system, a wide field and high resolution are difficult to achieve at the same time: obtaining a large imaging field greatly reduces the imaging resolution, whereas achieving a higher imaging resolution requires reducing the imaging field. At present, existing approaches to wide-field high-resolution imaging detection rely on multiple apertures or multiple detectors; such systems are structurally complex and bulky, require image post-processing and stitching, and have difficulty achieving real-time detection. The traditional single-aperture, single-detector optical imaging system, however, is limited by the aperture size of the optical system, off-axis aberration, and other factors, so it has difficulty meeting the requirements of large-field, high-resolution detection. A wide field means that the optical system has larger off-axis aberrations, and optical aberrations directly affect the imaging resolution. Methods To solve this problem, a new method for constructing wide-field high-resolution systems is proposed. In the initial design stage, the inverse telephoto structure is used as the starting point. The front and rear lenses of the structure are each replaced with a more complex lens group so that they bear different aberrations. By tracing the chief ray and the marginal ray, the Seidel aberration expressions of the system can be obtained. The Seidel aberrations are functions of the radii of curvature, lens spacing, air spacing, and refractive indices of the system.
When the function is solved, specific constraints are added so that the initial structure is well suited to improving the resolution in the subsequent optimization. The specific constraint is to control the angle of the incident light at the stop, so that the angular difference between rays of different apertures in each field stays within a set range. The constraints inherent to the system are also taken into account, and the optimal solution of the function under these constraints gives the initial structure of the optical system. To improve the resolution, the initial structure is further optimized. Its Seidel aberrations have been corrected and balanced, but a large residual wave aberration remains, and the MTF curve cannot approach the diffraction limit in the middle- and high-frequency bands, where high-order aberrations are the main influencing factor. The wave aberration of the system is decomposed into Zernike polynomials, and constraints are set to correct aberrations of specific orders, so that the PSF becomes more concentrated and the MTF improves. Results and Discussions An optical system composed of 9 lenses is designed. During optimization, two kinds of high-order aberration constraints, based on high-order spherical aberration and high-order astigmatism, are added to the original first-order aberration optimization function. As the number of optimization iterations increases, the optimization function gradually stabilizes. After optimization, the absolute values of the aberrations decrease greatly, achieving the goal of controlling and balancing the high-order aberrations. The final system has a field angle of 70 degrees, and its MTF curves are close to the diffraction limit and better than 0.2 at 550 lp/mm, indicating high resolution and good imaging quality. 
The focal length of the system is 24.04 mm, the total length is 208.8 mm, the entrance pupil diameter is 12.02 mm, the maximum optical diameter is D=37.04 mm, and the system contains four high-order even aspheres. Conclusions Aberrations are the main factors affecting imaging resolution, so the study of aberrations is the key to achieving high resolution over a wide field. Based on Seidel aberration theory and control of the angle of the incident light at the stop, an initial structure design for a wide-field, high-resolution imaging optical system is proposed. On this basis, a high-order aberration correction algorithm based on Zernike polynomials is proposed. By constraining and correcting selected high-order aberrations, an efficient path to higher resolution is found quickly, and the high-resolution imaging design of a wide-field optical system is realized. The method effectively addresses the low imaging resolution and difficult optimization of wide-field optical systems and offers a useful reference for their design. However, the method considers only some high-order aberrations, and the decomposition of aberrations is not complete. Therefore, further research is still necessary.
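
As a rough plausibility check on the reported MTF, the sketch below evaluates the diffraction-limited MTF of an aberration-free circular pupil from the stated focal length and entrance pupil diameter. The design wavelength is not given in the abstract; 550 nm is assumed here purely for illustration, and the function name is hypothetical.

```python
import math


def diffraction_limited_mtf(freq_lp_mm, wavelength_mm, f_number):
    """Incoherent MTF of an aberration-free circular pupil at one spatial frequency."""
    cutoff = 1.0 / (wavelength_mm * f_number)  # incoherent cutoff frequency, lp/mm
    nu = freq_lp_mm / cutoff                   # normalized spatial frequency
    if nu >= 1.0:
        return 0.0
    return (2.0 / math.pi) * (math.acos(nu) - nu * math.sqrt(1.0 - nu * nu))


# Values stated in the abstract.
focal_length_mm = 24.04
entrance_pupil_mm = 12.02
f_number = focal_length_mm / entrance_pupil_mm

# ASSUMPTION: the abstract does not state the design wavelength; 550 nm is illustrative.
wavelength_mm = 0.55e-3

cutoff_lp_mm = 1.0 / (wavelength_mm * f_number)
limit_at_550 = diffraction_limited_mtf(550.0, wavelength_mm, f_number)

print(f"F-number: {f_number:.2f}")
print(f"Cutoff frequency: {cutoff_lp_mm:.0f} lp/mm")
print(f"Diffraction-limited MTF at 550 lp/mm: {limit_at_550:.3f}")
```

Under the assumed wavelength, the F/2 system has a cutoff near 909 lp/mm and a diffraction-limited MTF of roughly 0.28 at 550 lp/mm, so a design value above 0.2 at that frequency is consistent with a system close to the diffraction limit.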

Objective The laser beam splitter divides a laser beam into multiple beams with controllable energy and direction, thereby significantly enhancing the efficiency and flexibility of optical systems. It finds extensive applications in fields such as laser communication, laser processing, laser scanning, and medical treatment. Existing laser beam splitters primarily include Diffractive Optical Element (DOE) beam splitters, microlens array beam splitters, and free-form lens beam splitters. While these can be applied to different beam-splitting scenarios, they have limitations in processing tolerance and working distance. Therefore, we propose a design method for a microprism array-based laser beam splitter that offers a novel approach to developing long-distance beam splitters with arbitrary energy distribution. Methods This paper proposes a laser beam splitter based on a microprism array, investigates the design principle of the beam splitter, establishes a functional relationship model between the structural parameters and optical properties of the microprism unit, develops an algorithm for designing the microprism, and analyzes fabrication and detection schemes for microprism arrays with different structural characteristics. Using a 1×3 microprism array beam splitter with an interval angle of 0.15° and an energy distribution ratio of 0.365∶0.225∶0.365 as an example, we conducted modeling and geometric ray tracing simulations for the beam splitter, fabricated its target structure precisely using lithography, built a test light path, and used spot image processing algorithms to characterize its optical performance. Results and Discussions The output light spot of the 1×3 beam splitter is shown in Fig.15(b), where the energy utilization rate exceeds 91%. 
The energy utilization rate can be further improved by optimizing the preparation process and reducing the occlusion caused by invalid areas. Due to the alignment error in the experiment, there is an energy distribution error of less than 6%, which can be alleviated by using a high-precision alignment device for assembly. The deflection angle error is less than 0.04%, which can be reduced by improving the hardware facilities. The spot shape exhibits excellent consistency in long-distance application scenarios where the light source can be considered a point light source. Overall, laser beam splitters based on microprism arrays perform well in achieving high energy utilization and arbitrary distribution of sub-spots. Conclusions The design methodology of the microprism array laser beam splitter proposed in this paper primarily focuses on solving for the incident energy distribution and the microprism array parameters. By analyzing various existing micro- and nano-processing technologies, we propose optimization and selection methods for microprism structures with different parameters. We conducted a design and fabrication experiment on a 1×3 array beam splitter. The performance of the microprism beam splitter was evaluated through microstructure characterization and optical testing. The results demonstrate that the prepared beam splitter achieves an energy efficiency of approximately 91%, with a spot energy distribution error below 6% and deflection angle error less than 0.4%. Compared to diffraction-based beam splitters (DOE), the beam splitter exhibits significantly improved energy efficiency and uniformity.
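
The relationship between a microprism's structural parameter and its beam deflection can be illustrated with a single refracting wedge. The sketch below is not the authors' model: it assumes light enters the flat face at normal incidence and leaves through a tilted exit face into air, and it assumes a refractive index of 1.5, since the abstract does not name the material. The function names are hypothetical.

```python
import math


def wedge_angle_deg(target_deflection_deg, n):
    """Wedge angle giving the target deflection, from n*sin(a) = sin(a + d)."""
    d = math.radians(target_deflection_deg)
    # Expanding sin(a + d) and solving for a gives tan(a) = sin(d) / (n - cos(d)).
    return math.degrees(math.atan2(math.sin(d), n - math.cos(d)))


def exit_deflection_deg(wedge_deg, n):
    """Forward check: deflection produced by a wedge, using Snell's law at the exit face."""
    a = math.radians(wedge_deg)
    return math.degrees(math.asin(n * math.sin(a))) - wedge_deg


# ASSUMPTION: refractive index of the microprism material (not stated in the abstract).
n_assumed = 1.5
interval_deg = 0.15  # interval angle of the 1x3 splitter, from the abstract

for target in (-interval_deg, 0.0, interval_deg):
    alpha = wedge_angle_deg(target, n_assumed)
    check = exit_deflection_deg(alpha, n_assumed)
    print(f"target {target:+.2f} deg -> wedge {alpha:+.4f} deg (check {check:+.4f} deg)")
```

For such small angles the result is close to the thin-prism approximation, in which the deflection equals (n - 1) times the wedge angle, so a 0.15° deflection corresponds to a wedge of about 0.3° at the assumed index.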

Objective In the precision manufacturing industry, the measurement of the thickness and tolerance of small parts is a crucial step. Common measurement methods mainly include contact and non-contact schemes. Contact measurement may cause damage to the measured parts due to contact with the surface, and the contact area can generate stress on the measured parts, potentially causing minor displacements that lead to inaccurate measurement results. Non-contact measurement offers the advantages of not needing to touch the measured parts, fast measurement speed, and flexible integration. Recent advancements in optical technologies have further enhanced the precision and reliability of non-contact methods, making them increasingly viable for industrial applications. Spectral confocal measurement systems are one type of non-contact measurement; therefore, designing a compact, high-precision, low-cost spectral confocal system is essential. Methods By analyzing the components of spectral confocal systems, it was determined that the system to be designed mainly consists of two parts: the dispersive objective lens and the spectrometer, with defined design specifications. The complex and cumbersome entire spectral confocal system was divided into three subsystems using the concept of modular design. This approach not only simplifies manufacturing and assembly but also improves system maintainability and scalability. Results and Discussions A hybrid diffractive/refractive spectral confocal system with a wavelength range of 400-800 nm based on APC (Angled Physical Contact) port type Y-fibers was designed, with a working distance of 10 mm and a working range of 2.1 mm. The design of each subsystem reaches or approaches the diffraction limit, and the lens shapes and tolerances are in line with manufacturing processes. 
The theoretical measurement resolution of the entire system is 350 nm. Conclusions A hybrid diffractive/refractive spectral confocal measurement system with a working distance of 10 mm and a working range of 2.1 mm was designed. Compared with other spectral confocal measurement systems, this design applies the concept of modular design to break the complex and cumbersome ranging system into modules. Furthermore, the hybrid diffractive/refractive design effectively corrects chromatic aberrations while maintaining a compact structure, making it suitable for integration into automated production lines. The dispersive objective lens and the spectrometer share the same collimation module, which can be installed in conjunction with other modules, and the structure is unified. Future work will focus on experimental validation and further optimization for industrial deployment. In the optical system design, each subsystem has good manufacturability and the relative cost of lens materials is low. Third-order and linear polynomials were used to fit the chromatic focal shift curves and position curves of the dispersive objective lens and the spectrometer, with minimum residual coefficients of 0.9996 and 0.9999.
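
The linear fit of the spectrometer position curve can be sketched as an ordinary least-squares fit followed by a goodness-of-fit measure. The example below reads the reported coefficients as coefficients of determination, which is an assumption, and the wavelength and position samples are invented for illustration only; they are not data from the paper.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares line y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def r_squared(ys, fitted):
    """Coefficient of determination of fitted values against observations."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - f) ** 2 for y, f in zip(ys, fitted))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot


# HYPOTHETICAL samples: wavelength (nm) against spot position on the detector (mm).
wavelengths_nm = [400.0, 500.0, 600.0, 700.0, 800.0]
positions_mm = [0.00, 2.52, 5.01, 7.49, 10.00]

slope, intercept = linear_fit(wavelengths_nm, positions_mm)
fitted = [slope * w + intercept for w in wavelengths_nm]
r2 = r_squared(positions_mm, fitted)

print(f"slope = {slope:.5f} mm/nm, intercept = {intercept:.3f} mm, R^2 = {r2:.6f}")
```

A value close to 1 indicates that the position curve is nearly linear in wavelength, which is what makes a simple calibration from detector position to wavelength practical.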

Objective With the advancement of technology and the upgrading of information-based equipment, laser target imaging echo simulators have become essential tools for simulating target characteristics in complex scenarios and evaluating system performance. These simulators provide high-precision echo characteristic reconstruction, offering reliable data support for the testing and optimization of information-based equipment. The accuracy of echo signals and the ability to acquire depth information directly influence the simulator’s capability to replicate real-world scenarios, thereby determining its effectiveness in supporting key technologies such as target recognition, tracking, and ranging. However, traditional target simulators, constrained by single-light-source imaging methods, struggle to effectively obtain target depth information, resulting in insufficient simulation accuracy in complex scenarios and limiting the accurate representation of target spatial structure characteristics. To address these limitations, a novel optical system for a laser target imaging echo simulator is proposed. Methods A laser target imaging echo simulator optical system with a wavelength of 1064 nm has been designed to achieve precise simulation of dynamic target scenes. The system utilizes a high-uniformity 3×5 fiber array illumination scheme (Fig.9). It also integrates silicon-based liquid crystal and a relay system to construct a regionalized multi-wavefront superimposition imaging technique (Fig.12). Additionally, the projection system features a dual field-of-view optical system, allowing flexible switching between 2° and 3° field of view angles (Fig.15). 
Based on this, the stray light characteristics and energy uniformity of the optical system are analyzed, and potential accuracy and imaging errors between the theoretical design and actual processing of the optical system are evaluated to ensure the system’s imaging quality and stability. Results and Discussions The illumination system can accommodate up to 15 channels, with each channel achieving a uniformity greater than 91% (Fig.10). The radius of the diffraction spot for each subchannel of the relay system is smaller than the Airy disk radius, while the optical system exhibits a distortion value of 0.7112% and a field curvature of <0.1 mm (Fig.13-Fig.14). The Modulation Transfer Function (MTF) exceeds 0.4 at 26 lp/mm, and the energy concentration in the imaging optical system is high, with S.D≥0.9 (Fig.17-Fig.18). The polarization filter and stray light elimination stop reduce the system's stray light to 0.3%, improving suppression by a factor of 10.56 (Fig.23). The average energy uniformity at the system exit pupil remains consistent with the illumination system (Tab.5). Combining the tolerance analysis results, the system offers high imaging quality and good stability (Tab.8). Conclusions The study provides a detailed description of an optical system for a laser target imaging echo simulator. Comprehensive analysis shows that the system features high illumination uniformity and excellent imaging quality, with the diffraction spot radius smaller than the Airy disk radius, approaching the diffraction limit. Additionally, the optical system exhibits low distortion and minimal field curvature. The projection system adopts a dual field-of-view optical system, ensuring adaptability to the aperture requirements of various devices under test. The system's tolerance distribution is well-balanced, meeting current manufacturing precision requirements and offering good assembly tolerance. 
The designed optical system delivers high-quality target image information, providing essential design guidance for accurately simulating training scenarios and target signals.
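
The comparison between the spot radius and the Airy disk radius can be made concrete with the standard expression r = 1.22 λ N for a circular aperture. The sketch below uses the stated 1064 nm wavelength; the F-number and the spot radius are not given in the abstract, so the values used here are hypothetical placeholders, as are the function names.

```python
def airy_radius_um(wavelength_um, f_number):
    """Radius of the first dark ring of the Airy pattern, in micrometres."""
    return 1.22 * wavelength_um * f_number


def within_airy_disk(spot_radius_um, wavelength_um, f_number):
    """True when a spot radius does not exceed the Airy disk radius."""
    return spot_radius_um <= airy_radius_um(wavelength_um, f_number)


wavelength_um = 1.064  # 1064 nm, from the abstract

# ASSUMPTIONS: the abstract gives neither the relay F-number nor the spot radius.
assumed_f_number = 4.0
assumed_spot_radius_um = 4.0

radius = airy_radius_um(wavelength_um, assumed_f_number)
print(f"Airy disk radius at F/{assumed_f_number:g}: {radius:.3f} um")
print("Spot inside Airy disk:",
      within_airy_disk(assumed_spot_radius_um, wavelength_um, assumed_f_number))
```

With the real F-number and ray-traced spot radii of each subchannel substituted for the placeholders, the same comparison reproduces the diffraction-limit criterion quoted in the results.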