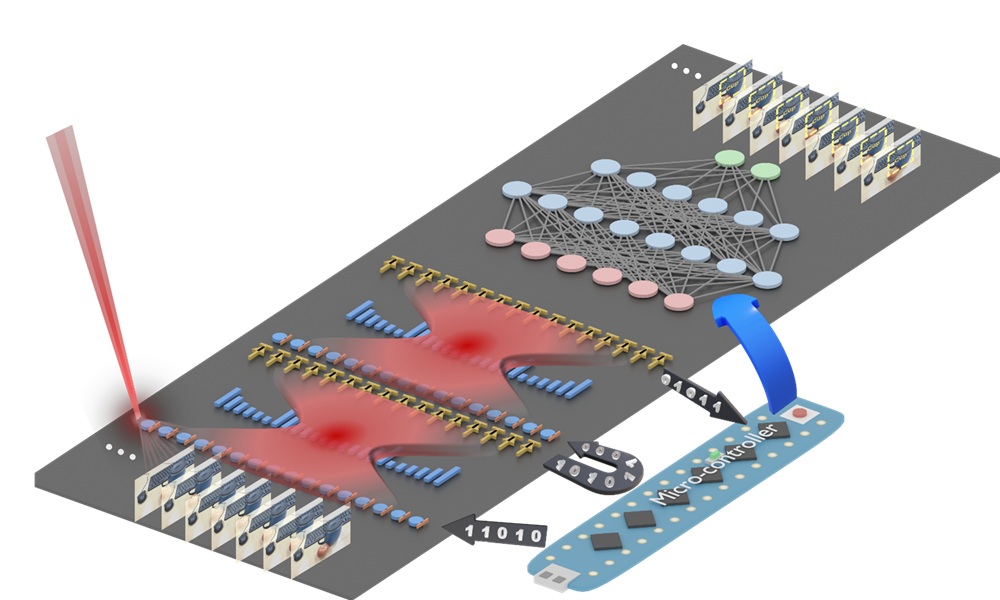

A newly developed silicon photonic chip turns light-encoded data into instant convolution results. Image credit: H. Yang (University of Florida)

Artificial intelligence (AI) systems are increasingly central to technology, powering everything from facial recognition to language translation. But as AI models grow more complex, they consume vast amounts of electricity—posing challenges for energy efficiency and sustainability. A new chip developed by researchers at the University of Florida could help address this issue by using light, rather than just electricity, to perform one of AI’s most power-hungry tasks. Their research is reported in Advanced Photonics.

The chip is designed to carry out convolution operations, a core function in machine learning that enables AI systems to detect patterns in images, video, and text. These operations typically require significant computing power. By integrating optical components directly onto a silicon chip, the researchers have created a system that performs convolutions using laser light and microscopic lenses—dramatically reducing energy consumption and speeding up processing.
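To make "convolution" concrete: a convolutional layer slides a small grid of weights (the kernel) across the input and, at every position, multiplies the overlapping values and adds them up. Each output value costs one multiply-add per kernel weight, which is why convolutions become so power-hungry at the scale of modern AI models. The pure-Python sketch below only illustrates that arithmetic. It is not code from the study, and the toy 4×5 image and the two-column edge-detecting kernel are hypothetical. Like most deep-learning libraries, it does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding) and return the map of weighted sums.

    The kernel is not flipped, matching the usual deep-learning convention,
    so this is technically a cross-correlation.
    """
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            # One output value = kh * kw multiply-accumulate operations.
            acc = 0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


# Hypothetical 4x5 toy image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]
# Hypothetical vertical-edge detector: responds where brightness rises left to right.
kernel = [
    [-1, 1],
    [-1, 1],
]
print(conv2d_valid(image, kernel))  # [[0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0]]
```

The nonzero column marks where the dark-to-bright edge sits, which is the kind of pattern detection the paragraph above describes.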

“Performing a key machine learning computation at near zero energy is a leap forward for future AI systems,” said study leader Volker J. Sorger, the Rhines Endowed Professor in Semiconductor Photonics at the University of Florida. “This is critical to keep scaling up AI capabilities in years to come.”

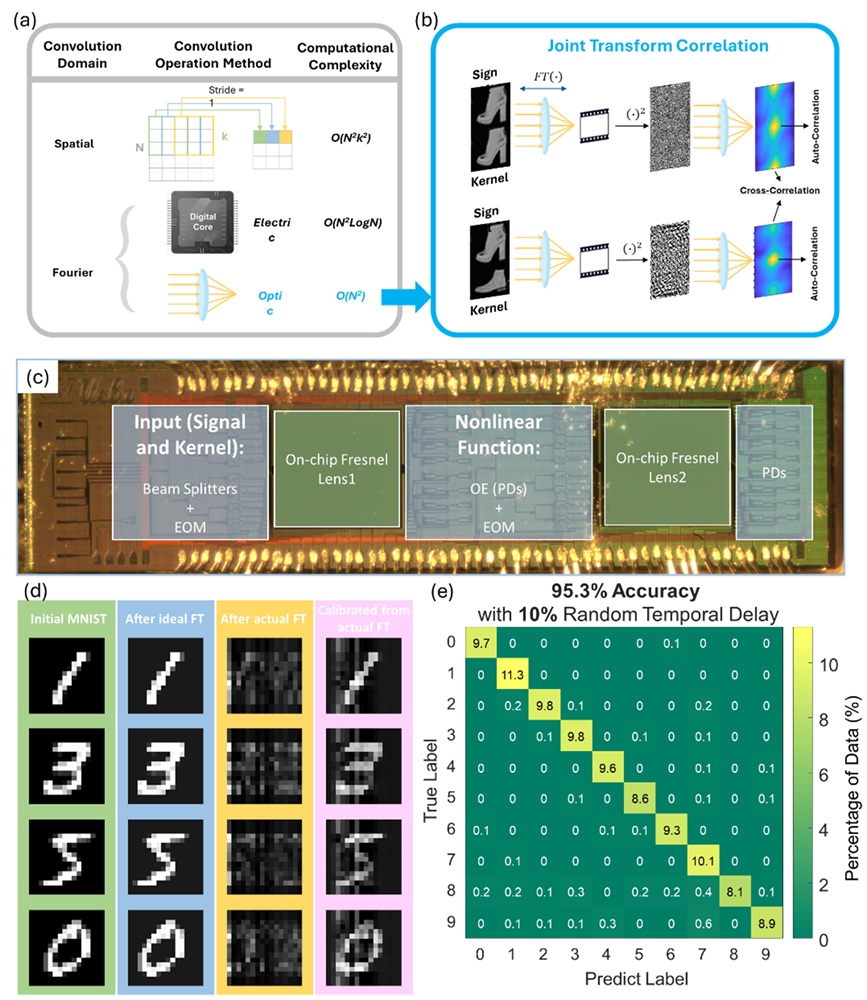

In tests, the prototype chip classified handwritten digits with about 98 percent accuracy, comparable to traditional electronic chips. The system uses two sets of miniature Fresnel lenses—flat, ultrathin versions of the lenses found in lighthouses—fabricated using standard semiconductor manufacturing techniques. These lenses are narrower than a human hair and are etched directly onto the chip.

To perform a convolution, machine learning data is first converted into laser light on the chip. The light passes through the Fresnel lenses, which optically perform a Fourier transform, the mathematical operation at the heart of this convolution method. The result is then converted back into a digital signal to complete the AI task.
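Why a Fourier transform helps is captured by the convolution theorem: transforming two signals, multiplying them element by element, and transforming back gives the same result as the sliding-window sum. A lens can produce the Fourier transform of a light field in a single pass with no arithmetic, whereas an electronic processor has to compute it. The sketch below checks the theorem numerically with a naive discrete Fourier transform. It models the mathematics, not the chip, and the function names and 8-sample inputs are illustrative assumptions.

```python
import cmath


def dft(x):
    """Naive discrete Fourier transform, O(n^2) arithmetic."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    """Naive inverse discrete Fourier transform."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def circular_convolve(x, h):
    """Direct circular convolution: y[t] = sum_s x[s] * h[(t - s) mod n]."""
    n = len(x)
    return [sum(x[s] * h[(t - s) % n] for s in range(n)) for t in range(n)]


def fourier_convolve(x, h):
    """Convolution theorem: transform, multiply element-wise, transform back."""
    X, H = dft(x), dft(h)
    y = idft([a * b for a, b in zip(X, H)])
    # Imaginary parts are only floating-point noise for real inputs.
    return [round(v.real, 9) for v in y]


x = [1, 2, 3, 4, 0, 0, 0, 0]  # zero-padded so circular equals ordinary convolution
h = [1, 1, 0, 0, 0, 0, 0, 0]  # hypothetical two-tap kernel
print(circular_convolve(x, h))  # [1, 3, 5, 7, 4, 0, 0, 0]
print(fourier_convolve(x, h))   # same values, as floats
```

In electronics, the Fourier route only pays off when a fast Fourier transform replaces the naive version shown here; on the chip, the lens supplies the transform itself.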

pJTC convolutional techniques. (a) Comparison of spatial convolution, Fourier electrical convolution, and Fourier optical convolution in terms of computational complexity. (b) Schematic of a joint transform correlator (JTC), showing how it performs Fourier optical convolution: it optically generates the Fourier transform of the combined input Signal and Kernel, detects the intensity pattern, and produces the auto- and cross-correlations between Signal and Kernel. (c) Optical microscope image of the fabricated silicon photonic (SiPh) chiplet from AIM Photonics. (d) Comparison of the initial MNIST image (green line), the output after an ideal Fourier transform (blue line), the output after the actual on-chip lens Fourier transform (yellow line), and the calibrated output obtained from the actual on-chip lens after applying phase correction (pink line). (e) Confusion matrix showing the classification accuracy for 10,000 MNIST test images with a 10 percent random temporal delay introduced in the input electrical signal, achieving a total accuracy of 95.3 percent. Credit: H. Yang et al., doi 10.1117/1.AP.7.5.056007
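The joint transform correlator in panel (b) can be imitated numerically. In the simplified 1D sketch below, which is not the authors' code, the signal and kernel sit side by side in one input array, and a discrete Fourier transform stands in for the first lens. Only the intensity |U|² is kept, because a photodetector records brightness but not phase. An inverse transform then stands in for the step that produces the correlation plane, and whether the chip's second lens set performs exactly this step is an assumption here. The spacing `offset`, the array length `n`, and all function names are hypothetical choices made so that the auto- and cross-correlation terms do not overlap.

```python
import cmath


def dft(x):
    """Naive DFT; stands in for the first lens in this sketch."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    """Naive inverse DFT; stands in for the second transform in this sketch."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def jtc_correlate(signal, kernel, offset, n):
    """Simulate a 1D joint transform correlator.

    Returns the CNN-style sliding dot products sum_j signal[i + j] * kernel[j]
    for every shift i where the kernel fully overlaps the signal.
    """
    ls, lk = len(signal), len(kernel)
    # Conservative spacing so the correlation terms land in separate regions.
    assert lk <= ls and offset >= 2 * ls - 1 and n >= 2 * (offset + ls)
    u = [0.0] * n
    u[:ls] = signal                    # signal on the left of the input plane
    u[offset:offset + lk] = kernel     # kernel placed `offset` samples away
    intensity = [abs(v) ** 2 for v in dft(u)]   # detector keeps |U|^2 only
    plane = [v.real for v in idft(intensity)]   # correlation plane
    # The signal-kernel cross term we want sits at lag -offset (mod n).
    return [round(plane[(n - offset + i) % n], 6) for i in range(ls - lk + 1)]


def sliding_dot(signal, kernel):
    """Electronic reference: the same sliding dot products in the spatial domain."""
    lk = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(lk))
            for i in range(len(signal) - lk + 1)]


signal = [1, 2, 3, 4, 5, 6]
kernel = [1, 2, 1]  # hypothetical smoothing kernel
print(sliding_dot(signal, kernel))            # [8, 12, 16, 20]
print(jtc_correlate(signal, kernel, 12, 48))  # same values, as floats
```

Because the detected intensity of a real input is symmetric, a forward transform in the second step would give the same plane scaled by `n`. The signal-kernel cross term also appears mirrored at lag `+offset`. The sketch reads the copy at `-offset` because it lines up directly with the sliding dot products a convolutional layer computes, and feeding in a flipped kernel would turn the same output into a true convolution.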

“This is the first time anyone has put this type of optical computation on a chip and applied it to an AI neural network,” said Hangbo Yang, a research associate professor in Sorger’s group at UF and co-author of the study.

The team also demonstrated that the chip could process multiple data streams simultaneously by using lasers of different colors—a technique known as wavelength multiplexing. “We can have multiple wavelengths, or colors, of light passing through the lens at the same time,” Yang said. “That’s a key advantage of photonics.”

The research was conducted in collaboration with the Florida Semiconductor Institute, UCLA, and George Washington University. Sorger noted that chip manufacturers such as NVIDIA already use optical elements in some parts of their AI systems, which could make it easier to integrate this new technology.

“In the near future, chip-based optics will become a key part of every AI chip we use daily,” Sorger said. “And optical AI computing is next.”


For details, see the original Gold Open Access article by H. Yang et al., “Near-energy-free photonic Fourier transformation for convolution operation acceleration,” Adv. Photon. 7(5), 056007 (2025), doi: 10.1117/1.AP.7.5.056007.