ObjectiveThe infrared search and track (IRST) system has characteristics such as good concealment, long detection range, and strong anti-jamming capabilities, and is widely used in airborne, shipborne, vehicle-mounted, and spaceborne systems. The operating range is an important indicator of the detection capability of IRST systems. Accurately analyzing the operating range is significant for improving design quality and evaluating comprehensive performance. Traditional operating range models for IRST systems generally do not consider the spectral radiation characteristics of both the target and the background. Many studies have made improvements in this area, but they have not simultaneously considered both the efficiency of target radiation received by the pixels and the short-term random errors in system tracking. In response to the current research gap, this study establishes an optimization model for the operating range of a staring IRST system and presents a novel method for solving this range. The model accounts for both the number of pixels occupied by the target’s image on the focal plane and the efficiency with which these pixels receive the target’s radiation. During the solution process, the theoretically calculated target spectral radiation intensity and background spectral radiation brightness are normalized and treated as weights, which are then multiplied by the actual measurement results. This approach balances the advantages of both theoretical calculations and actual measurements, thus improving calculation accuracy. We hope that this model can serve as a reference for analyzing factors related to the operating range of IRST systems and assist in the optimization and performance evaluation of such systems.MethodsWe improve the existing IRST system operating range model by using the number of pixels n occupied by the target to handle the system’s short-term random tracking errors, and by using the pulse visibility factor (PVF) to address the efficiency of pixel response due to target radiation. During the solution process of the operating range model, a normalization method is proposed. First, the target radiation intensity and background radiation brightness, obtained from theoretical calculations, are separately normalized to obtain the normalized target radiation intensity and normalized background radiation brightness. These values are then used as weights and multiplied by the actual measurement results to derive new spectral radiation characteristics for the target and background. The integral part is then fitted to obtain a hidden function equation that is only related to distance, which is then solved. Finally, the optimized operating range model and normalization method are used for example calculations and experimental verification.Results and DiscussionsAccording to the optimized operating range model and solution method proposed in this study, the operating range is calculated to be 15.4461 km, with a relative error of 2.97%. In contrast, if the unoptimized solving method is used, the operating range is calculated to be 17.5512 km, with a relative error of 17.01%. Therefore, the calculation results based on the optimized operating range model and solution method in this study are closer to the measured values. Without changing other conditions, the relationship between n and the entrance pupil diameter and operating range (Fig. 7) shows that the operating range decreases as n increases, but the rate of decrease slows down; it increases as the entrance pupil diameter increases, though the rate of increase also slows down. Therefore, optimizing the IRST system tracking device can reduce the short-term random errors in system tracking, lower the n value, and thereby increase the operating range. The operating range of the IRST system can also be increased by appropriately enlarging the optical system’s aperture.ConclusionsWe establish an optimization model for the operating range of a gaze-type IRST system. The operating range model corrects the traditional derivation method, which assumes a uniform distribution of the energy of the speckle pattern formed by point targets on the focal plane, while also considering the speckle effect caused by short-term random tracking errors, thereby improving the accuracy of the calculations. Finally, the operating range model is applied to calculate instances of aircraft targets, and the effect of the number of pixels n occupied by the target on the focal plane, as well as the optical system aperture, on the operating range, is analyzed. The calculation results indicate that the operating range decreases as n increases, but the rate of decrease slows down; the operating range increases as the entrance pupil diameter increases, though the rate of increase also slows down. Finally, the reliability of the operating range model is verified through field experiments. The research indicates that both the number of pixels occupied by the target on the focal plane and the efficiency of these pixels in receiving target radiation are important factors affecting the operating range of IRST systems and should be considered in calculations. The theoretically calculated target spectral radiation intensity and background spectral radiation brightness fully take into account the spectral radiation characteristics of the target and background. However, there may be some deviation between theoretical calculations and actual measurements. While actual measurements are highly accurate, they often reflect the overall radiation characteristics and cannot reveal the detailed spectral radiation characteristics. Normalizing the theoretical calculation values as weights for the actual measurement values can yield new spectral radiation characteristics for the target and background. This method combines the advantages of both theoretical calculations and actual measurements, which makes it more aligned with real-world situations. Additionally, optimizing the IRST system tracking device or appropriately increasing the optical system aperture can enhance the operating range of the IRST system. For large-aperture optical systems, further increasing the system aperture will have a diminishing effect on the improvement of the operating range.

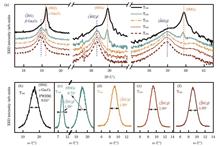

ObjectiveAmong the five polymorphs of Ga2O3, β?Ga2O3 is notable for its superior thermal stability and a breakdown field strength of approximately 8 MV/cm. In addition, β?Ga2O3 demonstrates high selectivity and exceptional photoresponse characteristics towards solar-blind ultraviolet light, making it an ideal candidate for solar-blind photodetectors (SBPDs). In contrast, ε?Ga2O3, the second most stable polymorph, exhibits ferroelectric properties and a significant spontaneous polarization coefficient of 24.44 μC/cm2. However, a major challenge is the lack of mature commercial metal-organic chemical vapor deposition (MOCVD) equipment specifically designed for Ga2O3, as well as the absence of suitable epitaxial growth substrates. In this paper, we utilize a self-developed MOCVD system, which demonstrates stability and reliability, enabling significant advancements in Ga2O3 growth. This leads to high system stability and moderate-scale production capabilities. Recent studies employ c-plane sapphire as the substrate for heteroepitaxial growth of Ga2O3, with controlled growth conditions such as temperature, gas flow rates, and pressure to yield various Ga2O3 polymorphs. Lower growth temperatures favor the formation of hexagonal ε?Ga2O3, while temperatures above 500 ℃ promote a gradual transition to β-Ga2O3. In this paper, we investigate the influence of growth temperature on the properties of Ga2O3 thin films and SBPDs at temperatures below 700 ℃, providing a feasible approach for the heteroepitaxial MOCVD growth of Ga2O3 thin films. We aim to overcome existing limitations by offering practical insights into optimizing growth conditions for enhanced material quality and device performance.MethodsDuring epitaxial growth, the MOCVD system’s reactor pressure is maintained at 50 mBar while the temperature is gradually increased to the target level. Upon reaching the target temperature, oxygen (O2) and triethylgallium (TEGa) are introduced as O and Ga sources onto a (002) c-plane sapphire substrate with a molar flow ratio of O to Ga of 1600 for a growth duration of 1 hour. Five sets of Ga2O3 thin films are grown at temperatures of 500, 530, 580, 630, and 680 ℃, labeled as T500, T530, T580, T630, and T680, respectively. Post-growth characterization is performed using X-ray diffraction (XRD), scanning electron microscopy (SEM), X-ray photoelectron spectroscopy (XPS), and ultraviolet-visible spectrophotometry to analyze the structural, surface, elemental composition, and optical properties of the Ga2O3 thin films. Metal hard masks are used for pattern transfer, and Ag interdigitated electrodes are sputtered onto the films to fabricate planar metal-semiconductor-metal (MSM) SBPDs. The current-voltage characteristics of the detectors are measured under dark conditions and illumination at 254 nm and 365 nm wavelengths (both with a light power density of 600 μW/cm2) using a Keithley 2450 SourceMeter. The transient photoresponse characteristics are evaluated by recording current-time (I-t) curves while periodically turning the 254 nm light source on and off at 60-second intervals.Results and DiscussionsXRD analysis reveals that T500 predominantly consists of ε-Ga2O3, T530 is a mixture of ε and β phases, while T580, T630, and T680 are composed entirely of the β?Ga2O3 [Fig. 1(a)]. This indicates that temperature is a critical factor influencing the crystalline phase of Ga2O3, with a transition from ε to β phase occurring around 530 ℃. The XRC full width at half maximum (FWHM) results for all films align with those typically reported for heteroepitaxial growth [Figs. 1(b)?(f)]. All Ga2O3 thin films exhibit high crystalline quality, as evidenced by a sharp absorption edge near 255 nm in their transmission spectra [Fig. 3(a)]. SEM images show that film surfaces become smoother at lower temperatures but increasingly rougher as the growth temperature rises [Figs. 2(b)?(f)], likely due to the stronger crystal orientation of β-Ga2O3 at higher temperatures. The bandgap, derived from the absorption spectrum using the Tauc plot, increases with temperature [Figs. 3(b) and (c)], attributed to a reduction in oxygen vacancy defects and improved stoichiometry relative to bulk Ga2O3. XPS results show that the binding energies of Ga 2p1/2, Ga 2p3/2, and Ga 3d increase and then decrease with temperature [Fig. 4(b)], indicating that lower binding energies suggest more Ga—O bond formation. A similar trend is observed in the relative concentration of oxygen vacancies [Fig. 4(c)], suggesting that the T500 and T680 films exhibit enhanced structural stability. The fabricated SBPDs demonstrate excellent overall performance, particularly the device based on T680, which achieves a light-dark current ratio (PDCR) of 3.75×107, a responsivity (R) of 0.5 A/W, a detectivity (D) of 3.11×1012 Jones, an external quantum efficiency (EQE) of 242.4%, and an R254 nm/R365 nm of 1.35×106. In transient photoresponse testing, reduced oxygen vacancy defect concentrations result in diminished photoconductivity effects and enhanced response speeds. For the SBPD based on T680, the response time constants are τr1=0.067 s, τr2=0.366 s, τd1=0.008 s, and τd2=0.063 s [Fig. 5(b)].ConclusionsUsing the MOCVD system, pure ε-phase Ga2O3 can be grown heteroepitaxially at 500 ℃. At 530 ℃, the onset of β?phase Ga2O3 occurs, and further heating to 580 ℃ results in the formation of pure β?phase Ga2O3. Simultaneously, the bandgap of Ga2O3 increases from 4.83 eV to 5.00 eV with rising temperature. All Ga2O3 thin films exhibit high crystalline quality, as evidenced by a sharp absorption edge near 255 nm in their transmission spectra. In addition, XPS analysis indicates that the crystal structures of the pure ε?phase Ga2O3 film (T500) and the highest-temperature-grown pure β?phase Ga2O3 film (T680) are relatively more stable. The fabricated MSM-type SBPDs demonstrate rapid photoresponse characteristics, along with good repeatability and stability. Specifically, under a bias voltage of 5 V and a light power density of 600 μW/cm2, the SBPD based on T680 achieves a PDCR of 3.75×107, a D of 3.11×1012 Jones, an EQE of 242.4%, and an R254 nm/R365 nm of 1.35×106, indicating excellent overall performance. In transient photoresponse tests conducted at a light power density of 600 μW/cm2, the rise time and fall time are measured to be 0.067 and 0.008 s, respectively.

ObjectiveIn the upcoming sixth-generation (6G) mobile communication systems, new intelligent applications and services have emerged, such as autonomous driving and machine-type communication. Currently, the separate design of sensing and communication cannot meet the simultaneous requirements of high data transmission rates and high-precision sensing. Therefore, the integrated sensing and communication (ISAC) system has become one of the key technologies for 6G. ISAC technology combines communication and sensing functions, resolving the spectrum conflict between these systems and effectively alleviating the scarcity of spectrum resources. In recent years, the development and application of terahertz (THz) technology have promoted the implementation of ISAC. The abundant spectrum resources in the THz frequency band meet the growing demand for high-speed communication, and the short wavelength of THz waves enables fine spatial resolution, making them suitable for applications in imaging, localization, and environmental sensing. THz ISAC technology can provide real-time precise mapping and localization capabilities while maintaining high-speed communication links, presenting great potential for intelligent applications. Currently, THz signals are mainly generated by electronic and optical methods. The communication rate of THz signals generated via electronic technology is constrained by the microwave signal prior to the frequency multiplication, and its performance is limited by the bandwidth of electronic devices. In contrast, THz signals generated by optical methods directly leverage the large bandwidth advantage of THz communication, effectively overcoming the bandwidth bottleneck of electronic devices. This significantly simplifies the system structure and offers advantages such as high sensitivity and low power consumption. Orthogonal frequency-division multiplex (OFDM) is increasingly utilized in ISAC systems due to its excellent anti-multipath performance and high delay-Doppler resolution. By integrating minimum-shift keying (MSK) and linear frequency modulation (LFM) technologies, the fuzzy function can be further optimized, yielding better sensing performance. Therefore, we propose an optically loaded THz ISAC system based on OFDM-MSK-LFM, which provides a reference model for the fusion of communication and sensing functions in 6G.MethodsThe ISAC signal is generated in MATLAB, where a certain number of pseudo-random binary sequences are mapped to MSK signals. Subsequently, series-parallel conversion and inverse Fourier transform are applied to perform OFDM modulation. The signal is then modulated onto an LFM carrier. The ISAC signal is converted into an analog signal and drives the I/Q modulator, which is modulated onto the continuous-wave optical source generated by an external cavity laser. The optical signal is coupled with another local oscillator light source, and finally, a THz signal is generated by the photodetector. A portion of the terahertz signal is sent to the communication receiver, where the communication signal is recovered after de-chirping, Fourier transform, and channel equalization. The remaining signal is reflected by the target and received by the sensing receiver. The range and velocity of the target are recovered after estimating the sensing channel from both the echo signal and the original signal.Results and DiscussionsThe proposed ISAC system achieves both high-rate communication and high-precision sensing. The fuzzy function of the ISAC signal is thumbtack-shaped, indicating excellent sensing performance (Fig. 8). The peak sidelobe ratio increases with the LFM bandwidth (Fig. 9), enhancing the anti-interference ability of ISAC. In the close-range static dual-target simulation test, the ranging error is kept within 5 mm (Fig. 10). In the high-speed dynamic dual-target test, relatively high precision for both range and velocity measurements is achieved (Fig. 11). After transmitting the 10 GBaud ISAC signal over a 25 m wireless link, the bit error rate reaches the threshold for hard decision forward error correction [Fig. 13(b)]. However, the bit error rate of the ISAC signal deteriorates as the LFM bandwidth ratio increases [Figs. 13(c) and (d)]. The partial sequence transmission algorithm can suppress the peak-to-average power ratio (PAPR) by up to 3.30 dB. As NFFT increases, the system’s PAPR also increases, while the theoretical maximum measurement error decreases. A balance is achieved when NFFT is 256, with simulated distance and speed errors of 0.4 cm and 10.83 m/s, respectively. The complementary cumulative distribution function for PAPR of >8 dB is 0.123.ConclusionsIn this paper, we propose an optical carrier ISAC system based on OFDM-MSK-LFM. Compared to traditional OFDM, MSK and LFM suppress Doppler sidelobes, concentrating energy towards the main lobe, thus improving the anti-interference performance and high-speed target detection capabilities of the ISAC signal. Due to the nonlinear effects of LFM, the bit error rate (BER) of the integrated signal exacerbates as the LFM bandwidth ratio increases. The adopted multi-carrier modulation enhances the overall sensing performance of the system but also increases the PAPR. The PTS algorithm, while adding some computational complexity, effectively suppresses the PAPR. The proposed system allows for flexible adjustment of the ISAC signal’s bandwidth and NFFT according to system requirements, enabling optimization of the detection range, sensing resolution, communication rate, and PAPR. This provides a valuable reference for the future development of 6G ISAC technology.

ObjectiveWith the rapid growth in the number of low-Earth orbit (LEO) satellites, especially with the increasing application of satellite optical communication networks, efficiently and flexibly managing and optimizing the communication links and network traffic between satellites has become an urgent problem. Traditional satellite network routing and load balancing methods often perform poorly in the face of high dynamics, complex topologies, and unbalanced satellite-ground traffic distribution, which leads to issues such as link congestion, traffic bottlenecks, and resource utilization inequality. This reduces satellite resource utilization and causes network load imbalance. Therefore, it is particularly important to study an adaptive load balancing algorithm that can effectively handle network load fluctuations, reduce the blocking rate, and improve throughput.MethodsWe address the problem from the perspective of satellite-ground traffic balancing, considering the onboard and satellite-ground traffic distribution characteristics of LEO satellite optical networks. Using the polar Walker constellation model, a LEO satellite optical network scenario is constructed, and two load balancing algorithms are proposed: the redundancy-aware load balancing (RALB) algorithm and the detour multipath routing algorithm for satellite-ground traffic (DMRA-SGT). First, the RALB algorithm optimizes the Dijkstra path search logic by utilizing multiple equivalent paths in inter-satellite links. It removes overloaded links, prioritizes lightly loaded ones, and effectively alleviates network bottlenecks, thereby improving resource utilization efficiency. Second, the DMRA-SGT algorithm combines the congestion distribution index and uses traffic packet transmission to redirect some traffic from the default route to detour paths located farther from the core satellite region, which loads are lighter. The routing tables for both detour paths and shortest paths are updated synchronously to avoid temporary loops. To quickly respond to data forwarding and congestion paths, a fast distributed routing protocol (FDR) is designed as the default routing scheme for the satellite-ground traffic detour algorithm. This protocol requires fewer onboard resources for calculating the shortest and long-distance detour paths, thus achieving effective traffic distribution and mitigating cascading congestion.Results and DiscussionsOn MATLAB R2018b and satellite simulation software STK 11.6, we build a polar-orbiting satellite constellation, including 288 satellites (constellation parameters: 288/12/24, 1400 km) and 16 ground stations, and use OPNET Modeler 14.5 for simulation verification. To measure the degree of network congestion under different algorithms, we use the traffic blocking ratio (TBR) as an indicator. Simulation results show that the DMRA-SGT algorithm performs the best among the four algorithms, while the Dijkstra algorithm performs the worst. When the total traffic volume is lower than 5.7 Tbit/min, the TBR of Dijkstra’s algorithm is zero. However, when the traffic volume exceeds this threshold, the network becomes congested, and the TBR increases rapidly. When the system reaches the maximum of 9.9 Tbit/min, the TBR reaches 26.28%. This is because Dijkstra’s algorithm needs to store the entire network information, resulting in excessive memory consumption in complex networks. In contrast, the RALB algorithm begins to show congestion when the total traffic volume reaches 6 Tbit/min and the TBR peaks of 21.71% at 9.9 Tbit/min. Although the RALB algorithm considers link loads to avoid heavily loaded links, its effect is limited. The SGC-LB algorithm works by directing congestion traffic to the nearest gateway, but as the link load is not taken into account, the network begins to experience congestion at 6.6 Tbit/min, with the final TBR peak of 2.08%. However, the congestion in the DMRA-SGT algorithm only appears when the total traffic volume reaches 6.6 Tbit/min, and when it reaches 9.9 Tbit/min, the peak value of TBR is only 0.49%. This is 25.79 percentage points, 21.22 percentage points, and 1.59 percentage points lower than those of Dijkstra, RALB, and SGC-LB, respectively. Regarding the evaluation of the average packet loss rate and total throughput, the simulation results show that the DMRA-SGT algorithm performs the best in terms of packet loss rate. Especially when the total traffic is 10 Tbit/min, the packet loss rate of DMRA-SGT is 3.17%, which is much lower than that of the other algorithms. The reason for this is that DMRA-SGT reduces congestion and packet loss by bypassing routes to avoid high-traffic areas. SGC-LB and RALB also reduce the packet loss rate by choosing lower load paths, although not as effectively as DMRA-SGT. The Dijkstra algorithm, however, selects the congested links, which results in the highest packet loss rate, reaching 14.12%. In terms of total throughput, the DMRA-SGT algorithm also demonstrates the best performance. When the total traffic is 9.9 Tbit/min, the total throughput of DMRA-SGT is 9821.6 Gbit, which is 18.2% higher than that of Dijkstra’s algorithm. Although the increase in total throughput is not as significant as that of the blocking rate and packet loss rate, it still highlights the advantages of DMRA-SGT under high loads. In summary, the DMRA-SGT algorithm outperforms the Dijkstra, RALB, and SGC-LB algorithms in terms of traffic blocking rate, packet loss rate, and total throughput, which demonstrates superior network performance.ConclusionsIn this paper, we consider the effect of traffic load on satellite optical networks and propose a satellite-based redundancy-aware load balancing algorithm. This algorithm utilizes on-board resource redundancy to reduce network congestion and improve network throughput. We then introduce the DMRA-SGT algorithm, which combines the shortest path with long-range detour paths. Based on network state information, the algorithm computes a congestion distribution index and selects long detour paths using a detour multipath algorithm according to a fast distributed routing protocol. Through simulation, we compare and analyze the performance of the DMRA-SGT algorithm under high-throughput traffic, which shows that it outperforms other algorithms in terms of traffic blocking rate, packet loss rate, and total throughput. Specifically, compared to the widely used Dijkstra algorithm, the blocking rate is reduced by 25.79 percentage points, the packet loss rate is reduced by 10.95 percentage points, and there is a significant improvement in total throughput.

ObjectiveAs society and the economy continue to advance, there is an increasing demand for real-time monitoring of temperature and strain in fields such as oil extraction, aerospace, and renewable energy. Traditional methods, which rely on thermocouples and resistance strain gauges, often suffer from low accuracy, environmental sensitivity, and limitations in measurement (typically only at a single point). Fiber optic sensors are widely used in engineering due to their corrosion resistance, immunity to electromagnetic interference, and ease of integration into networks. However, traditional fiber optic sensors tend to be bulky, which limits their ability to meet the increasing demand for smaller, lighter designs in modern applications. In addition, addressing temperature-strain cross-sensitivity in these sensors often requires complex fabrication processes, and the specialized structures involved may not be practical for real-world use. To address these challenges, we propose a dual-parameter sensing structure for temperature and strain, combining indium selenide and fiber Bragg grating (FBG) technologies.MethodsThe D-shaped fiber in this structure is prepared by side-polishing a standard single-mode optical fiber. Due to the small distance between the polishing surface and the core, along with the flatness of the polishing area, a large evanescent field is generated. This facilitates the integration of the two-dimensional material, indium selenide, enabling efficient fluorescence excitation and collection. Using a femtosecond laser, the FBG structure is inscribed point by point in the polished area of the D-shaped fiber. The mechanically stripped indium selenide material is then transferred onto the polished surface of the fiber, which is inscribed with the FBG, via a dry transfer technique. When the fiber core is excited by 532 nm light, the evanescent field of the D-shaped fiber stimulates indium selenide to emit fluorescence at approximately 1000 nm. The central wavelength of the FBG is primarily influenced by changes in the effective refractive index and the periodic spacing of the grating. As the temperature changes, thermal expansion and thermal optical effects alter both the refractive index and the period of the grating, resulting in a shift in the central wavelength. Similarly, when strain is applied to the FBG, both the periodicity of the grating and the effective refractive index in the grating region are modified due to the elastic-optic effect, causing a shift in the central wavelength. By establishing the relationship between environmental parameters and the Bragg wavelength drift, it is possible to monitor a variety of environmental conditions. Moreover, experimental results indicate that as temperature increases, the bandgap energy of the fluorescent material decreases, leading to a linear redshift in the fluorescence peak. This redshift is much larger than the Debye temperature of the material in the temperature range of interest, allowing for real-time temperature monitoring through the linear relationship between the fluorescence peak wavelength and temperature. Importantly, the side-polished D-shaped fiber disrupts the structural symmetry of a conventional cylindrical fiber, but the axial strain within the mechanical strength range (0?5000 με) is insufficient to significantly alter the bandgap of indium selenide. Thus, it can be concluded that the fluorescence peak of indium selenide in this composite structure is primarily sensitive to temperature.Results and DiscussionsIn the temperature test, the sensor is subjected to a temperature range from room temperature to 350 ℃. As the temperature increases, the reflection peak of the D-shaped FBG exhibits a redshift. The temperature sensitivity of the D-shaped FBG is found to be 13.5 pm/℃ [Fig. 9(a)]. For strain testing, the D-shaped FBG is subjected to strains ranging from 0 to 3000 με. As strain increases, the reflection peak also redshifts, with the strain sensitivity measured at 1.28 pm/με [Fig. 9(b)]. The fluorescence temperature response is tested between room temperature and 150 ℃. As the temperature increases, the fluorescence peak wavelength redshifts and the peak width broadens. The temperature sensitivity of the fluorescence signal is 207.4 pm/℃ [Fig. 11(a)]. Next, the effect of axial strain on the fluorescence wavelength of indium selenide is examined within the range of 0 to 3000 με. The results show that the fluorescence wavelength of the composite structure remains largely unaffected by strain, demonstrating that its axial strain sensitivity is negligible (approximately 0 pm/με) within this range [Fig. 11(b)]. Based on these findings, a sensor matrix can be developed, enabling dual-parameter monitoring of temperature and strain. By tracking both the Bragg reflection wavelength shift of the D-shaped FBG and the fluorescence wavelength drift of indium selenide, the system can effectively distinguish and measure temperature and strain independently, resolving the issue of cross-coupling between the two parameters.ConclusionsIn this paper, we present a composite structure combining indium selenide and a D-shaped FBG, fabricated using PMDS-assisted transfer and femtosecond laser point-by-point inscription. This structure leverages the fluorescence properties of the two-dimensional material and the temperature and strain response characteristics of the D-shaped FBG, enabling simultaneous detection of both parameters. Experimental results demonstrate that the composite structure achieves a temperature sensitivity of 207.4 pm/℃ and a strain sensitivity of 1.28 pm/με. Future work will focus on the packaging and material protection of the composite structure to expand the temperature detection range of the indium selenide fluorescence signal. The proposed composite structure effectively resolves the cross-sensitivity issues between temperature and strain commonly encountered in traditional optical fiber sensors. Compared to conventional devices, it offers superior sensing performance and shows promising potential for practical engineering applications.

ObjectiveUnmanned aerial vehicle (UAV) radio frequency (RF) communication is vulnerable to attacks such as interference and eavesdropping due to its open channel and broadcast nature, which poses significant threats to communication reliability and security. The introduction of artificial interference can conceal communication activity by confusing malicious users on the legitimate communication process, thus ensuring communication security. However, both artificial interference and legitimate signals operate within the RF band, which can reduce the communication rate of legitimate signals. Free-space optical (FSO) communication offers high transmission rates, resistance to RF interference, and greater security against eavesdropping. However, it requires precise alignment and is sensitive to channel conditions. We propose a UAV covert communication strategy based on a hybrid RF/FSO link, where FSO is used to compensate for RF communication to mitigate the self-interference effects of artificial interference on RF signals.MethodsUnder the condition that the hostile user implements communication monitoring, we focus on the UAV relay communication scenario, specifically by adding a relay UAV to assist the reconnaissance UAV in realizing information transmission to the ground receiving station. Communication between the UAVs is realized through RF and FSO links, with the relay UAV generating artificial interference to enable covert communication. The maximum ratio combination (MRC) scheme is adopted to process the received RF/FSO signals. The information is transmitted to the receiving station over the RF link using the decode-and-forward protocol. In this paper, we first derive the minimum total detection error probability of the hostile user, then construct an optimization model with covert communication requirements, transmit power, and deployment location as constraints, and link transmission rate as the objective. An iterative solution algorithm based on block coordinate descent (BCD) and successive convex approximation (SCA) is proposed to obtain a suboptimal solution through the joint optimization of UAV relay deployment location, interference, and RF/FSO transmit power.Results and DiscussionsWith the increase in the covert communication requirement, the transmit power of both RF and FSO signals shows a decreasing trend. However, as the maximum transmit power of the UAV increases, the transmit power of RF and FSO signals shows an increasing trend. The trend in the transmit power of RF signals is mainly affected by the transmit power of artificial interference, and it has a linear relationship with both variables. The transmit power of FSO signals varies with the transmit power of RF signals, which exhibits a linear relationship between the square of FSO transmit power and RF transmit power (Figs. 2 and 3). As the covert communication requirement rises, the transmit power of artificial interference decreases, though its rate of change increases. To satisfy heightened covert communication needs, both the transmit power of artificial interference and RF signals can be appropriately reduced. When the maximum transmit power increases linearly, artificial jamming power also rises linearly, as the fixed demand for covert communication requires only the jamming and RF signals to adjust linearly (Fig. 4). The proposed strategy leverages the fast transmission rate of the FSO signal, which maintains around 4 Mbit/s under various conditions, while the baseline scheme struggles to maintain approximately 0.03 Mbit/s due to interference on the RF link. Furthermore, increasing the maximum transmit power enhances the FSO signal’s transmission rate by allowing a corresponding rise in RF signal power. In contrast, the baseline scheme’s transmission rate remains largely constant due to the linear increase in both interference and RF transmit power (Figs. 5 and 6).ConclusionsWe propose a covert communication strategy for UAV communication based on a hybrid RF/FSO link to achieve both covertness and effectiveness. We construct an optimization model with constraints on covert communication requirements, transmit power, and deployment position while aiming to maximize the link transmission rate. An iterative solution algorithm based on BCD and SCA is introduced for the joint optimization of deployment location, artificial interference, and RF/FSO power. Simulation results indicate that a transmission rate of 4 Mbit/s can be achieved with the hybrid RF/FSO link, which significantly outperforms schemes that rely solely on RF links. Furthermore, increasing the UAV’s maximum transmit power can further enhance the transmission rate. The proposed algorithm reformulates the problem as a convex optimization task, which allows for polynomial-time solutions and convergence to approximate optimal solutions, in contrast to traditional heuristic methods. Future research will focus on the flexible allocation of RF/FSO power under complex channel conditions and on extending RF/FSO cooperative covert communication to multi-user or network scenarios, thereby improving the technology’s adaptability to various communication environments.

ObjectiveVisible light communication (VLC) has emerged as a promising solution for indoor communication due to its high efficiency and low power consumption. However, in practical applications, the uneven distribution of received power in indoor three-dimensional space significantly affects both the system’s communication quality and the fairness of received signals across different heights of receiving planes. This power unevenness can degrade communication performance and negatively affect user experience. Consequently, optimizing the layout of indoor light sources to enhance the uniformity of received power has become a key research area in VLC. We introduce an optimization method for optical power uniformity based on an improved Harris hawk optimization (IHHO) algorithm. The proposed algorithm integrates strategies such as the best point set, adaptive t-distribution perturbation mutation, and dynamic selection probability, combined with a nonlinear escape energy factor to enhance the global search capability during the exploration phase and the local search capability during the exploitation phase. This improves the algorithm’s adaptability, convergence speed, and accuracy. Building upon this, an optimization method is proposed for the LED transmitter light source layout. By simultaneously considering critical variables such as LED light source placement and transmission power, an optimization model is established to maximize coverage and ensure optimal uniformity of received optical power. The proposed algorithm is applied to refine the model further, which improves the uniformity of received optical power and the communication system’s coverage efficiency. This addresses the issue of uneven received power distribution in three-dimensional communication spaces, thereby enhancing signal transmission performance and ensuring fairness for users.MethodsWe propose a method based on the IHHO to optimize the light source layout of the indoor VLC system and improve the uniformity of optical power distribution in the receiving plane. First, an indoor VLC channel model is developed, and the transmission characteristics between the LED light source and the receiver photodiode (PD) are analyzed using the Lambert radiation model. We adjust the light source layout optimization scheme by assessing the effect of different light source configurations on the distribution of received optical power. The IHHO algorithm combines multiple strategies for optimization, with key enhancements including the introduction of an optimized point set strategy to improve the initial population and prevent premature convergence to local optima during initialization; the use of an adaptive t-distribution mutation strategy along with a nonlinear escape energy factor to balance global exploration and local exploitation, thereby improving convergence speed; the incorporation of a dynamic selection probability strategy to mitigate the risk of early convergence to suboptimal solutions. With these improvements, the IHHO algorithm effectively optimizes the light source layout, selects a more suitable LED light source configuration and transmission power scheme, reduces optical power dead zones in the receiving plane of the three-dimensional communication space, ensures a more uniform power distribution, and extends the effective communication coverage range.Results and DiscussionsIn this study, the IHHO algorithm is employed to optimize the light source layout of the indoor VLC system, which leads to a significant improvement in the light power distribution in the receiving plane. In the initial (non-optimized) light source layout (Fig. 6), the receiving plane exhibits noticeable uneven power distribution, with lower received power in the edge regions. This unevenness is primarily due to the distance of the light sources and the effects of light reflection after it passes through the walls [Fig. 6(a)]. Additionally, at a receiving plane height of h=1.5 m, the variation in received power between the center and edge regions is substantial, which results in a marked power difference [Fig. 6(b)]. These uneven distributions negatively affect communication quality and lead to a lack of fairness in user communication. In the optimized LED layout (Fig. 8), the light source placement is optimized across three height planes (upper, middle, and lower) to improve the light power distribution. This optimization significantly enhances the uniformity of received light power throughout the communication space (Fig. 7). At h=0 m, the optimized layout notably improves the power uniformity of the receiving plane, with the received power quality factor rising to 1.82 [Fig. 7(a)]. At h=1.5 m, although there is still a power gap of approximately 5 dBm between the maximum and minimum received power, the overall power distribution is much more uniform compared to the unoptimized layout, and the received power quality factor increases to 1.51 [Fig. 7(b)]. Furthermore, Table 4 compares the system performance indicators before and after optimization, showing that the optimized layout not only enhances the power uniformity of the receiving plane but also increases the LED coverage. The LED coverage before optimization is 55%, while after optimization, it increases to 82% (Table 4).ConclusionsIn this study, we investigate the issue of uneven distribution of received optical power in indoor VLC systems and propose an optimization method for the light source layout based on the IHHO algorithm. The indoor three-dimensional communication space is divided into three planes—upper, middle, and lower, and the overall performance of received optical power at various heights is used as the objective function. An optimization model is established to maximize coverage and achieve uniformity of received optical power. By considering key variables such as the LED light source layout and transmission power, the model is finely optimized using the IHHO algorithm to determine the optimal light source layout. Compared to other traditional intelligent algorithms, the IHHO algorithm demonstrates clear advantages in optimization accuracy, convergence speed, and the ability to avoid local optima. Simulation results demonstrate that optimizing the light source layout with the IHHO algorithm effectively reduces optical power dead zones in the receiving area, improves the uniformity of received optical power distribution within the VLC system, enhances the effective coverage of the communication signal range, and improves communication fairness for indoor users.

ObjectiveWith the continuous enrichment of mobile services and the explosive growth of internet traffic, the architecture of cloud radio access networks (C-RAN) has garnered significant attention. Mobile fronthaul is a crucial part of the C-RAN architecture. As we step into the sixth generation of mobile communications technology (6G), the fronthaul system is required to support data rates equivalent to the common public radio interface (CPRI) exceeding terabits, along with high-order modulation formats such as 1024 quadrature amplitude modulation (QAM) and above, to meet the demands for large-capacity and high-speed transmission in the 6G era. However, traditional digital radio-over-fiber and analog radio-over-fiber schemes both have shortcomings, and it has been found that they struggle to meet these requirements. In recent years, the widely studied digital-analog radio-over-fiber (DA-RoF) technology has demonstrated a signal to noise ratio (SNR) gain of over 12 dB at the cost of sacrificing half of the spectral efficiency. Meanwhile, due to the cost sensitivity of operators in mobile fronthaul scenarios, intensity-modulation and direct-detection (IM-DD) technology shows great potential because of its cost advantage. Against this background, relying on the DA-RoF system based on IM-DD to balance cost, explore transmission capacity, and optimize fidelity has become a key issue in the mobile fronthaul field. We propose a high-capacity DA-RoF scheme based on space-division multiplexing (SDM). Focusing on the IM-DD system, we adopt an improved modulation factor optimization strategy to achieve an SNR gain exceeding 15 dB, which facilitates the stable transmission of 1024-QAM signals and promotes the development of 6G fronthaul technology.MethodsThe terabit DA-RoF communication system proposed in this work is primarily based on DA-RoF modulation technology and an improved modulation factor optimization strategy, combined with the space-division multiplexing technology of multi-core fiber. At the transmitting end, the 1024-QAM modulated signal generated by the digital signal processing (DSP) module is converted into an orthogonal frequency division multiplexing (OFDM) signal through the inverse fast Fourier transform to simulate the wireless waveform. Then, DA-RoF modulation is applied to divide the wireless waveform into a digital part with probabilistic constellation shaping and an analog part with quantization residual error. Joint optimization is performed on the amplitudes of the digital part, the analog part, and the original wireless waveform to achieve the maximum recovered SNR. At the receiving end, a third-order Volterra equalizer is used to compensate for the link impairments, and finally, the corresponding DA-RoF demodulation and OFDM demodulation are carried out to recover the original signal. The experimental setup uses 10 km of single-mode fiber, 10 km of 7-core fiber, and 1 km of 7-core fiber to explore the effectiveness of the proposed method in different scenarios. Through the experimental implementation, terabit DA-RoF transmission of the 1024-QAM signal has been successfully realized.Results and DiscussionsThe optimization outcome of the modulation factor under a back-to-back configuration is presented in Fig. 5. When considering the 1024-QAM signal with a 2.5% error vector magnitude (EVM) threshold, the received optical power (ROP) sensitivity reaches -11 dBm. Upon transmission through a 10-km single-mode fiber, the recovered SNR of the 1024-QAM signal exceeds 32 dB, which features a symbol rate of 40 GBaud and a CPRI equivalent data rate of 313 Gbit/s, thus fulfilling the 1024-QAM transmission criteria, as illustrated in Fig. 6(b). Fig. 6(c) depicts the variation in SNR corresponding to different symbol rates. Evidently, as the symbol rate rises, the in-band noise increases, causing the recovered SNR to correspondingly diminish. After the 30 GBaud 1024-QAM signal is transmitted over a 10-km 7-core fiber, the average recovered SNR of the signals across all cores is measured at 33.54 dB. This indicates that the proposed scheme attains an SNR gain exceeding 15 dB and accomplishes a CPRI equivalent rate-distance product of 16.4 Tbit/(s·km-1) (Fig. 8). Moreover, the 60 GBaud 1024-QAM signal has been successfully transmitted over a 1 km 7-core fiber, achieving a CPRI equivalent data rate of 3.28 Tbit/s (Fig. 10).ConclusionsWe propose and experimentally demonstrate a large-capacity, high-fidelity mobile fronthaul transmission scheme utilizing a C-band IM-DD configuration. By employing DA-RoF modulation technology and jointly optimizing the modulation factors, an SNR gain of over 15 dB is achieved, which enables the successful transmission of 1024-QAM signals. The system capacity is significantly enhanced through the use of SDM technology with 7-core fibers. Specifically, the experiment shows the transmission of 1024-QAM signals with a symbol rate of 30 GBaud over 10 km of 7-core fiber, achieving a record 16.4 Tbit/(s·km-1) CPRI equivalent rate-distance product. Additionally, the transmission of 1024-QAM signals with a symbol rate of 60 GBaud over 1 km of 7-core fiber is also demonstrated, achieving a record 3.28 Tbit/s CPRI equivalent data rate. These experimental results indicate that the proposed IM-DD DA-RoF scheme offers a promising large-capacity, high-fidelity, and cost-effective solution for future beyond 5G/6G mobile fronthaul scenarios.

ObjectiveMedical image fusion is a crucial technology for assisting doctors in making accurate diagnoses. However, existing medical image fusion techniques suffer from issues such as blurred lesion boundaries, loss of detailed information, and high texture similarity between normal tissues and lesion regions. To address these problems, we propose a dual-branch multimodal medical image fusion method based on the collaboration of local and global information. This method not only reduces the loss of detailed information but also effectively improves the clarity and accuracy of the fused images, which ensures more precise identification of lesion regions, thereby providing more reliable and accurate support for medical image diagnosis.MethodsWe propose a dual-branch multimodal medical image fusion model based on the collaboration of local and global information. Firstly, the model utilizes a multi-scale depth-separable convolutional network to extract feature information with different receptive fields from the input images. Subsequently, the extracted features are fed into a dual-branch structure, which mainly consists of two modules: the deep local feature enhancement module and the global information extraction module. The local feature enhancement module focuses on enhancing image details, especially those in the lesion areas, to improve the clarity of these regions. The global information extraction module, on the other hand, emphasizes capturing the global structural context of the input images, which ensures the overall consistency of the images and the integrity of their organizational structures during the fusion process. To further optimize the feature fusion process, we introduce two advanced fusion units: the Multidimensional Joint Local Fusion Unit (MLFU) and the Cross-Global Fusion Unit (CGFU). The MLFU efficiently fuses the local features extracted by the two branches, which ensures that important fine-grained information is retained and enhanced during the fusion process. The CGFU promotes the fusion of global features, which facilitates information sharing and complementarity between different modalities. Finally, a convolutional layer is used to adjust and reconstruct the fused features, and a fused image with richer details and higher clarity is output.Results and DiscussionsThe effectiveness of the proposed model in medical image fusion tasks has been validated through extensive comparison and ablation experiments. The experimental results demonstrate that our model significantly outperforms nine other mainstream methods in several key objective evaluation metrics on the Harvard public medical image dataset. Specifically, our model achieves improvements of 3.14%, 0.95%, 13.66%, 16.81%, and 1.12% in EN, SD, SF, AG, and CC, respectively (Table 4). The model enhances local feature extraction through the deep local feature enhancement module, which accurately captures subtle differences in the input images and significantly improves the clarity of lesion boundaries. Furthermore, to further optimize the fusion results, the model employs different fusion strategies for different feature types, which effectively integrates local features with global information and achieves more efficient information complementarity and collaboration between modalities. As a result, the fused images exhibit richer texture details and clearer structural features, thereby significantly enhancing image readability and diagnostic value (Figs. 6, 7, and 8). Ablation experiments further validate the effectiveness of each module in the model. The results show that removing the deep local feature enhancement module leads to a noticeable decline in lesion boundary clarity and texture detail, particularly in high-contrast lesion areas, where the fusion quality deteriorates. Furthermore, removing the global information fusion module results in a significant loss of global consistency and information complementarity between different modalities, which leads to a fusion result with a lack of necessary global coherence (Figs. 11, 12, and 13).ConclusionsThe algorithm proposed in this paper effectively integrates local and global information, achieving excellent detail preservation and structural representation in medical image fusion tasks. This method not only accurately fuses normal tissue structures to ensure global consistency but also highlights the details of abnormal lesion areas, which improves the visibility and recognizability of lesions. By combining deep local feature extraction with global context information, the algorithm ensures the preservation of local details while effectively enhancing the texture features and boundary clarity of lesions. The fused images exhibit richer texture details and clearer structural features. Experimental results, verified through numerous comparative experiments, demonstrate that this algorithm is effective in improving the diagnostic accuracy of medical images. Compared with other mainstream methods, the proposed algorithm performs outstandingly in multiple key objective evaluation metrics, especially in terms of detail preservation, structural clarity, and lesion prominence.

ObjectiveVisibility refers to the maximum horizontal distance at which an individual with normal vision can identify and distinguish an object against the sky background under prevailing weather conditions without external assistance. It is a critical parameter reflecting atmospheric transparency and serves as a key indicator in the transportation sector. Various factors influence visibility, with fog and haze having the most significant influence. In foggy and hazy weather, the fine particles suspended in the air hinder light transmission and absorb light reflected from object surfaces, significantly reducing visibility. On highways, reduced visibility due to fog and haze is a major cause of traffic accidents, posing severe risks to public safety and economic stability. The accurate and efficient acquisition of visibility data is essential for effective traffic management. Therefore, developing a high-precision visibility detection method that provides reliable data support for transportation authorities has become a key research focus in ground-based meteorology. To address this, we propose an advanced visibility detection method based on a depth estimation network and an atmospheric scattering model.MethodsIn this paper, we propose a novel visibility detection method leveraging an encoder-decoder structured depth estimation network. Using highway surveillance videos, the method determines visibility by integrating principles of the atmospheric scattering model to extract atmospheric transmission coefficients and scene depth information. First, the K-means clustering algorithm is applied to segment video frames into foggy and visible road regions, enabling the identification of the maximum scene depth area of the road. Next, the dark channel prior algorithm is enhanced using a regional entropy method to refine the selection of atmospheric light intensity, ensuring a more precise estimation of atmospheric transmission coefficients. Subsequently, an encoder-decoder-based depth estimation network extracts depth information from the images. Finally, visibility is calculated based on the atmospheric scattering physical model.Results and DiscussionsThe depth information obtained using the DenseNet-169 network is compared with that derived from five other depth estimation networks: BTS, LapDepth, MonoDepth, CADepth, and Lite-Mono (Table 1). The results show that DenseNet-169 outperforms these models in terms of root mean square error (RMSE), absolute relative error (AR), and accuracy. This demonstrates that the depth information generated by DenseNet-169 is highly precise and effectively supports visibility estimation. To further enhance network performance, four attention modules—coordinate attention (CA), convolutional block attention module (CBAM), separable self-attention (SSA), and efficient channel attention (ECA)—are integrated into DenseNet-169 and compared against the original network without attention modules (Table 2). Among these, the integration of the CBAM module achieves the best performance, yielding the lowest RMSE and AR values while attaining the highest accuracy at a threshold of 1.252. As a result, we adopt the Dense+CBAM encoder-decoder network for depth estimation. Experimental data are collected from surveillance video recorded on November 24, 2024, between 14:25 and 15:15. A sample video frame is shown in Fig. 11, while Table 4 and Fig. 12 present visibility detection results and error variations across different timestamps. The proposed method’s visibility estimation results are compared with those of a visibility monitoring device and four alternative networks. The findings indicate that the proposed method closely aligns with the monitoring device, with only minor deviation observed at 15:00. Overall, the proposed method demonstrates high accuracy in both short-range and long-range visibility estimation, achieving an accuracy rate of 89.83% and an average error of approximately 73 m. Furthermore, the method exhibits strong generalization capabilities, highlighting its reliability and precision in visibility measurement.ConclusionsWe propose a novel visibility detection method based on an encoder-decoder depth estimation network and an atmospheric scattering model. This method employs the atmospheric scattering physical model as its theoretical foundation to calculate visibility by deriving atmospheric transmittance coefficients and scene depth information from images. To enhance accuracy, a regional entropy method is introduced to refine atmospheric light intensity estimation, leveraging stable gray-level variations in foggy regions. Image segmentation techniques are applied to locate the boundary between visible road surfaces and foggy sky regions, focusing on pixel information within the target area to minimize the interference from irrelevant features. In the depth estimation network, the encoder-decoder structure of DenseNet-169 is optimized by incorporating the Dense Block-B module, which suppresses redundant features in the input and enhances feature extraction efficiency. In addition, to mitigate interference from the original image background, the CBAM is embedded in the encoder’s convolutional module, improving road surface feature extraction while reducing the influence of irrelevant features. Experimental results demonstrate that, compared to existing methods, the proposed approach achieves higher accuracy in both long-range and short-range visibility estimation, with an accuracy rate of 89.83%, reduced overall error, and enhanced generalization capability. This method enables efficient and precise visibility measurement, providing reliable data support for traffic management.

ObjectiveAugmented reality (AR) helmet-mounted displays (HMDs) hold significant promise for applications in education, healthcare, industry, and military fields, emerging as a crucial platform for next-generation human-computer interaction. The design and optimization of optical systems are critical for enhancing AR-HMD performance. Traditional optical design methods primarily involve identifying initial structures from patent literature and subsequent optimization, which not only demand substantial human resources but also rely heavily on designers’ personal experience. This makes it challenging to meet the rapid development needs of AR technology. Given the successful application of deep learning technology across various fields, integrating it into optical system design has become increasingly feasible. In this paper, we propose a design method combining optical simulation software with deep learning, aiming to automate the construction of AR optical systems, thus improving design efficiency and reducing reliance on designer experience.MethodsIn this paper, we utilize xy polynomial freeform surfaces based on quadric surfaces to design an optical structure that combines freeform prisms and compensation prisms. This structure integrates the display light path (one total internal reflection, one reflection, and two transmissions) with the real-world light path. For dataset acquisition, initial structures are created via Python-Zemax software interaction, with half-XFOV (Fhalf?X), half-YFOV (Fhalf?Y), and F-number (F) as key input parameters, with their respective ranges determined. Several base systems with good imaging performance and reasonable optical structure are obtained by optimizing the prism structures’ edges and total internal reflection. Based on these base systems, system samples covering the entire input parameter range are generated through gradual parameter variation (step size 0.2). The system performance is evaluated using merit function results and the root-mean-square (RMS) radius of each field. Finally, samples with optical structure errors are filtered out, leaving only those that meet imaging quality requirements (Fig. 3). The deep neural network training employs a multi-layer perceptron (MLP) structure (Fig. 4). The input layer consists of three features (Fhalf?X, Fhalf?Y, F), while the output layer includes the optical system’s structural parameters (radius of curvature, thickness, conic value, decentration, tilt degree, and freeform surface coefficients). The network comprises four hidden layers, uses a tanh activation function, and employs the MSELoss function and Adam optimizer for training. System verification is carried out by using the trained model to predict optical system structures, importing the predicted results into Zemax for optimization, and validating the design results through RMS radius evaluation of system performance.Results and DiscussionsAfter 80000 training iterations, the loss function decreases to 0.995 and stabilizes (Fig. 5). System performance testing with 100 randomly generated parameter combinations (Fhalf?X, Fhalf?Y, F) shows that 91 systems have an average RMS spot radius within 0?500 μm, with only 9 systems exceeding 500 μm in RMS radius. Among them, 3 systems have an average RMS spot radius approaching 2000 μm due to excessive position parameters (Fig. 6). Although these 91 systems may not directly qualify, they can be used as is or further optimized to improve the optical system structure. For example, with Fhalf?X=20, Fhalf?Y=15, and F=4, model prediction and post-optimization results are obtained (Fig. 7). After Zemax optimization, the system’s average RMS radius decreases from 30.34 to 22.67 μm, which is less than 1 arc minute after unit conversion, meeting human eye’s imaging standards (Figs. 8 and 9). Regarding modulation transfer function (MTF) performance, most fields maintain sagittal and meridional MTF values above 0.2 at 30 lp/mm spatial frequency (Fig. 10). The maximum grid distortion in the AR optical system’s perspective light path is controlled within 3.4%, meeting the human visual system’s tolerance for distortion (Fig. 12). The final AR optical system’s three-dimensional diagram is shown after adding the compensating prism (Fig. 11), and the overall performance of the final design meets AR display optical requirements.ConclusionsThe design method proposed in this paper combines optical simulation software with deep learning technology, enabling rapid generation of freeform prism optical structures within required spaces, followed by further optimization to enhance system image quality. This method significantly improves the design efficiency of freeform systems and reduces dependence on designer experience. The feasibility of the method is verified using 100 randomly generated parameter combinations (Fhalf?X, Fhalf?Y, F), as well as a specific case with Fhalf?X=20, Fhalf?Y=15, F=4, where the image quality meets human eye requirements. The method provides new insights into the rapid design and development of AR glasses optical systems. In addition, it can be effectively applied to other optical systems featuring freeform surfaces, offering vital technical support for AR-HMD applications in education, healthcare, industry, and military fields. The success of this method opens new possibilities for automated optical design in related fields such as virtual reality systems and advanced imaging devices. Its adaptability and scalability suggest that it can be extended to address other complex optical design challenges in various industrial and scientific fields. Future work may focus on refining the deep learning model, incorporating additional optical parameters, and exploring more complex optical system configurations to further expand the method’s applicability and effectiveness.

ObjectiveThe division of focal plane polarization camera is a widely used, integrated polarization imaging system. Crosstalk between the pixels of the micro-polarizer array is a unique interference factor in such systems, which introduces errors in the measurement of the target’s polarization information. The value and superposition ratio of the crosstalk light intensity vary with the polarization state of the incident light in the scene. Previous polarization crosstalk models generally regard crosstalk as random temporal noise, which assumes that it is difficult to eliminate the crosstalk effect through pre-calibration methods. A mathematical model describing the relationship between crosstalk and the system’s polarization parameters has not been established or verified. The main solution to restraining the crosstalk effect is typically through the optimization of the polarization imaging system’s structure.MethodsWe consider the factors influencing crosstalk in application and establish a whole-process crosstalk model that includes the parameters of the hardware, crosstalk, and polarization analyzer. The result of crosstalk is regarded as the linear superposition of constant weights of multiple analyzed intensities at different polarization analyzed directions. The intensity response containing crosstalk can still be accurately characterized using the cosine form, which is equivalent to a constant deviation in parameters such as the extinction ratio and analyzed directions, and can be distinguished from the random error caused by temporal noise. Through our experiment correlating the polarization state and crosstalk, we demonstrate the calibrability of the crosstalk-influenced analyzer parameters, the non-correlation of the incident light’s polarization state, and the correctability of the crosstalk deviation. The experiment uses a Sony polarization sensor to sample polarized light emitted from the integrating sphere, which rotates through a full cycle via a motor-driven mechanism, to fit the analyzer parameters and inversely infer the crosstalk coefficient with high precision. The light intensity and degree of polarization correlation experiments are respectively judged by the linear characteristics of the denoised unpolarized-light and pure-polarized-light response, while the angle of polarization correlation experiment is judged by the cosine response characteristics of the full angle of polarization periods.Results and DiscussionsThe simulation shows that the transverse electric (TE) wave transmittance can be ignored, and the diffraction crosstalk distribution only needs to consider the transverse magnetic (TM) wave component in the incident light. Therefore, the crosstalk coefficient can be regarded as constant (Fig. 6). Increasing the exposure time leads to an increase in dark noise and a decrease in the extinction ratio (Figs. 10?12), which highlights the necessity of pre-calibration and denoising to eliminate interference in crosstalk measurement. The simulation shows that crosstalk is independent of the target’s angle of polarization and degree of polarization (Fig. 6). This can be verified by varying the target polarization state and measuring the change in the system extinction ratio coefficient. The average change in the crosstalk coefficient is <0.1% within the measuring range (Figs. 13?15), which proves that no correlation exists.ConclusionsCrosstalk will lead to degradation of the extinction ratio, the deviation in the analyzer polarization angle and non-uniformity of the light intensity response coefficient. However, as long as the crosstalk coefficients do not change during use, the system analysis model remains a simple cosine form with constant coefficients, and this crosstalk effect can be calibrated. The crosstalk coefficient only needs to consider the TM wave diffraction distribution, and the polarization state of the target/scene is not correlated with the crosstalk. The crosstalk coefficient is a function of the effective aperture (working F-number) and pixel position.

ObjectiveTraditional spectral imaging systems, which rely on spatial or temporal scanning, face significant limitations in practical applications, including narrow spectral coverage, low photon throughput due to sequential acquisition, and the unresolved tradeoff between spatial resolution and spectral fidelity. To address these challenges, we propose a metasurface-enabled snapshot compressive spectral imaging system, which fundamentally redefines the system architecture through two key innovations: 1) a spectrally-encoded metasurface array that performs parallel light field manipulation across 28 spectral channels within the visible range (450?650 nm); 2) a physics-informed window channel attention-deep unfolding network (WCA-DUN) that synergizes computational optics with deep learning for real-time hyperspectral cube reconstruction. Compared to existing snapshot spectral imaging systems based on coded apertures or dispersive optical elements, our approach leverages the unique capability of metasurfaces to engineer spectral-spatial responses at subwavelength scales, enabling a compact, lightweight, and integrated spectral imaging system.MethodsIn this paper, we design a snapshot compressive spectral imaging system based on a metasurface array. By leveraging this metasurface array for efficient spectral encoding and integrating the proposed WCA-DUN algorithm, real-time hyperspectral image reconstruction with high spectral resolution can be achieved. For the metasurface design, a randomly generated binary pattern is used to construct a meta-atom library. This approach enriches the meta-atom library while ensuring the minimum feature size, making fabrication easier. Meta-atoms with low correlation are selected using the Pearson correlation coefficient as criteria. For the reconstruction algorithm, we propose the WCA-DUN algorithm, which integrates window segmentation into channel attention, achieving a larger receptive field to capture more global information. In addition, it combines amplitude and phase feature learning of the optical field to suppress artifacts and minimize cross-talk between spectral channels.Results and DiscussionsOur system demonstrates excellent robustness to noise. The reconstruction results of our proposed system decrease by only 0.61 dB in PSNR under Gaussian noise, while the results of other existing systems (GAP-TV, ADMM-net, and PnP) decrease by 4.58, 0.56, and 9.1 dB, respectively. Moreover, our system achieves a significantly faster reconstruction speed. Compared to systems using traditional iterative algorithms, our proposed system improves the reconstruction speed by three orders of magnitude. In addition, our algorithm ensures both high reconstruction speed and spectral reconstruction accuracy, outperforming depth-unfolding-network-based algorithms. Specifically, a reconstruction rate of 30 Hz can be achieved with a spectral resolution of 1 nm.ConclusionsIn summary, we propose a novel snapshot-based compressive spectral imaging system based on a metasurface array, enabling real-time hyperspectral image reconstruction with high spectral resolution. By leveraging the unique capabilities of metasurfaces to manipulate light at subwavelength scales, the designed metasurface array facilitates the development of a compact, lightweight, and high-performance spectral camera with superior spatiotemporal resolution. To achieve real-time and high-fidelity hyperspectral reconstruction, we introduce WCA-DUN, a deep unfolding network framework that synergistically integrates computational optics with deep learning. This advanced approach not only enhances reconstruction accuracy but also significantly improves processing efficiency, making real-time applications feasible. The proposed system provides a groundbreaking solution to the long-standing tradeoff between spatial, temporal, and spectral resolutions in spectral imaging. By overcoming the fundamental limitations of traditional systems, it enables broader spectral coverage, higher photon throughput, and superior resolution without the need for bulky optical components or mechanical scanning. While our current implementation focuses on the visible range, the system architecture and design methodology can be easily extended to other spectral regions, including the near-infrared and terahertz ranges. This adaptability makes it a promising candidate for a wide range of applications, such as aerospace exploration, remote sensing, biomedical imaging, and machine vision, where compact and high-resolution spectral imaging is crucial. We believe that this innovation will pave the way for the next generation of spectral imaging technologies, driving advancements across multiple scientific and industrial fields.

ObjectiveIn recent years, the demand for efficient and accurate object localization technology has been increasing with the rapid development of computer vision, radar detection, and biomedicine. Traditional object localization technology mainly depends on image acquisition, typically adopting the ‘image first, localization later’ method, combined with object localization algorithms for positioning. However, image-dependent object localization is often limited by the resolution of image reconstruction. Due to the complexity of the object, scene, and environmental uncertainties, existing object localization technology faces many challenges, such as severe occlusion, blurring, changes in illumination, and distance variations, which may lead to object loss. Additionally, geometric deformation and attitude changes may result in positioning failures, thus reducing localization accuracy. In contrast, single-pixel detection technology shows unique advantages in object localization, especially in low-light detection environments, with strong robustness and anti-interference capabilities. Furthermore, most existing research on single-pixel, image-free detection in complex scenes focuses on known object information, which improves object localization performance in complex environments through algorithmic enhancements. However, there are relatively few studies on image-free target localization for unknown object information. Therefore, we aim to propose a single-pixel, image-free object localization method that works with unknown scene information. This method can effectively protect image privacy, save storage space, and provide a new approach for object localization.MethodsWe propose a single-pixel, image-free object localization strategy based on global search (SPIF-GSOL) for single-pixel imaging. We use an improved genetic algorithm to perform a global search when the object information is unknown. The template projection position is updated through continuous feedback iterations, and the optimized reference point coordinates are obtained. The template and the pure white pattern are projected onto the coordinate point, and the R-value is calculated at this stage. This process is repeated to obtain a continuously optimized population, and the projection position is updated iteratively. The method checks whether the number of iterations meets the termination condition. If the termination condition is satisfied, the highest R-value and the corresponding coordinate point position after convergence are output. If the termination condition is not satisfied, the global search is performed based on the fitness value, and the matching position is continuously updated until the iteration count is met, which ultimately achieves image-free object localization.Results and Discussions The results show that1) The method proposed in this paper can still achieve accurate positioning in the case of complex scene interference. As shown in Figs. 9?11 and Fig. 13, the method can accurately locate the object when the template position changes, its size changes, its attitude changes, or there is occlusion. 2) The proposed method (SPIF-GSOL) is compared with traditional ghost imaging (GI) localization, differential ghost imaging (DGI) localization, normalized ghost imaging (NGI) localization, and compressed sensing ghost imaging (CSGI) localization. Fig. 13(a) presents the time consumption curves for different projection times, while Fig. 13(b) shows the localization accuracy curves for the same projection times. The results reveal that the proposed method consumes the least time and achieves the highest accuracy compared to the “image first-localization later” method. 3) Compared to 74529 iterations without using the genetic algorithm, the number of iterations for our method is reduced to 5000, which represents a 93.29% reduction. This fully demonstrates that the single-pixel image-free object localization using this method effectively reduces the number of projections and avoids the problem of increasing projections with the growth of image size. 4) Compared with different fitness functions, the proposed method achieves the lowest mean absolute error (MAE).ConclusionsIn our study, we do not need to load a fixed projection sequence. We calculate the fitness value based on the received single-pixel value and use an improved genetic algorithm to continuously update the template pattern projection position during the feedback iteration process. Simulated and experimental results show that, when the object scene information is unknown, the proposed method does not require imaging to accurately localize the object in complex scenes, while also effectively protecting image privacy. The genetic algorithm also reduces the number of projections and improves the efficiency of object localization. Furthermore, the proposed method exhibits certain anti-interference characteristics for object scaling, rotation, and offset in complex scenes. Compared to other algorithms, our method achieves higher localization accuracy.