SignificanceCarbon dioxide (CO2) is one of the most significant anthropogenic greenhouse gases, and its concentration is closely linked to global climate change. Since the industrial revolution, the extensive combustion of fossil fuels and changes in land use have led to a continuous rise in atmospheric CO2 levels, triggering a series of environmental issues such as global warming, increased frequency of extreme weather events, glacier retreat, and sea-level rise. CO2 plays a critical role in radiative forcing within the climate system and profoundly influences the balance of the carbon cycle, ecosystem stability, and the sustainable development of human society. Therefore, comprehensive research on the observation, simulation, and underlying mechanisms of CO2 dynamics is a central component of global climate change studies. With the global push for carbon peaking and carbon neutrality, there is an urgent demand from both the scientific community and policymakers for high-precision, high-resolution CO2 observations to evaluate carbon source-sink patterns, verify emission reduction outcomes, and improve climate models. Therefore, achieving high-precision CO2 observations has become particularly important.ProgressThe integral path differential absorption (IPDA) lidar technology has unique advantages in global CO2 monitoring, making it an important tool in climate change research. Unlike passive remote sensing technologies that rely on sunlight, IPDA lidar uses its own laser pulses as the light source, enabling high-precision observations in all weather conditions and at any time, making it particularly suitable for nighttime and high-latitude gas monitoring. This allows IPDA technology to overcome limitations of passive remote sensing in these conditions. Furthermore, IPDA is less affected by cloud cover and aerosols, showing strong resistance to interference, and can still provide reliable measurement data under complex meteorological conditions.The principle of IPDA is based on measuring the absorption characteristics of gas molecules to laser signals, allowing precise inversion of gas concentrations by analyzing the attenuation of the laser signal as it passes through the atmosphere. This technology offers high spatial resolution and large coverage, making it particularly suitable for satellite platforms, enabling global CO2 monitoring. IPDA also has a high signal-to-noise ratio, allowing it to maintain high precision in long-range detection, significantly improving measurement accuracy.China has made significant progress in this field, successfully developing lidar systems based on IPDA technology. In 2022, China successfully launched the atmospheric environment monitoring satellite (DQ-1), marking an important step for China in global CO2 monitoring technology.Conclusions and ProspectsThe DQ-1 satellite offers significant promise for enhancing global CO2 monitoring capabilities. Trends indicate the integration of artificial intelligence with satellite data to improve carbon flux detection. Ongoing advancements in active remote sensing technology, along with improvements in data processing and fusion techniques, will enhance the accuracy and spatial resolution of CO2 observations. These developments will drive progress in global carbon monitoring, enabling more precise tracking of emissions and supporting efforts to mitigate climate change.

SignificanceAtmospheric temperature and humidity (water vapor) are fundamental parameters that characterize the physical state of the atmosphere. Temperature governs the thermal processes of the atmosphere, and water vapor plays a crucial role in the Earth’s water and energy cycles. The spatiotemporal variations of temperature and humidity have a significant impact on atmospheric dynamics, thermodynamics, and climate, and are closely related to a series of atmospheric physicochemical processes, including radiative transfer, energy cycles, water cycles, and the formation of clouds and precipitation. Organizations such as the World Meteorological Organization (WMO) and the U.S. National Research Council (NRC) have emphasized that high-precision, high-spatiotemporal-resolution temperature-humidity profiles are foundational to atmospheric science research. Such observations are essential for advancing our understanding of boundary-layer physical processes, improving numerical weather prediction (NWP) models, and studying climate change. Consequently, enhancing the observation of temperature and humidity profiles has become a widely recognized priority among the research community.Existing methods for measuring temperature and humidity, including radiosondes, meteorological towers, and microwave radiometers, all have limitations in either temporal or spatial resolution. As an active remote sensing technique, atmospheric lidar shows great potential for detecting atmospheric temperature and humidity profiles with high spatiotemporal resolution. Currently, the primary techniques are Raman lidar and differential absorption lidar (DIAL). However, since the Raman scattering cross-section of gas molecules is much smaller than that of Rayleigh and Mie scattering, Raman lidars generally suffer from weak return signals and a poor signal-to-noise ratio (SNR) during daytime operation. In contrast, the DIAL technique directly detects atmospheric Mie and Rayleigh scattering signals, yielding an SNR that is typically 3‒4 orders of magnitude higher than that of Raman lidar. Furthermore, DIAL does not require calibration with other instruments, which substantially reduces operational complexity. Owing to its superior all-day detection capability and calibration-free nature, DIAL is particularly well suited for ground-based observation networks aimed at producing high-density, high-resolution, and high-precision temperature and humidity profiles.ProgressThis paper first describes the absorption-line characteristics of oxygen and water vapor, then details the fundamental principles of temperature-humidity DIAL, and finally provides a systematic review of its development. In the field of O₂-DIAL, the Max Planck Institute for Meteorology reported the first atmospheric temperature profiles retrieved using O₂-DIAL in 1993. However, they noted that the Doppler broadening effect of atmospheric molecules could introduce temperature retrieval errors of up to 10 K. How to accurately correct this effect became a long-standing challenge limiting the measurement accuracy of O₂-DIAL. It was not until 2018 that teams from Montana State University (MSU) and the National Center for Atmospheric Research (NCAR) proposed a solution that integrates O₂-micro-pulse DIAL (MPD) with high-spectral-resolution lidar (HSRL). This approach used HSRL to precisely measure the molecule-aerosol backscatter ratio, thereby accurately isolating and correcting the influence of Doppler broadening in the DIAL retrieval equation. This breakthrough successfully improved the temperature measurement accuracy of O₂-DIAL to within 3 K. Furthermore, to address the large near-ground blind zone of pulsed lidars, groups including Dalian University of Technology developed a continuous-wave (CW) DIAL technique based on the Scheimpflug principle. With a tailored optical geometry, this approach achieved a small blind zone for near-ground temperature profiling, providing a crucial supplementary tool for fine-scale boundary-layer research.In the field of H2O-DIAL, the technique is relatively mature and is gradually transitioning toward operational applications. Early systems using CO2 lasers (~10 μm) or dye lasers (~720 nm) demonstrated feasibility but were constrained by laser performance and maintenance demands. Since the 1990s, all-solid-state lasers, represented by alexandrite lasers, Ti∶sapphire lasers, and optical parametric oscillators (OPOs), have become mainstream, significantly enhancing system performance. Based on these advances, institutions such as German Aerospace Center (DLR), National Aeronautics and Space Administration (NASA) in the U.S., and the European Space Agency (ESA) have developed advanced airborne and even space-based concept systems (e.g., the WALES project). With the rapid development of semiconductor technology, MSU and NCAR jointly developed the H2O-MPD. This system employs a light source architecture featuring a distributed Bragg reflector (DBR) seed laser and a tapered semiconductor optical amplifier (TSOA), enabling a compact, low-cost system capable of long-term, unattended operation. Its fifth-generation system is already capable of all-day detection of water vapor profiles up to 4 km, laying a solid foundation for the construction of a ground-based, high-density water vapor observation network.Conclusions and ProspectsIn summary, temperature-humidity DIAL has made considerable progress. In humidity profiling, semiconductor-based MPD has demonstrated the potential for networked deployment. However, temperature DIAL still faces challenges, as its current accuracy (~3 K) falls short of the threshold required for numerical weather prediction (<1 K). In addition, few lidar systems have been reported that achieve simultaneous, high-precision measurements of both temperature and humidity. Future research must therefore focus on developing DIAL techniques capable of synchronous, high-precision, all-day measurements of temperature and humidity profiles, thereby better serving the pressing needs of advanced atmospheric science and weather forecasting.

SignificanceAtmospheric aerosols, clouds, and precipitation play significant roles in the Earth’s environment and climate change. Changes in the vertical structure of the atmosphere, in particular, are crucial for understanding the triggering mechanisms and formation processes of extreme weather events. However, atmospheric data from a single region often lack representativeness, making it difficult to capture large-scale atmospheric changes. Therefore, the establishment of a large-scale ground-based atmospheric sounding network is essential for enabling real-time and continuous atmospheric monitoring. The progress of atmospheric science depends on high-quality observational data.ProgressAt present, international organizations and research institutes are actively developing ground-based atmospheric sounding networks. These networks are evolving from single, independent soundings to multi-instrument, network-based soundings, significantly expanding their observational coverage. However, some of these networks still face challenges, such as the complexity of equipment and the high costs associated with operation and maintenance. In this paper, we provide an overview of the organizational structure and development status of major ground-based atmospheric sounding networks worldwide. It also highlights the ANSO integrated atmospheric observation network (ANSO-AON) developed in China, detailing its characteristics, including scientific focus, organizational structure, station construction, instrumentation, and data products. Through specific cases, such as the smog and sandstorm in Beijing from 9 to 11 February 2022, the scientific value of the observational data is demonstrated, filling the observational gap in mid-to-high latitude regions along the Belt and Road Initiative, with important scientific and policy implications. Leveraging the advantages of miniaturization, automation, and networking, the ANSO-AON improves flexibility and efficiency in atmospheric sounding. In conclusion, the ANSO-AON offers significant benefits in terms of size, automation, and networking. The paper emphasizes the importance of strengthening observation capabilities, particularly at high latitudes, to better support global environmental and climate research, as well as efforts in climate change and environmental protection.Conclusions and ProspectsIn the context of global climate change, enhancing atmospheric observation capabilities in mid-latitude and high-latitude regions, particularly in areas like Russia and Belarus influenced by continental climates, has become a critical focus for future development. The climate characteristics of these regions are valuable for research, yet the lack of strong atmospheric observation infrastructure has led to a significant data gap. Strengthening long-term observations in these regions will provide crucial data for global climate research and support satellite data validation. In the future, observations in these regions will fill important data gaps in global climate change research and provide more comprehensive empirical evidence to enhance the accuracy of global climate change models. The ANSO-AON has filled the data gap in traditional networks by providing continuous monitoring in mid- and high-latitude regions along the Belt and Road Initiative through its long-term stations and advanced equipment. The deployment of miniaturized equipment in these regions enables this achievement, offering valuable support for research on climate change, air quality, and environmental pollution. By utilizing miniaturized and automated observation instruments, the ANSO-AON ensures efficient data collection and real-time transmission, while maintaining the stability and reliability of its equipment, even in harsh environments. This capability has established the network as a critical foundation for global atmospheric change research and extreme weather warning systems. Furthermore, the successful deployment of these stations has fostered international cooperation among BRICS countries and deepened scientific and technological collaboration along the Belt and Road. Looking ahead, the ANSO-AON will focus on addressing multiple strategic objectives, including tackling global climate change, promoting scientific and technological innovation, and enhancing emergency response capabilities. As the challenges posed by climate change intensify and the demand for environmental protection grows, the ANSO-AON is poised to play a pivotal role in scientific research, policy formulation, and international cooperation. It will also enhance global climate system understanding, foster cross-border collaborations, and strengthen international emergency response efforts.

SignificanceHyperspectral lidar (HSL), an emerging active remote sensing technology, integrates the three-dimensional (3D) spatial detection capability of traditional lidar with the rich spectral information of hyperspectral imaging, addressing the long-standing limitation of separate spatial and spectral information acquisition in conventional remote sensing. Unlike passive hyperspectral imaging (which lacks 3D perception) and single-wavelength lidar (which lacks spectral discrimination), HSL simultaneously captures high-resolution 3D coordinates and spectral reflectance characteristics of targets, generating four-dimensional spatial-spectral point clouds. This unique capability is pivotal for advancing precision applications such as forest resource surveys (quantifying vertical structure and biochemical components), land cover classification (enhancing accuracy via spectral-spatial synergy), urban 3D modeling (distinguishing material properties), and target detection (penetrating obscurations). By enabling “one-stop” acquisition of both physical structure and chemical composition, HSL revolutionizes how we perceive and analyze complex environments, making it indispensable for addressing global challenges like sustainable resource management, and smart urban development.ProgressOver the past two decades, HSL has evolved from dual-wavelength prototypes to sophisticated systems with tens even hundred spectral channels, driven by advancements in supercontinuum laser sources and spectral detection technologies. Key progress includes:1) System architectures: Two dominant spectral splitting schemes have been developed: spatial splitting (using gratings for simultaneous multi-wavelength detection, suitable for airborne large-area scanning) and wavelength scanning [using acousto-optic tunable filter/liquid crystal tunable filter (AOTF/LCTF) for high spectral resolution, ideal for fine spectral analysis]. Representative systems, such as the 56-channel airborne HSL (Wuhan University) have achieved detection ranges up to 500 m and 101-channel ground-based HSL (Anhui Jianzhu University), and spectral resolution as high as 5 nm.2) Waveform processing: To extract accurate spatial-spectral information from overlapping echoes, methods like multi-spectral waveform decomposition (MSWD), multi-channel interconnection waveform decomposition (MIWD), and range resolution enhanced method with spectral properties (RREM) have been proposed. These techniques will enhance range resolution for lidar signals by leveraging cross-channel spectral correlations, overcoming the limitations of single-wavelength decomposition.3) Radiometric correction: Strategies to mitigate distance effect (via piecewise fitting), incidence angle effect (using Lambertian-Beckmann models), and sub-footprint effect (through spectral ratio and area-weighted correction) have been developed, ensuring reliable spectral reflectance retrieval across diverse targets (vegetation, minerals, building materials).4) Spatial-spectral point cloud applications: Techniques for point cloud generation (enabling true-color imaging without passive data), classification (combining machine/deep learning with spatial-spectral features), and feature extraction (e.g., crop nitrogen content, mineral identification) have been validated, with classification accuracies exceeding 90% in vegetation and mineral scenarios.Conclusions and ProspectsHSL has demonstrated significant potential in various applications, including vegetation monitoring, mineral exploration, and urban modeling, by providing detailed spatial and spectral information. However, challenges remain: limited detection range (mostly <100 m for ground systems), slow multi-channel data processing (lagging behind acquisition rates), and high system complexity hindering commercialization. Future research should focus on: 1) System advancement: developing miniaturized, multi-platform (airborne, satellite-borne, underwater) systems via high-power supercontinuum lasers and low-loss spectral splitters to extend detection range and reduce cost; 2) Information processing: enhancing real-time performance through hardware acceleration (FPGA/ASIC) and deep learning-based multi-channel waveform decomposition, and improving radiometric correction for non-Lambertian targets; 3) Application expansion: exploring new frontiers such as defense reconnaissance (obscured target identification) and smart agriculture (3D biochemical mapping), supported by open datasets and standardized processing workflows. As these challenges are addressed, HSL is poised to become a cornerstone technology in high-precision remote sensing, enabling unprecedented insights into Earth systems and beyond.

ObjectiveHigh-resolution and accurate profiles of atmospheric temperature have wide applications in weather analysis and forecasting, climate change assessment, atmospheric chemistry research, and remote-sensing measurements. Current primary tools for measuring atmospheric temperature profiles include radiosondes, microwave radiometers, and lidars. While conventional radiosondes provide temperature profiles with satisfactory accuracy and excellent height resolution, their inadequate temporal resolution (approximately 12 hours) impedes the capture of relatively rapid atmospheric processes. Temperature profiles retrieved from microwave radiometer measurements generally suffer from relatively low accuracy, as they rely on statistics-based inversion algorithms that incorporate artificially introduced assumptions. Single-line-extracted pure rotational Raman (PRR) lidar represents an active remote sensing technique capable of obtaining accurate, high-range-resolution atmospheric temperature profiles from the lower troposphere to the lower stratosphere. Originally proposed by Cooney in 1972, this concept remained unrealized for over fifty years due to technical challenges: the atmospheric pure rotational Raman spectrum consists of mixed spectral lines from nitrogen and oxygen molecules, and the bandwidth of commercially-available optical filters was excessive. Although many research teams worldwide have contributed to its development, the technical implementation was not achieved until recently. By analyzing the PRR spectra of air molecules, we identified that two individual Stokes N2 PRR lines corresponding to rotational quantum numbers J=4 and J=14 can be optically isolated using commercially available interference filters (IF) combined with Fabry-Perot interferometer (FPI). Based on this finding, we developed a single-line-extracted PRR lidar system for accurate atmospheric temperature profiling and simultaneous retrieval of aerosol/cloud backscatter coefficients.MethodsThe lidar utilizes the second harmonic (532.237 nm) of a seeded Nd∶YAG laser system with injection as an emitted light. The receiving unit comprises two different-aperture (0.3 m and 1.0 m) receivers, dedicated to near-range and far-range measurements, respectively. Each receiver is equipped with a custom-built three-channel polychromator. This advanced polychromator isolates two individual Stokes N2 PRR lines corresponding to rotational quantum numbers J=4 and J=14 (in the two Raman channels), along with the Rayleigh/Mie backscatter signal (in the elastic channel). The two Raman lines correspond to the vacuum wavelengths of 533.479 nm and 535.749 nm, respectively. Each Raman channel uses a set of signal extraction components consisting of two identical interference filters and a temperature-controlled sandwich solid Fabry-Perot interferometer. The interference filters are centered at the respective Raman wavelengths (533.479 nm or 535.749 nm), with a bandwidth of 0.16 nm, a peak transmission of approximately 60%, and an elastic-signal rejection ratio exceeding 104. The elastic channel is equipped with a single interference filter with a bandwidth of 0.3 nm and a peak transmission of about 80% at 532.237 nm.Results and DiscussionsThe atmospheric temperature T(z) has been derived from a rigorous analytical expression that relates temperature to the ratio Q(z) of the signal photon counts in the two Raman channels. This analytical expression estalishes a simple functional relation between T(z) and Q(z) involving two constant parameters, A and B. Parameter A is obtained through theoretical calculation, while B is determined via laboratory measurement, eliminating the need for external calibration using data from other instruments. Using the derived temperature profiles, aerosol and cloud backscatter coefficients can be rigorously derived from the signal photon counts in the J=4 Raman channel and the elastic channel, eliminating the need for additional assumptions such as a constant lidar ratio or ?ngstr?m relationship. This provides for the first time a valid and straightforward approach for profiling the optical properties of aerosols and clouds. The performance of the lidar system was evaluated through observational examples and statistical comparisons at a subtropical site in Wuhan, China, using an integration time of 1 hour and a vertical resolution of 150 m. During nighttime operations, the lidar effectively measured temperature profiles from approximately 2 km to 35 km in altitude. The results showed excellent agreement with simultaneous radiosonde data within the 2?16 km altitude range (the altitude range of available sonde data). The temperature measurement uncertainty in nighttime was below 1 K at altitudes up to 21 km. Under daytime conditions, the lidar successfully retrieved temperature profiles from ~2 km to 16 km, showing good consistency with simultaneous sonde data. The daytime lidar temperature profiles exhibited statistical uncertainty of less than 1 K within an altitude range from 2 to 11 km. Such a large altitude range is unprecedented for daytime lidar temperature measurements. Additionally, the pure rotational Raman lidar also demonstrated an excellent performance in revealing small-scale temperature structures, such as temperature inversions, in the troposphere.ConclusionsSingle-line-extracted pure rotational Raman lidar has been develpoed for accurate profiling of atmospheric temperature and aerosol/cloud backscatter coefficients. A seeded frequency-doubled Nd∶YAG laser is utilized as the light source. Light backscattered from atmosphere is collected by two receivers with diameters of 0.3 m and 1.0 m, respectively. Each receiver is equipped with a custom-built three-channel polychromator that isolates respectively two individual Stokes N2 PRR lines corresponding to rotational quantum numbers J=4 and J=14, along with the Rayleigh/Mie backscatter signal. The logarithm of the ratio between two PRR channel signals is a linear function of the reciprocal of atmospheric temperature. The two parameters in this function are a physical constant and a laboratory-measurable quantity, respectively. Therefore, the atmospheric temperature profiles can be obtained accurately derived from the ratio of the two PRR line signals without requiring external calibration. Based on the derived temperature profiles, the aerosol/cloud backscatter and extinction coefficient profiles can be further rigorously determined from the measured PRR J=4 signal and elastic backscatter signal, without relying on additional assumptions. The derived aerosol/cloud parameters and resulting lidar ratio provide benchmarks for aerosol and cloud lidar measurements. Field experiments have demonstrated that this lidar achieves a wide altitude coverage, high time and altitude resolutions, and unprecedented daytime performance, making it widely applicable in the fields of meteorology and atmospheric environment research.

ObjectiveOzone is an important trace component in the atmosphere. Stratospheric ozone can absorb ultraviolet (UV) radiation from the sun, protecting the Earth’s surface from its damaging effects. However, tropospheric ozone acts as a pollutant gas, posing health risks to human and ecosystems when concentrations are excessive. Similarly, high concentrations of ground-level aerosols not only reduce visibility but also contribute to respiratory and cardiovascular diseases. In recent years, domestic control measures for PM2.5 have led to a gradual decline in its concentrations. On the contrary, ozone pollution has become increasingly prominent. To promote the coordinated control of PM2.5 and ozone, accurate monitoring of theirspatiotemporal distribution using atmospheric lidar is essential. This enables the characterization of ozone concentrations at both local and larger scales. Achieving this goal necessitates advancements in ozone lidar laser source technology. Currently, most ozone lidar systems employ gaseous Raman laser sources, which suffer from low conversion efficiency and poor stability, rendering them unsuitable for mobile platforms such as vehicle-based monitoring. Therefore, building upon previous research, a novel compact all-solid-state free-tuning four-wavelength laser source for ozone differential absorption lidar using intra-cavity Raman laser technology has been designed. This design further enhances the stability and reliability of the laser source system. It provides a foundation for developing portable, multi-platform differential absorption lidar systems for atmospheric ozone monitoring, thereby expanding the hardware capabilities for such measurements.MethodsFor ozone detection wavelength selection, solid-state Raman media including KGW and SrWO4 crystals were systematically analyzed within an intra-cavity Raman laser system configuration. The designed laser architecture utilizes an 808 nm laser diode-pumped Nd∶YVO4 crystal to achieve dual-wavelength ultraviolet output. By implementing a nested cavity structure, the system effectively couples laser oscillation with stimulated Raman scattering within a single resonant cavity. Subsequent nonlinear optical interactions generate pulsed outputs spanning ultraviolet and visible spectral regions. This intra-cavity Raman laser serves as the emission source for ozone lidar systems, facilitating comparative atmospheric ozone detection tests to validate the laser’s operational reliability and measurement efficacy. Field validation was further conducted using both ground-based stationary and vehicle-mounted mobile monitoring platforms, enhancing regional ozone distribution monitoring capabilities.Results and DiscussionsThe implementation of an intra-cavity Raman laser system successfully generated four-wavelength laser output (Fig. 3), demonstrating superior optical performance. This laser was integrated into an ozone lidar system. Comparative validation experiments conducted at the Canton Tower environmental monitoring station (Fig. 7) confirmed the reliability and effectiveness of the intra-cavity Raman laser as the lidar emission source. Utilizing this outdoor intra-cavity Raman laser technology, the ozone lidar system performed extended ground-based observations in Xianyang city. These observations yielded high-quality detection data (Fig. 10), simultaneously capturing cloud altitude, aerosol transport and dissipation dynamics, along with spatiotemporal ozone concentration distribution patterns. Furthermore, mobile monitoring trials conducted with the lidar system in Nanjing effectively pinpointed localized ozone concentration hotspots along the survey route (Fig. 12), providing valuable technical support for tracing ozone pollution origins.ConclusionsA differential absorption lidar emission laser source for atmospheric ozone monitoring has been developed employing intra-cavity Raman laser technology. This innovative system utilizes nested resonator configurations and nonlinear optical effects to generate high-quality visible and ultraviolet laser beams, enabling simultaneous detection of atmospheric ozone and aerosol vertical profiles. The intra-cavity architecture significantly reduces reliance on external optical components, enhancing the overall system stability. Through compact integrated design, the laser’s physical dimensions have been substantially minimized, enhancing its suitablility for mobile monitoring platforms. The lidar system, based on this intra-cavity Raman laser, has been successfully deployed in multiple operational scenarios, including extended ground-based observations and vehicular mobile detection campaigns. Field monitoring data consistently demonstrate the robust and reliable system performance, establishing critical hardware foundations for advancing atmospheric ozone monitoring capabilities.

ObjectiveOHx free radical LIDAR detection usually requires a single-frequency ultraviolet wavelength, such as 308 nm, and an optical parametric oscillator (OPO) is one of the main devices to achieve nonlinear frequency conversion. However, most traditional OPOs use wide linewidth or multi-mode lasers as pump sources, which leads to problems such as high phase noise and low spectral purity of the final output laser and limits the application of OPOs in high-precision spectral detection and quantum optics. In addition, increasing the power of single-frequency lasers faces challenges such as nonlinear effects and thermal effects in laser gain media. Therefore, it is essential to use a high-quality and high-power single-frequency pump source for pumping OPOs. In this paper, based on the efficient one-dimensional heat dissipation characteristics and spherical aberration self-compensation technology of Innoslab, we designed the Innoslab amplifier to increase the single-frequency power while keeping the beam quality unchanged, providing a good pump source for OPOs.MethodsThe thermal effect of the laser crystal is essential to the design of the Innoslab laser amplifier. We first used finite element analysis to simulate the multi-dimensional thermal effects of the laser crystal and explored the temperature distribution and thermal stress distribution of the laser crystal from each dimension. Then, we investigated whether Nd∶YVO4 would undergo thermally induced stress fracture at the theoretical pump power and determined the focal length of the thermal lens of the laser amplifier at the same theoretical pump power. Another key design factor of the Innoslab laser amplifier was the pattern matching between the seed light and the pump light. For the known pump light size, we used multiple cylindrical lenses to flexibly shape the dimensions of the seed light along the fast axis and slow axis directions to match the pump spot. In addition, while realizing the high-power single-frequency laser output, we should also avoid the deterioration of beam quality caused by the thermally induced spherical aberration effect. Therefore, we designed spherical aberration self-compensation structure based on the principle of the Fourier transform 4f imaging system.Results and DiscussionsThrough finite element analysis and simulation, the temperature distribution and multi-dimensional thermal stress distribution of the laser crystal are clarified, and the focal length of the thermal lens is further determined. We numerically determine the thermal effect of the laser crystal accurately and provide theoretical support for the design of the Innoslab laser amplifier (Fig. 3 and Fig. 4). Thermally induced spherical aberration has always been a key factor affecting the performance of Innoslab laser amplifier. The realization principle of spherical aberration self-compensation is explained from the perspective of the 4f imaging system based on Fourier optics (Fig. 5), and the beam quality before and after amplification remains the same. The design of the Innoslab laser amplifier requires that when the seed light passes through the laser crystal multiple times, its size in the fast axis direction remains unchanged, and its size in the the slow axis direction increases evenly. This purpose is achieved through the flexible design of the magnifying end-pump and multiple cylindrical lenses (Fig. 7). The engineered Innoslab laser amplifier designed has been operating continuously and stably for several months (Fig. 10).ConclusionsThe proposed high-power single-frequency laser is successfully applied to the OHx free radical LIDAR light source, and the pumped OPO module successfully outputs a 731 nm laser, which provides the basis for subsequent nonlinear frequency conversion. Compared with previous laser amplifiers, the principle of spherical aberration self-compensation is theoretically explained, ensuring that the beam quality before and after laser amplification is unchanged. In addition, the designed Innoslab laser amplifier has been applied in engineering and exhibits stability. So far, it has been operating stably for several months.

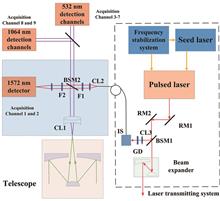

ObjectiveWith the rapid and diversified development of the national economy, the current domestic atmospheric environment remains complex. So lidar is in high demand as a critical tool for monitoring air pollution and greenhouse gases. In atmospheric remote sensing lidar system, high-spectral-resolution lidar (HSRL) and differential absorption lidar (DIAL) play significant roles in fields such as atmospheric composition detection and environmental monitoring due to their high precision and high sensitivity. A high-power, narrow-linewidth, and frequency tunable 1064 nm continuous-wave (CW) single-frequency laser can be used as the light source for HSRL via pulse modulation and power amplification. It can also generate short-wave and mid-infrared lasers through nonlinear optical frequency conversion techniques such as optical parametric oscillators (OPOs), providing DIAL with rich wavelength options. This paper aims to develop a broadband, mode-hop-free, tunable CW single-frequency Nd∶YVO4 laser based on a unidirectional figure-eight ring cavity structure. In the future, this laser will be integrated as a core component into mobile-platform lidar systems, providing robust support for air pollution and greenhouse gases monitoring.MethodsIn this work, we develope a mode-hop-free, continuously tunable single-frequency laser at 1064 nm, which features a compact structure, high output power, and a broad frequency tuning range. The figure-eight ring cavity consists of four mirrors (M1?M4). M1 and M4 also serve as the pump light input and laser output couplers, respectively. The laser is pumped by a fiber-coupled laser diode. The gain medium is an Nd∶YVO4 crystal with natural birefringence. Compared to the Nd∶YAG crystal, Nd∶YVO4 has a higher absorption coefficient, a broader absorption bandwidth, and can directly generate linearly polarized lasers. To ensure single-longitudinal-mode operation, an optical unidirectional device composed of a terbium gallium garnet (TGG) crystal and a half-wave plate (HWP) is added into the cavity to eliminate the spatial hole burning. To achieve high power output, an etalon with a free spectral range (FSR) much larger than the cavity’s FSR is incorporated to narrow the effective gain bandwidth and suppress competing longitudinal modes. Intracavity insertion of a noncritically phase-matched LiB3O5 (LBO) crystal enables 532 nm laser output, which coincides with the absorption line of iodine molecules, facilitating long-term frequency stabilization. Finally, through coordinated control of the laser crystal temperature and piezoelectric ceramics (PZT)-driven cavity mirror, continuous mode-hop-free frequency tuning over a range of 14 GHz is achieved.Results and DiscussionsExperimental results demonstrate that the laser designed in this work exhibits excellent performance in all aspects. Under a pump power of 25 W, the maximum output powers of the fundamental-wave and second-harmonic-wave laser reach 6.5 W and 2.1 W, with slope efficiencies of 26.12% and 8.36%, respectively (Fig. 4). The power stabilities over 4 h are 0.23% and 0.31% (Fig. 5). Within 1.35 h, the fundamental-wave power fluctuation is approximately ±0.238 pm (±63.1 MHz) (Fig. 7). The PZT tuning coefficient of the fundamental-wave is about -0.58 pm/V (-153.53 MHz/V) (Fig. 8), while the temperature tuning coefficient is about -26.89 pm/℃ (-7.12 GHz/℃) (Fig. 9). By employing the coordinated control of crystal temperature and cavity length, mode-hop-free wavelength tuning over a range of 53.16 pm (14.08 GHz) is achieved (Fig. 10). Compared to the method of coordinated control of the etalon angle and PZT-driven mirror, the wavelength tuning approach proposed in this paper eliminates the need for complex mechanical and electronic control systems. Although the experimental results align well with theoretical predictions, the current frequency tuning method requires further refinement. Due to the inherent thermal inertia of crystal temperature adjustment, the tuning speed must remain moderate, and nonlinearity is observed during the initial and final stages of frequency tuning (Fig. 10). Additionally, hysteresis effects in the PZT actuator introduce deviations in wavelength tuning linearity. These issues will be systematically addressed in future work.ConclusionsThis paper presents a continuous-wave single-frequency Nd∶YVO4 laser based on a figure-eight ring cavity, achieving 6.5 W of fundamental-wave and 2.1 W of second-harmonic wave laser output, with power stabilities of 0.23% and 0.31% over 4 h, respectively. Continuous wavelength tuning across a 14 GHz range is achieved by driving a PZT mounted cavity mirror to adjust the resonator length while synchronizing with laser mode variations induced by crystal temperature tuning. This laser overcomes the limitations of conventional cavity length control methods, which only allow continuous tuning within a single longitudinal mode. And, in contrast to approaches that require coordinated control of the etalon angle and cavity length, our design eliminates the need for complex control systems involving galvanometer actuators and phase-locked electronics. However, its tuning range and speed are still inferior to what can be achieved with etalon angle control, and further improvements are needed for the linearity of the control algorithm. Finally, the wavelength tuning performance is experimentally verified by scanning the absorption spectrum of iodine molecules. Potential applications of this laser include lidar systems or serving as a pump source for mid-infrared OPOs.

ObjectiveMultiwavelength lidar aerosol microphysical parameter retrieval represents a fundamental ill-posed inverse problem governed by Fredholm integral equations, where limited optical observations must constrain infinite-dimensional particle size distributions. Current regularization methods provide only point estimates without uncertainty quantification, severely limiting their applications in climate model validation and data assimilation. The IPCC sixth assessment report identifies aerosol-radiation interactions as the largest uncertainty source in climate forcing (-2.0~-0.4 W·m-2), largely due to inadequate aerosol property characterization. Traditional approaches suffer from three critical limitations: subjective regularization parameter selection, absence of uncertainty bounds, and inability to systematically incorporate prior physical knowledge. This study develops a comprehensive Bayesian framework that transforms deterministic retrieval into probabilistic inference, providing rigorous uncertainty quantification essential for advancing atmospheric aerosol science and climate applications.MethodsWe establish a complete Bayesian inversion framework based on Mie scattering theory: gp(λ)=∫Kp(λ, r, m)v(r)dr+ε, where gp(λ) represents observed optical parameters, Kp(λ, r, m) is the Mie-derived kernel function, v(r) is volume size distribution, and ε denotes measurement noise. The continuous distribution is discretized using eight B-spline basis functions with weight coefficients w treated as random variables. The hierarchical Bayesian model specifies P(w|α)=N(0, α-1I) and P(g|w, A, β)=N(Aw, β-1I), where precision hyperparameters α and β follow Gamma priors and are automatically estimated through variational inference. This eliminates subjective parameter selection while providing complete posterior distributions P(w|g, A)=N(μw, Σw) with mean μw=(αI+βATA)-1βATg and covariance Σw=(αI+βATA)-1. We rigorously establish mathematical equivalence between Tikhonov regularization and Bayesian maximum a posteriori (MAP) estimation, demonstrating that classical methods emerge as special cases of our probabilistic framework, thus providing unified theoretical guidance for method selection.Results and DiscussionsComprehensive validation using 3β+2α lidar configurations (355, 532, 1064 nm backscatter; 355, 532 nm extinction) demonstrates exceptional performance across challenging scenarios. For bimodal distributions representing atmospheric fine and accumulation modes, geometric mean radius retrieval errors remain below 5% (fine mode: -0.6%, accumulation mode: +4.4%) with effective radius errors under 3.5% (Fig. 3). Under realistic noise conditions (10%?20%), the Bayesian method exhibits superior robustness through automatic regularization adaptation, while posterior variance analysis reveals physically meaningful uncertainty patterns: elevated uncertainty in modal boundaries (0.2?0.4 μm) reflecting observational constraints, and high uncertainty in large particle tails consistent with reduced lidar sensitivity (Fig. 4). Statistical validation through 1000 Monte Carlo experiments confirms 95% credible intervals, demonstrating accurate uncertainty quantification. The method correctly captures aerosol microphysics where fine particles dominate numerically (90.9%) while accumulation mode controls volume (93.5%) and optical properties. Comparative analysis reveals complementary characteristics: Tikhonov excels in computational efficiency and single-mode accuracy (1.24% mean error), while Bayesian provides comprehensive uncertainty information with comparable accuracy (2.29% mean error), making it optimal for scientific applications requiring rigorous uncertainty assessment.ConclusionsThis paper establishes a transformative Bayesian framework for multiwavelength lidar aerosol retrieval that addresses fundamental limitations of deterministic approaches. Key contributions include: 1) complete probabilistic formulation providing both accurate retrievals and comprehensive uncertainty quantification; 2) rigorous mathematical unification of regularization methods under Bayesian theory; 3) automatic hyperparameter estimation eliminating subjective parameter selection; 4) demonstrated superior robustness and statistically validated uncertainty bounds. The framework’s scientific impact extends to climate model evaluation through quantified parameter uncertainties, improved data assimilation via observation error covariances, and uncertainty-aware environmental monitoring. Experimental validation confirms geometric mean errors below 5% with reliable uncertainty propagation under observational noise. This probabilistic paradigm represents a significant methodological advancement for atmospheric remote sensing, providing essential tools for climate prediction, air quality assessment, and environmental policy applications requiring rigorous uncertainty quantification.

ObjectiveThe middle atmosphere, extending from the stratosphere to the lower thermosphere, functions as a critical transitional region between the lower and upper atmosphere. This layer exhibits complex dynamical processes—including gravity waves, atmospheric tides, and planetary waves—that substantially influence global circulation, energy transport, and vertical coupling across atmospheric layers. Precise characterization of these processes necessitates high-resolution temperature measurements, obtainable through Rayleigh scattering Lidar systems. These Lidars detect molecular backscatter signals, enabling accurate temperature profiling of the middle atmosphere with fine spatial and temporal resolution. Two principal methods are commonly employed to retrieve temperature profiles from Rayleigh Lidar measurements: the hydrostatic equilibrium method developed by Hauchecorne and Chanin (HC, also referred to as the C-H method), and the optimal estimation method (OEM). The HC method, a classical approach, utilizes hydrostatic equilibrium and integrates downward from a specified reference temperature at a high altitude. Although widely implemented, its accuracy depends significantly on the selected reference point. Conversely, OEM is a statistical retrieval method based on Bayesian theory. It integrates observational data with prior information to minimize uncertainties and enhance retrieval stability, proving particularly effective for nonlinear and ill-posed inverse problems. This study conducts a systematic comparison of the HC and OEM methods using both simulated and actual Lidar observations from Zhongshan Station, Antarctica. Their performance is assessed regarding retrieval accuracy, error propagation, and sensitivity to reference conditions, thus providing insights into Rayleigh Lidar retrieval method optimization.MethodsSimulations were based on Rayleigh Lidar observations collected at Zhongshan Station on August 10, 2020. A synthetic Lidar signal was constructed using temperature profiles from the CIRA-86 model, which served as the true reference temperature. Temperature retrievals were then performed using both the HC and OEM methods. The HC method primarily relies on the hydrostatic equilibrium equation and the ideal gas law. An altitude was selected as the reference height where the uncertainty in the retrieved atmospheric density is 10%, and the corresponding model temperature at this altitude was used as the reference temperature. The OEM is based on Bayesian statistical theory and implemented using a least-squares optimization framework. Its fundamental principle involves the weighted integration of observational data and prior knowledge (such as the prior state vector and its covariance) in the error covariance space, thereby deriving the most physically reasonable state estimate that optimally fits the actual observations. In the application of the OEM, a suitable forward model [equation (14)] was constructed based on the Rayleigh Lidar equation, and the MSISE-00 model temperature was used as the a priori temperature profile.Results and DiscussionsTemperature retrievals using both HC and OEM methods were evaluated with the simulated lidar signals. The HC method, based on hydrostatic equilibrium, effectively retrieved the temperature structure across most of the vertical range when accurate reference temperatures were available (Fig. 2). However, the method exhibited high sensitivity to the chosen reference temperature, particularly within the 10?15 km region below the reference height where retrieval uncertainties increased substantially (Fig. 3). The OEM, evaluated through its averaging kernel matrix, demonstrated that observational data dominated the retrieval below 96 km (Fig. 5), while error budget analysis (Fig. 7) systematically quantified contributions from observation noise, forward model errors, and prior information. Additionally, the OEM’s enhanced robustness was confirmed through reference pressure perturbation tests (Fig. 8), demonstrating significant stability against such variations. Validation using actual Lidar measurements revealed good agreement between the two methods. Temperature differences remained generally below 2% below 87 km (Fig. 9), indicating consistency between the HC and OEM retrievals under well-constrained conditions.ConclusionsIn the present study, we compare HC and OEM temperature retrieval methods using Rayleigh Lidar signal from Zhongshan Station, Antarctica, and CIRA-86-based simulations. Key findings include: 1) both methods achieved accurate retrievals below 95 km with true reference parameters, with the HC method showing slightly better precision; 2) HC method exhibited strong sensitivity to reference temperature (especially within 10?15 km below the reference height), while OEM demonstrated superior robustness against reference pressure perturbations; 3) OEM enabled comprehensive uncertainty quantification (observation noise, model errors, etc.), offering distinct advantages for scientific analysis; 4) the HC method, due to its simplicity and low computational cost, remains well suited for operational or real-time applications. While both methods can reliably retrieve atmospheric temperature profiles, OEM is better suited for scientific applications requiring comprehensive uncertainty quantification, whereas the HC method is more applicable in scenarios prioritizing algorithmic simplicity and retrieval stability.

ObjectiveCirrus clouds, consisting entirely of ice crystals in the upper troposphere, cover approximately 25% of the globe at any given time. These clouds produce a net warming effect through substantial longwave trapping, despite reflecting some solar radiation. Ice crystal formation occurs through two pathways: homogeneous nucleation, which requires temperatures below -38 ℃ and high ice supersaturation, or heterogeneous nucleation on ice-nucleating particles (INPs) such as mineral dust and smoke at lower supersaturation levels. These distinct formation mechanisms result in different microphysical properties: heterogeneous nucleation typically produces fewer, larger crystals and optically thinner clouds, while homogeneous nucleation generates numerous small crystals that enhance infrared heating. Although the upper troposphere above oceanic regions is generally considered pristine, Asian dust transported over long distances can reach altitudes of 5?15 km and periodically seed cirrus formation in remote areas, including the mid-North Atlantic. However, the quantitative impact of such transported dust on ice formation—and consequently on cirrus radiative forcing—remains inadequately understood due to limited direct in-situ observations. This study examines representative oceanic cases to determine the relative prevalence of heterogeneous versus homogeneous nucleation in dust-influenced cirrus, providing essential constraints for climate models and reducing uncertainty in cirrus feedbacks.MethodsIn this study, we first interrogate CALIPSO/CALIOP profiles to locate dust?cirrus co-occurrences along the trans-Pacific/North American corridor into the North Atlantic. We use CALIOP Level-1 (v4.51) 532 nm attenuated backscatter and volume depolarization ratio to flag layered features, and Level-2 aerosol profiles (v4.2) to obtain extinction, particle depolarization, and the vertical feature mask for subtype identification (pure/polluted dust and cirrus). Because automated classification can confuse optically thin cirrus with dust, we apply additional screening based on depolarization thresholds and layer continuity, and collocate meteorology (temperature, pressure, relative humidity) from embedded MERRA-2 fields. To characterize cirrus microphysics, we use A-Train DARDAR products that combine CloudSat CPR with CALIOP. DARDAR-Cloud provides extinction, effective radius, and ice water density; DARDAR-Nice supplies ice crystal number concentration (ICNC) profiles for ice crystal diameters greater than 5, 25, and 100 μm at 60 m vertical and 1.7 km horizontal resolution. Recognizing lidar?radar size-sensitivity differences and known retrieval limitations, we assign a conservative factor-of-three uncertainty to ICNC. Dust-related ice-nucleating particles concentration (INPC) is derived with POLIPHON using dust extinction as input. Dust extinction is separated from total aerosol extinction via a one-step method and computed assuming a dust lidar ratio of 45 sr. We convert extinction to dust mass, coarse-mode number, and surface area concentrations with regionally constrained coefficients from nearby AERONET observations (Tudor Hill). Given the very low cirrus temperatures, we restrict nucleation to deposition mode and calculate INPC with the U17-D parameterization using dust surface area as predictor. We define dust?cirrus interaction when vertical or horizontal overlap is evident. Event provenance is corroborated with daily MERRA-2 dust column mass fields and HYSPLIT back-trajectories (GDAS-driven), linking the observed North Atlantic layers to Asian sources and establishing two rare, long-range transport cases for subsequent INPC?ICNC closure.Results and DiscussionsIn the first event (May 18, 2007), CALIOP reveals a cirrostratus deck embedded within a pure dust layer between 9.4 and 10.9 km, exhibiting strong attenuated backscatter and high depolarization that indicate nonspherical ice and dust (Fig. 1). HYSPLIT traces the air mass to Asian deserts on May 9?10, with elevated layers tracked across the Pacific, over North America, and into the North Atlantic (Figs. 2?3). Within the overlap zone, DARDAR-Cloud retrievals demonstrate mean extinction near 0.10 km-1, an effective radius of 39.2 μm, and an ice water density of 2.0 mg·m-3 (Fig. 4). Using POLIPHON-derived dust extinction with the U17-D scheme, the dust INPCs measure 26.0 L-1 at an ice saturation ratio of 1.15 and 483.5 L-1 at 1.25, increasing toward the cloud top (Fig. 5). Collocated DARDAR-Nice indicates mean ICNCs of 32.3 L-1 for diameters larger than 5 μm, 15.3 L-1 for larger than 25 μm, and 2.4 L-1 for larger than 100 μm, peaking approximately 0.3 km below the top (Fig. 5). Excluding the 1.25 case—where dust INPC exceeds ICNC, likely due to sedimentation and particle-size assumptions—the closure remains within one order of magnitude, supporting dust-driven heterogeneous nucleation. In the second event (April 25, 2008), a smaller cirrus system between 10.0 and 11.4 km exists adjacent to a polluted or aged dust layer. The aerosol exhibits weaker backscatter and lower volume depolarization ratio (0.1?0.2), while the cirrus maintains higher volume depolarization ratio above 0.3 (Fig. 6). Trajectories again suggest Asian sources and sustained lofted transport between roughly 7 and 11 km (Figs. 7?8). Microphysical retrievals indicate mean extinction near 0.25 km-1, an effective radius of 56.9 μm, and an ice water density of 8.9 mg·m?3 (Fig. 9). Under temperatures of -54 to -57 ℃ and typical ice saturation ratios of 1.15?1.25, the U17-D scheme, reduced tenfold to reflect aging, yields mean dust INPC from 4.4 to 95.7 L-1 (Fig. 10). The corresponding ICNC averages are 68.9 L-1 (>5 μm), 34.1 L-1 (>25 μm), and 6.6 L-1 (>100 μm), with localized enhancements in nice,5 μm approaching 500 L-1 (Figs.9?10). The near-closure again indicates heterogeneous nucleation dominance; brief high-ICNC pockets likely reflect transient updrafts or partial INP depletion. These two trans-Pacific cases demonstrate that Asian dust maintains ice-nucleating activity after extensive transport and can significantly influence cirrus formation over the North Atlantic, with pure dust active at lower saturation and polluted dust requiring slightly higher saturation to produce comparable ice.ConclusionsTo reduce uncertainties in cloud radiative forcing and climate projections, models must explicitly represent how Asian dust is lofted by midlatitude westerlies, traverses the Pacific, and enters the North Atlantic, while maintaining ice-nucleation activity after long-range transport. Clay minerals such as kaolinite and illite provide efficient ice-nucleating sites at relatively warmer upper-tropospheric temperatures, increasing initial ice crystal numbers, shifting sizes toward larger particles, rapidly consuming supersaturation, and inhibiting subsequent homogeneous freezing. These modifications affect cirrus effective radius, ice water density, and the balance between longwave cooling and shortwave reflection, potentially influencing regional heat budgets and circulation. Therefore, global and regional models should incorporate prognostic dust mineralogy and aging-dependent INP efficiency, resolve competition between heterogeneous and homogeneous pathways under realistic updrafts, and evaluate against combined lidar?radar constraints (e.g., CALIPSO/CloudSat and EarthCARE) and reanalysis dust fields. Additional marine INP sources (sea salt, smoke, volcanic aerosols) should also be considered. Our North Atlantic cases demonstrate that dust-driven heterogeneous nucleation can dominate cirrus formation, emphasizing the importance of including these processes to reduce radiative-forcing uncertainty.

ObjectiveOxygen A-band (O2A, 762 nm) airglow is one of the strongest radiation features in the middle and upper atmosphere. O2A airglow is evenly distributed across all latitudes and occurs at altitudes of 40‒200 km. Most ground-based measurements are limited due to the strong absorption of O2 in the airglow. Therefore, it is necessary to study space-based limb detection methods to detect global airglow. Based on this, the global temperature profile can be obtained. This profile supports studies of atmospheric environmental change, atmospheric dynamics, and meteorological monitoring.MethodsUsing the temperature and ozone profile monitoring spectrometer (TOPS), we discuss space-based limb detection methods for O2A airglow. TOPS was launched on January 29, 2025, to measure the global distribution of temperature, atmospheric ozone, and other atmospheric components. In this paper, the O2A airglow radiation calculation method is analyzed. Using TOPS’s limb observation geometry, along with temperature, pressure, oxygen number density in each layer, and O2 self-absorption data, we calculate high-spectral-resolution airglow emissions at different limb tangent heights. Then, TOPS’s detection mode and system parameters are analyzed, and spectral and radiometric calibrations are performed to obtain TOPS’s performance parameters. Next, the on-orbit airglow data detected by TOPS are preprocessed, and the optimal estimation algorithm is used to retrieve the temperature profile. We also discuss the selection method of the inversion wavelength. O2A airglow lines are generally classified as strong, medium, or weak. The inversion wavelength is selected by analyzing each line’s radiation intensity, atmospheric transmittance, and temperature dependence. Finally, for an error analysis, the random noise error, smoothing error, and model error of the inversion results are discussed.Results and DiscussionsIn the O2A airglow radiation calculation, the pressure, temperature, and O2 number density profiles are obtained from the MISI model, and the O2 line intensity is obtained from the HITRAN database. We obtain the high spectral resolution O2A airglow radiation from 60 to 100 km (Fig. 5). The results show that airglow radiation varies with tangent height, primarily due to changes in excited oxygen number density and O2 self-absorption. The results also show a strong oxygen self-absorption effect at the tangent height of 60 km, and the effect decreases with increasing height. Due to the low emission intensity, TOPS adopts a limb scanning mode, with an instantaneous field of view of 200 km (horizontal)×2 km (vertical), a vertical scanning range of 10‒100 km, and a vertical resolution of 2 km (Fig. 6 and Fig. 7). TOPS’S spectral range is 498.1‒802.3 nm, spectral resolution is 1.46 nm, spectral calibration accuracy is 0.1 nm, and radiometric calibration accuracy is 3.6%. The signal-to-noise ratio is 140 at a tangent height of 80 km. Analysis results show that TOPS can accurately detect O2A airglow. The in-orbit O2A airglow data detected by TOPS are preprocessed, providing knowledge of the dependence of O2A airglow radiation on tangent heights and solar zenith angles. Based on TOPS’s spectral resolution, we use the optimal estimation algorithm to invert the temperature profile. For the inversion wavelength selection, the weak lines have a strong positive response to temperature increases, while the strong lines are difficult to use for recovering temperatures at lower altitudes due to strong self-absorption. Combined with the spectral resolution of TOPS, the medium line at 765 nm is selected. The inversion results show that the average kernel function peak above 80 km is more than 0.6. As self-absorption increases, the average kernel function peak decreases to less than 0.1 below 80 km, indicating that the temperature inversion error increases. At the same time, it can also be seen that the weight function peak above 90 km and below 75 km decreases, but the average kernel function peak above 90 km basically does not decrease, indicating that the inversion accuracy in the region above 90 km is higher than that in the region below 75 km (Fig. 14). The error results show that errors above 80 km mainly come from measurement errors, whereas errors below 80 km mainly come from the temperature prior due to the significant contribution of the prior values to the inversion results. The error range is -6 to 6 K at 90‒100 km, -10 to 10 K at 80‒90 km, and -12 to 12 K at 64‒80 km.ConclusionsThe analysis of in-orbit airglow shows that TOPS can detect O2A airglow effectively. The limb detection method, system, and performance parameters of TOPS serve as a reference for designing systems to monitor the middle and upper atmosphere. Based on O2A airglow spectra, we initially obtain the temperature profile using the optimal estimation algorithm. This provides a foundation for analyzing long-period airglow data, optimizing inversion algorithms, and cross-comparing inversion results.

ObjectiveThe accurate detection of near-space (20?100 km) atmospheric density profiles plays a pivotal role in spacecraft orbit optimization, aircraft thermodynamic performance evaluation, and space environment monitoring. Nevertheless, conventional optical remote sensing techniques are limited by constraints such as low light flux, inadequate spectral resolution, and coarse vertical sampling intervals, which pose significant challenges to the detection of fine-scale structures associated with key dynamic processes. Aiming at the demand for space-borne high-precision detection of near-space atmospheric density profiles, optical design and performance verification of hyperspectral atmospheric density profile imager (HDI) are conducted. Using single frame limb observation image by HDI, high-spatial-resolution hyperspectral information of oxygen A-band absorption spectrum and airglow radiation spectrum can be acquired over an atmospheric vertical height range of approximately 100 km, while simultaneously inverting atmospheric density profiles and temperature profiles.MethodsBased on the principle of spatial heterodyne interferometric imaging spectroscopy (SHIS), the HDI optical system is designed. It can meet the high-precision detection requirements for near-space atmospheric density profiles, which include high signal-to-noise ratio (greater than 100 under typical spectral radiance), hyperspectral resolution (better than 0.04 nm), and fine vertical sampling interval (better than 0.1 km).To meet the technical index requirements of HDI, relationships between basic parameters of each functional component and residual polarization sensitivity characteristics of HDI are analyzed, and the optimized design of the optical system is completed. The optical system includes a front component with orthogonal heterogeneous optical field modulation, a spatial heterodyne interferometer, and a re-imaging component. The front component consists of a front cylindrical lens and a collimator lens. The front cylindrical lens group has a focal length of 534.5 mm, a spectral field of view of ±1.85°, and corresponds to a horizontal atmospheric width of 160 km. The front collimator lens group has a focal length of 461.6 mm, a spatial field of view of ±1.185°, and corresponds to a vertical atmospheric height range of 102 km. Consequently, the sampling resolution interval of HDI in the spatial dimension is approximately 0.1 km.In spatial heterodyne interferometric spectrometers, there are various polarization-sensitive components such as diffraction gratings, mirrors, and beam-splitting prisms. When the optical system itself exhibits residual polarization sensitivity, it not only impairs the radiometric measurement accuracy of the spectrometer but also leads to image separation in imaging spectrometers. The HDI polarization response model and measurement platform are established. Test results indicate that by incorporating a quartz wedge-type depolarizer between the field diaphragm of the front cylindrical lens group and the mirror in the HDI optical system, and adjusting the depolarizer to ensure the maximum image separation occurs in the spectral dimension, the influence of the depolarizer on the spatial dimension imaging quality and reconstructed spectral accuracy of HDI can be minimized.Results and DiscussionsGround tests and calibration results confirm that HDI meets all design specifications. Spectral characterization shows an operational range of 756.8?771.4 nm, which fully covers the primary absorption region of the oxygen A-band (759?769 nm). Calibration with tunable laser and wave-meter yields a measured spectral resolution of 0.0394 nm, which is in close agreement with theoretical predictions. Under typical entrance pupil radiance, during limb observation at an orbital altitude of 520 km, with spatial binning of 20 pixel in the atmospheric vertical direction (corresponding to a vertical profile spatial resolution of 2 km), the average spectral signal-to-noise ratio (SNR) is 149. However, due to the detector heat sink cooling temperature of -16.2 ℃, which is slightly higher than the designed value of -20 ℃, the dark current noise of HDI in orbit has increased marginally. Consequently, the average spectral SNR of HDI is slightly lower than 149, but it still satisfies the requirement (≥100).ConclusionsThe development of HDI makes a significant advancement in space-borne hyperspectral detection of near-space atmospheric parameters. These technical achievements not only provide critical data support for current aerospace atmospheric monitoring missions but also lay a foundation for long-term global atmospheric density profile remote sensing.To meet the requirements of data applications, a variable-scale data binning processing method will be adopted in the future to retrieve high-precision atmospheric density profile data products. Additionally, to accurately assess the on-orbit spectral resolution of HDI and its capability for airglow spectrum in the middle and upper atmospheres, it is planned to reconfigure the on-orbit operating parameters of HDI. This reconfiguration aims to detect and analyze the hyperspectral characteristics of atmospheric tracers at altitudes above 80 km, while also conducting research on the retrieval of atmospheric temperature profiles in the near-space region (80?110 km).

SignificanceThe construction of large space telescopes involves substantial costs. However, the rapid advancement of small satellite and CubeSat technologies has introduced a new era of low-cost, highly flexible small space telescopes. Currently, the only large ultraviolet (UV) telescope in orbit, the Hubble Space Telescope, faces deteriorating UV instruments. Meanwhile, next-generation UV telescopes, such as the U.S. Habitable Worlds Observatory and Russia’s Spektr-UV, are not scheduled for launch until after 2030. This gap presents an optimal opportunity for developing small UV telescopes to observe valuable scientific targets.ProgressSince 2020, NASA has initiated dedicated funding programs for space astrophysics satellites, including the Astrophysics Pioneers program (supporting missions with lifecycle costs of 10?20 million dollars) and CubeSat initiative (below 10 million). These programs have generated several specialized UV small satellite projects, such as the Colorado Ultraviolet Transit Experiment (CUTE), Europa Ultraviolet Spectrograph (Europa-UVS), Star-Planet Activity Research CubeSat (SPARCS), and Apsera. Notably, mid-sized countries have gained opportunities to independently develop their own small satellites. For instance, Czech Republic has led its own astronomy program, Quick Ultra-Violet Kilonova Surveyor (QUVIK). Based on their scientific objectives, these missions can be categorized into three groups: exoplanet-focused missions (CUTE and Europa-UVS), stellar activity monitors (SPARCS and QUVIK), and diffuse source mappers (Aspera).CUTE, the first ultraviolet CubeSat mission dedicated to exoplanetary atmosphere observations, was funded by NASA’s Pioneers Program, with a total lifecycle cost of $16 million. Its primary scientific objective is to quantify atmospheric escape from hot Jupiters through near-UV spectra in a bandpass of 247.9?330.6 nm, specifically targeting Mg I (285.2 nm) and Fe II (240?260 nm) absorption features during exoplanetary transits. To achieve high-resolution observations of ultraviolet spectral lines while conforming to the constrained 4U volume, CUTE utilizes a 6U CubeSat platform housing a compact Cassegrain telescope paired with a holographic grating (Fig. 1). CUTE implements a “point-stare-repeat” observation strategy, wherein the spacecraft repeatedly revisits the same exoplanet over a 4?8 week observation window. Launched in 2021, CUTE has successfully obtained a series of observational datasets (Fig. 2), including the ultraviolet spectra of the exoplanet WASP-189.Europa-UVS, as a critical subsystem of NASA’s flagship Europa Clipper mission, aims to investigate the habitability of Europa. Europa, a prime candidate for harboring a subsurface ocean, exhibits water vapor plumes and atmospheric outgassing detectable in the far-UV waveband. Europa-UVS systematically examines these phenomena through three observational modes: 1) push-broom imaging during close flybys; 2) disk-scanning from large distance through the airglow port; 3) measuring trace elements during solar occultation. Europa-UVS employs a 15-cm Rowland circle spectrograph (55?206 nm) with an effective area of 4 cm×4 cm. Its advanced microchannel plate (MCP) detectors have atomic-layer-deposited MgF2 photocathodes, which enable high-sensitivity measurements in the radiation-heavy Jovian environment (Fig. 3).SPARCS is an ultraviolet microsatellite mission led by the University of Arizona. It received funding from NASA’s 2016 Astrophysics Research and Analysis Program. Targeting nearby M-dwarf stars (10%?60% solar mass), it examines their intense, variable UV radiation that drives atmospheric photochemistry in orbiting planets, affecting key molecules like water and ozone. SPARCS operates in two UV bands: the near-UV band centers on the 280 nm Mg II line, primarily tracing the stellar chromosphere, while the far-UV band includes C IV and He II lines, originating from the transition region between the chromosphere and corona. SPARCS is a 6U CubeSat and has a mass of ~12 kg. This satellite employs a 9 cm aperture, RC telescope paired with a 1000×1000 CCD detector (Fig. 4). To minimize data transmission volume, SPARCS implements a “stamp imaging” strategy, which involves extracting only 10 pixel×10 pixel regions around targets, reducing daily science data downlink to less than 120 MB.QUVIK is the first Czech-led ultraviolet telescope, developed by Masaryk University and partners. It aims to study kilonovae from neutron star mergers by capturing early ultraviolet emissions, helping distinguish explosion mechanisms. QUVIK has a 1°×1° field of view and two channels: far-UV (140?190 nm) and near-UV (260?360 nm), enabling analysis of ejecta structure based on two wavebands. Its optical system utilizes an RC design with corrective lenses, splitting light via a dichroic mirror onto two 4096 pixel×4096 pixel detectors. QUVIK employs a rapid-response mode—when a merger is detected, it reorients within 20 minutes to observe early emissions, providing critical UV data for astrophysical studies.Apsera, an EUV small satellite mission led by the University of Arizona, represents one of the initial projects funded under NASA’s 2020 Astrophysics Pioneers Program. The mission’s primary objective is to detect warm-hot phase plasma gas in the halos of nearby galaxies and map its two-dimensional distribution. Drawing upon the technical heritage of the FUSE ultraviolet telescope, Apsera utilizes four parallel-channel Rowland-circle spectrometers within a single payload, though in a more compact configuration. Each spectrometer incorporates an off-axis parabolic primary mirror (6.2 cm×3.7 cm aperture) and a toroidal diffraction grating. To enhance observations of faint, extended sources, Apsera implements strategic improvements: balancing slightly lower spectral resolution (R>1500) with increased sensitivity to diffuse emissions (Fig. 5).Given that China’s technological expertise in ultraviolet space telescopes predominantly centers on imagers, imaging technology and associated scientific objectives are anticipated to be the initial areas of advancement. The scientific scope can extend from solar observations to other stellar objects, such as SPARCS observing M dwarfs or QUVIK observing kilonovae, both utilizing imaging systems.Conclusions and ProspectsThis paper systematically reviews the scientific objectives and technical approaches of small UV telescopes, aiming to provide strategic references for China’s future planning and development of ultraviolet small satellites missions.