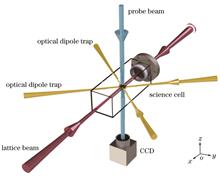

ObjectiveOptically trapped ultracold atoms in optical lattices are important physical systems for conducting quantum computing, quantum simulation, and quantum precision measurement. The study of ultracold atoms in optical lattices serves as a bridge connecting the microscopic world and macroscopic condensed matter. It can be used to simulate strongly correlated quantum many-body systems, gauge fields, and novel topological quantum states. In these experiments, the depth of the optical lattice is a key parameter for regulating interaction strength and energy level structure. It directly affects the dynamical properties of atoms in the optical lattice, including Bloch oscillations, tunneling effects, and quantum phase transitions. Therefore, high-precision calibration of the optical lattice trap depth is crucial for achieving precise quantum control of ultracold atom systems.MethodsWe propose a high-precision methodology for calibrating the trap depth of optical lattices based on the principle of multiple-pulse Kapitza?Dirac (KD) diffraction. Accurate calibration of the optical lattice depth is achieved by measuring the high diffraction efficiency of the first-order momentum state of atoms within the optical lattice. To validate the effectiveness of this method, a comprehensive comparison is made with single-pulse KD diffraction, Raman?Nath (RN) diffraction, and parametric oscillation heating. In the experimental process, Bose?Einstein condensation (BEC) of 87Rb atoms is initially realized using a crossed optical dipole trap. The atoms are then loaded into a one-dimensional optical lattice under various experimental conditions. Finally, the diffraction distribution of the atoms is observed in momentum space using time-of-flight expansion imaging. By carefully analyzing their dynamic behaviors, the depth of the optical lattice is precisely determined.Results and DiscussionsThe multiple-pulse KD diffraction method proposed in this paper utilizes the interference effect produced by a multiple-pulse optical lattice sequence to enhance the diffraction resolution of atoms, thereby improving the accuracy of calibrating the depth of the optical lattice. A comprehensive and systematic measurement of the experimental process is performed for lattice depth calibration, and the practicality and limitations of the four methods—multiple-pulse KD diffraction, single-pulse KD diffraction, RN diffraction, and parametric oscillation heating—are analyzed. The optical lattice depths obtained using the single-pulse and multiple-pulse KD diffraction methods maintain a high degree of linearity with the detection voltage over the entire range, and these two methods are applicable to a wide range of depths and time intervals. However, the single-pulse KD diffraction method determines the depth of the optical lattice through the fitting of experimental data, which requires collecting a large amount of data. This fitting process introduces potential errors and increases the complexity of the measurement. In the multiple-pulse KD diffraction method, the transmission fidelity of diffraction orders is highly sensitive to the lattice depth, and no data fitting is required during the measurement process, which ensures highly accurate calibration of the optical lattice depth. When the laser interaction time is long, the optical lattice depth measured by the RN diffraction method is consistent with the first two methods. However, as the interaction time between the optical lattice and the atoms increases, the diffraction process must account for changes in atomic momentum, and thus the optical lattice depth obtained by this method may have deviations from the true value. The parametric oscillation heating method can be used for optical lattices of different depths and is effective within a wide parameter range. However, at low depths, the wide energy band of the optical lattice increases the frequency range of atomic loss due to resonant heating, which affects the determination of the resonant frequency and further increases measurement error.ConclusionsThrough the analysis of the experimental results, we assess the practicality and limitations of the four trap depth measurement methods. RN diffraction is suitable for cases with short interaction time between the optical lattice and the atoms, and its core mechanism is phase modulation based on the momentum state. When the optical lattice interaction time becomes longer, the momentum change becomes significant, which leads to the breakdown of the diffraction mode approximation. The KD diffraction method has the advantage of a broad range of applicability in both time and depth. It can accurately describe multi-stage diffraction phenomena and remains effective even at high depths. Compared to the single-pulse method, the multiple-pulse KD diffraction method is based on the interference effect. By applying a series of pre-set optical lattice pulses, all atoms are transferred to the first-order diffraction momentum state. Compared to RN diffraction and the single-pulse KD diffraction method, this approach improves the intensity and resolution of the diffraction signal, thereby enhancing the accuracy of depth measurements. Furthermore, it has a broad range of applicability in both time and depth. The advantage of the parametric oscillation heating method is its direct detection of the lattice band structure and calibration through the relationship between band transition frequency and depth. However, at low depths, the frequency range of atomic loss caused by resonance heating increases, which can affect the determination of the resonance frequency. The multiple-pulse KD diffraction method enhances lattice depth measurement accuracy. Therefore, this method is expected to provide a technical reference for optical lattice quantum precision measurements and quantum regulation.

ObjectiveWith the large-scale deployment of low earth orbit (LEO) satellite constellations, the demand for inter-satellite laser communication has grown significantly. Compared to traditional microwave communication, laser communication offers higher data transfer rates, greater capacity, and higher security. However, most existing laser communication systems are designed for point-to-point configurations, which are insufficient for the communication requirements of large-scale satellite networks. In particular, traditional point-to-point systems cannot efficiently interconnect multiple satellites in LEO constellations. In this paper, we propose a novel laser communication system to address challenges related to field-of-view (FOV) and long-distance communication in LEO satellite networks. By introducing a new laser communication system that supports multipoint communication, this approach facilitates the transition from point-to-point configurations to point-to-multipoint or multipoint-to-multipoint networking. The proposed system overcomes the limitations of narrow FOV and distance constraints inherent in traditional optical systems through advanced optical designs and system integration techniques.MethodsTo address the challenges of inter-satellite communication in LEO constellations, we first analyze satellite constellations and orbital configurations, detailing the relative positions and communication distances between satellites both within the same orbit and across different orbits. This orbital analysis is crucial for determining communication link characteristics and ensuring the optical system meets the required communication distances. Subsequently, a panoramic optical system is proposed, employing a dual-mirror configuration to achieve a wide FOV, which is essential for satellite network communication. The dual-mirror design significantly reduces optical aberrations compared to traditional block lens systems. Ray-tracing techniques and vector reflection laws are applied to analyze the interaction between light rays and the system’s optical components, linking outgoing rays to the desired FOV. Furthermore, a freeform mirror is designed and optimized using a point-by-point calculation method to derive its characteristic parameters, and an XY freeform surface is subsequently generated using fitting software. Finally, the freeform mirror is integrated with the rear mirror group, relay system, and collimation-coupling system to form a complete panoramic laser communication system. To verify the feasibility of the system, indoor equivalent validation experiments are conducted, simulating space losses through reduced transmission power, active attenuation, and decreased receive sensitivity to assess the system’s performance under real-world conditions.Results and DiscussionsIn this paper, we propose a novel optical system tailored to the inter-satellite communication requirements of LEO satellite constellations. First, a panoramic optical system with a dual-mirror configuration is proposed, offering a significantly larger FOV than traditional Cassegrain optical systems (Fig. 14). This dual-mirror design effectively mitigates chromatic aberrations, thus enhancing communication link performance. Second, the integration of freeform mirror technology into the panoramic optical system further enhances light distribution and beam shaping, particularly at the FOV edges, effectively reducing optical aberrations and improving overall system performance. By optimizing the freeform mirror, the modulation transfer function (MTF) at the edges of the FOV is further improved, significantly enhancing image quality and reducing optical distortion by approximately 10%. Moreover, beam divergence is reduced by 60% compared to traditional aspheric systems, thus improving signal quality (Fig. 15). These innovations have been experimentally validated through indoor active attenuation experiments (Table 7), demonstrating the system’s long-distance communication performance. The combination of panoramic optical design and freeform mirror technology provides an innovative solution for future wide-field inter-satellite communication.ConclusionsThe proposed laser communication system successfully addresses the challenges of inter-satellite communication in LEO satellite constellations, meeting FOV requirements of 30°?70° and -30°?-70° with a maximum communication distance of 1200 km. Compared to traditional point-to-point communication systems, this design offers a wider FOV, enabling more flexible and efficient communication between satellites. The freeform mirror design further enhances image quality at FOV edges and reduces optical aberrations, which is critical for long-distance inter-satellite communication. Experimental results demonstrate that the system is capable of achieving a low bit error rate (BER) under simulated space-loss conditions, validating its feasibility for practical applications. Overall, the laser communication system proposed in this paper advances the development of point-to-multipoint and multipoint-to-multipoint systems, offering new possibilities for reliable and high-performance satellite communication networks and laying the foundation for future innovations in optical communication systems.

ObjectiveWhen optical signals are transmitted through atmospheric turbulence channels, the signal transmission quality is degraded due to channel attenuation caused by aerosol particles in the atmosphere, as well as beam drift and scintillation due to atmospheric turbulence. This leads to an increase in the communication bit error rate and a decrease in channel capacity, which severely affects the performance of the communication system. Therefore, based on the real-time changes in the atmospheric channel state, an efficient adaptive transmission scheme is designed at the transmitter to effectively mitigate the degradation of the transmitted signal caused by atmospheric turbulence. At the same time, M-th quadrature amplitude modulation (M-QAM) signals have become a hot topic in recent research on new modulation methods due to their high-frequency band utilization and anti-noise performance. With this modulation method, the data transmission rate can reach tera bits per second (Tbit/s). In this context, turbulence changes in the atmospheric channel can be regarded as a slow fading process. Under these conditions, it is feasible to adaptively adjust the optimal probability distribution of the transmitted signal using genetic algorithms, based on the real-time acquired turbulent channel state information in combination with probabilistic shaping technology. Furthermore, by combining geometric shaping technology, the signal’s resilience to turbulence can be further enhanced, thereby improving communication quality. In this study, research is conducted under the Gamma-Gamma turbulent channel model. The proposed scheme integrates adaptive probabilistic shaping with geometric shaping based on channel conditions and provides a system model. This scheme can effectively improve the generalized mutual information of QAM signals and alleviate the effect of atmospheric turbulence on communication systems.MethodsBased on the phase noise generated by the electrical demodulation module, treated as Gaussian noise, we consider the influence of different turbulence intensities on the laser signal. It obtains the turbulent channel state information in real time through the scintillation index calculation device and feeds this information back to the transmitter. An adaptive communication system model is then constructed based on the channel state. At the transmitter, the optimal probability distribution of the transmitted signal is determined through iterative optimization using a genetic algorithm, with the maximum generalized mutual information as the objective. The transmitted signal is adjusted to the optimal distribution through a distribution matcher. Next, combined with geometric shaping technology, the square QAM constellation is transformed into a symmetrically distributed circular arrangement, which realizes a research scheme that integrates adaptive probabilistic shaping with geometric shaping. For the low signal-to-noise ratio under different scintillation indices, we calculate and analyze the generalized mutual information of the proposed scheme, the bit error rate before forward error correction decoding, and the normalized generalized mutual information.Results and DiscussionsTo solve the problems of high bit error rates and low generalized mutual information caused by the influence of atmospheric turbulence on uniformly distributed signals in atmospheric channels, we propose an adaptive probabilistic shaping technique combined with geometric shaping, which is based on time-varying turbulent channel state information under QAM modulation. Compared with uniform distribution or the application of single probabilistic shaping or geometric shaping, the constellation diagram obtained by the scheme proposed in this paper not only significantly reduces the aliasing phenomenon (Fig. 6) but also facilitates signal judgment. In terms of generalized mutual information, the signal after joint shaping shows a gain of 0.07 bit/symbol compared with uniform distribution (Fig. 7). In addition, when the scintillation index is 0.1, the bit error rate of the joint shaping scheme proposed in this paper is reduced from 7.6×10-2 to 9.5×10-3 (Fig. 9), which achieves an order-of-magnitude improvement compared with uniform distribution.ConclusionsWe propose a technical scheme for adaptive probabilistic shaping combined with geometric shaping based on channel conditions. At the transmitting end, the optimal probability distribution of the transmitted signal is explored using a genetic algorithm. The proposed scheme is simulated under turbulence intensities corresponding to different scintillation indices, and the generalized mutual information, normalized generalized mutual information, and bit error rate performances of the four schemes—uniform distribution, geometric shaping, adaptive probabilistic shaping, and adaptive probabilistic shaping combined with geometric shaping—are analyzed in detail. The results show that, compared with the uniform distribution or single constellation shaping schemes, the adaptive probabilistic shaping combined with the geometric shaping scheme proposed in this paper achieves the best communication performance. It can reduce the bit error rate of the communication system, improve the generalized mutual information of the system, and alleviate the influence of atmospheric turbulence on the signal to some extent. Due to experimental limitations, we only simulate and verify the proposed scheme. In the actual communication process, to ensure the smooth implementation of this scheme, the optimal distribution lookup table for the transmitted signal can be pre-established through simulation experiments to ensure that signal shaping and transmission are completed quickly within the atmospheric coherence time. In summary, the adaptive probabilistic shaping combined with the geometric shaping scheme proposed in this paper provides a new approach to improving the performance of laser communication systems.

ObjectiveThe digital hologram reconstruction process has experienced significant improvements in recent years due to the rise of deep learning techniques. Unlike traditional reconstruction methods that primarily rely on complex optical setups and post-processing algorithms, deep learning-based methods provide the potential for faster, more efficient, and more accurate reconstructions. However, the effectiveness of deep learning models in digital holography is often constrained by the quality and quantity of available training data. High-quality and large-scale holographic datasets are difficult to obtain, with significant challenges in terms of both the complexity of data generation and associated costs. This creates a critical bottleneck in developing deep learning models capable of delivering high-performance hologram reconstructions. While deep learning techniques have demonstrated impressive results in holographic reconstruction with sufficient data, the problem becomes far more difficult when the available datasets are limited in size or quality. We investigate hologram reconstruction with small sample datasets and provide an innovative solution in the form of a graph neural network (GNN) model designed to enhance reconstruction performance by effectively capturing the physical relationships between amplitude and phase information in holograms.MethodsWe propose a GNN-based model for digital hologram reconstruction that addresses the limitations imposed by small sample datasets. The primary challenge in digital holography lies in the complex correlation between the amplitude and phase of the reconstructed light field. Traditional methods often struggle to capture these complex relationships accurately under scarce training data. By leveraging the capabilities of graph-based neural networks, our model can effectively encode these amplitude-phase correlations. Specifically, the GNN model constructs a graph structure that represents the physical relationships between pixels in the hologram, allowing it to learn the underlying patterns of light propagation more effectively than traditional convolutional neural network (CNN). To overcome the challenges of few-shot learning (FSL), we consider the intrinsic relationship between the amplitude and phase of the light field. The model takes the raw hologram as the input and performs joint modeling and inference of both amplitude and phase features by GNN. This approach allows the amplitude and phase to complement each other during the reconstruction process, enhancing the overall quality of the model’s output. In particular, the model first extracts initial amplitude and phase features from the input hologram. Then, by adopting the graph structure, GNN iteratively refines these features by considering the relationships between amplitude and phase during the inference process. This enables the model to restore both the amplitude and phase information of the light field accurately, even under limited data. To train the model, we employ a small dataset consisting of ten animal cell mitosis slices. Though small, this dataset provides sufficient labeled data for supervised training while adhering to the FSL scenario.Results and DiscussionsThe proposed GNN model is evaluated by a series of experiments on both synthetic and real holographic datasets. The results indicate that the GNN model consistently outperforms traditional deep learning approaches in terms of both amplitude and phase reconstruction, especially in scenarios where the sample size is limited. In particular, the GNN model demonstrates exceptional performance in recovering the phase information, which is notoriously difficult to reconstruct by employing conventional methods. The ability of the model to accurately capture amplitude-phase correlations significantly improves the overall quality of the reconstructed holograms. Compared to other models, GNN shows better generalization capabilities when faced with small sample datasets, indicating that it can leverage the existing data more efficiently and avoid overfitting. Additionally, our experiments show that the model can reconstruct the light field with minimal detail loss, even in low-light conditions, which highlights its robustness. The improvements in both amplitude and phase reconstruction are particularly notable in complex holograms with high-frequency components, where traditional methods tend to fail or require extensive data preprocessing. Furthermore, the GNN model demonstrates the ability to generalize across different types of holograms, suggesting that the model’s effectiveness is not restricted to specific datasets but can be adapted to a variety of experimental conditions. The physical insights gained from the graph-based approach provide new perspectives on the interplay between the amplitude and phase, deepening the understanding of how light field information is encoded and reconstructed in digital holography.ConclusionsWe propose a novel approach to digital hologram reconstruction by GNNs, which significantly improves the reconstruction performance in small sample conditions. The ability of the GNN model to effectively model the complex physical relationships between amplitude and phase information is a key factor in its success. Experimental results demonstrate that GNN outperforms traditional deep learning models, particularly in terms of phase reconstruction, and provides a viable solution for hologram reconstruction even with limited training data. Our study lays a solid foundation for further advancements in digital holography and deep learning applications, particularly in situations where data acquisition is limited or expensive. By addressing the challenges of small sample datasets, the proposed model provides new possibilities for the practical implementation of digital holography in real-world applications, such as medical imaging, optical testing, and industrial inspections. Future research may explore further optimizations of the GNN architecture and its application to more diverse types of holographic data, potentially broadening its applicability and improving its robustness.

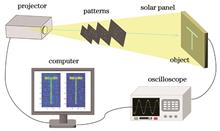

ObjectiveBucket detectors, commonly used in ghost imaging systems, include charge coupled devices (CCDs), complementary metal oxide semiconductor (CMOS) sensors, silicon photocells, single-photon detectors, and perovskite detectors. However, these detectors often come with high costs, limiting the widespread application of ghost imaging. Therefore, it is important to explore low-cost detectors to make ghost imaging more accessible. In this paper, we propose using silicon solar panels as bucket detectors in ghost imaging systems and investigate the performance of image reconstruction in this low-cost configuration. Silicon solar panels offer advantages such as broad-spectrum sensitivity and high efficiency. Additionally, with the rapid development of silicon-based photonic integrated circuits, silicon solar panels now feature high integration, which allows for the collection, processing, and analysis of experimental data on a single compact device. This integration can substantially lower the manufacturing costs of bucket detectors. Our goal is to explore the potential of silicon solar panels as a cost-effective alternative to traditional detectors in ghost imaging systems and to evaluate their performance under various sampling conditions and reconstruction algorithms.MethodsWe propose a pseudo-inverse ghost imaging system using commercial silicon solar panels as bucket detectors. In this system, the object light is captured by the silicon solar panel and converted into a voltage signal, which serves as the measurement signal, also called the bucket signal. In the experiment, a series of binary random patterns, generated by a projector, are sequentially projected onto the object, which is placed close to the silicon solar panel. The output voltage signals are digitized by an oscilloscope and then transmitted to a computer for image reconstruction. To verify the feasibility of the proposal, we first confirm the linear relationship between the output voltages of the silicon solar panel and the number of illuminated pixels. The deviation between the experimental data and theoretical predictions is minimal. For comparison, we also perform ghost imaging experiments using a CCD as the bucket detector under similar conditions. Image quality is evaluated using standard metrics. To further enhance image quality and optimize the experimental setup, various reconstruction algorithms are applied, including the correlation algorithm, pseudo-inverse algorithm, Schmidt orthogonalization, compressed sensing, and filtering techniques. The performance of ghost imaging with these methods is compared under different sampling rates.Results and DiscussionsThe verification of the linear relationship between the voltage signals from the silicon solar panel and the illuminated area demonstrates the feasibility of the proposed experimental setup (Fig. 2). Following this, 4096 speckle patterns are generated, and the object “T” is imaged. To evaluate the performance of silicon solar panels as bucket detectors, we conduct several experiments at different sampling rates and reconstruction algorithms. Similar experiments with a CCD as the bucket detector are also conducted for comparison. The experimental results are shown in Figs. 3 and 4. When the sampling rate exceeds 40%, the object’s outline becomes more distinguishable, and the results from the silicon solar panel closely match those obtained from CCD detectors. However, the silicon solar panel offers a significant cost advantage, being much more affordable than CCDs. Fig. 5 presents the peak signal-to-noise ratio (PSNR) values of the reconstructed images under various sampling rates and reconstruction algorithms. The results indicate that, in comparison to CCDs, the PSNR values for images reconstructed using silicon solar panels remain stable across different conditions. This stability highlights the robustness of the system, with the silicon solar panels demonstrating superior fault tolerance and ease in selecting an optimal sampling rate. As a result, the system can maintain high-quality image reconstruction even under variable conditions or environments. Furthermore, Fig. 6 shows the structural similarity index (SSIM) values for the reconstructed images under different sampling rates and algorithms. The SSIM results corroborate the findings from the PSNR analysis, demonstrating that the use of silicon solar panels as detectors leads to stable image recovery, even under low sampling conditions. This suggests that silicon solar panels can provide reliable and high-quality imaging performance in resource-constrained environments, making them an attractive option for practical ghost imaging applications.ConclusionsWe propose a pseudo-inverse ghost imaging system based on silicon solar panels as bucket detectors. The low-cost silicon solar panels deliver good performance in reconstructing images, demonstrating their potential as viable alternatives to traditional, more expensive detectors like CCDs. Various reconstruction algorithms such as the pseudo-inverse, Schmidt orthogonalization, and quadratic filtering techniques are applied, and the results show that high-quality, stable images can be obtained even with low sampling rates. The silicon solar panels’ excellent photovoltaic properties, broad spectral response, and low cost have made them widely used in fields such as environmental monitoring, intelligent transportation, building surveillance, security, disaster monitoring, and aerospace. Our imaging system not only broadens the application scope of ghost imaging but also integrates silicon solar panels into cost-effective, high-efficiency detection and monitoring technologies, paving the way for more practical applications in real-world scenarios.

ObjectiveMid-wave infrared (MWIR) detectors, with a spectral range of 3?5 μm, are indispensable in a wide range of applications, including aerospace, missile early warning systems, infrared imaging, biochemical gas detection, and environmental monitoring. There is an increasing demand for high-performance MWIR focal plane arrays (FPAs) to support the development of compact, efficient, and high-resolution imaging systems. However, traditional MWIR materials like InSb and HgCdTe (MCT) face inherent challenges. InSb detectors, with their narrow bandgap tunability and low operating temperatures (~80?100 K), are limited in applicability to compact infrared systems. MCT, despite its tunable bandgap, suffers from poor material uniformity, high defect density, and limited scalability for large arrays. To address these issues, III-V semiconductor materials, particularly InAs/InAsSb type-II superlattices (T2SLs), have emerged as promising candidates due to their excellent material uniformity, larger bandgap tunability, and compatibility with low-cost substrate technologies. This study focuses on the design, fabrication, and performance characterization of InAs/InAsSb T2SL-based MWIR FPAs, with an emphasis on achieving high operating temperature (HOT) performance. We employ an nBn barrier structure and strain-balanced epitaxial growth via molecular beam epitaxy (MBE) to fabricate 640×512 array detectors and evaluate their material and optoelectronic properties under operational conditions.MethodsThe nBn structure is designed to minimize dark current and enhance carrier transport. The epitaxial layers are grown on n-type GaSb (001) substrates using solid-source MBE. Key parameters, including growth temperature, V/III beam equivalent pressure ratios, and layer thicknesses, are carefully optimized to achieve strain balance and high crystal quality. Real-time monitoring with reflection high-energy electron diffraction (RHEED) ensures precise control of the growth process. The complete nBn structure comprises a 200 nm n-doped InAs/InAsSb T2SL bottom contact layer, a 3 μm unintentionally doped T2SL absorber layer (AL), a 180 nm AlAsSb barrier layer (BL), a 200 nm n-doped T2SL top contact layer, and a 20 nm n-doped InAs capping layer. The T2SL layers consist of alternating InAs (3.75 nm) and InAsSb (1.3 nm) layers with constant Sb composition. Device fabrication involves ICP etching to define the mesa structure, followed by dielectric passivation to reduce surface leakage currents. Ti/Pt/Au metal contacts and In bump interconnects are then deposited to establish reliable electrical connections. The fabricated 640×512 FPAs are hybridized with readout integrated circuits (ROICs) via flip-chip bonding. The final devices are encapsulated in Dewar packages for performance testing at 130 K. Material characterization methods include atomic force microscopy (AFM) for evaluating surface morphology and high-resolution X-ray diffraction (HRXRD) for assessing crystal quality and lattice matching. Optoelectronic characterization involves spectral response measurements, dark current analysis, and imaging performance evaluation.Results and DiscussionsAFM results confirm the excellent surface morphology of the epitaxial layers. Root mean square (RMS) surface roughness values are measured at 0.239, 0.200, and 0.179 nm for scanning areas of 50 μm×50 μm, 5 μm×5 μm, and 1 μm×1 μm, respectively (Fig. 3). The presence of atomic steps in the smallest scanned area indicates high surface quality and precise control over the epitaxial growth process. HRXRD analysis further validates the structural quality of the layers. The (004) ω-2θ diffraction profile exhibits sharp and well-defined satellite peaks (SL is ±1, ±2, ±3), with the full width at half maximum (FWHM) of the primary SL0 peak measuring 25.1″ (Fig. 5). These results confirm the successful implementation of strain-balanced growth and precise lattice matching between the T2SL layers and the GaSb substrate. Additionally, the atomic number fraction of Sb of 0.35 in the absorber layer is consistent with the design specifications, while the barrier layer demonstrates excellent alignment with the substrate lattice. The fabricated MWIR FPAs exhibit outstanding performance under hot conditions. At 130 K, the detectors achieve an average peak detectivity of 4.81×1011 cm·Hz1/2·W-1, with a noise equivalent temperature difference (NETD) of 15.8 mK. The defective pixel rate is as low as 0.16%, and the responsivity non-uniformity is measured at 4.67% (Table 1). The devices exhibit a 50% cutoff wavelength of 5.18 μm and a 100% cutoff wavelength of 5.75 μm (Fig. 9), which fully meets the requirements for MWIR detection applications. Dark current analysis indicates that, at a -0.5 V bias, the device exhibits a dark current density of 4.57×10-5 A/cm2 at 150 K, which increases to 9×10-2 A/cm2 at 295 K [Fig. 8(a)]. The Arrhenius plot of the J-V characteristics reveals an activation energy of 206 meV for temperatures above 150 K, which closely matches the material’s bandgap energy. This strongly confirms that diffusion current is dominated in this range [Fig. 8(b)]. At lower temperatures, tunneling currents become more significant, with an activation energy of 25 meV. Imaging tests at 130 K further demonstrate the detector’s capability to capture high-resolution thermal images. The device effectively resolves fine thermal details, such as facial features, flame contours, and subtle temperature variations on textured surfaces (Fig. 10). These results confirm the detector’s high temperature resolution and imaging quality, which makes it ideal for applications that require detailed infrared imaging and target detection.ConclusionsThis study demonstrates the potential of InAs/InAsSb T2SL MWIR FPAs for high-performance infrared imaging under hot conditions. The optimized nBn barrier structure and strain-balanced epitaxial growth lead to superior material quality, low dark current, and high detectivity. The 640×512 array exhibits excellent uniformity, low noise, and robust imaging performance, which confirms its suitability for MWIR imaging systems. The detector’s performance highlights its potential as a viable alternative to traditional MWIR materials like InSb and MCT, particularly in applications requiring compact, high-temperature-capable systems. Future efforts will focus on scaling the array size further, optimizing device fabrication processes, and integrating advanced ROICs to enhance system-level performance. These advancements aim to broaden the technology’s applicability in portable, high-resolution, and high-dynamic-range infrared imaging systems.

ObjectiveTraditional optical imaging systems project images of a scene at different distances onto a two-dimensional image, which results in the loss of distance information of targets in the scene. This limitation makes it difficult to meet the requirements of many fields, such as autonomous driving, biological imaging, and deep space exploration. Compared to active ranging methods, passive ranging methods have become a research hotspot in recent years due to their simplicity, low power consumption, and rich texture structure. Furthermore, they do not require additional active light sources for illumination. Currently, passive ranging methods mainly include multi-view stereo vision, monocular defocus, and monocular computational reconstruction via wavefront coding. Due to their simplicity, lower cost, and better application prospects, passive ranging systems based on monocular defocus have recently gained widespread attention. However, this method has limitations in measurement accuracy and non-unique distance decoupling. In this paper, we propose a high-precision passive ranging and refocusing method based on traditional optical imaging systems and two-frame detection signals. The proposed method can not only restore the target’s focused image when it is defocused but also solve the problem of non-unique decoupling of distance information, which is present in traditional monocular defocus ranging methods and achieve higher ranging accuracy. This work is useful for the accurate recognition of airborne targets and three-dimensional (3D) microscopic imaging.MethodsBy combining the compressive sensing image reconstruction algorithm with the gradient difference image quality evaluation function, we propose a high-precision passive ranging and refocusing method based on a traditional optical imaging system and two-frame detection signals (Fig. 2). Firstly, the point spread function (PSF) measurement matrix library is pre-calibrated/pre-computed in combination with the optical imaging system. Secondly, two frames of images of the scene target are recorded by the CCD. Thirdly, two sets of image sequences are reconstructed based on the theory and reconstruction algorithm of compressive sensing, and the image quality evaluation function (IQEF) is used to evaluate the image reconstruction results. Using the value of IQEF, the optimized image of the target can be achieved, and preliminary distance information of the target is obtained through the distance decoupling method (Fig. 3). Finally, highly accurate target distance information can be obtained by using the compressive sensing image reconstruction algorithm with an orthogonal constraint and an evaluation function based on the slice image information ratio.Results and DiscussionsTo demonstrate the validity of the proposed passive ranging and refocusing method, we build an optical imaging experimental system based on Fig. 2(a) for verification. In this case, the system’s depth of field is set as ΔL=1.43 mm. According to Eq. (4), the reconstruction results and normalized IQEF curves are shown in Figs. 4?6 based on two-frame detection signals and different PSF measurement matrices. Similar to the monocular defocus ranging method, the IQEF curve for each detection signal shows a structure of two peaks due to the symmetrical decoupling problem (Fig. 6). By combining the peak position of the IQEF curve, the coarse ranging architecture in Fig. 3, and Eq. (8), the problem of symmetrical decoupling is effectively solved in monocular defocus ranging, and it can be determined that the distance of the target is approximately 254.5 mm. Furthermore, by analyzing the influence of the searching step and the axial distance deviation (Δz) of two-frame detection signals on the accuracy of coarse ranging, the experimental results show that when the axial distance deviation Δz is greater than ΔL/2, the accuracy of coarse ranging method can reach ΔL/2 (Figs. 7 and 8). On the basis of the coarse ranging method, when the proposed TVAL3+OC reconstruction algorithm and the evaluation function of slice image information ratio are adopted, the target’s distance is confirmed near 252.8 mm and its ranging accuracy can reach ΔL/16 (Fig. 10), which is one order of magnitude higher than the existing monocular defocus ranging method.ConclusionsFaced with the low ranging accuracy and non-unique decoupling of distance information in the monocular defocus ranging method using a traditional optical imaging system, we propose a passive ranging and refocusing method based on two-frame detection signals. By combining the compressed sensing image reconstruction theory and two-frame detection signals, a clear target image can still be obtained even if the target is defocused and the accuracy of coarse ranging can reach half of the system depth of field (ΔL/2) when the axial distance deviation of two-frame detection signals is not smaller than ΔL/2. Based on the results of coarse ranging, the ranging accuracy of the target can reach ΔL/16 when the proposed precision ranging algorithm with orthogonal constraint is used. This work not only solves the problem of non-uniqueness in distance information acquisition that exists in the traditional monocular defocus ranging method, but also achieves higher-precision ranging. The proposed method has important application prospects in scenarios such as passive detection and ranging of aerial targets at medium and long distances, and three-dimensional microscopic imaging. When deep learning technology is introduced into the reconstruction process of coarse and precision ranging, the speed of target image reconstruction and distance information extraction is expected to improve dramatically. Moreover, high-precision ranging of small targets under conditions of low detection signal-to-noise ratio is an issue that needs further investigation in future work.

ObjectiveLaser heterodyne interferometry is widely used in applications such as vibration measurement, velocity measurement, and displacement measurement. Traditional methods for eliminating the effect of laser frequency noise on measurements have mainly focused on reducing the laser noise itself. To suppress the effect of laser frequency noise on heterodyne interferometric signal, we study the model of laser frequency noise’s effect on the heterodyne interferometric signal, simulate the resulting tendencies and suppression extent with compensation fibers, and finally validate the suppression method through experimentation.MethodsA typical laser heterodyne interferometric structure is introduced, and the transitive relation from laser frequency noise to displacement noise is derived mathematically. A simulation system is set up, with input parameters including laser wavelength, laser emission power, laser frequency noise, coupler ratio, modulator frequency, photodetector gain, sampling rate, sampling time, and the passband and stopband frequencies of the low-pass filter. The variable is the measuring optical lengths, which are successively set to 0, 10, 20, and 30 m. The outputs are the power spectral densities of displacement. Three conditions are set: shot noise only, laser frequency noise only, and both shot noise and laser frequency noise considered. Root mean squares (RMSs) of power spectral densities in certain frequency ranges are calculated for the three conditions. The results are presented in a graph to show the trend. Similar work is conducted to show the graph of RMS-laser frequency noise at 0, 10, 20, and 30 m optical lengths. Compensation for the 30 m optical length fiber is simulated. The experiment is conducted using fibers of different optical lengths. The delay fibers with optical lengths of 30 and 154.1 m are used to simulate the real measuring optical length in air. Compensation results are recorded for comparison.Results and DiscussionsThe model analysis shows that in the non-compensation situation, the measuring optical length causes a significant time lag in light propagation. Mathematical derivation indicates that the power spectral density of displacement is directly proportional to the power spectral density of laser frequency and the square of the time lag. The results imply that differences in optical length, causing propagation time lag, may be a significant error source in the laser heterodyne interferometer. Thus, by compensating for the optical length difference, the error could be suppressed. Simulation of the power spectral density of displacement at different measuring optical lengths shows that the total noise level increases as the optical length increases. The shape of the power spectral density changes distinctly, which indicates that the effect of optical length change differs across frequency ranges. In the case of long optical lengths, the power spectral density graph consists of a main lobe and several side lobes. The 0.9?1.1 MHz results of RMS show that shot noise does not change significantly as the optical length increases. Meanwhile, in the case of long optical lengths, the system is mainly affected by laser frequency noise, and shot noise becomes negligible. When laser frequency noise is considered, RMS increases by 0.01 pm?Hz-1/2 as the optical length increases by 1 m. The 9?11 MHz results of RMS show that noise increases first and then decreases as the optical length increases from 0 to 30 m. 15 m has the largest value of 0.08 pm?Hz-1/2. The 2?30 MHz results of RMS show that the RMS speed decreases as the optical length increases. The RMS-laser frequency noise graph indicates that laser frequency noise has little effect, and displacement noise does not increase as laser frequency noise increases at 0 m optical length. When the optical length is greater than 0 m, displacement noise increases linearly as the laser frequency noise increases. The slope of the line depends on the optical length and increases as the optical length increases. Simulation of compensation with a 30-m optical length fiber shows that displacement noise is efficiently suppressed, and the total noise is reduced to the shot noise level. The experimental results are in accordance with a deduction from derivation and simulation. In the 30-m experiment, the RMSs of 1?5 MHz are respectively 0.031, 0.096, and 0.028 pm?Hz-1/2 in the conditions of no delay fiber, with delay fiber, and with delay fiber and compensation fiber. In the 154.1-m experiment, they are 0.027, 0.106, 0.024 pm?Hz-1/2. The results validate the suppression method.ConclusionsWe demonstrate the analysis of the effect of laser frequency noise on the heterodyne interferometric signal and the method for its suppression. Theoretical derivation is conducted to obtain the relationship between the power spectral density of laser frequency noise and that of displacement. The power spectral density of displacement is used to represent the magnitude of the noise effect. A numerical simulation is conducted to present the trend of the effect. The results show that displacement noise increases as laser frequency noise increases, and similarly, displacement noise increases as the detecting optical length increases. In the simulation, detecting optical length is set to be 15 m and laser frequency noise is set to be 3 Hz?Hz-1/2. After adding the compensation fiber of 30-m optical length, the RMS of power spectral density of displacement between 2 and 30 MHz decrease from 0.08 to 0.02 pm?Hz-1/2 in the simulation. The experiment using 154.1 m compensation fiber is done for compensating the effect of delay fiber. The RMS of power spectral density of displacement decreases from 0.106 to 0.024 pm?Hz-1/2. The experiment validates the method for suppression. It is implied that inserting delay fiber can sufficiently suppress the impact of laser frequency noise in heterodyne interferometry.

ObjectivePose estimation for non-cooperative spacecraft involves determining the spatial position and attitude of spacecraft that lack active cooperation devices, such as defunct satellites or space debris. This technology is critical for advanced space applications, including autonomous rendezvous and docking, on-orbit servicing, and orbital debris removal. For non-cooperative spacecraft with unknown geometric structures, a prominent research approach involves leveraging simultaneous localization and mapping (SLAM) to reconstruct the three-dimensional (3D) structure using model-based methods for pose estimation. Current methods often rely on feature-based techniques to establish the pose constraints, followed by pose graph optimization to minimize cumulative estimation errors. However, the time-consuming nature of feature extraction poses challenges for real-time applications, and existing information matrices may inadequately represent pose estimation uncertainties. Sensors for pose estimation can be categorized as passive or active. Passive sensors are low-cost and high-frame-rate but can be affected by lighting variations. In contrast, active sensors like lidar directly acquire 3D point clouds, offering high accuracy and being less susceptible to lighting and scale variations. Therefore, we utilize lidar as the sensor for pose estimation. To enhance real-time performance and model reconstruction accuracy, we propose a non-feature-based 3D reconstruction and pose estimation method (NFRPE-3D) using lidar point cloud data.MethodsFirst, we apply the iterative closest point (ICP) algorithm to execute a keyframe registration technique to obtain the relative pose, which is then recursively used to estimate the pose of the current frame. However, this process can introduce cumulative errors. To mitigate these errors, we update the pose graph based on attitude relationships between keyframes, establishing loop constraints. Pose graph optimization is performed using the g2o framework. Notably, the loop constraints in the pose graph are established solely through attitude relationships, which eliminate the need for complex feature extraction and reduce computational overhead. To address the limitation of existing methods where the information matrix does not accurately reflect pose estimation uncertainty, we propose constructing the information matrix for graph optimization by minimizing the sum of squared distances between corresponding keyframe points, thus enhancing pose graph optimization accuracy. The optimized pose graph results are then used to reconstruct the target’s point cloud model. After model reconstruction, subsequent pose estimations are performed using a model registration strategy.Results and DiscussionsTo validate our method, we conduct a semi-physical simulation experiment using a 1∶1 satellite model under simulated space lighting conditions. We first evaluate the attitude and position estimation accuracy of various methods (Figs. 9?12, Table 2). Our methods achieve mean absolute errors of 2.34°, 1.67°, and 1.71° for the three-axis attitude, and 0.033, 0.007, and 0.025 m for the three-axis position, significantly outperforming other methods. Compared to existing feature-based methods, our method improves three-axis attitude and position accuracy by over 40%. A comparison of point cloud models before and after pose graph optimization (Fig. 13) shows that the proposed method effectively reduces cumulative errors and enhances model reconstruction accuracy. The reconstructed point cloud model (Fig. 14) delineates the overall structure, demonstrating the effectiveness of our model reconstruction step. Finally, the computational times of the methods are presented (Fig. 15). Overall, the maximum real-time computation time of our method does not exceed 0.2 s, and except for the model reconstruction step, the computation time remains below 0.1 s. The average computation time per frame is 0.040 s, demonstrating excellent real-time performance. Compared to existing feature-based methods, our approach increases the average computing speed by 95.8%.ConclusionsIn this paper, we propose a method named NFRPE-3D for point cloud model reconstruction and pose estimation of non-cooperative spacecraft using lidar point cloud data. The method establishes pose graph constraints based solely on attitude relationships between keyframes, eliminating the need for feature extraction and matching, which significantly reduces computational complexity. Furthermore, by minimizing the sum of squared distances between corresponding points in keyframes, we construct the information matrix for pose graph optimization, thus improving pose estimation accuracy. Experimental results demonstrate that NFRPE-3D effectively enables spacecraft model reconstruction and pose estimation. In the absence of target model information, pose estimation is dependent on keyframe registration, which can result in significant fluctuations due to cumulative errors. However, pose graph optimization effectively mitigates these errors, improving accuracy and stabilizing pose estimation. After model reconstruction, the model registration strategy further stabilizes pose estimation. Compared to existing feature-based methods, our approach improves three-axis attitude and position accuracy by over 40%, while also increasing the average computation speed by 95.8%.

ObjectiveWhite light scanning interferometry (WLSI) is a powerful technique for surface profilometry and has been widely applied in semiconductor inspection, additive manufacturing, film thickness characterization, and other precision measurements. However, in actual measurements, the hysteresis effect of piezoelectric ceramics and unpredictable environmental disturbances can distort the interference signal by increasing phase noise, leading to inaccurate localization of the zero optical path difference. Therefore, effectively suppressing phase noise without altering the structure of the white light interferometry system is significantly important in practice. In recent years, various approaches have been proposed to mitigate phase noise. Some scholars have developed advanced iterative algorithms to compensate for phase noise. However, these algorithms exhibit slow convergence and require additional computation for envelope and phase extraction, significantly increasing computational complexity, particularly for large-field interferometry. Other methods incorporate preprocessing techniques, such as short-time spectrum threshold denoising or improved complete ensemble empirical mode decomposition with adaptive noise (CEEMDAN), to filter out phase noise before integrating phase analysis for height extraction. However, these methods are constrained by pixel-by-pixel processing, limiting their efficiency. To address these challenges, we propose a multi-period moving difference phase noise preprocessing method. A multi-period difference equation is derived from the white light interference signal using a non-iterative least squares sinusoidal fitting approach. Compared to other preprocessing techniques, this method does not require additional hardware assistance or complex iterative optimization, ensuring an effective noise suppression rate while significantly reducing computational complexity. In addition, fast Fourier transform (FFT) and an improved seven-step phase shift method are combined to calculate the phase, minimizing scenarios where the phase step error is not π/2 and further improving phase calculation accuracy.MethodsIn this paper, we propose a fast multi-period differential signal preprocessing method, which is computationally efficient and can be processed using a forward recurrence operation. First, mathematical analysis is employed to separate key parameter information including step phase and phase noise from the white light interference signal. Using least squares sinusoidal fitting, a multi-period difference equation is derived. Then, leveraging the normal distribution characteristics of phase noise, the noise term in the equation is compensated, and moving differential filtering is applied to significantly suppress phase noise. Finally, FFT is utilized to extract the envelope of the interference signal after noise suppression, and the improved seven-step phase shift method is applied to enhance phase calculation, effectively minimizing the influence of residual phase noise.Results and DiscussionsSimulations and experiments are conducted to verify the computational efficiency and performance. The proposed method requires only 0.01 s to process a interference signal matrice with sampling length of 100 frame and size of 100 pixel×100 pixel, demonstrating an operational efficiency about ten times higher than that of Savitzky-Golay (S-G) filtering, continuous wavelet transform (CWT), and CEEMDAN, thus confirming its high computational efficiency. Table 1 shows the residual scanning errors of four different preprocessing methods under different noise levels. Compared to other methods, the proposed method yields the lowest residual scanning error (Δs), demonstrating superior performance in noise suppression. In addition, when the error amplitude increases to 50 nm, the residual scanning errors of the other three methods approach the Gaussian scanning error added by simulation, while the proposed method achieves a residual error that is only 30% of the simulated error value. To validate the feasibility and effectiveness of the proposed method, a standard step sample and an inner etching groove step sample are measured. The interference signal and carrier phase distribution with phase noise are illustrated in Fig. 3, while the denoised signals after preprocessing are shown in Figs. 4 and 6. The phase noise suppression rates for the two step samples after preprocessing are 92.8% and 94.6%, respectively. Repetitive measurements of 10 sets of data reveal that the average depth of the standard step height is (11.963±0.006) μm, with a relative error of 0.005% compared to the nominal value of (11.970±0.05) μm. In addition, to assess the model’s effectiveness for complex surface structures, the morphology and curvature radius of a microlens array are measured. The measurement results are shown in Fig. 9, while Table 6 demonstrates the 10-set statistical results of the curvature radius. The microlens array’s curvature radius is determined to be (1.082±0.016) mm, with a relative error of about 0.53% compared to the nominal value of (1.076±0.033) mm, further confirming the method’s effectiveness in surpassing phase noise and its applicability to complex structures.ConclusionsIn this paper, we propose a multi-period moving difference signal preprocessing method for white light interferometry, effectively mitigating unavoidable phase noise caused by mechanical vibrations and environmental disturbances. The multi-period differential filtering method, based on least squares fitting, applies a moving smoothing process to suppress phase noise efficiently. In addition, FFT-based coherence peak detection is integrated with an enhanced seven-step phase shift algorithm to accurately determine the zero optical path difference position, thus reducing the influence of residual phase noise on measurement accuracy. Comparative measurements of a standard step sample and an inner etching groove step sample demonstrate that the proposed method achieves a relative error of less than 0.7%, outperforming the frequency domain analysis (FDA) algorithm and the white light demodulation algorithm based on FFT and white light shift. The phase noise suppression rates for the interference signals of the two test samples are 92.8% and 94.6%, while the phase noise processing times are only 4.2764 s and 2.1235 s, respectively. In addition, microlens array measurements confirm that the proposed algorithm maintains high accuracy and repeatability even for complex structures. These results validate the effectiveness of the proposed phase noise suppression method and offer a new approach to anti-vibration measurement technology in low-vibration environments.

ObjectiveLithium niobate photonic integration represents a cutting-edge technology driving advancements in high-speed optical communication and optical information processing. On-chip power splitters are essential components in photonic integrated circuits. However, most traditional design schemes for power splitters are based on known physical effects. Geometries are typically determined by empirical models and optimized through fine-tuning of characteristic parameters. As a result, conventional designs have limited flexibility, which hinders further device integration. Unlike traditional design methods, the inverse design approach mathematically formulates the physical problem and employs various intelligent algorithms to iteratively compute the device structure based on the desired performance. This method fully explores the entire parameter space, overcoming conventional structural constraints and enabling the design of smaller, higher-performance optical devices. However, lithium niobate is an anisotropic material, meaning that the transmission characteristics of light waves within the device depend on the crystal orientation and propagation direction, necessitating future study of inverse design approaches for lithium niobate-based devices. In this paper, we incorporate the anisotropic properties of lithium niobate into the inverse design of 1×2 power splitters with different splitting ratios, utilizing both X-cut and Z-cut lithium niobate thin-film platforms. We compare the performance differences of power splitters with different crystal orientations to promote the application of inverse design methodology in highly integrated lithium niobate photonic circuits.MethodsThe device design area is divided into an M×N grid of equal-sized pixel cells. Each pixel has two possible states: etched (coded as 0) and unetched (coded as 1). In the simulation, the refractive index of lithium niobate is represented as a 3×3 diagonal matrix. The design process combines the DBS algorithm and 3D FDTD analysis, where the DBS algorithm iteratively generates new matrix states, and 3D FDTD evaluates the device’s figure of merit (FOM). The optimization continues until the target performance is reached or the maximum iteration count is achieved, at which point the final device structure is recorded.Results and DiscussionsThe inverse design of power splitters on a Z-cut/X-propagation lithium niobate thin-film platform is conducted. When the target ratio is 1∶1, the insertion losses of the optimized devices within the 1500?1600 nm wavelength range for three sizes (L1×L2=2.86 μm×2.42 μm, 2.86 μm×2.86 μm, 2.86 μm×3.30 μm) are 0.135 dB?0.188 dB (ΔIL=0.053 dB), 0.070 dB?0.097 dB (ΔIL=0.027 dB), and 0.030 dB?0.060 dB (ΔIL=0.030 dB), respectively (Fig. 4). As shown, all optimized devices exhibit low insertion loss (ξIL<0.2 dB), with IL showing minimal variation with the incident light wavelength (ΔIL<0.06 dB). When the target ratio is 1∶2, the input light wave is unevenly split into the two output waveguides, and the splitting ratio of the device closely matches the ideal target value, while the overall insertion loss remains below 0.22 dB (Fig. 5). Next, the inverse design of power splitters is carried out on an X-cut/Y-propagation lithium niobate thin-film platform. When the target ratio is 1∶1, the insertion losses of the optimized devices in the 1500?1600 nm wavelength range are 0.206 dB?0.258 dB (ΔIL=0.052 dB), 0.100 dB?0.135 dB (ΔIL=0.035 dB), 0.057 dB?0.077 dB (ΔIL=0.020 dB), respectively (Fig. 6). When the target ratio is 1∶2, the insertion loss of the device varies between 0.243?0.307 dB (Fig. 7).ConclusionsIn this paper, the inverse design of low-loss, compact 1×2 power splitters is carried out based on an anisotropic lithium niobate thin-film platform. The effects of design area sizes, splitting ratios, and crystal orientations on the inverse design results are also investigated. Firstly, three different sizes of 1∶1 and 1∶2 power splitters (2.86 μm×2.42 μm, 2.86 μm×2.86 μm, and 2.86 μm×3.30 μm) are designed on Z-cut/X-propagation and X-cut/Y-propagation lithium niobate thin-film platforms, respectively. The simulation results show that the devices exhibit low-loss characteristics within the 1500?1600 nm wavelength range. For a device size of 2.86 μm×2.86 μm, the insertion losses of the 1∶1 and 1∶2 power splitters are less than 0.14 dB and 0.31 dB, respectively, with the splitting ratios within the 100 nm operating bandwidth closely matching the target values, meeting the design requirements. The larger the design area size and the higher the number of pixels, the greater the design freedom, allowing for lower device loss, although this increases the simulation time. The inverse design results of the Z-cut/X-propagation and X-cut/Y-propagation lithium niobate thin-film platform are compared. The results indicate that devices designed based on the Z-cut/X-propagation platform are more likely to achieve lower loss due to the anisotropy of the lithium niobate crystals when TE-mode light waves are incident.

ObjectiveWith the rapid advancement of 5G (fifth generation of mobile communications technology), artificial intelligence, and big data, the demand for data transmission in optical communications is growing at an unprecedented rate. Conventional wavelength-division multiplexing (WDM) technology is constrained by the Shannon limit and fiber nonlinear effects, which makes mode-division multiplexing (MDM) technology essential to overcome communication capacity bottlenecks. Known for its high transmission capacity, integration, and scalability, silicon mode-division multiplexing systems are regarded as one of the most promising platforms for signal multiplexing. Among their components, the mode multiplexer/demultiplexer plays a crucial role. However, existing designs face several challenges, as conventional mode multiplexer/demultiplexer structures often suffer from large size and design complexity. While inverse design algorithms enable more compact layouts, they frequently require lengthy design cycles. To enhance device design efficiency, machine learning has been widely emphasized and studied in the field of photonics. In this study, we develop a silicon photonic device inverse design platform utilizing deep neural networks (DNN) and use it to inverse-design a silicon hybrid multiplexer/demultiplexer. The DNN-based inverse design platform for silicon photonic devices can significantly improve design efficiency and greatly expand design flexibility.MethodsThe inverse design platform is constructed using a DNN architecture, which includes one input layer, several hidden layers, and one output layer (Fig. 2). The input layer incorporates the operating wavelength, the desired transmittance, and three modes corresponding to a specific wavelength. The output layer consists of nodes that represent the distribution of subunits in the functional region. We combine the direct binary search (DBS) algorithm with the three-dimensional full-vector time-domain finite-difference (3D-FV-FDTD) method to compile the dataset, which ensures an appropriate division between the training and validation sets. The rectified linear unit (ReLU) is selected as the activation function, while the Adam optimizer is employed to approximate the nonlinear function and optimize the weights and biases during the training process. The binary cross-entropy (BCE) loss function is used to train the network model and measure the error between the predicted and actual outputs. By adjusting the number of hidden layers and neurons per layer, we identify the optimal configuration of hidden layers, neurons, and iterations. Ultimately, using the trained deep neural network model, we implement a silicon hybrid multiplexer/demultiplexer that achieves the desired performance through inverse design (Fig. 1). A silicon hybrid multiplexer/demultiplexer chip is fabricated using the complementary metal-oxide-semiconductor (CMOS) process (Fig. 8). Utilizing a self-constructed test system, we conduct performance tests on the silicon hybrid multiplexer/demultiplexer chip to evaluate the consistency between theoretical predictions and experimental results (Fig. 9).Results and DiscussionsUtilizing the established inverse design platform, the silicon hybrid multiplexer/demultiplexer can be designed within 10 ms, with a compact size of only 4.8 μm×2.56 μm (Fig. 1). Theoretical results indicate that the insertion losses for the TE0, TM0, and TE1 modes at the central wavelength are 0.48 dB, 0.19 dB, and 0.41 dB, respectively, while the 3 dB operating bandwidth exceeds 100 nm (Fig. 6). Experimental test results reveal that the insertion losses for the TM0, TE0, and TE1 modes at the central wavelength are 0.56 dB, 0.31 dB, and 0.93 dB, respectively. Within the 100 nm bandwidth range, the insertion loss remains below 3.75 dB, and the inter-modal crosstalk is less than -16.26 dB (Fig. 9). Compared to traditional ADC and AC structures, the silicon hybrid multiplexer/demultiplexer proposed in this paper effectively reduces the overall size of the device by introducing a functional area. In contrast to structures designed using conventional reverse design methods, the proposed structure enhances multiplexing/demultiplexing efficiency and minimizes the area of the required functional components by incorporating tapered waveguide units.ConclusionsIn this study, we present a photonic device inverse design platform built on DNN and conduct both theoretical and experimental studies of a silicon hybrid multiplexer/demultiplexer. Utilizing a combined approach of DBS and 3D-FV-FDTD methods, we collect 4077 data points as a dataset to train the neural networks. The architecture is optimized to include four hidden layers, each containing 120 neurons. With the developed inverse design platform, the efficient design of a silicon hybrid multiplexer/demultiplexer with arbitrary desired performance can be achieved by collecting the dataset just once. Using this platform, we successfully design a silicon hybrid multiplexer/demultiplexer with a size of only 4.8 μm×2.56 μm, which enables TM0, TE0, and TE1 mode multiplexing/demultiplexing. Theoretical results indicate that the insertion losses for the TE0, TM0, and TE1 modes at the center wavelength are 0.48 dB, 0.19 dB, and 0.41 dB, respectively, with a 3 dB operating bandwidth exceeding 100 nm. Experimental results reveal that the insertion losses for the TM0, TE0, and TE1 modes at the center wavelength are 0.56 dB, 0.31 dB, and 0.93 dB, respectively. Additionally, the insertion loss for the TE1 mode within the 100 nm bandwidth is less than 3.75 dB, and the inter-mode crosstalk is below -16.26 dB. We demonstrate the design of high-performance silicon mode-control devices using the DNN inverse design method, which serves as a crucial component for MDM networks. Furthermore, the DNN-based inverse design platform developed in this study can be extended to the design of various types of photonic devices, thus providing an effective tool for advancing photonic integration technology.

ObjectiveWith the continuous development of optical technology, there is an increasing demand for the precise manipulation of light beams and efficient utilization of energy. However, traditional optical devices often have single functions, which makes it difficult to meet the complex and diverse needs of modern applications. In recent years, the introduction of phase change materials has not only enabled dynamic control of metasurfaces but also significantly enhanced their functionality and flexibility, which better addresses the requirements of multifunctional application scenarios. Phase change materials are highly sensitive to environmental changes and can alter their lattice states under external stimuli, which exhibits rapid phase switching and phase retention capabilities. Among various phase change materials, vanadium dioxide (VO2) has attracted attention due to its unique electrical and optical properties. Currently, most VO2-based metasurface structures can only achieve single-function control within specific wavelength ranges. However, research on metasurfaces that can integrate perfect absorption and anomalous reflection dual functions, while also possessing switchable characteristics, has not yet been reported. We theoretically and numerically propose a switchable dual-function metasurface based on the phase change characteristics of vanadium dioxide.MethodsThe designed metasurface structure consists of three layers: gold (Au) as the substrate, silicon dioxide (SiO2) as the dielectric middle layer, and a top layer made of a cross-shaped VO2 structure (Fig. 1). The electromagnetic simulations are performed by utilizing the finite-difference time-domain (FDTD) method. Circularly polarized light is incident on the metasurface along the negative direction of z-axis. To ensure the convergence of calculations, the simulation time is set to 30000 fs.Results and DiscussionsThe simulation results indicate that utilizing the reversible phase transition of vanadium dioxide between metallic and dielectric states, the metasurface can flexibly switch between a dual-band perfect absorber and a four-channel beam splitter. Specifically, when vanadium dioxide is in the metallic state, the designed metasurface structure can be regarded as a metal-insulator-metal (MIM) model, where the gold substrate and the top layer of metallic VO2 form a selective perfect absorber, which achieves dual-peak perfect absorption at wavelengths of 490 and 798 nm (Fig. 2). When vanadium dioxide is in the dielectric state, the structure can reflect the incident circularly polarized light into four equal-intensity anomalous reflected beams in the 720?770 nm wavelength range. This results in a conversion efficiency exceeding 90%, thus functioning as a broadband four-channel anomalous reflector (Fig. 3). Furthermore, as the wavelength increases, the anomalous reflection angle changes from 46.05° to 50.35° (Fig. 4). The theoretical calculations are consistent with the numerical simulation results. Finally, since the geometric dimensions of the structural parameters cannot be ignored in practical applications, we discuss the effects of the geometric parameters, i.e., thickness (h), structural period (P), cross width (w), and cross length (L), on the performance of the four-channel beam splitter. The results reveal that the structural parameters h and P significantly influence the anomalous reflection characteristics (Fig. 5), while variations in parameters w and L have a smaller impact on the anomalous reflection performance (Fig. 6).ConclusionsIn summary, we present a functional switchable metasurface based on the phase change characteristics of vanadium dioxide. By changing the temperature to alter the phase change characteristics of VO2, it is possible to switch between dual-band optical perfect absorption and beam splitting. When VO2 is in the metallic state, dual-peak perfect absorption is achieved at wavelengths of 490 nm and 798 nm. When VO2 is in the dielectric state, the structure can achieve a conversion efficiency exceeding 90% for four-channel broadband anomalous reflection in the 720?770 nm wavelength range. Ultimately, this switchable dual-function metasurface structure based on vanadium dioxide can achieve precise control over the direction, intensity, and wavelength of light beams, which facilitates dynamic optical path switching, multi-wavelength splitting, and non-traditional beam manipulation. This development provides new technological pathways for the integration of photonic chips, super-resolution imaging, and quantum optical devices, thus promoting the innovative advancement of multifunctional optical devices.