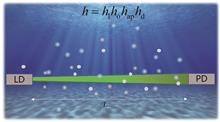

Objective

Compared with underwater radio frequency communication and underwater acoustic communication, underwater wireless optical communication (UWOC) offers a higher data rate, and it reaches longer distances than underwater radio frequency links. However, absorption, scattering, and turbulence in the marine environment severely degrade the optical signal, which limits the transmission rate and increases the bit error rate (BER) of UWOC systems. Autoencoders can realize end-to-end UWOC by using deep neural networks to jointly optimize the transmitter and receiver. However, the one-hot vector, one of the most important data representations in autoencoders, yields a low data transmission rate. To address these issues, we propose an adaptive transmission scheme for deep-neural-network-based underwater autoencoders over a joint channel that accounts for Gamma-Gamma turbulence and transmission path loss. The scheme effectively suppresses the impact of underwater turbulence, absorption, and scattering on UWOC system performance, increases the data rate of underwater autoencoders, and reduces the system BER.

Methods

We propose an adaptive transmission scheme for underwater autoencoders that is based on deep neural networks and subject to a mean square error (MSE) performance constraint. The UWOC channel model is built from the Beer-Lambert path loss and the probability density function of the Gamma-Gamma underwater turbulence distribution. We simulate the autoencoder with non-adaptive one-hot vectors and compare it with the adaptive transmission scheme under different UWOC channel conditions. On this basis, we discuss how turbulence intensity, received signal-to-noise ratio (SNR), and the training parameter set affect the non-adaptive and adaptive transmission performance of the underwater autoencoder.

Results and Discussions

The proposed adaptive transmission scheme addresses the limited data rate caused by the one-hot vector in underwater autoencoders. The autoencoder is trained and tested over different ocean channels and under different network training conditions. The optimal transmission vectors are then selected adaptively according to the preset MSE performance constraint. Compared with non-adaptive transmission, the adaptive scheme maximizes the data transmission rate, reduces the BER, and improves communication performance (Fig. 5 and Fig. 7). Moreover, for different types of water bodies, training the underwater autoencoder with a set of training parameters, rather than a single training condition, yields a more robust neural network model and gives the autoencoder a degree of generalization ability (Fig. 8).

Conclusions

The proposed adaptive transmission scheme for underwater deep autoencoders selects the optimal transmission vector according to the MSE constraint under different UWOC channel conditions, thereby maximizing the data transmission rate. Under the joint influence of Gamma-Gamma turbulence and transmission path loss, we simulate and analyze the BER and data rate of the autoencoder for two cases: the non-adaptive one-hot vector and the adaptive transmission scheme. The results show that the underwater autoencoder not only simplifies the system model but also achieves better BER performance than conventional communication systems. The autoencoder's network loss differs across training conditions, and an autoencoder trained with a training parameter set is more robust than one trained with a single training parameter. In addition, under the same training conditions, the adaptive scheme achieves a better BER and data rate than the non-adaptive scheme. The proposed scheme offers a new approach to improving UWOC system performance, and its feasibility is verified through simulation.
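The joint channel and the MSE-constrained selection described above can be sketched in a few lines. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation:

- The channel gain is modeled as the Beer-Lambert loss exp(-c·d) multiplied by a unit-mean Gamma-Gamma irradiance. The irradiance is generated as the product of two independent unit-mean gamma variates, whose scintillation index is 1/α + 1/β + 1/(αβ).
- The parameter values are hypothetical.
- The candidate-scheme tuples and `select_scheme` are hypothetical stand-ins for "pick the highest-rate vector that still meets the MSE limit".

```python
import numpy as np

def beer_lambert_loss(c, d):
    """Deterministic path loss exp(-c*d); c: extinction coefficient (1/m), d: distance (m)."""
    return np.exp(-c * d)

def gamma_gamma_samples(alpha, beta, n, rng):
    """Unit-mean Gamma-Gamma irradiance as the product of two unit-mean gamma variates."""
    x = rng.gamma(shape=alpha, scale=1.0 / alpha, size=n)  # large-scale eddies
    y = rng.gamma(shape=beta, scale=1.0 / beta, size=n)    # small-scale eddies
    return x * y

def scintillation_index(i):
    """Normalized irradiance variance <I^2>/<I>^2 - 1."""
    return np.mean(i**2) / np.mean(i) ** 2 - 1.0

def select_scheme(candidates, mse_limit):
    """Return the highest-rate candidate whose MSE meets the constraint, or None.

    candidates: hypothetical list of (name, bits_per_symbol, measured_mse) tuples.
    """
    feasible = [cand for cand in candidates if cand[2] <= mse_limit]
    return max(feasible, key=lambda cand: cand[1]) if feasible else None

rng = np.random.default_rng(0)
alpha, beta = 4.0, 2.0   # hypothetical turbulence parameters
c, d = 0.151, 10.0       # hypothetical extinction coefficient (1/m) and link length (m)
h = beer_lambert_loss(c, d) * gamma_gamma_samples(alpha, beta, 400_000, rng)

# Hypothetical MSE values measured on a validation set for three transmission vectors.
candidates = [("A", 2, 1e-4), ("B", 4, 5e-4), ("C", 6, 3e-3)]
best = select_scheme(candidates, mse_limit=1e-3)
```

With a limit of 1e-3, scheme "B" is chosen: it carries the most bits among the schemes that satisfy the constraint. Tightening the limit pushes the selection toward lower-rate, more robust vectors.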

Objective

When dark-adapted phytoplankton are illuminated, electron transport along the chain is inhibited, and the reaction centers gradually close. The light energy absorbed by the light-harvesting pigments can then be released only as fluorescence or heat, so the fluorescence yield rises. This rise initiates chlorophyll fluorescence induction. In the early stage of induction, photochemistry has not yet begun and the photosynthetic reaction centers remain fully open. The fluorescence emitted in this stage is called initial fluorescence. Measuring it with excitation sources of different wavelengths across the visible spectrum provides vital photosynthetic information, including pigment content, reaction center concentration, energy absorption, and excitation energy transfer. Such measurements accurately characterize the structure and composition of the light-harvesting pigments in phytoplankton, together with their energy absorption efficiency. The technique is therefore important for analyzing the photosynthetic status and primary productivity of living phytoplankton.

Since the discovery of chlorophyll fluorescence induction, several techniques for measuring initial fluorescence have emerged. For example, Schreiber et al. introduced pulse amplitude modulation (PAM) for measuring light-induced fluorescence kinetics, and Kolber et al. proposed the fast repetition rate fluorescence (FRRF) technique. Strasser et al. developed the OJIP technique, which rapidly records chlorophyll fluorescence induction curves under continuous excitation. Current techniques, however, focus mainly on characteristic absorption bands. Their sensitivity in non-characteristic absorption bands remains limited, which prevents accurate characterization of the structural composition and energy absorption efficiency of the light-harvesting pigments. Developing a highly sensitive method for measuring initial fluorescence is therefore pivotal to advancing studies of phytoplankton primary productivity.

Methods

We combine a photosynthetic electron transport model with OJIP fluorescence kinetics measurement to regulate the redox state of the electron acceptors near the O phase, thereby attaining optimal excitation conditions. Under weak excitation light, LHCII absorbs little energy, and the excitation energy is transferred to the reaction center. The electron acceptors accept electrons and are promptly re-oxidized, establishing a rapid dynamic equilibrium that produces a stable initial fluorescence signal. Because this signal is weak, the signals in the various excitation bands are integrated and amplified over a microsecond window (50-150 μs) to achieve highly sensitive detection of initial fluorescence. Precise acquisition of initial fluorescence is thus indispensable for studying phytoplankton primary productivity through fluorescence kinetics. The measurements are validated by computing the similarity between the PSII absorption coefficient and the initial fluorescence.

Results and Discussions

The initial fluorescence measurements agree closely with the actual absorption patterns of the photosynthetic pigments in phytoplankton. For example, Microcystis aeruginosa exhibits a PE absorption peak at 569 nm and a PC absorption peak at 620 nm. Freshwater green algae show a Chl a absorption peak at 439 nm and a Car absorption peak at 474 nm (Fig. 3). Moreover, the comparison with the reference sample shows that initial fluorescence represents PSII absorption well, which confirms high measurement accuracy. The PSII fluorescence yield closely matches the initial fluorescence profile. The similarity values are 0.996 for Microcystis aeruginosa, 0.999 for Scenedesmus dimorphus, 0.999 for Scenedesmus obliquus, 0.999 for Chlorella ellipsoidea, and 0.998 for Oocystis lacustris, so all four freshwater green algae reach at least 0.998 (Fig. 4).

Conclusions

Existing initial fluorescence measurement methods concentrate on characteristic absorption bands. As a result, their sensitivity is low in absorption bands that lack distinct features, and they inadequately represent the energy absorption efficiency of the photosynthetic apparatus in algae. We address this limitation with a precise technique for acquiring initial fluorescence that supports the measurement of phytoplankton primary productivity through fluorescence kinetics. The approach integrates the photosynthetic electron transport model with the measurement principles of OJIP fluorescence kinetics.

The initial fluorescence measurements agree well with the actual absorption patterns of the photosynthetic pigments in phytoplankton. Microcystis aeruginosa shows a PE absorption peak at 569 nm and a PC absorption peak at 620 nm, and freshwater green algae show a Chl a absorption peak at 439 nm and a Car absorption peak at 474 nm. The comparative validation further shows that the PSII fluorescence yield and the initial fluorescence have closely similar shapes, confirming that initial fluorescence accurately reflects PSII absorption. The similarity values are 0.996 for Microcystis aeruginosa, 0.999 for Scenedesmus dimorphus, 0.999 for Scenedesmus obliquus, 0.999 for Chlorella ellipsoidea, and 0.998 for Oocystis lacustris, and all four freshwater green algae reach at least 0.998. The proposed technique provides highly sensitive and accurate measurement of initial phytoplankton fluorescence. It therefore offers a notable technical advance for studying phytoplankton primary productivity.
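The two numerical steps mentioned above can be sketched as follows. The first integrates each excitation band's trace over the 50-150 μs window. The second compares the resulting initial fluorescence spectrum with a reference spectrum. This is only a minimal illustration under stated assumptions:

- The source does not name its similarity measure, so cosine similarity (insensitive to overall scale, sensitive to shape) is assumed here.
- The trapezoid integration, the band levels, and the reference spectrum are hypothetical.

```python
import numpy as np

def window_integral(t_us, signal, t_start=50.0, t_end=150.0):
    """Trapezoid-rule integral of a sampled trace over [t_start, t_end] microseconds."""
    t_us = np.asarray(t_us, dtype=float)
    signal = np.asarray(signal, dtype=float)
    mask = (t_us >= t_start) & (t_us <= t_end)
    t, y = t_us[mask], signal[mask]
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(t)))

def cosine_similarity(a, b):
    """Shape similarity of two spectra, independent of overall scale (assumed metric)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# Hypothetical example: one trace per excitation band, sampled every 1 us from 0 to 200 us.
t = np.arange(0.0, 201.0, 1.0)
band_levels = [0.8, 1.6, 2.4, 1.2]  # hypothetical steady F0 levels per excitation band
f0_spectrum = [window_integral(t, np.full_like(t, lvl)) for lvl in band_levels]
psii_reference = [0.5, 1.0, 1.5, 0.75]  # hypothetical reference with the same shape
similarity = cosine_similarity(f0_spectrum, psii_reference)
```

Because the reference here is proportional to the integrated spectrum, the similarity is 1. Real spectra give values below 1, and the closer to 1, the better initial fluorescence tracks PSII absorption.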

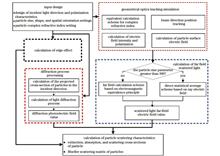

Objective

Cirrus clouds and the tops of some other clouds typically contain large numbers of ice crystals. These crystals strongly scatter and absorb visible and infrared radiation and play an important role in the atmospheric energy budget. Understanding the radiative properties of cirrus clouds must therefore start with the single-scattering properties of non-spherical ice crystals. However, ice crystals have irregular shapes, such as hexagonal columns and bullet rosettes, so calculations of their scattering properties carry significant uncertainty. This makes ice crystals one of the most uncertain factors in radiative transfer simulation, and accurately simulating light scattering by ice crystals is a current research focus.

For ice crystals with small and medium size parameters, scattering models for non-spherical particles are maturing. Examples include the discrete dipole approximation (DDA), the invariant imbedding T-matrix (IITM) method, and the finite-difference time-domain (FDTD) method. DDA and FDTD are mainly used for particles with size parameters below 40, and IITM mainly for size parameters below 100. For particles with large size parameters, most studies in China use traditional geometrical optics approximation models to calculate scattering parameters. However, these models assume that the particle is much larger than the incident wavelength, and their accuracy degrades for particles with size parameters below 300. It is therefore important to independently develop a geometrical optics approximation model and extend its applicable size-parameter range. To this end, we develop an improved geometrical optics approximation (IGOA) model that combines the electromagnetic equivalence principle with ray tracing and accounts for diffraction and particle absorption.

Methods

First, a polarization-aware ray tracing algorithm is constructed with the Monte Carlo technique. It simulates beam reflection and refraction and tracks the propagation direction and electric field vector of each beam. Second, a scheme for calculating the far-field scattered electric field is developed on the basis of ray tracing. For particles with size parameters greater than 300, rays are counted directly. For smaller particles, the far-field electric field is calculated with a scheme based on the electromagnetic equivalence principle. Third, to compute diffraction for irregular cross sections, the particle is projected along the direction of the incident light, and the diffraction equation is solved numerically to obtain the diffracted electric field. Finally, the ray-tracing electric field, the far-field scattered electric field, and the diffracted electric field are combined in a scheme that calculates the particle's scattering properties. These include the extinction and absorption cross sections, the S-matrix, and other scattering parameters. An edge diffraction model is also built to compensate and correct the extinction and absorption cross sections.

Results and Discussions

We compare IGOA with two reference models: the physical geometric optics method (PGOM) developed by Yang et al., and our independently developed IITM model. In particular, we compare the phase matrix elements computed by IGOA and PGOM. The test particle is a hexagonal column with an aspect ratio of 1.0 and size parameters of 100 and 300. The refractive index is 1.308+i1.43×10⁻⁹, and the incident wavelength is 0.65 µm. The IGOA and PGOM results are largely consistent. Their scattering phase functions (P11) agree well across scattering angles, particularly in the forward direction (0°-90°), and P12, P22, P33, P34, and P44 also agree closely overall.

We next compute a hexagonal prism with the following properties:
- size parameter: 300
- base side length: 572.95779 µm
- height: 286.47889 µm
- incident wavelength: 12 µm
- complex refractive index: 1.2762+i0.4133

The results are compared with PGOM in Fig. 7. For this strongly absorbing particle, IGOA and PGOM remain highly consistent at all scattering angles. The agreement is strongest for the polarization properties, where the two models' curves nearly coincide. This indicates that IGOA also achieves high accuracy for strongly absorbing particles. To examine smaller particles of different shapes, we compute the scattering phase matrices of hexagonal and dodecagonal prisms with IGOA and IITM and find good agreement between the two models.

Conclusions

The geometrical optics approximation model is an important tool for calculating the light scattering properties of particles with large size parameters, but it assumes that the particle is much larger than the incident wavelength. Particles with size parameters of 100-300 lie in the transition from physical optics to geometrical optics, so the model's accuracy for them is relatively poor. To solve this problem, we combine ray tracing with the electromagnetic equivalence principle, account for strong absorption, diffraction, and other factors, and independently develop the IGOA model. Comparing its results with those of the IITM and PGOM models leads to the following conclusions. 1) For particles with size parameters from 80 to 300, the IGOA results are highly consistent with those of the PGOM and IITM models, indicating high accuracy. Overall, the model maintains high accuracy across different size parameters and complex refractive indices. 2) For strongly absorbing particles, IGOA performs well, and its results nearly coincide with the PGOM curves.
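Two small pieces of the workflow above can be made concrete. The first is the size parameter. The quoted numbers are consistent with x = 2πa/λ when a is the hexagon's base side length: 2π × 572.95779 µm / 12 µm ≈ 300. This reading is inferred from the figures, not stated in the text.

The second is the per-facet step of a polarization-aware Monte Carlo ray tracer. It computes Fresnel amplitude coefficients for the s and p field components, then randomly chooses reflection with probability equal to the reflectance. This is a common Monte Carlo convention, assumed here rather than confirmed as the authors' scheme. The function names are hypothetical, and the complex square root uses the principal branch.

```python
import cmath
import math
import random

def size_parameter(a_um, wavelength_um):
    """x = 2*pi*a/lambda, taking a as the hexagon base side length (inferred assumption)."""
    return 2.0 * math.pi * a_um / wavelength_um

def fresnel(cos_i, m):
    """Fresnel amplitude coefficients for a ray entering a facet from air.

    cos_i: cosine of the incidence angle, 0 < cos_i <= 1.
    m: relative refractive index (complex for absorbing ice).
    Returns (r_s, r_p, t_s, t_p, cos_t); cos_t uses the principal complex square root.
    """
    sin_i = math.sqrt(max(0.0, 1.0 - cos_i**2))
    sin_t = sin_i / m                      # Snell's law, complex when m is complex
    cos_t = cmath.sqrt(1.0 - sin_t**2)
    r_s = (cos_i - m * cos_t) / (cos_i + m * cos_t)
    r_p = (m * cos_i - cos_t) / (m * cos_i + cos_t)
    t_s = 2.0 * cos_i / (cos_i + m * cos_t)
    t_p = 2.0 * cos_i / (m * cos_i + cos_t)
    return r_s, r_p, t_s, t_p, cos_t

def reflect_or_refract(cos_i, m, rng):
    """Monte Carlo branch: reflect with probability equal to the unpolarized reflectance."""
    r_s, r_p, _, _, _ = fresnel(cos_i, m)
    reflectance = 0.5 * (abs(r_s) ** 2 + abs(r_p) ** 2)
    return "reflect" if rng.random() < reflectance else "refract"

x = size_parameter(572.95779, 12.0)    # the strongly absorbing case quoted above
m_ice = complex(1.308, 1.43e-9)        # refractive index quoted for 0.65 um
rng = random.Random(1)
branches = [reflect_or_refract(1.0, m_ice, rng) for _ in range(100_000)]
```

A full tracer would multiply each ray's s and p field components by these amplitude coefficients rather than by the intensity probabilities alone. This is how polarization is carried through successive reflections and refractions.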

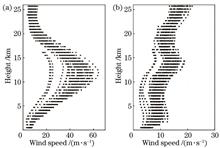

Objective

Wind speed and direction strongly influence atmospheric optical properties, and their vertical distributions and variation patterns matter for astronomical observation, adaptive optics, and laser atmospheric propagation. Strong wind shear can trigger turbulence, and the transverse wind is closely related to thermal blooming. Because the natural environment is complex and changeable, wind speed and direction vary from region to region, so local wind speed and direction models should be established for practical applications. Existing wind speed profile models have low accuracy, and wind direction profile models are lacking. We therefore analyze the variations of wind speed and direction in typical coastal areas of China and propose new model functions for each. The proposed profile models are intended to support the design of adaptive optical systems, laser atmospheric propagation engineering, and free-space optical communication.

Methods

First, 50 years of daily radiosonde data from typical coastal areas of China are analyzed to obtain monthly average wind speed and direction profiles and to study their monthly variation. Next, the months assigned to each season are adjusted according to these monthly characteristics. Seasonal average profiles are then computed statistically from the daily data, together with the standard deviation at each height. The coefficients of the model functions are fitted to the seasonal average profiles with a genetic algorithm. Taking the average profiles as the reference, the proposed models are compared with existing models to verify their improved accuracy. Finally, the standard deviation is used as the uncertainty to analyze how uncertainty varies with height.

Results and Discussions

1) Based on the monthly average profiles in typical coastal areas of China, the wind speed profiles fall into two types (Fig. 1) and the wind direction profiles into three types (Fig. 2).

2) The seasonal average wind speed profiles show that, at middle latitudes, wind speed at the tropopause is generally high in winter and low in summer. Near 20 km, wind speed at Dalian and Qingdao reaches its minimum in summer, whereas at Xiamen and the Xisha Islands the minimum occurs in winter.

3) The genetic algorithm yields the fitting coefficients of the wind speed and wind direction model functions for each season (Tables 5 and 6).

4) The established wind speed models agree closely with the average profiles and are significantly more accurate than existing wind speed models (Figs. 4-8). The average relative deviations of the wind speed models for the four seasons are:
- Dalian: 1.35%, 1.54%, 1.12%, and 0.79%
- Qingdao: 2.92%, 2.50%, 3.29%, and 3.21%
- Shanghai: 2.28%, 1.18%, 1.06%, and 1.96%
- Xiamen: 1.82%, 1.17%, 2.56%, and 1.66%
- Xisha Islands: 2.80%, 0.90%, 0.98%, and 3.16%

5) The established wind direction models agree closely with the average profiles (Figs. 9-13). The average relative deviations of the wind direction models for the four seasons are:
- Dalian: 0.53%, 1.56%, 0.67%, and 0.44%
- Qingdao: 0.82%, 1.30%, 0.56%, and 0.59%
- Shanghai: 0.48%, 0.78%, 1.25%, and 1.07%
- Xiamen: 0.95%, 1.06%, 1.71%, and 1.81%
- Xisha Islands: 0.87%, 1.04%, 0.69%, and 2.70%

6) Wind speed uncertainty and wind direction uncertainty vary with height differently. Wind speed uncertainty is generally largest at the tropopause. Wind direction uncertainty is smallest near the tropopause in Dalian and Qingdao and varies more irregularly in the other areas. Both uncertainties also vary with season and region.

Conclusions

1) In typical coastal areas of China, wind speed profiles fall into two types and wind direction profiles into three types.

2) Wind speed and direction vary with region, month, and season. For optical engineering applications such as laser atmospheric propagation, models for the local region and corresponding season are more accurate.

3) The proposed wind speed and direction model functions apply across the typical coastal areas of China, and the established profile models are more accurate than existing models. The mean relative deviation is at most 3.29% for wind speed and at most 2.70% for wind direction.

4) The statistical uncertainties of wind speed and direction vary with season and region.
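The statistical pipeline in the Methods (seasonal mean and standard deviation at each height, genetic-algorithm fitting, and the average relative deviation) can be sketched as follows. The paper's own model functions are given in its Tables 5 and 6 and are not reproduced here. The sketch makes these assumptions:

- It fits a Bufton-type function (ground wind plus a Gaussian jet-stream peak) purely as an illustrative stand-in.
- The daily profiles are synthetic.
- The GA (tournament selection, blend crossover, Gaussian mutation, elitism) and all its settings are hypothetical choices.

```python
import numpy as np

def bufton_type(h_km, p):
    """Ground wind plus a Gaussian jet-stream peak; p = (v_g, v_t, h_t, l_t), heights in km."""
    v_g, v_t, h_t, l_t = p
    return v_g + v_t * np.exp(-((h_km - h_t) / l_t) ** 2)

def mean_relative_deviation(model, ref):
    """Average relative deviation (%) of a model profile from the reference average profile."""
    return 100.0 * np.mean(np.abs(model - ref) / np.abs(ref))

def ga_fit(h_km, ref, bounds, rng, pop=60, gens=200, mut=0.05):
    """Minimal real-coded genetic algorithm minimizing the mean relative deviation."""
    lo, hi = np.array(bounds, dtype=float).T

    def cost(p):
        return mean_relative_deviation(bufton_type(h_km, p), ref)

    popu = lo + rng.random((pop, lo.size)) * (hi - lo)
    fit = np.array([cost(p) for p in popu])
    history = [fit.min()]
    for _ in range(gens):
        elite = popu[fit.argmin()].copy()
        i, j = rng.integers(pop, size=(2, pop))             # tournament pairs
        parents = np.where((fit[i] < fit[j])[:, None], popu[i], popu[j])
        w = rng.random((pop, 1))                             # blend crossover weights
        children = w * parents + (1.0 - w) * np.roll(parents, 1, axis=0)
        mutate = rng.random(children.shape) < 0.2            # mutate about 20% of genes
        children += mutate * rng.normal(0.0, mut, children.shape) * (hi - lo)
        children = np.clip(children, lo, hi)
        children[0] = elite                                  # elitism: keep the best so far
        popu = children
        fit = np.array([cost(p) for p in popu])
        history.append(fit.min())
    return popu[fit.argmin()], fit.min(), history

# Hypothetical data: 365 noisy daily profiles around a Bufton-like reference (m/s).
rng = np.random.default_rng(42)
h = np.linspace(0.5, 25.0, 50)
daily = bufton_type(h, (5.0, 30.0, 9.4, 4.8)) + rng.normal(0.0, 3.0, (365, h.size))
season_mean = daily.mean(axis=0)         # seasonal average profile
season_std = daily.std(axis=0, ddof=1)   # uncertainty at each height
bounds = [(0.0, 15.0), (0.0, 60.0), (5.0, 15.0), (1.0, 10.0)]
best, best_dev, history = ga_fit(h, season_mean, bounds, rng)
```

Because of elitism, the best deviation never increases from one generation to the next. This makes the GA's progress easy to monitor alongside the per-height standard deviation used as the uncertainty.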

Objective

As scientific and military activity in the ocean increases, researchers are pursuing high-speed, stable, long-range underwater wireless communication technologies. Compared with traditional acoustic and radio frequency communication, underwater wireless optical communication (UWOC) attracts considerable interest because of its large bandwidth, high information transmission rate, and good confidentiality. However, UWOC systems are affected not only by water absorption but also by non-negligible losses from transmitter-receiver misalignment or pointing error. Ocean turbulence also causes scintillation of the received light intensity, which degrades system performance. Moreover, in real marine environments, salinity and heat diffuse by different mechanisms, which makes seawater stratification unstable. As a result, Gaussian-beam scintillation in real ocean turbulence channels can deviate significantly from predictions that ignore this instability. We therefore develop a pointing-jitter error model for UWOC based on the Yue ocean power spectrum, which accounts for unstable stratification of ocean water. The model includes water attenuation, pointing-jitter error loss, and link attenuation caused by seawater turbulence, and we use it to investigate the effect of aperture averaging on system performance.

Methods

To model underwater wireless communication systems more accurately, we study how pointing-jitter error affects laser communication performance over a turbulent seawater fading channel. First, we characterize the pointing error through two parameters that determine the transmitter state: the relative position of the laser transmitter and its deflection angle. The jitter error characterizes the effect of seawater turbulence on the receiver. For the seawater turbulence itself, we adopt the Yue spectrum, which considers the stratification instability of ocean water, and derive an analytical expression for the scintillation index of a Gaussian beam after aperture averaging. We then build a composite channel model comprising water attenuation, pointing-jitter error loss, and seawater turbulence. Within it, an SNR correction factor simulates the positional attenuation of the Gaussian beam in the attenuation channel. Finally, we derive bit error rate (BER) expressions for on-off keying (OOK) modulation, with and without jitter error, to evaluate system performance.

Results and Discussions

To obtain the scintillation index of a Gaussian beam as a function of the temperature-salinity eddy diffusivity ratio of the water column, we simulate the scintillation index in ocean turbulence versus transmission distance for different aperture sizes. Aperture averaging suppresses the scintillation caused by both stable and unstable seawater stratification, but the suppression is nonlinear in aperture size (Fig. 6). The turbulence suppression effect gradually weakens as the aperture size increases.

We then investigate the effects of pointing error and jitter error in the composite channel. In the channel without jitter error, a 0.04 m change in transmitter position strongly affects system performance. The BER performance degrades by 17.15 dB in strong turbulence and by 20.55 dB in weak turbulence (Figs. 7 and 8). In the composite channel comprising water attenuation, pointing-jitter error loss, and seawater turbulence, we find that under weak turbulence, pointing error affects UWOC performance more than jitter error does, while the weak turbulence itself has a smaller effect (Fig. 11).

Conclusions

Aperture averaging suppresses the scintillation caused by both stable and unstable seawater stratification, but the suppression is nonlinear in aperture size, and it gradually weakens as the aperture size increases. Without jitter error, a 0.04 m change in transmitter position strongly affects system performance, degrading BER performance by 17.15 dB in strong turbulence and by 20.55 dB in weak turbulence. In the composite channel with water attenuation, pointing-jitter error loss, and seawater turbulence, pointing error affects a weak-turbulence UWOC system more than jitter error does, while the weak turbulence has a smaller effect. In addition, aperture averaging significantly mitigates both turbulent channel attenuation and pointing-jitter error, with the most pronounced improvement for seawater turbulence. This study helps explain how Gaussian beams propagate in real ocean channels. It provides a theoretical basis for applying aperture averaging to suppress turbulence in complex ocean environments and offers a reference for related research on underwater laser localization.
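The composite channel above (water attenuation × pointing-jitter loss × turbulence fading) and the OOK BER with and without jitter can be illustrated by Monte Carlo averaging. This is a minimal sketch under loudly stated assumptions, not the paper's model:

- **Pointing loss:** uses the widely cited Farid-Hranilovic Gaussian-beam-on-circular-aperture approximation. The paper instead parameterizes the transmitter by position and deflection angle.
- **Jitter:** modeled as a zero-mean 2D Gaussian displacement added to a fixed boresight offset.
- **Turbulence:** taken as unit-mean log-normal fading with a given scintillation index. The paper derives this index analytically from the Yue spectrum, which is not reproduced here.
- **BER:** the conditional BER is Q(h·√SNR). This SNR convention is one of several in use.

All function names and parameter values are hypothetical.

```python
import math
import numpy as np

_erfc = np.vectorize(math.erfc)

def q_func(x):
    """Gaussian Q-function, Q(x) = 0.5*erfc(x/sqrt(2))."""
    return 0.5 * _erfc(np.asarray(x, dtype=float) / math.sqrt(2.0))

def pointing_loss(r, a, w_z):
    """Fraction of a Gaussian beam (radius w_z) collected by a circular aperture (radius a)
    displaced by r from the beam centre (Farid-Hranilovic approximation)."""
    v = math.sqrt(math.pi / 2.0) * a / w_z
    a0 = math.erf(v) ** 2
    w_zeq2 = w_z**2 * math.sqrt(math.pi) * math.erf(v) / (2.0 * v * math.exp(-v**2))
    return a0 * np.exp(-2.0 * np.asarray(r, dtype=float) ** 2 / w_zeq2)

def lognormal_irradiance(si, n, rng):
    """Unit-mean log-normal turbulence fading with scintillation index si (si = 0 gives 1)."""
    s2 = math.log1p(si)
    return np.exp(rng.normal(-s2 / 2.0, math.sqrt(s2), n))

def ook_ber(snr_db, c, dist, a, w_z, offset, jitter_std, si, n, rng):
    """Monte Carlo average of Q(h*sqrt(SNR)) over the composite gain h = h_l*h_p*h_t.

    offset: static boresight (pointing) offset in m; jitter_std: per-axis std of the
    random jitter in m (0 disables jitter). The SNR convention is an assumption.
    """
    h_l = math.exp(-c * dist)                             # Beer-Lambert water attenuation
    jitter = rng.normal(0.0, jitter_std, (n, 2))
    r = np.hypot(offset + jitter[:, 0], jitter[:, 1])     # radial displacement at receiver
    h = h_l * pointing_loss(r, a, w_z) * lognormal_irradiance(si, n, rng)
    snr = 10.0 ** (snr_db / 10.0)
    return float(np.mean(q_func(h * math.sqrt(snr))))

rng = np.random.default_rng(7)
args = dict(snr_db=35.0, c=0.151, dist=10.0, a=0.05, w_z=0.1, si=0.3, n=100_000, rng=rng)
ber_no_jitter = ook_ber(offset=0.04, jitter_std=0.0, **args)
ber_jitter = ook_ber(offset=0.04, jitter_std=0.02, **args)
```

Setting `si=0` and `jitter_std=0` collapses the average to a single deterministic Q-function value. This makes it easy to isolate the effect of the boresight offset alone before adding jitter and turbulence.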

Objective

Underwater imaging is an important means of exploring oceans, lakes, and other underwater environments, and it matters in fields such as coastal defense, ocean exploration, underwater rescue, and aquaculture. However, suspended particles in real water scatter and absorb the signal light from the target. Underwater images therefore often suffer degraded quality, such as severe loss of contrast and detail. Signal light and backscattered light differ in their polarization characteristics, so polarization imaging has been introduced into underwater imaging. Polarization information is used to suppress scattered light and enhance signal light, which compensates for the environmental limits on detection. Existing polarization-based descattering methods can enhance image contrast and improve image quality. However, they focus only on intensity information and neglect the restoration of polarization information, which is consequently lost. Yet polarization information such as the degree of linear polarization (DoLP) and the angle of polarization (AoP) reflects the polarization characteristics of the target. It can be used to distinguish targets with different polarization states, which is important for underwater detection and recognition. We therefore propose a neural network based on the channel attention mechanism that extends the function of underwater polarization imaging by restoring polarization information.

Methods

The proposed method uses a convolutional neural network to restore polarization information. The network consists of three parts:
- a shallow feature extraction (SFE) module, which uses a U-Net and two convolutional layers to extract shallow features, including polarization features, from the input images;
- a series of residual dense blocks (RDBs), built mainly from densely and residually connected convolutional layers, which receive these polarization-rich shallow features;
- a channel attention-based global feature fusion (CAGFF) module, consisting of a channel attention module and a convolutional layer, which fuses the RDB outputs.

Finally, a polarization-informed content and style loss (CSL) is designed to train the network. The content loss is computed from intensity images, and the style loss from polarization information.

Results and Discussions

Polarization imaging experiments on different underwater objects show that our method restores polarization information and improves underwater imaging quality. In ablation experiments, the four contributions of the network are removed step by step to verify the effectiveness of each. Visually, the network with all improvements accurately recovers both the intensity image and the polarization information (Fig. 5), whereas removing any component degrades the restored results and blurs details. Quantitatively, the full network yields the highest peak signal-to-noise ratio (PSNR) among the network structures tested (Table 2). Compared with other representative underwater image enhancement methods, the proposed method performs best on intensity, DoLP, and AoP images (Fig. 6). The PSNRs for two different scenes confirm its advantage in reconstructing DoLP and AoP images.

Several groups of experiments also examine the influence of the network parameters. First, the number of channel attention modules is determined by comparing network performance for different values (Fig. 7). Next, comparative experiments on the RDB parameters show that larger RDB parameter values are associated with better network performance (Fig. 8). Finally, the weight of the loss function is examined in detail (Fig. 9).

Conclusions

To remove scattered light and restore polarization information, we propose a neural network based on the channel attention mechanism. Built on the residual dense network, it makes four main contributions: polarization input, SFE with U-Net, CAGFF, and the polarization-informed CSL function. Together, these enable the network to extract and exploit polarization features at different levels to restore polarization information. We also build a dataset of underwater polarimetric images, in which the input images are captured in turbid water and the ground truth images in clear water. Using this dataset, we conduct a series of experiments on different underwater objects. The ablation results confirm that each contribution is effective and that removing any one of them degrades the results. Compared with other underwater polarization imaging methods, our method suppresses the influence of scattered light on polarization imaging and significantly improves image contrast and clarity. In particular, it successfully restores DoLP and AoP, extending the function of underwater polarization imaging.
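Three building blocks mentioned above can be sketched in NumPy: DoLP and AoP from polarizer-angle intensities, a channel attention step, and a content-plus-style loss. These are illustrations under explicit assumptions, not the authors' network:

- **DoLP/AoP:** assumes four intensity images behind polarizers at 0°, 45°, 90°, and 135°, as in a division-of-focal-plane polarization camera. The source does not say how its images are acquired.
- **Channel attention:** written in the squeeze-and-excitation style (global average pooling, FC-ReLU-FC-sigmoid, channel rescaling), which is one common form. The weights `w1` and `w2` are hypothetical placeholders for learned parameters.
- **Style loss:** uses Gram matrices in the Gatys manner. This is an assumed reading of "style loss from polarization information", and the weight `lam` is hypothetical.

```python
import numpy as np

def stokes_from_angles(i0, i45, i90, i135):
    """Linear Stokes parameters from intensities behind polarizers at 0/45/90/135 degrees."""
    s0 = 0.5 * (i0 + i45 + i90 + i135)
    s1 = i0 - i90
    s2 = i45 - i135
    return s0, s1, s2

def dolp_aop(s0, s1, s2, eps=1e-12):
    """Degree of linear polarization and angle of polarization (radians, in (-pi/2, pi/2])."""
    dolp = np.sqrt(s1**2 + s2**2) / (s0 + eps)
    aop = 0.5 * np.arctan2(s2, s1)
    return dolp, aop

def channel_attention(feat, w1, w2):
    """Squeeze-and-excitation style attention on a (C, H, W) map; w1: (C//r, C), w2: (C, C//r)."""
    z = feat.mean(axis=(1, 2))                  # squeeze: global average pooling -> (C,)
    s = np.maximum(w1 @ z, 0.0)                 # excitation: FC + ReLU
    a = 1.0 / (1.0 + np.exp(-(w2 @ s)))         # FC + sigmoid -> per-channel weights
    return feat * a[:, None, None], a

def gram(feat):
    """Channel-by-channel Gram matrix of a (C, H, W) feature map."""
    f = feat.reshape(feat.shape[0], -1)
    return f @ f.T / f.shape[1]

def content_style_loss(pred_i, gt_i, pred_pol_feat, gt_pol_feat, lam=0.1):
    """Content loss on intensity plus weighted Gram-matrix style loss on polarization features."""
    content = np.mean((pred_i - gt_i) ** 2)
    style = np.mean((gram(pred_pol_feat) - gram(gt_pol_feat)) ** 2)
    return content + lam * style

rng = np.random.default_rng(3)
theta = np.deg2rad(30.0)
angles = np.deg2rad([0.0, 45.0, 90.0, 135.0])
i0, i45, i90, i135 = np.cos(angles - theta) ** 2   # Malus's law: fully polarized light at 30 deg
dolp, aop = dolp_aop(*stokes_from_angles(i0, i45, i90, i135))

feat = rng.random((8, 16, 16))                     # hypothetical (C, H, W) feature map
w1 = rng.normal(0.0, 0.1, (2, 8))                  # reduction ratio r = 4 (assumed)
w2 = rng.normal(0.0, 0.1, (8, 2))
out, weights = channel_attention(feat, w1, w2)
```

For fully polarized light at 30°, the sketch recovers a DoLP of 1 and an AoP of 30°. Unpolarized input (equal intensities at all four angles) gives a DoLP of 0.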

Objective
The laser return number is an important parameter characterizing the detection capability of a satellite laser ranging (SLR) system and has been shown to be closely related to the atmospheric transmission characteristics of the laser. Accurate evaluation of the laser return number not only provides a theoretical basis for SLR system design and optimization but is also a key issue in the future development of automated SLR systems. During SLR operation, atmospheric scattering, absorption, and turbulence continuously attenuate the laser energy along the atmospheric channel, directly reducing the average laser return number. The influence of the atmospheric environment on photon detection becomes increasingly evident as the detection distance increases. To evaluate the average laser return number of an SLR system and to explore the relationship between laser atmospheric transmission characteristics and SLR detection performance, the atmospheric transmission characteristics of the laser must be analyzed.
Methods
We build a lidar atmospheric correction (LAC) model based on Mie scattering theory and actual meteorological conditions. First, based on the tilted (slant-path) propagation theory of lasers, the whole-atmosphere transmittance at different wavelengths (450, 500, and 550 nm) is calculated. Then, the average laser return number per unit time of the SLR system under different meteorological conditions is calculated, and the model is validated against actual observations from the 60 cm SLR system at Changchun Observatory.
Finally, the effects of visibility and relative humidity on the average laser return number are analyzed.
Results and Discussions
Compared with the empirical formula adopted in conventional lidar equations, the slant-path correction reduces the average relative error of the calculated atmospheric transmittance from 14.201% to 5.992%, i.e., by a factor of about 2.4 (Fig. 2 and Table 1). The average laser return number per unit time calculated with the LAC model agrees well with the measured data, with an average relative error of less than 15% (Fig. 4 and Table 2). The average laser return number received by the SLR system increases with visibility and decreases with relative humidity (Figs. 5 and 6). When the telescope elevation angle is below 15°, visibility and relative humidity affect the average laser return number to a similar degree. When the elevation angle exceeds 15°, the influence of visibility is slightly greater than that of relative humidity, and the influence peaks at around 60° (Fig. 7). In addition, owing to the temperate continental climate at Changchun Observatory, the average laser return number per unit time received by the SLR system shows significant seasonal variation (Fig. 8).
Conclusions
The average laser return number is an important parameter characterizing the detection capability of an SLR system and is closely related to the atmospheric transmission characteristics of lasers. Based on Mie scattering theory and the actual distribution of aerosol particles, we propose the LAC model and use it to calculate the average laser return number of the SLR system. Taking the 60 cm SLR system at Changchun Observatory as an example, we analyze the effect of climate conditions on the average laser return number.
The results indicate that the average laser return number of the SLR system increases with near-surface visibility and decreases with increasing relative humidity. When the telescope elevation angle exceeds 15°, the influence of visibility is greater than that of relative humidity, and both influences peak at around 60°. Our study elucidates the mechanism by which climate conditions affect SLR detection performance and provides new theoretical and technical support for SLR site selection and performance evaluation.
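A back-of-envelope model shows why the return number rises with visibility and elevation angle. This sketch is not the LAC model: it assumes the Koschmieder relation for near-surface extinction, a hypothetical exponential extinction profile with a 1.2 km scale height, and a plane-parallel atmosphere, and it ignores molecular scattering, gas absorption, and humidity-driven particle growth. Because the mean return number in the standard SLR link equation is proportional to the two-way transmittance, the printed values indicate relative trends only.

```python
import math

KOSCHMIEDER = 3.912  # -ln(0.02): 2% contrast threshold, visibility referenced to 550 nm


def surface_extinction(visibility_km):
    """Near-surface extinction coefficient (1/km) from visibility via Koschmieder."""
    return KOSCHMIEDER / visibility_km


def two_way_transmittance(visibility_km, elevation_deg, scale_height_km=1.2):
    """Two-way slant-path transmittance under illustrative assumptions:
    exponential extinction profile (vertical optical depth = sigma0 * H, hypothetical H)
    and a plane-parallel atmosphere (slant optical depth = tau / sin(elevation))."""
    tau_vertical = surface_extinction(visibility_km) * scale_height_km
    tau_slant = tau_vertical / math.sin(math.radians(elevation_deg))
    return math.exp(-2.0 * tau_slant)  # the pulse crosses the atmosphere twice


for vis in (5.0, 10.0, 23.0):
    row = [two_way_transmittance(vis, e) for e in (20.0, 40.0, 60.0, 90.0)]
    print(f"V = {vis:4.1f} km:", " ".join(f"{x:.3f}" for x in row))
```

The 1/sin(elevation) air-mass factor is a poor approximation near the horizon, which is one reason a slant-path correction such as the one described above is needed at low elevation angles.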

Objective
Amid growing concern about global climate change and the intensifying greenhouse effect, the rise in atmospheric CO2 concentration is considered one of the primary driving factors. To manage and mitigate these emissions effectively, accurate, real-time monitoring of atmospheric CO2 concentrations is crucial. Such monitoring provides scientists with valuable information on current emission levels and trends and gives policymakers a basis for formulating or revising environmental and climate policies. Moreover, continuous and accurate monitoring of atmospheric CO2 improves our understanding of its interactions with other climate parameters and supplies more accurate input data for global climate models. In recent years, satellite remote sensing has become a vital tool for monitoring atmospheric CO2, especially over vast or inaccessible regions. However, point source emissions, such as those from factories and power plants, are highly concentrated spatially, and traditional satellite remote sensing may lack the resolution to capture such small but concentrated sources. To address this challenge, this paper presents a theoretical analysis of the spatial resolution capability of next-generation imaging satellites for monitoring atmospheric CO2 emissions from point sources.
It quantifies the resolution capability and applicable scenarios of imaging satellites, laying a theoretical foundation for resolution analysis and information interpretation of future imaging data on CO2 emissions.
Methods
To explore how the spatial detection capability of satellite imaging improves point source CO2 emission monitoring, this study uses the CALPUFF Lagrangian puff dispersion model to simulate how CO2 disperses after emission. Then, based on satellite observation capabilities, we analyze the spatial resolution capability of satellite imaging of atmospheric CO2. The ability of imaging satellites to resolve atmospheric CO2 depends mainly on their spectral, radiometric, and spatial resolutions. In addition, CO2 concentration retrieval from remote sensing data depends on the retrieval method and the accuracy of environmental parameters, which makes the analysis of satellite CO2 monitoring capability a highly complex problem. Since Japan’s GOSAT and the U.S. OCO-2 have already demonstrated retrieval accuracies of 4×10⁻⁶ and 1×10⁻⁶ (4 ppm and 1 ppm), respectively, from the spectral, radiometric, and retrieval perspectives, this paper builds on these existing capabilities and focuses on simulating and analyzing the spatial resolution capability. To further quantify the detection capability of imaging satellites for atmospheric CO2, we introduce two quantitative evaluation methods: the pixel count statistical method and the emission flux algorithm.
Under different satellite spatial resolutions, we quantitatively evaluate the capability of imaging satellites to detect point source CO2 emissions.
Results and Discussions
We simulate the spatial plume distribution of point source CO2 in detail, focusing on how meteorological conditions and emission source intensity affect its dispersion. Under calm conditions, the CO2 plume spreads in a concentric circular pattern, and for an emission source as strong as 3000 t/h it can reach a radius of 1 km. This suggests that under stable atmospheric conditions, dispersion is driven mainly by the motion of the emitted CO2 itself, which makes the plume stand out more clearly to a satellite. In windy conditions, the wind dominates the direction and extent of plume dispersion. As wind speed increases, the spatial extent of the CO2 plume expands but its concentration gradients gradually weaken, especially at wind speeds of 10 m/s. However, as the CO2 emission intensity increases, the spatial concentration differences grow exponentially, indicating that a stronger emission source can partly offset the dilution caused by higher wind speeds. Further analysis shows that spatial resolution is crucial for successful satellite detection. Over the spatial resolution range of 0.05-10 km, finer pixels show significant advantages under various environmental conditions: as the spatial resolution becomes finer, the number of CO2 plume pixels identifiable by the satellite grows notably.
For an emission source of 3000 t/h compared with 500 t/h, the number of detectable pixels increases by nearly 15% on average, further confirming the pivotal role of spatial resolution and emission source intensity in satellite detection of CO2 plumes. We also examine how satellite CO2 retrieval accuracy affects detection capability. With a retrieval accuracy of 1×10⁻⁶ rather than 4×10⁻⁶, satellites detect more pixels, up to twice as many. Furthermore, for the strongest emission considered in this study (3000 t/h), a spatial resolution of 2-4 km suffices, which is coarser than that required for medium and low emission sources and thus reduces the demands on satellite technology.
Conclusions
In analyzing the spatial plume distribution of atmospheric CO2, we consider the effects of both meteorological conditions and emission source intensity. Under calm wind conditions, CO2 diffuses in concentric circles with a radius of up to 1 km, and its steeper spatial gradient makes it more amenable to satellite detection. Both wind speed and source emission intensity significantly affect dispersion and detection: high wind speeds expand the dispersion range but weaken concentration gradients, whereas strong emission sources make satellite detection more feasible. High spatial resolution and high XCO2 retrieval accuracy both improve detection. A finer resolution improves the identification of CO2 plume patterns and reduces errors in estimating emission intensity, while a retrieval accuracy of 1×10⁻⁶, compared with 4×10⁻⁶, better resolves XCO2 gradient changes and improves estimation accuracy by 15%.
Under various conditions, high-intensity sources are identified more easily and accurately; for example, at a wind speed of 10 m/s and an emission intensity of 1000 t/h, a spatial resolution as fine as 1 km is required. As imaging satellite technology advances, the spectral and spatial resolutions of remote sensing will improve further and its applications will expand, making greater contributions to global carbon emission monitoring.
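The pixel count statistical method can be illustrated with a toy calm-wind plume. The sketch assumes a radially symmetric Gaussian XCO2 enhancement as a stand-in for CALPUFF output, models coarser satellite pixels by block-averaging a 50 m grid, and treats the retrieval accuracy (1 or 4 ppm, i.e., 1×10⁻⁶ or 4×10⁻⁶) as the detection threshold; the peak enhancements and plume width are hypothetical, not values from the study.

```python
import numpy as np


def enhancement_field(n=400, dx_km=0.05, peak_ppm=20.0, sigma_km=0.4):
    """Hypothetical calm-wind XCO2 enhancement (ppm): radially symmetric Gaussian plume."""
    coords = (np.arange(n) - n / 2 + 0.5) * dx_km
    xx, yy = np.meshgrid(coords, coords)
    return peak_ppm * np.exp(-(xx**2 + yy**2) / (2.0 * sigma_km**2))


def block_average(field, factor):
    """Aggregate fine cells into coarser satellite pixels (factor x factor mean)."""
    n = (field.shape[0] // factor) * factor
    f = field[:n, :n]
    return f.reshape(n // factor, factor, n // factor, factor).mean(axis=(1, 3))


def count_plume_pixels(field, factor, threshold_ppm):
    """Pixel count statistic: pixels whose mean enhancement exceeds the threshold."""
    return int((block_average(field, factor) > threshold_ppm).sum())


for peak in (5.0, 20.0):                     # weaker vs stronger source (hypothetical)
    field = enhancement_field(peak_ppm=peak)
    for factor in (1, 4, 10, 20):            # 0.05, 0.2, 0.5 and 1.0 km pixels
        n1 = count_plume_pixels(field, factor, 1.0)
        n4 = count_plume_pixels(field, factor, 4.0)
        print(f"peak {peak:4.1f} ppm, pixel {0.05 * factor:.2f} km: "
              f"{n1} px above 1 ppm, {n4} px above 4 ppm")
```

Averaging over larger pixels dilutes the plume peak, so fewer pixels pass the threshold; a lower threshold (better retrieval accuracy) or a stronger source counteracts that dilution.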

Objective
As underwater military activities and scientific research become increasingly frequent, the demand for high-speed, high-quality, high-bandwidth underwater communication has become urgent. However, the effectiveness of laser communication in seawater is hampered by scattering, absorption, and turbulence, which degrade beam quality and increase communication error rates. Consequently, studying the beam quality degradation of blue-green lasers in seawater is of practical significance. However, calculating laser propagation in seawater turbulence is complex and time-consuming. It is therefore valuable to establish a beam expansion scaling law for blue-green lasers propagating in seawater turbulence, which would enable rapid prediction and evaluation of beam expansion and its patterns.
Methods
First, we build a rigorous physical model of blue-green laser propagation in seawater turbulence. Phase screens of seawater turbulence are generated by the power spectrum inversion method to numerically calculate beam expansion, taking both seawater and laser parameters into account. Second, the β factor is employed to evaluate the energy concentration of the laser beam on the target plane, thereby revealing the beam expansion caused by seawater turbulence. Finally, a beam expansion scaling law for blue-green lasers in seawater turbulence is proposed by combining the individual contributions as a mean-square sum.
Results and Discussions
The estimates of the fitted scaling law are compared with numerical calculations. The results show that the scaling law agrees well with the numerical calculations within certain ranges of laser and seawater turbulence parameters.
Specifically, the laser parameters range over 0.001-0.100 m for the beam waist radius, 1.0-4.0 for the initial beam quality factor, and 470-550 nm for the wavelength; the ratio of temperature-induced to salinity-induced seawater turbulence ranges from −5.0 to −0.5; the turbulent kinetic energy dissipation rate ranges from 10⁻¹⁰ to 10⁻¹ m²/s³; and the dissipation rate of mean-square temperature ranges from 10⁻¹⁰ to 10⁻⁴ K²/s. Within these limits, the maximum error between the total beam expansion estimated by the scaling law and the numerical results is 10.90%, and the maximum average error is 4.70%. Consequently, the scaling law can accurately predict the beam expansion of Gaussian beams propagating in seawater turbulence.
Conclusions
To rapidly and accurately predict the beam expansion of Gaussian beams propagating in seawater turbulence, we first analyze how beam expansion varies with laser and seawater parameters. We then propose a scaling law for the beam expansion of blue-green lasers in seawater turbulence and determine its coefficients by the least squares method. Finally, the scaling law is used to estimate beam expansion under different parameters, and the estimates are compared with numerical results. Within the specified parameter range, the average error between the scaling law and the numerical calculations is within 5%.
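The structure of such a scaling law can be sketched with the standard free-space radius of a beam with quality factor M², plus a turbulence term combined in quadrature, which is one plausible reading of the mean-square summation. The turbulence term below is a hypothetical placeholder (the fitted coefficients and the β-factor computation are not reproduced), and the error helper mirrors the maximum/average error comparison reported above.

```python
import numpy as np


def diffraction_radius(z_m, w0_m, wavelength_m, m2):
    """Free-space radius of a beam with quality factor M^2:
    w(z) = w0 * sqrt(1 + (z / zR)^2), with zR = pi * w0^2 / (M^2 * lambda)."""
    z_r = np.pi * w0_m**2 / (m2 * wavelength_m)
    return w0_m * np.sqrt(1.0 + (np.asarray(z_m) / z_r) ** 2)


def quadrature_total(w_diffraction, w_turbulence):
    """Assumed mean-square combination of spreading contributions: W^2 = Wd^2 + Wt^2."""
    return np.sqrt(np.asarray(w_diffraction) ** 2 + np.asarray(w_turbulence) ** 2)


def relative_errors(estimated, reference):
    """Maximum and average relative error (%) of scaling-law estimates vs. numerical results."""
    est, ref = np.asarray(estimated, float), np.asarray(reference, float)
    err = 100.0 * np.abs(est - ref) / np.abs(ref)
    return err.max(), err.mean()


z = np.linspace(0.0, 50.0, 6)                                   # propagation distance (m)
w_d = diffraction_radius(z, w0_m=0.005, wavelength_m=532e-9, m2=1.5)
w_t = 5.0e-5 * z                                                # placeholder turbulence term (hypothetical)
w_est = quadrature_total(w_d, w_t)
print(np.round(w_est * 1e3, 3))                                 # total radius in mm
```

In practice the placeholder would be replaced by a parameterized turbulence term whose coefficients are fitted by least squares to the phase-screen results, and `relative_errors` would then quantify the agreement.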

Objective
Phase recognition of cloud particles is an important topic in cloud physics research and is also significant for retrieving other cloud microphysical parameters. With the development of remote sensing technology, researchers have developed various methods for recognizing the phase of cloud particles, such as decision trees, classical statistical decision methods, neural networks, clustering algorithms, and fuzzy logic algorithms. However, because cloud particles have complex characteristics, the radar signatures of different particle types are not absolutely distinct and may overlap to some degree. Thus, recognition algorithms based on rigid thresholds are poorly suited to phase recognition and classification of cloud particles. The fuzzy logic algorithm overcomes this limitation of rigid thresholds, but the accuracy of its T-function coefficients directly determines the accuracy of the recognition results. To identify cloud phase states accurately and in finer detail, we propose an optimized fuzzy logic algorithm for recognizing the phase states of cloud particles. Whereas the original fuzzy logic algorithm can only recognize ice crystals, snow, mixed phases, liquid cloud droplets, drizzle, and raindrops, the optimized algorithm can additionally recognize supercooled water and warm cloud droplets.
Methods
Based on a summary of extensive simultaneous observations from aircraft and remote sensing instruments and on the characteristics of different cloud types, we adjust and optimize the T-function coefficients of the fuzzy logic algorithm. The resulting T-function coefficients for different cloud phase particles are listed in Table 2.
The corrected reflectivity factor, radial velocity, and spectral width detected by a millimeter-wave cloud radar with high spatiotemporal resolution, together with the temperature measured by a microwave radiometer, are used as input parameters for the optimized fuzzy logic algorithm. Following the cloud particle phase recognition process shown in Fig. 1, snow, ice, mixed phase, supercooled water, warm cloud droplets, drizzle, and rain can be identified.
Results and Discussions
To verify the effectiveness and accuracy of the optimized algorithm, we retrieve the cloud particle phase of a snowfall event observed in Xi'an on 6 February 2022. The parameters characterizing the cloud particles in Fig. 3 (corrected reflectivity factor, radial velocity, spectral width, and temperature) are input into the optimized fuzzy logic algorithm, yielding the phase recognition results shown in Fig. 5. Near the ground (at a height of about 200 m), the cloud phase distribution in Fig. 5 is highly consistent with the particle phase changes recorded by the ground-based precipitation phenomenon instrument. Comparing the recognition results of the optimized algorithm (Fig. 5) with those of the original algorithm (Fig. 4) shows that the optimized algorithm identifies supercooled water that the original algorithm cannot, which helps explain particle phase transformation and supports research on precipitation mechanisms in clouds.
Conclusions
We propose an optimized fuzzy logic algorithm that optimizes the asymmetric T-function coefficients and accounts for the effects of reflectivity factor attenuation and temperature on recognition accuracy.
The corrected reflectivity factor, radial velocity, spectral width, and the spatiotemporally continuous temperature measured by the microwave radiometer serve as input parameters for the optimized fuzzy logic algorithm. The optimized algorithm can accurately identify snow, ice, mixed phase, supercooled water, warm cloud droplets, drizzle, and rain particles in clouds, which can aid the study and retrieval of cloud microphysical parameters.
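The fuzzy logic step can be sketched as follows: each input (reflectivity factor Z, radial velocity V, spectral width W, temperature T) is mapped through an asymmetric T (trapezoidal) membership function, the memberships are combined by a weighted mean, and the class with the highest score wins. The trapezoidal form, the weighted-mean aggregation, and every coefficient and weight below are illustrative assumptions, not the optimized values of Table 2. The example shows why the temperature input matters: here supercooled water and warm cloud droplets share the same radar memberships and are separated only by T.

```python
def t_function(x, x1, x2, x3, x4):
    """Asymmetric T (trapezoidal) membership: 0 outside (x1, x4), 1 on [x2, x3],
    linear ramps in between. Assumes x1 < x2 <= x3 < x4."""
    if x <= x1 or x >= x4:
        return 0.0
    if x < x2:
        return (x - x1) / (x2 - x1)
    if x <= x3:
        return 1.0
    return (x4 - x) / (x4 - x3)


# Hypothetical (x1, x2, x3, x4) per input: Z (dBZ), V (m/s), W (m/s), T (deg C).
# Illustrative numbers only, NOT the coefficients of Table 2.
COEFFS = {
    "supercooled water": {"Z": (-40, -35, -15, -10), "V": (-1.0, -0.8, 0.2, 0.4),
                          "W": (0.0, 0.05, 0.2, 0.3), "T": (-40, -38, -1, 0)},
    "warm cloud droplets": {"Z": (-40, -35, -15, -10), "V": (-1.0, -0.8, 0.2, 0.4),
                            "W": (0.0, 0.05, 0.2, 0.3), "T": (0, 1, 30, 35)},
    "snow": {"Z": (-10, -5, 20, 25), "V": (-2.0, -1.5, -0.5, -0.2),
             "W": (0.1, 0.2, 0.6, 0.8), "T": (-40, -35, -2, 1)},
}
WEIGHTS = {"Z": 1.0, "V": 1.0, "W": 0.5, "T": 1.5}  # hypothetical input weights


def classify(obs):
    """Weighted mean of memberships per class; the class with the highest score wins."""
    scores = {}
    for phase, params in COEFFS.items():
        total = sum(WEIGHTS[k] * t_function(obs[k], *params[k]) for k in params)
        scores[phase] = total / sum(WEIGHTS[k] for k in params)
    return max(scores, key=scores.get), scores


print(classify({"Z": -25.0, "V": -0.1, "W": 0.1, "T": -10.0})[0])
print(classify({"Z": -25.0, "V": -0.1, "W": 0.1, "T": 10.0})[0])
```

Because memberships vary continuously, an observation near a class boundary lowers that class's score gradually instead of flipping it, which is the advantage over rigid thresholds described above.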

Objective
A filament is a plasma channel with high laser intensity and high plasma density formed when intense femtosecond laser pulses propagate in a transparent medium. Several studies have shown that the cross-sectional image of an optical filament at a given propagation distance z usually contains abundant structural information, such as filament diameter, length, and energy distribution, which is of great significance for visualizing the dynamics of filament formation. Moreover, accurate acquisition of the spatial structure and energy deposition distribution of femtosecond filaments is of great significance for developing filamentation-based atmospheric applications. However, these are also the intrinsic parameters most difficult to measure directly. To solve this problem, we introduce photoacoustic tomography (PAT), an imaging method from medicine, for imaging optical filament cross sections. We theoretically verify the feasibility of reconstructing monofilament and multifilament images by PAT and study how the performance parameters of ultrasonic transducers affect filament image reconstruction.
Methods
We adopt a forward simulation model based on the photoacoustic wave equation to simulate the acquisition of ultrasonic signals induced by optical filaments in air. A circular-scanning PAT system is considered to obtain the cross-sectional image of the laser filament. For simplicity, we assume that the initial heat source distribution of the optical filament follows a Gaussian form, which can represent both the small high-energy core of the filament and its weaker, broader background energy region. Based on experimental measurements, the initial maximum energy deposition density is assumed to be on the order of 10 mJ/cm³, and the diameter of the heat source on the order of 100 μm.
The simulated acoustic time series are then used to reconstruct the transverse distribution of the femtosecond laser filament with the delay-and-sum (DAS) algorithm. We also analyze how the performance parameters of ultrasonic transducers, such as center frequency, bandwidth, surface size, and detection surface sensitivity, affect the reconstruction of filament cross-sectional images. The back-projection amplitude profile along the y-axis is used to compare reconstruction quality.
Results and Discussions
According to the ultrasound signal time series generated by monofilaments and multifilaments and recorded at different detection distances, the acoustic signals induced by femtosecond laser pulses with multi-millijoule energy are mainly concentrated below 4 MHz (Fig. 2). The signal spectrum of a monofilament has a single-peak structure, while that of a multifilament has a multi-peak structure (Fig. 2). The sound pressure amplitude decreases rapidly owing to attenuation in air. As the filament center moves away from the scanning center, the cross-sectional image reconstructed by the back-projection (BP) and DAS algorithms becomes noticeably elongated in the tangential direction (y-axis), which is the so-called "finite aperture effect" (Fig. 3). For monofilaments, the maximum energy amplitude decreases significantly as the transducer center frequency increases, possibly because more of the low-frequency signal is filtered out (Fig. 4). When the same method is used to reconstruct multifilament images, the reconstructed image is severely deformed as the multifilament center moves away from the scanning center (Fig. 5).
When x₀ = 1.0 mm, the two filaments on the side near the scanning origin can still be distinguished, whereas the two filaments on the side near the transducer merge and cannot be distinguished. Therefore, the secondary filaments surrounding a multifilament are more susceptible to the "finite aperture effect" and to blurring deformation. This blurring deformation becomes more pronounced as the distance from the scanning center increases or the distance from the transducer surface decreases. Overall, multifilament image reconstruction is more affected by the "finite aperture effect" than monofilament reconstruction, and the surrounding sub-filaments are especially prone to blurring deformation. In summary, the transducer characteristics clearly influence the reconstruction of monofilament and multifilament cross-sectional images: a larger transducer bandwidth, a smaller surface diameter, and a larger surface sensitivity parameter all yield better reconstruction quality. The influence of the transducer center frequency on filament image reconstruction is complicated, so in actual measurements the center frequency should be selected in combination with spectral analysis of the acoustic signal.
Conclusions
We use PAT, an imaging method from medicine, to reconstruct cross-sectional images of femtosecond laser filaments formed in air. The results show that the acoustic signal induced by a single filament has a single-peak spectrum, while that induced by a multifilament has a multi-peak spectrum. The performance parameters of the transducer clearly influence the reconstruction results.
A larger transducer bandwidth, a smaller surface diameter, and a larger surface sensitivity coefficient lead to a better reconstruction of the energy deposition distribution of the optical filament. Compared with monofilament reconstruction, multifilament image reconstruction is more susceptible to the "finite aperture effect". Our study provides theoretical support for experimentally measuring the spatial distribution of energy deposited by femtosecond laser filaments under real atmospheric conditions.
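The delay-and-sum reconstruction can be sketched for a single point-like filament and a ring of detectors. This is a toy model under stated assumptions: ideal point detectors (so neither the finite aperture effect nor transducer bandwidth is modeled), no air attenuation, a Gaussian pulse at each detector, a speed of sound of 343 m/s, and a hypothetical 20 mm scan radius with 64 detector positions.

```python
import numpy as np

C_AIR = 343.0  # speed of sound in air (m/s), roughly 20 degrees C


def ring_sensors(radius_m, n):
    """Point detectors evenly spaced on a circular scanning trajectory."""
    ang = 2 * np.pi * np.arange(n) / n
    return np.column_stack((radius_m * np.cos(ang), radius_m * np.sin(ang)))


def simulate_signals(source_xy, sensors, t, pulse_width_s):
    """Toy forward model: Gaussian pulse delayed by the time of flight
    (no attenuation, no transducer bandwidth or aperture)."""
    tof = np.linalg.norm(sensors - source_xy, axis=1) / C_AIR
    return np.exp(-((t[None, :] - tof[:, None]) ** 2) / (2 * pulse_width_s**2))


def das_reconstruct(signals, sensors, t, grid_x, grid_y):
    """Delay-and-sum: add every detector's sample at the pixel-to-detector time of flight."""
    gx, gy = np.meshgrid(grid_x, grid_y)  # shape (Ny, Nx)
    dt = t[1] - t[0]
    image = np.zeros_like(gx)
    for m, (sx, sy) in enumerate(sensors):
        delay = np.hypot(gx - sx, gy - sy) / C_AIR
        idx = np.clip(np.rint((delay - t[0]) / dt).astype(int), 0, t.size - 1)
        image += signals[m, idx]
    return image


sensors = ring_sensors(20e-3, 64)                  # hypothetical 20 mm scan radius
t = np.arange(0.0, 100e-6, 20e-9)                  # 50 MHz sampling, 100 us record
source = np.array([1.0e-3, 0.5e-3])                # filament centre (hypothetical)
signals = simulate_signals(source, sensors, t, pulse_width_s=0.1e-6)
grid = np.linspace(-3e-3, 3e-3, 121)               # 50 um pixels
image = das_reconstruct(signals, sensors, t, grid, grid)
iy, ix = np.unravel_index(image.argmax(), image.shape)
print(grid[ix], grid[iy])
```

All detectors add coherently only at the true source position, so the image peaks there; replacing the point detectors with finite-size, band-limited transducers is what introduces the tangential elongation discussed above.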

Objective
Atmospheric aerosol particles are solid, liquid, and mixed solid-liquid particles suspended in the atmosphere, with sizes generally ranging from 0.001 μm to 100 μm. Their physical and chemical properties differ from those of atmospheric gas molecules. When their concentration reaches certain thresholds, aerosol particles exert a pronounced influence on radiative transfer. Moreover, aerosol composition varies conspicuously in time and space, depending on many factors such as aerosol source distribution, underlying surface composition, season, and meteorological conditions. To facilitate calculation and research, several typical aerosol models have been derived from systematic observation experiments based on aerosol composition and sampling region. These models provide convenient inputs for radiative transfer calculations. However, the choice of aerosol model significantly affects radiative transfer results and cannot be ignored. Therefore, selecting an appropriate aerosol model for radiative transfer under different circumstances is crucial.
Methods
Our study is based on the AOD-AROD classification model and integrates it with atmospheric radiative transfer calculations. Applying this model to MODIS aerosol data over typical areas from 2018 to 2022, we calculate the month-by-month interannual variation of aerosol models. We explore the connections among meteorological elements, aerosol source locations, and aerosol models with the random forest method, and we develop a multi-temporal aerosol model judgment method that accounts for temporal changes in meteorological elements, thus improving the applicability and accuracy of the method.
Backward trajectory analysis and atmospheric transport probability distribution fields are used for verification and optimization, strengthening the correlation between meteorological elements and aerosol models. Finally, the aerosol model judgment results are validated against TROPOMI surface radiance data to improve the accuracy of atmospheric radiative transfer calculations.
Results and Discussions
Following the AOD-AROD classification method, MODIS aerosol optical thickness data in two bands are input into the AOD-AROD model. Using the aerosol optical thickness at 470 nm and the ratio of the aerosol optical thicknesses at 470 nm and 660 nm, aerosols are divided into five types: dust, continental, subcontinental, biomass burning, and urban-industrial. The aerosol model classification map of the Xianghe area from 2018 to 2022 is obtained, and the statistical results for 2018 are shown in Fig. 7. In the random forest model, the parameters are tuned according to their specific meanings and the characteristics of the Xianghe training dataset to maximize accuracy. Finally, 25% of the dataset is used to validate the random forest, and the predicted aerosol types are compared with the actual aerosol types (Fig. 9). The accuracy of the final judgment model reaches 69.11%.
Conclusions
Through a comprehensive analysis based on MODIS satellite data and the AOD-AROD aerosol model classification model, we summarize the interannual variation of aerosol models in the study area. Random forest is used to establish an aerosol-type judgment model that considers meteorological elements and aerosol source locations. By analyzing the possible causes of model errors with backward trajectories, we compare random forest models in different phases to build the model best suited to the study area.
Simultaneously, we combine backward trajectory analysis with atmospheric transport probability distribution fields to further improve the accuracy of the model. The results show that this model identifies the aerosol model in the Xianghe area with an accuracy of 71.04% and reduces the average error rate of radiance simulation by 38.25%.
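The AOD-AROD idea, classifying by the AOD at 470 nm together with the 470/660 nm AOD ratio, can be sketched as a two-feature decision rule. The thresholds and their ordering are hypothetical placeholders chosen only to show the mechanics, not the published class boundaries. The helper `angstrom_exponent` converts the ratio into the equivalent Ångström exponent, since a ratio near 1 indicates weak spectral dependence and thus coarse particles such as dust.

```python
import math


def angstrom_exponent(aod_470, aod_660):
    """Angstrom exponent implied by the 470/660 nm AOD ratio: ln(ratio) / ln(660/470)."""
    return math.log(aod_470 / aod_660) / math.log(660.0 / 470.0)


def classify_aerosol(aod_470, aod_660):
    """Two-feature decision (AOD at 470 nm plus the 470/660 nm ratio).
    All thresholds are hypothetical placeholders, not the published class boundaries."""
    ratio = aod_470 / aod_660
    if aod_470 < 0.2:
        return "continental"          # low loading: clean background
    if ratio < 1.3:
        return "dust"                 # weak spectral dependence -> coarse particles
    if ratio < 1.6:
        return "subcontinental"       # mixed coarse and fine particles
    if aod_470 < 0.8:
        return "urban-industrial"     # fine particles, moderate loading
    return "biomass burning"          # fine particles, heavy loading


for aod_470, aod_660 in [(0.10, 0.08), (1.00, 0.90), (0.50, 0.35), (0.50, 0.25), (1.20, 0.60)]:
    print(aod_470, aod_660, classify_aerosol(aod_470, aod_660),
          round(angstrom_exponent(aod_470, aod_660), 2))
```

Such per-pixel labels, aggregated by month, are the kind of target a random forest could then learn to predict from meteorological and source-location features.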

Objective
Measuring the atmospheric extinction coefficient yields essential information such as the particle size distribution and chemical composition of aerosols. It has important applications in atmospheric environment monitoring, visibility measurement, and outdoor testing of optoelectronic equipment. The sun photometer (solar radiometer) is a high-precision instrument for measuring the atmospheric extinction coefficient. However, because it measures passively, it can hardly measure the extinction coefficient under low visibility or at night. Lidar measures the extinction coefficient with an active light source, has a long detection range, and can obtain atmospheric extinction coefficient profiles. However, because it relies on backscatter measurement, the extinction-to-backscatter ratio must be assumed, and the choice of optical parameters affects its retrieval results. Therefore, it is necessary to study transmission-based extinction coefficient measurement methods with active light sources.
Methods
The visibility transmissometer is the most commonly used instrument for measuring the extinction coefficient with an active light source. Transmissometers can be categorized into four types: single-ended, double-ended, triple-ended, and variable-baseline. The double-ended transmissometer places the transmitting and receiving devices at the two ends of the baseline; however, it requires a highly stable light source and is easily affected by window contamination. The triple-ended transmissometer uses two receiving systems to measure the same radiation source over different atmospheric attenuation paths. Because the two receiving systems are assumed to have identical contamination and parameters, long-term stability and high-precision measurement cannot be guaranteed, and this design is often used to extend the instrument's measurement range.
The single-ended transmissometer places the transmitting and receiving devices at the same end of the baseline and uses an optical reflector at the other end to fold the beam back, making synchronous measurement easy to achieve. It can suppress the effect of light-source fluctuations and reduce the system's sensitivity to window contamination, but it cannot avoid the influence of backscattering. The variable-baseline transmissometer measures the same radiation source over multiple atmospheric attenuation paths of different lengths with the same receiving device, which reduces the influence of lens contamination, environmental changes, and other factors and thus improves measurement accuracy. Having weighed the advantages and disadvantages of these methods, this paper therefore studies multiband extinction coefficient measurement based on the variable-baseline method, using halogen lamps as radiation sources. The signal resolution, noise characteristics, dynamic range, random noise, temperature sensitivity of the response, and background radiation of the photoelectric detection circuit were analyzed quantitatively, and basic parameters such as the detection photocurrent, movement distance, measurement duration, and sampling rate were established. The performance of three typical signal processing methods (the least squares method, the spectrum peak search method, and the sampling integration method) in synchronous and quasi-synchronous measurements was then analyzed quantitatively. The results confirmed the advantages of the spectrum peak search and sampling integration methods in quasi-synchronous measurement. Compared with the commonly used phase-locked amplification technique, the spectrum peak search method can reduce measurement errors caused by spectral differences between the two ends.
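The difference between the spectrum peak search and the least squares method can be sketched on a synthetic modulated signal. The peak search needs only a frequency band, whereas the three-parameter least-squares fit needs the modulation frequency from a reference, which is why it suits synchronous measurement. The 10 kHz sampling rate follows the conclusions; the 317 Hz tone, amplitude, DC level, and noise are hypothetical, and the amplitude formula is exact only for a tone centred on an FFT bin with a rectangular window (practical systems may window or interpolate).

```python
import numpy as np


def spectrum_peak_amplitude(signal, fs, f_lo, f_hi):
    """Spectrum peak search: locate the largest magnitude-spectrum bin in [f_lo, f_hi]
    and convert it to a sine amplitude (rectangular window, bin-centred tone assumed)."""
    n = signal.size
    spec = np.fft.rfft(signal - signal.mean())
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    band = np.flatnonzero((freqs >= f_lo) & (freqs <= f_hi))
    k = band[np.argmax(np.abs(spec[band]))]
    return freqs[k], 2.0 * np.abs(spec[k]) / n


def least_squares_amplitude(signal, fs, f_ref):
    """Synchronous alternative: fit a*cos + b*sin + c at a frequency known from a reference."""
    t = np.arange(signal.size) / fs
    design = np.column_stack((np.cos(2 * np.pi * f_ref * t),
                              np.sin(2 * np.pi * f_ref * t),
                              np.ones_like(t)))
    (a, b, _), *_ = np.linalg.lstsq(design, signal, rcond=None)
    return np.hypot(a, b)


fs = 10_000.0                                  # 10 kHz sampling
t = np.arange(10_000) / fs                     # 1 s record -> 1 Hz bin spacing
rng = np.random.default_rng(1)
tone_hz, amp = 317.0, 0.8                      # hypothetical modulation frequency and amplitude
x = 2.0 + amp * np.sin(2 * np.pi * tone_hz * t + 0.4) + 0.05 * rng.standard_normal(t.size)
print(spectrum_peak_amplitude(x, fs, 200.0, 500.0))
print(least_squares_amplitude(x, fs, tone_hz))
```

If the receiver's modulation frequency drifts slightly relative to the transmitter, the peak search still finds the shifted bin, while a least-squares fit at a stale reference frequency loses amplitude.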
Moreover, it requires no reference signal from the transmitting unit, so it operates independently and has a wider range of applications. The results provide a theoretical basis for high-precision extinction coefficient measurement with the variable-baseline method.Results and DiscussionsThis paper analyzes the photoelectric measurement from four aspects. In the analysis of the system detection circuit, the required photocurrent and the usable range of movement distances were established from the extinction coefficient resolution and measurement range, and the reliability of the circuit was confirmed through noise analysis. In the analysis of digital signal processing methods, the application of three typical methods (the least squares method, the spectrum peak search method, and the sampling integration method) in transmissometers was studied. The least squares method was found to be more suitable for synchronous measurement, while the spectrum peak search method and the sampling integration method are more suitable for quasi-synchronous measurement. On this basis, the measurement time and sampling rate for each point were established, and a preliminary evaluation of how random noise affects the accuracy of extinction coefficient measurement was completed. The analysis of background radiation shows that the system can suppress changes in background radiation. Finally, the analysis of ambient temperature and humidity confirmed that multi-baseline measurement resists changes in ambient temperature and humidity, although the effect of temperature still cannot be ignored.ConclusionsThis paper studies a multi-baseline, multiband method for measuring the atmospheric extinction coefficient. The system detection circuit is developed according to the required extinction coefficient resolution and measurement range.
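Reference-free and reference-based amplitude estimation can be contrasted with a short Python sketch. This is an illustration, not the paper's implementation, and it assumes a sinusoidally modulated source. The spectrum peak search is implemented as a Hann-windowed FFT whose strongest bin is located without any reference. The least squares method is implemented as a sine fit at a reference frequency supplied by the transmitter. The sampling integration method is not shown. The function names and the 137.31 Hz modulation frequency are hypothetical; the 10 kHz sampling rate and 40 s duration echo the parameters stated in the conclusions.

```python
import numpy as np


def amplitude_peak_search(x, fs, f_min=1.0, half_width=3):
    """Spectrum peak search: estimate the amplitude of a modulated signal with no reference.

    The strongest spectral bin above f_min is taken as the modulation frequency. The
    energy in the bins within +/- half_width of that peak is converted back to an
    amplitude (Parseval), which tolerates a frequency that falls between FFT bins.
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    w = np.hanning(n)                           # Hann window limits spectral leakage
    X = np.fft.rfft((x - x.mean()) * w)         # mean removal drops the background (DC) level
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    candidates = np.flatnonzero(freqs >= f_min)
    k = candidates[np.argmax(np.abs(X[candidates]))]
    lo, hi = max(k - half_width, 1), min(k + half_width + 1, X.size - 1)
    energy = 2.0 * np.sum(np.abs(X[lo:hi]) ** 2) / n  # one-sided Parseval sum
    amplitude = np.sqrt(2.0 * energy / np.sum(w ** 2))
    return float(amplitude), float(freqs[k])


def amplitude_least_squares(x, fs, f_ref):
    """Least-squares sine fit at a known reference frequency (synchronous case)."""
    x = np.asarray(x, dtype=float)
    t = np.arange(x.size) / fs
    phase = 2.0 * np.pi * f_ref * t
    design = np.column_stack([np.cos(phase), np.sin(phase), np.ones_like(t)])
    (a, b, _offset), *_ = np.linalg.lstsq(design, x, rcond=None)
    return float(np.hypot(a, b))


if __name__ == "__main__":
    fs = 10_000.0                     # 10 kHz sampling rate
    duration = 40.0                   # 40 s per measurement point
    f_mod = 137.31                    # hypothetical modulation frequency, Hz
    t = np.arange(int(duration * fs)) / fs
    rng = np.random.default_rng(1)
    signal = 0.5 + 0.2 * np.cos(2 * np.pi * f_mod * t + 0.3)  # background + modulated part
    x = signal + 0.01 * rng.standard_normal(t.size)
    a_fft, f_peak = amplitude_peak_search(x, fs)
    a_ls = amplitude_least_squares(x, fs, f_mod)
    print(f"peak search: A = {a_fft:.5f} at {f_peak:.3f} Hz; least squares: A = {a_ls:.5f}")
```

The least-squares fit is exact only when `f_ref` matches the true modulation frequency. This illustrates why it suits synchronous measurement. The peak search locates the frequency from the data itself, which is what makes it usable in the quasi-synchronous case.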
Analysis of the influence of random noise on transmittance measurement shows that the least squares method is more suitable for synchronous measurement, while the spectrum peak search method and the sampling integration method are both suitable for quasi-synchronous measurement. A study of the influence of ambient temperature and humidity on transmittance measurement confirmed in practice that multi-baseline measurement resists environmental interference. However, the detection circuit cannot completely avoid the influence of ambient temperature and background radiation. The results indicate that the detection photocurrent should be no less than 1.27×10⁻⁷ A, each movement distance no less than 1 m, the measurement duration at each point no less than 40 s, and the sampling rate no less than 10 kHz. Over the baseline distances of 5-20 m studied here, the spectrum peak search method or the sampling integration method used for quasi-synchronous measurement keeps the random-noise contribution to the extinction coefficient measurement error below 0.8%.
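A small Monte Carlo sketch can estimate how random noise propagates into the extinction coefficient over a 5-20 m baseline sampled in 1 m steps. It assumes that σ is obtained from a least-squares fit of ln(signal) against baseline length and that each point carries independent Gaussian relative noise. The noise level and σ in the demo are hypothetical, so its output is not the paper's 0.8% figure. For a small relative noise ε, the standard deviation of the fitted slope should approach ε/√Σ(Lᵢ - L̄)², and the sketch checks this approximation.

```python
import numpy as np


def sigma_noise_std(distances_m, sigma, rel_noise, trials=2000, seed=0):
    """Monte Carlo standard deviation of the fitted extinction coefficient (1/m).

    Each trial multiplies the noise-free signal exp(-sigma * L) by (1 + rel_noise * n),
    with n standard normal and independent per point, then refits ln(signal) vs L.
    """
    rng = np.random.default_rng(seed)
    L = np.asarray(distances_m, dtype=float)
    clean = np.exp(-sigma * L)
    estimates = np.empty(trials)
    for i in range(trials):
        noisy = clean * (1.0 + rel_noise * rng.standard_normal(L.size))
        slope, _ = np.polyfit(L, np.log(noisy), 1)
        estimates[i] = -slope
    return float(estimates.std(ddof=1))


def sigma_noise_std_analytic(distances_m, rel_noise):
    """Small-noise approximation: std(slope) = rel_noise / sqrt(sum((L - mean(L))**2))."""
    L = np.asarray(distances_m, dtype=float)
    return float(rel_noise / np.sqrt(np.sum((L - L.mean()) ** 2)))


if __name__ == "__main__":
    L = np.arange(5.0, 21.0, 1.0)    # 5-20 m baseline in 1 m steps
    sigma = 0.02                     # hypothetical extinction coefficient, 1/m
    rel_noise = 1e-4                 # hypothetical relative noise per measurement point
    mc = sigma_noise_std(L, sigma, rel_noise)
    an = sigma_noise_std_analytic(L, rel_noise)
    print(f"std(sigma): Monte Carlo {mc:.2e}, analytic {an:.2e} 1/m; "
          f"relative error {100 * mc / sigma:.3f}%")
```

Because the absolute error in σ is fixed by the noise and the baseline spread, the relative error grows as σ shrinks. Clean, high-visibility air is therefore the demanding case for this kind of estimate.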

ObjectiveThe FengYun (FY)-3G satellite is China's first meteorological satellite in a low-inclination orbit, and the medium resolution spectral imager-rainfall mission (MERSI-RM) is one of its primary payloads. Because FY-3G overpasses at times that differ from those of most polar-orbiting meteorological satellites, such as FY-3D and Terra, precipitable water vapor (PWV) data derived from FY-3G/MERSI-RM are critical for studies of weather systems and climate change. However, there is currently a lack of accessible MERSI-RM PWV data. To address this issue, we develop a semi-empirical PWV retrieval algorithm that uses the near-infrared (NIR) channels of FY-3G/MERSI-RM at 0.865 and 0.940 μm.MethodsThe natural logarithm of the water vapor absorption transmittance (WVAT) in the NIR water vapor absorption (WVA) channel is closely correlated with the slant column water vapor content along the sun-earth-satellite path, and their relationship can be expressed by a quadratic equation. The NIR PWV retrieval model for MERSI-RM is built on this relationship. First, ground-based PWV from the Aerosol Robotic Network (AERONET), averaged over a 30-min window around the satellite overpass, is matched with MERSI-RM data averaged over a 10 km×10 km area centered on each ground station. The three coefficients of the quadratic equation are then solved from these matched data, which completes the construction of the MERSI-RM PWV retrieval model.
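The model construction step can be sketched in Python. Several details are assumptions, because the abstract does not specify them. First, WVAT is approximated by the ratio of the 0.940 μm reflectance to the 0.865 μm reflectance. Second, the slant water vapor is taken as the ground-based PWV multiplied by the geometric air mass 1/cos θs + 1/cos θv. Third, the quadratic is written with the slant water vapor as a function of ln(WVAT), so that retrieval reduces to a direct evaluation. All function names and the synthetic coefficients are hypothetical.

```python
import numpy as np


def air_mass(sza_deg, vza_deg):
    """Geometric two-way air-mass factor for the sun-surface-satellite path (assumed form)."""
    return 1.0 / np.cos(np.radians(sza_deg)) + 1.0 / np.cos(np.radians(vza_deg))


def log_wvat(rho_0940, rho_0865):
    """ln of the water vapor absorption transmittance, approximated here by the
    ratio of the 0.940 um (absorbing) to the 0.865 um (window) reflectance."""
    return np.log(np.asarray(rho_0940, dtype=float) / np.asarray(rho_0865, dtype=float))


def fit_pwv_model(rho_0940, rho_0865, sza_deg, vza_deg, pwv_ground_cm):
    """Fit W_slant = c2*x**2 + c1*x + c0 with x = ln(WVAT) to matched AERONET/satellite data.

    Returns [c2, c1, c0] in np.polyfit order.
    """
    x = log_wvat(rho_0940, rho_0865)
    w_slant = np.asarray(pwv_ground_cm, dtype=float) * air_mass(sza_deg, vza_deg)
    return np.polyfit(x, w_slant, 2)


def retrieve_pwv(coeffs, rho_0940, rho_0865, sza_deg, vza_deg):
    """Evaluate the fitted quadratic, then convert slant water vapor back to vertical PWV (cm)."""
    x = log_wvat(rho_0940, rho_0865)
    return np.polyval(coeffs, x) / air_mass(sza_deg, vza_deg)


if __name__ == "__main__":
    rng = np.random.default_rng(2)
    n = 200
    x_true = rng.uniform(-0.8, -0.05, n)            # synthetic ln(WVAT) values
    w_slant = 3.0 * x_true**2 - 5.0 * x_true + 0.1  # hypothetical "true" relation, cm
    sza, vza = rng.uniform(10, 60, n), rng.uniform(0, 50, n)
    pwv = w_slant / air_mass(sza, vza)              # synthetic ground-truth PWV, cm
    rho_0865 = np.full(n, 0.3)
    rho_0940 = rho_0865 * np.exp(x_true)
    coeffs = fit_pwv_model(rho_0940, rho_0865, sza, vza, pwv)
    print("fitted [c2, c1, c0]:", np.round(coeffs, 4))
```

Because the coefficients are fitted to matched satellite and ground data, any systematic bias in the satellite reflectances is absorbed into c0, c1, and c2, rather than being propagated through a radiative transfer model.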
To allow AERONET PWV data to be used both for building the PWV retrieval model and for assessing the quality of the retrieval results, the matched data are divided into two independent sets according to the locations of the ground stations. Data from the eastern hemisphere are used to construct the MERSI-RM PWV retrieval model, and data from the western hemisphere are used to validate the MERSI-RM PWV retrieval results.Results and DiscussionsValidation against ground-based data shows that the root mean square error (RMSE) and relative error (RE) of MERSI-RM PWV data retrieved with the semi-empirical algorithm are 0.20 cm and 0.10, respectively. In contrast, the RMSE and RE of MERSI-RM PWV data retrieved with the traditional algorithm based on a radiative transfer model are 0.35 cm and 0.15, respectively. The RMSE and RE of MODIS PWV data are 0.57 cm and 0.39, respectively. Compared with MODIS PWV data, MERSI-RM PWV data from the semi-empirical algorithm show a 65% reduction in absolute error (RMSE) and a 74% reduction in relative error. Since MODIS PWV data are widely regarded as highly accurate, this indicates that the MERSI-RM PWV data retrieved with the semi-empirical algorithm are also highly accurate. Compared with MERSI-RM PWV data retrieved with the radiative-transfer-based algorithm, the semi-empirical retrieval reduces the absolute error by 43% and the relative error by 33%. The lower accuracy of the MERSI-RM and MODIS PWV data retrieved with radiative transfer models is mainly attributed to pronounced systematic errors. The MERSI-RM PWV data obtained with the semi-empirical algorithm do not exhibit this issue.
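The validation step and the percentages quoted above can be reproduced with a short sketch. Two details are assumptions, because the abstract does not define them: the hemisphere split treats longitude ≥ 0 as eastern, and RE is taken as the mean of |retrieved - reference|/reference. The reduction percentages are computed only from the RMSE and RE values reported in the text.

```python
import numpy as np


def split_by_hemisphere(lon_deg):
    """Masks for model building (eastern hemisphere, lon >= 0) and validation (western, lon < 0)."""
    lon = np.asarray(lon_deg, dtype=float)
    return lon >= 0.0, lon < 0.0


def rmse(retrieved, reference):
    """Root mean square error, in the units of the inputs (cm for PWV)."""
    diff = np.asarray(retrieved, dtype=float) - np.asarray(reference, dtype=float)
    return float(np.sqrt(np.mean(diff**2)))


def mean_relative_error(retrieved, reference):
    """Mean of |retrieved - reference| / reference (assumed definition of RE)."""
    ref = np.asarray(reference, dtype=float)
    return float(np.mean(np.abs(np.asarray(retrieved, dtype=float) - ref) / ref))


def reduction_pct(baseline, improved):
    """Percentage by which `improved` is smaller than `baseline`."""
    return 100.0 * (baseline - improved) / baseline


# Validation statistics reported in the text: (RMSE in cm, RE)
reported = {
    "semi-empirical MERSI-RM": (0.20, 0.10),
    "radiative-transfer MERSI-RM": (0.35, 0.15),
    "MODIS": (0.57, 0.39),
}
semi_rmse, semi_re = reported["semi-empirical MERSI-RM"]
for name in ("MODIS", "radiative-transfer MERSI-RM"):
    base_rmse, base_re = reported[name]
    print(f"vs {name}: RMSE -{reduction_pct(base_rmse, semi_rmse):.0f}%, "
          f"RE -{reduction_pct(base_re, semi_re):.0f}%")
```

Running it prints reductions of 65% and 74% against MODIS, and 43% and 33% against the radiative-transfer retrieval, which agrees with the figures stated in the text.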
The success of the semi-empirical algorithm is attributed to its PWV retrieval model, which is constructed from matches between satellite observations and ground-based data. In other words, the retrieval model implicitly accounts for errors in the satellite observations. To evaluate the semi-empirical algorithm more comprehensively and to offer additional options for model construction, we also assess a PWV retrieval model constructed from randomly allocated data. Validation against ground-based data shows that this model achieves retrieval accuracy equivalent to that of the model constructed from eastern-hemisphere data.ConclusionsWe introduce a semi-empirical PWV retrieval algorithm tailored to FY-3G/MERSI-RM, which addresses the current lack of PWV data from FY-3G satellite observations. Instead of relying on complex radiative transfer models, the algorithm uses a quadratic equation, which makes PWV retrieval highly efficient. Compared with traditional methods based on radiative transfer models, this semi-empirical approach achieves notably higher retrieval accuracy: the errors of MERSI-RM PWV data obtained with the algorithm are at least 33% lower than those derived from radiative-transfer-based models. Moreover, compared with the widely used official MODIS PWV product (MOD05), the semi-empirical algorithm reduces the errors of MERSI-RM PWV data by at least 65%. These results demonstrate the high accuracy and efficiency of the semi-empirical PWV retrieval algorithm for MERSI-RM and its suitability for large-scale PWV data production.