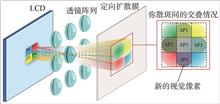

3D light field display technology has attracted the attention of researchers because of its large viewing angle and dense viewpoints. Resolution is an important parameter of 3D light field displays, but methods for improving it are complicated, so researchers have begun to focus on visual resolution instead. To improve the visual resolution of light field displays, an approach based on a convolutional neural network (CNN) that acquires a pre-processing elemental image (PEI) is proposed. In the imaging process of a light field display, lens aberrations diffuse the pixels on the imaging plane. The aliasing areas where diffuse pixels overlap can be regarded as new visual pixels and used as extra information carriers. A visual resolution-enhanced CNN is employed to obtain the pre-processing elemental image array (PEIA) from a high-resolution elemental image array (HEIA). The PEIA is loaded onto the LCD, optically transformed by the lens array and diffused by the diffuser to render a 3D light field image with enhanced visual resolution. In the experiment, a light field display with a 70° viewing angle and improved visual resolution is demonstrated using the LEIA, lens array and diffuser.
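
The idea that overlapping, diffused pixels can carry extra information can be illustrated with a toy 1D model. This is a minimal sketch, not the paper's CNN: it assumes each LCD pixel is replicated onto a grid twice as fine and spread by a fixed blur kernel (standing in for lens aberration plus diffuser), and it finds a pre-processed low-resolution row by projected gradient descent against a high-resolution target. The function names, the 2× factor and the kernel values are hypothetical.

```python
import numpy as np

def render(pei, kernel):
    """Assumed forward model: replicate each LCD pixel onto a 2x finer grid, then
    diffuse it with `kernel`, so neighbouring pixels overlap (alias)."""
    fine = np.repeat(pei, 2)
    return np.convolve(fine, kernel, mode="same")

def render_adjoint(residual, kernel):
    """Transpose of render() for an odd-length kernel: correlate with the kernel,
    then sum each pair of fine samples back onto one coarse pixel."""
    back = np.convolve(residual, kernel[::-1], mode="same")
    return back.reshape(-1, 2).sum(axis=1)

def optimize_pei(target, kernel, steps=500, lr=0.2):
    """Projected gradient descent on 0.5 * ||render(pei) - target||^2, pei in [0, 1]."""
    pei = np.zeros(len(target) // 2)
    for _ in range(steps):
        residual = render(pei, kernel) - target
        pei -= lr * render_adjoint(residual, kernel)
        np.clip(pei, 0.0, 1.0, out=pei)  # LCD grey levels are bounded
    return pei

kernel = np.array([0.25, 0.5, 0.25])                        # assumed diffusion profile
target = 0.5 + 0.5 * np.sin(np.linspace(0, 6 * np.pi, 32))  # toy high-resolution row
pei = optimize_pei(target, kernel)
error = np.linalg.norm(render(pei, kernel) - target)
```

Here 16 optimized pixel values are chosen so that their diffused, overlapping copies approximate a 32-sample target. A trained network, as in the paper, would replace this per-image optimization with a single forward pass.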

High-resolution, high-quality 3D images are particularly important as 3D displays are applied in cutting-edge fields such as the military and medicine. However, the 3D image quality of integral imaging is constrained by the resolution of the 2D display screen. To overcome this limitation, this paper proposes a high-resolution integral imaging 3D display using retro-reflectors and reflective polarizers. The light from the elemental image array (EIA) is separated into two orthogonally polarized components by the reflective polarizer. The retro-reflector, the quarter-wave retarder film and the reflective polarizer reflect the two polarized components and superimpose them along the diagonal direction, generating a new EIA with a smaller pixel pitch and higher pixel density. Based on the pixel index relationships between the polarized EIA and the superimposed high-resolution EIA, the pixel values of the polarized EIA are calculated backwards. The experimental results indicate that the proposed device can reconstruct high-resolution 3D images and weaken the black grid between pixels.
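
The backward calculation can be sketched with a simplified superposition model. The sketch assumes (hypothetically) that each polarized pixel of pitch p covers a 2×2 block of a half-pitch grid, that the second polarized EIA is shifted by half a pitch along the diagonal, and that the two images add incoherently. It recovers both polarized images from a target high-resolution EIA by projected Landweber iteration, which is one possible solver and not necessarily the one used in the paper.

```python
import numpy as np

def superimpose(a, b):
    """Forward model: EIA `a` on the original grid plus EIA `b` shifted half a pixel
    diagonally, both sampled on a half-pitch grid of size (2n+1, 2n+1)."""
    n = a.shape[0]
    h = np.zeros((2 * n + 1, 2 * n + 1))
    block = np.ones((2, 2))
    h[:2 * n, :2 * n] += np.kron(a, block)  # a[i, j] covers sub-pixels 2i..2i+1, 2j..2j+1
    h[1:, 1:] += np.kron(b, block)          # b[i, j] covers sub-pixels 2i+1..2i+2, 2j+1..2j+2
    return h

def adjoint(r):
    """Transpose of superimpose(): sum the residual over each pixel's 2x2 footprint."""
    n = (r.shape[0] - 1) // 2
    ga = r[:2 * n, :2 * n].reshape(n, 2, n, 2).sum(axis=(1, 3))
    gb = r[1:, 1:].reshape(n, 2, n, 2).sum(axis=(1, 3))
    return ga, gb

def back_calculate(target, steps=300, lr=0.1):
    """Projected Landweber iteration for the two polarized EIAs, values kept in [0, 1].
    lr = 0.1 is below 1/8, a bound on 1/||S||^2 for this forward operator."""
    n = (target.shape[0] - 1) // 2
    a, b = np.zeros((n, n)), np.zeros((n, n))
    for _ in range(steps):
        ga, gb = adjoint(superimpose(a, b) - target)
        a = np.clip(a - lr * ga, 0.0, 1.0)
        b = np.clip(b - lr * gb, 0.0, 1.0)
    return a, b

rng = np.random.default_rng(0)
target = superimpose(rng.random((4, 4)), rng.random((4, 4)))  # a reachable 9x9 target
a, b = back_calculate(target)
error = np.linalg.norm(superimpose(a, b) - target)
```

In this toy case two 4×4 polarized images (32 unknowns) drive an 81-sub-pixel grid, so the back-calculation is solved in the least-squares sense rather than by direct inversion.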

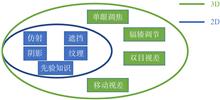

Glasses-free three-dimensional (3D) display is the gateway to the “metaverse” and a transformative technology that can redefine the way humans interact with information. After more than a hundred years of development, glasses-free 3D display has made significant progress, but many problems remain, such as a limited field of view (FOV), severe resolution degradation, limited motion parallax and visual fatigue. Recent studies have shown that micro/nano photonic devices (diffractive gratings, diffractive lenses and metasurfaces) can flexibly and accurately manipulate the intensity, phase, polarization and other parameters of light waves. Nanophotonics is therefore expected to solve the intrinsic bottlenecks of glasses-free 3D display. However, micro/nano photonic devices face significant challenges in both design and fabrication. In this paper, the limitations of glasses-free 3D display based on geometric optics are analyzed, and the latest research progress on glasses-free 3D display based on planar optics is introduced in detail from the perspectives of device design and micro/nano fabrication. Finally, we highlight the future directions and potential applications of glasses-free 3D display.

A tabletop light field display system based on view-segmented voxels can display high-quality 3D images with a frontal viewing area, a 100° viewing angle and full parallax. However, the system suffers from a nonuniform distribution of viewpoints: viewpoints are densely distributed in the central area of the viewing zone and sparsely distributed at both edges. This nonuniform distribution causes incorrect perspective relations in the displayed 3D image and crosstalk between adjacent viewpoints. This paper analyzes how the viewpoints are constructed and determines that the main cause of the nonuniform distribution is the aberration of the cylindrical lens used in the system, which makes the emitted light rays converge not at a single point but into a diffuse spot. An aspheric lens is proposed and designed to correct these aberrations. Finally, experiments are conducted to verify the method. The uniformity of the viewpoint distribution is increased from 39.32% to 98.39%, and the problems of incorrect perspective and crosstalk between viewpoints are effectively alleviated.
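
The abstract does not define its uniformity figure, so the following is only a hypothetical sketch of one way to quantify it: sort the positions where viewpoints form across the viewing zone, compute the spacing between adjacent viewpoints, and report uniformity as the ratio of the smallest to the largest spacing (100% for perfectly even spacing). The metric and the positions are invented for illustration and are not the paper's data.

```python
def viewpoint_uniformity(positions):
    """Hypothetical uniformity metric: min adjacent spacing / max adjacent spacing."""
    xs = sorted(positions)
    gaps = [b - a for a, b in zip(xs, xs[1:])]
    if len(gaps) == 0 or max(gaps) <= 0:
        raise ValueError("need at least two distinct viewpoint positions")
    return min(gaps) / max(gaps)

# Invented viewpoint positions in mm: dense centre and sparse edges vs. even spacing.
aberrated = [-60, -38, -20, -9, 0, 9, 20, 38, 60]
corrected = [-60, -45, -30, -15, 0, 15, 30, 45, 60]
print(f"{viewpoint_uniformity(aberrated):.1%}")  # 40.9%
print(f"{viewpoint_uniformity(corrected):.1%}")  # 100.0%
```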

In a large-format integral imaging display system, the position error of the macro lens array seriously affects imaging quality. This position error can be divided into axial and lateral position errors. Pixels that ideally converge at a reconstructed point no longer converge when a position error exists, and are instead imaged at other points. In this paper, the mean and variance of the distances from the reconstructed point to these points are used to measure the magnitude of the position error. In addition, this paper discusses methods for measuring the axial and lateral positions of the macro lens array, and then gives correction methods for the axial and lateral position errors, thereby alleviating the influence of the macro lens array's position error on imaging quality and improving the display effect.
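
The mean-and-variance measure can be sketched with a 1D chief-ray model, under assumed geometry: lens centres at z = 0 with a given pitch, the display panel a distance `gap` behind them, and the reconstructed point at depth `depth` in front. The pixel that ideally images onto the point is found for each lens; then the lens centres are shifted laterally or the lens plane is moved axially, each chief ray is traced to the reconstruction plane, and the mean and variance of the distances to the ideal point are reported. The model and all parameter values are hypothetical.

```python
from statistics import mean, pvariance

def ideal_pixels(centres, gap, depth, x_target):
    """Pixel positions whose chief rays meet at (x_target, depth) for an ideal array."""
    return [c + (c - x_target) * gap / depth for c in centres]

def landing_points(pixels, centres, gap, depth, lateral=0.0, axial=0.0):
    """Trace each chief ray from its pixel (at z = -gap) through the displaced lens
    centre (x = c + lateral, z = axial) to the reconstruction plane z = depth."""
    points = []
    for x_p, c in zip(pixels, centres):
        c_err = c + lateral
        points.append(c_err + (c_err - x_p) * (depth - axial) / (gap + axial))
    return points

def position_error(points, x_target):
    """Mean and variance of distances from the reconstructed point to the landing points."""
    d = [abs(x - x_target) for x in points]
    return mean(d), pvariance(d)

# Hypothetical geometry in mm: 7 lenses, 10 mm pitch, 3 mm gap, point 100 mm away.
centres = [10.0 * k for k in range(-3, 4)]
pixels = ideal_pixels(centres, gap=3.0, depth=100.0, x_target=5.0)
ideal = position_error(landing_points(pixels, centres, 3.0, 100.0), 5.0)
shifted = position_error(landing_points(pixels, centres, 3.0, 100.0, lateral=0.05), 5.0)
defocused = position_error(landing_points(pixels, centres, 3.0, 100.0, axial=0.1), 5.0)
print(ideal, shifted, defocused)
```

In this toy model a uniform lateral shift moves every ray by the same amount (non-zero mean, near-zero variance), while an axial error spreads the rays (non-zero variance), which is why both statistics are useful.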

With the rapid development of stereoscopic display technology, integral imaging three-dimensional (3D) display has been widely researched. However, visual crosstalk in integral imaging seriously degrades its stereoscopic visual effect. To reduce crosstalk and improve visual comfort, a crosstalk-free integral imaging 3D display based on a mask array was proposed. The mask array was specifically designed to block light from adjacent elemental images. The proposed structure can effectively eliminate the crosstalk caused by adjacent elemental images, and it is compact and easy to fabricate. In this paper, the distribution of the viewing area and the viewing angle of integral imaging were analyzed, and the design principle and structure of the proposal were described in detail. The experimental results show that the proposed structure completely blocks the crosstalk caused by adjacent elemental images and effectively reduces crosstalk in integral imaging. As the viewing angle increased, the crosstalk image was gradually blocked and eventually disappeared, and the 3D visual effect was improved.
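
The viewing-angle analysis can be made concrete with the usual paraxial pinhole geometry of integral imaging, used here as an assumption rather than the paper's exact derivation. With lens pitch p and gap g between the display and the lens array, a lens shows its own elemental image only for ray angles within ±arctan(p/(2g)), giving a viewing angle of 2·arctan(p/(2g)). At larger angles the ray through a lens centre starts from a neighbouring elemental image, which is the crosstalk light a mask array is meant to block. The pitch and gap values are hypothetical.

```python
import math

def viewing_angle_deg(pitch, gap):
    """Full viewing angle of an integral imaging display (paraxial pinhole model)."""
    return 2 * math.degrees(math.atan(pitch / (2 * gap)))

def source_elemental_image(angle_deg, pitch, gap):
    """Index offset of the elemental image feeding a lens at this ray angle:
    0 = its own elemental image, nonzero = a neighbouring one (crosstalk)."""
    x = -gap * math.tan(math.radians(angle_deg))  # a ray leaving at +angle starts at -g*tan(angle)
    return math.floor(x / pitch + 0.5)

pitch, gap = 1.0, 3.0  # hypothetical values in mm
print(f"viewing angle: {viewing_angle_deg(pitch, gap):.1f} deg")
for angle in (0, 8, 12, 20):
    k = source_elemental_image(angle, pitch, gap)
    status = "own elemental image" if k == 0 else "neighbouring image (crosstalk, masked)"
    print(angle, k, status)
```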

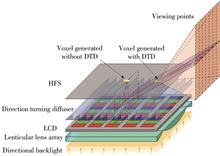

To realize three-dimensional (3D) images with low crosstalk, full resolution and multiple views, a 3D display based on a multi-directional backlight is proposed. An eye tracker in the system acquires the positions of the viewer's eyes and feeds them back to the multi-directional backlight and the liquid crystal display (LCD) panel. Directional parallax images are provided to each eye time-sequentially through the synchronized operation of the multi-directional backlight and the LCD panel, and viewers perceive 3D through the persistence of vision. The multi-directional backlight is mainly realized by a series of cylindrical waves and a holographic optical element (HOE): different cylindrical waves are diffracted by the HOE into different directions. A double-LED type and a diffuser type are proposed to improve the uniformity of the system. Experimental results indicate that the system provides full-resolution 3D images in 25 viewing zones, with uniformity improved to 80% and average crosstalk as low as 2.75%. These results satisfy the crosstalk, resolution and uniformity requirements of a 3D display system and greatly improve the viewing experience.
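
A hypothetical sketch of the tracking step and the two figures of merit. It assumes the 25 viewing zones are equally wide and laid side by side, maps a tracked eye position to the zone whose directional backlight should be lit in that eye's time slot, and uses common definitions that the abstract does not spell out: crosstalk as (leakage minus black) over (signal minus black) luminance, and uniformity as minimum over maximum luminance. The zone width, eye positions and luminance values are invented.

```python
NUM_ZONES = 25
ZONE_WIDTH_MM = 32.0  # hypothetical width of one viewing zone at the viewing distance

def zone_for_eye(x_mm):
    """Index (0..24) of the zone containing an eye at lateral position x_mm,
    measured from the centre of the viewing area; None if outside all zones."""
    index = int((x_mm + NUM_ZONES * ZONE_WIDTH_MM / 2) // ZONE_WIDTH_MM)
    return index if 0 <= index < NUM_ZONES else None

def crosstalk(leak, signal, black):
    """Crosstalk ratio from luminances measured at one eye (one common definition)."""
    return (leak - black) / (signal - black)

def uniformity(luminances):
    """Luminance uniformity as min/max over measured points (one common definition)."""
    return min(luminances) / max(luminances)

# Time-sequential operation: left and right frames alternate, each lighting one zone.
left_eye, right_eye = -32.0, 31.0  # invented tracked eye positions in mm
schedule = [("left", zone_for_eye(left_eye)), ("right", zone_for_eye(right_eye))]
print(schedule)
print(f"crosstalk: {crosstalk(leak=5.0, signal=150.0, black=1.0):.2%}")
print(f"uniformity: {uniformity([90.0, 110.0, 120.0, 101.0]):.0%}")
```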

In naked-eye 3D display, multi-viewpoint images are traditionally acquired uniformly. Studies show, however, that the viewpoint distribution constructed by the display is not uniform because of the aberrations of the cylindrical lens grating, so the distribution of the captured viewpoints does not match that of the displayed viewpoints. This mismatch produces misaligned viewpoint images and incorrect perspective relationships, which degrade the final viewing experience. In this paper, a multi-viewpoint image distribution correction scheme is proposed to address these problems. The scheme consists of a viewpoint screening algorithm and an intermediate viewpoint generation network. Based on the actual distribution of spatial viewpoints, the uniformly captured multi-viewpoint images are screened, and the result guides the intermediate viewpoint generation network to synthesize virtual viewpoints at the corresponding positions so that they match the viewpoint positions constructed by the display. Experimental results show that the scheme effectively resolves the mismatch between the spatial viewpoints of the display and the acquisition viewpoint positions, and thus improves the viewing quality of naked-eye 3D display.
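
The screening step can be sketched as a nearest-neighbour match, under assumptions the abstract does not state: captured and display viewpoint positions are known as 1D coordinates, a captured view is reused when it lies within a tolerance of a display viewpoint, and otherwise the two bracketing captured views plus an interpolation weight are handed to the intermediate-view generator (represented here only by a plan record). The names, positions and tolerance are hypothetical.

```python
from bisect import bisect_left
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class ViewPlan:
    target: float                # display viewpoint position
    reuse: Optional[int] = None  # captured view to reuse directly
    left: Optional[int] = None   # bracketing captured views for synthesis
    right: Optional[int] = None
    weight: float = 0.0          # 0 -> left view, 1 -> right view (outside [0, 1] = extrapolate)

def screen_viewpoints(captured: List[float], display: List[float], tol: float) -> List[ViewPlan]:
    """captured: sorted, uniformly spaced capture positions; display: real viewpoint positions."""
    if len(captured) < 2:
        raise ValueError("need at least two captured viewpoints")
    plans = []
    for t in display:
        i = bisect_left(captured, t)
        neighbours = [j for j in (i - 1, i) if 0 <= j < len(captured)]
        nearest = min(neighbours, key=lambda j: abs(captured[j] - t))
        if abs(captured[nearest] - t) <= tol:
            plans.append(ViewPlan(t, reuse=nearest))
        else:
            left = min(max(i, 1), len(captured) - 1) - 1
            right = left + 1
            w = (t - captured[left]) / (captured[right] - captured[left])
            plans.append(ViewPlan(t, left=left, right=right, weight=w))
    return plans

captured = [-40.0, -30.0, -20.0, -10.0, 0.0, 10.0, 20.0, 30.0, 40.0]  # uniform capture
display = [-40.0, -27.0, -16.0, -7.0, 0.0, 7.0, 16.0, 27.0, 40.0]     # dense centre
plans = screen_viewpoints(captured, display, tol=1.0)
for p in plans:
    print(p)
```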

Wavefront encoding is a crucial step in computer-generated holography: it converts the complex-amplitude wavefront on the hologram plane into a holographic modulation function. Since digital devices capable of full complex-amplitude modulation are not yet available, current implementations of holographic wavefront modulation rely on phase-type or amplitude-type elements, and holograms are correspondingly converted to amplitude-only or phase-only forms. Herein, phase-optimization encoding and complex-amplitude conversion methods for computer-generated holography based on liquid crystal spatial light modulators are introduced. Their basic principles, ranges of application and algorithm flows are discussed, providing feasible strategies for various holographic implementations.
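
As one concrete example of complex-amplitude conversion for a phase-only SLM, the double-phase decomposition (a well-known method; the abstract does not say which methods the paper covers) writes a normalized complex value A·e^{iφ} with 0 ≤ A ≤ 1 as the average of two unit phasors, e^{i(φ + arccos A)} and e^{i(φ - arccos A)}, since their mean is e^{iφ}·cos(arccos A). A minimal NumPy sketch, including a common checkerboard interleaving of the two phase maps; the random test field is arbitrary.

```python
import numpy as np

def double_phase(field):
    """Split a complex field into two phase-only maps whose average equals the
    field after its amplitude is normalised to [0, 1]."""
    amp = np.abs(field)
    amp = amp / amp.max()  # normalise so that arccos is defined
    phi = np.angle(field)
    theta = np.arccos(amp)
    return phi + theta, phi - theta

def interleave(p1, p2):
    """Checkerboard-merge the two phase maps into a single phase-only hologram."""
    rows, cols = np.indices(p1.shape)
    return np.where((rows + cols) % 2 == 0, p1, p2)

rng = np.random.default_rng(1)
field = rng.random((8, 8)) * np.exp(1j * 2 * np.pi * rng.random((8, 8)))
p1, p2 = double_phase(field)
recon = 0.5 * (np.exp(1j * p1) + np.exp(1j * p2))
ok = np.allclose(recon, field / np.abs(field).max())
hologram = np.mod(interleave(p1, p2), 2 * np.pi)  # wrap to the SLM's 2*pi range
print(ok)
```

The interleaving trades spatial resolution for full complex modulation; the missing half of each pair is effectively recovered by low-pass filtering in the optical system.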

To overcome the limited resolution of holographic display using a digital micromirror device, a large-size, high-resolution holographic display method is proposed. In this paper, a high-resolution object plane is input via computer rendering or a related transform method, and the hologram resolution is increased using the Fresnel diffraction algorithm and parallel Fourier-transform computation. Taking the characteristics of the digital micromirror device into account, the diffraction image sequence is spatially multiplexed and dynamically fused, which effectively improves both the resolution and the dynamic computation of the holographic display. Experimental results show that this method achieves large-size, high-resolution dynamic holographic display, breaking through the inherent pixel-count and resolution limits of the digital micromirror device: reconstructed images of 8K or higher resolution can be displayed with a 2K digital micromirror device, and the image size can reach 82 mm or more.
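
The pixel-count arithmetic behind spatial multiplexing can be sketched as follows. Assuming a 2K DMD frame of 1920×1080 and an 8K target of 7680×4320 (standard UHD sizes; the abstract does not give the exact formats), the high-resolution hologram splits into 4×4 = 16 DMD-sized tiles that are displayed and fused. Each tile is also binarized, because a DMD mirror is either on or off; thresholding at the tile mean is an assumed, deliberately simple encoding rather than the paper's. The demo uses scaled-down tiles to stay fast.

```python
import numpy as np

def split_into_tiles(hologram, tile_h, tile_w):
    """Cut a large hologram into DMD-sized sub-holograms (row-major order)."""
    h, w = hologram.shape
    if h % tile_h or w % tile_w:
        raise ValueError("hologram size must be a multiple of the DMD frame size")
    return [hologram[r:r + tile_h, c:c + tile_w]
            for r in range(0, h, tile_h) for c in range(0, w, tile_w)]

def binarize(tile):
    """Binary DMD pattern: mirror on where the hologram exceeds its mean value."""
    return (tile > tile.mean()).astype(np.uint8)

def fuse(tiles, rows, cols):
    """Reassemble tiles into the full-resolution pattern (the spatial fusion step)."""
    return np.block([[tiles[r * cols + c] for c in range(cols)] for r in range(rows)])

dmd_h, dmd_w = 1080, 1920        # assumed 2K DMD frame
target_h, target_w = 4320, 7680  # assumed 8K hologram
rows, cols = target_h // dmd_h, target_w // dmd_w
print(rows, cols, rows * cols)   # 4 4 16

# Scaled-down demo: a 4x4 mosaic of 27x48 tiles instead of 1080x1920 tiles.
rng = np.random.default_rng(2)
small = rng.random((4 * 27, 4 * 48))
tiles = [binarize(t) for t in split_into_tiles(small, 27, 48)]
mosaic = fuse(tiles, 4, 4)
```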

A holographic three-dimensional (3D) display method for virtual and real scenes based on combining a digital hologram (DH) with a computer-generated hologram (CGH) is proposed. First, a lensless Fourier transform digital hologram is used to record the 3D information of the real scene. Second, the sampling frequency of the DH is transformed according to the pixel interval of the spatial light modulator. Then high-pass filtering, scene scaling, in-plane rotation and translation are performed on the transformed DH in the frequency domain. Finally, the CGH of the virtual scene is calculated by the bipolar intensity method, with the processed DH taken as the initial value of the bipolar intensity superposition, so that the real and virtual scene holograms are fused. A color dynamic holographic 3D display system is built to verify the proposed method experimentally: a commercial 4K projector based on reflective liquid crystal panels is modified, and color dynamic holographic 3D display at 30 fps is realized. Experimental results show that the proposed method fuses DH and CGH efficiently and provides an effective route to dynamic holographic 3D display of mixed virtual and real scenes.
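
A minimal sketch of the bipolar intensity method with a digital hologram as the initial value. It assumes point-source virtual objects, a tilted plane reference wave and a single 1D hologram line for brevity. The bipolar intensity is the real interference term 2·Re{O·R*}, which can be negative, so the accumulated sum is offset and normalized to [0, 1] before display. The array `dh_line` is a placeholder for the processed DH, and the wavelength, pixel pitch, reference angle and object points are all hypothetical.

```python
import numpy as np

WAVELENGTH = 532e-9  # assumed green laser, m
PITCH = 8e-6         # assumed SLM pixel interval, m
K = 2 * np.pi / WAVELENGTH

def bipolar_intensity(x, points, ref_angle, initial):
    """Accumulate 2*Re{O R*} for point sources (x_j, z_j, a_j) on top of `initial`,
    with O = a_j exp(i K r_j) / r_j and R = exp(i K x sin(ref_angle))."""
    h = initial.astype(float).copy()
    ref_phase = K * x * np.sin(ref_angle)     # tilted plane reference wave
    for xj, zj, aj in points:
        r = np.sqrt((x - xj) ** 2 + zj ** 2)  # distance from the point to each pixel
        h += 2 * (aj / r) * np.cos(K * r - ref_phase)
    return h

def normalize(h):
    """Shift and scale the bipolar sum into [0, 1] for an intensity-type modulator."""
    return (h - h.min()) / (h.max() - h.min())

x = (np.arange(1024) - 512) * PITCH                    # hologram pixel coordinates
dh_line = np.zeros_like(x)                             # placeholder for the processed DH
virtual_points = [(0.0, 0.3, 1.0), (1e-3, 0.35, 0.8)]  # (x, z, amplitude) in metres
cgh = normalize(bipolar_intensity(x, virtual_points, np.radians(1.0), dh_line))
```

Starting the superposition from the DH rather than from zeros is what lets the real-scene and virtual-scene fringes share one hologram without a separate combination step.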

Near-eye displays, which seamlessly integrate the digital and physical worlds, are expected to become the next generation of augmented reality display terminals. Retinal projection display (RPD) technology is a research hotspot in near-eye display because it is free from the vergence-accommodation conflict and offers high light efficiency and a large field of view. In this paper, the development of RPD technology is reviewed: the basic working principle of RPD is described, the latest progress in RPD and its eyebox expansion is summarized, and the future prospects of RPD are discussed. By combining the advantages of holographic wavefront control and holographic optical elements (HOEs), a lightweight RPD near-eye display with a large eyebox and high system design freedom is expected to become feasible.

By sequentially presenting four perspective views to the viewer's two eyes, a super multi-view three-dimensional (3D) display free from the vergence-accommodation conflict (VAC) was demonstrated in our previous work using four near-eye liquid crystal light valves. However, on the one hand, the display frequency was only 30 Hz, which causes obvious flicker; on the other hand, the small aperture size restricted the observable field of view. In this paper, combined with polarization multiplexing, a spliced image composed of partial perspective views for different near-eye liquid crystal light valves is generated to obtain a large field of view (FOV). Experimentally, using an equivalent polarization-characteristic display screen constructed from two 240 Hz computer display screens combined through a polarization beam splitter, a flicker-free 60 Hz super multi-view display with a 70° diagonal FOV is demonstrated.

A real-time floating 3D display interaction system is proposed that enables independent interaction with multiple floating 3D images. The system consists of a display module and an interaction module. In the display module, 3D images are reconstructed by an integral imaging 3D display screen and then float into the air through a dihedral corner reflector array. In the interaction module, a Leap Motion controller captures the positions of the hand joints, extracts gesture information and determines the user's intent. After the interaction channels are checked, the positions of multiple 3D objects are changed individually and the 3D content is re-rendered, forming a closed human-computer interaction loop that realizes real-time floating 3D display interaction. The experimental results demonstrate that the system reaches 30 frames/s at a resolution of 3840×2160. Real-time individual interaction with multiple objects is achieved, and the freedom of interaction is increased.
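
A hypothetical sketch of the interaction module's per-frame logic. It does not use the Leap Motion SDK; it assumes hand tracking has already produced a pinch flag and a 3D pinch position in display coordinates, treats each floating object as having its own interaction channel, lets a pinch grab only the nearest object within a grab radius, and moves just that object while the pinch is held. Object names, the radius and the coordinates are invented. At the reported 30 frame/s, tracking, this update and re-rendering together must fit in roughly 33 ms per frame.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class FloatingObject:
    name: str
    position: List[float]  # [x, y, z] in display coordinates (mm)

@dataclass
class InteractionState:
    objects: List[FloatingObject]
    grab_radius: float = 20.0      # hypothetical grab distance (mm)
    grabbed: Optional[int] = None  # object whose interaction channel is active
    last_pinch: List[float] = field(default_factory=list)

    def update(self, pinching, pinch_pos):
        """Advance one frame: release, grab the nearest object, or drag it."""
        if not pinching or not self.objects:
            self.grabbed = None  # open hand: release any object
            return
        if self.grabbed is None:
            dists = [math.dist(o.position, pinch_pos) for o in self.objects]
            nearest = min(range(len(dists)), key=dists.__getitem__)
            if dists[nearest] <= self.grab_radius:
                self.grabbed = nearest  # open only this object's channel
        else:
            obj = self.objects[self.grabbed]  # move only the grabbed object
            delta = [p - q for p, q in zip(pinch_pos, self.last_pinch)]
            obj.position = [c + d for c, d in zip(obj.position, delta)]
        self.last_pinch = list(pinch_pos)

scene = InteractionState([FloatingObject("cube", [0.0, 0.0, 50.0]),
                          FloatingObject("sphere", [60.0, 0.0, 50.0])])
frames = [(True, [2.0, 1.0, 48.0]),    # pinch near the cube: grab it
          (True, [12.0, 1.0, 48.0]),   # drag 10 mm along x
          (False, [12.0, 1.0, 48.0])]  # release
for pinching, pos in frames:
    scene.update(pinching, pos)
print([(o.name, o.position) for o in scene.objects])
# [('cube', [10.0, 0.0, 50.0]), ('sphere', [60.0, 0.0, 50.0])]
```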