To address the challenges of long reconstruction time and numerous model voids in large-scale scenes and weakly textured regions during 3D reconstruction of unmanned aerial vehicle (UAV) images using existing multi-view stereo reconstruction (MVS) algorithms, an improved 3D reconstruction algorithm based on PatchMatch MVS (PM-MVS), called MCP-MVS, is proposed. The algorithm employs a multi-constraint matching cost computation method to eliminate outlier points from the 3D point cloud, thereby enhancing robustness. A pyramid red-and-black checkerboard sampling propagation strategy is introduced to extract geometric features across different scale spaces, while graphics processing unit based parallel propagation is exploited to improve the reconstruction efficiency. Experiments conducted on three UAV datasets demonstrate that MCP-MVS improves reconstruction efficiency by at least 16.6% compared to state-of-the-art algorithms, including PMVS, Colmap, OpenMVS, and 3DGS. Moreover, on the Cadastre dataset, the overall error is reduced by 35.7%, 20.3%, 19.5%, and 11.6% compared to PMVS, Colmap, OpenMVS, and 3DGS, respectively. The proposed algorithm also achieves the highest F-scores on the Cadastre and GDS datasets, 75.76% and 79.02%, respectively. These results demonstrate that the proposed algorithm significantly reduces model voids, validating its effectiveness and practicality.

To address the issue of low window-detection completeness and accuracy caused by the irregular distribution of windows on building facades, this study proposes a novel window-detection method that leverages hole constraints and hierarchical localization. This approach utilizes the least-squares method to fit lines to the projected point cloud data of the building facade, with distance constraints applied to obtain the primary wall point cloud data. The initial window position is determined using the hole-based detection method. Incorporating the concept of region expansion, the method employs an improved Alpha-Shape algorithm to extract boundary points around the initially identified window positions. Feature points among the boundary points are identified, and the boundary points are regularized based on these feature points, thus enabling the precise construction of window wireframe models. Experimental results show that this method significantly improves the accuracy of window detection, as evidenced by its average accuracy and completeness of 100% and 93.34%, respectively.

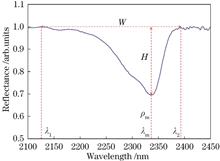

Hyperspectral remote sensing has been widely applied in geological research due to its rich multi-band spectral information. Most studies mainly focus on the identification of soil components and clay minerals, with relatively fewer studies on carbonate rocks, so this paper proposes a decision tree model to achieve precise classification of carbonate rocks based on hyperspectral data. A continuum-removed method is used to preprocess the data, and then combines spectral knowledge and machine learning to extract features. Specifically, the study determines spectral intervals closely related to carbonate rocks through spectral knowledge and extracts key waveform features from the spectral curves. Subsequently, the study uses the random forest algorithm to select features with discriminative capabilities, determines the optimal classification discriminant through threshold analysis, and builds a decision tree model. Finally, the model performance is evaluated using a confusion matrix, and the classification accuracy is compared with other five models. Results show that the decision tree model constructed based on the order of the lowest point wavelength of the absorption valley, the right shoulder wavelength of the absorption band , and the absorption bandwidth exhibited the highest classification accuracy, with an accuracy rate of 95.57%.

To address issues such as poor output consistency, information loss, and blurred boundaries caused by incomplete truth labeling in current weakly-supervised point cloud semantic segmentation methods, a weakly-supervised point cloud semantic segmentation method with input consistency constraint and feature enhancement is proposed. Additional constraint is provided on the input point cloud to learn the input consistency of the augmented point cloud data, in order to better understand the essential features of the data and improve the generalization ability of the model. An adaptive enhancement mechanism is introduced in the point feature extractor to enhance the model's perceptual ability, and utilizing sub scene boundary contrastive optimization to further improve the segmentation accuracy of the boundary region. By utilizing query operations in point feature query network, sparse training signals are fully utilized, and a channel attention mechanism module is constructed to enhance the representation ability of important features by strengthening channel dependencies, resulting in more effective prediction of point cloud semantic labels. Experimental results show that the proposed method achieves good segmentation performance on three public point cloud datasets of S3DIS, Semantic3D, and Toronto3D, with a mean intersection over union of 66.4%, 77.9%, and 80.5%, respectively, using 1.0% truth labels for training.

In the context of real-world observations of water purification flocculation processes, current image segmentation-based methods for detecting floc features face several challenges, which include poor recognition accuracy for deep-lying flocs, high annotation costs, and difficulties in adaptively processing depth-of-field information aiming at these problems, a new floc feature detection method based on improved density map and locally enhanced convolutional neural network (LECNN) is proposed. First, a density map construction method based on multipoint marking and average kernel smoothing is designed, to address the inability of the density map to simultaneously reflect multiple floc feature parameters. Second, a scene depth adaptive structure that assigns different weights to flocs at various depths is proposed, to mitigate the inaccuracies in floc parameter detection caused by parallax. Then, the proposed LECNN captures multiscale receptive fields while emphasizing local features. In comparative tests on a floc image dataset with multipoint markings, LECNN demonstrates accurate and robust density map fitting performance against recently proposed pixel-level prediction network structures, achieving a performance improvement over other floc feature detection benchmark methods in experimental results.

To address the problems of detail loss and color distortion in the current image defogging algorithms, this paper proposes a multi-dimensional attention feature fusion image dehazing algorithm. The core step of the proposed algorithm is the introduction of a union attention mechanism module, which can simultaneously operate in three dimensions of channel, space, and pixel to achieve accurate enhancement of local features, while parallel a multi-scale perceptual feature fusion module effectively captures global feature information of different scales. To achieve a more refined and accurate dehazing effect, a bi-directional gated feature fusion mechanism is added to the proposed algorithm to realize the deep fusion and complementarity of local information and global information features. Experimental validation on multiple datasets, such as RESIDE, I-Hazy, and O-Hazy shows that, the proposed algorithm exhibits better performance than the existing state-of-the-art in terms of peak signal-to-noise ratio (PSNR) and structural similarity (SSIM). Compared with the classical GCA-Net, the PSNR and SSIM of the proposed algorithm increased by 2.77 dB and 0.0046, respectively. Results of this study can provide new insights and directions for investigating image dehazing algorithms.

Due to the complex background and small target of the transmission line cotter pin, the intelligent power inspection of unmanned aerial vehicles (UAVs) is vulnerable to the problems of low detection accuracy and high missed and false detection rates. Addressing these issues, the present study proposes a target detection algorithm based on YOLOv8-DEA to better adapt to UAVs and other application scenarios. First, the backbone network's C2f module is modified, enabling the model to focus on regions of interest and enhancing its perception of local image structures. Subsequently, an efficient mamba-like linear attention (EMLLA) mechanism is used to capture distant dependencies, and the efficient multilayer perceptron (EMLP) module is applied to map the model features to a higher dimensionality, enhancing the model's expressiveness. Finally, a dynamic selection mechanism is used to improve the Neck layer. The adaptive fusion of deep and shallow features enables the effective integration of features from different levels, allowing the model to accurately capture global semantic information, as well as extract rich detailed information when processing complex and diverse data. The experimental results demonstrate that the improved algorithm achieves a 2.33 percentage points increase in mean average precision (mAP@0.5) and 3.67 percentage points increase in recall (R@0.5) on the custom cotter pin dataset. Additionally, the algorithm achieves a precision of 95.58% and speed of 67.84 frame/s. When compared to mainstream algorithms, the proposed method not only exhibits improved detection accuracy, but also ensures real-time performance, making it more suitable for the needs of transmission-line cotter pin detection in engineering applications.

In this study, a three-dimensional (3D) facial UV texture restoration algorithm based on gated convolution is proposed to address the texture loss caused by self-occlusion in unconstrained facial images captured from large viewing angles during reconstructing 3D facial structures from a single image. First, a gated convolution mechanism is designed to learn a dynamic feature selection approach for each channel and spatial position, thereby enhancing the network's ability to capture complex nonlinear features. These gated convolutions are then stacked to form an encoder-decoder network that repairs 3D facial UV texture images with irregular defects. In addition, a spectral normalization loss function is introduced to stabilize the generative adversarial network, and a segmented training approach is implemented to overcome the challenges of cost and accessibility in collecting 3D facial texture datasets. The experimental results show that the proposed algorithm outperforms mainstream algorithms in terms of the peak signal-to-noise ratio and structural similarity. The proposed algorithm effectively restores UV texture maps under large angle occlusion, yielding more comprehensive facial texture maps with natural, coherent pixel restoration, and realistic texture details.

To address issues such as low brightness, low contrast, and lack of details in images taken under challenging conditions like nighttime, backlighting, and severe weather, a nonlinear adaptive dark detail enhancement algorithm is proposed for improving low-light images. To ensure color authenticity, the original image is first converted to HSV space, and the brightness component V is extracted. For dealing with the issues of poor brightness and low contrast in low-light images, an improved gamma correction algorithm is then adopted to adaptively adjust image brightness. Subsequently, a brightness adaptive contrast enhancement algorithm is introduced, combining a low-pass filtering approach to adaptively enhance high-frequency details. This helps highlight textures and edge information of dark areas of the image. Finally, a brightness-guided adaptive image fusion algorithm is proposed to preserve edge details in highlighted areas while avoiding overexposure. Experimental results demonstrate that the proposed algorithm effectively adapts to the image characteristics of low-light environments. It not only significantly enhances the brightness and contrast of low-light images but also highlights details in darker areas while preserving color authenticity.

To address the problem of reduced clarity caused by detail information degradation during haze processing in complex scenes, this study presents a multi-scale feature fusion dehazing network based on U-net. In the encoder component, we employ a dynamic large kernel convolution with a dynamic weighting mechanism to enhance global information extraction. This mechanism allows for adaptive adjustment of feature weights, thereby improving the model's adaptability to complex scenes. In addition, we introduce a parallel feature attention module PA1 to capture critical details and color information in images, effectively mitigating the loss of important features during the dehazing process. To tackle the challenges posed by complex illumination changes and uneven haze conditions, we incorporate coordinate attention in the decoder's parallel feature attention module PA2. This approach integrates spatial and channel information, allowing for a more comprehensive capture of key details in feature maps. Experimental results show that the proposed network model achieves excellent dehazing effects across various datasets. The proposed network model outperforms classical dehazing networks such as FFA-Net and AOD-Net, effectively addressing detail loss while providing superior image dehazing performance.

Although Transformers excel in global feature extraction, they often have limitations in capturing local image details, leading to the loss of some local lighting information and uneven overall lighting distribution. To address this issue, this study proposes a low-light image enhancement algorithm based on light perception enhancement and dense residual denoising. The proposed algorithm leverages the advantages of Transformer and convolutional neural networks and effectively enhances the visual quality and lighting uniformity of low-light images through a detailed light perception mechanism. The network architecture features a codec design that integrates multilevel feature extraction and attention fusion modules internally. The feature extraction modules capture both global and local image features, and the attention fusion modules filter and combine these features to optimize information transmission. Additionally, to address the issue of noise amplification in low-light image enhancement, the enhanced image denoising module effectively reduces the noise of the enhanced image using dense residual connection technology. The performance of the proposed algorithm in processing low-light images is evaluated via comparative experiments. The experimental results show that the proposed algorithm can not only improve the problem of uneven lighting but also significantly reduce image noise and achieve higher quality image output.

Aiming to solving the problems of feature redundancy and blurred edge texture in images reconstructed via some of the existing image super-resolution reconstruction algorithms, an image super-resolution reconstruction network with spatial/high-frequency dual-domain feature saliency is proposed. First, the network constructs a feature distillation refinement module to reduce feature redundancy via introduction of blueprint separable convolution, and it then designs parallel dilated convolutions to refine extraction of multiscale contextual features so as to reduce feature loss and compensate for loss of texture in local regions. Second, a spatial dual-domain fusion attention mechanism is designed to enhance the high-frequency feature expression for fully capturing long-range dependency between different locations and channels of the feature map while facilitating reconstruction of edge texture details. The experimental results demonstrate that with its reconstructed image quality, the proposed model outperforms other comparison algorithms both in terms of objective metrics and subjective perception on multiple datasets. At a scaling factor of 2, compared with the VapSR, SMSR, and EDSR, the proposed method enhances the peak signal-to-noise ratio (PSNR) by an average of 0.14 dB, 0.36 dB, and 0.35 dB, respectively.

The existing algorithms for detecting dress code violations at airports exhibit high computational complexity and weak real-time performance. Furthermore, they are prone to errors and omissions during detection in complex airport security scenarios, making it difficult to meet the requirements of real-time security detection. In response to this situation, a method called SGS-YOLO is proposed based on the YOLOv8n technology route for detecting violations of dress code by airport security personnel. First, a parameter-free SimAM attention mechanism is introduced into the backbone network of the model to enhance the perception ability of important features and improve the accuracy of object detection. Second, GSConv and VoV-GSCSP modules are introduced into the neck network to reduce the number of parameters, which helps achieve lightweighting of the model. Finally, a detection box regression loss function based on SIOU is adopted to reduce misjudgments in cases involving small changes between the predicted and real target boxes. The experimental results show that compared with the baseline model, the SGS-YOLO improves the average accuracy by 6.3 percentage points. Further, it reduces the number of parameters and floating-point operations by 9.63% and 8.64%, respectively. The proposed approach effectively achieves a balance between model lightweighting and performance, and thus, it possess good engineering application value.

Nighttime images suffer from low visibility due to insufficient illumination and glow effects caused by artificial light sources, which severely impair image information. Most existing low-light image enhancement algorithms are designed for underexposed images. Applying these methods directly to low-light images with glows often intensifies the glow regions and further degrades image visibility. Moreover, these algorithms typically require paired or unpaired datasets for network training. To address these issues, we propose a zero-shot enhancement method for low-light images with glows, leveraging a layer decomposition strategy. The proposed network comprises two main components: layer decomposition and illumination enhancement. The layer decomposition network integrates three sub-networks, including channel attention mechanism modules. Under the guidance of glow separation loss with an edge refinement term and self-constraint information retention loss, the input images is decomposed into three components: glow, reflection, and illuminance images. The illuminance map is subsequently processed via the illumination enhancement network to estimate enhancement parameters. The enhanced image is reconstructed by combining the reflection map and the enhanced illuminance map, following the Retinex theory. Experimental results demonstrate that the proposed method outperforms state-of-the-art unsupervised low-light image enhancement algorithms, achieving superior visualization effects, the best NIQE and PIQE indices, and a near-optimal MUSIQ index. The method not only effectively suppresses glows but also improves the visibility of dark regions, producing more natural enhanced images.

Due to significant brightness differences in airborne flare-containing marine optical images, the image enhancement process may result in low contrast and fuzzy details. To solve these problems, an image enhancement method based on local compensation and non-subsampled contourlet transform (NSCT) is proposed. First, the image is segmented into high-brightness area and low illumination area by mean filtering and the maximum interclass variance method. Then, contrast limited adaptive histogram equalization (CLAHE) algorithm is used to balanced image brightness of high-brightness area, and NSCT algorithm is used to decompose the low illumination area into low-frequency component and several high-frequency components. Subsequently, the uneven illumination of low-frequency component is corrected by the multi-scale-Retinex algorithm, while the high-frequency components are adjusted to improve the details by Laplace operator. The processed low-frequency and high-frequency components are subjected to NSCT reconstruction, and CLAHE algorithm is used to further improve the contrast of image. Finally, by using improved local compensation model, the enhanced low-illumination area is compensated, and therefore the enhanced image can be obtained. Experimental results show that, compared with other methods, the image information entropy, average gradient, and contrast of the algorithm in this paper are improved by 1.35%, 40.62%, and 77.15% on average. Besides, the enhanced image also performs better in terms of image brightness, contrast and texture details.

In the infrared- and visible-image alignment of power equipment, severe nonlinear radial aberrations as well as significant viewing-angle and scale differences occur between the infrared and visible images, which result in image-alignment failure. Hence, a local normalization-based algorithm for the infrared- and visible-image alignment of power equipment is proposed. First, local normalization was performed to eliminate the nonlinear distortion of the images and improve the accuracy of the curvaturescale space (CSS) algorithm in extracting the feature points. Subsequently, the main direction of the feature points was calculated based on the local curvature information, and the multiscale oriented gradient histogram (MSHOG) was used as the feature descriptor. Finally, the features were matched using the proposed accurate matching method to obtain the parameters of the inter-image projective transformations. The proposed algorithm has average root mean square errors of 2.18 and 2.24 and average running times of 13.09 s and 12.07 s under infrared- and visible-image datasets of electric power equipment, respectively. Experimental results verify the effectiveness of the method in addressing images to be aligned with obvious differences in viewpoints and proportions, as well as in realizing the high-precision alignment of infrared and visible images of electric power equipment.

With the traditional attention mechanism, the representational ability and detection performance of the model are limited or its complexity and calculation cost are high. To solve these problems, an innovative lightweight multi-head mixed self-attention (MMSA) mechanism is proposed, aimed at enhancing the performance of object detection networks while maintaining the model's simplicity and efficiency. The MMSA module ingeniously integrates channel information with spatial information, as well as local and global information, by introducing a multi-head attention mechanism, further augmenting the network's representational capabilities. Compared to other attention mechanisms, MMSA achieves a superior balance between model representation, performance, and complexity. To validate the effectiveness of MMSA, it is integrated into the Backbone or Neck portions of the YOLOv8n network to enhance its multi-scale feature extraction and feature fusion capabilities. Extensive comparative experiments on the CityPersons, CrowdHuman, TT100K, BDD100K, and TinyPerson public datasets show that, compared with the original algorithm, YOLOv8n with MMSA improved their mean average precision (mAP@0.5) by 0.9 percentage points, 0.9 percentage points, 2.3 percentage points, 1.0 percentage points, and 1.7 percentage points, respectively, without significantly increasing the model size. Additionally, the detection speed reached 145 frame/s, fully meeting the requirements of real-time applications. Experimental results fully demonstrate the effectiveness of the MMSA mechanism in improving object detection outcomes, showcasing its practical value and broad applicability in real-world scenarios.

To address the problems of detail loss, low brightness and color distortion when processing sea fog images by dark channel prior defogging algorithm, this paper proposes a sea fog image defogging algorithm based on sky region segmentation. First, accurate segmentation of the sky region is achieved through threshold segmentation and region growing. On this basis, an approach with stronger anti-interference capabilities is used to optimize the atmospheric light intensity, the median value of the top 0.1% pixels belonging to the region with the highest luminance is chosen as the atmospheric light intensity. Second, the transmittance is refined using fast bootstrap filtering and an adaptive correction factor is introduced to adjust and optimize the transmittance mapping. Finally, the obtained transmittance and atmospheric light intensity are utilized with an atmospheric scattering model to restore the defogging image. Experimental results demonstrate that the algorithm significantly enhances evaluation metrics such as structural similarity and peak signal-to-noise ratio, effectively improving the quality of the defogging image.

To address the challenges in traditional image restoration algorithms based on regularization models that the information encapsulated in the regularization term prior may not be sufficiently rich, and determining the regularization coefficients can be cumbersome or require adaptive adjustments, combining the advantages of traditional and deep learning methods, this paper combines L2-norm regularization with deep learning, proposes a deep learning network with strict mathematical model foundation and interpretability: interpretable deep learning image restoration algorithm with L2-norm prior. Nonlinear transformations are employed to replace the regularization term in the traditional model, and deep learning networks are utilized to solve the regularization model. This not only optimizes the model solving process but also enhances the interpretability of the deep learning network. Experimental results demonstrate that the proposed algorithm is capable of effectively removing image blurriness while suppressing image noise, thereby improving image quality.

An improved multilayer progressive guided face-image inpainting network is proposed to solve problems such as artifacts and incongruent facial contours after face-image inpainting. The network adopts an encoding-decoding structure comprising structure-complement, texture-generation, and main branches, and gradually guides the generation of structure and texture features among different branches. A feature-extraction module is introduced to enhance the connection between different branches when the feature transfer is carried out in different branches. Additionally, a feature-enhancing attention mechanism is designed to strengthen the semantic relationship between channel and spatial dimensions. Finally, the output features of different branches are passed on to the context aggregation module such that the inpainting images become more similar to the actual images. Experimental results show that, compared with PDGG-Net (Progressive Decoder and Gradient Guidance Network), the proposed network in the CelebA-HQ dataset presents average improvements of 0.003 and 0.13 dB in terms of the SSIM and PSNR, respectively. To prevent overfitting, multi-dataset joint training and fine-tuning are performed in the sparse profile dataset, which improves the SSIM and PSNR by 0.003 and 0.29 dB on average, respectively, compared with the results of direct training using the profile dataset.

Existing infrared and visible image fusion methods cannot effectively balance the unique and similar structures of infrared and visible images, resulting in suboptimal visual quality. To address these problems, this study proposes a cross-fusion Transformer-based fusion method. The cross-fusion Transformer block is the core of the proposed network, which applies a cross-fusion query vector to extract and fuse the complementary salient features of infrared and visible images. This cross-fusion query vector balances the global visual characteristics of infrared and visible images and effectively improves the fusion visual effect. In addition, a multi-scale feature fusion block is proposed to address the problem of information loss caused by the down-sampling operation. Experimental results on the TNO, INO, RoadScene, and MSRS public datasets show that the performance of the proposed method surpasses existing representational deep-learning-based methods. Specifically, comparing with the suboptimum results on the TNO dataset, the proposed method obtains ~27.2%, ~29.2%, and ~9.9% improvements in terms of standardized mutual information, mutual information, and visual fidelity metrics, respectively.

To address the issues of high computational complexity and insufficient real-time performance encountered in three-dimensional object detection for complex aircraft maintenance scenes, a three-dimensional object detection method which integrates visual camera and LiDAR data and based on prior information is proposed. First, the parameters of a camera and LiDAR are calibrated, the point cloud obtained by LiDAR is preprocessed to obtain an effective three-dimensional point cloud, and the YOLOv7 algorithm is used to identify aircraft fuselage targets in the camera images. Next, the depth of the target object is calculated based on its prior length using the Efficient Perspective-n-Point (EPnP) method. Finally, depth information and point cloud clustering methods are utilized to complete three-dimensional object detection and identify obstacles. Experimental results show that the proposed method can accurately detect targets from environmental point clouds, with a recognition accuracy of 94.70%. Furthermore, the processing time for one frame is 42.96 ms, which indicates good performance in terms of both recognition accuracy and real-time capability, thus satisfying the collision risk detection requirements during aircraft movement.

To address the challenges of low recognition accuracy and high computational complexity in underwater optical target recognition algorithms, a lightweight YOLOv8 underwater optical recognition algorithm based on automatic color equalization (ACE) image enhancement is proposed. Initially, we apply the ACE image enhancement algorithm to preprocess images. Subsequently, we improve the feature extraction capabilities by replacing the YOLOv8 backbone with an upgraded SENetV2 backbone network. To further decrease computational quantity, we introduce a lightweight cross-scale feature fusion module in place of the neck network. Then, we utilize DySample as a substitute for the traditional upsampler to improve image processing efficiency. We refine the DyHead detection head to better perceive targets. Finally, we enhance the accuracy of bounding box regression by replacing loss function of YOLOv8 with InnerMPDIoU based on the minimum point distance intersection ratio (MPDIoU). Experimental results show that the proposed SCDDI-YOLOv8 algorithm achieves a mean average precision of 77.3% and 71.5% on the URPC2020 and UWG datasets, respectively, while reducing parameters by ~20.7%, floating-point operations by 6×108, and model size by 1.2 MB compared with the original YOLOv8n. Compared with other advanced algorithms, the proposed algorithm can meet the sensitive computational needs of edge devices.

To address the problems that existing methods have difficulty in achieving a high compression ratio and low distortion when processing whole-brain data of macaque with high dynamic range, this paper proposes an end-to-end multi-scale compression network based on the U-Net framework. First, the stability of the network is increased and high-frequency information of the image data is preserved by establishing a multi-level controllable jump connection between the compression module and the reconstruction module. Then, the data output by the coding module are processed with straight-through estimation quantization to accelerate the modeling process of the probability model and improve the compression ratio. Experimental results show that the rate-distortion curves of the network on the cellular architecture dataset and the nerve fiber dataset are better than those of other mainstream deep learning methods and the traditional JPEG2000 method. Under a compression ratio of 160, the multi-scale structural similarity index is not less than 0.99.

Inspection robots have become critical tools for roller detection in belt conveyors. However, the infrared images detected by these robots often suffer from low resolution and a low signal-to-noise ratio, thereby introducing higher requirements for target detection algorithms. In this study, we propose improvements to inspection robots for roller detection tasks in belt conveyors based on the YOLOv5 network. Inspired by DenseNet, we first introduce dense connection modules into the YOLOv5 network to enhance its feature extraction capabilities. We then introduce a Wise-IoU (WIoU) loss function to evaluate the quality of anchor rectangles and in turn improve network performance and generalization capabilities. Experimental evaluations on a dataset of infrared data collected by inspection robots on belt conveyors demonstrate that, compared with the original YOLOv5, the recall rate and mean average precision are improved by 2.4 percentage points and 1.5 percentage points, respectively (with the latter reaching 98%), while a recognition speed of 80 frame/s and model size of 15 MB are maintained. The improved inspection robot features a small size, fast speed, and high efficiency.

To address the limitations of traditional image restoration techniques in accurately restoring laser interference images, this paper proposes a nove deep learning framework. This framework leverages convolutional neural networks and a multi-head attention mechanism to extract multi-scale features, thereby enhancing the understanding and restoration of image structures. Experiments are conducted on a synthetic laser interference image dataset comprising 5 scenes, each scene containing 5000 images. Experimental results reveal that the proposed framework visually restores images affected by laser interference and achieves high peak signal-to-noise ratio (PSNR) and structural similarity (SSIM). In particular, the PSNR and SSIM values for the reconstructed images, across various levels of image damage, exceed 34 dB and 0.98, respectively. The proposed method holds promise for broad applications in laser interference scenarios and offers valuable support for military defense and civilian technologies.

To address the issue of limited denoising effectiveness caused by the lack of ground-truth images during the training of self-supervised image denoising methods, a multistage self-supervised denoising method based on a memory unit is proposed. The memory unit modularly stores intermediate denoising results, which resemble clear images, and collaboratively supervises the network training process. This ability allows the network to learn not only from noisy images but also from the intermediate outputs during training. Additionally, a multistage training scheme is introduced to separately learn features from flat and textured areas of noisy images, while a spatial adaptive constraint balances noise removal and detail retention. Experimental results show that the proposed method achieves peak signal-to-noise ratios of 37.30 dB on the SIDD dataset and 38.52 dB on the DND dataset, with structural similarities of 0.930 and 0.941, respectively. Compared with existing self-supervised image denoising methods, the proposed method remarkably improves both visual quality and quantitative metrics.

This paper proposes a new bidirectional weighted multiscale dynamic approach, the BiEO-YOLOv8s algorithm, to enhance the detection of small targets in aerial images. It effectively addresses challenges such as complex backgrounds, large-scale variations, and dense targets. First, we design a new ODE module to replace certain C2f modules, enabling the accurate, quick, and multiangle location of target features. Then, we develop a bidirectional weighted multiscale dynamic neck network structure (BiEO-Neck) to achieve deep fusion of shallow and deep features. Second, adding a small object detection head further enhances feature extraction capability. Finally, the generalized intersection union ratio boundary loss function is used to replace the original boundary loss function, thereby enhancing the regression performance of the bounding box. Experiments conducted on the VisDrone dataset demonstrat that as compared to the base model YOLOv8s, the proposed model achieved a 6.1 percentage points improvement in mean average precision, with a detection speed of only 4.9 ms. This performance surpasses that of other mainstream models. The algorithm effectiveness and adaptability are further confirmed through universality testing on the IRTarget dataset. The proposed algorithm can efficiently complete target detection tasks in of unmanned aerial vehicle aerial images.

In this study, we propose a novel scheme for expanding chaotic keys for encrypting and decrypting image and video signals. The process begins with achieving chaos synchronization in semiconductor lasers driven using a common signal over a 130-km optical fiber link with a synchronization coefficient of 0.945. The resulting synchronized chaotic signal is processed through dual-threshold quantization, and error bits are removed through lower-triangle reconciliation, thereby yielding consistent keys at 1 Gbit/s. These keys are expanded to 80 Gbit/s using the Mersenne twister algorithm. Analysis shows that they can pass the NIST tests, thereby demonstrating good randomness and security. Thus, the encryption and decryption of image and video signals using these expanded keys is experimentally demonstrated.

Light-sheet fluorescence microscopy imaging systems are extensively used for imaging large-volume biological samples. However, as the field of view of the optical system expands, imaging will exhibit spatially uneven degradation throughout the entire field of view. Conventional model-driven and deep learning approaches exhibit spatial invariance, making it challenging to directly address this degradation. A position-dependent model-driven deconvolution network is developed by introducing positional information into the model-driven deconvolution network, which is achieved by randomly selecting training image pairs with different degradation patterns during training and using block-based reconstruction techniques during image restoration. The experimental results reveal that the network facilitates rapid deconvolution of large field-of-view optical images, thereby considerably enhancing image processing efficiency, image quality, and the uniformity of image quality within the field of view.

To address the scarcity of point cloud datasets in foggy weather, an optical model-based foggy weather point cloud rendering method is proposed. First, a mathematical relationship is established between the LiDAR impulse responses during good weather and foggy weather in the same scene. Second, an algorithm is designed using laser attenuation in a foggy weather, and the visibility of the rendered point cloud is set by modifying the attenuation coefficient, backscattering coefficient, and differential reflectance of the target in the algorithm to obtain the rendered point cloud of the foggy weather under the set visibility. Experiments reveal that the proposed method effectively renders foggy weather point cloud with a visibility within 50?100 m, and that the method shows stable results. Compared with the real foggy weather point clouds, the average values of KL (Kullback-Leibler) dispersion of the rendered point clouds are less than 6, the average values of the percentage of Hausdorff distance less than 0.5 m are not less than 85%, and the average values of the mean square error distance are less than 8, proving the feasibility of proposed method. Therefore, the proposed method can render foggy weather point cloud under good weather and overcomes the lack of foggy weather point cloud datasets and visibility data.

To solve the problems of the low-accuracy detection or inaccurate classification of small target defects in solar cell panel defect detection, an improved lightweight YOLOv5s solar cell panel defect detection model suitable for small target detection is proposed in this study. First, an SiLU activation function is used to replace the original activation function to optimize the convergence speed and enhance the generalization ability of the model. Second, the C3TR and convolution block attention modules are used to re-optimize the backbone feature sampling structure to improve the recognition ability for different defect types, especially small target defects. Third, the content-aware re-assembly of features is realized in the feature extraction network to improve the detection accuracy and detection speed without increasing the model weight. Finally, a dynamic nonmonotonic loss function WIoUv3 is added to the dynamic matching prediction box and real frame to enhance the robustness of small target datasets and noise. Experimental results show that the mean average precision (mAP@0.5) of the proposed model is 95.9% and that its classification accuracies for large-area cracks and star-shaped scratches reach 98.0% and detection speed reaches 75.133 frame/s, demonstrating its lightweight nature and rapidness that meet the requirements of industrial production.

Fisheye cameras offer lower deployment costs than traditional cameras for detecting the same scene. However, accurately detecting distorted targets in fisheye images requires increased computational complexity. To address the challenge of achieving both accuracy and inference speed in fisheye image detection, we propose an enhanced YOLOv8m-based fisheye object detection model, which we refer to as Fisheye-YOLOv8. First, we introduce the Faster-EMA module, which integrates lightweight convolution and multiscale attention to reduce delay and complexity in feature extraction. Next, we design the RFA-BiFPN structure, incorporating a parameter-sharing mechanism to enhance the detection speed and accuracy through receptive field attention and a weighted bidirectional pyramid structure. In addition, the lightweight G-LHead detection head is introduced to minimize the number of model parameters and reduce complexity. Finally, the LAMP pruning algorithm is introduced to balance improvements in recognition accuracy with inference speed. Experimental results demonstrate that Fisheye-YOLOv8 achieves mean average precision values of 60.5% and 59.7% on the Fisheye8K and WoodScape datasets, respectively, which is an increase of 2.2 and 1.2 percentage points compared to YOLOv8m. Moreover, the proposed model's parameter and computational complexity are only 20.5% and 29.7% of those of YOLOv8m, respectively, with a detection speed of 118 frames/s. The proposed model meets real-time requirements and is better suited for fisheye camera deployment than the other models.

With an increase in the shooting depth, underwater images suffer from issues such as low brightness, color distortion, and blurred details. Therefore, an underwater low-illumination image enhancement algorithm based on an encoding and decoding structure (ULCF-Net) is designed. First, a brightness enhancement module is designed based on a half-channel Fourier transform, which enhances the response in dark regions by combining the frequency domain and spatial information. Second, cross-scale connections are introduced within the encoding and decoding structure to improve the detailed expression of underwater optical images. Finally, a dual-stream multiscale color enhancement module is designed to improve the color fusion effects across different feature levels. Experimental results on publicly available underwater low-illumination image datasets demonstrate that the proposed ULCF-Net exhibits excellent enhancement in terms of brightness, color, and details.

The study on attention in attention network (A2N) in single-image super-resolution has revealed that all attention modules are not beneficial to the network. Therefore, in the design of the network, input features can be divided into attention and nonattention branches. The weights on these branches can be adaptively adjusted using dynamic attention modules based on the input features so that the network can strengthen useful features and suppress unimportant features. In practical applications, lightweight networks are suitable to be run on resource-constrained devices. Based on A2N, the number of attention in attention block (A2B) in the original network is reduced and lightweight receptive field modules are introduced to enhance the overall performance of the network. In addition, by adjusting the L1 loss to a combination loss based on Fourier transform, the spatial domain of the image is transformed into the frequency domain, enabling the network to learn the frequency characteristics of the image. The experimental results show that the improved A2N reduces parameter count by about 25%, computational complexity by about 20%, and inference speed by 15%, thereby enhancing the performance.

High-dynamic range (HDR) image reconstruction algorithms based on the generation of bracketed image stacks have gained popularity for their capabilities in expanding the dynamic range and adapting to complex lighting scenarios. However, existing approaches based on convolutional neural networks often suffer from local receptive fields, limiting the utilization of global information and recovery of over- or underexposed regions. To solve this problem, this study introduces a Transformer architecture that equips the network with a global receptive field to establish long-range dependency. In addition, a unidirectional soft mask is added to the Transformer to alleviate the effects of invalid information from over- and underexposed regions, further improving the reconstruction quality. Experimental results show that the proposed algorithm improves the peak signal-to-noise ratio by 2.37 dB and 1.33 dB on the VDS and HDREye datasets, respectively, and subjective comparisons further prove the effectiveness of the proposed algorithm. This study provides a novel approach for improving the information recovery capabilities of HDR image reconstruction algorithms for over- and underexposed regions.

Aiming at the problems of low detection accuracy and missed detection caused by complex contour information, large change of shape and small size contraband in X-ray images, an improved GELAN-YOLOv8 model based on YOLOv8 is proposed. First, the RepNCSPELAN module based on generalized efficient layer aggregation network (GELAN) is introduced to improve the feature extract ability for contraband. Second, the GELAN-RD module is proposed by combining deformable convolution v3 (DCNv3) and RepNCSPELAN module to adapt contraband with different postures and serious changes in size and angle. Third, the spatial pyramid pooling is improved, so that the model can pay more attention to the feature information of small target contraband. Finally, the Inner-ShapeIoU is proposed by combining inner-intersection over union (Inner-IoU) and Shape-IoU to reduce the false detection and missed detection and speed up the convergence of the model. Results on the SIXray dataset show that the mAP@0.5 of the improved algorithm are 2.8 percentage points higher than YOLOv8n, and the performance is better than YOLOv8s. The GELAN-YOLOv8 effectively realizes the real-time detection of contraband in X-ray images.

Marine microorganisms are fundamental to marine ecosystems. However, underwater imaging often blurs microbial contours due to water absorption and scattering. To address this, we propose a contour segmentation method for underwater microorganisms that combines an underwater imaging model with Fourier descriptors. First, the background light and water attenuation coefficients are estimated using the underwater imaging model to extract a clear, water-free feature map of the object. Next, a classification header determines the target location, while a regression header uses Fourier descriptors to represent and refine the microorganism's contour in the pixel domain. In addition, hologram reconstruction and preprocessing steps are applied, and a microbial contour segmentation dataset is generated. Experimental results demonstrate that the Fourier descriptor outperforms the star polygon method in contour representation accuracy and spatial continuity. Compared to traditional segmentation methods, the proposed algorithm achieves an F1 score of 0.8894, intersection over union of 0.7887, and pixel accuracy of 0.8608, all improved metrics indicating superior segmentation capability.

Aiming at the problem that the traditonal cloth simulation filtering (CSF) algorithm cannot distinguish the local microtopography of pavement damage, which leads to the wrong detection and omission of pothole damages, an adaptive descend distance CSF algorithm for pavement pothole extraction is proposed. First, the proposed algorithm preprocesses and denoises the point cloud of the road to obtain the pavement point cloud. Second, by improving the displacement distance of the"external force drop"and"internal force pull back"processes of the simulated cloth in the CSF algorithm, the adaptive distance drop of the simulated cloth is realized, and then further constructs the accurate local datum plane of the road surface and generates the depth-enhanced information model of the point cloud. Finally, depth threshold classification and Euclidean clustering algorithm are used to achieve precise detection of potholes and extract geometric attribute features of potholes. Experiments and analysis of the measured road data show that, the recall of potholes in the measured data reaches 83.3%, and the precision reaches 87.5%, the maximum relative error of area is 17.699%, and the maximum relative error of depth is 9.677%, which has a certain degree of robustness and applicability. The proposed algorithm can provide a powerful support for the work of large-scale three-dimensional pavement point cloud data for the automatic and precise detection of potholes on pavements.

This study develops a lightweight roadside object detection algorithm called MQ-YOLO. The algorithm is based on multiscale sequence fusion. It addresses the challenges of low detection accuracy for small and occluded targets and the large number of model parameters in urban traffic roadside object detection tasks. We design a D-C2f module based on multi-branch feature extraction to enhance feature representation while maintaining speed. To strengthen the integration of information from multiscale sequences and enhance feature extraction for small targets, the plural-scale sequence fusion (PSF) module is designed to reconstruct the feature fusion layer. Multiple attention mechanisms are incorporated into the detection head for greater focus on the salient semantic information of occluded targets. To enhance the detection performance of the model, a loss function based on the normalized Wasserstein distance is introduced. Experimental results on the DAIR-V2X-I dataset demonstrate that MQ-YOLO achieves improved mAP@50 and mAP@(50?95) by 3.9 percentage point and 6.0 percentage point compared to the valuses obtained with baseline YOLOv8n with 3.96 Mbit parameters. Experiments on the DAIR-V2X-SPD-I dataset show that the model has good generalizability. During roadside deployment, the model reaches detection speeds of 62.5 frame/s, meeting current roadside object detection requirement for edge deployment in urban traffic.

In order to improve the consistency of metrics between the objective assessment and the human subjective evaluation of stereo image quality, inspired by the top-down mechanism of human vision, this paper proposes a stereo attention-based no-reference stereo image quality assessment method. In the proposed stereo attention module. First, the amplitude of binocular response is adaptively adjusted by the energy coefficient in the proposed binocular fusion module, and the binocular features are processed simultaneously in the spatial and channel dimensions. Second, the proposed binocular modulation module realizes the top-down modulation of the high-level binocular information to the low-level bino- and monocular information simultaneously. In addition, the dual-pooling strategy proposed in this paper processes the binocular fusion map and binocular difference map to obtain the critical information that is more conducive to quality score regression. The performance of the proposed method is validated based on the publicly available LIVE 3D and WIVC 3D databases. The experimental results show that the proposed method achieves high consistency between objective assessment indices and labels.

An U-shaped dual-energy computed tomography (DECT) material decomposition network, called DM-Unet, that combines a selective state spaces model Mamba and efficiency channel attention module is proposed in this paper. The network uses a visual state space module that introduces a channel attention mechanism to capture feature information, adjusts the weights of different levels for feature information in a block through adjustable parametric residual connections, and reduces the gradient explosion and the loss of organizational details through residual connections between the encoder and decoder. Experimental results show that the root mean square error of the base matter image obtained by DM-Unet is as low as 0.041 g/cm3, the structural similarity reaches 0.9981, and the peak signal-to-noise ratio can reach 36.54 dB. Compared with traditional decomposition methods, DM-Unet shows better ability to restore organizational details, noise suppression, and edge information restoration, and is able to fulfill the task of DECT decomposition, which can provide accurate references for the subsequent medical diagnostic work.

This paper proposes a multiplexed fusion deep aggregate learning algorithm for underwater image enhancement. First, the image preprocessing algorithm is used to obtain the image attribute information of three branches (contrast, brightness, and colour) respectively. Then, the image attribute dependency module is designed to obtain fusion features of multiplexed using a fusion network, and then explore the potential fused image attribute correlations through parallel graph convolution. A self-attention deep aggregate learning module is introduced to deeply mine the interaction information between the private and public domains of the multiplexed using sequential self-attention and global attribute iteration mechanisms, and also effectively extract and integrate the important information between image attributes by means of aggregation bottlenecks to achieve more accurate feature representation. Finally, skip connections are introduced to continue enhancing the image output to further improve the effect of image enhancement. Numerous experiments have demonstrated that the proposed method can effectively remove colour bias and blurring, and improve image clarity, as well as facilitate underwater image segmentation and key point detection tasks. The peak signal-to-noise ratio and structural similarity metrics can reach the highest values of 23.01 dB and 0.90, which are improved by 5.0% and 4.7% compared with the suboptimal method, while the underwater colour image quality metrics and information entropy metrics have the highest values of 0.93 and 14.33, which are improved by 2.2% and 0.5% compared with the suboptimal method.

A turbulent fuzzy target restoration algorithm with a nonconvex regularization constraint is proposed to address degradation issues, such as low signal-to-noise ratio, blurring, and geometric distortion, in target images caused by atmospheric turbulence and light scattering in long-range optoelectronic detection systems. First, we utilized latent low-rank spatial decomposition (LatLRSD) to obtain the target low-rank components, texture components, and high-frequency noise components. Next, two structural components were obtained by denoising the LatLRSD model; these were weighted and reconstructed in the wavelet transform domain, and nonconvex regularization constraints were added to the constructed target reconstruction function to improve the reconstruction blur and scale sensitivity problems caused by the traditional lp norm (p=0,1,2) as a constraint term. The results of a target restoration experiment in long-distance turbulent imaging scenes show that compared with traditional algorithms, the proposed algorithm can effectively remove turbulent target blur and noise; the average signal-to-noise ratio of the restored target is improved by about 9 dB. Further, the proposed algorithm is suitable for multiframe or single-frame turbulent blur target restoration scenes.

The existing aluminum surface-defect detection algorithms yield low detection accuracy in practical tasks. Hence, this paper proposes an improved YOLOv8s aluminum profile surface-defect detection algorithm (CDA-YOLOv8). First, the 3×3 downsampling convolution in the network was improved using the context guided block (CG Block) module. This enhances the extraction of features from the global context of the target and aggregate local salient features and global features, thus improving the feature generalization ability. Second, the dilation-wise residual (DWR) module was introduced to improve the Bottleneck structure in C2f, thus improving the multiscale feature-extraction capability. Finally, to address the feature-information loss of microdefects on the surface of aluminum profiles, an ASFP2 detection layer was designed, which integrates the small-target detection layer and the scale sequence feature fusion (SSFF) module. The layer was integrated into the neck of YOLOv8s to extract and transfer more critical small-target feature information in small-sized defects, thereby enhancing the detection performance. Experimental results show that the CDA-YOLOv8 algorithm achieves 93.4%, 80.4%, and 88.1% for indicators of precision, recall, and mean average precision, respectively, which are 5.1 percentage points, 2.4 percentage points, and 4.4 percentage points higher than those of the original YOLOv8s algorithm. This algorithm significantly improves detection performance, particularly through its ability to detect microdefects.

To address issues such as voids and incomplete shapes in three-dimensional (3D) point clouds obtained during the current 3D reconstruction process, a multiscale hybrid feature extraction and activation query point-cloud completion network is proposed. This network adopts an encoder-decoder structure. To extract local information while considering the overall structure, a multiscale hybrid feature extraction module is proposed. The input point cloud was classified into different scales through downsampling, and the hybrid feature information of the point cloud was extracted at each scale. To maintain the high correlation of the point-cloud completion results, an activation query module that retains the feature sequences with high scores and strong correlations is proposed for scoring operations. After the feature sequences are passed through the decoder for point-cloud completion, a complete point cloud is obtained. Experiments on the public dataset PCN indicate that in the comparison of quantitative and visual results, the proposed network model achieves superior completion effects in point-cloud completion and can further enhance the quality of point-cloud completion.

The aim of infrared and visible image fusion is to merge information from both types of images to enhance scene understanding. However, the large differences between the two types make it difficult to preserve important features during fusion. To solve this problem, this paper proposes a dynamic contrast dual-branch feature decomposition network (DCFN) for image fusion. The network adds a dynamic weight contrast loss (DWCL) module to the base encoder to improve alignment accuracy by adjusting sample weights and reducing noise. The base encoder, based on the Restormer network, captures global structural information, while the detail encoder, using an invertible neural network (INN), extracts finer texture details. By combining DWCL, DCFN improves the alignment of visible and infrared image features, enhancing the fused image quality. Experimental results show that this method outperforms existing approaches, significantly improving both visual quality and fusion performance.

Lidar-scanned point cloud data often suffer from missing information, and most existing point cloud completion methods struggle to reconstruct local details because of the sparse and unordered nature of the data. To address this issue, this paper proposes an attention-enhanced multiscale dual-feature point cloud completion method. The multiscale dual-feature fusion module is designed by combining global and local features, to improve completion accuracy. To enhance feature extraction, an attention mechanism is introduced to boost the network's ability to capture and represent key feature points. During the point cloud generation phase, a pyramid-like decoder structure is used to progressively generate high-resolution point clouds, preserving geometric details and reducing distortion. Finally, a generative adversarial network framework, combined with an offset-position attention discriminator, further enhances the point cloud completion quality. The experimental results show that the complementary accuracy of this method on the PCN dataset improves by 11.61% compared to that of PF-Net, and the visualization results are better than those of other methods in comparisons, which verify the effectiveness of the proposed network.

In order to solve the problems of background noise interference, variable scale and low detection accuracy caused by small scale defects in insulator defect detection, an insulator defect detection algorithm based on guided attention and scale perception (GASPNet) is proposed. First, a guided attention module (GAM) is constructed on the backbone network to guide the attention of deep features by using shallow features that have a stronger ability to express small targets, and combining channel and space bidirectional attention to reduce the interference of background noise. Second, in the neck network, a feature enhanced fusion network (FEFN) is proposed to enhance the effective fusion of semantic information and local information by cross-fusing different levels of feature information. Finally, the EIoU loss function is used to define the penalty term by combining the vector angle and position information, which improves the regression accuracy of the detection box and achieves accurate detection of small scale targets. The experimental results show that the mean average precision (mAP@0.5) of GASPNet on the insulator defect detection dataset reaches 94.8%, and the detection speed is 95.3 frame/s, which is significantly better than other detection algorithms. At the same time, the embedded experiments verify that GASPNet still has efficient real-time detection performance under the condition of limited computing resources, which is suitable for practical application scenarios.

In recent years, the Transformer has demonstrated remarkable performance in image super-resolution tasks, attributed to its powerful ability to capture global features using a self-attention mechanism. However, this mechanism has high computational demands and is limited in its ability to capture local features. To address these challenges, this study proposes a lightweight image super-resolution reconstruction network based on dual-stream feature enhancement. This network incorporates a dual-stream feature enhancement module designed to enhance reconstruction performance through the effective capture and fusion of both global and local image information. In addition, a lightweight feature distillation module is introduced, which employs shift operations to expand the convolutional kernel's field of view, significantly reducing network parameters. The experimental results show that the proposed method outperforms traditional convolution-based reconstruction networks in terms of both subjective visual quality and objective metrics. Furthermore, compared to Transformer-based reconstruction networks such as SwinIR-Light and NGswin, the proposed method achieves an average improvement of 0.06 dB and 0.14 dB in PSNR, respectively.

The limited generalization and dataset scarcity of existing generative facial image detection methods present significant challenges. To address these issues, this study proposes a high-quality facial image detection model based on noise variation and the diffusion model. The proposed method employs an inversion algorithm using the denoising diffusion implicit model (DDIM) to generate inverted images with text-based guidance. By comparing the noise distribution differences between real and generated images after inversion, the method optimizes a residual network to identify image authenticity, and enhances both accuracy and generalization. Additionally, a dataset of 10000 high-quality, multi-category facial images is constructed to address the shortage of available facial data. Experimental results demonstrate that the proposed algorithm achieves 98.7% accuracy in detecting generated facial images and outperforms existing methods, enabling effective detection across diverse facial images.

To address the missed detection of small prohibited items in X-ray security inspection images due to low pixel ratios and ambiguous features, this study proposes a detection algorithm based on fine-grained feature enhancement. First, we design a learnable spatial reorganization module that replaces traditional downsampling operations with dynamic pixel allocation strategies to reduce fine-grained feature loss. Second, we construct a dynamic basis vector multi-scale attention module that adaptively adjusts the number of basis vectors according to feature entropy, enabling cross-dimensional feature interaction. Finally, we introduce a 160×160 high-resolution detection head that reduces the minimum detectable target size from 8 pixel×8 pixel to 4 pixel×4 pixel. Experimental results demonstrate that on the SIXray, OPIXray, and PIDray datasets, our algorithm achieves mean Average Precision (mAP) values of 93.3%, 91.2%, and 86.9%, respectively, showing improvements of 1.2%?3.1% over the YOLOv8 baseline model while only increasing the parameter count by 0.2×106.

To address the challenge of balancing model complexity and real-time performance in unmanned aerial vehicle (UAV)-based forest fire smoke detection, this paper proposes a lightweight and efficient multi-scale detection algorithm based on an improved YOLOv8n architecture, named LEM-YOLO (Lightweight Efficient Multi-Scale-You Only Look Once). First, a lightweight multi-scale feature extraction module C2f-IStar (C2f-Inception-style StarBlock) is designed to reduce model complexity while enhancing the representation capability for images of flames and smoke that exhibit drastic scale variations. Second, a multi-scale feature weighted fusion module (EMCFM) is introduced to mitigate the information loss and background interference of densely packed small targets during the feature fusion process. Third, a lightweight shared detail-enhanced convolutional detection head (LSDECD) is constructed using shared detail-enhanced convolutions to reduce computational load and improve the model's ability to capture image details. Finally, the complete intersection over union (CIoU) loss function is replaced by the powerful intersection over union (PIoU) loss function to improve the convergence efficiency in handling non-overlapping bounding boxes. Experimental results indicate that, compared with the baseline model, the improved model achieves increases of 1.9 percentage points and 2.5 percentage points in mean average precision at intersection over union of 0.5 and 0.5 to 0.95, respectively, while reducing model parameters by 31.6% and computational cost by 27.2%, and the processing speed reaches 57.82 frame/s. The improved model achieves an effective balance between lightweight design and detection performance.

Existing methods for few-shot fine-grained image classification often suffer from feature selection bias, making it difficult to balance local and global information, which hinders the accurate localization of key discriminative regions. To address this issue, a multiscale joint distribution feature fusion metric model is proposed in this paper. First, a multiscale residual network is employed to extract image features, which are then processed by a multiscale joint distribution module. This module computes the Brownian distance covariance between the different scales, thereby integrating both local and global information to enhance the representation of important regions. Finally, an adaptive fusion module with attention mechanism based on global average pooling and Softmax weight normalization is used to dynamically adjust feature contributions and maximize the impact of key region features on the classification results. Experimental results indicate that classification accuracies of 87.22% and 90.65% are achieved on the 5-way 1-shot task of the CUB-200-2011 and Stanford Cars datasets, respectively, demonstrating significant performance in few-shot fine-grained image classification tasks.

To address the issue of noise interference in ultraviolet (UV) images, the denoising method based on Facet filtering and local contrast is proposed to enhance image quality for corona detection. First, Facet filtering with a small kernel is applied to the UV image to enhance target pixels and suppress high-intensity noise. Subsequently, a three-layer sliding window traverses the UV image to calculate the local contrast at three levels, generating a saliency map. Finally, noise is removed through threshold segmentation. Experimental results show that proposed method significantly improves UV image quality by enhancing the distinction between salient regions and background, thereby facilitating corona detection.

Solidago canadensis L. is a priority invasive species under strict management in China. Effective detection, identification, and localization are essential for its control. To rapidly and accurately identify Solidago canadensis L. in complex natural environments, a lightweight detection model, YOLOv8-SGND, is proposed as an improvement over the YOLOv8 model to address issues of large parameter size and high computational complexity. Based on the YOLOv8 model's head, the new model designs a lightweight network structure and introduces a shared group normalization detection (SGND) head to enhance both localization and classification performance while significantly reducing the parameter count. First, batch normalization is replaced with group normalization in the convolutional blocks. Second, convolution parameters are shared between two convolutional blocks after feature aggregation to reduce the parameter volume and computational complexity. To improve the model's robustness and optimize the balance of errors in the bounding-box coordinates, the original complete intersection over union (CIoU) is replaced by wise IoU (WIoU) v3 as the bounding-box loss function. Finally, while using shared convolution, the Scale layer is applied to adjust the bounding-box predictions, thus ensuring consistency with the input image dimensions and feature-map sizes across different detection layers. Detection experiments on real-world data show that the proposed YOLOv8-SGND model achieves mAP@0.50 and mAP@0.50∶0.95 of 98.8% and 79.6% (mAP is mean average precision), respectively, which represent improvements of 2.8 and 6.0 percentage points over the original YOLOv8 model, respectively. Additionally, the model parameters and floating-point operations are reduced by approximately 21.4% and 1.6 Gbit, respectively. Compared with mainstream object-detection algorithms such as YOLOX, Faster R-CNN, Cascade R-CNN, TOOD, and RTMDet, YOLOv8-SGND outperforms them in all precision evaluation metrics. The proposed method offers high detection accuracy and inference speed, thus can provide technical support for the lightweight and intelligent recognition of invasive species.

To address the inefficiency of manual color difference classification, this study proposes a multi-strategy improved black-winged kite optimized extreme learning machine (MBKA-ELM) model for dyeing fabric color difference classification. First, as the random initialization of hidden layer weights and biases in extreme learning machine (ELM) algorithms can lead to uneven model training and algorithm instability, the black?‐?winged kite (BKA) optimization algorithm is employed to optimize these key parameters. Second, the incorporation of mirror reverse learning, BKA circumnavigation foraging, and longitudinal and transverse crossover strategies enhances both the convergence speed and global optimization ability of the algorithm. Finally, the MBKA-ELM model is constructed for dyeing fabric color difference classification, achieving an accuracy rate of 98.8% and confirming the feasibility of using this model compared to conventional color difference calculation formulas for detection. Comparative experiments demonstrate the stabilization of the MBKA-ELM model after 10 iterations with a higher classification accuracy than comparable models. Compared with the traditional ELM and optimized models—black-winged kite optimized ELM, spotted emerald optimized ELM, Guanhao pig optimized ELM, cougar optimized ELM, and snake optimized ELM—the classification accuracy improves by 13%, 3.4%, 1.4%, 5%, 4.2%, and 3%, respectively. The proposed model demonstrates superior convergence speed and classification accuracy.

During the photovoltaic inspection of drones, it is necessary to obtain the position information of the photovoltaic panels in the image to determine the fault location of photovoltaic modules. The accuracy of the position and orientation system (POS) data transmitted by small drones is low because of their technical limitations, making it difficult to use them for photovoltaic panel positioning in small drone images. This paper proposes an automatic positioning method for photovoltaic panels in drone images to address this issue. Based on the drone images and the geographical coordinates of the photovoltaic panels within the inspection area, the actual location of the photovoltaic panels within the image is determined, providing accurate location information for troubleshooting. First, the proposed method establishes a coordinate transformation model and its unknown parameters based on the projection relationship between the object and image. It determines the parameter range based on the shooting conditions, selects multiple sets of parameters to determine the geographical coordinates of the photovoltaic panels within the image, and compares these with the mean square error of the back-projection difference of the photovoltaic panels within the area as a loss value. Next, it optimizes the parameters by comparing multiple sets of loss values and verifies them using overlapping images. Finally, based on the optimal parameters, coordinate conversion is performed to achieve automatic positioning of the photovoltaic panel image. In two experiments using more than 14,000 fault boards, the positioning accuracy of the proposed method reaches 97.78% and 98.54%, which increases by 13.06 percentage points and 12.96 percentage points, respectively, compared to the positioning method based on POS data. It also overcomes the influence of changes in the shooting conditions, thereby verifying the accuracy and robustness of the proposed method.

To address the issues of inferior image quality, uneven lighting, and blurred details in low-light environments that result in low detection accuracy, this study proposes a night-time detection model named LowLight-YOLOv8n, which is an improved version of YOLOv8n. First, a low-light image enhancement network named Retinexformer is introduced before convolutional feature extraction in the Backbone network, thus improving the visibility and contrast of low-light images. Second, conventional convolution operations are replaced with RFCAConv in both Backbone and Neck networks, where convolution kernel weights are adjusted adaptively to solve the issue of shared parameters in conventional convolutions, thus further enhancing the model's feature extraction and downsampling capabilities. Subsequently, a new C2f_UniRepLKNetBlock structure is formed by combining the large convolution kernel architecture of UniRepLKNet with the C2f module of the Neck network, thereby achieving a larger receptive field that encompasses more areas of the image with fewer convolution operations, thus allowing a broader range of contextual information to be aggregated, and more potential target information to be captured in low-light images. Finally, a new bounding-box regression loss function named Focaler-CIoU is adopted, which focuses on the detection of difficult samples. Experimental results on the ExDark dataset show that, compared with the baseline model YOLOv8n, LowLight-YOLOv8n improves the mAP@0.5 and mAP@0.5∶0.95 metrics by 6.8% and 5.8%, respectively, and reduces the number of parameters by 0.09×106.

The accurate identification and localization of tilted droplets is a key preprocessing link for achieving a high-precision measurement of the dynamic contact angle. For the problems of low detection accuracy of traditional algorithms and excessive hardware occupancy of deep learning-based target detection algorithms, this paper proposes Light-YOLOv8OBB, a lightweight tilted droplet detection and localization model based on the improved YOLOv8 algorithm. First, this paper designs a C2f-light convolutional structure to lighten and improve the backbone network. Second, the Slim-Neck design paradigm is introduced into the neck network to further lighten the network model. The convolutional attention mechanism module is added to strengthen the model's ability to detect small target objects. Experiments on a homemade droplet dataset and analysis of the results reveal that our algorithm can balance model performance and detection efficiency well. The mean average precision (mAP@0.5:0.95) value of proposed algorithm reaches 76.7%, an improvement of 7.5 percentage points compared with the base model, whereas the number of parameters and computation decrease by 38.7% and 34.9%, respectively, compared with the base model, and the inference time is only 16.1 ms on NVIDIA GeForce MX250.