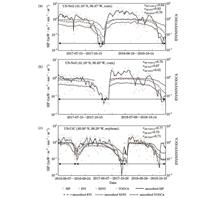

ObjectiveSolar-induced chlorophyll fluorescence (SIF), a byproduct of photosynthesis, exhibits a strong correlation with electron transport in the photosystem and functions as a reliable indicator of vegetation photosynthetic activity. However, its broad application in global productivity assessments, vegetation growth monitoring, and environmental stress detection remains constrained by data sparsity and insufficient long-term time series. To address these limitations, research focusing on SIF prediction through multiple explanatory factors has gained prominence. Studies incorporating multi-source explanatory factors have partially addressed issues concerning spatial discontinuity and low resolution of SIF products from atmospheric satellite sensors. However, a significant challenge persists: optical remote sensing imaging’s inherent susceptibility to cloud reflection or absorption results in lost ground-level information. Consequently, optical remote sensing-based vegetation indices cannot retrieve information from cloud-obscured areas, compromising SIF prediction accuracy. In contrast to optical remote sensing, microwave remote sensing enable ground-level information retrieval because of microwave penetrating non-precipitating and some precipitation clouds. This characteristic makes microwave remote sensing particularly suitable for continuous monitoring in cloud-prone regions. Microwave remote sensing-derived vegetation indices demonstrate both robust cloud penetration capabilities and reflection of vegetation’s internal physiological characteristics. Therefore, this study introduces a method for SIF prediction based on microwave vegetation indices, facilitating surface information acquisition in cloud-covered areas and enhancing SIF prediction accuracy.MethodsThis study utilizes global ozone monitoring experiment-2 (GOME-2) SIF data from 2013 to 2015, integrating it with MODIS-derived normalized bidirectional reflectance (NBAR), land surface temperature (LST), photosynthetically active radiation (PAR), and vegetation optical depth datasets. A bilinear interpolation or aggregation method is then applied to generate 0.25° grid data for dataset construction. The research implements machine learning algorithms for model training and employs cross-validation for hyperparameter optimization, establishing a microwave vegetation index-based SIF prediction model. Additionally, independent satellite-based observation data, including TROPOMI SIF, OCO-2 SIF, and MODIS gross primary productivity (GPP), were collected. The model’s performance evaluation metrics such as the coefficient of determination (R2) and root mean square error (RMSE) are used to assess differences between predicted results and original satellite data.Results and DiscussionsThe microwave vegetation index-based SIF prediction model developed in this study demonstrates validation results against multi-source satellite SIF and GPP products, as illustrated in Fig. 3, achieving R2 values of 0.921, 0.935, 0.923, 0.875, 0.812, and 0.802. The study compared the effectiveness of microwave and optical vegetation indices for SIF prediction under extreme satellite observation conditions. The experimental results indicate that for each 10% increase in effective cloud coverage, the R2 value of the optical vegetation index-based SIF prediction model decreases by 0.042, 0.041, and 0.031 in 2013, 2014, and 2015, respectively (Fig. 6). In comparison, the R2 values of the proposed model decrease by only 0.025, 0.022, and 0.017 (Fig. 6). The decay rate of R2 values for the proposed model decreased by 43%, indicating that the decay rate of SIF prediction accuracy based on optical remote sensing exceeds that of the presented model by a factor of 1.7 (Table 3).ConclusionsThis study presents an SIF prediction method based on microwave vegetation indices, and the potential for satellite-scale SIF prediction of the method is investigated. The method’s accuracy undergoes quantitative evaluation using SIF/GPP products from multi-source remote sensing satellites in orbit (including GOME-2 SIF, TROPOMI SIF, OCO-2 SIF, and MODIS GPP). The results demonstrate that the microwave vegetation indices-based model achieves an R2 value of up to 0.935. Additionally, compared to optical remote sensing-based SIF prediction models, the microwave-based model exhibits a 43% decay rate with increasing effective cloud coverage. This finding confirms that microwave remote sensing’s superior penetration capability effectively mitigates cloud contamination effects on SIF predictions. However, microwave data typically offer lower spatial resolution than optical data, limiting the spatial detail in SIF predictions. For instance, in the current dataset, the microwave vegetation index has a spatial resolution of 0.25°, whereas the optical vegetation index provides a finer resolution of 0.05°. Consequently, applications requiring high spatial resolution should employ optical vegetation index-based methods for SIF prediction, while areas with substantial cloud cover would benefit from microwave vegetation index-based methods for enhanced accuracy.

ObjectiveOne key point in the preparation of optical fiber surface-enhanced Raman spectroscopy (SERS) probes is the enrichment of metal nanoparticles on the fiber end face. When using self-assembly methods to enrich metal nanoparticles, it is necessary to soak the fiber in a solution to deposit the metal particles, which requires a relatively long time, typically 1?2 h. In addition, photolithography methods involve high equipment costs. To reduce both time and experimental costs, we propose the use of laser-induced metal deposition technology. Laser-induced metal deposition, a method that has become relatively popular in recent years, enables rapid and controllable enrichment of metal particles on the fiber end face. To address the fragility of tapered fibers, a more robust spherical-tip fiber is fabricated. The spherical-tip structure overcomes the evanescent field limitations of traditional planar optical fibers and achieves synergistic optimization of spatial light field compression and electromagnetic enhancement through curvature tuning. By controlling the gradient distribution of silver nanoparticles during the laser-induced deposition process, an Ag NP aggregation zone is formed at the apex of the sphere, effectively increasing the density of hot spots.MethodsIn this paper, spherical-tip optical fibers with controllable radii are prepared using a fusion splicer via a melting process. Fibers with radii of 70, 80, 90, and 100 μm are fabricated by adjusting the discharge time and intensity. Uniformly shaped silver nanoparticles are synthesized using a chemical reduction method. A 532 nm laser is then introduced into the prepared spherical-tip fibers, and via laser-induced deposition methods, silver nanoparticles are enriched at the fiber tip, forming the spherical-tip fiber-optic SERS probe. Scanning electron microscopy (SEM) is used to characterize the morphology and size of the probes with different tip radii. A confocal Raman microscope is employed to optimize the Raman characteristic parameters (radius R, cycle number N, and evaporation time t2) and to perform Raman measurements. Moreover, COMSOL software is used to model and simulate the spherical fiber tip, and radius parameters are simulated and analyzed.Results and DiscussionsWe demonstrate that spherical-tip fibers with controllable radii can be prepared via a simple melting method (Fig. 1). The spherical-tip fiber-optic SERS probe shows favorable Raman performance. The maximum electric field at the tip increases initially and then decreases as the radius increases. At a radius of 80 μm, the maximum electric field intensity reaches 27 V/m (Fig. 3). Through optimization of R, N, and t2, the Raman response of the spherical-tip fiber is enhanced (Fig. 4). The optimized probe achieves a detection limit for R6G as low as 10-10 mol/L. Tests with multiple fibers demonstrate that the prepared optical fiber SERS probe has good stability, with a relative standard deviation (dRSD) of 12.2%. In addition, the probe detects multiple molecules (MG, CV, R6G) and real-world molecules (uric acid), confirming its practical application (Fig. 5).ConclusionsWe present a high-performance SERS probe based on a spherical-tip optical fiber. Efficient fabrication is achieved by combining the melting method with a simplified laser-induced nanoparticle self-assembly process. The probe demonstrates excellent sensitivity, achieving a detection limit as low as 10-10 mol/L and strong enhancement capability, with an analytical enhancement factor of 2.07×108 for R6G detection. The spherical geometry enhances the coupling between the localized surface plasmon resonance (LSPR) of silver nanoparticles and the light field through total internal reflection, increasing both light field energy density and the distribution intensity of hot spots. The probe also demonstrates good stability (dRSD=12.2%) and effective detection of malachite green, crystal violet, and uric acid, validating its utility in multiplexed analysis under complex conditions. Future work will focus on two directions: 1) development of a controllable self-cleaning probe structure to improve long-term stability and reusability via surface chemical modification or photocatalytic design; 2) optimizing the laser-induced nanostructure assembly process by integrating a machine learning-driven parameter control strategy to push the limits of single-molecule detection and expand its application in in vivo biosensing.

ObjectiveThe p-n junction ultraviolet (UV) photodetectors based on wide bandgap semiconductor materials demonstrate advantages including low energy consumption, rapid response, and high responsivity, enabling applications in fire warning, ozone detection, and missile tracking. Gallium oxide (Ga2O3), a next-generation semiconductor material, exhibits excellent thermal stability and an optical bandgap ranging from 4.4 to 5.2 eV, enabling strong UV light absorption. Its inherent oxygen defects provide n-type conductivity properties, although achieving stable p-type conductivity remains a technical challenge. Gallium oxide (GaN), another wide bandgap material, exhibits high carrier mobility and superior thermal stability, with well-established p-type doping technology. The combination of Ga2O3 and GaN forms a heterojunction interface characterized by minimal lattice mismatch and low conduction band offset. These properties establish Ga2O3/GaN heterojunction as an optimal material for UV photodetector fabrication. While significant advances have been achieved in aspects of the preparation and UV detection performance of porous Ga2O3/GaN heterojunctions, the oxidation mechanism of various porous GaN films and their influence on heterojunction detection performance remain unclear, which is essential for developing high-performance photodetectors. This research initially employs UV-assisted electrochemical etching to fabricate porous GaN films in different electrolytes, followed by high-temperature oxidation to produce porous Ga2O3/GaN heterojunctions. The study compares and analyzes the oxidation mechanism of different porous GaN films and the detection performance of Ga2O3/GaN heterojunctions.MethodsThe p-GaN epitaxial wafer is initially cut into 10 mm×5 mm segments. These segments undergo sequential ultrasonic cleaning in isopropanol, acetone, and deionized water for 15 minutes each, followed by nitrogen gas drying. The cleaned p-GaN film serves as the anode, with a platinum plate as the cathode. Under UV light irradiation, porous GaN films are prepared through 10-minute etching at 10 V using NaCl, NaNO3, and NaOH solutions as electrolytes. The three porous GaN films undergo thermal oxidation in a quartz tube furnace for 120 minutes at 900 ℃, maintaining an oxygen flow rate of 1.5 L/min. Following oxidation and furnace cooling, the porous Ga2O3/GaN heterojunctions are completed. A portion of the Ga2O3 film is removed using hot phosphoric acid to expose the underlying GaN layer, and circular Ag/In contact electrodes are deposited on the Ga2O3 and GaN film areas via DC magnetron sputtering, completing the porous Ga2O3/GaN heterojunction detector (Fig. 1). Scanning electron microscopy characterizes the microstructures of the porous GaN films and porous Ga2O3/GaN heterojunctions. X-ray diffractometer analysis examines the crystalline structures of the porous Ga2O3/GaN heterojunctions. Room-temperature Raman spectral measurements utilize a Raman spectrometer. An Ultraviolet-Visible-Near Infrared Spectrophotometer tests the optical properties, while a semiconductor parameter analyzer collects and analyzes the detector’s electrical signals.Results and DiscussionsThe Ga2O3 films formed through thermal oxidation of porous GaN films maintain a three-dimensional porous structure, with an irregular interface between the Ga2O3 and GaN films due to lattice structure differences. The porous Ga2O3/GaN heterojunctions prepared via etching in NaCl, NaNO3, and NaOH solutions followed by oxidation exhibit average pore sizes of 28.6, 36.7, and 41.3 nm, respectively, with corresponding Ga2O3 layer thicknesses of 269, 327, and 502 nm. The enhanced thickness of the Ga2O3 film correlates with the increasing pH value of the etching solution, where holes and OH? ions jointly facilitate the GaN film oxidation process, resulting in porous GaN films with larger pore sizes and higher pore densities. This configuration provides additional oxidation sites, yielding a thicker Ga2O3 layer (Fig. 2). XRD and room-temperature Raman spectra reveal characteristic peaks corresponding to β-Ga2O3 films, confirming their formation during thermal oxidation. The GaN (001) plane predominantly transforms into the β-Ga2O3 (-201) plane during this process. Peak intensity variations reflect the thickness changes of the Ga2O3 layer in the Ga2O3/GaN heterojunction (Fig. 3). The porous Ga2O3/GaN heterojunction prepared through NaOH solution etching and subsequent oxidation demonstrates enhanced UV light absorption capacity, attributed to its larger pore size and complex microcavity structure, which effectively restrict photon escape and extend the light transmission path (Fig. 4). This heterojunction exhibits superior light absorption capability and increased Ga2O3 layer thickness, resulting in enhanced photocurrent while maintaining low dark current. Under 0 V bias, the heterojunction maintains a dark current of 0.22 nA. The photo-to-dark current ratio under 254 nm UV illumination achieves 10520, with a responsivity of 108.4 mA/W, an external quantum efficiency of 52.9%, a detectivity of 1.36×1012 Jones, and a response time of 0.35 s/0.13 s. The device exhibits consistent stability during continuous on-off light cycling (Figs. 6 and 7).ConclusionsIn summary, porous Ga2O3/GaN heterojunctions were successfully fabricated on porous GaN films through etching with NaCl, NaNO3, and NaOH solutions utilizing thermal oxidation methodology. The heterojunction prepared via NaOH solution etching and subsequent oxidation demonstrated optimal detection performance. Under 0 V bias, the device achieved a dark current of 0.22 nA, a photo-to-dark current ratio exceeding 104 under 254 nm UV illumination, a responsivity of 108.4 mA/W, an external quantum efficiency of 52.9%, a detectivity of 1.36×1012 Jones, and a response time of 0.35 s/0.13 s. The exceptional detection performance stems from the large pore size and complex microcavity structure of the heterojunction, which effectively restrict photon escape and extend the light transmission path, enhancing UV light absorption. Furthermore, the porous GaN film etched in NaOH solution exhibits higher average pore size and pore density, providing additional oxidation sites and yielding a thicker Ga2O3 layer post oxidation. The increased intrinsic resistance of the thicker Ga2O3 layer reduces dark current, enhancing overall device performance. This research contributes significantly to the advancement and application of high-performance Ga2O3/GaN heterojunction photodetectors.

ObjectiveAs a non-contact and highly sensitive monitoring method, optical fiber curvature measurement is widely applied in structural health monitoring, environmental sensing, and other fields. However, traditional optical fiber speckle pattern analysis methods face issues of insufficient information extraction and low measurement accuracy when dealing with large curvatures or complex environments, which limits the widespread application of optical fiber sensing technology. Previous research has explored the use of single-mode to multimode fiber structures, as well as the optimization of image processing algorithms, but these methods have relatively high requirements for camera resolution and light sources. Deep learning algorithms can improve anti-interference capability and prediction accuracy when they are introduced into optical fiber sensing technology. We proposed a high-precision curvature measurement method based on the VggNet 16 model and specialty optical fiber speckle patterns. By designing a single-mode-multimode-specialty optical fiber structure and utilizing deep learning technology, the accuracy and anti-interference capability of optical fiber curvature measurement have been significantly improved.MethodsFirst, a single-mode-multimode-specialty optical fiber structure is designed, in which the multimode fiber increases the mode field diameter, while the dual-core single-side hole fiber excites asymmetrically distributed modes, enhancing the information content of speckle patterns and improving curvature measurement accuracy. The single-mode fiber serves as the signal input channel, ensuring high fidelity of the signal source and avoiding intermodal dispersion. The multimode fiber supports multiple transmission modes, laying the foundation for complex interactions. The specialty fiber section promotes optical field redistribution and strong coupling between modes, enhancing the complexity of the light spot morphology and increasing the response sensitivity to stress and curvature changes. Then, an automated experimental platform is established. A device is constructed that automatically provides curvature variations, automatically changes the degree of fiber bending, and saves the speckle patterns along with curvature labels. The experimental platform consists of a coherent light source, fiber optic sensing structure, complementary metal-oxide-semiconductor (CMOS) camera, three-dimensional displacement stage, stepper motor, and host computer. A 632.8 nm laser from a He-Ne laser source is coupled into the single-mode fiber through a collimating mirror and then coupled into the multimode fiber through mode mismatch, exciting multiple conduction modes. After passing through the multimode-specialty fiber fusion point, the various modes are coupled into the asymmetric dual-core off-center hole fiber, where coupling and interference occur. The superimposed optical field is focused and magnified by a lens, and finally, the speckle pattern is captured by a CMOS camera. After collecting and processing a large dataset, we conduct tests using the VggNet 16 classification model. Analysis of prediction errors reveal that the model will misidentify speckle patterns with similar curvatures, rather than making chaotic or random misjudgments, indicating that the speckle patterns presented by this fiber structure have a correlation when curvatures are similar. Subsequently, the dimension of the final output layer of the VggNet 16 model is adjusted to train a regression model, and its performance and accuracy are compared with traditional algorithms.Results and DiscussionsThe combination of specialty optical fiber and the VggNet 16 model achieves extremely high prediction accuracy on the test set, with 100.00% of samples having an error less than 0.1 m-1, 97.03% of samples having an error less than 0.07 m-1, and 96.04% of samples having an error less than 0.05 m-1. The mean square error (MSE) of the prediction is 5.877×10-4 m-2,and the root mean square error (RMSE) is 2.424×10-2 m-1. The prediction results are then compared with typical structures and traditional algorithms. First, Fig. 14 reveals that traditional algorithms will make misjudgments in cases where timestamps are similar. Fig. 15 compares typical optical fiber structures with the structure designed in this paper, showing that the addition of specialty optical fiber improves this phenomenon, specifically by reducing the numerical differences in feature indicators at the same curvature. Fig. 18 compares the VggNet 16 model with the ResNet 50 model, and the results indicate that VggNet 16 performs better under certain error thresholds, especially when the error is less than 0.05 m-1 and 0.1 m-1, where VggNet 16 has higher accuracy. Therefore, the superiority of VggNet 16 in curvature sensing has been verified, and the advantages of the optical fiber structure have been further enhanced on the foundation of deep learning.ConclusionsThe stress measurement sensing method for specialty optical fiber structures based on the VggNet 16 model proposed in this paper can improve the accuracy and stability of curvature measurement. By comparing the performance between different optical fiber structures, as well as various traditional algorithms and deep learning algorithms, this method has been verified to demonstrate superior measurement performance across different curvature ranges and achieve high-precision regression prediction of curvature values. Through the use of various gradient-weighted class activation mapping techniques for activation area visualization analysis, the key regions focused on by the VggNet 16 model during the prediction process are revealed, enhancing the model’s interpretability and reliability. Simultaneously, the designed single-mode-multimode-specialty optical fiber structure effectively increases the information content of the speckle images, improving the performance of curvature measurement. The automatic curvature setting device designed in the experiment greatly reduces experimental costs and provides support for future research on optical fiber curvature detection methods. Future research can further explore model optimization strategies and the application of these technologies in other related fields.

ObjectiveHigh-sensitivity strain sensors play a crucial role in various fields such as aerospace engineering, structural health monitoring, human healthcare, and motion tracking. Over the past few decades, a wide range of optical strain sensors have been developed using different methods and structures. However, many of these sensors require costly equipment or specialized optical fibers, increasing both expense and fabrication complexity. In recent years, the Vernier effect has attracted significant attention due to its ability to substantially enhance the sensitivity of various optical fiber sensors. A typical Vernier-effect-based fiber sensor consists of two interferometers with similar free spectral ranges (FSRs). A minor spectral shift in one interferometer leads to a pronounced shift in the superimposed spectrum, which significantly improves the sensitivity by several orders of magnitude. Various Vernier-effect-based sensor structures have been proposed for measuring physical parameters such as temperature, refractive index, displacement, and strain. However, traditional fiber-optic sensors often rely on broadband light sources, resulting in low optical transmission power and wide 3 dB bandwidths, which compromise sensing accuracy. If a light source with higher power and a narrower 3 dB bandwidth is used, sensing accuracy can be significantly improved. Fiber laser sensors offer a promising solution to these limitations. In such systems, the sensing element acts as a filter, and its central wavelength determines the operating wavelength of the laser. Changes in external conditions lead to a shift in the central wavelength of the filter, which in turn alters the operating wavelength of the laser. While the integration of the Vernier effect with fiber laser sensing has enhanced the performance of strain sensors, challenges such as limited stability and high temperature cross-sensitivity remain. To address these limitations, we propose a high-sensitivity fiber laser strain sensor based on a Vernier effect filter.MethodsThe core of the proposed sensor is a Vernier-effect-based filter composed of two cascaded Mach-Zehnder interferometers (MZIs), where one functions as the reference arm and the other as the sensing arm. The FSRs of the two MZIs are matched by carefully adjusting the length difference between the arms, ensuring the generation of a Vernier effect. When external stress causes a change in the optical path of the sensing MZI, the laser’s central wavelength experiences a significant shift, enabling high-sensitivity strain detection.Results and DiscussionsThe sensing MZI is stretched in increments of 72 nm, corresponding to a strain change of 0.72 με. As strain increases, the peak wavelength of the laser shifts accordingly. Within the strain range of 0?3.6 με, the sensor exhibits a sensitivity of 4.15 nm/με (Fig. 6). In repeatability tests, the maximum deviation in strain sensitivity across four experiments is 0.023 nm/με (Fig. 6). During a 1-h stability test, the wavelength drift remains between 0.04 nm and 0.06 nm (Fig. 7). After temperature compensation, the temperature sensitivity is 0.097 nm/℃, and the temperature cross-sensitivity is 0.023 με/℃(Fig. 9).ConclusionsWe present a high-sensitivity strain fiber laser sensor based on a Vernier effect filter, which comprises two cascaded MZIs serving as reference and sensing arms. The FSR of the two MZIs is closely matched to generate a Vernier effect. By integrating the Vernier effect filter into a ring fiber laser, the sensor achieves a high strain sensitivity of 4.15 nm/με over a range of 0?3.6 με. The system also shows excellent repeatability, with a maximum strain deviation of 0.023 nm/με across four trials, and good stability, with a wavelength drift of only 0.04?0.06 nm over 1 h. After temperature compensation, the temperature sensitivity reaches 0.097 nm/℃, and the temperature cross-sensitivity is 0.023 με/℃. To the best of our knowledge, this sensor demonstrates the highest strain sensitivity among reported MZI-based fiber sensors. By leveraging the inherent advantages of fiber lasers, the proposed sensor exhibits excellent repeatability and stability, making it highly suitable for applications across a wide range of fields.

ObjectiveUnderwater wireless optical sensor network (UWOSN) represents a vital technology for high-speed, low-latency underwater data transmission, supporting applications from environmental monitoring to underwater exploration. The performance of UWOSN critically depends on the accuracy of node localization. The underwater environment introduces distinct challenges, including node mobility due to ocean currents, severe signal attenuation, and energy constraints, which complicate precise localization. Although existing dynamic localization algorithms have advanced the field, they frequently struggle to address the unpredictability of node movement and environmental effects on signal propagation. This paper presents a novel dynamic localization algorithm based on optimal anchor node combination selection (DLOS) to address these challenges. The proposed DLOS algorithm combines advanced optimization techniques and machine learning to improve localization accuracy and success rate in dynamic underwater conditions.MethodsThe proposed DLOS algorithm incorporates four key innovations. First, it introduces a comprehensive fitness function to evaluate anchor node combinations by simultaneously considering three critical factors: position uncertainty, residual energy, and geometric coplanarity. This multi-criteria approach ensures the selection of anchor nodes that are both reliable and energy-efficient. Besides, the proposed DLOS algorithm employs an improved Lévy flight-based grey wolf optimizer (LGWO) to efficiently search for the optimal anchor combination. The LGWO is enhanced with a nonlinear distance control parameter and a good-point-set initialization method to improve convergence speed and avoid local optima. Additionally, the proposed DLOS algorithm incorporates a random forest-based dynamic ranging model to handle time-varying parameters such as trajectory angle and optical signal attenuation. This model is trained on extensive datasets to predict accurate distance measurements despite environmental fluctuations. To further enhance performance, by employing a hybrid localization approach that integrates time difference of arrival (TDOA) and received signal strength (RSS) ranging techniques, the proposed DLOS algorithm effectively mitigates localization errors induced by clock asynchrony. Based on the above key innovative methods, the proposed DLOS algorithm effectively increases localization accuracy and localization success rate in despite of node mobility.Results and DiscussionsThe performance of the proposed localization algorithm DLOS is verified by simulations. In order to visually validate the performances of the proposed DLOS algorithm, the M-RSS algorithm, the LLSH algorithm, the RSS/KF algorithm, and the DLNS algorithm are selected as the compared algorithms. The proposed DLOS algorithm shows an obvious improvement in localization accuracy compared to the other four algorithms across varying numbers of anchor nodes (Fig. 7). Evidently, the DLOS algorithm leverages its optimal-anchor-combination selection mechanism for global search and rapid convergence, identifying the most suitable anchor combination. This significantly shortens localization time and reduces localization errors. As the ranging noise variance gradually increases from 0 to 1, the proposed DLOS algorithm outperforms the other four compared algorithms in RMSE (Fig. 8). In UOWSNs, both anomalous ranging values and amplified noise variance can affect the overall accuracy of position estimation. The DLNS and the DLOS algorithms consider the impact of noise on distance measurements. They utilize a random forest model to process each input data instance, ultimately yielding excellent distance values. Specifically, the localization accuracy of the DLOS algorithm, which incorporates the anchor node selection mechanism, significantly surpasses that of the DLNS algorithm. This is mainly because the anchor node selection mechanism can alleviate the generation of abnormal distance measurement. As the node communication radius varies, the proposed DLOS algorithm maintains lower RMSE compared to the other four algorithms (Fig. 9). This is attributed to the fact that the anchor node selection mechanism in the DLOS algorithm considers both remaining energy and node mobility, thereby reducing the impact of increased energy consumption caused by enlarging node communication radius on localization accuracy. The proposed DLOS algorithm exhibits the lowest RMSE with diverse attenuation coefficient compared to the other four algorithms (Fig. 10). This phenomenon mainly results from the fact that the mentioned algorithms all employ the RSS ranging technology, making their localization accuracy heavily depend on the extent of underwater signal path loss. As for the DLOS and DLNS algorithms, they use the variation of attenuation coefficient as input to the random forest model and train an effective model to predict the precise distance values between nodes. Additionally, the DLOS and DLNS algorithms integrate both RSS and TDOA ranging, alleviating the limitations of solely relying on RSS ranging, resulting in smoother error curves. The proposed DLOS algorithm outperforms the other four algorithms in localization success rate with different number of simulation times (Fig. 11). This can be attributed to the fact that the DLOS algorithm incorporates an optimal-anchor-combination selection mechanism based on an improved LGWO and employs the random forest model to reduce dynamic ranging errors. This enables the DLOS algorithm to maintain the highest localization success rate in the five algorithms.ConclusionsThis paper presents a dynamic localization algorithm based on optimal anchor combination selection, namely DLOS, for UOWSN. Through the implementation of a novel optimal anchor node combination strategy utilizing a comprehensive objective function and an improved LGWO, the proposed DLOS algorithm enhances localization accuracy and success rate. Furthermore, considering the stochastic mobility of underwater nodes and optical wave attenuation characteristics, a dynamic localization model based on random forest is incorporated to improve accuracy and success rate. The proposed DLOS algorithm also implements a hybrid localization strategy based on TDOA and RSS ranging approaches to minimize localization errors caused by clock asynchrony. Simulation results confirm that the proposed DLOS algorithm achieves superior performance in localization accuracy and success rate compared to the four reference algorithms.

ObjectiveReconfigurable broadband linear frequency-modulated (LFM) signals are essential for modern radar systems to achieve high-resolution target detection and reliable operation in complex electromagnetic environments. Existing photonic approaches encounter significant limitations: conventional electronic methods (high-speed AWGs, ≥256 GSa/s) face difficulties balancing >10 GHz bandwidth with real-time parameter tuning, while photonic techniques such as spectral stitching or photon-assisted frequency multiplication necessitate hardware reconfiguration, restricting software-defined adaptability. Current solutions typically depend on expensive ultra-high-speed electronics or complex optical components, impeding practical implementation. Modern applications—including cognitive radar for dynamic spectrum sharing, adaptive electronic warfare for anti-jamming, and joint spectrum sensing—require agile waveform control across bandwidth, center frequency, and time-frequency patterns, which current methods cannot adequately provide. This study introduces a novel optical LFM generation scheme utilizing fiber nonlinearity and low-speed AWGs (≤10 GSa/s). Through self-phase modulation (SPM) in highly nonlinear fibers (HNLFs), broadband signal processing transitions to the optical domain, enabling software-defined dynamic adjustment of key parameters—bandwidth (4?8 GHz), center frequency (20?30 GHz), and waveform duration—through basic electrical signal modifications. This research presents a cost-effective, simplified hardware solution bridging photonic processing and electronic stability. It enables next-generation radar systems with real-time reconfigurability, suitable for civilian-military integrated applications including 5G/6G beamforming, autonomous vehicle perception, and intelligent electronic countermeasures.MethodsThe proposed system comprises two optical paths (Fig. 1). In the upper path, a low-speed AWG generates electrical shaping signals to intensity-modulate an optical carrier via a dual-drive Mach-Zehnder modulator (DD-MZM). The modulated optical signal is amplified and fed into a highly nonlinear fiber (HNLF) for spectral broadening via SPM. The lower path employs a dual-parallel MZM (DP-MZM) to generate a frequency-shifted optical carrier. The spectrally broadened signal and the shifted carrier are then heterodyned at a photodetector to produce the LFM waveform. Key parameters—bandwidth, center frequency, and duration—are controlled by adjusting the AWG’s driving signal, peak optical power, and frequency shift. Simulations in MATLAB and OptiSystem validate the scheme’s performance under varying conditions (Table 2).Results and DiscussionsSimulations demonstrate that a 10 GSa/s AWG produces an 8 GHz bandwidth LFM signal centered at 30 GHz with high linearity [Fig. 4(f)]. Lower sampling rates (1 GSa/s) reduce frequency linearity [Fig. 4(c)], while higher rates (20 GSa/s) provide no substantial improvement [Fig. 4(i)]. Modifying the peak optical power (1?2 W) or AWG signal period (5?10 ns) enables bandwidth tuning from 4 GHz to 8 GHz [Figs. 5(c)?(f)]. Adjusting the frequency shift modifies the center frequency [20 GHz to 30 GHz, Fig. 5(a)]. Through specialized electrical driving signals, the system generates LFM waveforms with time-frequency diagrams representing arabic numerals 1?5 [Fig. 6(d)]. This illustrates the system’s capability for arbitrary waveform design, essential for adaptive radar and electronic warfare applications.ConclusionsThis study presents a highly reconfigurable LFM signal generation scheme based on optical nonlinear effects. By exploiting SPM in HNLF and low-speed AWGs, the method achieves continuous control over bandwidth (4?8 GHz), center frequency (20?30 GHz), and waveform envelope without hardware reconfiguration. Key advantages include reduced reliance on high-speed electronics, software-defined flexibility, and compatibility with mainstream radar frequency bands. The scheme offers a cost-effective solution for software-defined radar systems, cognitive electronic warfare, and adaptive spectrum sensing, bridging the gap between photonic processing and electronic stability. Future work will focus on experimental validation and extending the bandwidth beyond 10 GHz.

ObjectiveThe rapid advancement of direct and indirect reconnaissance technologies with wide-area and full-spectrum coverage has exposed limitations in traditional digital camouflage methods. Current camouflage techniques primarily rely on background color distribution, achieving initial concealment but lacking precise target edge feature perception. This deficiency results in visible target contours and inadequate environmental integration, compromising camouflage effectiveness. Consequently, the accurate perception of target edges and generation of seamlessly integrated digital camouflage images has emerged as a critical challenge. This study addresses these limitations by proposing an adaptive digital camouflage generation method based on deep learning. The approach combines the you only look once version 8 (YOLOv8) object detection algorithm with resolution-robust large mask inpainting with Fourier convolutions (LAMA), establishing a comprehensive closed-loop pipeline from target perception to camouflage generation. The effectiveness of the proposed method is evaluated against existing approaches using three objective metrics: structural similarity index (SSIM), peak signal-to-noise ratio (PSNR), and mean squared error (MSE). Experimental results indicate that the proposed method effectively processes target edge regions and generates digital camouflage images that achieve superior background integration.MethodsThis study presents an adaptive digital camouflage image generation algorithm leveraging deep learning capabilities. The algorithm automatically generates digital camouflage images that closely match the surrounding environment based on acquired background images, enabling target concealment in the visible spectrum. The methodology employs the YOLOv8 deep learning model for target object detection and mask generation, establishing the foundation for edge-aware camouflage processing. To overcome limited training data constraints, the YOLOv8 training process incorporates a few-shot learning strategy to enhance detection accuracy. The target mask and background image are subsequently processed through the digital camouflage generation network, which perceives target edge features while extracting background texture and semantic information through deep learning. Furthermore, a single-objective optimization algorithm refines the YOLOv8-generated target mask, facilitating the production of digital camouflage images with enhanced texture, color, and structural consistency with the background.Results and DiscussionsThe performance evaluation of the proposed method involved comparison with six representative baseline methods, all applied to identical target objects (Fig. 7). Traditional approaches demonstrate inadequate perception of target color space information and environmental edge characteristics, resulting in poor spatial localization of color distribution. Consequently, the target region remains visually distinct, yielding low fusion quality in the generated camouflage image. While deep learning-based methods show improved fusion, they are hindered by excessive edge sharpness that compromises camouflage effectiveness. The proposed method successfully addresses target edge regions, producing camouflage images with markedly enhanced background integration. Quantitative assessment employed three established image quality metrics—SSIM, PSNR, and MSE. Higher SSIM and PSNR values, coupled with lower MSE, indicate superior visual similarity and background integration. Statistical analysis of the results, expressed as mean±standard deviation (xMean±xStd), demonstrates that the proposed method achieves an average SSIM increase of 0.028, PSNR improvement of 0.14 dB, and MSE reduction of 1.14, confirming its superior performance. Additional experiments conducted across diverse background scenarios (Figs. 8?10) demonstrate consistent production of high-quality camouflage images, validating the method’s robustness and broad applicability.ConclusionsThis paper presents a deep learning-based optical adaptive digital camouflage generation method to address the limitations of traditional approaches, particularly their inadequate perception of target edge features and subsequent poor background integration. The proposed methodology encompasses two primary stages: target detection and camouflage image generation. The approach implements data augmentation techniques to enhance YOLOv8 detection network generalization, followed by the LAMA image inpainting algorithm for high-quality digital camouflage image generation through target mask optimization. Performance evaluation utilizes objective metrics including SSIM, PSNR, and MSE. Experimental results demonstrate superior performance across all objective evaluation metrics compared to conventional methods, with improvements of 0.028 in average SSIM, 0.14 dB in PSNR, and a reduction of 1.14 in MSE. Additional experiments across varied environmental backgrounds confirm the method’s robustness and general applicability.

ObjectiveThe limited depth-of-field (DoF) in optical imaging systems poses a fundamental challenge in digital photography, making it difficult to capture all scene elements in sharp focus simultaneously. Multi-focus image fusion (MFIF) addresses this limitation by integrating multiple images with varying focus regions into a single all-in-focus image. Traditional MFIF methods typically follow a sequential “match-then-fuse” paradigm, which is susceptible to artifacts caused by imperfect registration, particularly in stereo scenarios with significant disparities. While deep learning-based methods have improved upon traditional techniques, they mostly target monocular cases and often struggle with large-scale content mismatches caused by stereo disparities or dynamic scene changes. In this paper, we tackle the more general and challenging scenario of stereo multi-focus image fusion, where issues such as lens breathing, defocus spread effects (DSE), and inter-view disparities further complicate content alignment and information preservation. The two primary goals are: 1) to achieve robust stereo matching that accommodates large disparities without explicit image registration, and 2) to ensure high-quality fusion that retains both local details and global semantic coherence. By addressing these challenges, the proposed method enhances the applicability of MFIF in critical fields such as microscopic imaging, industrial inspection, and security surveillance, where stereo vision systems are increasingly used but existing fusion methods often fall short.MethodsThe proposed framework incorporates several key innovations to meet the demands of stereo multi-focus fusion. Central to the design is a novel asymmetric network architecture that explicitly separates processing into primary and secondary branches, breaking away from traditional symmetric structures. The primary encoder, based on ResNet-101, extracts fine-grained local details using a multi-scale feature pyramid (Conv 1?Conv 4). Meanwhile, the secondary encoder leverages a Vision Transformer (ViT-B/16) to capture global context by dividing input images into 16×16 patches and encoding them into 768-dimensional token vectors. This dual-path structure enables the model to simultaneously preserve fine details and global coherence. A key innovation is the disparity-aware matching module, inspired by the querying Transformer (Q-Former)’s modality alignment capabilities. This module consists of six stacked matching blocks, each incorporating self-attention and cross-attention layers. Learnable queries facilitate feature alignment between the two branches, supporting stereo matching without explicit registration. The module is trained using a hybrid loss function that combines matching loss and feature reconstruction loss, with differentiated treatment for positive and negative sample pairs. The fusion module adopts cross-attention mechanisms to compare and selectively integrate sharp features from both branches. Unlike the matching module, it treats both streams symmetrically and uses residual learning to blend high-frequency details and inconsistent content. A U-Net-based decoder with skip connections reconstructs the final all-in-focus image. The end-to-end uses a composite loss comprising matching loss, fusion loss, multi-scale reconstruction loss, and a perceptual anti-artifact loss based on the learned perceptual image patch similarity (LPIPS) metric. The training dataset includes synthetic datasets (NYU-D2 and InStereo2K with simulated defocus) and real-world datasets (Lytro and Middlebury 2014), with a balanced emphasis on monocular and stereo tasks.Results and DiscussionsExtensive experiments validate the effectiveness of the proposed method. In monocular fusion tasks using the Lytro dataset, the method achieves state-of-the-art results, with a QAB/F score of 0.7894, a 0.021 improvement over the IFCNN baseline. The normalized mutual information (NMI) score of 1.1034 further confirms its strong capacity for transferring information from input images to the fused output (Table 1). In stereo fusion tasks on the Middlebury 2014 dataset, the method shows remarkable robustness to disparities. The artifact metric (NAB/F) drops to 0.0715, a 0.06 improvement over conventional methods, while maintaining high visual fidelity [visual information fidelity for fusion (VIFF) score of 0.9813]. These results validate the effectiveness of the Q-Former-inspired matching module in handling large content mismatches (Table 2). Qualitative comparisons (Figs. 9 and 10) reveal several key advantages: 1) In regions with abrupt DoF transitions, the method achieves smooth blending without halo artifacts; 2) In regions affected by DSE, it successfully reconstructs textures that other methods blur or miss; 3) In stereo cases with substantial disparity, it preserves sharp details and avoids ghosting effects common in registration-dependent approaches. Ablation studies (Table 3) show that removing the matching module degrades NAB/F by 0.08, confirming its importance for stereo tasks. Likewise, omitting the fusion module leads to a 0.027 drop in QAB/F, affirming its relevance to both monocular and stereo fusion. The asymmetric design also proves more effective than symmetric alternatives in addressing content mismatches.ConclusionsWe present a robust solution for stereo multi-focus image fusion, featuring several novel components. The asymmetric network structure balances local detail preservation with global context modeling. The Q-Former-inspired matching module establishes a new paradigm for handling stereo disparities without explicit registration. The cross-attention fusion mechanism effectively integrates complementary sharp features while suppressing artifacts. Experimental results across multiple benchmarks confirm that the proposed method outperforms existing approaches in both monocular and stereo settings. The framework’s robustness to content mismatches and ability to reconstruct missing details in defocused regions significantly expands the practical applicability of multi-focus fusion technology. Current limitations include computational demands from Transformer components and reduced effectiveness in extreme cases of content mismatch (e.g., full occlusion or severe motion blur). Future work will focus on 1) developing lightweight variants for real-time applications, 2) enhancing adaptability to extreme scene variations, and 3) exploring applications in multi-modal fusion and dynamic scene reconstruction. Overall, the proposed method offers a flexible and high-performance solution for advancing image fusion technologies in both academic and industrial applications.

ObjectivePneumonia represents a respiratory disease with high incidence and mortality rates in childhood. The accurate segmentation of lung computed tomography (CT) images plays a crucial role in early diagnosis and treatment planning. The manual labeling of infected regions, however, is time-intensive and burdensome, significantly increasing radiologists’ workload. Therefore, developing efficient automatic segmentation methods holds substantial practical significance in alleviating medical resource constraints. Current predominant medical image segmentation approaches primarily utilize U-shaped architecture, known for its semantic modeling capabilities. However, the encoder-decoder structure inherently requires multiple down-sampling operations, resulting in the loss of critical spatial structure information and compromising segmentation accuracy. Furthermore, infected regions in childhood pneumonia CT images typically present with scattered and fragmented multifocal distribution patterns, demanding enhanced model capabilities for capturing long-distance dependencies and maintaining overall structural coherence. While Transformer-based segmentation networks have demonstrated strong performance in global modeling recently, their limited local spatial priors and patch size constraints often lead to inadequate local detail segmentation. Additionally, normal anatomical structures such as the lung hilum exhibit morphological similarities to infected regions, necessitating superior network anti-interference capabilities. To address these challenges, this study proposes a U-Net-based difference aware guided boundary Transformer segmentation network.MethodsDBTU-Net aims to enhance both local structural detail modeling and global semantic dependency modeling capabilities, enabling accurate segmentation of fragmented and scattered multi-focal regions. The network architecture builds upon the classical U-shaped structure, incorporating three key components: gated channel Transformer (GCT), difference aware fusion (DAF), and boundary Transformer (BT), as shown in Fig. 1. During the feature extraction phase, multi-scale semantic information is progressively extracted through multilayer convolution and down-sampling operations. To enhance the network’s context modeling capability, the GCT module (Fig. 2) is integrated into each encoder layer. This module dynamically models channel dependencies to adaptively adjust the importance distribution across different semantic channels, thereby strengthening global information perception. The DAF module is implemented in the skip connection path, explicitly enhancing spatial structure by computing difference information between adjacent encoder layer features. This mechanism mitigates spatial detail loss from down-sampling while providing the decoder with comprehensive structural priors, improving the model’s capacity to recognize small lesion regions. At the network’s bottleneck layer, the BT module (Fig. 3) further enhances global modeling capability. This module utilizes encoder multi-scale disparity maps for guidance, establishing potential connections between distant lesion regions through Transformer architecture, improving distant lesion recognition, and refining boundary segmentation while maintaining global consistency. The decoder ultimately produces a high-quality segmentation map through up-sampling operations.Results and DiscussionsExperimental analyses are conducted on a private childhood pneumonia CT dataset (Child-P) and two public COVID-19 CT datasets (COVID, MosMed) to validate DBTU-Net’s effectiveness. Eight ablation schemes were designed to evaluate the performance of three key modules: GCT, DAF, and BT. The results demonstrate that each module enhances network segmentation performance (Table 2), with the DAF module showing notable improvements of 8.21 percentage points, 12.34 percentage points, and 13.94 percentage points for Dice similarly coefficient (Dice), Jaccard index (JI), and sensitivity (SE) metrics, respectively, confirming its effectiveness in enhancing spatial detail expression, preserving structural information, and improving lesion integrity. Module combination experiments further validate the DAF module’s importance. Without DAF, retaining only GCT and BT leads to performance decreases of 1.09 percentage points and 0.99 percentage points in JI and SE metrics compared to the full model. The DAF and BT combination achieves 25.24 pixel in Hausdorff distance (HD) metrics while maintaining high comprehensive performance, demonstrating their synergistic effect on boundary detail extraction. In comparison experiments, DBTU-Net achieves optimal results across all five metrics on the Child-P dataset (Table 3), with Dice and JI reaching 89.61% and 81.17%, representing improvements of 8.17 percentage points and 12.48 percentage points over the baseline network, surpassing the suboptimal CASCADE network. Visualization results indicate DBTU-Net’s superior sensitivity in identifying scattered and tiny lesions, reducing missed segmentation and over-segmentation instances (Fig. 6). The decreased HD metrics demonstrate the model’s advantage in lesion boundary modeling, validating the effectiveness of cross-scale difference perception and boundary modeling mechanisms. On the COVID dataset, DBTU-Net leads in all five metrics, with JI, SE, and Matthews correlation coefficient (MCC) reaching 69.66%, 77.02%, and 81.04%, significantly outperforming U-Net++ in balancing lesion pixel identification and background differentiation (Table 4). On the MosMed dataset, despite slightly lower SE than TransDeepLab, DBTU-Net achieves optimal results in Dice, JI, and MCC metrics (Table 5), demonstrating robust lesion structure modeling. Visualization results show DBTU-Net’s advantage in reducing mis-segmentation and false positives, attributed to its contextual modeling mechanism integrating GCT and BT (Fig. 7). Multiple local detail visualizations confirm DBTU-Net maintains consistent segmentation in regions with blurred edges or irregular lesion morphology, validating its local segmentation accuracy and robustness under complex structures (Fig. 8).ConclusionsThis research focuses on childhood pneumonia segmentation. DBTU-Net addresses the limitations of traditional U-shaped networks in segmenting small, fragmented, and complex lesions due to spatial information loss and limited global feature extraction. The network enhances spatial structure expression by utilizing the DAF module to mine differential features between layers, while incorporating the BT module to guide high-level semantic information for boundary enhancement. This combination improves the modeling capability for distant lesions and local boundary segmentation accuracy, reducing mis-segmentation in complex lesion regions. Experimental results on the private childhood pneumonia dataset demonstrate DBTU-Net’s superior performance compared to existing mainstream methods across multiple evaluation metrics. Additionally, its strong performance on the public COVID-19 dataset validates the method’s generalization capabilities.

ObjectiveSparse aperture optical systems consist of multiple sub-apertures. By optimizing the array structure and performing image restoration, the information obtained about the target object can be comparable to that of an equivalent single-aperture optical system, effectively addressing problems caused by increasing aperture size. However, since the light-transmitting area of a sparse aperture system is smaller than that of its single-aperture counterpart, it results in the loss of intermediate-frequency information, leading to issues such as blurred texture details and reduced image contrast. To address these problems, we combine polarization imaging with sparse aperture imaging.MethodsIn this paper, we propose a polarization image fusion method for sparse aperture optical systems. This process mainly includes the following steps: First, a Golay 3 polarization sparse aperture imaging system is built to capture polarization images at four different polarization angles. Second, these images are preprocessed to calculate the degree of linear polarization and the angle of polarization. Then, the polarization sparse aperture fusion network (PSAFNet) is used to fuse the polarization intensity image, the degree of linear polarization, and the polarization angle, integrating the polarization information into the intensity image and producing a more information-rich result. Next, intermediate-frequency regions of the full-aperture image, sparse-aperture image, and fused image are extracted. The Canny operator is applied to extract intermediate-frequency edges to compare the richness of intermediate-frequency information. Information entropy, standard deviation, and the multi-scale structural similarity index (MS-SSIM) are also used to evaluate PSAFNet and other fusion methods.Results and DiscussionsThe proposed PSAFNet method effectively alleviates the decline in intermediate-frequency information caused by the sparse aperture structure. In an indoor scene (Fig. 8), compared with the sparse aperture image, the fused image shows increases of 26.2%, 21.0%, and 27.0% in edge density, information entropy, and the number of connected regions, respectively (Fig. 9). In an outdoor scene (Fig. 12), the fused image shows corresponding improvements of 32.9%, 23.6%, and 16.6% (Fig. 13). Compared with other fusion methods, the proposed method performs better in indoor scenes (Fig. 10), with higher information entropy, standard deviation, and MS-SSIM (Table 1). The MS-SSIM of PSAFNet is close to 1, indicating higher similarity to the single-aperture image in contrast and structure, with lower image distortion. Compared with the sparse aperture image, the information entropy increases by 10.10%, and the standard deviation increases by 63.49%. In outdoor scenes (Fig. 14), the proposed method also surpasses other fusion methods, with information entropy increasing by 3.55% and standard deviation by 30.35% (Table 2).ConclusionsWe propose a polarization image fusion method, PSAFNet, for sparse aperture optical systems based on a deep learning network. The method extracts texture features from the polarization intensity image and the degree of linear polarization, as well as semantic features from the polarization intensity and polarization angle using an encoder. The fusion module uses spatial and channel attention mechanisms to enhance image details and preserve semantic information. In addition, edge features are extracted and fused with attention-enhanced features to further strengthen edge representation. Finally, combined deconvolution is used in the decoder to generate the fused image. To validate the performance of the method, polarization images are collected using the Golay 3 sparse aperture imaging system. Experimental results show that the algorithm achieves stable and optimal results in preserving texture detail and the overall image structure. Compared with sparse aperture images, the fused image contains more intermediate-frequency information, clearer contours, and richer texture details. The introduction of polarization information effectively addresses problems such as image smoothing and weakened textures due to intermediate-frequency loss. Compared with traditional fusion methods such as wavelet transform and PFNet, this method more effectively enhances texture details and contrast in sparse aperture.

ObjectiveImage dehazing represents a crucial research direction in low-level vision, aimed at restoring visibility and details in hazy images. This capability holds significant importance for applications including autonomous driving, surveillance systems, and remote sensing. While deep learning-based single-image dehazing algorithms have demonstrated notable advances in recent years, they continue to face adaptability challenges when processing real-world hazy scenes characterized by complex lighting conditions and diverse haze distributions. Traditional polarization-based dehazing methods demonstrate effectiveness in complex hazy environments, yet they frequently overlook the polarization degree of transmitted light and exhibit limited adaptability to global illumination changes, constraining their practical performance. Consequently, developing a more effective and adaptive image dehazing method that maximizes polarization information benefits while addressing existing methodological limitations remains essential.MethodsThis paper addresses these challenges by introducing a polarization-aware dual-encoder dehazing network that utilizes scene polarization information for image restoration. The network implements a dual-encoder architecture (Fig. 1), consisting of two parallel branches: convolutional neural networks (CNN) encoding and Transformer encoding. The CNN encoding branch captures local details and texture information, while the Transformer encoding branch processes long-range dependencies and global contextual information. Within the CNN branch, a multi-angular polarization aggregation (MAPA) module embeds distinct position encodings into multi-angular polarization information and performs compression. Subsequently, a dynamic large kernel (DLK) module extracts multi-scale polarization local features. The Transformer branch employs a retentive meet transformer (RMT) module to extract multi-scale global feature information and integrate fine local features from the CNN branch, enhancing the Transformer module’s local representation capability. An adaptive dynamic feature fusion (ADFF) module dynamically fuses features from different levels. The architecture concludes with a Transformer decoder that globally decodes the multi-level feature layers, progressively upsampling the resolution and restoring image details to produce the dehazed result.Results and DiscussionsThe experimental results demonstrate the algorithm’s superior performance in both objective evaluation metrics and visual quality. For comprehensive evaluation, this study employed parametric metrics including peak signal-to-noise ratio (PSNR), structural similarity (SSIM), and visibility index (VI), alongside non-parametric metrics such as natural image quality evaluator (NIQE) and blind/referenceless image spatial quality evaluator (BRISQUE), conducting assessments across multiple datasets (Table 1). On the IHP dataset, the method achieved optimal performance with PSNR, SSIM, and VI values of 22.54 dB, 0.8379, and 0.8669, respectively, surpassing the second-best results by 0.85 dB, 0.012, and 0.0161. The method achieved the highest PSNR on the Cityscapes-DBF dataset, exceeding FocalNet by 1.05 dB and ConvIR by 2.64 dB. The synthetic dataset’s use of coarse semantic segmentation maps for probabilistic filling resulted in average SSIM performance. In outdoor real-world scenes, the method achieved NIQE and BRISQUE scores of 12.83 and 40.28, ranking first and second respectively. Visually, the algorithm effectively removes haze while maintaining crucial scene details and color information (Figs. 6?8). Extensive ablation studies, documented in Tables 2?4, and Fig. 9, confirmed the effectiveness of the dual-encoder structure and key modules, showing decreased performance when using single-branch structures or removing key components.ConclusionsThis paper presents an advanced deep learning-based dehazing method for polarized images. The research introduces three innovative modules: MAPA, DLK, and ADFF, integrating CNN and Transformer features to construct a dual-encoder?single-decoder polarization dehazing network that estimates residuals for image restoration from four polarization states. This approach extends polarization-based dehazing applications, operating independently of prior knowledge while utilizing semantic and contextual information to address spatially varying scattering phenomena. The method demonstrates state-of-the-art performance on the IHP and Cityscapes-DBF datasets, with proven robustness in real outdoor environments. Current limitations include reduced effectiveness in processing hazy images containing sky regions, due to training dataset constraints. Future research will focus on dataset enrichment to enhance algorithm performance across diverse environments.

ObjectiveLow-level-light night vision technology enables the generation of visible-light images that are clearly recognizable to the human eye under low-illumination conditions. An electron bombarded active pixel sensor (EBAPS) represents a vacuum-solid hybrid low-level-light imaging device that delivers exceptional performance in sensitivity, resolution, power efficiency, and compact design, making it valuable for low-light-level night vision applications. However, the imaging procedure introduces complex noise patterns. The electron bombardment process used for signal enhancement generates electron bombarded semiconductor (EBS) noise, characterized by spatially random distribution, multi-pixel aggregated clusters, and a diffuse pattern that gradually darkens from center to edges, manifesting as bright spots. While traditional denoising methods effectively address common Gaussian noise and salt-and-pepper noise that occur in solid-state imaging devices, they prove inadequate for EBS noise removal. Currently, effective methods for EBS noise removal remain limited. Previous attempts combining noise detection with median filtering yielded unsatisfactory results. Processing speed represents another critical factor in practical applications, with some complex algorithms requiring extensive processing time. Deep learning-based approaches show promise in handling unconventional EBS noise but face challenges in dataset construction, processing speed, and generalization capability. Therefore, we propose a Harris-guided adaptive switching median and bilateral filtering (HASMBF) algorithm for rapid and effective EBAPS image noise removal.MethodsThe proposed algorithm comprises three main components: EBS noise detection, adaptive switching median filtering, and bilateral filtering. The Harris corner detector demonstrates effectiveness in EBS noise detection. However, it exhibits over-detection tendencies, where non-noise pixels surrounding EBS noise pixels are incorrectly classified as noise pixels. These misclassified pixels typically display significant brightness differences from actual noise pixels. To address this limitation, we incorporate the Otsu threshold to optimize the Harris corner detector results, developing a Harris-Otsu joint noise detection algorithm. The Otsu method functions as an adaptive thresholding technique based on image histogram statistics. Its fundamental principle maximizes between-class variance, enabling automatic computation of optimal segmentation thresholds based on image grayscale distribution, thus effectively separating targets from backgrounds without manual intervention. The process begins with Harris corner detector identifying approximate EBS noise regions, followed by retention of only pixels exceeding the Otsu threshold as final detection results. Subsequently, an adaptive switching median filter guided by detection results removes EBS noise. Finally, a bilateral filter eliminates residual Gaussian and Poisson noise.Results and DiscussionsThe proposed Harris-Otsu joint noise detection algorithm accurately detects EBS noise pixels (Fig. 4). To verify the denoising performance of the HASMBF algorithm, denoising experiments were subsequently conducted using both simulation images with artificially added noise and real EBAPS images. The simulation images are generated by adding artificial EBS noise and Gaussian noise, and are divided into three categories based on illumination levels (Fig. 6). The real EBAPS images were captured using an EBAPS camera under illumination condition of 5×10-4 lx (Fig. 10). Experiment results show that the algorithm proposed in this paper is effective in removing the mixed noise in EBAPS images (Figs. 7, 8, 9 and 11). EBS noise is significantly removed after being processed by the proposed algorithm. Our method also performs well in both PSNR and SSIM (Tables 1, 2, 3 and 5), and it also attains a competitive processing speed (Tables 4 and 5). For real EBAPS images, the proposed algorithm improves PSNR by approximately 10 dB and achieves an SSIM above 0.9. Meanwhile, the processing time is only 1.8 times that of the classic bilateral filtering algorithm.ConclusionsThis study analyzes the imaging principle and noise characteristics of EBAPS, including Gaussian noise and EBS noise. The proposed HASMBF algorithm demonstrates accurate EBS noise detection and effective mixed noise removal in EBAPS images. The denoising performance evaluation utilizes both simulation and real images. Experimental results confirm the method’s effectiveness in noise suppression while maintaining efficient processing speed. However, the algorithm requires parameter adjustments based on environmental variations, including noise detection threshold and bilateral filtering kernel parameters. Further research should focus on developing an adaptive parameter-setting mechanism to enhance the algorithm’s environmental adaptability.

ObjectiveDue to current technical limitations and the influence of shooting environments, images captured by the same device often fail to provide a comprehensive description of different scenes. The visible sensor can generate visible images that contain rich texture details, but the image quality is easily affected by harsh environments. On the other hand, the infrared sensor can generate infrared images that offer distinct salient targets but lack texture details. Infrared and visible image fusion aims to integrate the complementary information from infrared and visible modalities to generate a single image. The fused image exhibits more prominent targets along with abundant texture details, thereby facilitating downstream visual tasks. Although most current methods have proven effective in generating satisfactory fused images in typical scenarios, performance remains unsatisfactory in strong light scenes. To achieve a fused image that contains prominent targets and rich texture details under strong light scenes, we propose a mask-guided two-stage infrared and visible image fusion network.MethodsConsidering the issue of blurred salient targets and texture details under strong light conditions, we design a salient object detection (SOD) network in the first stage to extract salient targets from the infrared image to guide feature extraction and reconstruction. Specifically, we embed RepVGG block into U2-Net, achieving structural reparameterization, which effectively enhances U2-Net’s ability to extract salient object instances. In the second stage, due to the degradation of texture details in strong light scenes, we establish a scene segmentation and enhancement module (SSEM) in the encoder stage. This module uses discrete wavelet transform to extract high-frequency information and supplements it into the hierarchical features, thus providing texture details. In addition, to avoid interference from redundant information between foreground and background, a dual-branch feature fusion module (DFFM) is designed to separately fuse the foreground and background, and then merge them into a unified feature map during the fusion stage. Moreover, in the decoder stage, a mask-guided reconstruction module (MGRM) is proposed to fully utilize the mask and address the problem of indistinct salient targets in strong light scenes. This module employs two sets of channel and spatial attention modules to extract critical information. It enhances significant regions while suppressing redundant information in both channel and spatial dimensions, improving the network’s ability to extract crucial features. For loss functions, in the first training stage, the loss function contains the loss of side output saliency maps and the final fusion output saliency map, which constrains the multi-scale saliency maps to focus on key features. In the second training stage, pixel intensity loss and structural similarity loss are used to guide the fused images in retaining salient targets, texture details, and structural information.Results and DiscussionsDuring the testing stage, comparative experiments are conducted on the TNO, RoadScene and FLIR datasets. The proposed method is compared with nine other methods, and eight quantitative indicators are used to assess the performance of the aforementioned ten methods. In qualitative experiments, compared to other methods, the proposed method can generate fusion results with clear salient targets and rich texture details under both normal and strong light environments. In quantitative experiments, the proposed method achieves the best discrete cosine features mutual information (FMIdct), small Baud sign mutual information (FMIω), visual-fidelity (VIF), and the second-best mutual information (MI) values. This suggests that the proposed method effectively transfers relevant information from the source images to the fused image while maintaining high visual fidelity. Finally, ablation experiments are conducted to validate the effectiveness of the proposed salient object detection network, the scene segmentation and enhancement module, and the mask-guided reconstruction module. These results confirm the contributions of each proposed module.ConclusionsIn this study, we propose a mask-guided two-stage infrared and visible image fusion method, which consists of two phases: Firstly, a RepVGG module is introduced to reparameterize U2-Net, which enhances its ability to extract salient targets. Secondly, a two-stage autoencoder-based infrared and visible image fusion method is designed. In the encoder phase, wavelet transform is employed to extract high-frequency information to supplement texture details. During the fusion phase, the foreground and background are fused separately to minimize redundant information. Finally, an attention mechanism is used to incorporate the extracted foreground targets for supplementing the salient information. The fused images contain richer texture details and enhanced salient target information. Applying this method in strong light scenes can mitigate information loss caused by intense illumination. This approach provides a novel image processing strategy to preserve more salient information under strong light conditions.