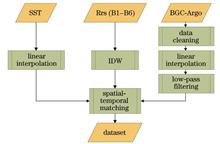

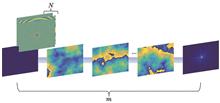

Objective: Colored dissolved organic matter (CDOM) plays a pivotal role in the global carbon cycle and climate change. The rapid development of satellite remote sensing technology has provided a vast amount of ocean surface remote sensing data for oceanographic research, reflecting the internal state of the ocean to a certain extent. We combine multi-source ocean remote sensing data with deep learning techniques to propose a remote sensing inversion method for subsurface CDOM in the ocean. This method retrieves the vertical distribution of subsurface CDOM from ocean surface remote sensing data, thus providing a new perspective and theoretical support for a deeper understanding of the mechanisms of the ocean carbon cycle and its interactions with climate change.

Methods: First, the CDOM profile data obtained from BGC-Argo are preprocessed to address their irregular vertical resolution. Through linear interpolation, the data are resampled to a 1 m interval, ensuring consistency in depth between data points for subsequent analysis. Additionally, a low-pass filter is adopted to reduce peak fluctuations in the data, enhancing its smoothness and reliability. To address the missing ocean remote sensing data, we employ the inverse distance weighting (IDW) interpolation method to fill in missing values in remote sensing images. K-fold cross-validation is used to evaluate the interpolation model, with the mean absolute percentage error (MAPE) selected as the evaluation metric. Given the spatial resolution mismatch between sea surface temperature (SST) data and remote sensing reflectance data, a bilinear interpolation algorithm is employed to resample the SST dataset to a finer resolution, ensuring spatio-temporal consistency of the model input data. 
Finally, based on the convolutional neural network (CNN) model, we design a subsurface CDOM inversion model for the ocean, adopting multi-band remote sensing reflectance, SST, and other parameters as inputs. This model consists of an input module, a CNN feature extraction module, and a prediction module, enabling the vertical distribution prediction of subsurface CDOM concentration in the ocean. The model’s applicability is then evaluated on a test set and two independent test areas.

Results and Discussions: The filtered CDOM profile data are smoother and more stable, which effectively eliminates the interference of outliers with the overall data trend (Fig. 3). To achieve spatio-temporal consistency between BGC-Argo data and remote sensing reflectance data, we employ the IDW method to interpolate missing values in remote sensing reflectance images and validate the spatial interpolation model through K-fold cross-validation. Taking the Rrs443 remote sensing data from the first day of each month in 2020 as an example, the initial distribution of remote sensing data is shown in Fig. 4, while the reconstructed remote sensing data after IDW spatial interpolation is presented in Fig. 5. During cross-validation, the K value is set to 5, with the MAPE employed as the evaluation criterion. The results indicate that the overall error of the interpolation model remains below 30%, demonstrating the sound performance of the interpolation model. The proposed inversion model achieves a root mean square error (RMSE) of 0.14 μg/L, a correlation coefficient (r) of 0.73, and a coefficient of determination (R²) of 0.74 on the test set. Furthermore, in the validation of two independent test areas, the RMSE values are 0.13 μg/L and 0.18 μg/L respectively, with r values of 0.81 and 0.74, and R² values of 0.79 and 0.69 respectively. By analyzing the vertical distribution plots of predicted and actual values for independent test zones A and B (Figs. 
8 and 9), combined with the residual scatter plot between predicted and actual values (Fig. 10), it is evident that the predicted values are mostly concentrated around the y=x diagonal when plotted against the actual values. This result demonstrates a high degree of consistency between the model’s predictions and the measured CDOM distribution characteristics, thereby confirming the validity and applicability of the proposed model. The correlation between the distribution of CDOM and SST is explored via the subsurface CDOM-SST scatter plot (Fig. 11), which further validates the rationality of the inversion results.

Conclusions: We leverage multi-band ocean remote sensing spectral data (B1: Rrs412; B2: Rrs443; B3: Rrs490; B4: Rrs510; B5: Rrs560; B6: Rrs665), SST remote sensing data, and BGC-Argo data, combined with a CNN model, to develop an inversion model for the vertical distribution of marine subsurface CDOM in the Northwest Pacific region (131°E–180°E, 26°N–54°N). We evaluate the model on a test set, which confirms its sound performance. Additionally, to further verify the model’s applicability, we conduct predictions for the vertical distribution of CDOM in two independent test areas, which reveals a high degree of consistency between the predicted and measured CDOM distribution characteristics, thereby proving the model’s effectiveness in presenting the vertical distribution characteristics of marine subsurface CDOM. Meanwhile, an analysis of the vertical distribution characteristics of subsurface CDOM in the Northwest Pacific region is conducted by utilizing the constructed vertical distribution maps of CDOM in the independent test areas. Notably, the mass concentrations in spring and summer are significantly higher than those in autumn and winter, with CDOM mass concentrations gradually increasing with depth. 
As a crucial component of the oceanic carbon cycle, the distribution and variation of CDOM significantly influence this cycle. We not only uncover these key features of the vertical distribution of marine subsurface CDOM but also provide a solid theoretical foundation and support for its inversion, facilitating a deeper understanding and prediction of the dynamic changes in the oceanic carbon cycle. However, our study has certain limitations. For instance, the IDW remote sensing data reconstruction method based on spatial correlation can be further optimized by incorporating factors such as time series to enhance the model’s ability to capture dynamic temporal changes. Additionally, considerations can be given to adjusting the model structure, increasing network depth, and exploring the inclusion of additional remote sensing parameters such as sea surface elevation and wind speed to delve deeper into the complex relationship between ocean remote sensing data and the vertical distribution of marine subsurface CDOM and improve prediction accuracy.
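
The gap-filling and validation steps described in the Methods (IDW interpolation, evaluated by K-fold cross-validation with MAPE) can be illustrated with a minimal sketch. The function names, the distance power of 2, and the random fold assignment are assumptions made for illustration; the abstract states only that IDW, K = 5, and MAPE are used.

```python
import numpy as np

def idw_interpolate(xy_known, values, xy_query, power=2.0, eps=1e-12):
    """Inverse distance weighting: each query value is a weighted mean of
    the known values, with weights 1 / distance**power (power is assumed)."""
    # Pairwise distances between query and known points, shape (n_query, n_known)
    d = np.linalg.norm(xy_query[:, None, :] - xy_known[None, :, :], axis=2)
    w = 1.0 / np.maximum(d, eps) ** power  # eps avoids division by zero
    return (w @ values) / w.sum(axis=1)

def kfold_mape(xy, values, k=5, power=2.0, seed=0):
    """Hold out each of k folds in turn, predict it from the rest with IDW,
    and return the mean absolute percentage error averaged over folds."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(values))
    scores = []
    for fold in np.array_split(idx, k):
        train = np.setdiff1d(idx, fold)
        pred = idw_interpolate(xy[train], values[train], xy[fold], power)
        scores.append(np.mean(np.abs((pred - values[fold]) / values[fold])) * 100.0)
    return float(np.mean(scores))
```

Here `xy` would hold the pixel coordinates of valid reflectance values and `values` the corresponding reflectances; MAPE is undefined where a held-out value is zero, so such pixels would need to be excluded beforehand.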

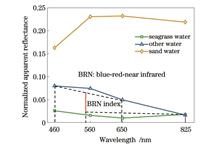

Objective: Seagrass, a typical aquatic flowering plant, thrives in shallow coastal and estuarine waters, playing an important role in maintaining ecosystem stability and facilitating the carbon cycle. However, it is currently facing a significant decline, necessitating effective monitoring. The coastal zone imager (CZI) onboard the HY-1C/D satellite provides 50-m resolution data and high-frequency observations twice every three days, facilitating satellite remote sensing of seagrass. In this study, we analyze the spectral differences among seagrass water, sand water, and other water types in Swan Lake using numerous CZI images from 2018 to 2023, employing the spectral-index method. We then propose a seagrass remote sensing detection model based on the constructed Blue-Red-NIR index (BRN index) and Δz index. The accuracy validation results show that this model performs well in both qualitative and quantitative assessments. Subsequently, we apply the seagrass remote sensing model to CZI images from 2023 to reveal trends in seagrass distribution based on cumulative pixel area and aggregation density. Overall, we provide a reference for monitoring seagrass resources using domestic satellites, which would be beneficial in broadening their application in marine resource monitoring.

Methods: In this study, we employ the spectral-index method to develop a seagrass remote sensing detection model. First, we identify the substrate types for water in Swan Lake using in-situ surveys and satellite-ground matching, categorizing them into seagrass water, sand water, and other water (excluding the first two). Then, we select adequate pure samples for these three water types through visual interpretation. Following this, we normalize the remote sensing reflectance of each pixel and analyze the spectral differences between seagrass water, sand water, and other water based on numerous samples chosen from CZI images. 
Next, we discard sand water pixels, which exhibit higher normalized apparent reflectance than the other two water types in the red band. We further distinguish seagrass water from other water pixels by constructing two indexes, i.e., the BRN index and the Δz index. The BRN index denotes the difference between the green band and the NIR band, while the Δz index represents the difference between the blue band and the green band, and is used for separating seagrass water from other water pixels. Subsequently, the seagrass remote sensing detection model is developed based on these indexes, and a confusion matrix is employed to evaluate its performance.

Results and Discussions: The accuracy evaluation indicates that the seagrass distribution detected by the seagrass remote sensing detection model closely aligns with that of the false-color images [Figs. 8(a) and 8(e)]. Furthermore, a comparison between the seagrass distributions detected by our model and another model using Landsat images, proposed by Liang et al., demonstrates that our model effectively monitors the majority of seagrass in the central part of Swan Lake [Figs. 8(b) and 8(f)], exhibiting good performance in the cumulative seagrass pixel area. Additionally, the confusion matrix results reveal that the seagrass detection model performs well, with Overall Accuracy (OA) exceeding 80% and F1-Score above 0.85 (Table 1). We then apply this model to CZI images from 2023 to calculate the cumulative pixel area and aggregation density, respectively. It is observed that the cumulative pixel area first increases and then decreases, specifically rising gradually from June to August before declining (Fig. 9), while aggregation density peaks in August (Fig. 10). Moreover, we observe stability with slight fluctuations in the cumulative pixel area from 2018 to 2023 (Fig. 11). 
Overall, the robust performance of the seagrass remote sensing detection model can be attributed to the normalization process based on numerous CZI images (Fig. 12). Furthermore, sensitivity analyses in turbid waters demonstrate that our model remains stable (Fig. 13). Looking ahead, future research should explore the applicability of the seagrass remote sensing detection model in other regions. Additionally, more high-spatial-resolution domestic satellite images, along with hybrid image decomposition technologies, need to be synthesized to achieve highly accurate seagrass detection.

Conclusions: In this paper, we propose a seagrass remote sensing detection model based on two constructed indexes, the BRN index and the Δz index, developed after analyzing the spectral differences among seagrass water, sand water, and other water using HY-1C/D CZI images. Accuracy evaluations show that this model aligns well with false-color images and seagrass distribution results detected by Landsat images. Moreover, it achieves OA exceeding 80% and an F1-Score above 0.85. When applying this model to CZI images from 2023, we find that the cumulative pixel area of seagrass increases from June to August and decreases after peaking in August. Aggregation density shows a similar trend, first increasing and then decreasing from June to October, peaking in September. We also observe stability with slight fluctuations in the annual changes in cumulative pixel area over the last six years. Our proposed seagrass remote sensing detection model using domestic HY-1C/D CZI satellite data provides a reference for monitoring seagrass resources with domestic satellites, broadening their application in marine resources monitoring.
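
The two accuracy measures quoted above, OA and F1-Score, both follow from a binary confusion matrix (seagrass versus non-seagrass). The sketch below shows the standard definitions; the function name and the pixel counts in the usage note are hypothetical and are not taken from Table 1.

```python
def overall_accuracy_and_f1(tp, fp, fn, tn):
    """Return (OA, F1) for a binary confusion matrix.

    tp: seagrass pixels detected as seagrass
    fp: non-seagrass pixels detected as seagrass
    fn: seagrass pixels missed by the model
    tn: non-seagrass pixels correctly rejected
    """
    total = tp + fp + fn + tn
    oa = (tp + tn) / total                      # fraction of correctly labeled pixels
    precision = tp / (tp + fp)                  # reliability of seagrass detections
    recall = tp / (tp + fn)                     # share of true seagrass recovered
    f1 = 2 * precision * recall / (precision + recall)
    return oa, f1
```

With hypothetical counts `tp=90, fp=10, fn=20, tn=80`, this gives an OA of 0.85 and an F1 of about 0.857, which is the kind of result summarized as "OA exceeding 80% and F1-Score above 0.85".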

Objective: Recently, some scholars have proposed the inverted pin beam (IPB), which has a Bessel-like shape, and found that, compared with the Bessel beam (BB), pin beam (PB), and Gaussian beam (GB), the IPB has a lower scintillation index when transmitted through moderate to strong atmospheric turbulence. Although IPBs have a superior anti-turbulence ability in long-distance transmission, their gradually increasing beam width during transmission results in lower received power in the far field. Therefore, we propose the nonuniformly correlated inverted pin beam (NUCIPB), which can further reduce the light intensity fluctuations and other perturbation effects caused by atmospheric turbulence and improve the received power in a certain range of the far field by introducing self-focusing characteristics with the assistance of nonuniform correlation modulation.

Methods: Based on the coherent mode decomposition and random phase screen methods, a numerical simulation model of NUCIPB transmitting in atmospheric turbulence is built, and the light intensity evolution characteristics of the beam transmitting through free space and turbulent atmosphere are simulated and analyzed. The aperture-averaged scintillation index, beam wander, and beam broadening are employed to evaluate the beam quality affected by atmospheric turbulence. On this basis, the average bit error rate (BER) of the system is calculated when the beams are adopted as a space optical communication link, and the transmission and communication performances of the NUCIPB, IPB, PB, BB, and GB are compared under the same conditions.

Results and Discussions: The light intensity evolution during free space transmission of IPB and NUCIPB shows that the trend of spot size variations for NUCIPB and IPB is identical, but the intensity distribution of NUCIPB is more uniform. Meanwhile, NUCIPB also shows the characteristics of a Bessel-like distribution in the paraxial region. 
The difference is that it will degenerate into a Gaussian-like distribution after a certain distance, and the on-axis attenuation of light intensity is significantly lower than that of IPB as the transmission distance increases (Figs. 1–5). The fluctuation degree of light intensity for all beams increases with the rising transmission distance and turbulence intensity, and the corresponding communication performance also degrades gradually. The comparison of the two sizes of receiving apertures indicates that an increase in the receiving aperture can significantly reduce the scintillation index and the communication BER. Under strong turbulence and Ra=0.10 m, compared with GB, the scintillation index of BB, PB, IPB and NUCIPB decreases by 55.1%, 16.8%, 67.2%, and 78.0% respectively after atmospheric turbulence transmission of 10 km, and the BER also decreases by 50.7%, 12.6%, 63.4%, and 78.5% respectively (Figs. 8 and 13). The optical power in the two receiving apertures gradually decreases with the increasing transmission distance, and the greater turbulence intensity leads to a faster decline rate. The power in the bucket (PIB) of BB is greatly affected by the aperture size and NUCIPB will have self-focusing characteristics compared with IPBs during the transmission. In the case of strong turbulence and Ra=0.10 m, the PIB of NUCIPB at the focusing position will increase by nearly 38.6% compared with IPB (Fig. 9). Meanwhile, the beam spreading degree of NUCIPB after 10 km transmission in strong turbulence is 38.4%, 13.7%, 22.6%, and 5.1% lower than that of GB, BB, PB, and IPB respectively (Fig. 10). In terms of the degree of beam wander, NUCIPB have a certain advantage in weak to moderate turbulence for long-distance transmission (Fig. 11).

Conclusions: We propose and construct NUCIPB, and build an atmospheric turbulence transmission model based on the coherent mode decomposition and the random phase screen methods. 
The transmission and communication characteristics of NUCIPB are simulated, and compared with GB, BB, PB, and IPB, the simulation results show that as the transmission distance and turbulence intensity increase, the intensity fluctuations of all beams continually intensify, causing gradual degradation of corresponding communication performance. A comparison between two sizes of receiving apertures reveals that increasing the receiving aperture size can significantly reduce intensity scintillation and decrease the communication BER. Under strong turbulence and Ra=0.10 m, compared with GB, the scintillation index of BB, PB, IPB, and NUCIPB decreases by 55.1%, 16.8%, 67.2%, and 78% respectively after 10 km atmospheric turbulence transmission, and the BER also decreases by 50.7%, 12.6%, 63.4%, and 78.5% respectively. Additionally, the optical power in the two receiving apertures gradually decreases with the increasing transmission distance, and the greater turbulence intensity leads to a faster decline rate. The PIB of BB is greatly affected by the aperture size and NUCIPB will have self-focusing characteristics compared with IPB in the transmission process. Under strong turbulence and Ra=0.10 m, the PIB of NUCIPB at the focusing position will increase by nearly 38.6% compared with IPB. Meanwhile, with the rising transmission distance, beam spreading becomes more severe. There is little difference between beam spreading in weak and moderate turbulence intensity. Compared with GB, BB, PB, and IPB, the beam spreading of NUCIPB after 10 km is reduced by 38.4%, 13.7%, 22.6%, and 5.1% respectively. In terms of the degree of beam wander, NUCIPB have certain advantages in long-distance transmission under weak to moderate turbulence, but with the rising turbulence intensity, there is little difference between NUCIPB and IPB. 
Compared with fully coherent IPB, NUCIPB perform better in reducing the negative effects caused by turbulence, such as light intensity fluctuations, beam wander, and beam spreading. Meanwhile, due to the introduction of self-focusing characteristics, NUCIPB surpass IPB in far-field energy focusing within a specific transmission range, which can improve the far-field energy receiving efficiency. Although the current research is entirely based on simulation calculations to explore the performance of NUCIPB in atmospheric turbulence channels, we can further employ digital micro-mirror devices (a modulation rate of 17 kHz) and programmable lithium niobate SLMs (a modulation rate able to reach 5 MHz and 1.6 GHz respectively) with high modulation rates to construct NUCIPB and experimentally verify the feasibility of NUCIPB for free space optics (FSO) communication.
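
The aperture-averaged scintillation index used as a beam quality measure above can be estimated from an ensemble of simulated receiver-plane intensity frames, one per turbulence realization. The sketch below uses the standard definition σ² = ⟨P²⟩/⟨P⟩² − 1, where P is the power collected within a circular aperture of radius Ra. The array layout, grid spacing argument, and function name are assumptions for illustration and do not describe the authors' simulation code.

```python
import numpy as np

def aperture_averaged_scintillation(frames, dx, radius):
    """Estimate the aperture-averaged scintillation index.

    frames: array of shape (n_realizations, N, N) with intensity on a
            square grid centered on the optical axis (assumed layout)
    dx:     grid spacing in meters
    radius: receiving aperture radius Ra in meters
    """
    n = frames.shape[-1]
    coords = (np.arange(n) - (n - 1) / 2) * dx
    xx, yy = np.meshgrid(coords, coords)
    mask = (xx**2 + yy**2) <= radius**2           # circular receiving aperture
    # Power in the bucket for each realization
    power = (frames * mask).sum(axis=(1, 2)) * dx**2
    # Population variance over mean squared equals <P^2>/<P>^2 - 1
    return power.var() / power.mean() ** 2
```

The per-realization `power` values are also the power in the bucket (PIB) samples, so the same aperture mask can serve both metrics.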

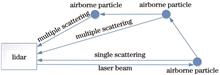

Objective: Atmospheric visibility plays a crucial role in aerospace, transportation, and environmental monitoring, directly affecting traffic safety and transportation efficiency. In automatic observation, visibility is represented by the meteorological optical range (MOR), defined as the path length through which the luminous flux of a parallel beam of light emitted by an incandescent lamp with a color temperature of 2700 K is attenuated to 5% of its initial value in the atmosphere. In photon counting mode, lidar captures return energy by receiving backscattered photon signals, which consist of both single-scattered and multiple-scattered photons. Single-scattered photons follow definite paths and directly convey target information, while multiple-scattered photons have complex trajectories and carry significant non-target information, increasing uncertainty and noise. To obtain the true atmospheric visibility, we need to calculate the actual extinction coefficient considering only single-scattered photon returns. However, since lidar cannot distinguish between single-scattered and multiple-scattered photons, the extinction coefficient directly derived from lidar return signals is the apparent extinction coefficient, which is influenced by multiple scattering. Therefore, in-depth research on lidar-based apparent extinction coefficient inversion and the multiple-scattering processes in the atmosphere is crucial for accurately calculating the actual extinction coefficient.

Methods: We derive the relationships among the actual extinction coefficient, apparent extinction coefficient, and multiple-scattering factor based on the lidar equation and the parameterized lidar equation, laying a theoretical foundation for subsequent analysis. Then, we generate 10000 sets of simulated signals under typical weather conditions and construct a comprehensive dataset by combining these simulated signals with real measurement data. 
This ensures that our model has broad adaptability and accurately reflects the complexities in practical applications. After that, we perform data preprocessing to enhance the linear correlation between features and labels and train the temporal convolutional network (TCN). This neural network model can accurately estimate the atmospheric apparent extinction coefficient by analyzing lidar return signals. Based on the apparent extinction coefficient estimated by the TCN, we determine an initial scattering free path and conduct multiple-scattering simulations to obtain an initial multiple-scattering factor and simulated photon number. We then calculate the relative error between the simulated photon number and the theoretical photon number derived from lidar return signals. If the relative error exceeds a predetermined threshold, we correct the scattering free path using the multiple-scattering factor and repeat the multiple-scattering simulation. This iterative process continues until the error falls below the set threshold, yielding the final multiple-scattering factor. Finally, we substitute the estimated apparent extinction coefficient and final multiple-scattering factor into the derived relationships to accurately calculate the actual extinction coefficient.

Results and Discussions: Under low visibility conditions, the visibility calculated by our proposed method shows significant differences compared to the results without considering multiple-scattering effects. Simulation results show that at a visibility of 100 m, the initial accuracy of the multiple-scattering simulation is relatively low, with substantial deviations between the simulated photon number/multiple-scattering factor and theoretical value. However, as the number of iterations increases, the accuracy of the multiple-scattering simulation gradually improves, with the simulated photon number and multiple-scattering factors converging towards the theoretical values (Figs. 8 and 9). 
The mean actual extinction coefficient calculated by our proposed method is 29.45 km⁻¹, with a relative error of 1.70% compared to the theoretical value. In contrast, the mean actual extinction coefficient obtained using the Klett algorithm is 25.88 km⁻¹, with a relative error of 13.61%. Correspondingly, the average visibility calculated by our proposed method is 101.73 m, while the Klett algorithm yields a visibility of 115.76 m. The root mean square errors of the actual extinction coefficients calculated by our proposed method and the Klett algorithm relative to the theoretical values are 10.49 and 18.63, respectively. The results calculated by our proposed method are closer to the theoretical values, and the calculated visibility is more accurate (Fig. 10). At a visibility of 800 m, the multiple-scattering photon number is relatively low, and the effect of multiple scattering on the return signal is smaller (Fig. 11). Using our proposed method, only one iteration is required, and the return signal is nearly identical to the theoretical value, while the multiple-scattering factor approaches the theoretical value (Figs. 13 and 14). The mean actual extinction coefficient calculated by our proposed method is 3.73 km⁻¹, while that of the Klett algorithm is 3.68 km⁻¹. Compared to the theoretical value, the relative errors are 0.26% and 1.60%, respectively, with root mean square errors of 0.27 and 0.30. The average visibility calculated by our proposed method is 803.21 m, while the Klett algorithm gives 814.13 m. For the experimental signals, we select two actual lidar return signals, A and B, with measured visibilities of 1.41 and 4.44 km, respectively (Fig. 16). The visibility calculated by our proposed method for these signals is 1.23 and 5.93 km, while the Klett algorithm calculates 1.26 and 6.06 km. 
Compared to the measured values, the relative error for signal A is 2.44% for the Klett algorithm and 0.81% for our proposed method, a reduction of 1.63 percentage points. For signal B, the relative errors are 2.19% for the Klett algorithm and 1.01% for our proposed method, a reduction of 1.18 percentage points (Figs. 17 and 18). These results demonstrate that our proposed algorithm, which accounts for multiple-scattering effects, offers significant advantages over the Klett method, which does not consider multiple scattering, thus validating its effectiveness under multiple-scattering conditions.

Conclusions: We propose an iterative solution algorithm for atmospheric visibility based on the TCN and multiple-scattering simulation. This algorithm is used to solve the actual extinction coefficient and improve the accuracy of visibility inversion under multiple-scattering conditions. It estimates the apparent extinction coefficient through a TCN, then repeats multiple-scattering simulations to obtain multiple-scattering factors, and finally solves for the actual extinction coefficient to obtain atmospheric visibility. The simulation results show that under visibility conditions of 100 and 800 m, the algorithm we propose significantly improves the accuracy of visibility calculation compared to the case without considering multiple scattering. The comparison of measured signals and calculation results also confirms this conclusion. The advantage of our algorithm is that in estimating the apparent extinction coefficient, the TCN effectively avoids the cumulative error caused by the calculation error of the boundary value of the apparent extinction coefficient. In multiple-scattering simulations, iterative correction of the scattering free path is used to gradually improve simulation accuracy, ultimately enabling accurate calculation of multiple-scattering factors and actual extinction coefficients.
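
The link between the extinction coefficients and the visibilities reported above follows from the MOR definition in the Objective: with Beer-Lambert attenuation exp(−σV) = 0.05, the visibility is V = −ln(0.05)/σ ≈ 2.996/σ. The sketch below assumes a homogeneous path (a single mean extinction coefficient); the function name is illustrative.

```python
import math

def visibility_m(extinction_per_km, threshold=0.05):
    """Meteorological optical range in meters for a uniform extinction
    coefficient in km^-1, using the 5% flux threshold from the MOR definition."""
    return -math.log(threshold) / extinction_per_km * 1000.0

# Mean extinction coefficients quoted in the abstract (km^-1)
for sigma in (29.45, 25.88, 3.73, 3.68):
    print(f"sigma = {sigma:5.2f} km^-1 -> MOR ~ {visibility_m(sigma):.1f} m")
```

Applied to the mean coefficients 29.45 and 25.88 km⁻¹, this relation gives values within about 0.1 m of the reported 101.73 m and 115.76 m. The small residual is plausibly due to averaging visibilities rather than extinction coefficients, although the abstract does not say which was done.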

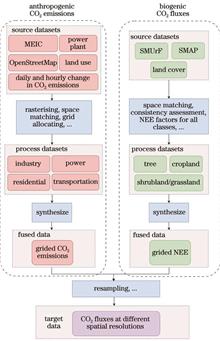

Objective: Urban areas contribute approximately 70% of global anthropogenic carbon dioxide (CO2) emissions, making them a key area in carbon monitoring efforts. The “top-down” approach, which uses measured atmospheric CO2 concentrations, allows for near real-time emission estimates on a global urban scale and serves as a crucial tool for verifying urban emission reductions. Currently, the prior estimates of urban CO2 fluxes in top-down assessments rely on data from the open-source data inventory for anthropogenic CO2 (ODIAC) and the vegetation photosynthesis and respiration model (VPRM). However, these prior fluxes possess high spatial uncertainty, resulting in significant bias in urban emission estimates and failing to meet the sub-kilometer resolution required for urban grids. In our study, we construct a high-resolution spatial and temporal dataset for urban CO2 fluxes by integrating multi-source data. We also evaluate the effect of this spatial optimization using column-averaged dry-air mole fraction of CO2 (XCO2) data from the orbiting carbon observatory-3 (OCO-3) satellite. The results indicate that using the optimized CO2 fluxes enables more accurate simulations of local CO2 concentration variations, achieving a closer match with observations. Our high-resolution urban CO2 flux dataset can contribute to reducing uncertainty in CO2 flux estimates and provide more accurate prior values for “top-down” urban emission estimates.

Methods: CO2 fluxes exhibit significant spatial dependencies. Anthropogenic emissions mainly come from fixed sources such as power plants, transportation networks, and industrial zones, while biogenic fluxes are concentrated in vegetation-covered areas like forests, croplands, and grasslands. To represent these spatial patterns, we use land cover types as proxies for CO2 fluxes. 
For anthropogenic CO2 emissions, we utilize datasets such as the global power plant database, OpenStreetMap, and the essential urban land use categories (EULUC), which offer detailed representations of emissions from power plants, industry, residential areas, and transportation networks. For biogenic CO2 fluxes, we select the WorldCover land cover dataset to distinguish key land cover types, including forests, croplands, and grasslands. The construction of CO2 flux grids involves specific methodologies for anthropogenic and biogenic fluxes. For anthropogenic emissions, we utilize sector-specific, grid-based emission data from the multi-resolution emission inventory for China (MEIC) and process spatial proxy data grid by grid to accurately allocate total emissions across geographic regions. For biogenic fluxes, we estimate flux factors for various vegetation types and integrate them with land use data to calculate precise flux values for each vegetation category. To validate the CO2 flux datasets, we adopt an indirect evaluation approach. We assess the accuracy of the constructed datasets by comparing observed and simulated CO2 concentrations. Simulations are carried out using the stochastic time-inverted Lagrangian transport (STILT) model, and the outputs are validated against XCO2 observations from the OCO-3 satellite. This approach provides a robust evaluation of the spatial representation of CO2 fluxes and their alignment with observed atmospheric CO2 distributions.

Results and Discussions: In our study, we take Hefei as a case study to develop a high-resolution urban CO2 flux grid with a spatial resolution of 0.002°×0.002° (Figs. 3 and 4). The constructed grid data effectively captures the detailed distribution characteristics of CO2 sources and sinks, which are not well represented in previous datasets. We compare the spatial patterns of the improved CO2 emissions with those from the MEIC and ODIAC datasets (Figs. 5 and 6). 
Additionally, we analyze the changes in biogenic CO2 fluxes before and after optimization using remote sensing imagery with a spatial resolution finer than 1 m (Fig. 8). To evaluate the effectiveness of the CO2 flux optimization, we employ the X-STILT model to simulate XCO2 concentrations based on both pre- and post-optimization CO2 flux data. These simulations are then validated against XCO2 observations from the OCO-3 satellite. The validation utilizes OCO-3 data from three observations: June 16, 2022 (Fig. 9), June 4, 2021 (Fig. 11), and October 11, 2020 (Fig. 12).

Conclusions: In the present study, we develop a high-resolution grid of urban anthropogenic and biogenic CO2 fluxes by integrating effective information from multi-source datasets with varying formats, spatial resolutions, and temporal coverage. We validate and evaluate the spatial optimization of CO2 fluxes using observational data from OCO-3. The analysis highlights pronounced local spatial heterogeneity in urban anthropogenic CO2 emissions. Strong point sources, such as power plants, and weaker sources, such as residential areas, lead to significantly different variations in local CO2 concentrations. Coarse-resolution emission data tend to average these differences in simulations, making it difficult to capture localized CO2 peaks. Compared to ODIAC data, the spatially optimized emission data substantially refine the urban CO2 emission distribution, transforming it from a “Gaussian-like” pattern to a “multi-centered” distribution. For biogenic CO2 fluxes, the optimized data successfully identify small-scale urban green spaces, enabling a more precise simulation of vegetation’s influence on local CO2 concentration dynamics. Using the WRF-XSTILT model, we compare simulations of XCO2 concentrations before and after optimization against OCO-3 observations. 
The results show significant improvements in all three validation cases: correlation coefficients increase from 0.26 to 0.46, from 0.62 to 0.73, and from 0.50 to 0.60, respectively, while biases decrease from 1.36×10⁻⁶ to 1.24×10⁻⁶, from 0.87×10⁻⁶ to 0.80×10⁻⁶, and from 0.80×10⁻⁶ to 0.73×10⁻⁶. These findings underscore the enhanced capability of the optimized data to accurately represent the spatial distribution of CO2 fluxes.
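The proxy-based allocation of anthropogenic emissions described in the Methods can be illustrated with a minimal sketch. It assumes the simplest proportional rule, in which each fine grid cell receives a share of a coarse-cell total equal to its share of the spatial proxy. The function name `allocate_emissions` and all numbers are hypothetical, and the study's actual sector-specific procedure may differ.

```python
def allocate_emissions(total_emission, proxy_weights):
    """Split a coarse-cell emission total across fine cells in proportion to a proxy.

    proxy_weights is a hypothetical list with one non-negative weight per fine cell
    (for example, road length or built-up area taken from a proxy dataset).
    """
    weight_sum = sum(proxy_weights)
    if weight_sum <= 0:
        raise ValueError("proxy weights must sum to a positive value")
    return [total_emission * w / weight_sum for w in proxy_weights]


# Hypothetical example: 100 emission units spread over four fine cells.
fine_cells = allocate_emissions(100.0, [0.0, 1.0, 3.0, 4.0])
print(fine_cells)
```

Because the shares sum to one, the allocation conserves the coarse-cell total, which is the property any such downscaling step needs to preserve.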

ObjectiveXinjiang, recognized as a crucial coal resource area and strategic reserve in China, possesses abundant coal resources. The Zhundong coalfield, a large-scale open-pit mining area within this region, significantly contributes to increased concentrations of light-absorbing aerosols due to its coal production activities and associated industrial processes. These activities also produce substantial amounts of black carbon (BC), which, through atmospheric transport, mixes with snow and ice, influencing glacier ablation in the Tianshan Mountains. While previous studies on the Zhundong coalfield have predominantly concentrated on the ecological pollution resulting from mining activities, they have overlooked the implications for climate and radiative forcing in the area. In this context, it is crucial to employ satellite remote sensing technology to analyze and assess the optical properties and radiative forcing effects of light-absorbing aerosols in the Zhundong coalfield region. Such an approach is significant for understanding the regional environmental and climatic impacts associated with the development of open-pit coal resources in the arid regions of western China.MethodsWe investigate the temporal and spatial characteristics of aerosol optical depth (AOD) in the Zhundong coalfield by utilizing MODIS aerosol product (MOD04_L2) data spanning from 2005 to 2020. To simulate aerosol particle size information, a Mie scattering model is employed under the “core-shell” assumption. An uncertainty interval of 0.03 is selected to estimate the possible range of particle sizes within each grid, constrained by maximum and minimum values. The intersection of these constraints is then used to calculate the optical parameters for various particle size combinations. Additionally, the influence of sand and dust aerosols is considered by setting the single scattering albedo (SSA) range for these aerosols between 0.93 and 0.96. 
The simulated extinction coefficient (σext) is used as a threshold value; any portion smaller than this threshold is excluded to quantify the concentration of local BC columns. Finally, the radiative forcing effect of light-absorbing aerosols in the Zhundong coalfield over the past decade is evaluated using the SBDART radiative transfer model.Results and DiscussionsThe AOD in the Zhundong coalfield exhibited pronounced spatial heterogeneity from 2005 to 2020, with high AOD values predominantly concentrated in the mining area and its surrounding regions (Fig. 2). Seasonal variations reveal the highest concentrations in spring and winter, followed by fall, with the lowest levels observed in summer. During spring and winter, AOD values generally exceed 0.15, except in certain desert areas. Interannual fluctuations in AOD are frequent, marked by significant turning points in 2010, 2012, and 2017 (Fig. 3), which indicates that coal production, energy restructuring, and capacity reduction policies have a significant effect on air quality in mining regions. The inter-monthly variation displays a distinct “U” pattern (Fig. 3), with AOD peaking at 0.27 in February, which highlights the substantial influence of anthropogenic activities on regional air quality. Dusty weather in spring emerges as a dominant factor. Overall, the temporal variation in AOD in the Zhundong coalfield reflects the combined effects of natural factors and human activities. In the Wucaiwan and Dajing mining areas, the range of BC number density is (1–3)×10¹⁸ grid⁻¹ (Fig. 6). In 2012, against the backdrop of China’s coal economic performance, open-pit mining was less affected by the decline in production growth due to its larger production capacity and lower costs, influenced by mining methods, climatic conditions, and economic activities. 
In contrast, shaft mining is more heavily affected by safety risks and environmental constraints, which may lead to production limitations, especially under strengthened policy and regulatory measures. As a result, there are greater fluctuations in BC number density in the Dajing mining area (Fig. 6). The range of BC mass concentration is 20–40 kg/grid, with seasonal variations largely consistent, although peak months differ. This suggests that BC mass concentration is closely related to particle aging and size (Fig. 7). Radiative forcing values at the top of the atmosphere, at the surface, and within the atmosphere show varying degrees of decrease between 2011 and 2017, followed by a gradual increase. This suggests that reducing emissions of light-absorbing aerosols from mining sites can effectively lower regional radiative forcing values in the context of reduced coal production (Fig. 10). Radiative forcing values are higher in March and April during spring, when BC is aged and mixed with other aerosol components through mutual encapsulation, which results in more complex microphysical-chemical properties. This process enhances the absorption capacity of BC for both short- and long-wave radiation (Fig. 10).ConclusionsWe analyze the overall change in AOD in the Zhundong coalfield from 2005 to 2020 using the MODIS aerosol dataset. We integrate a Mie scattering model to simulate optical parameters under various particle size combinations and constrain these simulations with single scattering albedo (SSA) observations from MODIS. This approach allows us to determine the eligible particle size information and optical parameters, enabling the calculation of BC mass concentration within the atmospheric column of the Zhundong coalfield. Subsequently, the area’s radiative forcing is estimated using the SBDART radiative transfer model. The findings reveal several key insights. 
1) The changes in AOD are closely linked to policy implementation and economic activities within the coal mining area. Interannual variations indicate that AOD peaked in 2012 and subsequently declined, which suggests that policies and economic activities significantly affect AOD levels. Seasonally, AOD is higher in spring and winter and lower in summer. The unique topographic and meteorological conditions facilitate the transport of BC from the mining area to other regions, which highlights the combined effects of seasonal meteorological conditions and human activities. 2) The column concentration of light-absorbing aerosols in the coal mine area is affected by both anthropogenic activities and meteorological conditions, particularly during sandy and dusty weather. A comparison of column concentrations between the Wucaiwan and Dajing mines shows that open-pit mining adapts more effectively in 2012, given the context of China’s coal economic operations, whereas shaft mining may face greater challenges. 3) By examining the changes in AOD and light-absorbing aerosols, it is evident that reducing emissions of light-absorbing aerosols from coal mining areas can effectively decrease regional radiative forcing values in the short term. Inter-monthly variations reveal that atmospheric radiative forcing trends differ from those at the surface and the top of the atmosphere, with the latter two being closely related to the optical properties of light-absorbing aerosols. In spring, the frequent occurrence of sand and dust facilitates the mixing of BC with other substances, forming light-absorbing aerosols with a “core-shell” structure. This significantly enhances the light-absorbing capacity of BC, thereby increasing radiative forcing.
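The SSA-constrained selection of particle size combinations mentioned in the Methods can be sketched as a simple filter. This is an illustration under stated assumptions and not the study's implementation. The candidate tuples below are hypothetical placeholders rather than Mie-model outputs, the function name `select_candidates` is invented, and only the 0.03 uncertainty interval comes from the text.

```python
def select_candidates(candidates, observed_ssa, tolerance=0.03):
    """Keep size combinations whose simulated SSA lies within the tolerance band.

    Each candidate is a hypothetical tuple:
    (core_radius_um, shell_radius_um, simulated_ssa).
    """
    lower = observed_ssa - tolerance
    upper = observed_ssa + tolerance
    return [c for c in candidates if lower <= c[2] <= upper]


# Placeholder look-up entries; a real table would come from a core-shell Mie code.
lookup = [
    (0.05, 0.10, 0.78),
    (0.05, 0.15, 0.86),
    (0.08, 0.20, 0.90),
    (0.10, 0.30, 0.95),
]
eligible = select_candidates(lookup, observed_ssa=0.88)
print(eligible)
```

Only the combinations that survive this filter would then feed the optical-parameter and column-concentration calculations.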

ObjectiveCarbon dioxide (CO2) is the most significant anthropogenic greenhouse gas in the atmosphere. Accurately assessing CO2 emissions is critical for developing effective and feasible reduction policies to mitigate global warming. Spaceborne platforms equipped with active and passive remote sensing instruments enable high-precision global column-averaged dry air mole fraction of CO2 (XCO2) observations, supporting the “top-down” approach to carbon emission estimation. Among these, spaceborne integrated path differential absorption (IPDA) lidar offers resilience to aerosol interference and, with its high pulse repetition frequency, can achieve global XCO2 observations with high temporal and spatial resolution. However, due to single observation errors, the data often need to be processed using the sliding average algorithm, which diminishes the high temporal and spatial resolution advantages of spaceborne IPDA lidar. Therefore, we propose using the Kalman smoothing algorithm to reconstruct the high temporal and spatial resolution lidar XCO2 observation from spaceborne IPDA data. Simulation experiments validate the algorithm’s filtering performance, and its application to point-source emission monitoring highlights its potential for high-resolution XCO2 monitoring. These findings underscore the significance of the Kalman smoothing algorithm in enhancing global carbon emission quantification using spaceborne IPDA lidar data.MethodsBased on the high temporal and spatial resolution advantage of spaceborne IPDA lidar XCO2 data and its offline acquisition characteristics, we propose using the Kalman smoothing algorithm to reconstruct high temporal and spatial resolution XCO2 observation results. First, a pseudo-true value sequence is constructed based on XCO2 data simulated by weather research and forecasting model with greenhouse gases module (WRF-GHG). Various levels of observation errors are then superimposed on this sequence to create a pseudo-observation sequence. 
The filtering performance of the Kalman smoothing algorithm is tested with different state transfer matrices, and the optimal matrix is selected. Comparative experiments show that the Kalman smoothing algorithm outperforms the sliding average algorithm in terms of filtering performance. Finally, both the Kalman smoothing and sliding average algorithms are used to estimate the carbon emission rate of the same point source at the same time, confirming the Kalman smoothing algorithm’s applicability in high-resolution XCO2 monitoring.Results and DiscussionsSimulation experiments first determine the state transfer matrix for the Kalman smoothing algorithm, followed by a comparison of its filtering performance with the sliding average algorithm, which uses a spatial resolution of 50 km. The results show that the Kalman smoothing algorithm not only retains the original observation’s temporal and spatial resolution (0.05 s, 337.5 m), but also improves the mean absolute error (MAE) by 9.46%, reduces the root mean square error (RMSE) by 13.39%, and increases the correlation coefficient by 6.46%, compared to the sliding average algorithm with a temporal and spatial resolution of 7.4 s and 50 km. The monitoring capabilities of the Kalman smoothing algorithm and the sliding average algorithm for the same point source emissions are further compared. The XCO2 enhancement, obtained using the Kalman smoothing algorithm, estimates the point source emission rate at that moment to be 843.2 kg/s, with a correlation of 0.98 between the XCO2 enhancement and the Gaussian point source model simulation results. In contrast, the sliding average algorithm estimates the point source emission rate at that moment to be 1876.8 kg/s, with a lower correlation of 0.81 between the XCO2 enhancement and the Gaussian point source model simulation results. According to the emission inventory data for this point source, the annual average emission rate is 1100 kg/s. 
The instantaneous emission rate calculated by the Kalman smoothing algorithm is closer to this annual average, and the XCO2 enhancement shows a higher correlation. Therefore, it can be concluded that the Kalman smoothing algorithm offers superior point source emission monitoring capabilities compared to the sliding average algorithm.ConclusionsIn response to the demand for high temporal and spatial resolution in the application of XCO2 observation results from spaceborne IPDA lidar, we propose the use of the Kalman smoothing algorithm to process the original XCO2 data. We discuss the selection of the state transfer matrix in the Kalman smoothing algorithm and compare its filtering performance with that of the commonly used sliding average algorithm. Compared to the sliding average algorithm, which has a temporal and spatial resolution of 7.4 s and 50 km, the Kalman smoothing algorithm reduces the MAE relative to the true value by 9.46%, reduces the RMSE by 13.39%, and increases the correlation coefficient by 6.46%. We therefore conclude that the Kalman smoothing algorithm provides better filtering performance than the sliding average algorithm while retaining the original high temporal and spatial resolution (0.05 s, 337.5 m). The application of the Kalman smoothing algorithm in point source emission monitoring is also tested. The instantaneous emission rate calculated by the Kalman smoothing algorithm is closer to the annual average, and the XCO2 enhancement shows a higher correlation. This shows that the Kalman smoothing algorithm can be effectively applied to high temporal and spatial resolution XCO2 observation scenarios. High-resolution XCO2 observations are crucial for assessing regional carbon sources and sinks, and the XCO2 observations reconstructed using the Kalman smoothing algorithm can provide vital data support.
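The comparison between Kalman smoothing and a sliding average can be sketched on synthetic data. The example below is a minimal sketch and not the authors' configuration. It assumes a scalar random-walk state (state transfer factor of 1), a Rauch-Tung-Striebel backward pass, and hypothetical noise variances. The 149-sample window roughly corresponds to 50 km at 337.5 m sampling. The function names and the synthetic XCO2 track are invented for illustration.

```python
import math
import random


def kalman_smooth(observations, process_var, obs_var, transition=1.0):
    """Scalar Kalman filter followed by a Rauch-Tung-Striebel backward pass."""
    n = len(observations)
    x_filt = [observations[0]] + [0.0] * (n - 1)
    p_filt = [obs_var] + [0.0] * (n - 1)
    x_pred = [0.0] * n
    p_pred = [0.0] * n
    for k in range(1, n):
        # Forward pass: predict, then correct with the new observation.
        x_pred[k] = transition * x_filt[k - 1]
        p_pred[k] = transition ** 2 * p_filt[k - 1] + process_var
        gain = p_pred[k] / (p_pred[k] + obs_var)
        x_filt[k] = x_pred[k] + gain * (observations[k] - x_pred[k])
        p_filt[k] = (1.0 - gain) * p_pred[k]
    x_smooth = x_filt[:]
    for k in range(n - 2, -1, -1):
        # Backward pass: refine each estimate using later observations.
        c = p_filt[k] * transition / p_pred[k + 1]
        x_smooth[k] = x_filt[k] + c * (x_smooth[k + 1] - x_pred[k + 1])
    return x_smooth


def moving_average(values, window):
    """Centered sliding average; the window shrinks at the edges."""
    half = window // 2
    out = []
    for i in range(len(values)):
        segment = values[max(0, i - half): i + half + 1]
        out.append(sum(segment) / len(segment))
    return out


def rmse(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


# Synthetic track: a smooth background with a narrow plume-like enhancement.
rng = random.Random(42)
truth = [410.0 + 3.0 * math.exp(-0.5 * ((i - 200) / 15.0) ** 2) for i in range(400)]
observed = [t + rng.gauss(0.0, 0.7) for t in truth]

smoothed = kalman_smooth(observed, process_var=0.01, obs_var=0.49)
averaged = moving_average(observed, window=149)
print(rmse(observed, truth), rmse(smoothed, truth), rmse(averaged, truth))
```

The smoother returns one estimate per original sample, so the native sampling interval is kept, whereas a wide averaging window tends to flatten a narrow enhancement. How the two compare on real data depends on the chosen variances and window.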

ObjectiveThe atmospheric profile is a critical component in radiative transfer calculations, and constructing an atmospheric model that accurately reflects regional atmospheric conditions is essential to ensure the precision of these calculations. In this paper, we aim to explore atmospheric profile variations and improve the accuracy of radiative transfer calculations by proposing a novel method for constructing atmospheric models.MethodsWe analyze the vertical distribution and variation patterns of key atmospheric parameters, including temperature, water vapor, pressure, carbon dioxide, ozone, and methane. A new approach based on K-means clustering and random forest regression is developed to construct atmospheric profiles. Data sources include ERA5, WACCM, and CarbonTracker, covering historical atmospheric profile data over the past two decades. To address the resolution differences among these data sources, spatiotemporal interpolation and height normalization methods are applied. We focus on the eastern region of China, where temperature, pressure, water vapor, and ozone profiles are clustered to reveal their seasonal and regional variation patterns. Subsequently, carbon dioxide and methane profiles are reconstructed using newly processed data.Results and DiscussionsThe self-developed atmospheric model is compared with the 1976 US standard atmosphere using MODTRAN software to simulate spectral data. The simulated spectra are then compared with actual measurements from the FengYun satellite. The results show that the self-developed model improves simulation accuracy by 11.2% in January and 10.5% in July compared to the 1976 US standard atmosphere model, indicating that the proposed model better approximates real atmospheric conditions (Fig. 5). 
This method offers a new approach for constructing atmospheric profiles for radiative transfer calculations.ConclusionsThe proposed method, which combines K-means clustering and random forest regression, significantly improves the accuracy of radiative transfer calculations by better capturing regional and seasonal variations in atmospheric profiles. This approach not only enhances the precision of radiative transfer simulations but also provides a valuable tool for atmospheric research and applications.
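The clustering step can be sketched with a plain K-means (Lloyd's algorithm) applied to profile vectors. This is a minimal illustration under stated assumptions. The three-level temperature profiles are hypothetical values, the function name `kmeans` is invented, and the random forest regression stage of the method is not reproduced here.

```python
import random


def kmeans(points, k, iterations=50, seed=0):
    """Lloyd's algorithm: alternate nearest-center assignment and center update."""
    rng = random.Random(seed)
    centers = [list(p) for p in rng.sample(points, k)]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assign every profile to its nearest center (squared Euclidean distance).
        labels = [
            min(range(k), key=lambda j: sum((a - b) ** 2 for a, b in zip(p, centers[j])))
            for p in points
        ]
        # Move each center to the mean of the profiles assigned to it.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                centers[j] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centers


# Hypothetical temperature profiles (K) at three heights: three winter, three summer.
profiles = [
    [270.0, 250.0, 220.0], [272.0, 251.0, 221.0], [269.0, 249.0, 219.0],
    [300.0, 275.0, 230.0], [302.0, 276.0, 231.0], [299.0, 274.0, 229.0],
]
labels, centers = kmeans(profiles, k=2)
print(labels)
```

Each resulting center acts as a representative profile for its cluster, which is the role that seasonal or regional model atmospheres play in a radiative transfer calculation.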

ObjectiveSeawater depth information is of great significance for marine navigation, environmental monitoring, and seabed topography research. However, traditional depth measurement methods face difficulties in specific areas, such as remote waters and shallow regions. Satellite remote sensing depth measurement offers advantages such as wide coverage and cost-effectiveness, which makes it particularly suitable for the continuous monitoring of shallow marine areas and other regions that are difficult to reach with conventional field measurement methods. As a result, it has gained considerable attention. However, most existing remote sensing depth inversion models only use remote sensing reflectance as input features, which neglects the effect of water environmental factors on the results. To improve the accuracy and adaptability of depth inversion models, we introduce the chromaticity angle as a new feature and combine machine learning techniques to enhance the precision and applicability of existing remote sensing depth inversion methods, thereby providing effective technical support for remote sensing depth inversion in shallow marine areas.MethodsWe introduce the chromaticity angle as a new feature and combine it with remote sensing reflectance data to develop a shallow water depth inversion model using three machine learning algorithms: random forest (RF), extreme gradient boost (XGB), and support vector regression (SVR). First, Sentinel-2 satellite imagery is used to collect water reflectance data, and the chromaticity angle is calculated as an additional feature. This angle effectively captures the optical properties of the water, compensating for the limitations of using only reflectance in traditional remote sensing methods. Then, machine learning models are built using both the reflectance and chromaticity angle data for depth inversion. 
RF handles nonlinear relationships by constructing multiple decision trees, while SVR excels in dealing with small sample sizes and high-dimensional data. XGB, an advanced ensemble algorithm, iteratively optimizes the model’s performance for complex regression tasks. The inversion accuracy of the models is assessed using metrics such as root mean square error (RMSE), mean absolute error (MAE), and mean relative error (MRE). Additionally, Shapley additive explanations (SHAP) values are applied to analyze the contribution of each feature variable to the model’s output, which further confirms the significant role of the chromaticity angle in improving inversion accuracy.Results and DiscussionsAfter combining the chromaticity angle with the remote sensing reflectance data, the accuracy of the shallow water depth inversion model is effectively improved. The comparative analysis of the three machine learning algorithms indicates that the improved XGB model performs the best, with an RMSE of 1.11 m, MAE of 0.81 m, and MRE of 11.05% (Table 2), which demonstrates a clear advantage over traditional empirical algorithms. Additionally, the XGB model exhibits robust inversion performance in areas with steep depth gradients (Fig. 9). The scatter plot demonstrates that the chromaticity angle enhances the correlation between predicted and observed values and improves the coefficient of determination R² (Fig. 5). Residual analysis shows that the application of the chromaticity angle feature results in a more concentrated distribution of residuals, with smaller deviations between predicted and observed values (Figs. 6 and 7). Compared to other depth ranges, the effect of the chromaticity angle is more significant in the deeper water range of 15–25 m (Table 3). SHAP analysis quantifies the contribution of each input variable to the model, which confirms that the chromaticity angle feature is a crucial predictor of water depth and has a more substantial impact in deeper waters (Fig. 
10).ConclusionsWe propose a shallow water depth inversion method assisted by the chromaticity angle based on machine learning. The chromaticity angle is calculated from the remote sensing reflectance of the red (R), green (G), and blue (B) bands as a new inversion feature to improve the accuracy of satellite bathymetry. The method is applied and validated using three machine learning models: RF, XGB, and SVR. The results show that incorporating the chromaticity angle as an input feature can effectively enhance the predictive performance of the machine learning models. Among them, the improvement in the RF model is the most significant, while the XGB model, combined with the chromaticity angle, achieves the best performance. Compared to other machine learning algorithms and traditional empirical methods, this approach demonstrates clear advantages and higher fitting accuracy in areas with steep depth changes, which exhibits excellent water depth inversion performance. A depth-segment analysis reveals that the effect of the chromaticity angle is more pronounced in waters deeper than 15 m. Additionally, since the calculation of the chromaticity angle is based on widely available remote sensing imagery data, the proposed method has great potential for application in different geographic regions.
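One common way to derive a chromaticity (hue) angle from red, green, and blue reflectance is sketched below. It is an assumption-laden illustration, because the abstract does not give the exact formula. The sketch assumes the CIE 1931 RGB-to-XYZ conversion, the equal-energy white point (1/3, 1/3), and an angle measured counterclockwise from the positive x axis. The function name and the sample reflectance values are hypothetical.

```python
import math

# CIE 1931 RGB -> XYZ coefficients; each row sums to 5.6508, so equal R, G, B
# maps to the equal-energy white point x = y = 1/3.
CIE_RGB_TO_XYZ = (
    (2.7689, 1.7517, 1.1302),
    (1.0000, 4.5907, 0.0601),
    (0.0000, 0.0565, 5.5943),
)


def chromaticity_angle(red, green, blue):
    """Return the hue angle in degrees, in [0, 360), from three band reflectances."""
    x_tri, y_tri, z_tri = (
        cr * red + cg * green + cb * blue for cr, cg, cb in CIE_RGB_TO_XYZ
    )
    total = x_tri + y_tri + z_tri
    if total <= 0:
        raise ValueError("reflectances must produce a positive tristimulus sum")
    x = x_tri / total
    y = y_tri / total
    # Angle of the chromaticity point relative to the white point.
    return math.degrees(math.atan2(y - 1.0 / 3.0, x - 1.0 / 3.0)) % 360.0


# Hypothetical Rrs values (sr^-1) for the red, green, and blue bands of one pixel.
angle = chromaticity_angle(0.004, 0.012, 0.009)
print(angle)
```

The angle would then be appended to the per-pixel reflectance vector as one extra input feature for the regression models.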

ObjectiveThe atmospheric turbulence simulation device is used to study the propagation effects of laser in atmospheric turbulence. Most previous turbulence simulation devices generate turbulence by adjusting parameters such as temperature difference and wind speed. Although they successfully simulate the basic characteristics of atmospheric turbulence, the environmental parameters that can be measured and controlled are limited, and the automatic control capability is relatively weak. To generate a stable and controllable turbulent state in the simulation chamber, it is necessary to create stable boundary conditions. Therefore, the measurement and control system of the atmospheric turbulence simulation device must have high precision, stable control capabilities, and intelligent characteristics. At the same time, a model linking turbulence intensity and control parameters should be established based on the measured data.MethodsTo simulate atmospheric turbulence under various conditions, we develop a turbulence simulation chamber that integrates control functions for temperature difference, wind speed, and air pressure. The chamber is equipped with hot and cold plates to create temperature differences, fans to adjust wind speed, and a vacuum pump to create a low air pressure environment by sealing the chamber. Based on the principles of turbulence generation, we design a system that considers both convective and hot air characteristics to simulate high-frequency turbulence. To meet control requirements, we develop an integrated measurement and control system based on a programmable logic controller (PLC) and host computer software (Fig. 3). This system combines various sensors and actuators to monitor temperature, humidity, air pressure, and wind speed throughout the simulation chamber. Additionally, it can be integrated with specialized test equipment to measure the atmospheric coherence length along the integral path within the turbulence simulation chamber. 
We calculate and analyze the control accuracy and uncertainty of the system. By measuring turbulence intensity under different temperature differences and air pressure conditions, we build a model that describes the relationship between turbulence intensity, air pressure, and temperature difference. The accuracy of this model is analyzed based on the measured data.Results and DiscussionsInitially, we design the turbulence simulation chamber and its measurement and control system based on the principles of turbulence generation. We then analyze the control errors and uncertainties of the control variables (Figs. 5, 6, and 7). The results indicate that the absolute value of control errors for different temperature differences is less than 1.40%, and the absolute value of control errors for different air pressures is less than 0.425%. The control uncertainty of r0 under different temperature differences is limited to a maximum of 0.0490 cm. Additionally, we establish a log-linear relationship between turbulence intensity and air pressure (Fig. 9), which can be used to calculate the input temperature difference required to achieve a specific r0 at different air pressures. The correlation coefficient between the fitted values based on the turbulence model and the measured values exceeds 0.99, and the root mean square errors do not exceed 0.10854.Conclusions1) The measurement and control system has functions for measurement, control, real-time display, and data storage, with high automation and control accuracy, which effectively ensures that the turbulence state in the turbulence simulation chamber remains stable and controllable. 2) The turbulence intensity in the turbulence simulation chamber mainly depends on the temperature difference. As the temperature difference grows, the turbulence intensity becomes stronger. The two exhibit a clear logarithmic linear relationship. 
At the same time, the turbulence intensity and the chamber air pressure also show a logarithmic linear relationship. 3) By logarithmic linear fitting of the measured data under different temperature differences and air pressure conditions, we build a turbulence state control function model for the simulation chamber. This model can be used to predict the required plate temperature difference within the allowable error range, based on the target turbulence intensity to be simulated under specific air pressure conditions.
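The control-function idea in conclusion 3) can be sketched as a least-squares fit followed by an inversion for the required temperature difference. The functional form used here, ln(I) = a + b·ln(ΔT) + c·ln(P), is an assumption made for illustration, since the abstract only states that the relationships are logarithmic linear. The coefficients, data, and function names are hypothetical.

```python
import math


def solve_linear(matrix, rhs):
    """Solve a small linear system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    m = [row[:] + [b] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def fit_log_linear(delta_t, pressure, intensity):
    """Least-squares fit of ln(I) = a + b*ln(dT) + c*ln(P) via the normal equations."""
    rows = [(1.0, math.log(t), math.log(p)) for t, p in zip(delta_t, pressure)]
    target = [math.log(v) for v in intensity]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * y for r, y in zip(rows, target)) for i in range(3)]
    return solve_linear(ata, atb)


def required_delta_t(target_intensity, pressure, coeffs):
    """Invert the fitted model for the plate temperature difference."""
    a, b, c = coeffs
    return math.exp((math.log(target_intensity) - a - c * math.log(pressure)) / b)


# Synthetic, noise-free measurements generated from hypothetical coefficients.
true_a, true_b, true_c = -3.0, 1.5, 0.8
dts, ps, vals = [], [], []
for dt in (10.0, 20.0, 40.0, 80.0):
    for p in (20.0, 50.0, 100.0):
        dts.append(dt)
        ps.append(p)
        vals.append(math.exp(true_a) * dt ** true_b * p ** true_c)

coeffs = fit_log_linear(dts, ps, vals)
print(coeffs, required_delta_t(vals[4], ps[4], coeffs))
```

With real measurements the fit residuals, rather than a perfect round trip, would indicate whether the assumed form is adequate.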

ObjectiveAccurate monitoring of global carbon dioxide (CO2) column concentrations (XCO2) is crucial for understanding carbon cycles and supporting climate mitigation policies. However, current methods, including satellite observations and atmospheric transport models, each face significant limitations. Satellite-based XCO2 products are hindered by limited spatial-temporal coverage and retrieval uncertainties caused by cloud interference and surface reflectance variability. Meanwhile, chemical transport models, such as GEOS-Chem, often exhibit systematic biases due to uncertainties in emission inventories and parameterizations. To overcome these challenges, we aim to develop a high-precision, spatiotemporally continuous global XCO2 dataset by assimilating multi-source satellite observations into the GEOS-Chem model using an ensemble Kalman filter (EnKF). This approach is designed to meet the urgent need for reliable, high-resolution CO2 monitoring systems that can support carbon flux inversion and global carbon budget assessments.MethodsWe integrate three satellite-based XCO2 products (TanSat, OCO-2, and GOSAT) into the GEOS-Chem v14.2.3 chemical transport model using an ensemble Kalman filter with covariance localization. The assimilation system is designed to generate a global XCO2 dataset with a 3-hourly temporal resolution and a 2.0°×2.5° spatial resolution for the period from March 1, 2017 to February 28, 2018. Satellite data are preprocessed with quality screening and weighted averaging based on normalized prior uncertainties [Eqs. (3)–(6)]. Model output from GEOS-Chem is vertically integrated to obtain column-averaged concentrations [Eqs. (1)–(2)], and a 20-member ensemble is constructed using perturbed initial states to represent model uncertainty. Covariance localization is applied using a Schur product approach [Eqs. (10)–(11)] to mitigate spurious correlations in the high-dimensional state space. The Kalman gain and state update equations [Eqs. 
(12)–(14)] ensure physical consistency during the assimilation. The final dataset is validated against ground-based TCCON measurements from 16 globally distributed sites.Results and DiscussionsWe propose and implement a data assimilation framework tailored for multi-source satellite observations, effectively addressing the challenges of data fusion and error propagation within the ensemble Kalman filter. The results demonstrate that integrating multi-source satellite data significantly enhances the spatiotemporal coverage of global XCO2 observations, which effectively fills previous observational gaps and substantially increases the volume of assimilable data (Figs. 2 and 3). Validation shows that the GEOS-Chem model generally underestimates XCO2 concentrations, with overestimations in polar regions, consistent with previous studies. By assimilating multi-source satellite observations, these systematic biases are effectively corrected: the model’s RMSE is reduced from 1.27×10⁻⁶ to 1.19×10⁻⁶, and the mean bias improves from -0.42×10⁻⁶ to -0.28×10⁻⁶ (Fig. 4). Moreover, seasonal deviations are notably mitigated (Figs. 6 and 9), and the model’s performance under extreme climatic conditions becomes more consistent with actual observations (Fig. 10).ConclusionsWe develop a global XCO2 reanalysis dataset by assimilating multi-source satellite observations into the GEOS-Chem model using an ensemble Kalman filter. The assimilation significantly enhances spatial-temporal data coverage, reduces systematic model biases, and improves agreement with ground-based measurements. The final dataset not only preserves realistic seasonal XCO2 dynamics but also captures extreme meteorological and geographic influences more accurately. While limitations remain due to restricted satellite data availability and the potential introduction of new observational errors, we provide a solid foundation for future carbon flux inversion studies and support enhanced climate policy implementation. 
Further improvements can be achieved by expanding domestic satellite participation and developing higher-resolution assimilation frameworks.
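The ensemble Kalman filter update with Schur-product localization can be sketched for a single scalar observation on a one-dimensional grid. This is a toy illustration under stated assumptions and not the GEOS-Chem assimilation system. It assumes a stochastic (perturbed-observation) update, a Gaussian taper as the localization function, and hypothetical grid, ensemble, and error values. Only the 20-member ensemble size is taken from the text.

```python
import math
import random


def enkf_update(ensemble, obs_index, obs_value, obs_var, loc_length, rng):
    """Assimilate one scalar observation of state element obs_index."""
    n_members = len(ensemble)
    n_state = len(ensemble[0])
    means = [sum(m[i] for m in ensemble) / n_members for i in range(n_state)]
    # Sample covariance between every state element and the observed element.
    cov = [
        sum((m[i] - means[i]) * (m[obs_index] - means[obs_index]) for m in ensemble)
        / (n_members - 1)
        for i in range(n_state)
    ]
    # Schur (element-wise) product with a distance-dependent taper.
    taper = [math.exp(-0.5 * ((i - obs_index) / loc_length) ** 2) for i in range(n_state)]
    gain = [taper[i] * cov[i] / (cov[obs_index] + obs_var) for i in range(n_state)]
    updated = []
    for member in ensemble:
        # Each member sees its own perturbed copy of the observation.
        perturbed = obs_value + rng.gauss(0.0, math.sqrt(obs_var))
        innovation = perturbed - member[obs_index]
        updated.append([member[i] + gain[i] * innovation for i in range(n_state)])
    return updated


def column_mean(ensemble, index):
    return sum(m[index] for m in ensemble) / len(ensemble)


rng = random.Random(1)
prior = []
for _ in range(20):
    offset = rng.gauss(0.0, 1.0)  # shared offset makes neighboring cells correlated
    prior.append([405.0 + offset + rng.gauss(0.0, 0.2) for _ in range(10)])

posterior = enkf_update(prior, obs_index=0, obs_value=408.0, obs_var=0.25,
                        loc_length=2.0, rng=rng)
print(column_mean(prior, 0), column_mean(posterior, 0))
print(column_mean(prior, 9), column_mean(posterior, 9))
```

Without the taper, the sampled correlation between distant cells would let one observation shift the whole domain. With it, the correction fades with distance from the observed cell.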

ObjectiveHydroxyl radical (·OH), the most significant oxidant in the atmosphere, initiates oxidation reactions of most natural and anthropogenic trace gas species, determines the atmospheric lifetimes of these pollutants, and regulates the atmosphere’s self-cleaning capacity. Time-resolved measurements of ·OH provide an essential tool for researching chemical reaction kinetics and field measurements of atmospheric ·OH total reactivity, which is crucial for understanding ozone formation and secondary organic aerosols. The pump–probe technique represents a vital method for time-resolved ·OH measurements. This technique employs a 266 nm UV photolysis laser to generate ·OH and initiate its chemical reaction with reactants while synchronously detecting ·OH in another optical path. Using an optical multi-pass cell (MPC) to increase the overlap path length between the detection optical path and the UV photolysis beam effectively enhances pump–probe detection sensitivity. Several research groups have implemented Herriott-type multi-pass cells for pump–probe applications. Although these multi-pass cells provide powerful tools for pump–probe technology, their effective utilization efficiencies remain relatively low compared to designed path lengths, limiting further improvements in detection sensitivity. This study develops a high-efficiency Herriott pump–probe cell and constructs a pump–probe system based on the cell for time-resolved ·OH measurements.MethodsThe spot distribution pattern of the Herriott cell is investigated. A pump–probe MPC with an optical path utilization efficiency of 75.4% is developed. Based on the cell, a Faraday rotation spectroscopy system for time-resolved measurement of ·OH is constructed (Fig. 5). ·OH radicals are generated through the photolysis of O3 and H2O at 266 nm. The system uses a 2.8 μm continuous-wave distributed feedback (cw-DFB) laser as the probe light source. 
The Q(1.5e) line of ·OH at 3568.523 cm⁻¹ is selected as the detection absorption line, with a line intensity of S=9.023×10⁻²⁰ cm⁻¹/(molecule·cm⁻²). By measuring the beam waist position of the laser (Fig. 4) and matching it with the multi-pass cell, the problem of beam divergence is solved. The stability and detection precision are evaluated by Allan deviation analysis. The kinetic rate constant for the reaction between ·OH and CH4 is measured. The dynamic monitoring performance of the system is tested in a photochemical smog chamber. Additionally, the system is applied to real atmospheric field observation.Results and DiscussionsThe distributions of the reflection spots on the mirror surface and at the cell center position are simulated under reflection angles of 50.4°, 79.2°, 122.4°, and 158.4°, respectively (Fig. 2). When the reflection angle is set to 158.4°, the system achieves an effective absorption path length of 28.5 m, with an overlapping efficiency of 75.4%. The red light test demonstrates that positioning the laser beam waist outside the multi-pass cell results in significant beam dispersion after several reflections, preventing the formation of a clear and complete spot pattern (Fig. 3). When the laser beam waist matches the cell center, a distribution of 25 reflection spots, including the light-through hole, is obtained on the mirror surface with relatively uniform spot sizes. The Allan deviation analysis (Fig. 7) of zero air measurement indicates a measurement precision of 0.22 s⁻¹ with an acquisition time of 60 s, improving to 0.14 s⁻¹ and 0.11 s⁻¹ at averaging times of 180 s and 300 s, respectively. The statistical histogram exhibits a normal distribution, indicating system stability without obvious drift. The measured reaction rate constant for ·OH+CH4 is 6.49(-1.1, +1.3)×10⁻¹⁵ cm³ molecule⁻¹ s⁻¹ (Fig. 8). The time series of kOH' monitored in the smog chamber correlates well with calculated values from the measured CO concentration (Fig. 
9), demonstrating good agreement in both magnitude and trend, with a slope of 0.95 and a linear correlation coefficient of R² = 0.97. The diurnal variation of atmospheric kOH' is measured in the Shouxian area in May 2024 (Fig. 10). The daily average value of kOH' is 18.4 s⁻¹, with peaks of 19.6 s⁻¹ at 06:00 and 21.1 s⁻¹ at 19:00. A trough of 15.8 s⁻¹ occurs at 14:00.

Conclusions

A pump–probe MPC with an optical base length of 77.2 cm achieves an overlap efficiency of 75.4%. The ray propagation in the cell is confirmed using red light. When the waist of the incident laser beam is precisely aligned with the cell center, the beam maintains consistent propagation over multiple reflections, producing 25 uniformly distributed spots on the mirror surface. The beam waist position of the 2.8 μm cw-DFB laser is determined and aligned with the cell center. A Faraday rotation spectroscopy system is established for time-resolved ·OH measurements. Allan deviation analysis gives a measurement precision of 0.22 s⁻¹ (1σ, 60 s) for the ·OH decay rate at 100 mbar. The measured reaction rate constant for ·OH + CH₄ agrees well with the values recommended by the International Union of Pure and Applied Chemistry (IUPAC). In dynamic measurements of kOH' in the smog chamber, the system’s deviation remains below 5%. The diurnal variation of atmospheric kOH' is monitored in the Shouxian area in May 2025.
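
The rate-constant measurement summarized above relies on pseudo-first-order analysis: with CH₄ in large excess, the ·OH signal decays as exp(−k't), and k' increases linearly with the CH₄ concentration, with a slope equal to the bimolecular rate constant. The following is a minimal sketch of that two-step fit, not the authors' processing code. The function names, the background loss rate `K0`, the CH₄ concentrations, and the time grid are hypothetical; the synthetic decays are generated from the rate constant quoted in the abstract only to check that the fit recovers it.

```python
import math

def fit_line(x, y):
    """Ordinary least-squares fit y = a + b*x; returns (a, b)."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    return my - b * mx, b

def decay_rate(times, signal):
    """Pseudo-first-order decay rate k' (s^-1) from the slope of ln(signal) vs. time."""
    _, slope = fit_line(times, [math.log(s) for s in signal])
    return -slope

# Hypothetical conditions, chosen only for illustration.
K_BI = 6.49e-15                         # cm^3 molecule^-1 s^-1 (value quoted in the abstract)
K0 = 5.0                                # s^-1, assumed background loss without CH4
ch4 = [0.0, 1e15, 2e15, 3e15, 4e15]     # molecule cm^-3, assumed
times = [i * 0.005 for i in range(40)]  # s, assumed sampling grid

# Step 1: one decay rate per CH4 concentration.
k_primes = []
for c in ch4:
    k_true = K0 + K_BI * c
    signal = [math.exp(-k_true * t) for t in times]  # noise-free synthetic decay
    k_primes.append(decay_rate(times, signal))

# Step 2: the slope of k' vs. [CH4] is the bimolecular rate constant.
k0_fit, k_fit = fit_line(ch4, k_primes)
print(f"k = {k_fit:.3e} cm^3 molecule^-1 s^-1, k0 = {k0_fit:.2f} s^-1")
```

With real data, the decays carry noise and an offset, so a weighted or nonlinear fit may be preferable to the log-linear step used here.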

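The Allan deviation analysis reported in the ·OH abstract above can be illustrated with a short sketch. The function below computes the non-overlapping Allan deviation of a time series for a given averaging window; the function name, the one-sample-per-second spacing, and the white-noise test record are assumptions made for illustration and do not reproduce the measured data. For purely white noise the deviation falls as the inverse square root of the averaging time, which would take 0.22 s⁻¹ at 60 s to about 0.13 s⁻¹ at 180 s and 0.10 s⁻¹ at 300 s, close to the reported 0.14 s⁻¹ and 0.11 s⁻¹.

```python
import math
import random

def allan_deviation(y, m):
    """Non-overlapping Allan deviation of series y for an averaging
    window of m consecutive samples."""
    n_blocks = len(y) // m
    if n_blocks < 2:
        raise ValueError("need at least two full averaging blocks")
    # Average the series in consecutive blocks of m samples.
    means = [sum(y[i * m:(i + 1) * m]) / m for i in range(n_blocks)]
    # Half the mean squared difference between adjacent block averages.
    sq_diffs = [(b - a) ** 2 for a, b in zip(means, means[1:])]
    return math.sqrt(0.5 * sum(sq_diffs) / len(sq_diffs))

random.seed(0)
# Hypothetical zero-air record: unit-variance white noise, one sample per second.
series = [random.gauss(0.0, 1.0) for _ in range(36000)]
for tau in (60, 180, 300):
    print(tau, round(allan_deviation(series, tau), 4))
```

A curve that stops decreasing or turns upward at long averaging times would indicate drift, which the abstract reports as absent.
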
Objective

Compared with traditional acoustic communication technologies, underwater vertical wireless optical communication (UVWOC) offers several advantages, including high bandwidth, low latency, compact device size, and energy efficiency. These qualities make it highly promising for high-speed data transmission, multimedia content distribution, and real-time marine communication. However, the performance of UVWOC is significantly affected by the combined effects of absorption, scattering, and turbulence in seawater, all of which vary considerably with depth. Existing simulation methods treat these effects separately: Monte Carlo (MC) techniques are commonly used to model absorption and scattering, while phase screen approaches are typically employed to simulate turbulence. However, optical turbulence fundamentally arises from random variations in the refractive index along the light propagation path, driven by depth-dependent fluctuations in temperature and salinity. These environmental parameters are strongly stratified in the ocean, leading to complex vertical heterogeneity that conventional decoupled modeling approaches cannot adequately capture. In this study, we develop an integrated photon transport model that captures the continuous interplay between particulate interactions and refractive turbulence in stratified marine environments. By unifying these physical processes within a single MC framework, we enable accurate simulation of optical signal degradation across the entire water column. The model incorporates empirical data from oceanic sensors to ensure a realistic representation of vertical stratification effects.

Methods

The MC simulation framework developed in this study employs a multi-layer photon transport model to characterize light propagation in stratified underwater optical channels.
Photon packets are initialized with spatial and angular distributions that match practical laser diode outputs, with beam divergence angles ranging from 0.1 to 50 mrad. During propagation, each photon packet undergoes energy attenuation and trajectory deviation due to the combined effects of absorption, scattering, and turbulence. The model implements wavelength-dependent absorption coefficients derived from empirical seawater databases, and scattering is calculated using the Henyey–Greenstein phase function. Turbulence is simulated using a refractive cell approach, which vertically discretizes the water column into layers 0.1–1 m thick. Each layer contains spherical turbulence elements, with refractive index fluctuations determined by local temperature and salinity gradients. The receiver module has a 0.2 m aperture diameter and a 120° field-of-view constraint. Photon tracking continues until the packet is detected within the receiver criteria, its energy falls below the detection threshold, or it diverges beyond the effective propagation range. Model validation employs three complementary approaches: first, confirming that simulated light intensity distributions under pure turbulence conditions conform to lognormal statistics; second, comparing pure turbulence channels against composite channels with scattering artificially disabled (scattering coefficient set to 0); third, comparing with field measurements from South China Sea waters.

Results and Discussions

The simulation results demonstrate three key characteristics of underwater vertical optical channels through a comprehensive parametric analysis. Under pure turbulence conditions, scintillation index analysis reveals that the link distance contributes approximately 60% to the overall turbulence intensity, followed by refractive index variations (~30%) and layer spacing (~10%) (Fig. 6).
The research defines threshold criteria for weak, moderate, and strong turbulence regimes (Fig. 7). Path loss analysis shows that absorption and scattering dominate signal attenuation, with coastal waters exhibiting a 10 dB higher loss than clear oceanic waters, while turbulence introduces an additional 1 dB penalty due to beam wander and distortion (Fig. 10). Comparative analysis between pure turbulence and composite channels reveals significant nonlinear interactions between scattering and turbulence effects. In turbid coastal waters (scattering coefficient >1.5 m⁻¹), multiple scattering amplifies turbulence-induced signal fluctuations by 35%–40% compared to clear ocean conditions, as quantified by the enhanced scintillation index values. The vertical stratification effects are particularly pronounced in thermocline regions (100–700 m depth), where temperature and salinity gradients cause scintillation indices to fluctuate between 0.8 and 1.3, compared to the more stable mixed layer (0–100 m, σSI = 0.04–0.08) and deep-water regions (>700 m, σSI = 0.05–0.1) (Fig. 12). The model’s accuracy is confirmed through excellent agreement (R² > 0.9) with lognormal distributions in turbulence-only scenarios and successful reproduction of field measurement data from South China Sea campaigns, particularly in predicting the nonlinear relationship between water depth and signal degradation.

Conclusions

We develop an MC-based simulation framework for underwater vertical wireless optical communication (UVWOC) that systematically integrates absorption, scattering, and turbulence effects in stratified marine environments. The model demonstrates high fidelity in characterizing channel behavior, with validation results confirming its accuracy in predicting both turbulence-induced signal fluctuations (scintillation index) and beam wander effects.
Key findings reveal that link distance (L) dominates turbulence intensity, contributing approximately 60% to the observed scintillation index (σSI), while refractive index variation (Δn) and turbulent layer spacing (Δz) account for 30% and 10%, respectively. The research defines threshold criteria for different turbulence regimes: weak turbulence (σSI < 0.15) occurs when Δn < 1.8×10⁻⁴ and Δz > 0.50 m, primarily in optically stable surface mixed layers; moderate turbulence (0.15 ≤ σSI ≤ 1) emerges when Δn lies between 1.8×10⁻⁴ and 2.6×10⁻⁴ with Δz = 0.25–0.50 m, typically in thermocline transition zones; and strong turbulence (σSI > 1) dominates when Δn > 2.6×10⁻⁴ and Δz < 0.25 m. In composite channel simulations, absorption and scattering are identified as the primary drivers of power attenuation, with coastal waters exhibiting 10 dB higher path loss than clear oceanic conditions. The integration of real-world Argo float temperature-salinity profiles confirms the model’s applicability across distinct oceanic layers, namely the mixed layer (0–100 m), the thermocline (100–700 m), and deep water (>700 m), where turbulence characteristics vary significantly with depth. This framework offers a robust tool for optimizing UVWOC systems in challenging scenarios such as deep-sea exploration and cross-layer communication. Future enhancements will incorporate machine learning for real-time turbulence prediction and expand experimental validation through controlled underwater trials to further improve the model’s predictive reliability in dynamic marine environments.
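
The scintillation index and the regime thresholds quoted above can be made concrete with a short sketch. It uses the standard definition σSI = ⟨I²⟩/⟨I⟩² − 1 and the σSI boundaries stated in the conclusions (0.15 and 1). The function names and the lognormal intensity generator are assumptions for illustration; the generator only mimics the lognormal statistics that the abstract uses for validation under pure turbulence and is not the authors' photon transport model.

```python
import math
import random

def scintillation_index(intensities):
    """sigma_SI = <I^2> / <I>^2 - 1 for a list of received intensities."""
    n = len(intensities)
    mean_i = sum(intensities) / n
    mean_i2 = sum(i * i for i in intensities) / n
    return mean_i2 / mean_i ** 2 - 1.0

def turbulence_regime(sigma_si):
    """Regime label using the sigma_SI thresholds quoted in the conclusions."""
    if sigma_si < 0.15:
        return "weak"
    if sigma_si <= 1.0:
        return "moderate"
    return "strong"

def lognormal_samples(sigma_si, n, rng):
    """Unit-mean lognormal intensities whose scintillation index is sigma_si."""
    var_ln = math.log(1.0 + sigma_si)  # variance of ln(I)
    mu = -0.5 * var_ln                 # keeps the mean intensity at 1
    return [rng.lognormvariate(mu, math.sqrt(var_ln)) for _ in range(n)]

rng = random.Random(1)
for target in (0.05, 0.5):             # hypothetical target indices
    si = scintillation_index(lognormal_samples(target, 100000, rng))
    print(target, round(si, 3), turbulence_regime(si))
```

In a full simulation the intensity list would come from the photon packets collected at the receiver rather than from a random generator.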

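The scattering step of the Monte Carlo model in the UVWOC abstract above uses the Henyey–Greenstein phase function. A minimal sketch of how a scattering angle and a free path length are commonly drawn in such simulations is given below. It uses the standard inverse-CDF formula for the Henyey–Greenstein distribution; the function names and the asymmetry factor `G = 0.924` are assumptions (the abstract does not state the value used), and the sketch omits the turbulence cells and receiver logic.

```python
import math
import random

def sample_hg_cos_theta(g, u):
    """Inverse-CDF sample of cos(theta) from the Henyey-Greenstein phase
    function with asymmetry factor g, for a uniform random number u in [0, 1)."""
    if abs(g) < 1e-6:
        return 2.0 * u - 1.0  # isotropic limit
    frac = (1.0 - g * g) / (1.0 - g + 2.0 * g * u)
    return (1.0 + g * g - frac * frac) / (2.0 * g)

def step_length(c, u):
    """Free path (m) to the next interaction for attenuation coefficient c (1/m)."""
    return -math.log(1.0 - u) / c

rng = random.Random(42)
G = 0.924  # assumed asymmetry factor, not taken from the source
cosines = [sample_hg_cos_theta(G, rng.random()) for _ in range(100000)]
mean_cos = sum(cosines) / len(cosines)
print(round(mean_cos, 3))  # the sample mean of cos(theta) should approach G
```

The mean cosine of the sampled angles converging to the asymmetry factor is a quick sanity check on the sampler before it is embedded in a full photon-tracking loop.
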
Objective

Thin cloud contamination in remote sensing images presents a significant challenge to data quality, resulting in imprecise analysis and interpretation across applications including land cover classification, environmental monitoring, and disaster assessment. Conventional thin cloud removal methods typically depend on feature extraction at a single scale and inadequately capture the multi-scale characteristics of clouds, leading to suboptimal declouding results. Furthermore, deep learning-based approaches, particularly those utilizing generative adversarial networks (GANs), frequently suffer from detail loss and texture blur in generated images and demonstrate limited capability in modeling local features accurately. To address these challenges, this study introduces a novel GAN-based method incorporating a convolutional block attention module (CBAM) and a multi-scale attention mechanism. The proposed approach aims to enhance the accuracy of thin cloud removal while maintaining the spectral and spatial details of the original imagery, thus improving the overall quality of remote sensing data.

Methods

The proposed framework integrates the GAN architecture with CBAM and a multi-scale attention mechanism for effective thin cloud removal. The generator network is designed to capture global and local features of the input image, enabling the model to restore detailed surface information while removing thin clouds effectively. The discriminator network assesses the authenticity of the generated image, ensuring high similarity to the real cloud-free image. The multi-scale attention mechanism implements parallel convolution branches with independent parameter optimization strategies. This approach enables differentiated feature expression, enhancing the model’s capacity to process cloud contamination and underlying surface features at various scales. Furthermore, CBAM is integrated for enhanced feature extraction at different scales.
CBAM applies sequential channel and spatial attention to feature maps, adaptively emphasizing important features while suppressing irrelevant noise. This integration of multi-scale attention and CBAM substantially improves the model’s capability to restore image brightness and recover fine details. Comprehensive experiments are conducted on the RICE1 dataset and a custom remote sensing cloud removal dataset based on Sentinel-2 imagery. The model’s performance is evaluated using quantitative metrics including peak signal-to-noise ratio (PSNR) and structural similarity index (SSIM). The proposed method is compared against several state-of-the-art thin cloud removal techniques, including Haze Removal, FFA-Net, C2PNet, CGAN, and SpA-GAN, to demonstrate its effectiveness.

Results and Discussions

Experimental results show that the proposed method surpasses traditional thin cloud removal techniques in both visual quality and quantitative metrics. The integration of CBAM with the multi-scale attention mechanism substantially enhances the model’s ability to recover detailed surface information while effectively removing thin clouds (Figs. 8–11). Comparative analysis reveals that the proposed method achieves a PSNR of 31.321 dB and an SSIM of 0.894, exceeding the performance of state-of-the-art methods (Tables 1 and 2). The generated images are further analyzed based on the average brightness of the RGB channels (Figs. 11 and 12). The results indicate that the cloud-free images generated by the proposed method most closely match the real images in terms of RGB channel brightness, validating the method’s effectiveness in preserving spectral details. An ablation study examines the synergistic contribution of the two attention mechanisms (Table 4 and Fig. 14). The results confirm that their combination significantly enhances model performance, demonstrating their complementary role in improving image quality.
Specifically, the multi-scale attention mechanism facilitates feature capture at different scales, while CBAM enhances feature extraction accuracy by focusing on the channel and spatial dimensions.

Conclusions

This study presents a novel method for thin cloud removal from remote sensing images based on a GAN enhanced with CBAM and a multi-scale attention mechanism. The proposed approach enhances cloud removal accuracy while preserving the spectral and spatial details of the original image. Experimental results validate the effectiveness and robustness of the proposed method, demonstrating its superior performance compared to state-of-the-art techniques in terms of visual quality and quantitative metrics. The integration of CBAM and the multi-scale attention mechanism proves instrumental in achieving these results, underscoring their significance in enhancing model performance. The proposed method offers a promising solution for improving remote sensing data quality. Future research will concentrate on optimizing the model architecture and extending its applicability to other types of cloud contamination and additional remote sensing datasets.
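
Two of the evaluation quantities named in this abstract, PSNR and the average brightness of the RGB channels, are simple enough to sketch directly. The code below uses the standard PSNR definition, 10·log10(MAX² / MSE), and a per-channel mean; the function names and the tiny three-pixel images are hypothetical and serve only to show the calculation, not to reproduce the reported 31.321 dB. SSIM is omitted because it needs windowed local statistics.

```python
import math

def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two images given as
    equally long flat lists of pixel values."""
    if len(reference) != len(restored):
        raise ValueError("images must have the same number of values")
    mse = sum((r - s) ** 2 for r, s in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)

def mean_rgb(pixels):
    """Average brightness of each channel for a list of (R, G, B) tuples."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))

# Hypothetical three-pixel images, for illustration only.
clear = [(120, 130, 90), (60, 80, 70), (200, 190, 180)]
declouded = [(118, 131, 92), (63, 79, 70), (198, 192, 181)]

flat_clear = [v for p in clear for v in p]
flat_declouded = [v for p in declouded for v in p]
print(round(psnr(flat_clear, flat_declouded), 2), "dB")
print(mean_rgb(clear), mean_rgb(declouded))
```

Comparing the two `mean_rgb` tuples channel by channel mirrors the brightness analysis described in the results, where a smaller gap to the real cloud-free image indicates better spectral preservation.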