Objective: Among the myriad factors influencing climate change, the interaction between clouds and aerosols is the most uncertain element in global climate dynamics and is widely acknowledged as a formidable challenge in atmospheric science. High-spectral-resolution lidar (HSRL) observations of the vertical distribution of clouds and aerosols, which are independent of assumptions about cloud vertical structure and lidar ratio, hold immense scientific potential for future research on cloud-aerosol interactions. In HSRL systems, active frequency locking technology is typically employed to match the emitted laser wavelength with the etalon, thus ensuring system parameter stability. However, changes in the working environment or hardware failures can reduce the locking precision and significantly degrade the high-spectral-resolution detection performance. Therefore, real-time calibration of molecular transmittance, correction of detection results, and enhancement of detection accuracy are of paramount significance.

Methods: In the HSRL system, the molecular transmittance is determined jointly by multiple components such as the emitted laser and the etalon. Ground-based lidar observations are susceptible to environmental changes and therefore require timely calibration for accurate inversion. The atmosphere contains a multitude of components such as clouds and aerosols, necessitating stratified identification. Distinguishing between clouds, aerosols, and clean areas in the atmosphere lays the foundation for calibrating molecular transmittance. We introduce an online method for calibrating molecular transmittance, which uses stratified identification to avoid interference from clouds and aerosols.
Since the proposed HSRL can directly invert atmospheric optical property parameters without assuming a lidar ratio, classification by a scattering ratio threshold offers unique advantages.

Results and Discussions: The experiment selects calibration cases under three distinct atmospheric conditions in Beijing: clear, dusty, and cloudy. In each of these atmospheric states, molecular transmittance calibration can be performed after atmospheric stratified identification, which demonstrates that the method can calibrate molecular transmittance in various weather conditions. To verify the accuracy of the HSRL detection results, we compare the HSRL with the widely employed sun photometer. The observation results in Beijing are inverted with both fixed molecular transmittance and online calibration parameters. When fixed parameters are used for inversion, the correlation coefficient between the detection results of the two instruments is 0.92, and the root mean square error is 0.136. After correction with the proposed method, some inversion errors are effectively corrected: the correlation coefficient reaches 0.94, and the root mean square error decreases to 0.078. The corrected inversion results are thus more consistent with those of the sun photometer.

Conclusions: We first analyze the molecular transmittance error based on the fundamental principles and detection methods of HSRL. In an HSRL system where an iodine molecule absorption cell serves as the spectral etalon, the systematic error in molecular transmittance primarily results from the frequency fluctuation of the emitted laser and temperature instability in the molecular absorption cell. We then propose an online calibration method for molecular transmittance to rectify the influence of system instability.
Unlike calibration methods that rely on a fixed clean atmospheric area, this method exploits the ability of HSRL to invert the backscatter ratio simply and accurately, and employs the backscatter ratio as the basis for stratified identification. After clean areas in the atmosphere are selected, the molecular transmittance is calibrated using these clean areas. As a result, transmittance calibration is no longer limited to clear weather, which supplements existing calibration methods under non-clear weather conditions. Finally, the observation results of the HSRL system in Beijing are analyzed to demonstrate the effectiveness of this method in enhancing detection accuracy.
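
The clean-layer selection and calibration step described above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a simplified two-channel model in which the combined channel is proportional to the total backscatter and the molecular channel to the transmittance-weighted backscatter, so that their ratio in aerosol-free bins approximates the molecular transmittance. The function names, the threshold of 1.05, and the minimum bin count are hypothetical choices for illustration.

```python
def clean_bins(scattering_ratio, threshold=1.05):
    """Return indices of range bins whose scattering ratio is below the threshold.

    The threshold value is an assumption, not a value taken from the paper.
    """
    return [i for i, r in enumerate(scattering_ratio) if r < threshold]


def calibrate_molecular_transmittance(combined, molecular, scattering_ratio,
                                      threshold=1.05, min_bins=3):
    """Estimate molecular transmittance from clean (aerosol-free) range bins.

    Assumes the two channels are already gain-normalised, so that in clean
    air molecular / combined is approximately the molecular transmittance.
    Returns None when too few clean bins are available.
    """
    ratios = [molecular[i] / combined[i]
              for i in clean_bins(scattering_ratio, threshold)
              if combined[i] > 0]
    if len(ratios) < min_bins:
        return None
    return sum(ratios) / len(ratios)
```

In practice the scattering ratio itself depends on the transmittance, so a sketch like this would start from the previous calibration value and update it as new clean bins are found.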

Objective: Clouds play a crucial role as intermediary factors in maintaining the balance of atmospheric radiation energy and the water cycle. The particle size distribution (PSD) and the optical and microphysical properties of clouds are intricately linked. Therefore, precise determination of PSD is pivotal for analyzing the interactions among different atmospheric components. Polarized remote sensing, a novel atmospheric detection technology, can use multi-directional observation information to retrieve the PSD of water clouds. However, current methods overlook sensor scattering angle coverage and actual cloud characteristics. The fixed-resolution sampling method within the field of view (FOV) neglects the influence of sensor imaging characteristics and cloud heterogeneity. Therefore, enhancing the accuracy of water cloud PSD inversion based on sensor imaging and cloud characteristics is important for atmospheric research.

Methods: In PSD retrieval research using polarized multi-angle observation data, the selection of inversion scale significantly influences the number of available observation angles and the cloud’s heterogeneity. To address these limitations, we propose a dynamic scale PSD retrieval method based on multi-angle polarized data, leveraging the polarized radiation characteristics of water clouds and radiation transmission theory. We conduct a quantitative evaluation of retrieval feasibility at various scales, taking into account satellite imaging geometry and cloud characteristics. Our method applies an optimal pixel merging strategy on a pixel-by-pixel basis to improve inversion resolution while maintaining accuracy, and is ultimately applied to directional polarization camera (DPC) observation data.
Results indicate that, unlike the fixed retrieval scale of 25 pixel×25 pixel used in the POLDER (polarization and directionality of the Earth’s reflectance) product, our method dynamically adjusts the inversion scale between 1 pixel×1 pixel and 7 pixel×7 pixel, leading to improved retrieval resolution. The optimization strategy for inversion scale in this study thus aims to strike the best balance between inversion success rate and accuracy by selecting the scale dynamically on a pixel-by-pixel basis. Tailored to the imaging characteristics of domestically produced DPC data, we devise the technical flowchart depicted in Fig. 1. Initially, we establish a polarized scattering phase function library for various water cloud droplet PSDs. By considering the number of observed angles within the water cloud “rainbow” effect among DPC observations, we determine the initial inversion scale. We then iteratively optimize the inversion resolution based on the number of observed angles and cloud attribute information within the scale. Finally, by leveraging multi-angle polarized observation data, we achieve the inversion of water cloud droplet size distributions at the optimal inversion scale.

Results and Discussions: Compared with moderate resolution imaging spectroradiometer (MODIS) cloud effective particle radius products, the spatial distribution shows good consistency. As depicted in Fig. 8, the inversion results of overlapping areas between MOD06_L2.A2022068.0220.061.20220 and DPC are contrasted within the case study region. Figures 8(a) and 8(b) show that the values and distributions of cloud effective particle radius from DPC and MODIS are remarkably similar. However, Fig. 8(c) reveals substantial disparities in inversion values between the two, primarily in fragmented cloud regions, whereas differences in stable cloud cluster areas are negligible. In Fig. 
9, we perform a quantitative statistical analysis of the inversion results within overlapping areas. According to the fitted regression equations, our inversion results yield smaller values for cloud effective particle radius than MODIS products, especially for radii of 5–12 μm. This trend aligns with comparisons between POLDER and MODIS. For larger particles, both DPC and inversion results surpass those of MODIS, possibly due to lower sensitivity of polarization to larger particles, leading to increased inversion errors for this particle size range. In histogram analysis, the proportion of inversion results with errors less than 2.05 μm exceeds 50%. Considering the significant differences in imaging time between DPC and MODIS, substantial shifts in cloud position, variations in shape, and disparities in sensor resolution and inversion methods, significant errors in pixel-by-pixel comparisons are expected, and these deviations are acceptable. The analyses therefore indicate that our method can yield more detailed inversion results while maintaining high accuracy.

Conclusions: The dynamic inversion resolution method improves upon conventional techniques by considering the variations in scattering angle coverage across different regions and the effect of cloud structures on satellite wide-FOV imaging. By carefully considering observational conditions and the real-time state of clouds at the pixel level, this method avoids the loss of accuracy and success rate that stems from arbitrary resolution selection in PSD inversion. Additionally, it reduces uncertainties from geometric variations in multi-angle imaging and cloud heterogeneity during inversion. Consequently, our study provides significant benefits in enhancing the accuracy and success rate of cloud PSD retrieval. In conclusion, our research explores ways to enhance the efficiency of utilizing domestic multi-angle polarized data and improve the accuracy of PSD inversion.
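
The per-pixel scale selection can be sketched as a window that grows until enough observation angles fall inside the cloud "rainbow" region. The sketch below is a simplified illustration, not the published algorithm: the scattering-angle range of 135° to 165°, the minimum angle count, and the restriction to odd window sizes are all assumptions, and the cloud-homogeneity check mentioned in the abstract is omitted.

```python
RAINBOW_RANGE = (135.0, 165.0)  # assumed scattering-angle range in degrees


def count_rainbow_angles(angles, row, col, half, rng=RAINBOW_RANGE):
    """Count valid view angles inside the rainbow range in a square window.

    angles[r][c] is a list of scattering angles (or None for invalid views)
    for the pixel at row r, column c; the window is (2*half+1) pixels wide
    and is clipped at the image border.
    """
    n_rows, n_cols = len(angles), len(angles[0])
    count = 0
    for r in range(max(0, row - half), min(n_rows, row + half + 1)):
        for c in range(max(0, col - half), min(n_cols, col + half + 1)):
            count += sum(1 for a in angles[r][c]
                         if a is not None and rng[0] <= a <= rng[1])
    return count


def select_scale(angles, row, col, min_angles=7, max_size=7):
    """Return the smallest odd window size (1, 3, 5, 7) with enough angles.

    Returns None when even the largest window does not reach min_angles,
    i.e. the retrieval is considered infeasible for this pixel.
    """
    for size in range(1, max_size + 1, 2):
        if count_rainbow_angles(angles, row, col, size // 2) >= min_angles:
            return size
    return None
```

A fuller version would also reject windows that mix cloud phases or contain strongly heterogeneous cloud, which is where the cloud attribute information enters.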

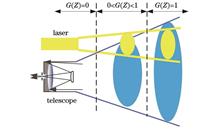

Objective: As a new type of active remote sensing equipment, lidar is increasingly widely used in the measurement of atmospheric components such as aerosols, water vapor, and ozone. Raman lidar used for aerosol and water vapor detection has outstanding advantages such as high detection accuracy, high spatiotemporal resolution, and real-time measurement capabilities. It is suitable for various mobile platforms such as vehicle-mounted and airborne systems and has become one of the main technical means for accurately detecting the distribution of atmospheric aerosols and water vapor. The receiving field of view of a lidar cannot completely coincide with the laser beam at close range. The laser beam gradually enters the receiving field of view, so the echo signal received by the lidar at close range is only a partial echo signal of the laser beam. To describe this effect, a geometric overlap factor, abbreviated as the geometric factor, is defined. Due to the influence of the geometric factor, the measurement results of lidar in the close-range geometric factor area are inaccurate. The closer the distance, the more significant this effect becomes. Since atmospheric water vapor is mainly distributed in the lower troposphere, obtaining accurate water vapor distribution profiles with lidar requires calibrating and calculating the geometric factor. This article focuses on the situation, encountered in the practical application of aerosol water vapor Raman lidar, where the receiving telescope’s field of view is partially obstructed by obstacles during horizontal experimental measurement of the geometric factor. We have made some improvements to the geometric factor correction method.

Methods: We propose an improved geometric factor correction method to solve the problem of partial occlusion of the telescope’s receiving field of view in horizontal experimental measurements with aerosol water vapor Raman lidar.
This method is based on the experimental method commonly used for geometric factor correction, in which the lidar measures along the horizontal direction under horizontally uniform atmospheric conditions. Improvements have been made to the experimental scheme and data processing methods. Firstly, a shading device is used to completely block the lower half of the telescope’s field of view (Fig. 2). The position of the shading device can be flexibly adjusted according to the occlusion by the object, ensuring that the unobstructed part can be fully received along the path. The improved experimental plan is as follows: ① a shading device is used to cover half of the receiving field of view of the telescope, followed by horizontal measurement with the lidar in the azimuth direction under good visibility conditions; ② the pitch angle is adjusted to 90° with the shading device kept in place, so that the occlusion state is unchanged, and a set of vertical measurements is taken with the same parameters; ③ the shading device is quickly removed to perform another set of vertical measurements under the same conditions. To obtain the geometric factor of the lidar before the method improvement, range-squared correction is applied to the echo signal measured horizontally by the lidar in step ①. An appropriate linear range is then selected for fitting [Fig. 4(a)], and the ratio of the measured signal to the fitted signal is used to determine the geometric factor [Fig. 4(b)]. Since the occlusion state of the telescope remains unchanged between the horizontal measurement in step ① and the vertical measurement in step ②, the geometric factor obtained in step ① can be used to correct the vertical occluded measurement echo signal in step ② (Fig. 5). In step ③, the shading device is quickly removed to perform a set of vertical measurements with the same parameters as in step ②. The interval between the two vertical measurements is very short, allowing the assumption that the atmospheric state remains unchanged.
The echo signal of the vertical unobstructed measurement without geometric factor correction in step ③ and the echo signal of the vertical occluded measurement with geometric factor correction in step ② are plotted together (Fig. 6). Normalizing the ratio of the two signals provides the true and accurate geometric factor of the aerosol water vapor Raman lidar (Fig. 7).

Results and Discussions: Continuous atmospheric observation experiments are conducted using a self-developed aerosol water vapor Raman lidar system, and the aerosol extinction coefficient profiles before and after geometric factor correction with the improved method are inverted (Fig. 10). We compare the lidar optical thickness at 532 nm, calculated before and after the improvement of the geometric factor correction method, with continuous solar radiometer measurements of optical thickness at 550 nm taken at the same time and place (Fig. 11). The correlation analysis shows that, with the improved geometric factor correction method, the correlation coefficient between the lidar and solar radiometer measurements is as high as 0.9779 (Fig. 12), indicating good consistency between the two. The relative error between the improved lidar optical thickness (532 nm) and the solar radiometer measurements (550 nm) is within 10%, with an average relative error of 3.81% and a maximum relative error of 8.23%. Before the method improvement, the average relative error is 8.34%, and the maximum relative error is 18.26%. In terms of average relative error, the improved method is 2.19 times as accurate as the original method (8.34%/3.81%).
The reliability of the lidar measurement results and the rationality and accuracy of the improved geometric factor correction method have been fully verified.

Conclusions: We calculate and analyze the correction effect of the proposed improved geometric factor correction method. The results indicate that this method can accurately calculate the geometric factors of Raman lidar systems under partial occlusion conditions. After correction with the geometric factor obtained by the improved method, the lidar measurement results are accurate and reliable. The improved geometric factor correction method has reference value for the practical application of aerosol water vapor Raman lidar systems.
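
The data processing in steps ① to ③ can be illustrated with a short sketch. It assumes the standard single-scattering lidar equation in a horizontally homogeneous atmosphere, where the logarithm of the range-squared-corrected signal is linear in range once overlap is complete. The function names and the choice of fit interval are hypothetical, and normalising the step ③/step ② ratio by a single far-range bin is an assumption rather than the authors' stated procedure.

```python
import math


def linear_fit(x, y):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def geometric_factor(ranges, signal, fit_start, fit_stop):
    """Geometric factor from a horizontal measurement (step 1).

    Fits ln(P * r^2) linearly over [fit_start, fit_stop], where overlap is
    assumed complete, and returns measured / fitted for every range bin.
    """
    log_prr = [math.log(p * r * r) for r, p in zip(ranges, signal)]
    xs = [r for r in ranges if fit_start <= r <= fit_stop]
    ys = [v for r, v in zip(ranges, log_prr) if fit_start <= r <= fit_stop]
    slope, intercept = linear_fit(xs, ys)
    return [math.exp(v - (slope * r + intercept))
            for r, v in zip(ranges, log_prr)]


def normalized_ratio(unobstructed, corrected_occluded, far_index):
    """Ratio of the step 3 signal to the corrected step 2 signal,
    normalised to 1 at a far-range bin (assumed to have full overlap)."""
    ratio = [u / c for u, c in zip(unobstructed, corrected_occluded)]
    return [x / ratio[far_index] for x in ratio]
```

The fit interval matters: if it reaches into the incomplete-overlap region, the fitted slope is biased and the recovered factor is distorted at all ranges.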

Objective: High spatiotemporal resolution atmospheric wind field detection has important applications in pollution transport and diffusion, extreme weather monitoring, numerical weather forecasting, wind resource assessment, and other areas. Coherent Doppler lidar, as an active laser remote sensing device, acquires vertical-structure information on the vector wind field at high spatiotemporal resolution. However, in practical applications, factors such as platform or power supply stability and weather conditions can lead to missing wind profiles, limiting the application scope of wind-sensing lidar. Deep learning methods based on historical data modeling have been widely used in wind field prediction, and the long short-term memory (LSTM) network shows good performance in this task. However, most studies focus on one-dimensional temporal or spatial wind fields, while atmospheric wind fields exhibit both temporal and vertical spatial characteristics. Doppler lidar, as a high spatiotemporal resolution atmospheric wind field detection tool, obtains spatiotemporal two-dimensional wind field information. Therefore, we propose a wind profile prediction method that applies a bidirectional long short-term memory (Bi-LSTM) model to lidar wind field detection. The aim is to fully utilize the spatiotemporal two-dimensional wind field data observed by the lidar, train a temporal Bi-LSTM model to capture the temporal variation characteristics of wind profiles, predict future wind profiles, interpolate missing wind profiles, and acquire more continuous wind field information.

Methods: Our study focuses on Doppler lidar atmospheric wind field detection experiments on Juehua Island, Liaoning Province, China. We utilize complete wind profile data for modeling and validation to predict and interpolate deficient wind profiles detected by the lidar.
Previous complete wind profile data segments serve as the training and validation sets to establish wind profile prediction models based on a time-series Bi-LSTM model and a non-time-series convolutional neural network (CNN) model for the zonal component u and meridional component v of the wind profiles. We train the models using the same parameter settings, including step size, number of iterations, loss function, and optimization algorithm. We evaluate the wind profile prediction performance of the Bi-LSTM and CNN models using the coefficient of determination (R²), root mean square error (RMSE), and mean absolute error (MAE). The Bi-LSTM model, which shows superior prediction performance on the validation wind profiles, is then used for deficient wind profile prediction and interpolation to obtain more continuous wind field information.

Results and Discussions: Based on the evaluation results of wind profile prediction (Fig. 4), the Bi-LSTM model shows similar trends and ranges in the performance evaluation metrics R², RMSE, and MAE for different look-back steps. As a temporal network, the Bi-LSTM model exhibits consistent performance across different look-back steps, indicating that wind profiles have short-term or long-term temporal dependencies that allow prediction based on past wind profiles at various time steps. With an increase in prediction time steps, errors accumulate gradually. After the 16th time step iteration, the model’s predictive capability rapidly declines, with R² values for predicting the u and v components falling below 0.5, indicating an inability to accurately forecast wind profiles beyond that point. This suggests that the Bi-LSTM model demonstrates good short-term predictive ability for the next 15 wind profiles (within the next 2.5 h). Comparing the wind profile prediction performance of the temporal Bi-LSTM model with the non-temporal CNN model, the box plot analysis (Fig. 
5) reveals that the CNN model shows greater variability in R² values for wind profile prediction across different look-backs, indicating a more pronounced influence of the look-back parameter on wind profile prediction and greater uncertainty introduced by the choice of look-back. The Bi-LSTM model outperforms the CNN model in predicting u and v profiles, likely due to its ability to capture temporal features of wind profiles. In short-term wind prediction, the Bi-LSTM model exhibits lower variability in R² values across different look-backs, demonstrating greater robustness in wind profile prediction. Compared to the CNN model, the Bi-LSTM model achieves higher R² values and lower prediction errors. The differences in predictive performance may stem from the fact that the CNN model excels at extracting local features using convolutional kernels, whereas wind profiles, as time series data, exhibit features closely related to preceding and subsequent time steps, which potentially limits the CNN model’s performance on such time-dependent data. In contrast, the Bi-LSTM network, with bidirectional LSTM layers, considers features of wind profiles from multiple time steps in both directions, enabling it to better capture dependencies in time series data and make more accurate wind profile predictions. Future work involves incorporating time-series data such as boundary layer height, temperature, humidity, and pressure as input features to further explore the Bi-LSTM model’s wind profile prediction performance (Fig. 8). Additionally, we find it necessary to increase the number of training time steps to achieve better wind profile prediction results.

Conclusions: In the present study, we propose a method for wind profile prediction using a Bi-LSTM model applied to wind field detection with lidar. The aim is to fully utilize the spatiotemporal two-dimensional wind field data observed by the lidar.
By training a temporal Bi-LSTM model to extract the temporal variations of wind profiles, we predict future wind profiles and interpolate missing wind profiles. We conduct a comparison between the temporal Bi-LSTM model and the non-temporal CNN model in wind profile prediction. Our study reveals that the temporal Bi-LSTM model exhibits higher robustness in short-term wind field prediction compared to the non-temporal CNN model.
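
The look-back framing and the three evaluation metrics can be made concrete with a small sketch. This is not the authors' code: the function names are hypothetical, the model itself is omitted, and the sketch only shows how a time-ordered series of wind profiles would be cut into (input window, next profile) pairs and how R², RMSE, and MAE are computed from paired values.

```python
import math


def make_windows(profiles, look_back):
    """Split time-ordered wind profiles into supervised samples.

    profiles is a list of profiles (each a list of values over height);
    every sample pairs the previous look_back profiles with the next one.
    """
    inputs, targets = [], []
    for t in range(look_back, len(profiles)):
        inputs.append(profiles[t - look_back:t])
        targets.append(profiles[t])
    return inputs, targets


def r2_rmse_mae(observed, predicted):
    """Coefficient of determination, RMSE, and MAE for paired values."""
    n = len(observed)
    mean_obs = sum(observed) / n
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    r2 = 1.0 - ss_res / ss_tot
    rmse = math.sqrt(ss_res / n)
    mae = sum(abs(o - p) for o, p in zip(observed, predicted)) / n
    return r2, rmse, mae
```

With this framing, multi-step prediction feeds each predicted profile back into the window, which is why errors accumulate with the number of predicted steps.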

Objective: Nitrogen dioxide (NO2) not only directly affects air quality and participates in secondary chemical reactions but also influences human health. It primarily originates from industrial and vehicular emissions, as well as regional transport at higher altitudes. Therefore, surface in situ measurements alone cannot fully capture the high-altitude transport, vertical evolution, and atmospheric chemical processes of NO2. In this study, we aim to investigate the vertical distribution characteristics and temporal variations of NO2 in Beijing, given its status as a primary atmospheric pollutant originating from industrial activities and vehicular emissions. With Beijing’s dense population and high vehicle density, vehicular emissions constitute a major source of NO2. Despite improvements in air quality due to environmental policies, regional transport, especially along the southwest–northeast corridor, remains a significant contributor to NO2 levels in Beijing. Traditional monitoring methods have limitations in capturing NO2 transport dynamics, necessitating advanced techniques such as multi-axis differential optical absorption spectroscopy (MAX-DOAS). This research, based on two years of MAX-DOAS observations, is designed to understand NO2’s vertical distribution and its response to policy interventions and holiday effects. By providing detailed insights into NO2 behavior under various conditions, we support effective air quality management and policy formulation in Beijing.

Methods: Our study examines the spatiotemporal distribution of NO2 in Beijing using a MAX-DOAS observation station located at the Chinese Academy of Meteorological Sciences from June 1, 2020, to May 31, 2022. Positioned at an elevation of 130 m, the instrument is situated 40 m above ground level with a viewing azimuth of 130°. The station lies within 5 km of major NO2 emission sources from busy traffic areas, while no industrial emission sources are present nearby.
The MAX-DOAS instrument comprises modules for collecting scattered sunlight and for signal processing. Scattered sunlight is directed by a right-angle prism onto a single lens fiber, which transmits the light to ultraviolet and visible spectrometers covering wavelengths of 296–408 nm and 420–565 nm, respectively. Observations are automatically taken when the solar zenith angle (SZA) is below 92° across elevation angles ranging from 1° to 90°, with exposure times dynamically adjusted to maintain optimal signal quality. The data are processed with QDOAS software, where least-squares inversion in the 338–370 nm wavelength range yields tropospheric slant column densities (SCDs) of NO2. This process includes specific parameter settings and aerosol prior profiles, alongside VLIDORT radiative transfer modeling for accurate vertical profile retrievals. Rigorous quality control criteria are applied to ensure a comprehensive analysis of NO2’s spatiotemporal variations in Beijing, providing critical data support for enhancing air quality management strategies and informing policy development.

Results and Discussions: The bottom-layer NO2 volume fraction extracted from NO2 vertical profiles is well correlated with the NO2 mass concentration measured at a CNEMC station, Guanyuan (R=0.7723, Fig. 2). The study shows that the highest NO2 volume fraction near the ground in Beijing occurs in January (17.40×10⁻⁹) and the lowest in April (5.51×10⁻⁹, Fig. 3). Seasonally, the NO2 volume fraction in Beijing follows the order winter (15.71×10⁻⁹)>autumn (15.39×10⁻⁹)>spring (8.52×10⁻⁹)>summer (8.06×10⁻⁹) (Fig. 4). NO2 profiles show an exponential shape in all seasons. The averaged diurnal variation of NO2 in spring and summer exhibits a single-peak pattern with the peak appearing before 10:00, whereas in autumn and winter it shows a double-peak pattern with peaks appearing before 10:00 and after 15:00 (Fig. 5).
Moreover, there is no obvious weekend effect for NO2 in Beijing from the perspective of concentration variations. However, an obvious weekend effect appears in the NO2 diurnal variations: the delayed NO2 peak on Saturday morning (16.16×10⁻⁹) is larger than that on weekdays and Sunday, and the afternoon peak on Sunday is larger than that on weekdays and Saturday. This may be related to intercity travel out of the city on Saturday and the return on Sunday (Fig. 7). In addition, we reveal that the reduction of NO2 in Beijing during major events is significantly greater than that during holidays. The average volume fraction of surface NO2 during major events and holidays decreases by 29.0% and 18.5%, respectively, compared with the whole observation period (Fig. 8).

Conclusions: During the period from June 1, 2020, to May 31, 2022, we conduct continuous MAX-DOAS NO2 remote sensing observations in Beijing. Using a least-squares algorithm, we derive NO2 slant column densities (SCDs) at various elevation angles, enabling us to construct NO2 vertical profiles for the entire observation period using an optimal estimation method. The correlation coefficient between the derived near-surface NO2 volume fractions and mass concentrations from CNEMC stations reaches 0.7723, which indicates strong agreement. Key findings include monthly variations in near-surface NO2 volume fractions, with peak levels observed in January (17.40×10⁻⁹) and lowest levels in April (5.51×10⁻⁹). NO2 vertical distribution exhibits a seasonal pattern, with higher volume fractions observed in winter and autumn compared to spring and summer. Daily variations in NO2 volume fraction show distinct patterns depending on the season: single peaks before 10:00 in spring and summer, and double peaks before 10:00 and after 15:00 in autumn and winter.
Notably, NO2 volume fractions decline more rapidly after 10:00 in spring and summer due to increased boundary layer heights and solar radiation intensity. Weekend effects are also observed, with NO2 volume fractions decreasing by 1.5% on Saturdays and 7.0% on Sundays compared to weekdays. Weekday, Saturday, and Sunday variations show double-peak patterns, with peaks occurring before 08:00 and after 16:00. Saturdays exhibit the highest peak volume fraction and a delayed peak compared to weekdays and Sundays. Analysis during major events and holidays reveals NO2 volume fractions that are 29.0% and 18.5% lower, respectively, than over the whole observation period. Both periods show double-peak patterns, with peaks before 09:00 and after 16:00, although volume fractions are lower during major events than during holidays. These findings emphasize the importance of long-term MAX-DOAS NO2 vertical observations in understanding the influence of NO2 on the atmospheric environment and supporting NO2 prevention and control efforts in Beijing.
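
Because the comparison with CNEMC data pairs a volume fraction (10⁻⁹, i.e. ppb) with a mass concentration, a short sketch of the unit conversion and of the correlation coefficient may be helpful. It is an illustration under stated assumptions, not the processing used in the study: the ideal gas law is applied at an assumed 298.15 K and 101325 Pa, and the function names are hypothetical.

```python
import math

R_GAS = 8.314462618  # molar gas constant, J mol^-1 K^-1
M_NO2 = 46.0055      # molar mass of NO2, g mol^-1


def no2_ppb_to_ugm3(volume_fraction_ppb, temperature_k=298.15,
                    pressure_pa=101325.0):
    """Convert an NO2 volume fraction (1e-9) to mass concentration (ug/m^3).

    Uses the ideal gas law; the default temperature and pressure are
    assumptions and should be replaced by ambient values when available.
    """
    molar_volume = R_GAS * temperature_k / pressure_pa  # m^3 per mol of air
    return volume_fraction_ppb * M_NO2 / molar_volume * 1e-3


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)
```

Under these default conditions one part per billion of NO2 corresponds to roughly 1.88 μg/m³; the factor grows in cold, high-pressure winter air.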

Objective: Laser-induced breakdown spectroscopy (LIBS) has advantages such as no sample pretreatment, simultaneous multi-element detection, and rapid analysis. It is currently the only technique capable of direct in-situ detection of solid metal elements underwater. Although LIBS has been successfully applied underwater, it encounters challenges such as weak characteristic radiation, severe spectral line broadening, and short signal lifetime due to the properties of water. Therefore, it is necessary to develop enhancement methods tailored for in-situ underwater LIBS detection. Previous studies have confirmed in the laboratory that solid substrate-assisted LIBS can effectively enhance spectral intensity. Based on this, we verify the feasibility of this enhancement method for underwater in-situ applications using a self-developed deep-sea LIBS system, tested in both shallow- and deep-sea environments.

Methods: Using the LIBSea Ⅱ system developed by Ocean University of China (OUC), we incorporate a solid substrate-assisted enhancement module. The system structure is shown in Fig. 1. The module consists of an underwater stepper motor and a solid substrate target. The solid substrate target is placed on a substrate carrier device designed as a quarter-circle for ease of operation by robotic systems. Six solid targets are positioned equidistantly on the carrier device and secured with adhesive. In practice, the underwater stepper motor drives the substrate carrier in a reciprocating motion, rotating 90° each time, with the laser sequentially acting on the diagonal of the six square substrates. We test the system in a laboratory pool, in the shallow waters off Jiaozhou Bay, Qingdao, and in the South China Sea at a depth of 1503 m to validate the method.

Results and Discussions: In the laboratory validation, which compares the enhancement effects of silicon, zinc, copper, and nickel substrates, silicon demonstrates the best performance and is thus used as the substrate material in subsequent tests.
Six identical silicon substrates are fixed in the substrate carrier, and rotation is controlled by the underwater motor. The LIBS system operates continuously for 240 min. Fig. 3 shows the seawater LIBS spectra assisted by the silicon substrate over time. The spectral intensities of Ca I (422.7 nm), Na I (588.9 nm, 589.6 nm), and K I (766.5 nm, 769.9 nm) are illustrated in Fig. 4. The intensities of Ca, Na, and K decrease as working time increases. The spectral intensities remain relatively stable during the first 90 min of continuous operation but decrease significantly after 90 min, and the substrate no longer exhibits enhancement effects after 170 min of continuous use. In shallow-sea tests (Fig. 7), the spectral signals of Ca are enhanced, and the atomic spectral lines of Na and K are enhanced by 6 times or more, with Na (588.9 nm) enhanced by 6.6 times, Na (589.6 nm) by 6.2 times, K (766.5 nm) by 6.0 times, and K (769.9 nm) by 6.4 times. In deep-sea tests (Fig. 10), the spectral intensity is significantly enhanced with substrate assistance, showing a 5-fold enhancement for Na and K elements.

Conclusions: We verify the feasibility of solid substrate-assisted enhancement for underwater in-situ LIBS detection. A solid substrate enhancement module, consisting of an underwater stepper motor and a solid substrate target, is developed. The service life of the substrate is extended by motor rotation. After comparing different substrates in the laboratory, silicon is selected for its superior enhancement effect, which is most effective within 90 min of continuous operation. Beyond 90 min, enhancement sharply decreases due to surface damage. Shallow- and deep-sea trials confirm the feasibility of substrate-assisted in-situ detection, with 6-fold or greater enhancement achieved in shallow seas using silicon substrates.
At a depth of 1503 m in the deep sea, a 5-fold enhancement is obtained using an iron substrate, which outperforms the long-pulse enhancement method reported to date.

ObjectiveNitrogen dioxide (NO2), the main component of nitrogen oxides (NOx), is an important air pollutant that can adversely affect human health and the environment. Satellite remote sensing monitoring offers near-real-time, continuous, and large-scale monitoring of atmospheric NO2. The geostationary environmental monitoring spectrometer (GEMS) aboard GK2B, launched in February 2020, is the world’s first satellite payload capable of monitoring atmospheric trace gases on an hourly scale. It provides tropospheric NO2 column densities in Asia and the Pacific during the daytime. In this study, we validate GEMS tropospheric NO2 column density products using observations from the TROPOspheric Monitoring Instrument (TROPOMI) and ground-based multi-axis differential optical absorption spectroscopy (MAX-DOAS) to obtain more comprehensive results. These steps are essential prerequisites for applying quantitative remote sensing products. Furthermore, since satellite data coverage can be influenced by various factors, including cloud cover, which can drastically reduce the spatial coverage of the GEMS dataset after quality control, applying the data interpolating empirical orthogonal functions (DINEOF) method to the quality-controlled GEMS dataset significantly improves spatial coverage. This enables a more comprehensive assessment of tropospheric NO2 concentrations across the study area.MethodsThe datasets we used are satellite-based data from GEMS and TROPOMI and ground-based data from MAX-DOAS (Xuzhou). In the data preprocessing phase, the satellite data are first screened by parameters such as cloud fraction to ensure the state and quality of the inversion results. Then, the bilinear interpolation method is applied to resample both GEMS and TROPOMI observation data into a 0.05°×0.05° grid. In the comparison with TROPOMI, the TROPOMI data are first averaged on a daily basis. 
Subsequently, the data from GEMS at 12:45 and 13:45 (Beijing time) are selected for averaging, and the integrated dataset is used for correlation analysis. For the comparison with MAX-DOAS, the data corresponding to the grid in GEMS are initially filtered based on station coordinates. Then, hourly averages of MAX-DOAS data are calculated based on the actual transit time of GEMS, with the analysis limited to the first and second half hours. We analyze metrics such as data volume (N), correlation coefficient (R), mean absolute error (MAE), root mean square error (RMSE), and normalized mean bias (NMB) for validation. In the reconstruction of the quality-controlled dataset, the DINEOF algorithm initializes all missing data to an identical predicted value at the beginning of the reconstruction. Subsequently, the dataset undergoes iterative cross-validation using the EOF method to achieve optimal reconstruction results.Results and DiscussionsGEMS tropospheric NO2 data products are compared and validated before and after quality control using TROPOMI and MAX-DOAS (Xuzhou) (Fig. 1). After quality control, the R-values are 0.88 and 0.85 respectively (P<0.05), indicating a high correlation between GEMS and both datasets. Numerically, GEMS data show similarities with MAX-DOAS and significantly higher values than TROPOMI. The number of products changes in a phased pattern, consistent with the designed observation schedule (Fig. 2). From the perspective of mean values, tropospheric NO2 column densities in East China generally exhibit an increasing trend from morning to noon followed by a decrease (Fig. 3). On a daily basis, normalized NO2 mass concentrations observed by ground stations in Shanghai display a pattern similar to satellite monitoring data, albeit with a relative lag [Fig. 4(b)]. The overall high NO2 column densities derived from GEMS inversion are also prominently visible [Fig. 4(c)]. 
Cloud fraction is the most influential factor affecting GEMS data volume during quality control. The data product coverage stabilizes at a high level when transitioning to full central (FC) and full west (FW) modes. Spatially, observation coverage in the southern to central parts of East China is generally lower compared to that in the northern regions. The distribution of cloud fraction generally follows a pattern of high in the south and low in the north (Fig. 5). Data reconstruction markedly increases the coverage of GEMS tropospheric NO2 products [Fig. 6(a)]. The validation of the reconstructed dataset using satellite-based and ground-based observations yields R-values of 0.85 and 0.64 respectively [Figs. 6(b) and (c)]. Therefore, the reconstructed dataset maintains high reliability.Conclusions1) GEMS provides 6–10 observations per day, which enables the study of hourly distribution of tropospheric NO2 concentrations. 2) GEMS products demonstrate good agreement with both TROPOMI and MAX-DOAS observations during validation. After quality control, the R-values can reach 0.88 and 0.85 respectively. 3) Numerically, GEMS shows noticeably higher values than TROPOMI and similar values to MAX-DOAS. The inversion results from GEMS generally indicate higher overall concentrations. 4) Due to influences such as cloud fraction, there has been a notable reduction in the volume of GEMS tropospheric NO2 data after quality control. Spatial-temporal reconstruction using the DINEOF method effectively improves the spatial coverage of GEMS data. The reconstructed dataset maintains a high level of reliability.
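
The validation metrics named in the Methods (N, R, MAE, RMSE, NMB) can be sketched as follows. This is a minimal illustration rather than the authors' processing code: the function name `validation_metrics` is hypothetical, the inputs are assumed to be already collocated satellite and reference values, and NMB is taken in its common form, the sum of (satellite minus reference) divided by the sum of reference values, which the abstract does not spell out.

```python
import numpy as np

def validation_metrics(sat, ref):
    """Compare collocated satellite values against reference values.

    Pairs containing NaN or inf (e.g. pixels removed by quality control)
    are dropped before any metric is computed.
    """
    sat = np.asarray(sat, dtype=float)
    ref = np.asarray(ref, dtype=float)
    ok = np.isfinite(sat) & np.isfinite(ref)
    sat, ref = sat[ok], ref[ok]
    diff = sat - ref
    return {
        "N": int(sat.size),                          # number of valid pairs
        "R": float(np.corrcoef(sat, ref)[0, 1]),     # Pearson correlation
        "MAE": float(np.mean(np.abs(diff))),         # mean absolute error
        "RMSE": float(np.sqrt(np.mean(diff ** 2))),  # root mean square error
        "NMB": float(np.sum(diff) / np.sum(ref)),    # normalized mean bias (assumed definition)
    }
```

A positive NMB in this convention would correspond to the satellite product reading higher than the reference, as reported for GEMS relative to TROPOMI.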

ObjectiveClouds and aerosols play a crucial role in the Earth’s atmospheric system, significantly impacting the Earth’s radiation balance, water cycle, and air quality. Space-borne lidar serves as a unique tool for the vertical simultaneous detection of aerosols and clouds, providing the advantage of all-weather operation. The cloud-aerosol lidar and infrared pathfinder satellite observations (CALIPSO) satellite represents the most notable example of this technology. However, due to its low signal-to-noise ratio, traditional lidar layer detection algorithms based on slope and threshold often miss optically thin layers of clouds and aerosols. Therefore, we propose a U-Net neural network classification model based on a two-dimensional hypothesis testing layer detection algorithm (2DMHT-UNet) to achieve high-precision detection and classification of these missed layers.MethodsWe initially employ a two-dimensional hypothesis testing (2D-MHT) algorithm for high-precision layer detection of CALIPSO observations. Subsequently, we construct a cloud and aerosol classification model based on the U-Net neural network, using RGB inputs of optical signals such as depolarization ratio, color ratio, and backscatter coefficient. This model aims to categorize atmospheric layers detected by the 2D-MHT but missed by official CALIPSO products. To ensure spatial consistency with CALIPSO products, we use long-term CALIPSO official classification products (VFM) as the training set, validating model performance with independent samples. 
Furthermore, we compare the combined classification results of 2DMHT-UNet (including both successfully detected and missed layers by CALIPSO) with Radar-Lidar joint observation products for validation.Results and DiscussionsThe model, trained using CALIPSO VFM official products as ground truth and validated for accuracy based on independent samples from one month, indicates a classification consistency of 89.4% (land) and 90.2% (sea), with accuracy above 88% for both day and night (Fig. 2, Fig. 3 and Table 2). Comparative results based on Radar-Lidar joint observations demonstrate that the model effectively identifies cloud information missed by CALIPSO VFM official products due to low signal-to-noise ratio, reducing the relative error in cloud base detection by 21% (land) and 25% (sea) (Fig. 6).ConclusionsThe results demonstrate the excellent performance of 2DMHT-UNet in classifying atmospheric layers undetected by the CALIPSO official product. The 2DMHT-UNet algorithm significantly improves CALIPSO’s ability to detect boundary layer clouds, especially over land. However, due to the similarity in properties between marine aerosols and thin water clouds, accurately distinguishing between them remains challenging and may lead to misclassifications. Future efforts involve further optimizing the model to enhance classification accuracy and adding more validation experiments for aerosols based on airborne observations.

ObjectiveLidar remote sensing technology possesses significant advantages in detecting atmospheric parameters (such as clouds and aerosols, temperature, and wind speed) with high precision and high timeliness. However, atmospheric turbulence can affect the transmission characteristics of laser in the atmosphere, causing a series of turbulence effects such as light intensity fluctuations, phase fluctuations, beam wander, and beam spread. Based on the backscattering enhancement effect of laser transmission in turbulent atmosphere, a double-telescope hard-target reflection lidar system for detecting atmospheric turbulence intensity is proposed. The system realizes the measurement of backscattering enhancement coefficient by receiving the diffuse reflection echo signals of hard-target with the double telescope. The biggest advantage of this system is the utilization of small-aperture double-telescope receiving channels, greatly simplifying the complexity of the system and reducing equipment costs.MethodsThe application of double-telescope lidar technology based on backscattering enhancement effect in atmospheric turbulence detection is studied. First, a double-telescope hard-target reflection lidar for detecting atmospheric turbulence intensity is proposed and designed based on the backscattering enhancement effect. The system consists of one transmission channel and two receiving channels, where one receiving channel aligns with the transmitting channel and the other is offset by 15 cm. Then, the backscattering enhancement coefficient of hard-target reflected signal at the receiving telescope is established based on the generalized Huygens-Fresnel principle. Finally, the experimental system is constructed, and the preliminary experiments are conducted in calm weather with uniform turbulence intensity. 
The effects of turbulence intensity, laser transmission distance, temperature, and wind speed on the backscattering enhancement coefficient are studied.Results and DiscussionsThe detection principle of the proposed backscattering enhancement effect lidar is presented. It breaks through the limitation of traditional lidar using a large-aperture receiving telescope, which leads to complex system structures, particularly in terms of the optical path. Meanwhile, this system features simple structure, mobility, and low cost (Fig. 1). Table 1 presents the main parameters of the lidar system. By simulating various turbulence intensity changes through the distance between the beam and the heater, the relationship between backscattering enhancement coefficient and turbulence intensity is analyzed (Fig. 3). Under the same observation conditions, the relationship between different laser integration paths and the backscattering enhancement coefficient is studied (Fig. 4). The correlations of nighttime temperature and wind speed changes with the backscattering enhancement coefficient are observed and analyzed. The results show that, because the gradual decline of nighttime ground temperature is consistent with the conventional trend of turbulence intensity, the two show a strong correlation [Fig. 5(b)]. However, the random variation of wind speed differs from the declining trend of conventional turbulence intensity, and the correlation is poor [Fig. 6(b)].ConclusionsWe propose a double-telescope hard-target reflection lidar based on the backscattering enhancement effect to study the transmission characteristics of laser beams in turbulent atmosphere. The biggest advantage of this system is that a small-aperture double-telescope receiving channel can be employed to simplify the structure and reduce costs. The backscattering enhancement coefficient of the echo signal is analyzed theoretically from the reflected signals received by the double telescope. 
Additionally, the preliminary experiments are conducted in calm weather with uniform turbulence intensity. The results show that the backscattering enhancement coefficient increases monotonically with the increase of simulated turbulence intensity, exhibiting a saturation trend. Under the same observation conditions, the backscattering enhancement coefficient also shows a saturation trend as the integral path increases. The nighttime temperature shows a good correlation with backscattering enhancement coefficient, and its Pearson correlation coefficient R is 0.95. However, the correlation between wind speed and backscattering enhancement coefficient is relatively poor, and its Pearson correlation coefficient R is 0.67. The double-telescope hard-target reflection lidar proposed in this paper possesses significant research and practical value for the detection of atmospheric turbulence.

ObjectiveWe aim to enhance the accuracy of assessing turbulence effects on laser atmospheric transmission in plateau and desert regions. Deserts and plateaus serve as important application scenarios for laser atmospheric transmission. Due to their unique geographical and climatic conditions, these regions typically exhibit high transmittance, high wind speeds, and relatively low absorption coefficients. Consequently, the influence of atmospheric optical turbulence becomes the dominant factor, while atmospheric attenuation and thermal halo effects are generally minor. In-depth research on the height distribution of atmospheric optical turbulence in plateau and desert regions is crucial for improving the application of laser atmospheric transmission.MethodsTo capture the vertical distribution of turbulence intensity near the ground, we conduct simultaneous measurements of optical turbulence at two ground elevations (2 and 5 m) in plateau and desert regions, supplemented by aerial surveys using unmanned aerial vehicles equipped with micro-temperature sensors. We first estimate the refractive index structure constant at a height of 5 m using the measured data at 2 m and local sunrise and sunset times, comparing the results with measured values. Furthermore, we extend the prediction of the refractive index structure constant to a range of 150 m near the ground using the HAP model, comparing these estimated values with profile data from unmanned aerial vehicles. We then re-fit the key parameter—exponential p—in the HAP model to optimize its predictive performance. Finally, we conduct a comprehensive evaluation of the newly fitted HAP model to ensure it provides more accurate and reliable turbulence intensity predictions in practical applications.Results and Discussions1) The refractive index structure constants at both altitudes in plateau and desert regions exhibit significant “Mexican hat” diurnal variation characteristics (Fig. 2). 
The structure constant is generally larger in plateau regions compared to desert regions, with slightly longer periods of strong turbulence. During the daytime, the variation trend of refractive index structure constants is consistent across different heights in both regions; however, this correlation weakens at night. 2) In our study, the traditional HAP model estimates the refractive index structure constant at a height of 5 m, which aligns well with measured values (Fig. 5). The estimated values agree closely with measured values in magnitude and trend, though the HAP model tends to underestimate values from 3 h after sunrise to 4 h before sunset, coinciding with peak turbulence. When applied to air profile estimation, the HAP model shows poorer agreement (Fig. 6), indicating a need for further optimization. 3) The newly fitted HAP model estimates the refractive index structure constant at 5 m and shows improved agreement with measured values (Fig. 7). The results indicate that the estimated values from the new model closely align with the measured values in both magnitude and trend, demonstrating a significant improvement compared to the traditional model. Notably, the newly fitted HAP model enhances the agreement between estimated results and measured values when estimating the air profile (Fig. 8). 4) A comparison of subsequent data analysis using the traditional and newly fitted HAP models (Fig. 
9) clearly shows that the estimation results from the newly fitted HAP model are more consistent with the measured values, significantly enhancing the accuracy of the model’s estimations. This improvement not only enhances the predictive capability of the model but also provides greater accuracy for evaluating the turbulence effects on laser atmospheric transmission in plateau and desert regions.Conclusions1) The correlation coefficients between the estimated and measured values of the traditional HAP model are 0.934 and 0.943, respectively, with root-mean-square errors of 0.165 and 0.150. However, the model’s consistency in air profile estimation declines significantly. 2) For the newly fitted HAP model at 5 m altitude in plateau and desert areas, correlation coefficients increase to 0.965 and 0.978, respectively, and root-mean-square errors decrease to 0.086 and 0.101. The air profile estimated by the new HAP model aligns well with measured values. The new HAP model provides a more accurate method for estimating the atmospheric optical turbulence profile distribution in the boundary layer over the plateau and desert regions, thus offering new possibilities for improving the evaluation accuracy of laser atmospheric transmission in these environments.

ObjectiveWith the rapid advancement of remote sensing technology, the spatial and spectral resolution of optical imaging systems has improved significantly, with ground resolution advancing from tens of kilometers in the early stages of development to today’s sub-meter levels. While low-resolution and low-detection precision space optical imaging systems are relatively insensitive to environmental disturbances, the increase in space imaging resolution has revealed that optical imaging systems are highly sensitive to their operational environment. Uncontrolled disturbances can lead to a significant decline in imaging quality, with micro-vibration being a key contributing factor. In this paper, we investigate the degradation of image quality caused by micro-vibrations in space optical systems. Micro-vibrations are minute vibrations generated by moving parts of a satellite, such as flywheels and refrigerators, during in-orbit operation. These vibrations are amplified through structural transmission, causing the overall movement of the space optical payload and the micro-movements of optical elements. The acceleration amplitude of these vibrations is about 10⁻³ g, and their frequency ranges from 10⁻² to 10³ Hz. While traditional research on the effects of micro-vibration has focused on theoretical and experimental studies of specific optical systems, there remains a need for generalized quantitative analysis methods.MethodsIn this paper, we propose a quantitative analysis method for evaluating the degradation of imaging quality caused by micro-vibrations in space optical imaging systems. A quantitative image quality degradation model is developed using the modulation transfer function (MTF) as the evaluation standard. This method is based on Fourier series expansion principles, modeling optical surface micro-vibrations as a linear combination of sinusoidal components. Each sinusoidal component is analyzed independently. 
The vibration influence boundary (VIB) and optical structure influence boundary (OSIB) are defined based on the distribution of exposure duration within the vibration cycle. The ray tracing principle is employed to derive the intersection point between light rays and quadratic surfaces, obtaining the point spread function (PSF) of the vibrating optical system. Fast Fourier transform (FFT) is then used for spectral analysis, producing MTF curves for the two influence boundaries. These curves facilitate the quantitative analysis of the disturbed optical system, providing insights into the relationships among MTF values, exposure duration, vibration periods, and spatial frequencies, and enabling the identification of sensitive frequency bands.Results and DiscussionsIn the simulations verifying the extremum properties of the two influence boundaries, exposure durations of 10.000 ms and 15.125 ms are analyzed. The results demonstrate a consistent relationship between the initial exposure offset and the influence boundaries, confirming that both VIB and OSIB exhibit extremum properties under specific conditions (Fig. 9). The quantitative analysis model is validated through simulations, involving various vibration directions, forms, and exposure duration parameters. Translational vibrations along the x-axis cause MTF reductions in both the x and y directions, with greater influence observed on the x-axis itself (Fig. 10). For optically symmetric systems, rotational vibration has a more pronounced effect in directions orthogonal to the axis of rotation (Fig. 11). The analysis also reveals that the relationship between the influence boundary MTF values and exposure duration is non-linear. Sensitive frequency band analysis is conducted using the proposed model and reveals the relationship among MTF values, vibration frequency, and spatial frequency under conditions of 15 ms exposure duration, 70–100 Hz vibration frequency, and 0–100 lp/mm spatial frequency (Fig. 12). 
Using the DFS-SQP optimization algorithm, the sensitive frequency center is determined to be approximately 74.8 Hz. Analysis of sensitive exposure duration shows the relationship among MTF values, exposure duration, and spatial frequency under conditions of 80 Hz vibration frequency, 3.125–34.375 ms exposure duration, and 0–100 lp/mm spatial frequency (Fig. 13).ConclusionsAnalysis and simulation confirm that VIB and OSIB exhibit extremum properties. Translational vibrations along a given axis have a greater influence on the MTF in that direction compared to rotational vibration. The sensitive vibration frequency for relational movements of the main optical surface in the x-direction is identified at approximately 74.8 Hz. The minimum MTF value of 0.2310 is observed at the OSIB in the y-direction. Structural designs should avoid matching the natural frequency of the main optical surface with the central sensitive frequency. The MTF values at Nyquist frequencies of the two boundaries are influenced by exposure duration. Selecting exposure durations that avoid coinciding with the minimum points of the MTF curve is critical.
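
How exposure duration, vibration frequency, and initial phase jointly shape the MTF can be illustrated with a much simpler model than the one in the paper. The sketch below assumes a pure sinusoidal image-plane displacement and computes the motion-blur MTF as the magnitude of the time-averaged Fourier kernel over the exposure window, which is equivalent to Fourier-transforming the displacement histogram (the blur PSF). It does not perform ray tracing through quadratic surfaces and does not reproduce the VIB or OSIB definitions; the function name `vibration_mtf` and all parameter values are hypothetical.

```python
import numpy as np

def vibration_mtf(spatial_freq, amplitude, vib_freq, exposure,
                  phase=0.0, n_samples=4096):
    """Motion-blur MTF for image displacement x(t) = A*sin(2*pi*f*t + phase).

    spatial_freq : spatial frequencies (e.g. lp/mm)
    amplitude    : displacement amplitude A in the matching length unit (e.g. mm)
    vib_freq     : vibration frequency f in Hz
    exposure     : exposure duration in s
    phase        : vibration phase at the start of the exposure, in rad
    """
    nu = np.atleast_1d(np.asarray(spatial_freq, dtype=float))
    # Midpoint sampling of the exposure window [0, exposure].
    t = (np.arange(n_samples) + 0.5) * exposure / n_samples
    x = amplitude * np.sin(2.0 * np.pi * vib_freq * t + phase)
    # OTF of the blur = time average of exp(-i*2*pi*nu*x(t)); MTF = |OTF|.
    otf = np.exp(-2j * np.pi * np.outer(nu, x)).mean(axis=1)
    return np.abs(otf)

# Illustrative scan of the initial phase for a 15 ms exposure at 80 Hz,
# keeping the lowest and highest MTF at each spatial frequency.
nu = np.linspace(0.0, 100.0, 101)           # lp/mm
phases = np.linspace(0.0, 2.0 * np.pi, 64, endpoint=False)
curves = np.array([vibration_mtf(nu, 0.002, 80.0, 0.015, p) for p in phases])
mtf_low, mtf_high = curves.min(axis=0), curves.max(axis=0)
```

When the exposure spans a whole number of vibration periods, this model reduces to the familiar |J0(2πνA)| result and the phase dependence vanishes; for other exposure durations the spread between `mtf_low` and `mtf_high` shows why the starting point of the exposure within the vibration cycle matters.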

ObjectiveSpace debris in Earth’s orbit is rapidly increasing, posing significant collision risks to spacecraft and threatening their normal operations. Monitoring and predicting their orbits are essential. Space-based optical monitoring, unconstrained by geographical constraints, can enhance observation coverage and frequency of space debris. However, it provides only angular measurements, making orbit determination challenging, especially for initial orbit determination under short-arc observations. To improve convergence and computational efficiency, we construct an extended objective function model and propose an initial orbit determination algorithm using the adaptive-moment-estimation (Adam) optimization in the range-range difference solution space.MethodsWe introduce an extended objective function model that considers proximity between predicted and observed values and evaluates eccentricity when the orbit exists and the observation constraints are met (within the admissible region). Outside this region, the objective function ensures its value does not exceed boundaries and has no local minimum. This design aims to achieve two objectives: 1) Both the objective function and its derivative can be calculated at any point in the range-range difference solution space, thereby facilitating optimization methods based on the first derivative. 2) The solution will only converge to permissible region extrema. 
In addition to pre-processing angular measurements, the proposed method comprises four steps: first, set weight factors, hyper-parameters and threshold values for the cost function; second, calculate the initial value in the range-range difference space; third, perform iterative updates following the Adam optimization algorithm while evaluating the optimization objective function using the extended objective function introduced herein; finally, based on predefined convergence criteria, decide whether to continue or terminate the iterations and subsequently output the result.Results and DiscussionsSimulation experiments confirm the method’s effectiveness, adaptability to various orbit types, initial value sensitivity, computational efficiency, convergence, and accuracy. The results (Fig. 5) indicate good performance for geostationary earth orbit (GEO), medium earth orbit (MEO), and low earth orbit (LEO) debris optical measurements. Sensitivity to initial values is low (Fig. 6), but appropriate initial values reduce iterations (Table 4). The Adam optimization algorithm outperforms the stochastic-gradient-descent (SGD), Momentum, and adaptive-gradient (AdaGrad) algorithms (Fig. 7). The elapsed time (Table 5) associated with the proposed method across various arc segments spans from tens of milliseconds to a few seconds. This performance generally surpasses that of the admissible region particle swarm optimization algorithm, which also guarantees convergence. For a specific LEO optical surveillance platform observing a GEO target under consistent observation intervals (3 s), accuracy improves with longer observation arcs. The root mean square errors (Table 7) for the position at intermediate observation points measure 49.82, 34.73, and 16.37 km, respectively. 
Conversely, with a fixed observation arc length of 3 min, the root mean square errors (Table 8) for the initial orbit determination results at the mid-observation for 3, 6, and 9 s amount to 34.73, 51.65, and 66.24 km, correspondingly.ConclusionsTo enhance the convergence and computational efficiency of initial orbit determination in space-based optical surveillance, we have developed an extended loss function model and introduced an initial orbit determination algorithm that utilizes Adam optimization to find the optimal solution within the range-range difference space. The method’s adaptability to various orbit types and initial values, along with its algorithm efficiency, convergence, and accuracy, have been rigorously assessed. The results show that our approach is well-suited for the initial orbit determination of space debris in GEO, MEO, and LEO. While convergence to an acceptable solution is achievable even under stringent initial conditions, at the expense of increased iteration, we advocate for the proposed initial settings to ensure high efficiency. The initial orbit determination error of the proposed method is statistically analyzed. The root mean square error for the position at the mid-observation epoch is on the order of 10 km, and the root mean square error for the semi-major axis of the orbit is on the order of 100 km, in the context of space-based optical surveillance with an angular measurement error of 2 arc seconds.
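
The iterative update in the third step can be illustrated with a generic Adam loop. This is a sketch under stated assumptions: the extended objective function of the paper is not reproduced, so a toy two-variable quadratic stands in for it, and the names `adam_minimize` and `toy_grad`, the hyper-parameter values, and the gradient-norm stopping rule are all hypothetical choices.

```python
import numpy as np

def adam_minimize(grad, x0, lr=0.05, beta1=0.9, beta2=0.999,
                  eps=1e-8, max_iter=5000, tol=1e-8):
    """Minimize a function with Adam, given only its gradient.

    Returns the final point and the number of iterations performed.
    """
    x = np.asarray(x0, dtype=float).copy()
    m = np.zeros_like(x)   # first-moment (mean) estimate of the gradient
    v = np.zeros_like(x)   # second-moment (uncentered variance) estimate
    k = 0
    for k in range(1, max_iter + 1):
        g = grad(x)
        if np.linalg.norm(g) < tol:   # assumed convergence criterion
            break
        m = beta1 * m + (1.0 - beta1) * g
        v = beta2 * v + (1.0 - beta2) * g * g
        m_hat = m / (1.0 - beta1 ** k)   # bias correction
        v_hat = v / (1.0 - beta2 ** k)
        x = x - lr * m_hat / (np.sqrt(v_hat) + eps)
    return x, k

# Toy stand-in objective: f(x) = (x0 - 3)^2 + 10*(x1 + 1)^2, minimum at (3, -1).
def toy_grad(x):
    return np.array([2.0 * (x[0] - 3.0), 20.0 * (x[1] + 1.0)])

solution, iterations = adam_minimize(toy_grad, x0=[0.0, 0.0])
```

Because Adam only needs a gradient at each iterate, it fits the stated design goal that the objective and its derivative be computable at any point of the solution space, including outside the admissible region.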

ObjectiveIn response to the urgent demand for high-precision global greenhouse gas (GHG) emissions monitoring, essential for carbon inventories and enforcement, achieving low-cost, high-resolution detection has become a key research focus. The array Fabry-Pérot (F-P) spectrometer, with its compact structure, lack of moving parts, and ability to account for both the sampling density and range of optical path differences, presents an effective solution for achieving accurate and cost-efficient GHG detection. The parameters of the array F-P interferometer are critical to the system’s optical performance and directly affect detection accuracy. To establish optimal detection parameters, we explore the effects of variables such as F-P interval thickness, interferometric cavity reflectivity, F-P quantity, and adjacent F-P optical path difference sampling interval on system sensitivity. By analyzing the variation in integral sensitivity with changes in GHG volume fraction, we determine the optimal parameters for spectrometer design, providing a theoretical foundation for further research on array F-P spectrometers for GHG detection.MethodsUsing the upwelling radiance spectra of GHGs at varying concentrations as input, we propose a simulation model for raw interferometric data from the array F-P spectrometer. The influence of spectrometer parameters on system detection sensitivity is analyzed using this model. To maximize integral sensitivity, the analysis focuses on how varying the thickness of the F-P intervals affects integral sensitivity and determines the optimal thickness of the F-P plates. To achieve maximum normalized sensitivity for the detection system, the relationship between signal-to-noise ratio (SNR), spectral resolution, detection sensitivity, and interferometric cavity reflectivity is analyzed, confirming the optimal reflectivity value. 
In addition, the effect of the number of F-P cavities and the adjacent F-P optical path difference sampling interval on integral sensitivity is evaluated.Results and DiscussionsThis analysis quantitatively evaluates how integral sensitivity varies with F-P interval thickness, cavity reflectivity, F-P numbers, and the sampling interval of the adjacent F-P optical path difference. Specific parameters are confirmed for both carbon dioxide and methane detection systems. To thoroughly assess the influence of interferometric cavity reflectivity on SNR and detection sensitivity, the normalized sensitivity for various reflectivities is simulated (Fig. 12). For the carbon dioxide and methane systems, normalized sensitivity exceeds 0.98 at reflectivities from 0.35 to 0.49 and from 0.39 to 0.50, respectively, with optimal values observed around 0.42 and 0.47. The influence of F-P numbers on integral sensitivity is shown (Fig. 14). As the number of cavities increases, the sampling range of the optical path difference increases linearly, leading to a corresponding increase in integral sensitivity. The influence of the adjacent F-P optical path difference sampling interval on both the sampling range and integral sensitivity is simulated (Fig. 17). As the adjacent F-P optical path difference sampling interval decreases, the overall optical range decreases; however, both the sampling density of the interferometric signal and the integral sensitivity increase. When the adjacent F-P optical path difference sampling interval is reduced to λ/4 or less, further reductions have minimal effect on integral sensitivity.ConclusionsIn this paper, we introduce the fundamental principles of the array F-P spectrometer and its application in GHG detection. 
By analyzing the magnitude of the Fourier expansion term coefficients in relation to variations in the reflectivity of the interfering cavity, we confirm that the reflectivity of the F-P flat plate approximation for double-beam interferometry falls within the range of 0.3 to 0.7. A raw data simulation model for the array F-P interferometer is developed using the upwelling radiance spectra of greenhouse gases with varying concentrations as a system input. Based on this model, we conduct a simulation analysis to assess the effects of F-P spectrometer parameters on detection sensitivity, defining the guiding principle for parameter selection and determining their optimal values. The simulation results indicate that the optimal interferometric cavity reflectivities for the carbon dioxide and methane systems are 0.42 and 0.47, respectively, at which point the system’s normalized sensitivity reaches its maximum. The integral sensitivity of the detection system is positively correlated with the number of F-P cavities. When the adjacent F-P optical path difference sampling interval is set to a quarter-wavelength, the system achieves high integral sensitivity and a broad optical path difference.
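
The role of the Fourier expansion coefficients can be made concrete with the textbook transmission of an ideal lossless F-P cavity, T(δ) = (1 − R)² / (1 − 2R cos δ + R²), whose Fourier series is (1 − R)/(1 + R) · [1 + 2 Σ Rⁿ cos(nδ)]. The sketch below assumes this idealized model (no absorption, normal incidence, δ the round-trip phase); the paper's simulation model may include further effects, and the function names are hypothetical.

```python
import numpy as np

def airy_transmission(delta, reflectivity):
    """Closed-form transmission of an ideal lossless F-P cavity."""
    r = reflectivity
    return (1.0 - r) ** 2 / (1.0 - 2.0 * r * np.cos(delta) + r * r)

def fourier_transmission(delta, reflectivity, n_terms):
    """Partial Fourier sum of the same transmission.

    The n-th harmonic has relative weight reflectivity**n, so n_terms=1
    gives the two-beam (pure cosine) approximation.
    """
    r = reflectivity
    delta = np.asarray(delta, dtype=float)
    n = np.arange(1, n_terms + 1)
    harmonics = (r ** n)[:, None] * np.cos(np.outer(n, delta))
    return (1.0 - r) / (1.0 + r) * (1.0 + 2.0 * harmonics.sum(axis=0))

delta = np.linspace(0.0, 2.0 * np.pi, 721)
for r in (0.42, 0.47):
    exact = airy_transmission(delta, r)
    two_beam = fourier_transmission(delta, r, n_terms=1)
    # Largest deviation of the two-beam approximation from the exact curve.
    print(r, float(np.max(np.abs(exact - two_beam))))
```

In this idealized picture the fundamental fringe amplitude scales with R while the higher harmonics scale with R², R³, and so on, which is the trade-off between fringe contrast and cosine-like behaviour that a reflectivity analysis has to balance.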

Objective: Star sensors, the most accurate optical sensors for space attitude determination, are widely used in various applications. These sensors require high measurement accuracy and robust stellar spectral detection capabilities. However, ground calibration experiments for star sensors often suffer from mismatches between simulated and observed stellar spectra, which degrade the accuracy of optical signal calibration. To address this, we propose a design method for a structurally simple, spectrally tunable stellar spectrum simulation system. This system employs a supercontinuum laser as the illumination source and a digital micromirror device for spectral modulation. We achieve multiplexing, spectral splitting, and collimation imaging based on dual grating dispersion. Compared with traditional stellar spectral simulation systems that rely on spatial light modulation devices, our system has a simpler structure, is easier to install and adjust, and avoids common aberrations such as spectral line coma and bending, thereby reducing reliance on complex spectral simulation algorithms. Methods: We first analyze the factors affecting spectral simulation accuracy and use Gaussian distribution functions to represent the smallest spectral fitting units in spectral synthesis. We theoretically investigate the influence of varying half-peak widths and spectral peak intervals on simulation accuracy. Our findings indicate that, for an ideal smooth curve, the accuracy of the spectral simulation depends on the spectral peak interval ω rather than the half-peak width. Consequently, reducing the peak interval is crucial for achieving smooth spectral simulation. Based on this insight, we design a dual grating dispersion multiplexing adjustable stellar spectrum simulation system. To enhance energy utilization, we use a laser shaping and beam expansion system in place of the traditional slits and collimators in the splitting mechanism.
Additionally, we implement a dual grating dispersion multiplexing splitting system that uses grating 1 for splitting and grating 2 for combining and collimating the separated beams. This approach eliminates the need to determine the optimal image position, simplifying system installation and adjustment. Results and Discussions: We construct the system and conduct comparative experiments. The results demonstrate that the half-peak width of the monochromatic light output is approximately 40 nm, with a peak interval of about 4 nm. The simulation accuracy for the 2600 K color temperature spectrum is -4.9%, while the accuracies for the 7000 K and 11000 K spectra are better than -4.7% and -4.2%, respectively. The system achieves a magnitude test accuracy better than ±0.031 Mv within the range of 0 to +5 Mv, with a simulation accuracy of +0.221 Mv at +6 Mv. The larger magnitude simulation error at +6 Mv is attributed to the limited adjustment capability of the digital micromirror device (DMD), so the star color temperature curve must be taken into account during magnitude adjustments. In contrast, the traditional Czerny-Turner-based stellar spectral simulation system shows a simulation accuracy of -6.2% for the 2600 K spectrum, better than -5.9% for 7000 K, and better than +6.1% for 11000 K. Analysis of the simulation curves reveals that the output curve of our dual grating dispersion multiplexing system is smoother. Conclusions: We analyze the factors influencing the accuracy of stellar spectral simulation and establish the conditions required for simulating stellar spectral information accurately. We propose a design method for a dual grating dispersion multiplexing, spectrally adjustable stellar spectrum simulation system that effectively simulates stellar spectra and magnitudes, fulfilling the requirements of ground optical signal calibration experiments for star sensors.
Through comparative experiments, we demonstrate that our system offers high spectral and magnitude simulation accuracy, while its simple structure facilitates installation and adjustment, reducing dependence on complex spectral simulation algorithms.
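The role of the peak interval in Gaussian spectral synthesis can be explored with a small numerical sketch. It rests on several assumptions that do not come from the paper: the target is a normalized Planck curve, the band is taken as 400 to 900 nm, the channel weights are found by unconstrained least squares (a physical DMD can only attenuate, so a non-negative solver such as `scipy.optimize.nnls` would be closer to hardware), and all function names are hypothetical.

```python
import numpy as np

H = 6.62607015e-34   # Planck constant, J*s
C = 2.99792458e8     # speed of light, m/s
KB = 1.380649e-23    # Boltzmann constant, J/K


def planck_normalized(wavelength_nm, temperature_k):
    """Blackbody spectral radiance shape, scaled so its maximum in the band is 1."""
    lam = wavelength_nm * 1e-9
    radiance = 1.0 / (lam ** 5 * np.expm1(H * C / (lam * KB * temperature_k)))
    return radiance / radiance.max()


def gaussian_basis(wavelength_nm, centers_nm, fwhm_nm):
    """One column per channel: a unit-height Gaussian with the given half-peak width."""
    sigma = fwhm_nm / (2.0 * np.sqrt(2.0 * np.log(2.0)))
    return np.exp(-0.5 * ((wavelength_nm[:, None] - centers_nm[None, :]) / sigma) ** 2)


def synthesis_error(temperature_k, peak_interval_nm, fwhm_nm, band_nm=(400.0, 900.0)):
    """Maximum absolute deviation between the target and its least-squares synthesis."""
    wavelength = np.arange(band_nm[0], band_nm[1] + 0.5, 1.0)
    centers = np.arange(band_nm[0], band_nm[1] + 0.5 * peak_interval_nm, peak_interval_nm)
    target = planck_normalized(wavelength, temperature_k)
    basis = gaussian_basis(wavelength, centers, fwhm_nm)
    # Unconstrained fit: weights may be negative, which a real DMD cannot produce.
    weights, *_ = np.linalg.lstsq(basis, target, rcond=None)
    return float(np.max(np.abs(basis @ weights - target)))


if __name__ == "__main__":
    for interval in (4.0, 20.0, 80.0):
        err = synthesis_error(2600.0, interval, 40.0)
        print(f"peak interval {interval:5.1f} nm -> max deviation {err:.4f}")
```

With the half-peak width held at 40 nm, widening the peak interval until neighbouring channels barely overlap leaves gaps that no choice of weights can fill, which is the behaviour the Methods section describes qualitatively.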

Objective: The ocean, covering 71% of the Earth’s surface, serves as the cradle of life and a repository of resources. The development and utilization of ocean resources have significantly altered the ocean environment, which adversely affects sustainable development. Ocean color, determined by the optical properties of seawater and suspended matter, reflects changes in seawater quality and the ocean environment. The imaging spectrometer, which integrates optical imaging and spectroscopic technology, is a primary tool for ocean color monitoring. It can not only monitor the coastal environment and the distribution of elements in the ocean but also distinguish their composition effectively. With growing ocean color applications and the urgent requirements of ocean studies, an imaging spectrometer optical system with a wide swath and high resolution has important research significance and application value. Methods: To address the ocean color monitoring requirements, we propose and design an optical system of an imaging spectrometer with a wide swath and high resolution. The system operates within a waveband of 0.4–0.9 μm and features a swath width of 140 km, a ground sampling distance of 20 m, and a spectral resolution of 5 nm. The imaging spectrometer is composed of the fore-optics and a spectral imaging module. The fore-optics is an off-axis three-mirror anastigmat (TMA) system. The spectral imaging module is designed with a unique Offner-like configuration instead of the common Offner configuration. This configuration has similar optical components but a non-unit magnification. First, the parameter requirements of the ocean color imaging spectrometer are analyzed and the optical specifications are given. Then, the method for calculating the initial structural parameters of the Offner-like configuration is introduced. Using these calculated parameters, we design and evaluate the optical systems of the fore-optics and the spectral imaging module.
Finally, we compare the optical design results with those of the conventional Offner configuration, detailing imaging performance characteristics, system sizes, and surface sags to demonstrate the advantages of the proposed system. Results and Discussions: In our study, the magnification of the Offner-like configuration is set to 0.6. At this magnification, the focal length of the fore-optics is 590.75 mm, with an F# of 5. The fore-optics is designed and optimized with even aspherical surfaces. After optimization, the modulation transfer function (MTF) exceeds 0.8 at the Nyquist frequency, and the maximum root mean square (RMS) radius of the spot diagram is less than 2.63 μm (Fig. 4). Given the selected magnification, the image space F# of the spectral imaging module is 3, and the total slit length is 198.33 mm. Two identical spectral imaging modules, each with a slit length of 99.66 mm, are used to achieve the required long slit length. This Offner-like configuration, which still includes a convex spherical grating and two mirrors (Fig. 5), offers three advantages over the common Offner configuration. First, the magnification is not 1 and can be used as an optimization variable in the optical design. Second, the object and image space F# of the spectral imaging module are no longer equal, so choosing a magnification of less than 1 reduces the difficulty of designing, manufacturing, and assembling the fore-optics. Third, the incident arm of the spectral imaging module is longer when the magnification is less than 1, and the system size of the imaging spectrometer can be compressed by adding a folding mirror. To enhance imaging performance and reduce the system size, Zernike freeform surfaces are used for the primary mirror and the tertiary mirror.
The MTF of the designed spectral imaging module at the Nyquist frequency is larger than 0.8 and the maximum RMS radius of the spot diagram is less than 3.13 μm (Fig. 6). The maximum smile and keystone are about 1.5 μm, which is less than 10% of the pixel size (Table 3). Conclusions: Our paper presents the design and optimization of an ocean color imaging spectrometer with a wide swath and high resolution, utilizing a unique Offner-like configuration. The dispersion element remains a convex spherical grating, while the spectral imaging module features a non-unit magnification. Compared with the common Offner configuration, the proposed optical system delivers superior imaging quality, reduced size, and enhanced system performance. Our work provides a technical foundation for the development of a wide swath, high-resolution imaging spectrometer for ocean color detection.
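The first-order relations among the quoted specifications can be checked with a short calculation. This is a sketch under stated assumptions rather than the authors' design procedure: it assumes the full swath maps onto the total slit length, uses flat-Earth small-angle nadir geometry, and treats the detector pixel size and orbit altitude as inferred quantities that the abstract does not state. The class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpectrometerSpec:
    swath_km: float = 140.0            # from the abstract
    gsd_m: float = 20.0                # ground sampling distance, from the abstract
    fore_focal_mm: float = 590.75      # fore-optics focal length, from the abstract
    fore_f_number: float = 5.0         # fore-optics F#, from the abstract
    magnification: float = 0.6         # Offner-like module magnification, from the abstract
    total_slit_mm: float = 198.33      # total slit length, from the abstract

    @property
    def cross_track_samples(self) -> float:
        """Number of ground samples across the swath."""
        return self.swath_km * 1000.0 / self.gsd_m

    @property
    def image_f_number(self) -> float:
        """Image-space F# of the spectral module: object-space F# times magnification."""
        return self.fore_f_number * self.magnification

    @property
    def slit_sample_um(self) -> float:
        """Slit length covered by one ground sample (assumes swath fills the slit)."""
        return self.total_slit_mm * 1000.0 / self.cross_track_samples

    @property
    def inferred_pixel_um(self) -> float:
        """Inferred detector pixel size: slit sample scaled by the magnification."""
        return self.slit_sample_um * self.magnification

    @property
    def inferred_altitude_km(self) -> float:
        """Inferred altitude from GSD / altitude = slit sample / focal length."""
        return self.gsd_m * (self.fore_focal_mm * 1e-3) / (self.slit_sample_um * 1e-6) / 1000.0


if __name__ == "__main__":
    spec = SpectrometerSpec()
    print("cross-track samples :", spec.cross_track_samples)
    print("image-space F#      :", spec.image_f_number)
    print("inferred pixel (um) :", round(spec.inferred_pixel_um, 2))
    print("inferred altitude km:", round(spec.inferred_altitude_km, 1))
```

Under these assumptions the numbers are mutually consistent: the image-space F# evaluates to 3 as quoted, and the inferred pixel size of roughly 17 μm agrees with the statement that a smile and keystone of about 1.5 μm are below 10% of the pixel size.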