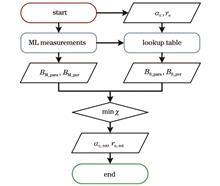

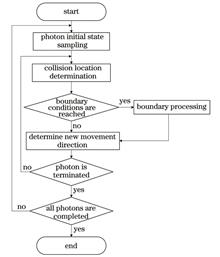

Objective: High-precision detection of the optical and microphysical properties of water clouds is essential for understanding climate change processes. Effective retrieval of the extinction coefficient and effective radius of water clouds can be achieved by utilizing the multiple scattering effect in water cloud signals detected by lidar. In this work, two water cloud retrieval methods based on polarized Mie-scattering lidar (ML) and dual-field-of-view high spectral resolution lidar (HSRL), respectively, are introduced. The performances of these methods are compared through the retrieval results from four representative water cloud cases. The results indicate that while both methods exhibit comparable retrieval accuracies for water cloud extinction coefficients, the dual-field-of-view HSRL method demonstrates superior performance in retrieving the effective radius. Enhancing the retrieval accuracy of the polarized ML method is possible by increasing the resolution of the lookup table points, though this comes at the cost of some algorithmic efficiency. Due to the weaker signal intensity at the HSRL molecular channel, the retrieval stability of the dual-field-of-view HSRL method is more sensitive to the signal noise from the molecular channel. The evaluation presented in our study provides an important reference for the future development of instruments and algorithms for observing water clouds based on lidar.

Methods: This paper presents and compares two water cloud retrieval methods based on polarized ML and dual-field-of-view HSRL, respectively. The modified gamma distribution is adopted to parameterize the droplet size distribution of water clouds, while the adiabatic model is used to characterize the vertical distribution of water cloud properties. The Monte Carlo model and analytical model simulate multiple scattering lidar signals from water clouds during the retrieval process.
Lastly, a detailed description of the polarized ML method and the dual-field-of-view HSRL method is provided, along with their respective flowcharts illustrated in Figs. 1 and 2.

Results and Discussions: A series of Monte Carlo simulations involving various water clouds is conducted to investigate the multiple scattering effect on the depolarization ratio of signals and signal variations at different fields of view (Fig. 3). Subsequently, four representative water cloud cases are defined, and their signals are simulated using the Monte Carlo model as input for the two retrieval methods (Fig. 4). The values of water cloud properties (extinction coefficient and effective radius) retrieved at a reference height by the polarized ML method are illustrated in Fig. 5. For the dual-field-of-view HSRL method, the dual-field-of-view molecular signals reconstructed from the retrieved water cloud properties are compared with the input signals (Fig. 6). The water cloud properties retrieved by the two methods are compared with the true input values in Fig. 7. The results reveal that both methods accurately retrieve the extinction coefficient, while the dual-field-of-view HSRL method shows higher retrieval accuracy for the effective radius.

Conclusions: We introduce the fundamental principles of two water cloud retrieval methods based on polarized ML and dual-field-of-view HSRL. The methods utilize signals simulated by the Monte Carlo model as the input for retrieval, and the accuracy of their retrieval results is compared. The findings demonstrate that both methods accurately retrieve the extinction coefficient of water clouds. However, the polarized ML method encounters limitations in retrieving the effective radius due to the point resolution of the lookup table, resulting in a larger retrieval error. In contrast, the dual-field-of-view HSRL method, unrestricted by this limitation, achieves higher retrieval accuracy.
Specifically, the root-mean-square error of the retrieved effective radius in the HSRL method is approximately 22% to 89% of that obtained by the polarized ML method. The lookup table-based polarized ML method is constrained by the computational speed of the Monte Carlo model, necessitating a reduction in the point number of the lookup table (100×100 in our study) to enhance algorithm efficiency. On the other hand, the dual-field-of-view HSRL method faces challenges with weaker molecular channel signals compared to the ML signals, leading to increased susceptibility to signal noise and greater fluctuations in retrieval results, especially at lower signal-to-noise ratios near cloud tops. Overall, while the dual-field-of-view HSRL method offers higher accuracy in retrieving water cloud properties without lookup table resolution constraints, the higher signal intensity of polarized ML signals ensures more stable retrievals in the presence of larger signal noise. Future research could enhance the retrieval performance of both the polarized ML and dual-field-of-view HSRL methods by improving the lookup table resolution or the signal-to-noise ratio, respectively, to advance lidar-based water cloud research.
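
The effective radius that both methods retrieve is the ratio of the third to the second moment of the droplet size distribution. The following is a minimal sketch of that definition, assuming a modified gamma distribution of the form n(r) ∝ r^mu · exp(-lam · r^gamma); the function name, the parameter names `mu`, `lam`, `gamma`, and the numerical values are illustrative assumptions and are not taken from the paper.

```python
import numpy as np

def effective_radius(mu, lam, gamma=1.0, r_max=100.0, n=20001):
    """Effective radius of n(r) ∝ r**mu * exp(-lam * r**gamma).

    The parameterization is an assumed, commonly used form; r is in
    arbitrary length units (e.g. micrometers).
    """
    r = np.linspace(0.0, r_max, n)
    n_r = r**mu * np.exp(-lam * r**gamma)

    def integrate(f):
        # Plain trapezoidal rule on the radius grid.
        return np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(r))

    # r_eff = (third moment) / (second moment) of the size distribution.
    return integrate(r**3 * n_r) / integrate(r**2 * n_r)

# For gamma = 1 the analytic result is (mu + 3) / lam.
r_eff = effective_radius(mu=6.0, lam=1.0)
```

For `gamma = 1` the numerical value can be checked against the closed form (mu + 3) / lam, which is a convenient sanity test before the distribution is used inside a forward model.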

Objective: As the demand for ocean development continues to increase, underwater target detection technology becomes increasingly crucial. Lidar offers numerous advantages over sonar, which currently dominates the ocean detection field: higher imaging resolution, better anti-interference capability, and a compact detection structure. It can be deployed on ships, aircraft, or satellites, offering high detection efficiency and promising applications in ocean exploration. However, due to water’s scattering and attenuation effects on lasers, underwater lidar echo signals are often weak and contaminated by backscattering noise. This issue is exacerbated in turbid waters, where water attenuation and backscattering combine to obscure target signals. Simply increasing laser power can intensify backscattering noise, saturating the receiving system without improving overall performance. Backscattering noise severely impacts detection accuracy and imaging quality in underwater lidar systems. Common methods to mitigate water-induced backscattering noise include range gating, polarization detection, spatial filtering, and carrier modulation. These methods leverage differences in time distribution, polarization characteristics, spatial distribution, and frequency characteristics between signal light and scattered light to suppress unwanted scattering. Currently, underwater target detection lidar faces challenges such as limited detection range and low resolution. Addressing these challenges by integrating advanced backscattering noise suppression technologies is crucial for enhancing underwater lidar performance.
Our study applies the fast independent component analysis (FastICA) algorithm to process underwater lidar echo signals, aiming to effectively remove backscattering noise and thereby improve ranging accuracy and imaging quality.

Methods: Due to water’s scattering effect, photons received by underwater lidar detection systems typically consist of three components: 1) photons directly reflected by underwater targets; 2) backscattered photons, which are scattered and returned by the water without contacting the target; 3) forward scattered photons, which are scattered by water on their return path after initially reflecting off the target. The transmission process of photons reveals that backscattered photons do not interact with the target and are independent of photons reflected by the target. Therefore, the FastICA algorithm can be utilized to separate backscattered photons from the detection signal. Photon scattering and absorption caused by water present a typical stochastic problem, hence the Monte Carlo method is employed to simulate photon transmission in non-uniform media. The FastICA algorithm is applied to the photon underwater transmission model based on the Monte Carlo algorithm, which allows the separation of underwater target echo signals from backscattering signals to be used for studying the algorithm’s effectiveness. Subsequently, a scanning experiment system is established in a laboratory setting to conduct scanning experiments on underwater targets, generating three-dimensional point cloud images. The three-dimensional point cloud images of the targets processed using the FastICA algorithm are compared with those without algorithmic processing under various turbid water conditions. This comparison aims to assess the FastICA algorithm’s impact on enhancing the accuracy of underwater lidar ranging and improving imaging quality.

Results and Discussions: The FastICA algorithm effectively separates the target echo signal from the backscattering signal (Fig. 3).
By removing most of the backscattering signals from the echo signals, the ranging accuracy of underwater targets is notably improved. During the experiment, three-dimensional scanning and imaging of underwater four-level ladder targets are conducted under varying water turbidity conditions of 4.2, 12.2, and 20.5 NTU. As water turbidity increases, the discrepancy between the distances measured in the underwater target point cloud and the reference values becomes more pronounced (Fig. 6). After applying the FastICA algorithm, the point cloud distribution more closely aligns with the reference values under different turbidity conditions, reducing the deviation between measured and reference values and enhancing the quality of three-dimensional point cloud imaging (Fig. 7). The root mean square error of all three-dimensional point cloud ranging results in water with varying turbidity levels is calculated. Applying the FastICA algorithm reduces the root mean square error of three-dimensional point cloud ranging in different turbid waters. The reduction effect is more evident as turbidity increases. For instance, when water turbidity reaches 20.5 NTU, statistical analysis indicates that the root mean square error of point cloud ranging decreases from 3.9 cm to 3.5 cm. Experimental results show that applying the FastICA algorithm to underwater lidar effectively mitigates the impact of backscattering noise on ranging accuracy, thereby improving both the accuracy of ranging and the quality of imaging in underwater lidar applications.

Conclusions: By employing the Monte Carlo simulation method, our study simulates the detection echo signals of underwater lidar in water bodies with varying scattering coefficients. The simulation results indicate that as the water scattering coefficient increases, the backscattering noise in the lidar echo signal significantly increases, leading to a notable decrease in the echo signal-to-noise ratio.
Under high scattering coefficients, the target echo can become submerged in scattered noise. The FastICA algorithm is applied to the photon underwater transmission model established using the Monte Carlo algorithm to separate the echo signal from the backscattering noise. The results demonstrate that the FastICA algorithm effectively separates the underwater target echo signal and backscattering noise, thereby improving the signal-to-noise ratio of the denoised echo signal. An experimental system for underwater lidar scanning imaging is constructed to scan and range underwater targets. The host computer controls the vibrating mirror scanning and signal acquisition card, processes the signals, and achieves three-dimensional point cloud imaging of underwater targets. FastICA is implemented in the scanning of underwater lidar for three-dimensional point cloud imaging. Post FastICA processing, the quality of three-dimensional point cloud imaging is significantly boosted. Statistical analysis is conducted on ranging errors of all three-dimensional point clouds in water with varying turbidity levels. Applying the FastICA algorithm results in a notable reduction in the root mean square error of three-dimensional point cloud ranging. Experimental findings unveil that integrating the FastICA algorithm into an underwater lidar system effectively mitigates the influence of backscattering noise, thereby strengthening system ranging accuracy and imaging quality.
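
The separation step relies on the statistical independence of the target echo and the backscattering noise. The sketch below illustrates the general FastICA idea (whitening followed by a fixed-point iteration with a tanh nonlinearity and symmetric decorrelation) using NumPy only. It is not the authors' implementation: the two random sources merely stand in for independent signal components, and the mixing matrix `A`, the two-channel observation model, and all function names are assumptions made for illustration.

```python
import numpy as np

def _sym_decorrelate(W):
    # W <- (W W^T)^(-1/2) W, which keeps the rows of W orthonormal.
    s, U = np.linalg.eigh(W @ W.T)
    return U @ np.diag(1.0 / np.sqrt(s)) @ U.T @ W

def fastica(X, n_iter=200, tol=1e-8, seed=0):
    """Estimate independent components from X (n_channels x n_samples)."""
    X = X - X.mean(axis=1, keepdims=True)
    # Whitening: rotate and scale so that the channels are uncorrelated
    # with unit variance.
    d, E = np.linalg.eigh(np.cov(X))
    Z = (E / np.sqrt(d)).T @ X
    m, n = Z.shape
    rng = np.random.default_rng(seed)
    W = _sym_decorrelate(rng.standard_normal((m, m)))
    for _ in range(n_iter):
        Y = np.tanh(W @ Z)
        # Fixed-point update: E[z g(w^T z)] - E[g'(w^T z)] w, for every row w.
        W_new = (Y @ Z.T) / n - np.diag((1.0 - Y**2).mean(axis=1)) @ W
        W_new = _sym_decorrelate(W_new)
        converged = np.max(np.abs(np.abs(np.sum(W_new * W, axis=1)) - 1.0)) < tol
        W = W_new
        if converged:
            break
    return W @ Z

# Toy data: two independent non-Gaussian sources, linearly mixed (assumed model).
rng = np.random.default_rng(1)
S = np.vstack([rng.laplace(size=5000), rng.uniform(-1.0, 1.0, size=5000)])
A = np.array([[1.0, 0.6], [0.4, 1.0]])   # hypothetical mixing matrix
X = A @ S
S_hat = fastica(X)

# Absolute correlation between each true source and each recovered component.
corr = np.abs(np.corrcoef(np.vstack([S, S_hat]))[:2, 2:])
```

ICA recovers components only up to order, sign, and scale, so the correlation matrix `corr` is the appropriate check: each true source should match exactly one recovered component.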

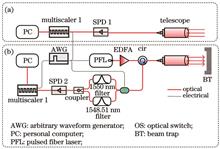

Objective: Atmospheric environmental problems such as air pollution and greenhouse gas emissions not only impact climate change but also seriously threaten human life. Both greenhouse gas emissions reduction and air pollution control are related to changes in atmospheric composition. In the context of “double carbon”, China aims to increase its national contribution, striving to peak carbon dioxide emissions by 2030 and achieve carbon neutrality by 2060. All policies formulated for atmospheric environmental improvement rely on accurate data on air pollution and greenhouse gas emissions. For this reason, the solution to atmospheric environmental problems depends on accurate monitoring technologies and forecasting methods for atmospheric parameters. Lidar has obvious advantages in atmospheric parameter monitoring because of its high spatial and temporal resolution, high sensitivity, real-time operation, and non-contact measurement. To achieve a compact and lightweight design suitable for various load platforms, the all-fiber coaxial lidar is an attractive option. In recent years, all-fiber lidar has been widely applied in atmospheric parameter measurement due to its flexible transformation of light beams, lower susceptibility to temperature, pollution, and other environmental factors, and ability to achieve higher-precision measurement. Compared with the biaxial system, the all-fiber coaxial system has significant advantages, such as low cost, a simple, compact, and stable structure, and a small blind zone. Meanwhile, the amplified spontaneous emission (ASE) noise from the fiber amplifier is inevitable for a coaxial lidar system and degrades the performance of the lidar system. The ASE backscattering from specular reflection results in a decreased signal-to-noise ratio, shortened effective measurement distance, and even misidentification. The ASE noise of the amplifier can be regarded as a fingerprint function.
To improve the performance of the all-fiber coaxial lidar, a method for calibrating ASE noise is proposed, and changes in the telescope mirror reflectivity and laser power are taken into account. By calibrating the function of the ASE noise of the fiber amplifier in a lidar system, the ASE noise of the all-fiber coaxial lidar is mitigated, thus improving the signal-to-noise ratio and performance of the all-fiber coaxial lidar.

Methods: To acquire accurate data for all-fiber coaxial lidar, it is necessary to remove the ASE noise, which requires a calibration method for ASE. Coaxial lidar and biaxial lidar simultaneously measure atmospheric backscattering signals along the same optical path. The backscattering photon counts received by both the coaxial and biaxial systems are first denoised. Subsequently, the denoised photon counts are normalized to the photon counts received by the coaxial system. By comparing the backscattering data from the coaxial lidar with that from the biaxial lidar, specifically subtracting the biaxial system’s photon counts from the coaxial system’s, the ASE noise is revealed. The ASE noise function, which can be seen as a “fingerprint function”, is derived by fitting the ASE noise data. This ASE noise function enables the determination of the true photon counts for the coaxial lidar and effectively mitigates the ASE noise. Atmospheric aerosol extinction coefficients and other atmospheric parameters are then derived from the true data. When the laser power or the telescope specular reflectivity changes due to long-term use or operation in a poor environment, the ASE noise function may also change. By measuring the laser power or reflectivity of the telescope, estimating the ratio of variation, and adjusting the ASE noise function accordingly, accurate results can be obtained without needing to recalibrate the ASE noise function.
Additionally, time-sharing optical switching allows for accurate background noise measurement of the detector.

Results and Discussions: An experiment has been conducted to verify the validity of the ASE noise calibration method by comparing the aerosol extinction coefficient data retrieved from the coaxial lidar with those from the biaxial lidar. The measured data and the fitted ASE noise function of the coaxial lidar are shown, and the ASE noise function agrees closely with the measured data [Fig. 2(a)]. The photon counts of the coaxial lidar after ASE noise mitigation are compared with those of the biaxial lidar, and the result shows that the ASE noise function can be applied to mitigate ASE noise in coaxial lidar [Fig. 2(b)]. A field experiment was conducted in Hefei on October 25, 2021, to verify the effectiveness and reliability of this method. For a coaxial lidar, after the measured data are denoised and the ASE noise is mitigated, the backscattering photon counts are obtained. The extinction coefficients are retrieved from the photon counts by means of Fernald’s method. Extinction coefficient data retrieved for the coaxial and biaxial single-photon lidars are compared (Fig. 3), and the relative deviation of the coaxial lidar data from the biaxial lidar data is shown [Fig. 3(b)]. The result shows that the atmospheric extinction coefficient obtained by the coaxial lidar agrees well with that of the biaxial lidar, with a relative deviation within ±10%. The result indicates that the calibration method can mitigate ASE noise effectively.

Conclusions: The ASE noise from the fiber amplifier is inevitable and degrades the performance of all-fiber coaxial lidar significantly. The ASE backscattering from specular reflection results in decreased signal-to-noise ratio, shortened effective measurement distance, and even misidentification.
A calibration method is proposed in which the backscattering data received from the coaxial lidar are compared with those of the biaxial lidar, and the ASE noise function is derived from the difference. The ASE noise given by this function is subtracted from the backscattering data of the coaxial lidar to obtain the true photon counts. An experiment verifies the validity of the method by comparing the aerosol extinction coefficient data retrieved from the coaxial lidar with those from the biaxial lidar. The results show that the method effectively improves the performance of coaxial lidar, with a relative deviation within ±10% when compared with biaxial lidar. Additionally, changes in the mirror reflectivity of the telescope and laser power have been included in the method.
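
The calibration procedure described above (subtract the biaxial counts from the normalized coaxial counts, fit the difference, then subtract the fitted function) can be sketched on synthetic data as follows. The quadratic form of the fitted function, the synthetic signal shapes, the name `ase_noise`, and the simple multiplicative `power_ratio` scaling are all assumptions made for illustration; the source does not state the functional form or the exact adjustment rule.

```python
import numpy as np

rng = np.random.default_rng(0)
r = np.linspace(0.1, 5.0, 200)                 # range bins in km (synthetic)

# Synthetic, already denoised and normalized photon counts.
atmos = 1e3 * np.exp(-0.8 * r) / r**2          # atmospheric backscatter
ase_true = 40.0 - 12.0 * r + 1.0 * r**2        # assumed ASE contribution
biaxial = atmos                                # biaxial system: no ASE term
coaxial = atmos + ase_true + rng.normal(0.0, 0.5, r.size)

# Step 1: the coaxial-minus-biaxial difference reveals the ASE noise.
ase_samples = coaxial - biaxial

# Step 2: fit a smooth "fingerprint" function (quadratic is an assumption).
coeffs = np.polyfit(r, ase_samples, deg=2)

def ase_noise(r_km, power_ratio=1.0):
    """Fitted ASE noise; power_ratio is a hypothetical scaling for a
    measured change in laser power or mirror reflectivity."""
    return power_ratio * np.polyval(coeffs, r_km)

# Step 3: subtract the fitted ASE noise from later coaxial measurements.
corrected = coaxial - ase_noise(r)
```

Once `coeffs` is stored, later coaxial measurements can be corrected without a simultaneous biaxial measurement, which is the practical benefit of treating the ASE noise as a fixed fingerprint.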

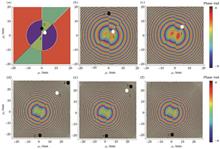

Objective: Singular optics has been associated for decades with the study of phase singularities in fully coherent beams. There are two main types of phase singularities: optical vortices and edge dislocations. Recent research has shown that the correlation functions of partially coherent beams can also exhibit phase singularities. This has led to the introduction of a new type of singularity, namely the correlation vortex, which is similar to the optical vortex and is defined as a phase singularity of the two-point cross-spectral density (CSD) function of the fields. While much research has focused on fully coherent beams, partially coherent beams have practical advantages due to their greater resistance to degradation when propagating through random media. In addition to correlation vortices, we propose the existence of another type of correlation singularity: the coherent edge dislocation. We introduce the concept of the coherent edge dislocation carried by Gaussian Schell-model (GSM) beams, since the GSM beam is a classic example of a partially coherent beam. We then study the interaction of two coherent edge dislocations carried by GSM beams as they propagate through free space and atmospheric turbulence, both theoretically and numerically.

Methods: By drawing an analogy with edge dislocations in coherent beams, we show that coherent edge dislocations exist in partially coherent beams. Based on the extended Huygens-Fresnel principle, we derive the analytical expression for the CSD of GSM beams carrying two edge dislocations propagating through atmospheric turbulence. This expression is used to study their interaction in both free space and atmospheric turbulence.
The positions of the correlation singularities of partially coherent beams in the z-plane can be determined from the real and imaginary components, as well as from the phase distribution of the spectral degree of coherence of the GSM beams.

Results and Discussions: The CSD of partially coherent beams has a well-defined phase with respect to two points, and the phase singularities of the CSD are called correlation singularities. In line with previous research, we propose the existence of another type of correlation singularity: the coherent edge dislocation, which exhibits a π-phase shift along a line in the transverse plane of the correlation function. Setting the refractive index structure constant $C_n^2 = 0$ reduces the expression for the CSD to the CSD formula of GSM beams in free space, allowing us to discuss their interaction in this environment. The two coherent edge dislocations disappear with propagation, while two correlation vortices with opposite topological charges emerge due to their interaction. However, the total topological charge of the correlation vortices is not conserved due to the possible appearance or disappearance of correlation vortices during propagation, unlike the interaction of two edge dislocations, where the total topological charge is zero and conserved during propagation (Fig. 1). The total topological charge is not conserved in the propagation of initial beams with coherence vortices and off-axis edge dislocation in oceanic turbulence due to the possible appearance or disappearance of correlation vortices. This result is compared with the interaction of a phase vortex and an off-axis edge dislocation in free space, where the total topological charge is conserved. When GSM beams carrying two parallel coherent edge dislocations propagate, the coherent edge dislocations disappear, but no correlation singularities appear in the fields.
However, coherent edge dislocations can reappear with propagation (Fig. 3). This differs from the evolution of two parallel edge dislocations in free space, where phase singularities disappear with propagation. When GSM beams carrying two perpendicular coherent edge dislocations propagate, the coherent edge dislocations vanish, and one or two correlation vortices appear during free-space propagation (Fig. 4). This result differs from the interaction of two perpendicular edge dislocations, where no optical vortices appear during propagation. The value of the refractive index structure constant affects the appearance, number, and position of correlation vortices when GSM beams carrying two coherent edge dislocations propagate through atmospheric turbulence (Fig. 5). When the two coherent edge dislocations are parallel in the initial plane, correlation singularities may appear but no coherent edge dislocations are observed during propagation, which is different from the free-space case, where coherent edge dislocations may recur but no correlation singularities appear (Fig. 6). Conversely, if the two coherent edge dislocations are perpendicular in the initial plane, coherent edge dislocations may appear but no correlation vortices appear, which is different from the free-space case (Fig. 7).

Conclusions: In addition to correlation vortices, coherent edge dislocations are shown to exist. The CSD of GSM beams carrying two coherent edge dislocations is derived based on the extended Huygens-Fresnel principle. The coherent edge dislocations are generally unstable and disappear, while correlation vortices or edge dislocations may appear during propagation. The number of correlation vortices can change due to the creation or disappearance of these vortices in the fields.
A comparison of the interaction of coherent edge dislocations in atmospheric turbulence with that in free space is made.
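
The topological charge of a vortex is the number of 2π phase windings around a closed loop enclosing it. The sketch below counts that winding numerically for a complex-valued function sampled on a circle. The test function is a toy vortex pair with opposite charges, chosen only to illustrate the counting; it is not the CSD or the spectral degree of coherence derived in the paper, and the names `topological_charge` and `vortex_pair` are hypothetical.

```python
import numpy as np

def topological_charge(field, x0, y0, radius=0.5, n=720):
    """Phase winding number of field(x, y) around a circle centred at (x0, y0)."""
    theta = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)
    x = x0 + radius * np.cos(theta)
    y = y0 + radius * np.sin(theta)
    phase = np.angle(field(x, y))
    # Phase increments along the closed loop, wrapped into [-pi, pi).
    dphi = np.diff(np.append(phase, phase[0]))
    dphi = (dphi + np.pi) % (2.0 * np.pi) - np.pi
    return int(np.rint(dphi.sum() / (2.0 * np.pi)))

def vortex_pair(x, y):
    # Toy field: charge +1 vortex at (1, 0) and charge -1 vortex at (-1, 0).
    return ((x - 1.0) + 1j * y) * ((x + 1.0) - 1j * y)

q_plus = topological_charge(vortex_pair, 1.0, 0.0)
q_minus = topological_charge(vortex_pair, -1.0, 0.0)
q_total = topological_charge(vortex_pair, 0.0, 0.0, radius=3.0)
```

A loop that encloses both vortices returns the sum of the individual charges, which is how conservation (or non-conservation) of the total topological charge can be checked plane by plane.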

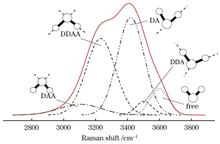

Objective: The monitoring of temperature and salinity (electrical conductivity) in seawater is of significant importance for understanding and predicting the responses of marine ecosystems, hydrological cycles, climate change, and the sustainable utilization of marine resources. The high spatial gradient characteristics of extreme environmental regions, such as hydrothermal or cold seep areas, pose new requirements for in situ measurements of temperature and salinity. Traditional conductivity, temperature, and depth (CTD) equipment, based on contact measurement, cannot achieve high spatial resolution simultaneous measurement of temperature and salinity, nor perform simultaneous measurement of temperature and salinity at a single point. It has been proven that the Raman spectrum of water exhibits a clear linear relationship with temperature and salinity. Raman spectroscopy offers non-contact measurements and simultaneous detection of various water parameters. These capabilities provide the potential for measuring temperature and salinity in extreme submarine environments. In this study, we aim to achieve fast, accurate, and real-time in situ detection of seawater temperature and salinity using the Raman spectrum.

Methods: A 532 nm excitation optical setup (Fig. 2) is established in the laboratory to acquire Raman spectra of OH bonds at different temperatures and salinities. Simulated seawater samples are prepared with varying concentrations of NaCl, and their salinities are measured using a conductivity meter. Temperature control is achieved using a Peltier-based cuvette holder for precise temperature regulation. A total of 170 sets of Raman spectra are obtained (Tables 1 and 2), divided into training and prediction sets at a ratio of 7:3. The acquired Raman spectra are baseline subtracted and normalized for consistency. The Levenberg-Marquardt (L-M) method is employed to decompose the Raman spectra into five Gaussian peaks (Figs. 3 and 4).
The extracted peak heights, widths, and positions of these Gaussian peaks are used as training features, in conjunction with machine learning methods including partial least squares regression (PLSR), least absolute shrinkage and selection operator (LASSO) regression, support vector regression (SVR), and a long short-term memory network with an integrated attention mechanism (LSTM+AM). To enhance predictive performance, a Stacking ensemble learning model is constructed using PLSR, SVR, LASSO, and multiple linear regression (MLR) as primary learners, with MLR serving as the secondary learner to simultaneously predict temperature and salinity. Evaluation metrics include mean squared error (EMS), mean absolute error (EMA), and the coefficient of determination (R²).

Results and Discussions: The OH stretching vibration peak spectra of water are compared at different temperatures under the same salinity and at different salinities under the same temperature. Figure 5 illustrates that the Raman shifts at 3170 and 3536 cm⁻¹ exhibit the highest sensitivity to temperature, whereas 3195 cm⁻¹ shows the highest sensitivity to salinity. Changes in spectral intensity demonstrate a clear linear relationship with both temperature and salinity. The OH stretching vibration peak is resolved into five sub-peaks, each of which also displays a robust linear relationship with temperature and salinity. Quantitative analysis is independently conducted using PLSR, LASSO, SVR, and LSTM+AM. LSTM+AM yields the best simultaneous predictions for temperature and electrical conductivity, with mean squared errors below 0.28 ℃ and 1.89 mS/cm, respectively (Fig. 8). A subsequent Stacking model incorporating PLSR, LASSO, SVR, and MLR achieves even better quantitative results (Fig. 9), with mean squared errors of 0.23 ℃ for temperature prediction and 1.63 mS/cm for electrical conductivity prediction.

Conclusions: The Raman OH stretching vibration peak of water molecules consists of multiple sub-peaks due to the local hydrogen bond network effect. Changes in temperature and salinity influence the hydrogen bond composition, thereby altering the spectral shape. Analysis of simulated seawater Raman spectra across varying temperatures and salinities reveals that OH sub-peak intensities exhibit clear linear relationships with temperature and salinity, respectively. With the help of this linear relationship, the Raman spectrum proves capable of accurately measuring seawater temperature and salinity. The L-M algorithm decomposes the water peak into five sub-peaks corresponding to different hydrogen bonds, utilizing peak intensity, width, and other sub-peak characteristics for simultaneous calibration of temperature and salinity. While several traditional multivariate calibration methods are employed for simultaneous prediction, the LSTM+AM model outperforms them. To further strengthen accuracy and robustness, we use a Stacking ensemble learning model to integrate multiple base models such as PLSR, LASSO, and SVR during training. Quantitative results demonstrate the proposed method’s effectiveness in simultaneously measuring water temperature and salinity. Mean squared errors for temperature and electrical conductivity are 0.23 ℃ and 1.63 mS/cm, respectively. This method of using the Raman spectrum for simultaneous prediction of seawater temperature and salinity holds promise for in situ Raman spectrum research, particularly in extreme deep-sea environments.
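
The three evaluation metrics named in the Methods follow their standard definitions, sketched below with NumPy. The function names and the sample temperature values are illustrative only and are not data from the study.

```python
import numpy as np

def mean_squared_error(y_true, y_pred):
    """E_MS: mean of the squared prediction errors."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return np.mean((y_true - y_pred) ** 2)

def mean_absolute_error(y_true, y_pred):
    """E_MA: mean of the absolute prediction errors."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return np.mean(np.abs(y_true - y_pred))

def r_squared(y_true, y_pred):
    """R²: one minus residual sum of squares over total sum of squares."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

# Made-up temperatures in ℃, each prediction off by 0.5 ℃.
t_true = [20.0, 22.0, 24.0, 26.0]
t_pred = [20.5, 21.5, 24.5, 25.5]
ems = mean_squared_error(t_true, t_pred)    # 0.25
ema = mean_absolute_error(t_true, t_pred)   # 0.5
r2 = r_squared(t_true, t_pred)              # 0.95
```

Note that the mean squared error carries squared units (℃² in this example), whereas the mean absolute error is in the units of the measured quantity.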

ObjectiveChina has vast water areas and a long coastline. Unlike sensor detection technology, imaging detection technology provides a direct display of water conditions through non-contact remote sensing images, which is of great significance for the development of water economy and water-related scientific research. In an outdoor environment, sunlight reflected on the water surface forms strong polarized glints and affects the water brightness, making it extreme challenging to obtain clear images and affecting water surface imaging. This leads to large-area pixel saturation and pixel information loss in imaging detector. Therefore, high dynamic range (HDR) imaging is required for water surface scenes. Fully utilizing polarization information can provide new insights for HDR technology in water scenes. Although glint on the water surface exhibits distinct polarization characteristics, polarized images can reduce such impacts on the rough water surface. In this study, we report a method called water surface polarization HDR (WP-HDR), which utilizes a division of focal plane (DOFP) system to suppress sunlight glints and achieve HDR imaging of water scenes. Real-time water surface HDR imaging is achieved based on the DOFP system. We hope that our basic strategy and findings can contribute to applications such as water environment protection and aquatic meteorological monitoring.MethodsWe focus on the polarization water surface HDR method and use the DOFP system to obtain four images in polarization directions in 0°, 45°, 90°, and 135°, respectively. Firstly, based on the principles of polarization imaging and Stokes vector calculation, we process a frame of an image from the DOFP system to obtain an image in any polarization direction. Through polarization processing, we suppress the high-intensity glints on the water surface, obtaining the maximum and minimum grayscale images simultaneously, Imax and Imin. 
We then use the Otsu segmentation method and filtering to segment Imax and identify the regions of interest Idark for enhancement. Finally, we apply pixel-wise linear fusion to enhance the underexposed regions. Based on Imin and Idark, we employ Laplacian filtering to enhance image details. The liner enhancement coefficient is determined based on the variance and mean of the dark regions targeted for enhancement.Results and DiscussionsWe use the DOFP system to capture images for testing in three actual water scenes. The experimental results indicate that the selection of the polarization direction is consistent with theoretical analysis. The employed segmentation strategy effectively extracts dark regions. The WP-HDR and DOFP systems produce an effect that cannot be achieved with single polarization direction images, highlighting the necessity of our method and device selection. Results from real-world experiments demonstrate that bright spots in HDR images are effectively suppressed. The contour information of both background and target details becomes clearer, and the composite contrast, standard deviation, and average gradient show significant improvement. The proposed composite contrast, reflecting the degree of light suppression and the accuracy of target representation, can be increased by up to three times. The experiments confirm that our method is suitable for water surface imaging under strong reflection interference and can identify targets in aquatic environments. It also possesses advantages of scene universality, processing adaptability, and real-time handling of dynamic targets. The results are shown in Fig. 8(d).ConclusionsBased on the polarization of water surface reflections, we propose an HDR imaging method called WP-HDR, which employs a DOFP system to suppress sunlight reflection in water scenes. 
The method utilizes the DOFP system to capture images with different polarization directions at the same moment, enabling polarization measurements of the reflective water surface. The image processing involves three main steps. First, the bright spot areas in the image exhibit strong polarization characteristics. By leveraging the optimal polarization angle, the minimum average grayscale, and the polarization image, the reflection on the water surface is effectively suppressed. Based on the principles of polarization imaging, we calculate the images with the maximum and minimum grayscale, Imax and Imin. Imax features the largest inter-class variance, while Imin suppresses the reflected light. Second, by applying Otsu image segmentation and filtering to Imax, we determine the enhancement region, which reduces the discrete pixels introduced by reflections and accurately extracts the background and targets. Third, based on image information, we apply adaptive linear fusion to the regions requiring enhancement, brightening the darker areas. Experimental results demonstrate that the processed images effectively suppress glints, resulting in clearer details and contour information of both the background and targets. The composite contrast, standard deviation, and average gradient show significant improvements. The proposed composite contrast, which reflects the degree of glare suppression and the accuracy of target representation, can be increased by up to three times. The correctness and necessity of the proposed method are validated. Compared to time-domain algorithms, this method has advantages such as good real-time performance, a simplified mechanical structure, and accurate regional computation, making it more versatile and flexible. The WP-HDR method has important practical applications in water imaging technologies such as target detection, recognition, and tracking. 
By utilizing polarization information, glint interference can be effectively suppressed, improving the quality of image observations. The method is therefore of practical significance for water surface environmental engineering.
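The Stokes-vector step described above can be illustrated per pixel. The following is a minimal sketch under the textbook assumption of ideal linear polarizers in the DOFP array; the function names are illustrative and not taken from the paper, and the published method may select a single global polarization angle (by minimum average grayscale) rather than the per-pixel extremes shown here.

```python
import math

def stokes_from_dofp(i0, i45, i90, i135):
    """Linear Stokes components from the four DOFP channel intensities."""
    s0 = 0.5 * (i0 + i45 + i90 + i135)  # total intensity
    s1 = i0 - i90                       # 0 deg vs 90 deg preference
    s2 = i45 - i135                     # 45 deg vs 135 deg preference
    return s0, s1, s2

def intensity_at_angle(s0, s1, s2, theta_deg):
    """Intensity behind an ideal linear analyzer oriented at theta_deg."""
    t = math.radians(2.0 * theta_deg)
    return 0.5 * (s0 + s1 * math.cos(t) + s2 * math.sin(t))

def extreme_intensities(s0, s1, s2):
    """Per-pixel maximum and minimum over all analyzer angles (Imax, Imin)."""
    polarized = math.hypot(s1, s2)
    return 0.5 * (s0 + polarized), 0.5 * (s0 - polarized)

def degree_of_linear_polarization(s0, s1, s2):
    """Fraction of the light that is linearly polarized (0 for dark pixels)."""
    return math.hypot(s1, s2) / s0 if s0 > 0 else 0.0

# Hypothetical glint pixel: strongly polarized along 0 degrees.
s0, s1, s2 = stokes_from_dofp(100.0, 50.0, 0.0, 50.0)
i_max, i_min = extreme_intensities(s0, s1, s2)
```

In this idealized example the glint pixel is fully polarized, so the minimum-intensity image removes it entirely; real glints are only partially polarized, which is why the subsequent segmentation and fusion steps are still needed.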

ObjectiveWireless ultraviolet scattering communication is a wireless communication technology based on atmospheric particle scattering. Due to its strong scattering characteristics, wireless ultraviolet can be applied to special scenarios such as non-direct vision. However, this strong scattering effect can lead to an obvious multipath effect of wireless ultraviolet and cause serious pulse broadening. In the case of a high data rate, this phenomenon will cause inter-symbol interference and even cause information misjudgment, leading to the increase of bit error rate and poorer communication performance. To improve wireless ultraviolet communication, it is necessary to study the signal processing technology for ultraviolet scattering channel. As a key technology in wireless optical communication, channel equalization can effectively suppress or eliminate inter-symbol interference. As an artificial intelligence method, deep learning has developed rapidly in recent years. With wide application, it can also be applied to the signal processing of wireless optical communication, which inspires channel equalization. In this paper, we combine deep learning technology with ultraviolet optical communication to achieve more efficient and intelligent wireless ultraviolet optical communication.MethodsWe study the channel problem of wireless ultraviolet (UV) scattering communication, and establish a single scattering channel model for non-line-of-sight UV. We analyze the scattering channel characteristics in terms of impulse response and path loss, to provide a suitable channel model for subsequent equalization. Then, we combine long short term memory recurrent neural network (LSTM) and deep neural network (DNN) to develop a blind equalization method for UV scattering channel based on a hybrid neural network, which can preprocess the training data into a time sequence, and process the temporal dependence of the input signals through LSTM to extract useful temporal features. 
The nonlinear features of the signal data are further explored using DNN to enhance the prediction performance of the model, which features flexibility, adaptivity, and nonlinear modeling capability, and is capable of learning and adapting to complex UV scattering channels without prior information. With sufficient training sample data and the learning capability of the hybrid neural network, the signal can be equalized accurately and efficiently.Results and DiscussionsBased on the bit error rate (BER) and mean square error (MSE) as indicators, the proposed scheme (LSTM-DNN), the classical adaptive equalization algorithms [least mean square (LMS) and recursive least square (RLS)], and the DNN-based channel equalization scheme are comprehensively compared and analyzed. When the signal-to-noise ratio (SNR) is less than 5 dB, the BER curves of the algorithms are close to coincident; when the SNR is greater than 5 dB, it is observed that the BERs of DNN and LSTM-DNN begin to be gradually lower than those of LMS and RLS, with the BER of LSTM-DNN significantly lower than that of the DNN; when the SNR exceeds 9 dB, the BER of LSTM-DNN can be reduced by 0.5 to 2 orders of magnitude compared with that of the traditional algorithm [Fig. 10(a)]. Similarly, when the SNR is less than 5 dB, the MSE curves of LSTM-DNN and DNN are close to coincident, and the MSE is slightly lower than those of LMS and RLS; when the SNR is greater than 5 dB, the MSE of the LSTM-DNN is the lowest of all the algorithms [Fig.10(b)]. 
These results show that with a high SNR, the neural network model can better capture the difference between signal and noise, so DNN and LSTM-DNN show better equalization performance when the SNR is greater than 5 dB, while the LSTM in LSTM-DNN can automatically capture the temporal correlation in the signal, so it is more suitable for feature extraction of signal sequences.ConclusionsAiming at the serious pulse broadening and signal attenuation of non-line-of-sight wireless UV optical communication due to various factors such as atmospheric scattering, we propose a blind equalization method based on a hybrid neural network for the UV scattering channel. In this method, LSTM and DNN are combined, and the received signal is treated as a time sequence, without the need to study prior channel information. Also, LSTM’s powerful learning ability regarding temporal memory sequence is used to extract the characteristics of the received signal to recover the original signal. Simulation results show that when the SNR is greater than 11 dB, the BER of the proposed algorithm can be reduced by one to two orders of magnitude and the MSE is reduced by more than 0.5 orders of magnitude compared with the LMS algorithm and the RLS algorithm; compared with DNN, the equalization performance of the proposed algorithm is better, and the BER and the MSE of the proposed algorithm are reduced by 81.0% and 27.8% respectively when the SNR is equal to 11 dB, proving that the hybrid neural network has stronger noise suppression ability.
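For orientation, the classical LMS baseline and the two metrics (BER and MSE) used in the comparison can be sketched as follows. This is not the proposed LSTM-DNN blind equalizer: it is a data-aided LMS filter trained with known symbols, and the two-tap channel, tap count, and step size are hypothetical values chosen only to show inter-symbol interference being removed, not a model of the UV scattering channel.

```python
import random

def lms_equalize(received, desired, n_taps=5, mu=0.02):
    """Data-aided LMS linear equalizer; returns the soft output at each step."""
    weights = [0.0] * n_taps
    outputs = []
    for n in range(len(received)):
        # Most recent sample first; zero-pad before the start of the record.
        window = [received[n - k] if n - k >= 0 else 0.0 for k in range(n_taps)]
        y = sum(w * x for w, x in zip(weights, window))
        err = desired[n] - y
        weights = [w + mu * err * x for w, x in zip(weights, window)]
        outputs.append(y)
    return outputs

def bit_error_rate(sent, soft):
    """Fraction of BPSK symbols whose sign is decided incorrectly."""
    errors = sum(1 for s, y in zip(sent, soft) if (y >= 0) != (s > 0))
    return errors / len(sent)

def mean_square_error(sent, soft):
    """Mean squared difference between sent symbols and soft outputs."""
    return sum((s - y) ** 2 for s, y in zip(sent, soft)) / len(sent)

# Hypothetical noise-free test: BPSK symbols through a two-tap ISI channel.
random.seed(0)
symbols = [random.choice((-1.0, 1.0)) for _ in range(2000)]
received = [symbols[n] + (0.4 * symbols[n - 1] if n > 0 else 0.0)
            for n in range(len(symbols))]
equalized = lms_equalize(received, symbols)
tail = slice(1500, None)  # evaluate after the weights have converged
mse_before = mean_square_error(symbols[tail], received[tail])
mse_after = mean_square_error(symbols[tail], equalized[tail])
```

Comparing `mse_before` with `mse_after` shows the residual inter-symbol interference shrinking once the filter has adapted; the neural equalizers in the study are evaluated with the same two metrics but do not require the known training symbols used here.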

ObjectiveChlorophyll-a (Chl-a) mass concentration is a primary indicator for water color retrieval. In the visible wavelength band, compared to the contribution of atmospheric radiance, the contribution of water radiance constitutes only a small fraction of the total radiance received by the remote sensing sensor at the top of the atmosphere. Therefore, there is a high demand for the radiance detection accuracy of the remote sensors, which requires accurate on-orbit radiometric calibration before the data can be applied. The large field-of-view remote sensor, the directional polarimetric camera (DPC), is not designed with an on-board calibration and cross-calibration system. With the development of remote sensing technology, site calibration has become a common method for alternative calibration of satellite remote sensors in orbit. We use the ocean scene to carry out the on-orbit calibration of the visible band and verify the water color remote sensing products before and after the calibration. This proves that Rayleigh scattering calibration based on the ocean scene can improve the authenticity and accuracy of the water color remote sensing products of the DPC.MethodsGuided by this concept, to expand the application scope of the Carbon Monitoring in Terrestrial Ecosystems Satellite (TECIS)’s DPC, we initially perform Rayleigh scattering calibration on the DPC for ocean scenes from the CM Satellite, screening according to surface atmospheric conditions to obtain calibration sample points. Subsequently, we estimate the top-of-atmosphere apparent reflectance of the DPC in the blue and green bands, comparing it with the measured reflectance of the DPC to derive the radiometric calibration factor A. Finally, we validate the accuracy of the radiometric calibration. Then, combining the MODIS aerosol optical thickness product with the look-up table method, we estimate the atmospheric range radiation for atmospheric correction. 
The DPC bands at 443, 490, and 565 nm, pre- and post-radiometric calibration, are used as inputs to the Chl-a retrieval algorithm. The inversion region is selected from data acquired over the ocean off northwest Australia on August 22, 2022, with authenticity testing conducted using Moderate Resolution Imaging Spectroradiometer (MODIS) water color retrieval data from the same region and date.Results and DiscussionsWe obtain results from Rayleigh scattering calibration, atmospheric correction, and validation of retrieved Chl-a mass concentrations: 1) At ocean calibration sites, we verify the accuracy of radiometric calibration in the blue-green bands of the DPC (443, 490, 565, 670 nm bands). Results indicate that the radiometric calibration factors for each band are close to 1, with correlation coefficients (R2) above 0.9, root-mean-square errors (RMSE) below 2%, and mean absolute errors (MAE) below 0.02, suggesting minimal dispersion in these calibration results. Rayleigh scattering calibrations are validated using desert and polar calibrations, with radiometric calibration factors for the ocean scene deviating about 3% from those for snow/ice and desert scenarios (Figs. 3-4 and Tables 6-8). 2) Utilizing the 6SV atmospheric radiative transfer model and combining it with the MODIS aerosol optical thickness product along with other observational and atmospheric environmental parameters, we perform atmospheric corrections for the study area. Results show that the corrected surface reflectance is generally lower than the apparent reflectance before correction, and validation against the MOD09GA surface reflectance product gives a relative error of less than 25% in each band (Fig. 5 and Table 9). 3) Employing the OC3 Chl-a retrieval algorithm, we test the authenticity of water color remote sensing products before and after calibration. 
Results reveal that the R2 for Chl-a mass concentrations measured by the post-calibration DPC is higher at 0.7720, with a lower RMSE at 0.0578 and MAE at 0.0457 (Fig. 6 and Table 10).ConclusionsIn this study, we establish a water color retrieval algorithm suitable for the DPC. To enhance the retrieval accuracy of water color remote sensing products, we employ a Rayleigh calibration method combining multiple ocean scenes for rapid on-orbit calibration tests in the visible wavelength band before applying water color products. We also conduct authenticity tests on water color remote sensing products pre- and post-adjustment of calibration coefficients. The consistency between calibration results and measurements is good, demonstrating minimal dispersion in calibration outcomes, thereby affirming the effectiveness and reliability of this calibration method. We also use MODIS aerosol optical thickness products and look-up tables to carry out atmospheric correction, and the results show that the atmospheric correction results are good, basically eliminating the influence of the atmosphere. Finally, we perform water color product retrieval and authenticity tests on water color remote sensing products pre- and post-calibration coefficient adjustment, confirming that Rayleigh scattering calibration based on ocean scenes significantly improves the authenticity and accuracy of DPC water color remote sensing products. Test outcomes substantiate a notable rise in the correlation between Chl-a mass concentrations measured by the post-calibration DPC and MODIS data.
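The calibration and validation statistics quoted above (calibration factor A, R2, RMSE, MAE) can be computed as in the sketch below. The definition of A as the least-squares slope through the origin of measured versus simulated reflectance is an assumption made for illustration, as is the use of the squared Pearson correlation for R2; the paper may define either quantity differently, and the sample values are invented.

```python
import math

def calibration_factor(simulated, measured):
    """Least-squares slope through the origin (assumed definition of A)."""
    return (sum(s * m for s, m in zip(simulated, measured))
            / sum(s * s for s in simulated))

def validation_stats(reference, estimate):
    """Return (R2, RMSE, MAE); R2 is the squared Pearson correlation."""
    n = len(reference)
    mean_r = sum(reference) / n
    mean_e = sum(estimate) / n
    cov = sum((r - mean_r) * (e - mean_e) for r, e in zip(reference, estimate))
    var_r = sum((r - mean_r) ** 2 for r in reference)
    var_e = sum((e - mean_e) ** 2 for e in estimate)
    r2 = cov * cov / (var_r * var_e)
    rmse = math.sqrt(sum((r - e) ** 2 for r, e in zip(reference, estimate)) / n)
    mae = sum(abs(r - e) for r, e in zip(reference, estimate)) / n
    return r2, rmse, mae

# Invented sample points: the sensor reads 2% high relative to the simulation.
simulated = [0.10, 0.12, 0.15, 0.20]
measured = [1.02 * s for s in simulated]
factor_a = calibration_factor(simulated, measured)
r2, rmse, mae = validation_stats(simulated, measured)
```

A factor close to 1 with a high R2 and small RMSE and MAE corresponds to the "minimal dispersion" reported for the calibration results.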

ObjectiveIn coal mining regions, extensive coal dust is generated during mining, transportation, and storage, along with substantial black carbon produced by incomplete coal combustion in the industry chain. Over time, these materials form light-absorbing substances and evolve into core-shell aerosols with inorganic salt shells. These aerosols, including sulfate, nitrate, and water, exert significant climate impacts through direct and indirect radiation effects. The environmental and radiative forcing effects are substantial. Absorbing aerosol demonstrates strong solar radiation absorption across the ultraviolet to infrared spectrum. However, past studies have primarily focused on its optical properties in the visible and infrared bands, overlooking ultraviolet band absorption. Current research often assumes a lognormal particle size distribution for absorbing aerosols, neglecting variations in distribution and optical properties resulting from diverse emission scenarios. Therefore, a thorough analysis of absorbing aerosol optical properties at local scales is crucial. Quantitative assessments of particle size distribution, mixing state, and spatio-temporal variations are vital for elucidating the intricate interactions with boundary layer development, radiative forcing changes, and air pollution.MethodsIn our study conducted in the coal mining area of Changzhi City, Shanxi Province, various datasets are collected, including surface black carbon concentration, particle size distribution, and columnar aerosol optical depth (AOD). The investigation begins by using the variance maximization method to categorize AOD data into distinct pollution events. Subsequent analysis evaluates the particle size distribution corresponding to different pollution degrees through probability density functions. 
The uncertainty of particle size for the absorbing aerosol core and shell is then determined by integrating black carbon mass concentration data and particle size distribution information. These uncertainties are then used as input parameters to run the Mie scattering model based on the “core-shell” structure. This process results in the inversion of the multi-band optical characteristic parameters of absorbing aerosol in the coal mining area. The computations are carried out under both the assumption of a uniform distribution and a non-uniform distribution, representing different mixing degrees of aerosols. Finally, the uncertainty interval for the single scattering albedo (SSA) of absorbing aerosol is constrained through the application of absorption Ångström exponent (AAE) theory. This approach provides a detailed understanding of the complex dynamics of absorbing aerosol in the specific context of coal mining environments.Results and DiscussionsIn the coal mining area, absorbing aerosols are influenced by emission sources, manifesting a particle size distribution divergent from the lognormal model. Under various pollution conditions, strong peaks appear in smaller particle size ranges (0.28-0.3 μm), with weaker peaks present around 0.58-0.65 μm. The relative proportion between the two peaks fluctuates in tandem with the pollution severity (Fig. 3). Using the Mie scattering model, the optical characteristics of absorbing aerosol are inverted based on AOD information, black carbon mass concentration, and particle number concentration. Results indicate that under the assumption of a uniform distribution (Fig. 4), the average size of the “core” particles at 0.28, 0.58, and 0.7 μm is relatively low, leading to corresponding patterns in SSA with changes in “core” particle size. Additionally, the average “core” particle size shows no significant variation with changes in wavelength in different size ranges. 
SSA decreases with increasing wavelength, with greater fluctuations in the smaller particle size range (0.25-0.58 μm) and more stable changes in the larger particle size range (0.58-1.6 μm). Under this assumption, the AAE theory is found to be inapplicable. In the case of a non-uniform distribution (Fig. 5), SSA values exhibit a slow, followed by a gradual and then rapid increase in the shortwave region, while in the longwave region, SSA first rapidly increases and then gradually levels off. For shorter wavelengths (500 nm and above), AAE theory proves effective for absorbing aerosol with smaller particle sizes. For longer wavelengths (675 nm and above), AAE theory is applicable to absorbing aerosol with moderate particle sizes. However, for larger particles such as coal dust, AAE theory is not suitable. It is noteworthy that, under both assumptions, the inversion results of SSA values in the longwave spectrum (such as 870 and 936 nm) are relatively lower compared to the shortwave spectrum (such as 440 and 500 nm). This discrepancy will lead to an underestimation of emission quantities.ConclusionsWe conduct on-site observations in the coal mining area of Changzhi City, Shanxi Province, aiming to capture the variation characteristics of AOD, particle concentration, and black carbon mass concentration. Utilizing the Mie scattering model based on the “core-shell” hypothesis, we simulate the SSA of absorbing aerosol under two different mixing states. Additionally, we calculate the optical variations of absorbing aerosol constrained by the AAE. The research findings reveal the following:1) The particle size distribution of absorbing aerosol in the coal mining area deviates from the assumptions made in previous studies, which typically assumed single or double-peaked distributions. Influenced by emission sources, the characteristics vary under different pollution conditions. 
Smaller particles predominantly originate from the incomplete combustion of coal in local power plants and coking factories, producing black carbon. Larger particles stem from the aging processes of black carbon in the atmospheric environment and coal dust generated during coal transportation.2) Comparison of the SSA variations under different mixing states simulated by the two hypotheses indicates that particle size, mixing state, and spectral range significantly impact the SSA of absorbing aerosol. In contrast to previous studies using the infrared spectrum, the present investigation reveals higher SSA values in the ultraviolet and visible light spectrum, suggesting a potential underestimation of black carbon emissions.3) The AAE theory is applicable only to certain particle size ranges in different spectral bands. For large-sized absorbing aerosol in the coal mining area, using the AAE theory to estimate SSA introduces uncertainty, and applying the AAE assumption across all particle size ranges leads to an underestimation of emissions. These findings underscore that the distribution characteristics of SSA in absorbing aerosol do not strictly adhere to the power-law relationship of the AAE index but are collectively determined by particle size distribution, mixing state, and spectral range.
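The power-law relationship behind the AAE can be written out explicitly. The sketch below uses the standard definitions (absorption proportional to wavelength to the power of minus AAE, and SSA as the scattering fraction of extinction); the numerical values are invented, and the sketch does not reproduce the core-shell Mie calculation used in the study.

```python
import math

def absorption_angstrom_exponent(b_abs_1, wl_1, b_abs_2, wl_2):
    """AAE from absorption coefficients at two wavelengths (same units)."""
    return -math.log(b_abs_1 / b_abs_2) / math.log(wl_1 / wl_2)

def extrapolate_absorption(b_abs_ref, wl_ref, wl, aae):
    """Power-law extrapolation: b_abs(wl) = b_abs_ref * (wl / wl_ref) ** (-aae)."""
    return b_abs_ref * (wl / wl_ref) ** (-aae)

def single_scattering_albedo(b_ext, b_abs):
    """SSA = scattering / extinction = 1 - absorption / extinction."""
    return 1.0 - b_abs / b_ext

# Invented example: absorption halves between 440 nm and 880 nm, so AAE is about 1.
aae = absorption_angstrom_exponent(10.0, 440.0, 5.0, 880.0)
b_abs_675 = extrapolate_absorption(10.0, 440.0, 675.0, aae)
ssa_675 = single_scattering_albedo(50.0, b_abs_675)
```

An SSA estimated this way inherits the assumption that one exponent holds across the whole spectrum, which is the assumption the conclusions above find to break down for large particles such as coal dust.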

ObjectiveThe quantum yield of photosynthesis is a crucial parameter that reflects the efficiency of utilizing absorbed light quanta in initial photochemical reactions. It plays a pivotal role in assessing the strength of plant photosynthesis and primary productivity, with extensive applications in plant physiology, pathology, and toxicology. Various technologies have been developed for measuring photochemical quantum yield (FV/FM) since the advent of fluorescence kinetics. The pulse amplitude modulation (PAM) technology, proposed by Schreiber et al., involves inducing fluorescence dynamics using saturating light exceeding 10000 μmol/(m²·s), which fully reduces all PSII reaction centers in a short duration. This approach allows chlorophyll fluorescence to peak before measuring photochemical quantum yield with weak modulated light. While widely applied in higher plant research, PAM suffers from a low signal-to-noise ratio due to the low intensity of the measuring light, making it challenging to use in low-chlorophyll environments and unsuitable for phytoplankton monitoring. Kolber et al. introduced the fast repetition rate (FRR) fluorescence measurement technology, utilizing rapid, repeated saturation pulses to block the photosynthetic electron transfer chain and modulate chlorophyll fluorescence. By fitting the fluorescence dynamics curve with an exponential function, FV/FM can be obtained. FRR employs high-frequency sequences (up to 250 kHz) of narrow light pulses (0.3 to 2 μs full width at half maximum) as the excitation light source, providing a high signal-to-noise ratio for measuring FV/FM in phytoplankton. However, it places high demands on the design of the excitation light source driving circuit and the high-speed data acquisition circuit, increasing system design complexity and cost. Building upon FRR, Shi et al. proposed the tunable pulse light induced fluorescence (TPLIF) technology. 
The TPLIF technology changes the fast-repeated pulse light excitation in the single turnover mode to single pulse light excitation, reducing the requirements for signal sampling rate and simplifying system design complexity. Based on this, Wang et al. study an adaptive excitation light intensity method. By regulating the saturation excitation light to block the photosynthetic electron transfer chain based on fluorescence saturation parameters, they accurately obtain photochemical quantum yield for different growth stages of phytoplankton. However, phytoplankton classes vary significantly in their light-harvesting pigment compositions and characteristic absorption bands. Eukaryotic algae cells like green algae, diatoms, dinoflagellates, and coccolithophores mainly concentrate their characteristic absorption of light-harvesting pigments in the blue-green light region, with lower absorption in the longer wavelength range. Cyanobacteria, as large single-celled prokaryotic organisms, concentrate their characteristic absorption in the orange-red light region, with lower absorption in the shorter wavelength range. Therefore, when measuring photochemical quantum yield for different classes of phytoplankton, the TPLIF technology using single-wavelength excitation faces challenges in simultaneously saturating different classes of phytoplankton, leading to large errors in the measurement of photochemical quantum yield.MethodsBased on the light absorption characteristics of different classes of phytoplankton, focusing on Microcystis aeruginosa (belonging to phylum Cyanophyta) and Chlorella pyrenoidosa (belonging to phylum Chlorophyta), we employ a dual-band pulse excitation comprising red and blue light. Saturation conditions are optimized to achieve 99% closure of PSII photosynthetic reaction centers within a single turnover cycle, with adaptive adjustments made to excitation wavelength and intensity. 
Fluorescence kinetics curves are fitted under saturation excitation to accurately measure photochemical quantum yield across phytoplankton classes.Results and DiscussionsFor Microcystis aeruginosa, errors in 10 repeated FV/FM measurements compared to FastOcean sensor results are 26.50%, 1.58%, and 1.12% under blue light, red light, and combined light excitation modes, respectively (Fig. 3). Similarly, for Chlorella pyrenoidosa, errors are 1.12%, 8.99%, and 0.53% under the respective excitation modes (Fig. 4). Measurements of mixed algae with varying volume ratios show errors of 11.95%, 14.02%, and 0.94% under blue light, red light, and combined light excitation modes, respectively, compared to FastOcean sensor results (Fig. 6).ConclusionsTo address the limitations of the single-wavelength TPLIF technology, which fails to simultaneously saturate different classes of phytoplankton and leads to significant errors in measuring photochemical quantum yield, we propose a dual-band TPLIF technology. This method is designed for the accurate measurement of the photochemical quantum yield of photosynthesis based on the light absorption characteristics of various classes of phytoplankton. Our measurements of pure algae demonstrate that the error in measuring photochemical quantum yield using the characteristic absorption of the excitation wavelength is similar to that of the dual-band excitation mode. However, the error is significantly higher when using non-characteristic absorption of the excitation wavelength compared to the dual-band excitation mode. Under blue light, red light, and dual-band excitation modes, the measurement errors of photochemical quantum yield for Microcystis aeruginosa are 26.50%, 1.58%, and 1.12%, respectively, in comparison with FastOcean sensor measurements. For Chlorella pyrenoidosa, the measurement errors are 1.12%, 8.99%, and 0.53%, respectively. 
In addition, measurements of mixed algae show that the error in measuring the photochemical quantum yield using the dual-band excitation mode is significantly lower than that using the single-wavelength excitation mode. Relative errors for measuring the photochemical quantum yield of mixed algae are 11.95%, 14.02%, and 0.94% under blue light, red light, and dual-band excitation modes, respectively, when compared with FastOcean sensor results. The dual-band excitation mode effectively saturates and excites different phyla of phytoplankton simultaneously, leading to high accuracy in measuring photochemical quantum yield. We introduce a dual-band TPLIF technology for the measurement of phytoplankton quantum yield of photosynthesis, enabling precise measurement of the quantum yield of photosynthesis across different classes of phytoplankton. This technology offers a significant advancement for assessing photosynthetic strength and calculating primary productivity.
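The curve-fitting step can be illustrated with a simplified model. The sketch below assumes a single-exponential fluorescence rise, F(t) = Fo + (Fm - Fo)(1 - exp(-t/τ)), with no connectivity between PSII units; this is a common simplification and not necessarily the model fitted in the study. The time-constant grid and the synthetic data are hypothetical.

```python
import math

def fit_saturation_curve(times, fluor, taus):
    """Grid search over tau with a linear least-squares fit of Fo and Fm."""
    best = None
    n = len(times)
    for tau in taus:
        g = [1.0 - math.exp(-t / tau) for t in times]  # normalized rise
        mean_g = sum(g) / n
        mean_f = sum(fluor) / n
        sgg = sum((x - mean_g) ** 2 for x in g)
        sgf = sum((x - mean_g) * (y - mean_f) for x, y in zip(g, fluor))
        fv = sgf / sgg              # variable fluorescence Fm - Fo
        fo = mean_f - fv * mean_g   # minimal fluorescence
        sse = sum((fo + fv * x - y) ** 2 for x, y in zip(g, fluor))
        if best is None or sse < best[0]:
            best = (sse, fo, fo + fv, tau)
    _, fo, fm, tau = best
    return fo, fm, tau

def quantum_yield(fo, fm):
    """Photochemical quantum yield FV/FM = (Fm - Fo) / Fm."""
    return (fm - fo) / fm

def relative_error_percent(measured, reference):
    """Relative error against a reference instrument, in percent."""
    return abs(measured - reference) / reference * 100.0

# Synthetic noise-free curve: Fo = 0.3, Fm = 1.0, tau = 20 (microseconds).
times = [5.0 * i for i in range(40)]
fluor = [0.3 + 0.7 * (1.0 - math.exp(-t / 20.0)) for t in times]
fo, fm, tau = fit_saturation_curve(times, fluor, [5.0 * k for k in range(1, 11)])
fv_fm = quantum_yield(fo, fm)
```

If the excitation does not saturate a given algal class, the measured curve never approaches the true Fm and the fitted FV/FM is biased, which is the failure mode that dual-band excitation is meant to avoid.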

ObjectiveWith the development of infrared and laser technology, the computational demand for high-resolution atmospheric gas absorption spectra is continuously increasing. Instruments featuring ultra-high spectral resolution, exemplified by the Tropospheric Emission Spectrometer (TES), have already been developed internationally. To retrieve valid information from high spectral resolution devices, rapid computation of atmospheric transmittance at higher spectral resolutions is imperative. At the same time, in specialized application fields such as the simulation of high-altitude flying object plumes, reliance on specialized spectral databases like the high-temperature molecular spectroscopic database (HITEMP) becomes essential for high spectral resolution calculations. Although some specialized models addressing these challenges have already been developed abroad, domestic resources for addressing the above issues are still relatively scarce. In atmospheric radiation transmission calculations, the computation of transmittance presents a significant challenge. Currently, the line-by-line integration method offers the highest calculation accuracy, reaching 0.5%, but it is extremely time-consuming. Consequently, calculating absorption coefficients for broader bands is subject to numerous restrictions in practical engineering use. In recent years, graphics processing unit (GPU) parallel computing technology has been widely applied in scientific computation. We design a general high-resolution atmospheric spectral line parallel computing model based on GPU, which increases the computing speed by one to three orders of magnitude. On this basis, combined with the correlated K distribution algorithm, a correlated K distribution coefficient table with a spectral resolution of 1 cm⁻¹ is constructed, achieving a parameterized representation of line-by-line integration calculation results and enhancing the universality of computational products. 
Our work endeavors to present a novel technical approach for high-resolution, rapid radiation transmission calculations under standard atmospheric conditions and high-temperature gases.MethodsWe first design a parallel computation for both the thermodynamic state and spectral line calculations based on the computational characteristics of the line-by-line integration method. Then, through a central processing unit (CPU)+GPU heterogeneous platform, the design processes for both the CPU and GPU sides are optimized by employing parallel computing techniques such as shared memory optimization, atomic operations, loop unrolling, and pre-processing of complex calculations, thereby constructing an efficient parallel computing model. Subsequently, this model is utilized to verify the accuracy of absorption cross-section calculations under atmospheric conditions and radiance calculations under non-uniform paths, demonstrating the computational accuracy of the model. Tests and analyses are also conducted on the parallel computation between spectral lines and under various thermodynamic states, confirming the model’s computational efficiency. Furthermore, based on this model and employing the Malkmus band model parameter fitting method, we construct a correlated K distribution coefficient table with a resolution of 1 cm⁻¹, enabling rapid atmospheric transmittance calculation under non-GPU hardware conditions. Finally, we compare the transmittance calculated using the correlated K distribution coefficient table with that calculated by the line-by-line integration method, verifying the accuracy of the correlated K distribution table.Results and DiscussionsWe design a universal high-resolution atmospheric spectral line parallel computing model based on GPU, according to the computational characteristics of the line-by-line integration method, which achieves an acceleration effect of one to three orders of magnitude (Table 3). 
Without compromising computation accuracy, the method significantly improves the efficiency of atmospheric spectral line calculation, providing a powerful tool for atmospheric radiation transmission calculation. On this basis, combined with the correlated K distribution coefficient table constructed by the Malkmus band model parameter fitting method, it is compared with the calculation results of the line-by-line integration method under the same computing conditions, also demonstrating good computational accuracy (Fig. 9).ConclusionsWe combine line-by-line integration with GPU parallel computing, utilizing techniques such as shared memory optimization, atomic operations, loop unrolling, and the preprocessing of complex computations to construct an efficient parallel computing model. This model facilitates rapid calculations of high-resolution absorption coefficients, atmospheric transmittance, and other typical radiative transfer results in environments ranging from 1 to 5000 K. Subsequently, we use the model to calculate CO2 infrared radiation problems in atmospheric and high-temperature environments and conduct accuracy verification, followed by an in-depth analysis of the model’s parallel acceleration capability in different environments. The research results show that the designed parallel computing model can accurately produce the required computation results and achieve a speed-up ratio of over 800 times when processing large-scale spectral line calculations. Finally, by integrating the parametric method of the Malkmus spectral band model, a new process for quickly generating correlated K distribution coefficient tables is realized. This approach, distinct from previous research, extends the parallel computing achievements to devices without GPU or with limited memory. 
This technological approach not only expands the application field of existing technologies but also provides a new and efficient solution for research and practical applications in related fields.
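The structure of a line-by-line calculation, and the reason it parallelizes well, can be seen in a few lines. The sketch below is a serial CPU illustration only: it uses a Lorentz line shape for brevity (a production code would typically use a Voigt profile with temperature- and pressure-dependent line parameters), and the line list and units are hypothetical.

```python
import math

def lorentz(nu, nu0, gamma):
    """Area-normalized Lorentz line shape with half-width gamma."""
    return (gamma / math.pi) / ((nu - nu0) ** 2 + gamma ** 2)

def absorption_coefficient(nu, lines):
    """Sum the contribution of every line (center, strength, half-width) at nu."""
    return sum(strength * lorentz(nu, nu0, gamma)
               for nu0, strength, gamma in lines)

def transmittance_spectrum(grid, lines, path_amount):
    """Beer-Lambert transmittance at each grid point.

    Every grid point is independent of the others, which is the property a
    GPU implementation can exploit by assigning points (or lines) to threads.
    """
    return [math.exp(-absorption_coefficient(nu, lines) * path_amount)
            for nu in grid]

def band_mean(values):
    """Band-averaged transmittance over the spectral grid."""
    return sum(values) / len(values)

# Hypothetical single line near 2350 cm^-1, in arbitrary consistent units.
lines = [(2350.0, 1.0, 0.1)]
grid = [2349.5 + 0.01 * i for i in range(101)]
spectrum = transmittance_spectrum(grid, lines, path_amount=1.0)
mean_t = band_mean(spectrum)
```

The cost grows with the product of grid points and spectral lines, which is why broad bands at high resolution become expensive and why a precomputed correlated K coefficient table is attractive on hardware without a GPU.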

Objective Since the onset of industrialization, continued growth in industrial activity, urbanization, and energy consumption has sharply increased greenhouse gas emissions, causing a sustained rise in global temperatures. Atmospheric CO2 is a crucial factor in global warming and, as a major anthropogenic greenhouse gas, has drawn continuous attention from the international community. Current high-precision CO2 observations rely primarily on ground-based measurements and satellite remote sensing. Ground-based observations offer high accuracy and strong reliability, but they are essentially single-point measurements that are sparsely distributed and cannot provide global coverage. Therefore, satellite remote sensing has become the main method for high-precision CO2 monitoring on a global scale. However, as satellite remote sensing has developed from discrete to imaging observation techniques, the remote sensing data volume has increased substantially, and existing retrieval algorithms struggle to meet computational time requirements. In our study, we propose a fast retrieval method for atmospheric CO2 that replaces the time-consuming components of the original algorithm with suitable look-up tables.
Methods We focus on observational data from the greenhouse gas monitoring instrument (GMI) aboard China’s Gaofen-5 (GF-5) satellite and present a fast retrieval algorithm for atmospheric CO2. First, based on the spectral characteristics of GMI, a line-by-line integration method is employed to construct a gas absorption cross-section look-up table suitable for GMI data, thereby expediting the calculation of gas absorption optical thickness. 
Second, using data from the Modern-Era Retrospective analysis for Research and Applications, Version 2 (MERRA-2), aerosol optical thickness profiles are fitted with Gaussian line shapes to establish a look-up table of Gaussian parameters, which facilitates the computation of aerosol optical parameters. Finally, combining atmospheric environmental parameters and satellite data, the atmospheric XCO2 is obtained with a radiative transfer calculation model and a physical retrieval algorithm, achieving fast retrieval of atmospheric CO2.
Results and Discussions We validate retrieval accuracy and computational efficiency using Total Carbon Column Observing Network (TCCON) site data and GMI observational data. Regarding computational efficiency, the processing times of the original GMI retrieval algorithm and the proposed improved algorithm are compared. For a single forward model calculation, the improved algorithm reduces the calculation time by over 85% compared to the original GMI algorithm, an approximately 21.5-fold improvement in calculation time. In terms of total computation time, the proposed algorithm runs on a time scale of minutes, far below the original algorithm’s computation time of over 1.5 h, which represents a substantial improvement in computational efficiency (Table 4). Regarding retrieval accuracy, the retrieval results of the proposed algorithm are compared with those of the original GMI algorithm. The difference in CO2 column concentration between the two algorithms remains within 2×10⁻⁶ [Fig. 4(a)]. The average absolute error of XCO2 between the two algorithms is 0.75×10⁻⁶, and the consistency of the results reaches 85.5% [Fig. 4(b)]. 
This indicates that the proposed algorithm introduces minimal additional error into the GMI retrieval results. A comparison of the retrieval results of the original GMI algorithm, the improved algorithm, and TCCON site observations shows that the concentration discrepancies between the proposed algorithm and TCCON mostly stay within 4×10⁻⁶. The average absolute error is 3.01×10⁻⁶, and the retrieval error is less than 1% (Fig. 5). Furthermore, the retrieval results of both algorithms are generally consistent, meeting the precision requirements for CO2 retrieval.
Conclusions To address the inefficiency of atmospheric CO2 retrieval, we propose a fast retrieval method that uses look-up tables for acceleration, based on the practical requirements of GMI retrieval calculations. By constructing look-up tables for gas absorption cross-sections, the method achieves fast calculation of layer-wise atmospheric gas absorption optical thickness. Combined with molecular scattering calculations and fitting calculations for aerosol optical thickness based on aerosol parameter look-up tables, it reduces the computational time of the time-consuming molecular absorption calculations in radiative transfer. The average absolute error between the retrieval results of the original GMI retrieval algorithm and the improved algorithm is 0.75×10⁻⁶, with high consistency. Compared with TCCON site observations, the average absolute error of the retrieval results is 3.01×10⁻⁶, meeting the 1% precision requirement for retrieval accuracy. In terms of computation time, the improved retrieval algorithm significantly reduces the computation time while ensuring retrieval accuracy. The retrieval computation time can be reduced by over 80%, shifting the computational performance from the hourly level to the minute level. 
Retrieval experiments and result verification show that the proposed fast atmospheric CO2 retrieval algorithm substantially enhances the retrieval calculation speed while maintaining retrieval accuracy. In the future, this algorithm can be applied to multi-year GMI data at a global scale and to other satellite observational data.
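The look-up-table idea described in the Methods above can be sketched as follows. This is a minimal illustration under stated assumptions, not the GMI algorithm itself: the table is indexed only by temperature and pressure, interpolation is bilinear, and `toy_cross_section` is a hypothetical stand-in for the expensive line-by-line calculation. A real table would presumably also carry a wavenumber axis.

```python
import bisect

def build_table(temps, pressures, expensive_fn):
    """Precompute expensive_fn once on a (temperature, pressure) grid."""
    return [[expensive_fn(t, p) for p in pressures] for t in temps]

def _bracket(axis, x):
    """Return index i and weight w so that x lies between axis[i] and axis[i+1]."""
    i = min(max(bisect.bisect_right(axis, x) - 1, 0), len(axis) - 2)
    w = (x - axis[i]) / (axis[i + 1] - axis[i])
    return i, min(max(w, 0.0), 1.0)  # clamp: no extrapolation beyond the grid

def lookup(table, temps, pressures, t, p):
    """Bilinear interpolation in the precomputed table."""
    i, wt = _bracket(temps, t)
    j, wp = _bracket(pressures, p)
    low = table[i][j] * (1 - wp) + table[i][j + 1] * wp
    high = table[i + 1][j] * (1 - wp) + table[i + 1][j + 1] * wp
    return low * (1 - wt) + high * wt

def toy_cross_section(t, p):
    # Hypothetical stand-in (assumption) for a line-by-line cross-section.
    return 1e-22 * (1.0 + 0.002 * (t - 250.0)) * (1.0 + 0.0005 * p)

temps = [200.0, 225.0, 250.0, 275.0, 300.0]       # K
pressures = [100.0, 300.0, 500.0, 700.0, 1000.0]  # hPa
table = build_table(temps, pressures, toy_cross_section)
sigma = lookup(table, temps, pressures, 262.0, 640.0)
```

The expensive function runs only while the table is built; each retrieval step then costs two binary searches and a few multiplications. The accuracy of such a scheme depends on how finely the grid samples the true cross-section.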

Objective In recent years, the growing demand for ocean exploration and exploitation has led to an increasing need for high-rate, high-capacity, and low-latency underwater communications. Orbital angular momentum (OAM), as a new multiplexing dimension, provides additional degrees of freedom that are structurally independent of amplitude, polarization, phase, and subcarriers. It is expected to substantially improve spectral efficiency and communication capacity, which has made it a recent research hotspot in underwater wireless optical (UWO) communications. However, when an OAM beam propagates in a random oceanic turbulence channel, the seawater medium both absorbs and scatters the transmitted beam. Additionally, seawater turbulence, driven by salinity and temperature fluctuations, distorts the phase profile of the helical wavefront, which greatly degrades the performance of the UWO-OAM communication system. In practical applications, the beam must carry information from the deep ocean to the shallow ocean over vertical or slant optical links whose seawater parameters vary with water depth. Furthermore, there has been no research on the performance of UWO-OAM communication systems based on real ocean data. Therefore, it is of great significance to construct a more general model of the slant optical link in the ocean.
Methods Based on the power spectrum inversion method, depth-dependent random phase screens of ocean turbulence are generated and compensated. The propagation channel model for vortex beams in an oceanic turbulent slant optical link is established using the multi-phase screen approach. 
Numerical simulations are conducted to analyze how the scintillation index and detection probability of Laguerre-Gaussian (LG) vortex beams transmitted through slant oceanic turbulence channels vary with transmission distance, seawater turbulence parameters, average temperature and salinity, and link tilt angle. Finally, the performance of the OAM modulation communication system over turbulent slant channels is assessed numerically using real data from Argo, a global real-time ocean observing network. The results underscore the effectiveness of the proposed channel model for underwater vortex beam turbulence slant optical links.
Results and Discussions We present two-dimensional and three-dimensional plots of random phase screens of ocean turbulence at different seawater depths, and illustrate how the intensity and phase of LG beams vary across transmission distances and modes. Additionally, through numerical simulations, we analyze the scintillation index and the detection probability of LG beams as functions of transmission distance, seawater turbulence, average temperature, salinity, and the tilt angle of the link. Using data from the Argo network, we investigate how the depth of the transmitter and the slant angle of the link influence the bit error rate (BER) of the UWO-OAM communication system at specific nodes.
Conclusions We propose a channel model for turbulent slant link communication using underwater vortex beams, which relates the distribution of seawater temperature and salinity across different depths to the optical turbulence in the ocean. Based on this underwater slant optical channel model, the transmission of LG beams is simulated in a generalized ocean turbulence environment. 
The results indicate that the scintillation index of the LG beam increases and the detection probability decreases with an increase in the dissipation rate of the mean-squared temperature, a decrease in the dissipation rate of kinetic energy per unit mass of fluid, an increase in seawater temperature or salinity, or an increase in transmission distance. Real data from Argo, a global real-time ocean observing network, are used to numerically simulate the effects of transmitter depth and link slant angle on the BER of the UWO-OAM communication system at specified nodes. This research holds substantial practical significance for understanding and optimizing the performance of actual underwater communication systems.
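For readers unfamiliar with the scintillation index discussed above, it is the normalised variance of the received intensity, ⟨I²⟩/⟨I⟩² − 1. The sketch below estimates it from intensity samples. The samples here are drawn from a log-normal distribution, which is an assumption made only to have test data with a known answer; it is not the paper's phase-screen simulation, and the parameter values are hypothetical.

```python
import math
import random
import statistics

def scintillation_index(intensities):
    """Normalised intensity variance: <I^2> / <I>^2 - 1."""
    mean_i = statistics.fmean(intensities)
    mean_i2 = statistics.fmean([i * i for i in intensities])
    return mean_i2 / mean_i ** 2 - 1.0

# Assumed log-normal intensity fluctuations with log-standard-deviation 0.3.
# For this model the exact index is exp(sigma^2) - 1.
random.seed(0)
sigma_ln = 0.3
samples = [random.lognormvariate(0.0, sigma_ln) for _ in range(100000)]
si = scintillation_index(samples)
expected = math.exp(sigma_ln ** 2) - 1.0
```

A perfectly steady beam gives an index of zero, and stronger intensity fluctuations give larger values, which is why a rising index signals a degrading link.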