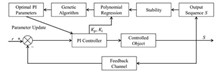

Background: The Hefei Advanced Light Facility (HALF) is a fourth-generation synchrotron radiation source based on a diffraction-limited storage ring. Its electron beam energy is 2.2 GeV, with an emittance target of less than 100 pm·rad, which requires the magnet steady-state power supplies to hold stability within 5×10⁻⁵ and to limit overshoot in the step response when the current operating point changes. Because HALF needs over a thousand magnet steady-state power supplies, empirical tuning of conventional proportional-integral-derivative (PID) controller parameters is very time-consuming.
Purpose: This study aims to design a PID controller parameter auto-tuning algorithm for the magnet steady-state power supply of HALF, to achieve optimal PI parameters.
Methods: Based on polynomial regression and genetic algorithms, an auto-tuning algorithm for PI controller parameters was developed for the magnet steady-state power supply controller. Then, a BUCK circuit structure was adopted for the magnet steady-state power supply, and a prototype was verified by simulation and physical experiments. Finally, the algorithm was integrated with the supervisory computer system to record various data points. The algorithm was developed and tested on the magnet steady-state power supply to verify the stability of the output current.
Results: The step-response and output-current stability tests show that the step response achieves a smooth transition and that the rise is several times faster. The output current stability is within 1×10⁻⁵. The key technical indicators meet the operation requirements, and debugging efficiency is significantly improved.
Conclusions: The algorithm proposed in this paper provides an effective method for improving the debugging efficiency of large-scale power supply installations.
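The tuning idea can be illustrated with a minimal sketch. It is not the authors' algorithm: it uses a plain genetic algorithm only (no polynomial regression step), and the first-order R-L load model, its values, the gain bounds, and the cost function (ITAE plus an overshoot penalty) are all assumptions chosen for illustration.

```python
import random

R, L = 1.0, 0.1                 # assumed load resistance (ohm) and inductance (H)
DT, T_END = 1e-3, 1.0           # Euler step and simulated horizon (s)
KP_MAX, KI_MAX = 20.0, 200.0    # assumed search bounds for the PI gains


def step_cost(kp, ki):
    """ITAE of a unit current step plus a penalty on overshoot (assumed cost)."""
    current = integ = itae = peak = 0.0
    for n in range(int(T_END / DT)):
        err = 1.0 - current
        integ += err * DT
        u = kp * err + ki * integ              # PI control law
        current += DT * (u - R * current) / L  # L di/dt = u - R i
        itae += (n * DT) * abs(err) * DT
        peak = max(peak, current)
    return itae + 10.0 * max(0.0, peak - 1.0)


def tune(pop_size=20, generations=15, seed=0):
    """Small genetic algorithm: elitism, tournament selection, blend crossover, Gaussian mutation."""
    rng = random.Random(seed)
    pop = [(rng.uniform(0, KP_MAX), rng.uniform(0, KI_MAX)) for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=lambda g: step_cost(*g))  # best individual first
        nxt = pop[:2]                          # keep the two best unchanged
        while len(nxt) < pop_size:
            # Tournament on the sorted list: the lowest index is the fittest.
            a = pop[min(rng.sample(range(pop_size), 3))]
            b = pop[min(rng.sample(range(pop_size), 3))]
            w = rng.random()
            kp = w * a[0] + (1 - w) * b[0] + rng.gauss(0, 0.5)
            ki = w * a[1] + (1 - w) * b[1] + rng.gauss(0, 5.0)
            nxt.append((min(max(kp, 0.0), KP_MAX), min(max(ki, 0.0), KI_MAX)))
        pop = nxt
    best = min(pop, key=lambda g: step_cost(*g))
    return best, step_cost(*best)


best_gains, best_cost = tune()
```

Calling `tune()` returns a `(kp, ki)` pair and its cost. In a real setting the cost would presumably be evaluated against a measured or identified supply model rather than this toy plant.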

Background: The β-ray surface density measurement instrument is widely used for online measurement of the surface density of electrode sheets in the coating process of lithium batteries. A significant measurement error was observed when the source-to-film distance (SFD) varied under a fixed source-to-detector distance (SDD).
Purpose: This study aims to investigate and minimize the impact of SFD variations on the accuracy of β-ray surface density measurements.
Methods: Firstly, experimental tests were conducted using the β-ray surface density measurement device with a fixed SDD to quantify the influence of SFD changes (4 to 8 mm) on copper film surface density measurements. Then, Geant4 Monte Carlo simulations were performed to model β-ray scattering effects under three collimator configurations: detector-side single collimator, source-side single collimator, and dual collimators. Finally, the optimized source-side single collimator geometry was experimentally validated by reproducing the SFD variations and recalculating the measurement deviations.
Results: Initial experimental results reveal that a 1 mm SFD variation causes a maximum measurement deviation of 13.3% in surface density values. Monte Carlo simulation results demonstrate that the source-side single collimator configuration minimizes scattering effects, showing the smallest mean deviation among the three geometries tested. Experimental validation confirms this improvement, with the maximum deviation decreasing from 13.3% to 3.5% after device optimization.
Conclusions: Adopting a source-side single collimator reduces the measurement error caused by SFD variations by 73.7%, providing a practical solution for enhancing online surface density measurement accuracy in lithium battery production.
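The 73.7% figure follows from the two maximum deviations quoted in the Results, read as a relative reduction:

$$
\frac{13.3\% - 3.5\%}{13.3\%} = \frac{9.8}{13.3} \approx 0.737 = 73.7\%
$$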

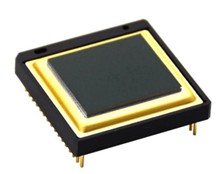

Background: Vanadium oxide (VOx) detectors play a crucial role in space infrared detection and imaging because of their sensitivity to infrared radiation, particularly in the long-wave infrared (LWIR) range, as well as their low noise, uncooled operation, and cost-effectiveness. However, performance degradation caused by space ionizing radiation, particularly the decrease in responsivity and the increase in noise levels, poses a significant threat to the imaging quality and reliability of these detectors.
Purpose: This study aims to bridge the current gap in research on space radiation effects on VOx detectors and to provide input for their radiation hardening.
Methods: Ground-based radiation simulation experiments were conducted to analyze the radiation damage to VOx detectors. Both online and offline testing methods were employed to systematically investigate the changes in detector output under varying radiation doses. Additionally, post-irradiation annealing tests were performed to examine the specific patterns of performance degradation in VOx detectors under space radiation environments.
Results: The results indicate that space radiation significantly affects the output performance of VOx detectors. When the accumulated dose reaches 31 krad(Si), the non-uniformity of the detector's blackbody temperature response and the number of dead pixels surge. At 39 krad(Si), the non-uniformity of the blackbody response escalates to 88%, the dead pixel rate climbs to 66%, and the output image becomes aberrant. Within 24 h after the irradiation test, the annealing effect of the VOx infrared array detector is evident. The average grayscale value of the blackbody response output closely matches the pre-irradiation results after 72 h of annealing, and the number of dead pixels tends towards 0 after one week of annealing.
Conclusions: Space radiation significantly impacts the output of VOx detectors, with the detector's output performance declining as the radiation dose accumulates, reaching a point where the device cannot recognize objects in images at 24 krad(Si). The total ionizing dose effect at lower accumulated dose values does not constitute permanent damage, as the detector's performance can be restored through room-temperature annealing. This study provides valuable insights for the subsequent design of radiation-hardened VOx detectors for space applications.

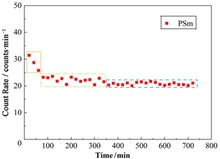

Background: Plastic scintillation microspheres (PSm) are a novel luminescent material with significant potential in the measurement and continuous monitoring of radionuclide activity.
Purpose: This study aims to optimize the application of PSm in radionuclide activity analysis by exploring the impact of different measurement conditions on the background count rate and counting efficiency of PSm.
Methods: Firstly, PSm samples were prepared by suspension polymerization, yielding spherical particles mainly between 95 and 200 μm in size, and a Hidex 300SL liquid scintillation counter was employed to measure the chemiluminescence and photoluminescence of PSm. Then, the effects of PSm dosage and the type of liquid scintillation vial on the background count rate of PSm were investigated, and the counting efficiency of PSm under different conditions was determined using ¹⁴C as a reference radionuclide. Finally, a 20 mL plastic liquid scintillation vial with the lowest detection limit was selected for the activity analysis of ¹⁴C solution.
Results: Measurement results show that weak interference signals from chemiluminescence and photoluminescence are observed when PSm is mixed with water. This interference can be mitigated by keeping the samples in a light-protected environment. The background count rate is positively correlated with the volume of PSm, and the counting efficiency is positively correlated with the height of PSm. Differences between plastic and glass liquid scintillation vials of comparable specifications are minimal. The 20 mL vial has the lowest minimum detectable activity (MDA). Analysis of ¹⁴C solutions in the range of 574.5 Bq·L⁻¹ to 3 825.6 Bq·L⁻¹ shows good agreement between measured and expected values.
Conclusions: The study demonstrates that the background count rate and counting efficiency of PSm are influenced by various factors such as sample volume. Under fixed conditions, PSm measurements exhibit good accuracy. PSm shows promising potential in radionuclide analysis.

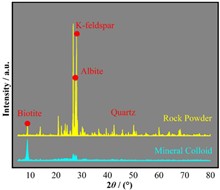

Background: The surrounding rock of a repository is the last barrier against the migration of radionuclides, and there is a potential risk of co-migration of mineral colloid and nuclides in fissures.
Purpose: This study aims to investigate the main components of the surrounding rock mineral (SRM) colloid and its adsorption mechanisms for the radionuclide Cs⁺, as well as to assess the co-migration risk of mineral colloid and Cs⁺.
Methods: Firstly, SRM colloid was prepared from rock samples collected from a cavern disposal repository, and its chemical composition and microscopic structure were characterized using X-ray fluorescence spectroscopy (XRF), X-ray diffraction (XRD), Fourier transform infrared spectroscopy (FT-IR), and scanning electron microscopy (SEM). Then, batch adsorption experiments were conducted to investigate the effects of contact time, temperature, initial Cs⁺ concentration, pH value, and coexisting ions on Cs⁺ adsorption by SRM colloid. Finally, adsorption kinetics, thermodynamics, and isotherm models were used to analyze the adsorption mechanism.
Results: The characterization results show that SRM colloids are mainly composed of silicate minerals including biotite, K-feldspar, and albite. The adsorption experiments reveal that the saturated adsorption capacity of SRM colloid for Cs⁺ is 47.13 mg·g⁻¹, and the adsorption process follows pseudo-second-order kinetics (R²=0.999). Under acidic conditions (pH<6) and in the presence of high concentrations of K⁺ and Ca²⁺, colloid stability decreases and Cs⁺ adsorption is significantly inhibited. Conversely, in alkaline environments (pH>6), colloid stability improves and Cs⁺ adsorption increases to a maximum of 50.7 mg·g⁻¹ at a pH value of 8. Interestingly, Mg²⁺ shows a promoting effect on Cs⁺ adsorption as its concentration increases, with adsorption capacity reaching 53.1 mg·g⁻¹ at 1 mmol·L⁻¹ Mg²⁺.
Conclusions: The adsorption of Cs⁺ by SRM colloid is a monolayer, irreversible chemical process that fits well with the Langmuir isotherm model (R²=0.942). Mg²⁺ promotes Cs⁺ adsorption by altering the microstructure of SRM colloid and increasing the interlayer spacing. Experimental results demonstrate that SRM colloid can facilitate Cs⁺ co-migration, but this risk can be mitigated by modifying the pH of backfill materials to acidic conditions or by using calcium-based materials instead of magnesium-based materials during repository construction.
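For reference, the two models named above are commonly written in the following standard textbook forms. The abstract does not state which variants were fitted, so the symbols here are assumptions: $q_t$ and $q_e$ are the adsorbed amounts at time $t$ and at equilibrium, $k_2$ is the pseudo-second-order rate constant, $q_m$ is the monolayer capacity, $K_L$ is the Langmuir constant, and $C_e$ is the equilibrium concentration.

$$
\frac{t}{q_t} = \frac{1}{k_2 q_e^{2}} + \frac{t}{q_e},
\qquad
q_e = \frac{q_m K_L C_e}{1 + K_L C_e}
$$

In the linearized kinetic form, a plot of $t/q_t$ against $t$ is a straight line with slope $1/q_e$, which is how an $R^2$ close to 1 is typically read as support for pseudo-second-order behavior.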

Background: Quick and accurate determination of the effective ²⁴⁰Pu mass in special nuclear materials (SNM) is of great significance for preventing nuclear proliferation, yet traditional thermal/epithermal neutron multiplicity counting systems based on ³He tubes have relatively low measurement accuracy and high development costs. The fast neutron multiplicity counting (FNMC) technique based on liquid scintillation detectors has become a research hotspot. However, the FNMC systems built so far are still in the experimental research stage.
Purpose: This study aims to develop a passive FNMC system that can be used for practical measurement.
Methods: Firstly, a passive FNMC system was built using 32 self-developed EJ-309 liquid scintillation detectors. To improve the uniformity of the system's neutron detection efficiency, these detectors were arranged in a spherically symmetrical geometry. A self-designed and developed 32-channel high-voltage power supply and user-friendly application software were integrated into the system. Then, the Doubles parameter fitting method and the three-parameter fast neutron multiplicity analytical method were each employed to evaluate the spontaneous fission rates of the ²⁵²Cf neutron sources. Finally, the performance of the system under different neutron radiation backgrounds (with Singles rates of 111.42±0.46, 73.97±0.28, and 47.27±0.29, respectively) was also investigated.
Results: The experimental results show that the Doubles parameter fitting method can accurately evaluate the spontaneous fission rates of ²⁵²Cf neutron sources. The relative differences of the spontaneous fission rates of the ²⁵²Cf neutron sources evaluated using the fast neutron multiplicity analytical method are within ±4%. The measurement results under different neutron radiation backgrounds are basically consistent with those under the natural environment background (Singles rate of 0.51±0.02).
Conclusions: The FNMC system developed in this study can accurately determine the spontaneous fission rate of the ²⁵²Cf neutron source (with an equivalent ²⁴⁰Pu_eff mass range of 5.37 to 209.62 g) and has very good anti-background interference capabilities.

Background: Continuous SiC fiber-reinforced SiC-matrix composites (SiCf/SiC) can be used for fuel cladding, control rod sheaths, intermediate heat exchangers, and tube components in nuclear reactors, so the reliability of their joints is crucial to the safety of nuclear energy.
Purpose: This study aims to explore the microstructure and mechanical properties of SiCf/SiC brazed joints and to optimize the brazing process for joining SiCf/SiC to SiCf/SiC.
Methods: SiCf/SiC was first prepared by the chemical vapor infiltration (CVI) method, and active brazing was used to join SiCf/SiC at 850 °C, 870 °C, and 890 °C with Ag-26.77Cu-4.4Ti (wt.%) filler metal. Then, to achieve high joining performance, the microstructure and interfacial phases of the joints brazed at different temperatures were analyzed using optical microscopy (OM), scanning electron microscopy (SEM), and energy dispersive spectrometry (EDS). The mechanical properties of the brazed joints were analyzed using a thermal simulation test machine.
Results & Conclusions: Observation results indicate that the AgCuTi filler achieves stable joining of SiCf/SiC, and that smoothing the SiCf/SiC surface helps improve the shear strength of the joint. The reaction between Ti, Si, and C becomes more intense as the brazing temperature increases. When the brazing temperature reaches 890 °C, the brittle phase Ti₅Si₃ gradually diffuses and disperses into the brazing seam, and the reinforcing phase TiC becomes the main component of the reaction layer, which effectively improves the microstructure and significantly increases the strength of the SiCf/SiC brazed joint.

Background: Analog-based instrumentation and control systems in nuclear power plants (NPP) are being progressively supplanted by comprehensive digital technologies, enabling the deployment of sophisticated and efficient advanced control methodologies. Although there are studies on improving the control performance of pressurized water reactor (PWR) NPP control systems with advanced control algorithms, most of them focus only on a single control system without considering the interconnection and coupling among multiple control systems.
Purpose: This study aims to propose a setpoint decision optimization system that coordinates multiple control systems from the top level to optimize overall control performance and achieve better task execution results.
Methods: The intelligent decision system for the PWR control system was optimized based on the particle swarm optimization (PSO) method. Both the decision objective function and the operation constraint conditions of the intelligent decision system were proposed. Considering the actual operation of a PWR, the setpoint was optimized offline and the intelligent decision operation was performed online according to the operating condition to provide the directions and amplitudes of the control targets for the underlying control systems. Subsequently, a typical operation process of the PWR NPP was taken as an example to simulate the designed PSO-based intelligent decision-making system, and the simulation results were compared with those of the traditional setpoint decision method in terms of the integral of time multiplied by squared error (ITSE).
Results: Compared with the control scheme using traditional setpoints, the ITSE values of average coolant temperature in the primary loop, pressurizer fluid level, pressurizer pressure, and steam generator fluid level obtained with the optimized setpoints are decreased by 58.9%, 67.7%, 99.9%, and 83.3%, respectively. The peak values are decreased by 62.4%, 3.0%, 100%, and 66.3%, respectively.
Conclusions: The simulation results show that the system proposed in this study effectively reduces the ITSE and peak value of the system. The overall control performance and safety margin of the control systems of the PWR NPP are improved.
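The ITSE comparison can be sketched as follows. ITSE is the integral of $t\,e(t)^2$ over the transient. The two exponential error traces below are made-up stand-ins for the traditional and optimized setpoint responses, chosen only because their ITSE values are known analytically (about 1/4 and 1/16).

```python
import math


def itse(times, errors):
    """Trapezoidal estimate of the integral of t * e(t)^2 over the sampled span."""
    total = 0.0
    for k in range(1, len(times)):
        f_prev = times[k - 1] * errors[k - 1] ** 2
        f_curr = times[k] * errors[k] ** 2
        total += 0.5 * (f_prev + f_curr) * (times[k] - times[k - 1])
    return total


def percent_reduction(baseline, optimized):
    """Relative decrease of the optimized metric with respect to the baseline, in percent."""
    return 100.0 * (baseline - optimized) / baseline


dt = 0.001
times = [k * dt for k in range(20001)]       # 0 to 20 s
slow = [math.exp(-t) for t in times]         # hypothetical error trace, traditional setpoints
fast = [math.exp(-2.0 * t) for t in times]   # hypothetical error trace, optimized setpoints

itse_slow = itse(times, slow)                # close to the analytic value 1/4
itse_fast = itse(times, fast)                # close to the analytic value 1/16
reduction = percent_reduction(itse_slow, itse_fast)
```

Because of the factor $t$, ITSE weights late errors more heavily than early ones, so it rewards responses that settle quickly.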

Background: As requirements on engine thrust, specific impulse, and inherent load in modern aerospace systems continue to rise, nuclear thermal propulsion (NTP) technology has considerable development potential. Air-breathing nuclear thermal propulsion engines do not need to carry oxidants and chemical fuels. They draw air directly from the atmosphere and heat it to generate thrust, which further reduces the inherent load of the engine.
Purpose: This study aims to explore the response of air-breathing NTP reactors to flow-blockage accidents, and to study the influence of blockage factors on the stability of reactor operation under different blockage ratios and positions in order to obtain pre-judgment parameters.
Methods: First, a cross-dimensional neutronics, thermal-hydraulics, and mechanics multi-physics coupling model was established with zero-dimensional point reactor kinetics equations, a one-dimensional fluid model, and a three-dimensional solid thermo-mechanical model. Then, multiple verification and validation (V&V) tests were conducted to verify that the code correctly and efficiently calculates the transient response of the system, including multiple feedback mechanisms, with a deviation of less than 5%. Finally, the response to reactor flow-blockage accidents of different degrees and positions, with a blockage area ratio of 0 to 100% under rated operating conditions, was investigated.
Results: The calculation results indicate that as the proportion of blocked area increases, the limiting factor for core safety and stability shifts from temperature to blockage thrust loss, and the maximum blockage factor allowed for the engine to achieve self-stabilization generally decreases to 0.74. In large-scale blockage accidents, temperature and stress are maintained within safe levels. When the blockage factor exceeds the limit, the low total pressure behind the reactor rapidly decreases the thrust and forms positive feedback, causing engine instability. As the blockage position moves downstream, the reactor is more likely to enter an unstable state under the same blockage factor. When the initial total pressure behind the reactor after blockage is less than 1.53 MPa, the current blockage factor of the reactor differs from the critical instability value by at most 15.3%. Based on a large amount of data, a total pressure of 1.53 MPa behind the reactor can therefore be taken as a pre-judgment condition for approaching instability. The value may vary under different reactor conditions, but the parameter has system conservation and consistency. Taken together, the allowable temperature limit and the initial total pressure behind the reactor can provide early warning of uncontrolled flow conditions.
Conclusions: The simulation results of this study provide guidance for early warning of, and intervention in, flow-blockage accidents during operation of air-breathing nuclear thermal propulsion engines.

Background: Hydrides are a common defect formed by the reaction of zirconium with the primary coolant water during normal operation of zirconium alloy cladding tubes in nuclear power plants.
Purpose: This study aims to investigate the effect of hydrides on the mechanical properties of zirconium alloy.
Methods: The molecular dynamics method and the third-generation charge-optimized many-body (COMB3) potential function were used. Firstly, the molecular dynamics software large-scale atomic/molecular massively parallel simulator (LAMMPS) was used to construct zirconium-based models containing different hydrides. Relaxation was performed at 300 K for 50 ps. Then, uniaxial tension was applied along the [0001] direction at a strain rate of 10¹⁰ s⁻¹ for 30 ps, giving a total strain of 30%.
Results: The results show that the yield strength, yield strain, and Young's modulus of the alloy decrease with increasing hydride density in the range of 0 to 1 078 μg·g⁻¹. When the hydride density is between 1 078 μg·g⁻¹ and 2 311 μg·g⁻¹, the yield strength, yield strain, and Young's modulus increase with increasing hydride density. At a hydride density of 1 078 μg·g⁻¹, the yield stress of the model drops to its lowest value of 7.69 GPa, which is 42.22% lower than that of the pure Zr model. The yield strain decreases to its lowest value of 0.089 5, which is 39.34% lower than that of the pure Zr model. Young's modulus drops to its lowest value of 112.18 GPa, which is 8.94% lower than that of the pure Zr model.
Conclusions: When the hydride density is in the range of 0 to 1 078 μg·g⁻¹, in the elastic stage the increase of hydride density enlarges the stress concentration area, which is conducive to dislocation nucleation. In the plastic deformation stage, as hydride density increases, the initial dislocations tend to expand around the hydrides. When the hydride density is in the range of 1 078 μg·g⁻¹ to 2 311 μg·g⁻¹, a large number of dislocations are generated due to the high hydride density, resulting in dislocation pile-up.
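The loading figures in the Methods are mutually consistent. Taking the stated strain rate as $10^{10}\ \mathrm{s^{-1}}$ and assuming it is held constant over the 30 ps of stretching, the accumulated strain is:

$$
\varepsilon = \dot{\varepsilon}\, t = 10^{10}\ \mathrm{s^{-1}} \times 30 \times 10^{-12}\ \mathrm{s} = 0.30 = 30\%
$$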

Background: In accelerator operations, ensuring stable performance is critical for supporting scientific research, particularly for complex systems such as the China Spallation Neutron Source (CSNS). Traditional threshold-based alarm mechanisms often struggle to detect certain intricate anomalies, especially those with complex or transient patterns, leading to gaps in monitoring and increased challenges for operators during fault diagnosis. These undetected anomalies can significantly lower operational efficiency and delay fault resolution.
Purpose: This study aims to develop an intelligent monitoring system for CSNS accelerators to detect complex anomalies and enhance fault detection reliability.
Methods: A machine learning-based framework was proposed to improve anomaly detection in accelerator operations. The method employed unsupervised algorithms to analyze operational data, with a focus on jitter-type anomalies that are challenging for traditional alarms to capture. Cooling water temperature variables were selected as the research objects. The workflow involved data preprocessing, feature extraction, and the application of unsupervised learning models to detect deviations from normal operational patterns. To validate the method, a prototype system for intelligent accelerator monitoring was developed, incorporating real-time data analysis and anomaly detection capabilities.
Results: The proposed method successfully detected jitter-type anomalies in various operational datasets, such as cooling water temperatures and power supply parameters, demonstrating its generalizability across different subsystems. Additionally, the prototype system was deployed and validated in the CSNS operational environment, where it effectively identified anomalies.
Conclusions: This machine learning-based anomaly detection approach improves the accuracy and reliability of monitoring in accelerator operations. By addressing the limitations of traditional methods, it provides a more effective and scalable solution for real-time anomaly detection. The prototype system demonstrates the feasibility of implementing intelligent monitoring for complex accelerator systems, contributing to the stability and efficiency of their operation.
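To illustrate why jitter can slip past a fixed threshold alarm yet stand out in a simple unsupervised feature, the sketch below flags windows whose rolling standard deviation is far above the typical value. This is a stand-in technique, not the models used in the study, and the trace, window length, and factor `k` are all invented for the example.

```python
import math
import statistics


def flag_jitter(values, window=10, k=5.0):
    """Return indices of window-ending samples whose rolling std exceeds k times the median rolling std."""
    ends = list(range(window - 1, len(values)))
    stds = [statistics.pstdev(values[j - window + 1:j + 1]) for j in ends]
    threshold = k * statistics.median(stds)
    return [j for j, s in zip(ends, stds) if s > threshold]


# Synthetic cooling-water temperature trace (degC): slow ripple plus a jitter burst at samples 60..79.
trace = [25.0 + 0.01 * math.sin(0.3 * i) for i in range(200)]
for i in range(60, 80):
    trace[i] += 0.5 if i % 2 == 0 else -0.5

flagged = flag_jitter(trace)
```

In this trace every sample stays within about 0.5 °C of 25 °C, so an alarm set at, say, ±1 °C would never fire, while the rolling spread rises sharply during the burst.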

Background: Nuclear separation energies play pivotal roles in determining nuclear reaction rates and thus significantly impact astrophysical nucleosynthesis processes. The separation energies of many neutron-rich nuclei will remain beyond the reach of experimental measurement for the foreseeable future.
Purpose: This study aims to employ two machine learning approaches to improve predictions of nuclear separation energies, including double neutron (S₂ₙ), double proton (S₂ₚ), single neutron (Sₙ), and single proton (Sₚ) separation energies.
Methods: The Kernel Ridge Regression (KRR) and Kernel Ridge Regression with odd-even effects (KRRoe) approaches were applied to predict nuclear masses. Nuclear separation energies were calculated with the KRR and KRRoe mass models. The accuracies of these two approaches in describing experimentally known separation energies were compared. In addition, the extrapolation performances of the KRR and KRRoe approaches for single nucleon and double nucleon separation energies were also compared.
Results: Both the KRR and KRRoe methods improve the description of the double nucleon separation energies S₂ₙ and S₂ₚ. However, only the KRRoe method improves the single nucleon separation energies Sₙ and Sₚ, because its kernel function incorporates odd-even effects and so captures the staggering behavior in these energies, unlike the flat Gaussian kernel of KRR.
Conclusions: The study demonstrates the importance of incorporating odd-even effects to accurately describe single nucleon separation energies, highlighting the superiority of the KRRoe method over the standard KRR method in predicting single nucleon separation energies.
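The link between a mass model and the separation energies can be written out explicitly. With $M(Z,N)$ the nuclear mass and $m_n$ the neutron mass (standard definitions, not quoted from the paper):

$$
S_n(Z,N) = \left[ M(Z,N-1) + m_n - M(Z,N) \right] c^2,
\qquad
S_{2n}(Z,N) = \left[ M(Z,N-2) + 2m_n - M(Z,N) \right] c^2
$$

The proton cases follow by lowering $Z$ instead of $N$ and using the proton mass. One way to read the reported result: $S_{2n}$ compares two nuclei with the same parity of $N$, so odd-even staggering in the masses largely cancels, whereas $S_n$ compares neighbors of opposite parity, so any staggering the mass model misses shows up directly.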

Background: The data acquisition (DAQ) system of HEPS-BPIX4 (High Energy Photon Source-Beijing PIXel4), the high-performance pixel array detector of the High Energy Photon Source (HEPS), must meet high real-time performance requirements. Online compression of image data can significantly reduce the pressure on subsequent data transmission and storage.
Purpose: This study aims to overcome the limitations of traditional compression algorithms in compression ratio and real-time performance by proposing an online image compression method based on deep learning object detection.
Methods: The end-to-end object detection model YOLOv10 was trained on an experimental dataset, and its training performance was tested and evaluated to ensure that the model achieved the expected level of accuracy. Subsequently, the model's performance and effectiveness in data compression were tested and analyzed on the Intel Xeon 8462Y+ CPU and the NVIDIA A40 GPU. Finally, deployment of the model was optimized within the HEPS-BPIX4 DAQ framework under the multi-threaded scenario, and its practical performance was comparatively evaluated across different GPU platforms.
Results: Experimental evaluations indicate that the proposed method achieves an average compression ratio of 5.88 for online image data. Furthermore, an efficient deployment strategy is devised and validated, achieving a compression processing rate in the GB·s⁻¹ range under single-threaded operation. Building upon this, a multi-threaded deployment framework for the HEPS-BPIX4 DAQ system is developed to fulfill more demanding compression performance requirements.
Conclusions: This research presents a novel approach to mitigating the processing burden imposed by high-bandwidth image data in the HEPS-BPIX4 DAQ system.

Background: The nuclear equation of state (EoS) delineates the thermodynamic relationship between nucleon energy and nuclear matter density, temperature, and isospin asymmetry. This relationship is essential for validating existing nuclear theoretical models, investigating the nature of nuclear forces, and understanding the structure of compact stars, neutron star mergers, and related astrophysical phenomena. Heavy-ion collision experiments combined with transport models serve as a pivotal method to explore the high-density behavior of the EoS. With the rapid development of next-generation high-current heavy-ion accelerators and advanced detection technologies, the variety, volume, and precision of data generated from heavy-ion collision experiments have significantly improved. Effectively utilizing and analyzing these experimental datasets to extract critical insights into the EoS represents one of the central challenges in contemporary heavy-ion physics research. Bayesian analysis, a statistical approach, can extract reliable physical information by comparing experimental data with theoretical calculations and quantifying parameter uncertainties, and has therefore gained widespread attention. In determining the range of EoS parameters using Bayesian inference, Monte Carlo sampling is employed to extract observables from final-state particle information simulated by transport models under various EoS parameters. However, the complexity of transport model calculations significantly hinders data generation efficiency and limits exploration of the full parameter space.
Purpose: This study aims to develop a more efficient approach to simulating transport models, particularly one that leverages modern computational techniques to accelerate the process.
Methods: A machine learning-based approach was proposed to develop a transport model emulator capable of significantly reducing computation time. We evaluate three machine learning algorithms (Gaussian processes, multi-task neural networks, and random forests) to train emulators based on the UrQMD transport model. The selected observables include the protons' directed flow, elliptic flow, and nuclear stopping extracted from the final state of Au+Au collisions at E_lab=0.25 GeV/nucleon under different EoS parameters (incompressibility K₀, effective mass m*, and in-medium correction factor F for nucleon-nucleon elastic cross sections). A total of 150 parameter sets of the UrQMD model are run, with K₀=180 MeV, 220 MeV, 260 MeV, 300 MeV, 340 MeV, 380 MeV, m*/m=0.6, 0.7, 0.8, 0.9, 0.95, and F=0.6, 0.7, 0.8, 0.9, 1.0. For each case, 2×10⁵ events with a reduced impact parameter b₀<0.45 are simulated to ensure negligible statistical errors. The results from these 150 parameter sets are used to train the emulators via the three machine learning algorithms. Additionally, 20 parameter sets of the UrQMD model with randomly chosen K₀, m*, and F are run, and the resulting observables are used to test emulator performance.
Results: The results obtained from Gaussian processes and multi-task neural networks align with those calculated by the UrQMD model, indicating high accuracy and suitability for use in Bayesian analysis. However, when predicting v11 and v20 with a reduced impact parameter b₀<0.25, some data points predicted by random forests exhibit significant errors, suggesting that random forests are relatively less effective in predicting observables. To further compare the prediction performance of the three emulators, we use the coefficient of determination R² as the evaluation index. The R² values for Gaussian processes, multi-task neural networks, and random forests on the test set are 0.95, 0.93, and 0.85, respectively. These results demonstrate that both Gaussian processes and multi-task neural networks achieve high accuracy when simulating UrQMD model data and can effectively accelerate the calculation process. However, for complex tasks involving a large number of parameters and observables, the efficiency and accuracy of Gaussian processes may decline, so relying solely on Gaussian processes may be insufficient. In such cases, multi-task neural networks exhibit greater adaptability, better handling of complex datasets, and enhanced capability to learn information within parameter spaces.
Conclusions: Gaussian processes generally perform well within Bayesian frameworks as transport model emulators, particularly for moderate-sized datasets, while multi-task neural networks may be a more suitable choice for complex tasks involving numerous parameters and observables. In practical applications, the most appropriate emulator should be selected based on specific task requirements and data characteristics.
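Two of the numbers above can be reproduced in a few lines: the 150 training sets are the full grid of the listed parameter values (6 × 5 × 5), and R² is the usual coefficient of determination. The variable and function names below are illustrative, and no emulator is trained here.

```python
from itertools import product

# Parameter values as listed in the Methods.
K0_MEV = [180, 220, 260, 300, 340, 380]    # incompressibility K0 (MeV)
M_STAR_RATIO = [0.6, 0.7, 0.8, 0.9, 0.95]  # effective mass ratio m*/m
F_FACTOR = [0.6, 0.7, 0.8, 0.9, 1.0]       # in-medium cross-section factor F

# Full Cartesian grid of training parameter sets.
training_grid = list(product(K0_MEV, M_STAR_RATIO, F_FACTOR))


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot
```

An emulator that reproduces every test value gives R² = 1, while one that always predicts the mean of the test values gives R² = 0, which puts the reported 0.95, 0.93, and 0.85 in context.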

Background: In nuclear density functional theory (DFT), uncertainties in theoretical predictions can be categorized into two types: statistical errors originating from intra-model parameter uncertainties and systematic errors arising from inter-model discrepancies. The former results from the propagation of experimental uncertainties during parameter calibration, whereas the latter reflects systematic deviations in predicting the same physical quantity across different models. Purpose: This study aims to review the application of Bayesian uncertainty quantification in nuclear DFT, addressing both intra- and inter-model uncertainties. Methods: The Bayesian inference approach was first introduced. Subsequently, two representative applications in DFT uncertainty quantification were presented: 1) Bayesian parameter estimation utilizing machine learning techniques to quantify parameter uncertainties within the nonlinear relativistic mean field (RMF) model; 2) Bayesian model averaging to analyze systematic uncertainties in symmetry energy at 2/3 saturation density between Skyrme energy density functionals and RMF models. Results: The Bayesian parameter estimation method effectively quantifies statistical intra-model uncertainties, while Bayesian model averaging offers a robust statistical framework for quantifying inter-model uncertainties, enhancing the reliability of nuclear property predictions. Conclusions: The application of Bayesian inference in both parameter estimation and model averaging provides valuable tools for addressing uncertainties in nuclear physics.

Background: With the growing volume of astronomical observational data, it has become feasible to constrain the equation of state (EOS) of neutron star matter with data-driven methods. Purpose: This study aims to constrain nuclear EOSs that support massive neutron stars, within the framework of relativistic mean field (RMF) models, using various astrophysical observations of neutron star properties. Methods: The nuclear EOS and neutron star structures were investigated using RMF models constrained by Bayesian inference and astrophysical observations. Results: By analyzing density-dependent coupling constants across different critical densities, the work demonstrates that higher critical densities tighten constraints on the EOS, leading to softer intermediate-density behavior and increased central energy densities for massive neutron stars. Key findings include a high probability of the squared sound speed exceeding the conformal limit (v_s^2 > 1/3) in massive neutron star cores. The inferred maximum neutron star masses (Mmax ≥ 2.5 M⊙) align with interpretations of gravitational wave events like GW190814, where secondary components may represent massive neutron stars. The symmetry energy and pressure profiles at supranuclear densities exhibit critical-density dependence, consistent with multi-messenger constraints. Conclusions: These findings highlight the interplay between EOS stiffness, phase transitions, and observational constraints, providing critical insights for future studies to refine nuclear matter properties through multi-messenger data and advanced density functional analyses.

Background: Accurately constructing the Equation of State (EoS) for Quantum Chromodynamics (QCD) matter in the region with finite baryon chemical potential (μB) is a central challenge in modern high-energy nuclear physics research. Purpose: This study aims to address this challenge, explore the structure of the QCD phase diagram, and locate the critical endpoint. Methods: First, three deep neural networks were constructed to achieve high-precision reconstruction of the QCD EoS at zero μB. Then, the monotonic behavior of the fourth-order generalized susceptibility χ_4^B was analyzed at different temperatures T and μB, and the dependence of the fourth-order cumulant ratio R42 on the collision energy √s_NN was calculated. Results: The possible location of the QCD critical endpoint (CEP) is estimated as (T, μB) = ((0.113±0.019) GeV, (0.634±0.11) GeV). The results for the fourth-order cumulant ratio R42 not only match the experimental data well but also show significant fluctuation behavior near 6 GeV. Conclusions: The deep-learning quasi-parton model provides a self-consistent theoretical framework for studying the thermodynamic and transport properties of QCD matter at finite baryon density. The obtained EoS parameters can be directly applied to hydrodynamic simulations for the beam energy scan program of the Relativistic Heavy Ion Collider (RHIC), offering a new research tool for exploring the QCD phase diagram structure and searching for the CEP.

Background: The exponential growth of scientific literature, particularly in physics and nuclear physics, makes it difficult for researchers to track advancements and identify cross-disciplinary solutions. While large language models (LLMs) offer potential for intelligent retrieval, their reliability is hindered by inaccuracies and hallucinations. The arXiv dataset (2.66 million papers) provides an unprecedented resource to address these challenges. Purpose: This study aims to develop a hybrid retrieval system integrating vector-based semantic search with LLM-driven contextual analysis to enhance the accuracy and accessibility of scientific knowledge across disciplines. Methods: We processed 2.66 million arXiv paper titles and abstracts using the BGE-M3 model to generate 1024-dimensional vector representations. Cosine similarity was computed between user queries (vectorized via the same model) and pre-encoded paper vectors for preliminary semantic ranking. The top 50 candidates underwent contextual relevance analysis by DeepSeek-r1, which evaluated technical depth, methodological alignment, and cross-domain connections through multi-step reasoning. A nuclear physics case study validated the system using 1000 AI-human-annotated documents. The framework incorporates four specialized agents: query generation, relevance scoring, structured data correction, and PDF analysis. Results: We constructed a vector database comprising 2.66 million arXiv papers (including titles and abstracts), occupying 30 GB of disk space. Our vector-based semantic search system demonstrated superior performance in a nuclear physics query benchmark, achieving 90% precision and 60% recall for the top-10 retrieved documents. 
This significantly outperformed traditional keyword-based search methods, which yielded only 20% precision and 10% recall under the same evaluation conditions. Conclusions: By synergizing vector semantics with LLM reasoning, this work establishes a new paradigm for scientific knowledge retrieval that effectively bridges disciplinary divides. The open-sourced system (https://gitee.com/lgpang/arxiv~~vectordb) provides researchers with scalable tools to navigate literature complexity, demonstrating particular value in identifying non-obvious interdisciplinary connections.
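The preliminary ranking step and the top-k metrics reported above can be illustrated with a small pure-Python sketch. It is not taken from the open-sourced system: the function names, the dictionary-based "database", and the toy two-dimensional vectors are assumptions made for illustration, whereas the real system works with 1024-dimensional BGE-M3 embeddings and a vector database.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # convention: a zero vector matches nothing
    return dot / (norm_a * norm_b)


def top_k(query_vec, paper_vecs, k):
    """Return the ids of the k papers most similar to the query."""
    ranked = sorted(
        paper_vecs.items(),
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [paper_id for paper_id, _ in ranked[:k]]


def precision_recall_at_k(retrieved, relevant):
    """Precision and recall of a retrieved list against a relevant set."""
    hits = sum(1 for paper_id in retrieved if paper_id in relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


if __name__ == "__main__":
    papers = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}  # toy vectors
    hits = top_k([1.0, 0.0], papers, k=2)
    print(hits, precision_recall_at_k(hits, {"a", "b"}))
```

In the pipeline described above, the list returned by a step like `top_k` (with k = 50) would then be passed to the LLM for contextual relevance analysis; that second stage is not sketched here.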

Background: In recent years, machine learning methods have been widely applied to the prediction of nuclear masses. Purpose: This study aims to employ the continuous Bayesian probability (CBP) estimator and Bayesian model averaging (BMA) to optimize the descriptions of sophisticated nuclear mass models. Methods: The CBP estimator treated the residual between the theoretical and experimental values of nuclear masses as a continuous variable, deriving its posterior probability density function (PDF) from Bayesian theory. The BMA method assigned weights to models based on their predictive performance for benchmark nuclei, thereby balancing each model's unique strengths. Results: In global optimization, the CBP method improves the Hartree-Fock-Bogoliubov (HFB) model by approximately 90%, and the relativistic mean-field (RMF) models and semi-empirical formulas by 70% and 50%, respectively. In extrapolation analysis, the CBP method improves the prediction accuracy for the HFB models, RMF models, and semi-empirical formulas by approximately 80%, 55%, and 50%, respectively, demonstrating the strong generalization ability of the CBP method. To assess the reliability of the BMA method, the two-neutron separation energy for Ca isotopes was extrapolated, and the two-neutron drip line was predicted. Conclusions: The methods proposed in this paper provide an effective way to accurately predict nuclear masses, with potential applications to research on other nuclear properties.
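The idea of weighting models by their performance on benchmark nuclei can be sketched as follows. The abstract does not specify the weighting formula, so this example assumes a common textbook choice: equal prior model probabilities and a Gaussian likelihood with a fixed width `sigma`, so that each weight is proportional to exp(-chi^2/2). The function names and the model labels in the usage lines are hypothetical.

```python
import math


def bma_weights(residuals_by_model, sigma):
    """Posterior model weights from benchmark residuals.

    Assumes equal priors and a Gaussian likelihood of width `sigma`
    (an illustrative assumption, not the paper's stated prescription).
    """
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    log_like = {
        name: -0.5 * sum((r / sigma) ** 2 for r in residuals)
        for name, residuals in residuals_by_model.items()
    }
    shift = max(log_like.values())  # subtract the maximum for numerical stability
    unnormalized = {name: math.exp(v - shift) for name, v in log_like.items()}
    total = sum(unnormalized.values())
    return {name: u / total for name, u in unnormalized.items()}


def bma_predict(predictions, weights):
    """Weighted average of the individual model predictions."""
    return sum(weights[name] * predictions[name] for name in predictions)


if __name__ == "__main__":
    # Hypothetical residuals (theory minus experiment, in MeV) on benchmark nuclei.
    weights = bma_weights({"model_A": [0.3, -0.2], "model_B": [0.9, 1.1]}, sigma=0.5)
    print(weights, bma_predict({"model_A": 12.0, "model_B": 13.0}, weights))
```

With this choice, a model that describes the benchmark nuclei better receives a larger weight, and the averaged prediction lies between the individual model predictions.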

As high-energy nuclear physics research enters a phase characterized by multi-dimensional and highly complex data analysis, deep learning techniques are gradually becoming essential tools for understanding nuclear matter behavior under extreme conditions. This shift is driving a fundamental transformation in research paradigms from experience-driven approaches toward data-driven methodologies. This article briefly reviews the evolution of machine learning in this field, emphasizing recent advancements involving deep learning techniques. Early research (from the late 20th century to the 2010s) primarily employed traditional algorithms such as artificial neural networks and support vector machines. These studies validated the feasibility of machine learning approaches in nuclear physics through tasks like nuclear mass prediction and phase transition identification. However, due to limitations in manual feature extraction and computational capabilities, such methods did not yet extend to autonomous exploration of physical features. In the deep learning era (2010s to present), researchers have innovatively introduced point-cloud neural network architectures, enabling direct processing of final-state particle four-momentum data. This advancement has overcome the constraints of traditional methods that relied heavily on manually constructed statistical observables and initiated a conceptual leap from superficial data representations toward intrinsic physical insights. Simultaneously, unsupervised learning methods have shifted research focus from hypothesis validation to autonomous, data-driven discovery of physical laws, facilitating not only sensitive detection of anomalous signals but also opening new avenues for investigating emergent physical phenomena. 
Looking ahead, several directions appear promising: deep learning algorithms that incorporate physical priors to improve the physical interpretability of models; meta-learning and self-supervised frameworks that deepen rare-event analysis; quantum machine learning that accelerates high-dimensional feature extraction; and generative models that reconstruct the physical data ecosystem. These advancements will potentially propel high-energy nuclear physics research from the passive interpretation of observational data toward active discovery of physical laws, shifting analysis from fragmented, local feature exploration toward holistic comprehension of systemic behaviors. Ultimately, this progression may pave the way toward constructing an intelligent physics research system capable of autonomous knowledge discovery.

Background: Heavy-ion fusion reactions, as the exclusive means to synthesize superheavy elements and novel nuclides, hold paramount significance in nuclear physics. However, conventional physical models demonstrate limitations in characterizing the fusion cross section (CS) of heavy-ion reactions, while experimental measurements for numerous systems remain incomplete or lack sufficient precision. Machine learning (ML), which has been widely applied to scientific research in recent years, can be used to investigate the inherent correlations within large and complex datasets. Purpose: This study aims to establish the relationship between fusion reaction features and the CS by training a LightGBM (Light Gradient Boosting Machine) model on the dataset. Methods: Several basic quantities (e.g., proton number, mass number, and the excitation energies of the 2+ and 4+ states of projectile and target) and the CS obtained from phenomenological formulas were fed into the LightGBM algorithm to predict the CS. Meanwhile, to evaluate the impact of different features on model predictions, the Shapley additive explanations (SHAP) method was employed to rank the importance of input features. A visual analysis was also conducted to illustrate the relationships between each feature and the CS, enabling the identification of key features that are highly sensitive to the CS and helping to uncover the underlying physical mechanisms. Results: On the validation set, the mean absolute error (MAE), which measures the average magnitude of the absolute difference between log10 of the predicted CS and log10 of the experimental CS, is 0.138 when only the basic quantities are used as input. This value is smaller than the 0.172 obtained from the empirical coupled channel model. The MAE can be further reduced to 0.07 by including a physics-informed input feature. 
The MAE on the test set (which consists of 175 data points from 11 reaction systems that are not included in the training set) is about 0.17 with the physics-informed feature and 0.45 without it. By analyzing the predicted CS for the systems 18O+116Sn and 36S+50Ti in the test set, the fusion barrier distributions were further extracted. The results demonstrate that the incorporation of physics-informed features significantly enhances the agreement between calculated barrier distributions and experimental data. In addition, the SHAP method was used to construct a visual correlation map between input feature parameters and the CS. The feature importance ranking revealed that the excitation energies of the 2+ and 4+ states of the target nucleus play an important role in predicting the CS. Conclusions: Physical information plays a crucial role in machine learning studies of heavy-ion fusion reactions.
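The error metric quoted above is defined on the logarithm of the cross section, which is easy to misread as an error in the cross section itself. The following minimal sketch (the function name `log10_mae` is illustrative, and the numbers in the usage line are made up) shows the definition as stated in the abstract.

```python
import math


def log10_mae(predicted_cs, experimental_cs):
    """Mean absolute difference between log10(predicted) and log10(experimental)."""
    if len(predicted_cs) != len(experimental_cs) or not predicted_cs:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(x <= 0 for x in list(predicted_cs) + list(experimental_cs)):
        raise ValueError("cross sections must be positive to take log10")
    total = sum(
        abs(math.log10(p) - math.log10(e))
        for p, e in zip(predicted_cs, experimental_cs)
    )
    return total / len(predicted_cs)


if __name__ == "__main__":
    # Made-up cross sections in mb: one point off by a factor of 10, one exact.
    print(log10_mae([10.0, 100.0], [100.0, 100.0]))
```

Because the metric lives in log space, an MAE of m corresponds to predictions that differ from experiment by a typical factor of about 10^m; for example, 0.138 corresponds to a factor of roughly 1.4.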

Background: The origin of elements is a significant research topic in nuclear physics and astrophysics. Some heavy nuclei, known as p-nuclei, are produced through photonuclear reactions, so the study of photonuclear reactions plays a crucial role in understanding the origin of elements. The existing data on photoneutron reactions show significant discrepancies. It is well known that the (γ, n) reaction cross-sections from Saclay are higher than those from Livermore, while conversely, the (γ, 2n) cross-sections from Livermore are higher than those from Saclay. To resolve these discrepancies, the data must either be remeasured or evaluated based on theoretical models. Purpose: This study aims to predict the photoneutron reaction cross-sections, specifically for the (γ, n) and (γ, 2n) reactions, using a Bayesian neural network (BNN). The goal is to develop a physics-informed BNN model that improves the accuracy of photoneutron reaction predictions and resolves discrepancies in experimental data from different laboratories. Methods: A physics-informed Bayesian neural network (PIBNN) model was constructed using PyTorch to predict the photoneutron reaction cross-sections. The network was trained with a consistent dataset of Livermore's photoneutron experimental data, incorporating physics-informed constraints such as zero cross-sections below reaction thresholds. The B2, B3, and B4 network architectures have 2, 3, and 4 hidden layers, respectively, and were trained with the Adam optimizer at a learning rate of 0.0001. Results: As the number of hidden layers increases, the model's description of the training set improves for the same number of training iterations. Among them, the B4 model not only effectively reproduces the single and double giant dipole resonance (GDR) peak structures of the (γ, n) reaction channel in the training set, but also accurately captures the magnitude of the (γ, 2n) cross-section. 
The physics-informed constraints incorporated into the training set, particularly the inclusion of zero cross-sections below reaction thresholds, improved the model's accuracy in predicting the cross-section near the threshold and ensured that cross-sections approach zero at high energies. The predictions of the (γ, n) and (γ, 2n) reaction cross-sections for 175Lu by the three models are compared with the Saclay experimental data. The B4 model accurately reproduces the position and relative heights of the double-peak structure, reflecting the inherent systematics of the training set. The predictions of the (γ, n) and (γ, 2n) reaction cross-sections for 197Au and 175Lu validate that the trained physics-informed Bayesian neural network model possesses generalization ability. Conclusions: The physics-informed Bayesian neural network can effectively learn the (γ, n) and (γ, 2n) reaction cross-sections, reproducing the data in the training set and predicting cross-section data outside the training set. Furthermore, as the number of hidden layers increases, the model's learning ability gradually improves. In the future, the trained B4 model can be used to predict reliable photoneutron reaction cross-sections and to help resolve data discrepancies between different laboratories.
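One simple way to encode the below-threshold constraint described above is to augment the training data with artificial zero cross-section points at energies under the reaction threshold. The sketch below illustrates that idea only; the function name, the energy grid, and the sample numbers are assumptions, and the abstract does not state how the authors generated or weighted such points.

```python
def add_threshold_zeros(data, threshold_mev, e_min_mev, step_mev):
    """Prepend zero cross-section points on a grid below the reaction threshold.

    `data` is a list of (photon_energy_MeV, cross_section_mb) tuples.
    The grid spacing and lower bound are illustrative choices.
    """
    if step_mev <= 0:
        raise ValueError("step_mev must be positive")
    zeros = []
    n = 0
    while e_min_mev + n * step_mev < threshold_mev:
        zeros.append((e_min_mev + n * step_mev, 0.0))
        n += 1
    # Measured points are kept unchanged; the result is sorted by energy.
    return sorted(zeros + list(data))


if __name__ == "__main__":
    # Made-up measurements above a hypothetical 8 MeV threshold.
    measured = [(9.0, 5.0), (10.0, 12.0)]
    print(add_threshold_zeros(measured, threshold_mev=8.0, e_min_mev=0.0, step_mev=2.0))
```

The augmented list can then be fed to the network like any other training data, so the constraint is learned from examples rather than hard-coded into the architecture.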