Objective
Chromophoric dissolved organic matter (CDOM) is an important component controlling the propagation of light in coastal and open sea areas, and it constitutes the largest organic carbon pool in the ocean, playing a significant role in the global carbon cycle. The laser-induced fluorescence (LIF) technique is a well-established analytical method for rapid water environment monitoring. By measuring the laser-induced emission spectrum of seawater, we can obtain qualitative and quantitative information about CDOM in the ocean quickly and in real time. Previous simulation models for marine LIF detection often ignore the influence of sea breeze and treat the sea surface as an ideal stationary interface. In reality, the sea surface fluctuates under the sea breeze while an LIF system operates for marine remote sensing. The fluctuating rough sea surface affects both the laser and the fluorescence, thereby influencing the fluorescence information detected by the receiving system. Therefore, based on the Monte Carlo method and a sea surface simulation model, we construct a simulation model of CDOM fluorescence characteristics under a rough sea surface. We use this model to obtain the fluorescence distribution of CDOM received under an ideal stable sea surface and to analyze the influence of different wind directions and wind speeds on the fluorescence signal under a rough sea surface.
Methods
We use the Monte Carlo method to simulate the transmission of photons through the sea surface and in seawater. When the weight of a photon drops below the threshold, we introduce a new photon to continue the simulation. Throughout the simulation process, we record the position, weight, and direction of motion of each photon until it is finally emitted from the sea surface, and we collect and analyze the outgoing fluorescence information. The direction of motion of the photon after refraction is determined based on the refraction law and relevant optical theory.
We simulate a rough sea surface by inverting the Pierson-Moskowitz wave spectrum using a linear superposition method, assuming that the rough sea surface results from the superposition of several triangular waves with varying frequencies, amplitudes, and random phases. We analyze the influence of sea surface fluctuation on CDOM detection by simulating the distribution of CDOM fluorescence under ideal smooth and rough sea surfaces. We then analyze the fluctuation of ocean CDOM fluorescence received under different wind speeds and wind directions. Finally, we verify through experiments that changes in the slope of the sea surface cause the fluctuations of the fluorescence signal received under a rough sea surface.
Results and Discussions
The simulation results show that due to the isotropy of fluorescence and the differing transmission distances of outgoing fluorescence at various zenith angles in seawater, the overall fluorescence distribution of received CDOM under an ideal and stable sea surface presents a center-symmetric hemispherical shape (Fig. 1). This indicates that the received fluorescence intensity is negatively correlated with the receiving zenith angle and is independent of the receiving azimuth angle. In actual detection, due to sea breeze influences, the sea surface is not ideally stable and fluctuates (Fig. 2). The fluctuating sea surface causes variations in the fluorescence transmission distances along different azimuth angles, leading to an asymmetric fluorescence distribution (Fig. 3). Additionally, the total reflection phenomenon may diminish due to changes in the slope of the sea surface, so the fluorescence intensity received at a 90° zenith angle may no longer be zero at certain azimuth angles. The overall fluctuation direction of the sea surface aligns with the wind direction (Fig. 4), with greater wind speeds resulting in larger changes in sea surface height (Fig. 7).
As sea surface height and slope change significantly in the downwind and upwind directions, remote sensing detection in these directions will experience stronger fluorescence fluctuations, whereas detection perpendicular to the wind direction will yield more stable results (Figs. 5 and 6). The stronger the gradient change of the ocean surface with wind speed, the greater the influence on photon refraction, so the fluorescence signals detected at lower wind speeds are more stable. The experiments indirectly verify that changes in sea surface slope in different directions affect the received fluorescence intensity.
Conclusions
We establish a fluorescence simulation model of marine CDOM based on the Monte Carlo method, incorporating a rough sea surface generated from the Pierson-Moskowitz wave spectrum. The simulation results reveal how CDOM fluorescence characteristics vary with different wind directions and speeds. Finally, we validate through experiments the changes in fluorescence signals caused by sea surface tilt. The simulation results show that, without considering sea breeze influences, the fluorescence signal of marine CDOM is independent of the azimuth angle and decreases as the zenith angle increases. Therefore, during actual detection, the receiving zenith angle should not be set too large. Compared with a calm sea surface, the CDOM fluorescence distribution fluctuates under a rough sea surface, with received fluorescence values fluctuating more sharply downwind or upwind due to the influence of sea surface fluctuations in different wind directions. Wind speed affects the degree of fluorescence fluctuation; as wind speed increases, larger sea surface dip angles occur, leading to greater fluctuations in the received CDOM fluorescence. In remote sensing detection, the measured CDOM fluorescence signal is relatively more stable in the direction perpendicular to the wind. Choosing a time of lower wind speed also enhances detection stability.
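The linear superposition step described in the Methods can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a one-dimensional surface along the wind direction, cosine wave components, the deep-water dispersion relation k = ω²/g, and the commonly quoted Pierson-Moskowitz constants (α = 8.1×10⁻³, β = 0.74). The function names, the frequency range, and the number of components are hypothetical choices made for illustration.

```python
import numpy as np

G = 9.81  # gravitational acceleration, m/s^2


def pm_spectrum(omega, wind_speed):
    """Pierson-Moskowitz frequency spectrum S(omega) in m^2 s (assumed constants)."""
    alpha, beta = 8.1e-3, 0.74
    return alpha * G**2 / omega**5 * np.exp(-beta * (G / (omega * wind_speed)) ** 4)


def sea_surface(x, t, wind_speed, n_waves=200, seed=0):
    """Height and slope of a 1-D sea surface built by linear superposition.

    Each component is a cosine wave whose amplitude follows from the spectrum,
    a_i = sqrt(2 S(omega_i) d_omega), with a uniformly random phase.
    """
    rng = np.random.default_rng(seed)
    omega = np.linspace(0.2, 6.0, n_waves)       # angular frequencies, rad/s (assumed range)
    d_omega = omega[1] - omega[0]
    amp = np.sqrt(2.0 * pm_spectrum(omega, wind_speed) * d_omega)
    k = omega**2 / G                              # deep-water dispersion relation
    phase = rng.uniform(0.0, 2.0 * np.pi, n_waves)
    arg = k[:, None] * x[None, :] - omega[:, None] * t + phase[:, None]
    height = (amp[:, None] * np.cos(arg)).sum(axis=0)
    slope = (-amp[:, None] * k[:, None] * np.sin(arg)).sum(axis=0)  # d(height)/dx
    return height, slope


x = np.linspace(0.0, 5000.0, 10001)               # positions along the wind, m
height_low, slope_low = sea_surface(x, 0.0, wind_speed=5.0)
height_high, slope_high = sea_surface(x, 0.0, wind_speed=10.0)
print(height_low.std(), height_high.std())
```

In a photon-tracing simulation, the local slope would set the facet normal used when applying the refraction law at the interface; comparing the two wind speeds shows how the height fluctuation grows with wind speed in this simplified setting.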

Objective
Methane (CH4) is the second most important greenhouse gas in the atmosphere after carbon dioxide. Mastering precise methods for monitoring atmospheric CH4 is essential for addressing the greenhouse effect and environmental changes. This helps us better understand and predict climate change and provides policymakers with the data needed to formulate effective emission reduction measures. By accurately monitoring CH4 variations, we can identify major emission sources and assess the effectiveness of mitigation strategies, thus promoting the achievement of global climate goals.
Methods
We use ground-based high-resolution Fourier transform infrared (FTIR) spectroscopy to collect near-infrared solar absorption spectra. These spectra are then analyzed using a nonlinear least squares fitting algorithm to retrieve the column concentrations of atmospheric CH4 in Hefei from 2018 to 2022. Our algorithm, GFIT, is the standard retrieval method of the Total Carbon Column Observing Network (TCCON), consisting of a forward model and an iterative fitting process. The forward model calculates atmospheric absorption spectra through an atmospheric radiative transfer model, combining solar parameters, atmospheric parameters, and instrument line shape parameters to generate solar absorption spectra. The iterative process then compares calculated and measured spectra, adjusting retrieval parameters to achieve the best fit. Next, we process the atmospheric CH4 concentration data monitored by FTIR spectroscopy to determine the annual growth rate of atmospheric CH4 and study its seasonal variations. We then validate the ground-based FTIR CH4 data against the TROPOspheric Monitoring Instrument (TROPOMI) satellite data.
Finally, by calculating the incremental values of CH4 and carbon monoxide (CO) relative to their background values (ΔCH4 and ΔCO), we analyze the sources of atmospheric CH4 in Hefei, examining the seasonal correlations between ΔCH4 and ΔCO.
Results and Discussions
Our study first uses FTIR spectroscopy to investigate the variation characteristics of atmospheric CH4 in Hefei from 2018 to 2022, as shown in Figs. 2, 3, and 4. The results show an annual increase in atmospheric XCH4 in Hefei with seasonal variations, peaking in autumn and decreasing to its lowest in spring (March to April) of the following year. Second, we use ground-based CH4 data to validate TROPOMI satellite observations in Hefei, revealing good consistency between datasets, as shown in Figs. 5 and 6. The average absolute deviation between the two datasets is 5×10⁻⁹, with an average relative deviation of 0.26%, indicating a slight overestimation of CH4 column concentration by TROPOMI. Additionally, the correlation coefficient between satellite and ground-based data is 0.91, confirming TROPOMI’s high reliability in monitoring atmospheric CH4. Finally, we analyze the correlation between atmospheric CH4 and CO in Hefei to infer CH4 sources, as shown in Fig. 7. As CO primarily originates from human activities, its correlation with CH4 can indicate the main sources of CH4. A high correlation coefficient would suggest that CH4 is mainly anthropogenic, while a low correlation would suggest natural sources as the primary contributor.
Conclusions
Atmospheric CH4 column concentrations in Hefei show a slow annual increase, with an approximate annual growth rate of 0.73%. The atmospheric CH4 column concentrations are lower in spring and winter, and higher in summer and autumn. Monthly averages peak in September and reach their lowest in March, at 1940×10⁻⁹ and 1890×10⁻⁹, respectively, with a seasonal variation amplitude of 50×10⁻⁹.
Subsequently, we compare satellite data from TROPOMI onboard the ESA Sentinel-5P satellite with ground-based FTIR data. The results demonstrate strong consistency between the two datasets, with an average absolute deviation of 5×10⁻⁹ and a correlation coefficient of 0.91. Finally, we conduct a correlation analysis between atmospheric CH4 and CO observed in Hefei, calculating the correlations of ΔCH4 and ΔCO across four seasons over the five-year observation period from 2018 to 2022. The analysis shows a poor correlation between these gases in all seasons, suggesting that natural emissions are the primary source of atmospheric CH4 in Hefei. These findings provide effective methods for monitoring greenhouse gases in Hefei, particularly CH4, and offer valuable data support for the formulation and implementation of scientifically grounded environmental protection policies and emission reduction measures.
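The comparison statistics quoted above (mean deviation, mean relative deviation, correlation coefficient) and the enhancement quantities ΔCH4 and ΔCO can be computed along the following lines. This is a sketch under stated assumptions rather than the authors' processing chain: the function names are hypothetical, the sample values are invented to match only the order of magnitude in the abstract, and taking the background as a low percentile of the series is an assumption, since the abstract does not say how the background is defined.

```python
import numpy as np


def validation_stats(satellite, ground):
    """Mean bias, mean relative bias (%), and Pearson r of co-located column data."""
    satellite = np.asarray(satellite, dtype=float)
    ground = np.asarray(ground, dtype=float)
    diff = satellite - ground
    return {
        "mean_bias": diff.mean(),
        "mean_rel_bias_pct": 100.0 * (diff / ground).mean(),
        "pearson_r": np.corrcoef(satellite, ground)[0, 1],
    }


def enhancement(series, background_percentile=5.0):
    """Increment above background; the percentile background is an assumption."""
    series = np.asarray(series, dtype=float)
    return series - np.percentile(series, background_percentile)


# Illustrative XCH4 values in units of 1e-9 (not measured data).
ground = np.array([1890.0, 1900.0, 1915.0, 1930.0, 1940.0])
satellite = ground + 5.0
print(validation_stats(satellite, ground))
```

With columns near 1900×10⁻⁹, a 5×10⁻⁹ offset corresponds to a relative deviation of roughly a quarter of a percent, which is consistent with the 0.26% reported in the abstract. Seasonal ΔCH4 and ΔCO correlations would then follow from applying `np.corrcoef` to the two enhancement series within each season.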

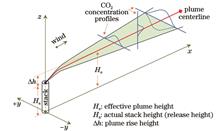

Objective
Point source emissions are a significant feature of industrial output, with thermal power plants serving as prominent examples. Globally, CO2 emissions from thermal power plants make up a substantial portion of energy-related emissions. Effective monitoring of these emissions aids in refining top-down carbon estimates worldwide. The portable EM27/SUN spectrometer, known for its mobility and ease of use, provides highly reliable and sensitive measurements and is adaptable across various environments. It has been widely adopted for satellite data validation and greenhouse gas quantification at multiple scales. However, thermal power plants, typically situated in or near urban areas, experience CO2 plume dispersion influenced by local surface features, such as buildings, which can affect EM27/SUN observation accuracy, a factor seldom addressed in previous studies. To overcome this, we focus on Hefei Wanneng Power Plant. Through multiple ground-based observations using the EM27/SUN instrument, we analyze the effect of nearby buildings on plume dispersion and assess how the spatial relationship between the measurement line and plume geometry influences site selection. This approach enables a better understanding of CO2 quantification capabilities and associated uncertainties under varying conditions, providing a foundation for optimizing measurement methods and enhancing accuracy.
Methods
To improve CO2 emission estimation accuracy from industrial point sources using the ground-based EM27/SUN, we explore optimized measurement methods. Using the Lagrangian particle dispersion model, we analyze the influence of nearby buildings on plume dispersion and assess the role of geometric positioning between the measurement line and plume in site selection. By synchronizing EM27/SUN data with TCCON observations and implementing a quality control criterion based on solar intensity, we enhance data reliability.
The distance from the emission source is divided into three segments to evaluate the influence of buildings on the measurements and to examine the influence of plume positioning on CO2 column volume fraction data. Our final analysis provides insights into the limitations of current measurement methods and demonstrates how the optimized measurement method can improve emission estimation accuracy.
Results and Discussions
We begin with a data preprocessing method, using strict quality controls to minimize anomalies and ensure measurement accuracy. Corrected EM27/SUN data from 2021, benchmarked against TCCON, show significant accuracy improvement, with an R² of 0.978 and RMSE of 0.271, compared to pre-correction values of 0.85 and 0.412. Using Hefei Wanneng Power Plant as a case study, repeated measurements from 2021–2023 indicate that downwind XCO2 increases by 12×10⁻⁶–16×10⁻⁶ relative to background levels, highlighting the influence of CO2 emissions. Our findings reveal that building-induced wind field complexity near the plant introduces spatial variability in CO2 plumes and increases estimation uncertainty, with an average deviation of (7.98±10) kg/s in the far section. In both the near and middle sections, deviations in the observation path from the plume’s intersection point influence CO2 column volume fraction, with rapid changes in column volume fraction observed in the near section and more stable changes in the middle section. The study indicates that, based on an annual average CO2 emission intensity of 195 kg/s for the power plant, the average deviation in emission intensity estimates in the middle section (1.50–2.24 km) is only (7.13±5.39) kg/s. In contrast, the near section (0.30–1.49 km) has a larger deviation of (28.63±17.57) kg/s, and the far section (2.25–3.50 km) has a deviation of (27.01±17.98) kg/s.
In addition, emission estimates for 2022, which primarily rely on middle section measurements, show notably smaller fluctuations than those from 2021 and 2023, with a deviation of just (11±9.67) kg/s. Overall, measurements taken in the middle section of the plume contribute to more reliable results, facilitating a more accurate assessment of the power plant’s CO2 emission.
Conclusions
Our analysis reveals that nearby buildings and the observation path significantly influence EM27/SUN measurement accuracy. Close to the emission source, CO2 diffusion is limited, making deviations from the plume axis critical. In contrast, far-section measurements face challenges from complex wind patterns shaped by surrounding buildings, resulting in increased variability in XCO2 observations. The middle section, however, benefits from relatively uniform CO2 distribution and moderate diffusion, yielding more consistent results. In the emission estimation results, using Hefei Wanneng Power Plant as an example, the average deviation of CO2 emission intensity in the middle section (1.50–2.24 km) of this experiment is only (7.13±5.39) kg/s. In contrast, the average deviation in the near section (0.30–1.49 km) is (28.63±17.57) kg/s, and in the far section (2.25–3.50 km), it is (27.01±17.98) kg/s. The dispersion in the near and far sections is significantly greater than that at measurement points within the 1.50–2.24 km range, indicating that measurements taken in the middle section of the plume are more likely to yield reliable results. The experimental and model analysis shows that, in ground-based remote sensing of CO2 emissions from thermal power plants, the near section (close to the emission source) and the far section (with potential high-rise obstructions) present considerable limitations. Therefore, focusing measurements on the middle section of the plume is recommended to achieve high-precision results.
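To put the section-wise deviations in proportion, they can be expressed as a percentage of the plant's annual average emission intensity of 195 kg/s. The short sketch below does only that arithmetic with the figures quoted in the abstract; the dictionary layout and function name are hypothetical, and the relative values are derived here rather than reported by the authors.

```python
ANNUAL_MEAN_EMISSION = 195.0  # kg/s, annual average quoted in the abstract

# (mean deviation, spread) in kg/s for each distance section, as quoted above.
SECTION_DEVIATIONS = {
    "near (0.30-1.49 km)": (28.63, 17.57),
    "middle (1.50-2.24 km)": (7.13, 5.39),
    "far (2.25-3.50 km)": (27.01, 17.98),
}


def relative_deviation_pct(deviation, reference=ANNUAL_MEAN_EMISSION):
    """Deviation expressed as a percentage of the reference emission intensity."""
    return 100.0 * deviation / reference


for section, (mean_dev, spread) in SECTION_DEVIATIONS.items():
    print(f"{section}: {relative_deviation_pct(mean_dev):.1f}% "
          f"(spread {relative_deviation_pct(spread):.1f}%)")
```

On this basis the middle section deviates by a few percent of the annual mean, while the near and far sections deviate by well over ten percent.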

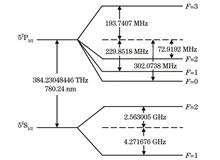

Objective
High-spectral-resolution LiDAR (HSRL) is essential for precise detection and retrieval of aerosol optical properties, making it a valuable tool in atmospheric aerosol studies. While the HSRL technique has seen rapid advancements at ultraviolet and visible wavelengths (355 nm and 532 nm), development in the near-infrared HSRL domain is constrained by the limitations of spectral discriminators. In 2017, the National Center for Atmospheric Research (NCAR) proposed a 780-nm near-infrared micro-pulse HSRL technique using rubidium (Rb) atom absorption lines and 780-nm semiconductor lasers. This approach provides a promising solution to the challenges facing near-infrared HSRL and has become a research focal point worldwide. However, the effect of various Rb absorption cell parameters on detection errors in the 780-nm HSRL system remains unexplored. In this paper, we address this gap by analyzing the influence of Rb cell parameters, system signal-to-noise ratio (SNR), and laser frequency stability on detection results, based on the absorption spectrum of the rubidium isotope 87Rb. This study offers theoretical guidance for designing 780-nm near-infrared HSRL systems, particularly in optimizing the temperature settings of the Rb cell spectral discriminator.
Methods
We employ the Monte Carlo method in this analysis. First, the HSRL error formula is derived, and the absorption spectrum is obtained based on the hyperfine structure of rubidium atoms. An error analysis model for the 780-nm HSRL system is then established. Subsequently, a simulated atmospheric model is developed (Fig. 6), incorporating the U.S. Standard Atmosphere Model for background aerosols, urban aerosols, and dust. Using this model, we evaluate the effects of system detection SNR, Rb cell temperature fluctuations, laser frequency stability, and the omission of Mie scattering signal transmittance Ta (Ta=0) on detection errors.
The Monte Carlo method is applied to establish LiDAR equations under the conditions described, enabling backscattering coefficient retrieval based on theoretical derivation. Retrieval errors are then computed to demonstrate the integrated effect. Specifically, the retrieval error of the backscattering coefficient is calculated for an Rb cell operating at 70 ℃ with a ±1 ℃ temperature fluctuation and a laser output frequency fluctuation within 100 MHz.
Results and Discussions
HSRL system measurement accuracy is highly sensitive to the SNR, especially at elevated Rb cell temperatures, which can degrade the molecular channel signal. When the Rb cell temperature exceeds 65 ℃, SNR becomes the primary factor affecting measurement results, with retrieval errors reaching up to 20%. In addition, the retrieval error of the backscattering coefficient increases with higher Rb cell temperature due to decreased Rayleigh echo transmittance (Fig. 9). If the Rb cell temperature fluctuation is within ±1 ℃ when the temperature exceeds 65 ℃, the influence on backscattering coefficient retrieval error is relatively minor (Fig. 12). Higher Rb cell temperatures can also help reduce the measurement error from temperature fluctuations. With an Rb cell temperature above 65 ℃ and Mie scattering transmittance Ta set to zero, the backscattering coefficient retrieval error remains below 1%. Moreover, higher Rb cell temperatures correlate with reduced retrieval error at higher aerosol concentrations. Finally, fluctuations in laser source frequency significantly influence retrieval results. When frequency fluctuations reach 1 GHz, retrieval errors exceed 10%, even in the absence of other error factors. By contrast, at a 70 ℃ operating temperature with a 100 MHz frequency fluctuation range, the relative retrieval error reduces to 0.1% (Fig. 14).
Conclusions
The operational temperature of the 87Rb absorption cell critically influences HSRL system retrieval accuracy.
With an absorption cell length of 63 mm, the recommended temperature range is 65 ℃ to 75 ℃. Within this range, system SNR, laser frequency stability, and Rb cell temperature stability are vital factors influencing detection accuracy. The simulation results demonstrate that when the 87Rb absorption cell is 63 mm in length, operating at 70 ℃ with laser frequency stability within 100 MHz, the comprehensive retrieval deviation of the backscattering coefficient remains below 10% (Fig. 15).
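The way channel noise propagates into the retrieved backscattering coefficient can be illustrated with a simplified two-channel model. The sketch below is not the error model of the paper: it assumes a combined channel S_c = β_a + β_m and a molecular channel S_m = Ta·β_a + Tm·β_m, ignores extinction, overlap, and calibration constants, and uses hypothetical transmittances (Tm for the Rayleigh signal, Ta for the Mie signal) and function names. Solving the two equations gives β_a = β_m (Tm·S_c − S_m) / (S_m − Ta·S_c).

```python
import numpy as np


def retrieve_beta_a(s_comb, s_mol, beta_m, t_m, t_a=0.0):
    """Aerosol backscatter from the two-channel model (t_a=0 omits Mie leakage)."""
    return beta_m * (t_m * s_comb - s_mol) / (s_mol - t_a * s_comb)


def mc_relative_error(snr, beta_a=2.0, beta_m=1.0, t_m=0.3, t_a=1e-4,
                      n_trials=20000, seed=0):
    """Mean absolute relative retrieval error for a given channel SNR."""
    rng = np.random.default_rng(seed)
    s_comb = beta_a + beta_m                      # noise-free combined channel
    s_mol = t_a * beta_a + t_m * beta_m           # noise-free molecular channel
    noisy_comb = s_comb * (1.0 + rng.normal(0.0, 1.0 / snr, n_trials))
    noisy_mol = s_mol * (1.0 + rng.normal(0.0, 1.0 / snr, n_trials))
    estimate = retrieve_beta_a(noisy_comb, noisy_mol, beta_m, t_m, t_a)
    return np.mean(np.abs(estimate - beta_a)) / beta_a


for snr in (50, 200, 500):
    print(snr, mc_relative_error(snr))
```

Lowering `t_m` in this toy model mimics a hotter Rb cell that attenuates more of the Rayleigh return, and calling `retrieve_beta_a` with `t_a=0.0` on signals generated with a small nonzero Ta shows the bias introduced by omitting the Mie transmittance.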

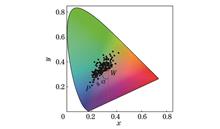

Objective
Water color is a fundamental parameter for describing the optical properties of water bodies and encapsulates vital information about the aquatic environment. As the most visually direct indicator in marine surveys, water color not only reflects changes in the aesthetic quality of water bodies but also plays a key role in environmental impact assessments, especially in sensitive areas. Water color is influenced by light scattering and changes in environmental conditions, and it is closely related to factors such as chlorophyll, suspended particulate matter (SPM), and the absorption and scattering of colored dissolved organic matter. Previous research has mainly relied on the Forel-Ule index (FUI) for measuring water color. However, due to the complexity and variability of China’s coastal waters, the FUI may not capture detailed water color information or accurately represent the environmental conditions of the water body. In contrast, the hue angle (α) in the Commission internationale de l’éclairage (CIE) color system, as a continuous variable, provides a more accurate representation of water color characteristics and helps extract detailed water quality information. Monitoring water color not only provides vital information for global and regional water quality assessments but also plays a crucial role in marine environmental protection and the maintenance of ecological balance.
Methods
Based on data collected during research cruises, including Secchi depth (Zsd), mass concentration of suspended particulate matter (SPM), and phytoplankton absorption coefficient (aph), we develop inversion models for Zsd, SPM, aph (443), and aph (670) using the hue angle as the key variable. These models are validated using the leave-one-out cross validation method. Utilizing hyperspectral remote sensing reflectance (Rrs) data collected during the cruise, we perform stepwise regression analysis with SPSS software.
The hue angle serves as the dependent variable, while Rrs values at the central spectral bands of MODIS act as the independent variables. The data are divided so that two-thirds of the data are used to calculate the chroma parameter K. Finally, long-term hue angle information for China’s coastal waters is obtained using satellite Rrs data. This study also compares the effectiveness of the hue angle and the FUI in characterizing changes in water color parameters based on cruise-measured data. The results reveal that the hue angle provides a more detailed and continuous representation of variations in water color parameters.
Results and Discussions
This study uses Rrs data from MODIS and SeaWiFS to obtain long-term hue angle information for China’s coastal waters, utilizing a method that extracts hue angles from multispectral Rrs (Fig. 6). The highest hue angles are recorded near the coast (around 200°), with values decreasing offshore. The Bohai Sea has the highest average hue angle (180°), followed by the Yellow Sea, which also shows the most significant seasonal variation, with offshore values ranging from 70° to 140°. The East China Sea has the lowest average hue angle (60°) and the least seasonal fluctuation. Seasonal patterns are observed, with hue angles decreasing from spring to summer, reaching their lowest in summer, then increasing in autumn and peaking in winter. In certain characteristic sea areas, the hue angle shows strong covariation with water quality parameters such as the mass concentrations of Chl-a and SPM. In areas with high hue angles, a significant correlation is observed between hue angles and the mass concentration of SPM, while in areas with lower hue angles, strong covariation is observed among hue angles, the mass concentration of SPM, and the mass concentration of Chl-a (Fig. 7). Using in-situ data from the East China Sea, we develop models for several water quality parameters based on hue angles and validate their accuracy using the leave-one-out cross validation method.
This approach can be applied to portable high-definition imaging devices, such as smartphones and digital cameras, to capture ocean water color images, extract hue angle information, and obtain water quality data. A comparison of the hue angle (α) and FUI reveals that the discrete nature of FUI leads to the loss of water color information (Fig. 8). In contrast, the continuous nature of the hue angle captures more detailed color data (Figs. 9 and 10).
Conclusions
We apply the CIE-XYZ color system and a hue angle retrieval method based on satellite multispectral remote sensing reflectance. By combining data from the MODIS and SeaWiFS satellite sensors, we obtain a long-term dataset of hue angle for China’s coastal waters. The study reveals that the hue angle exhibits distinct spatiotemporal distribution characteristics. The Bohai Sea has the highest monthly average (180°), followed by the Yellow Sea (100°), both showing significant seasonal variability. In contrast, the East China Sea has a lower monthly average (60°) with minimal seasonal variability. In addition, there is a strong covariation between hue angles and water quality parameters. Based on cruise-measured data, we develop retrieval models for water quality parameters using the hue angle. The results demonstrate that the models for transparency (R²=0.79), suspended particulate matter concentration (R²=0.90), phytoplankton absorption coefficient at 443 nm (R²=0.79), and phytoplankton absorption coefficient at 670 nm (R²=0.80) exhibit high goodness of fit and accuracy. Furthermore, we analyze and discuss the advantages of using the hue angle over traditional water color indices for representing water color information in the complex coastal waters of China. The findings suggest that the hue angle provides a more accurate and effective measure of water color, offering superior capability in conveying aquatic environmental information.
This highlights the potential application value of the hue angle as a parameter for accurately expressing oceanic water environmental information.
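As a concrete illustration of how a hue angle follows from CIE tristimulus values, the sketch below converts X, Y, Z to chromaticity coordinates and measures the angle of the point about the equal-energy white point (1/3, 1/3). The angular origin (positive x-axis) and counterclockwise direction used here are assumptions; the convention in the abstract, where turbid coastal water has the larger angle, may use a different reference axis or rotation direction. The function name is hypothetical, and the conversion from Rrs bands to X, Y, Z is not shown.

```python
import math


def hue_angle_deg(X, Y, Z, white=(1.0 / 3.0, 1.0 / 3.0)):
    """Hue angle in degrees, in [0, 360), about the white point in the CIE xy plane."""
    total = X + Y + Z
    x = X / total                      # chromaticity coordinate x
    y = Y / total                      # chromaticity coordinate y
    angle = math.degrees(math.atan2(y - white[1], x - white[0]))
    return angle % 360.0


# A bluish point: both x and y lie below the white point by the same amount.
print(hue_angle_deg(1.0 / 3.0 - 0.1, 1.0 / 3.0 - 0.1, 1.0 / 3.0 + 0.2))
```

Because the result is a continuous number rather than one of the discrete FUI classes, small shifts in chromaticity remain visible in the angle, which is the property the abstract relies on when contrasting the two indices.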

Objective
The vertical number density of ozone is retrieved from Ozone Mapping and Profiler Suite (OMPS) limb measurements using wavelength pairing and the multiplicative algebraic reconstruction technique. Our retrieval algorithm uses radiance in the visible (VIS) band to obtain ozone concentrations at altitudes of 12.5‒39.5 km, with a vertical resolution of 1 km. The results are compared with the OMPS/LP v2.6 ozone profile products provided by the National Aeronautics and Space Administration (NASA), showing high consistency (<6%) between 16‒38 km. The correlation coefficient for the total ozone column in the stratosphere is 0.953. Using these retrievals, we investigate changes in stratospheric ozone concentration following the Tonga volcanic eruption and analyze the key physical and chemical processes affecting ozone concentration. The Tonga eruption released a large amount of sulfate aerosols into the middle and lower stratosphere at 20°‒60°S. In the mid-to-low latitudes of the southern hemisphere, the enhanced sulfate aerosols increase ozone concentration in the middle stratosphere while reducing it in the lower stratosphere. Additionally, sulfate aerosols are transported through the Brewer‒Dobson (BD) circulation to Antarctica, where the enhanced Antarctic upwelling and polar stratospheric clouds (PSC) contribute to the enlargement of the polar ozone hole. Ozone plays a crucial role in the evolution of life on Earth. As an important trace gas in the atmosphere, ozone regulates the Earth’s climate, particularly in terms of temperature and energy distribution. The concentration, distribution, and temporal evolution of ozone are therefore closely related to research on ozone layer recovery, stratospheric circulation, and temperature response to increasing greenhouse gases, and obtaining daily global ozone profiles is crucial. In this study, we report the stratospheric ozone concentrations monitored by OMPS, validated against NASA’s OMPS/LP v2.6 products.
Additionally, based on the retrievals, we analyze annual changes in stratospheric ozone before and after the Tonga submarine volcano eruption, as well as the chemical and dynamical mechanisms underlying these changes. This research is crucial for understanding how stratospheric ozone protects life on Earth from harmful ultraviolet radiation. We hope our research can provide a technical foundation for future data products from China’s space-based atmospheric remote sensing limb observations.
Methods
First, the limb radiances of the selected wavelengths are normalized to a reference tangent height that is insensitive to ozone. Using wavelength pairing, we retrieve ozone concentrations at different heights based on the variation in ozone’s absorption of solar radiance in the VIS band. Second, the SCIATRAN radiative transfer model is used to establish limb-simulated radiance. The observed and simulated radiances are transformed into retrieval vectors through radiance normalization and wavelength pairing, respectively. Finally, the multiplicative algebraic reconstruction technique (MART) algorithm is applied iteratively to correct the ozone profile until it converges.
Results and Discussions
To verify our retrievals, we compare them with OMPS/LP v2.6 profiles provided by NASA. The retrieved ozone profiles are in good agreement with OMPS/LP v2.6, with high consistency in structure, peak height, and magnitude [Fig. 5(a)], and a deviation of less than 2% between 18‒31 km [Fig. 5(b)]. The tropical profiles also show good consistency [Fig. 6(a)], with deviations of less than 10% across six latitude bands between 15‒38 km, except for the southern latitude band [Fig. 6(b)]. In addition, the total ozone column in the stratosphere shows a correlation coefficient of 0.953 with OMPS/LP v2.6 (Fig. 7). The retrievals from September 1, 2021, September 1, 2022, and September 1, 2023, are highly consistent with the global map of OMPS/LP v2.6 ozone concentrations (Fig. 8).
Based on our data, we compare ozone concentrations on September 1, October 1, and November 16 from 2021 to 2023 (Fig. 9). We find that in mid-to-low latitudes, the abundance of active nitrogen in the stratosphere decreases due to sulfate aerosols formed after the Tonga volcanic eruption, leading to an increase in ozone concentration in the middle stratosphere. Sulfate aerosols also weaken solar radiation, resulting in negative ozone anomalies in the lower stratosphere. These aerosols are transported southward by the BD circulation, causing significant ozone loss in the lower polar stratosphere (60°S). After over a year of sedimentation, total ozone columns in mid-to-high latitudes of the southern hemisphere recover in 2023 (Fig. 11).
Conclusions
In this study, we use wavelength pairing and the MART algorithm to retrieve the stratospheric ozone profiles from OMPS limb measurements. Our retrievals are validated against OMPS/LP v2.6 products provided by NASA, showing good consistency. Additionally, we analyze the influence and mechanisms of the Tonga submarine volcanic eruption on stratospheric ozone before and after the event. Our results demonstrate the effectiveness of wavelength pairing and MART in retrieving OMPS/LP ozone profiles, providing a solid technical foundation for future applications. However, studying stratospheric ozone recovery requires long-term, consistent datasets, and using the same retrieval scheme is critical in minimizing discrepancies between different satellite data.
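The iterative MART correction can be illustrated on a toy problem. In the sketch below, a small linear forward model with a hypothetical weighting matrix stands in for the SCIATRAN simulation and the paired, normalized radiances, so it shows only the multiplicative update itself: each layer value is scaled by the weighted mean ratio of observed to modelled retrieval vectors. The profile values, matrix, and iteration count are invented for illustration.

```python
import numpy as np


def mart(y_obs, weights, x0, n_iter=200):
    """Multiplicative algebraic reconstruction for a linear model y = weights @ x.

    weights[i, j] is the sensitivity of measurement i to layer j (non-negative).
    """
    x = np.asarray(x0, dtype=float).copy()
    col_sum = weights.sum(axis=0)
    for _ in range(n_iter):
        y_mod = weights @ x                       # forward-modelled measurements
        ratio = y_obs / y_mod                     # observed / modelled per measurement
        x *= (weights.T @ ratio) / col_sum        # weighted mean ratio per layer
    return x


# Toy setup: five layers, each measurement most sensitive to its own layer.
idx = np.arange(5)
weights = np.exp(-2.0 * np.abs(idx[:, None] - idx[None, :]))
x_true = np.array([2.0, 3.0, 5.0, 4.0, 2.5])      # illustrative profile, arbitrary units
y_obs = weights @ x_true
x_retrieved = mart(y_obs, weights, x0=np.ones(5))
print(np.max(np.abs(x_retrieved - x_true) / x_true))
```

Because the update is multiplicative, a positive first guess stays positive throughout, which suits number-density profiles. In a real retrieval the forward model is nonlinear and would be re-evaluated at each iteration.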

ObjectiveCarbon dioxide (CO2) and water vapor (H2O) play crucial roles in global climate change through the carbon-water cycle. Semi-heavy water, as a stable isotope of water vapor, helps deepen our understanding of the water cycle process. A large number of active and passive remote sensing technologies based on absorption spectroscopy have been applied to the detection of atmospheric CO2 and H216O. However, passive remote sensing, which relies on sunlight, cannot provide continuous day-and-night monitoring, while active remote sensing based on differential absorption LiDAR (DIAL) can effectively compensate for this limitation. Research has shown that H216O is the primary interfering gas in CO2 detection that causes measurement errors. Since dual-wavelength DIAL can only measure one type of gas, the interference effect of H216O on CO2 is minimized by selecting an appropriate absorption line, where the absorptions of H216O at the on-line and off-line wavelengths are almost equal. However, HD16O, a stable isotope of H216O, also affects the detection accuracy of CO2, though this is rarely addressed. In addition, in terms of vertical height, especially within the troposphere, H2O and atmospheric parameters can vary significantly. To date, no DIAL system can simultaneously provide vertical profile measurements for CO2 and HD16O, and there has been limited theoretical analysis and feasibility verification of this. In the present study, we report the sensitivity analysis of simultaneous remote sensing of atmospheric CO2 and HD16O profiles using LiDAR. This analysis is based on the MODTRAN atmospheric model. This sensitivity analysis aids in improving the inversion algorithm for the concentration profiles of CO2 and HD16O, which is significant for enhancing the detection capability and inversion accuracy of both ground-based and airborne DIAL systems. 
It also provides a theoretical framework for accurate remote sensing of greenhouse gases and a deeper understanding of climate change in the context of carbon neutrality.MethodsThe sensitivity analysis of simultaneous remote sensing of atmospheric CO2 and HD16O profiles using LiDAR, based on the MODTRAN atmospheric model, is conducted. First, the mixed spectral lines of CO2 and HD16O under different atmospheric models are calculated using HITRAN spectroscopy parameters, and the feasibility of simultaneous measurement of these two gases by LiDAR is verified by selecting appropriate absorption lines. Next, considering the variation of atmospheric parameters with vertical height, the column and range-resolved concentration inversion errors of CO2 and HD16O are evaluated based on the optimization of weight functions and spectral line shapes. Further investigation is conducted on atmospheric factors, the frequency stability of LiDAR laser emissions, the overlapping effects of CO2 and HD16O, and the influence of altitude changes on concentration inversion errors.Results and DiscussionsIn response to potential sources of error in the inversion of column and range-resolved concentrations of CO2 and HD16O, we comprehensively consider atmospheric factors, laser frequency stability, the overlap effect of the two gases, and altitude-induced changes in concentration inversion. When the temperature variation is ±1 K, the column concentrations of CO2 and HD16O reach their maximum temperature sensitivity of 0.18% and 0.09% at altitudes of 12.6 km and 23.4 km, respectively. The range-resolved concentrations of the two gases show maximum sensitivity of 0.21% and 0.38% near the tropopause. At a pressure variation of ±0.5 hPa, the sensitivity of both column and range-resolved concentrations of CO2 and HD16O gradually increases with altitude. 
The column concentration errors for CO2 and HD16O reach 0.33% and 0.03% at the top of the stratosphere, while the range-resolved concentration errors reach 0.54% and 0.83% at 20 km. The frequency sensitivity for CO2 column and range-resolved concentrations is generally higher than that of HD16O, and the frequency sensitivity at the center of both gas absorption lines is close to zero at any altitude. When the H2O mixing ratio variation is 5%, errors in CO2 column and range-resolved concentrations due to overlapping effects decrease with increasing altitude, reaching 0.15% and 0.01% at sea level in tropical and sub-arctic winter models, respectively. For altitudes greater than 5 km, the range-resolved concentration error of CO2 at the line centers for all atmospheric models is less than 0.001%, and the error caused by the overlapping effect can be ignored. When the tropical model and the 1976 U.S. standard atmospheric model are used, without considering the absorption of HD16O, the errors for the range-resolved CO2 concentrations at sea level are 1.87% and 0.60%, respectively. Even under the sub-arctic winter model, the column concentration error at the line center reaches 0.17%, which confirms the non-negligible role of HD16O in CO2 inversion. In addition, with an 80 dB signal-to-noise ratio at the LiDAR origin in mid-latitude regions, the altitude sensitivity of CO2 and HD16O column concentrations at the top of the troposphere is 0.10% and 0.18%, respectively, while the sensitivity of range-resolved concentrations is 1.1% and 6.3%, respectively.ConclusionsTo meet precise greenhouse gas monitoring requirements, we conduct a theoretical analysis on the simultaneous remote sensing of atmospheric CO2 and HD16O using LiDAR. Based on the MODTRAN atmospheric model, independent and mixed optical depth spectra of CO2, H216O, and HD16O are derived under different models. 
The absorption lines suitable for detecting CO2 and HD16O are identified by analyzing the relative absorption intensities and spectral parameters of each gas. The spectral broadening of CO2 and HD16O caused by collision and Doppler effects is calculated based on temperature and pressure profiles from the 1976 U.S. standard atmospheric model. The two spectra exhibit Voigt line shapes in the altitude ranges of 9.4‒34.5 km and 8.3‒33.8 km, and their absorption lines are optimized for different altitudes. The systematic errors in range-resolved and column concentrations of CO2 and HD16O, considering factors like atmospheric conditions, laser frequency stability, and the overlapping effect of the two gases, are analyzed. Our findings confirm that HD16O plays a critical role in CO2 inversion at the R16 line. Considering the errors introduced by these factors, inversion accuracy for CO2 and HD16O column concentrations of better than 1% and 2%, respectively, and range-resolved concentrations of 2% and 8%, respectively, can be achieved in the troposphere of mid-latitudes.
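For readers unfamiliar with how a range-resolved concentration follows from on-line and off-line returns, the standard two-wavelength DIAL relation can be sketched as below. This is a minimal illustration, not the inversion used in the study: it assumes a uniform gas density, identical backscatter and background extinction at both wavelengths, and hypothetical cross sections (`sigma_on`, `sigma_off`); interference from a second absorber such as HD16O is deliberately left out.

```python
import numpy as np

def dial_number_density(p_on, p_off, delta_sigma, delta_r):
    """Number density (m^-3) between adjacent range gates from on/off-line echoes.

    N = ln[P_on(R1) P_off(R2) / (P_on(R2) P_off(R1))] / (2 * delta_sigma * delta_r)
    """
    p_on = np.asarray(p_on, dtype=float)
    p_off = np.asarray(p_off, dtype=float)
    log_ratio = np.log((p_on[:-1] * p_off[1:]) / (p_on[1:] * p_off[:-1]))
    return log_ratio / (2.0 * delta_sigma * delta_r)

# Synthetic, noise-free echoes for a uniform gas layer (all values hypothetical).
gate = 150.0                                   # range gate length, m
ranges = np.arange(gate, 3000.0, gate)         # range to each gate, m
n_true = 1.0e22                                # number density, m^-3
sigma_on, sigma_off = 6.0e-27, 1.0e-27         # absorption cross sections, m^2

def echo(sigma):
    # Two-way Beer-Lambert attenuation with 1/R^2 geometric falloff.
    return np.exp(-2.0 * sigma * n_true * ranges) / ranges**2

n_hat = dial_number_density(echo(sigma_on), echo(sigma_off),
                            sigma_on - sigma_off, gate)
```

The geometric and instrument factors cancel in the ratio, so only the differential cross section and the gate length enter the result; errors in either (for example through temperature, pressure, or laser frequency drift) propagate directly into the retrieved density.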

ObjectiveWhen a laser beam propagates through the atmosphere, it encounters atmospheric turbulence that distorts the laser wavefront, resulting in turbulence effects such as beam drift, spot expansion, scintillation, and fluctuations in the angle of arrival. In studies of these turbulence effects, Fried’s atmospheric coherence length (r0) expresses changes in both the amplitude and phase of light waves and serves as a representation of the overall intensity of atmospheric turbulence, making it a standard metric for quantitative description. We propose the use of the differential image motion monitor (DIMM) to measure this parameter. During the measurement, relevant parameters, including the device’s exposure time, must be specified. Since the turbulence freezing time varies across different regions, the selected exposure time for measuring atmospheric coherence length also varies. The different exposure times of the equipment affect the measurement results. Therefore, we propose a dual-camera synchronous measurement scheme based on a beam-splitting prism, which is built upon the traditional DIMM, to construct a system for measuring the optimal exposure time. This system employs a beam-splitting prism with a 50∶50 splitting ratio to achieve comparative measurements of different exposure times under “atmospheric freezing” conditions. Through comparative experiments and evaluation methods based on the ratio of system uncertainty and centroid variance, the optimal exposure time is selected.MethodsWe first summarize the theory of atmospheric coherence length, the relationship between coherence length and exposure time, and the theoretical basis for selecting exposure time. Based on this, we improve the existing atmospheric coherence length measurement instrument (aperture of 280 mm, focal length of 2800 mm, sub-aperture center distance of 205 mm, sub-aperture diameter of 61 mm) by using a beam-splitting prism to achieve dual-camera synchronous measurement. 
Experiments are conducted using this system to collect image data generated during the process. Since the star images exhibit Gaussian spot characteristics, we propose an image processing method based on centroid extraction. This method first locates and separates the star spots using adaptive threshold segmentation and morphological operations, and then calculates the centroid position through a grayscale-weighted subpixel subdivision algorithm, achieving subpixel accuracy in star centroid extraction. Based on the centroid coordinates and theoretical formulas, we derive the atmospheric coherence length parameter. Next, we analyze the effect of different exposure times on the system’s measurement results. By comparing the centroid variance ratio and system uncertainty ratio between the experimental and control groups, we evaluate the measurement accuracy under different exposure times and determine the optimal exposure time under local measurement conditions. Finally, we compare the selected optimal exposure time measurement results with long-term measurement results under the conventional exposure time, confirming that the optimal exposure time significantly improves measurement accuracy and system performance. This study provides theoretical support and experimental evidence for the accurate measurement of atmospheric coherence length and offers a reference for optimizing exposure time selection in various measurement scenarios.Results and DiscussionsIn the synchronous measurement system (as shown in Fig. 1), we implement an adaptive exposure time measurement function. Through this system, we dynamically adjust the exposure time to adapt to changes in atmospheric turbulence. Statistical analysis of the star image centroid extraction (Fig. 3) demonstrates that our proposed method can accurately extract the centroid of the spots, further validating the precision and stability of centroid extraction. 
Regarding the selection of the optimal exposure time, we evaluate measurement performance at different exposure times through statistical analysis of system errors and centroid variance (Fig. 6). The analysis results show that when the exposure time is 5 ms, the system error is minimized, and the centroid variance fully reflects changes in atmospheric turbulence. Therefore, for measuring atmospheric coherence length in the Changchun region, we recommend using 5 ms as the standard exposure time. Subsequent long-term measurement data comparisons further validate the effectiveness of this choice. The 5 ms exposure time captures richer atmospheric turbulence signals and accurately reflects the dynamic turbulence changes at the time. This provides a reliable experimental basis for efficient measurement of the system in practical applications.ConclusionsWe propose a dual-camera synchronous measurement scheme based on a beam-splitting prism, constructing a system to determine the optimal exposure time for measuring atmospheric coherence length in this region. The scheme uses a beam-splitting prism to replace the original single detector with two detectors, ensuring that each detector receives the same amount of energy. By simultaneously detecting atmospheric turbulence with both detectors, we conduct comparative experiments under atmospheric freezing conditions, avoiding the impact of continuously changing turbulence on the experiment. We process the images collected by the detectors for centroid extraction and calculate the atmospheric coherence length at different exposure times. By analyzing the system’s measurement accuracy and the variance of the spot centroids, we determine the optimal exposure time. Our research results indicate that a 5 ms exposure time should be chosen for measuring local atmospheric coherence length. 
Under strong wind conditions, we suggest an exposure time of 4 ms to better capture high-frequency signals, while in windless conditions, we consider 6 ms. Comparative measurements of local atmospheric coherence length at these exposure times verify that the exposure time derived from our experimental conclusions can better reflect changes in atmospheric turbulence. This study is of great significance for improving the accuracy of atmospheric coherence length measurements.
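The two processing steps described above, grayscale-weighted centroid extraction and conversion of differential centroid variance into r0, can be sketched as follows. This is an illustrative sketch rather than the authors' pipeline: adaptive thresholding and morphological separation are reduced to a single fixed threshold, the conversion uses the widely quoted Sarazin-Roddier longitudinal DIMM relation, and the 550 nm wavelength is an assumption (the abstract does not state it). The sub-aperture diameter (61 mm) and separation (205 mm) are taken from the text.

```python
import numpy as np

def weighted_centroid(img, threshold=0.0):
    """Grayscale-weighted centroid (x, y) in pixels, using intensity above a threshold."""
    img = np.asarray(img, dtype=float)
    w = np.where(img > threshold, img - threshold, 0.0)
    ys, xs = np.indices(img.shape)
    total = w.sum()
    return (xs * w).sum() / total, (ys * w).sum() / total

def dimm_constant(wavelength, sub_diameter, separation):
    """K in var_l = K * r0**(-5/3), longitudinal (along-baseline) motion, rad^2 units."""
    return 2.0 * wavelength**2 * (0.179 * sub_diameter**(-1.0 / 3.0)
                                  - 0.0968 * separation**(-1.0 / 3.0))

def r0_from_variance(var_rad2, wavelength, sub_diameter, separation):
    """Fried parameter (m) from the variance of differential centroid motion (rad^2)."""
    k = dimm_constant(wavelength, sub_diameter, separation)
    return (k / var_rad2) ** (3.0 / 5.0)

# Synthetic Gaussian star spot with a known sub-pixel center (hypothetical values).
ys, xs = np.indices((32, 32))
spot = np.exp(-((xs - 15.3) ** 2 + (ys - 16.7) ** 2) / (2.0 * 1.5 ** 2))
cx, cy = weighted_centroid(spot)

# Round trip: variance implied by r0 = 10 cm, then recover r0 from that variance.
lam, D, d = 550e-9, 0.061, 0.205               # lam is an assumed wavelength
var_l = dimm_constant(lam, D, d) * 0.10 ** (-5.0 / 3.0)
r0_hat = r0_from_variance(var_l, lam, D, d)
```

In practice the variance is estimated from a time series of centroid separations converted to angle through the pixel scale, and the exposure time enters because long exposures average out fast image motion and bias the variance low.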

ObjectiveAccurate measurement of seawater absorption coefficients is important for ocean radiative transfer simulations, biogeochemical parameter inversion, and calibration and validation of ocean color satellites. Reflective-tube absorption meters are the most commonly employed instruments for seawater absorption coefficient measurement, but the measured absorption coefficient must be corrected for scattering due to instrumental design limitations. A few studies have evaluated the ac-9/ac-s scattering correction methods. However, there is a lack of evaluations of these correction methods in the coastal waters of China. We evaluate the ac-9/ac-s scattering correction methods based on field measurements from China’s coastal seas. Based on the evaluation, guidance is provided for the selection of scattering correction methods when reflective-tube absorption meters are employed in different water bodies.MethodsWe introduce five scattering correction methods and evaluate their performance on field-measured data from the Bohai Sea, Yellow Sea, East China Sea, and South China Sea. The data include the absorption coefficients measured by the reflective-tube absorption meters ac-9 and ac-s and by the point source integrating cavity absorption meter (OSCAR), the backscattering coefficients measured by the backscattering instrument HS6, the volume scattering function measured by LISST-VSF, and the temperature, salinity, and depth data measured by CTD. The absorption coefficients are measured with both ac-9 and ac-s on two cruises to independently evaluate the performance of different scattering correction methods applied to ac-9 and ac-s. In the South China Sea, the absorption coefficient measured by OSCAR is taken as the true value to evaluate the application of scattering correction methods to the ac-s/ac-9 measurements. In the Bohai Sea, Yellow Sea, and East China Sea, there is no synchronous OSCAR measurement. 
For these seas, the absorption coefficient corrected by the volume scattering correction method is used as the reference for evaluating the other scattering correction methods. Since the volume scattering function is measured independently, integrating it over the limited angular range gives the scattering error of the reflective-tube absorption meter directly, which makes this method a more accurate scattering correction method. Prior to each cruise, all instruments undergo rigorous calibration, including pressurized flow ultra-pure water calibration for ac-s and ac-9 in the laboratory, integrator cavity reflectivity calibration for OSCAR, and calibration for HS6 to ensure the accuracy of field measurements.Results and DiscussionsThe results show that for the clear water in the South China Sea, little difference is found among different scattering correction methods. The baseline method and volume scattering correction method have better correction effects, with relative errors after correction of 25.05% and 23.24%, respectively. The relative error of the semi-empirical correction method is 36.01%. The proportional method and the iterative correction method perform poorly. In the Bohai Sea, the proportional method and semi-empirical correction method perform better, with relative errors of 29.22% and 25.02%, respectively. After correction by the baseline method and the iterative method, the results in each band deviate considerably from the reference value. In the Yellow Sea, the proportional method performs relatively well, with a relative error of 23.17%. The baseline method and the semi-empirical correction method perform similarly, with relative errors after correction of 30.94% and 31.68%, respectively. In the East China Sea, the semi-empirical correction method has the best correction effect, with a relative error after correction of 14.71%, followed by the proportional method. 
Additionally, the relative error after data correction is 24.02%, and the baseline method performs slightly worse.ConclusionsWe evaluate five representative scattering correction methods for reflective-tube absorption coefficient measurement based on field data from several regions of China’s seas. These methods include the baseline method, proportional method, semi-empirical correction method, iterative correction method, and volume scattering function correction method. Generally, all these scattering correction methods can reduce the scattering error from reflective tubes and bring the absorption coefficient closer to the true value. However, the performance of each method varies between different types of water. Based on the analysis results of all the data, our suggestions for selecting scattering correction methods for the reflective-tube absorption meters are as follows. The baseline method is more suitable for clear water bodies (the South China Sea), the semi-empirical correction method is suited for turbid water (the Bohai Sea, the Yellow Sea, the East China Sea, and coastal water), and the volume scattering method is preferred if the measured volume scattering function of water is available.
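To make the difference between two of the evaluated corrections concrete, the sketch below implements the baseline and proportional methods in their commonly cited forms. It is an illustration under stated assumptions, not the processing code of the study: a near-infrared reference band is assumed to have zero true absorption, the input spectra are invented numbers, and the semi-empirical, iterative, and volume scattering function methods are not shown.

```python
import numpy as np

def baseline_correction(a_meas, ref_index):
    """Baseline method: subtract the measured absorption at the reference band."""
    a_meas = np.asarray(a_meas, dtype=float)
    return a_meas - a_meas[ref_index]

def proportional_correction(a_meas, c_meas, ref_index):
    """Proportional method: scale the reference-band error by the measured scattering."""
    a_meas = np.asarray(a_meas, dtype=float)
    c_meas = np.asarray(c_meas, dtype=float)
    b_meas = c_meas - a_meas                    # measured scattering, b = c - a
    return a_meas - a_meas[ref_index] * b_meas / b_meas[ref_index]

# Hypothetical three-band example (m^-1); the last band is the reference band.
a_m = np.array([0.5, 0.3, 0.1])                 # uncorrected absorption
c_m = np.array([2.5, 1.3, 1.1])                 # beam attenuation
a_base = baseline_correction(a_m, ref_index=-1)
a_prop = proportional_correction(a_m, c_m, ref_index=-1)
```

The baseline method removes a spectrally flat offset, whereas the proportional method lets the scattering error follow the spectral shape of the measured scattering. The two therefore diverge most in bands where scattering differs strongly from its reference-band value, as in the first band of this example.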

ObjectiveLidar is a powerful tool for detecting atmospheric temperature, humidity, and aerosols. The lidar echo signal forms the basis for retrieving these parameters. Currently, data acquisition cards with fixed sampling rates are widely used to sample lidar echo signals. However, the bandwidth of the lidar echo signal is much larger than the sampling rate of the acquisition card, which inevitably leads to distortion of the signal. In addition, high-speed data sampling consumes substantial resources for data acquisition, storage, and processing, which is particularly problematic in space-borne lidar systems. With the development of digital signal processing technologies, compressed sensing has found widespread application in data compression, noise reduction, and other fields. We aim to reconstruct the lidar echo signal precisely with fewer sampling points using compressed sensing.MethodsIn this paper, compressed sensing theory is used to reconstruct the lidar echo signal with fewer sampling points. First, the sparsity of the lidar echo signal is analyzed using the db4 wavelet base. Then, based on the wavelet basis and the orthogonal matching pursuit (OMP) algorithm, the downsampling and compressed reconstruction of the lidar echo signal are investigated under different noise conditions. The error between the reconstructed and original lidar echo signals is analyzed in detail. Finally, the extinction coefficient of aerosols below 10 km is retrieved using the reconstructed lidar echo signal through the Fernald method, and the error between the retrieved extinction coefficient and the standard atmosphere model is analyzed. The validity of the reconstructed lidar echo signal for data retrieval is confirmed.Results and DiscussionsIn the wavelet decomposition, three decomposition layers are used, with 0.01% of the maximum value of the wavelet coefficient set as the threshold. 
The research results show that most of the wavelet decomposition coefficients are close to zero, which indicates the sparsity of the lidar echo signal in the wavelet basis (Fig. 3). When the lidar detection height is 10 km, the total number of decomposition coefficients is 10020, with 1238 non-zero coefficients after thresholding. Using these non-zero coefficients, the lidar echo signal is reconstructed through inverse wavelet transform. The similarity of the reconstructed lidar echo signal is as high as 0.999, and the root-mean-square error is 8.666×10^-17, further confirming the high sparsity of the lidar echo signal in the wavelet basis (Fig. 4). Based on the high sparsity of the lidar echo signal, downsampling and compressed reconstruction are performed under different noise conditions. The results show that the lidar echo signal can be reconstructed using fewer sampling points through compressed sensing. The signal-to-noise ratio (SNR) and the similarity of the reconstructed signal improve as the SNR of the input signal increases. When the data compression ratio is 5∶1 and the input signal’s SNR exceeds 25 dB, the similarity between the reconstructed and original signals is greater than 0.997 (Table 3). The SNR of the reconstructed signal is higher than that of the input signal, indicating that the OMP algorithm has a certain denoising effect (Fig. 8). Finally, the extinction coefficient of aerosols below 10 km is retrieved using the reconstructed lidar echo signal through the Fernald method. The lidar echo signal is reconstructed from the input signal, with an SNR of 30 dB. The profile of the extinction coefficient is consistent with the standard atmosphere model. The relative error of the retrieved extinction coefficient at different heights is below 15.81%, confirming the validity of the reconstructed lidar echo signal for retrieving aerosol extinction coefficients (Fig. 11).
ConclusionsFirst, the high sparsity of the ground-based lidar echo signal is confirmed. The results show that when the detection distance is 10 km, the sparsity of the lidar echo signal in the wavelet basis is only 1238. Second, the lidar echo signal can be accurately reconstructed using the orthogonal matching pursuit algorithm. The SNR of the input signal significantly affects the reconstruction quality. When the data compression ratio is 5∶1 and the SNR of the input signal exceeds 25 dB, the similarity between the reconstructed and original signals exceeds 0.997, and the root-mean-square error is less than 1.769×10^-13. Finally, the extinction coefficient of aerosols below 10 km is retrieved using the reconstructed lidar echo signal through the Fernald method. When the input signal’s SNR is 30 dB, the absolute error in the retrieved aerosol extinction coefficient is less than 2.440×10^-7 m^-1, and the relative error is less than 15.81%, which verifies the effectiveness of the reconstructed signal for retrieving aerosol extinction coefficients.
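The reconstruction step can be illustrated with a compact orthogonal matching pursuit sketch. It is a toy under stated assumptions, not the configuration of the study: a random Gaussian sensing matrix stands in for the product of the downsampling operator and the db4 wavelet basis, the sparse vector has three non-zero coefficients instead of 1238, the measurements are noise-free, and the compression ratio is 2∶1 rather than 5∶1.

```python
import numpy as np

def omp(A, y, n_nonzero):
    """Orthogonal matching pursuit: greedy recovery of a sparse s with y ≈ A @ s."""
    residual = y.copy()
    support = []
    coef = np.zeros(0)
    for _ in range(n_nonzero):
        # Pick the column most correlated with the current residual.
        j = int(np.argmax(np.abs(A.T @ residual)))
        if j not in support:
            support.append(j)
        # Re-fit all selected coefficients by least squares, then update the residual.
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
    s_hat = np.zeros(A.shape[1])
    s_hat[support] = coef
    return s_hat

rng = np.random.default_rng(0)
n_coeffs, n_meas = 128, 64                      # 2:1 compression in this toy case
A = rng.standard_normal((n_meas, n_coeffs))
A /= np.linalg.norm(A, axis=0)                  # unit-norm columns

s_true = np.zeros(n_coeffs)                     # hypothetical sparse coefficients
s_true[[5, 20, 41]] = [2.0, -1.5, 1.0]
y = A @ s_true                                  # compressed measurements
s_hat = omp(A, y, n_nonzero=3)
```

With noisy measurements the least-squares re-fit over a small support is what gives OMP its mild denoising behavior, since noise components outside the selected columns are discarded.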

ObjectiveThe in-situ measurement of cloud microphysics parameters such as cloud droplet spectrum and particle number concentration, is of great significance to research in cloud and precipitation physics, weather modification, and optoelectronic engineering for national defense. Currently, various methods for observing cloud microscopic characteristics have been developed, combining space-based in-situ observation, ground-based remote sensing, and space-based remote sensing. Due to differences in data and the theoretical basis among different inversion methods, significant variations exist among different cloud inversion products. Scholars typically aim to validate the accuracy of various remote sensing inversion products using in-situ measurements of cloud parameters. The measurement of cloud particles using research aircraft is a relatively representative in-situ method. However, the high-speed motion of the aircraft can cause particle breakup, and the rapid changes in the flow field can lead to errors in particle spectrum measurement. Additionally, due to safety concerns, research aircraft cannot operate in harsh environments such as thunderstorm centers and supercells. Lightweight cloud particle detectors, mounted on balloons and UAVs, have garnered increasing attention as an important supplementary method for in-situ measurements. Therefore, we design a lightweight cloud particle imager (LCPI) based on forward scattered light imaging. The LCPI can meet the needs of both ground-based and balloon-borne observations.MethodsLCPI primarily utilizes the Tyndall effect to capture particle images in dark-field conditions. LCPI is mainly composed of three parts: a ring-shaped light source, a magnifying lens, and an imaging unit (CMOS). Designed for field experiments, LCPI features a lightweight and miniaturized design, weighing less than 2 kg and measuring 110 mm (base diameter) ×310 mm (height). 
The CMOS has a resolution of 640 pixel×480 pixel with a pixel size of 3.75 μm. A magnifying lens with a magnification of 2.5 is used in front of the CMOS, which results in a theoretical resolution of 1.5 μm and an effective sampling field reduced to 960 μm×720 μm. The ring-shaped light source consists of 8 high-brightness white LEDs with uniform specifications, mounted on a circular base to minimize beam divergence. When there are no cloud particles in the sampling volume, the 8 illumination beams intersect at the front of the magnifying lens and illuminate the sampling volume. Since light cannot directly enter the CMOS, the image appears visually pure black in this scenario. As cloud particles enter the sampling volume with airflow, these 8 illumination beams are scattered by the cloud particles. Forward scattered light from particles forms bright images on the CMOS through the magnifying lens. To avoid repeated sampling of the same particles, sampled air flows out through small holes located beneath the fan.Results and DiscussionsTo verify the accuracy of microphysical characteristic parameters such as particle size, shape, and spectrum distribution of cloud particles detected by LCPI, we first design a high-precision calibration device for it and calibrate the instrument in the laboratory (Fig. 3). The magnification of the imaging system can be directly obtained through magnification calibration, and the actual magnification of LCPI is 2.5 (Fig. 4). Measurement accuracy calibration shows that LCPI can accurately measure particle sizes under sunny, cloudy, and night conditions (Fig. 5). The measurement error of LCPI is within ±10% (Fig. 6). Sampling volume calibration demonstrates that the sampling volume increases linearly with particle diameter (Fig. 7). To further evaluate LCPI’s performance in measuring actual cloud and fog conditions, we conduct a comparative experiment in Lushan Mountain using LCPI and FM-120. 
The experimental results show good agreement between the two instruments (Fig. 9). The consistency in particle number density, volumetric water content, and mean diameter obtained by the two instruments reach 0.9316, 0.8221, and 0.8645, respectively, which indicates that LCPI can accurately measure microscopic cloud characteristics (Fig. 11).ConclusionsIn this paper, we present the calibration and preliminary experimental results of a novel LCPI. Based on the dark-field imaging principle, the instrument uses a ring-shaped light source composed of 8 high-brightness white LEDs to improve the imaging effect of small particles, which effectively solves the problem where small particles are easily submerged by background light and cannot be imaged. To ensure measurement accuracy, we design and complete magnification, measuring accuracy, and sampling volume calibration using a high-precision calibration platform developed for this purpose. The calibration results show that the actual magnification of the detector is 2.5, the measurement error is within ±10%, and there is a linear relationship between sampling volume and particle diameter. The particle size can be accurately measured under different lighting conditions. Comparative observational experiments with LCPI and FM-120 are conducted at Lushan Mountain. The comparison reveals high consistency in cloud particle number density (0.9316), volumetric water content (0.8221), and average diameter (0.8645), which proves the LCPI’s accurate measurement of cloud particle microphysical characteristics. Compared to the FM-120, this instrument can also capture images of particles, which can subsequently be used to study cloud microphysical processes involving particles of various shapes, such as ice clouds and mixed-phase clouds.
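The quoted theoretical resolution and sampling field follow directly from the pixel size, the sensor format, and the magnification. The short check below reproduces that arithmetic; the function name is hypothetical, and the calculation ignores diffraction and lens aberrations, so it gives the object-space pixel footprint rather than a measured optical resolution.

```python
def object_space_sampling(pixel_um, magnification, n_cols, n_rows):
    """Object-space pixel footprint and field of view (all lengths in micrometers)."""
    footprint = pixel_um / magnification        # size of one pixel projected onto the object
    return footprint, n_cols * footprint, n_rows * footprint

# Values taken from the text: 3.75 um pixels, 2.5x magnification, 640 x 480 sensor.
res_um, fov_w_um, fov_h_um = object_space_sampling(3.75, 2.5, 640, 480)
print(res_um, fov_w_um, fov_h_um)   # 1.5 960.0 720.0
```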

ObjectiveWireless UV scattering communication is a technology that leverages the scattering of atmospheric particles to enable wireless communication. Its strong scattering characteristics make it suitable for specialized applications, such as non-line-of-sight (NLOS) communication. However, these same characteristics limit it to short-range transmissions and cause significant path loss. Moreover, atmospheric temperature and pressure variations can lead to fluctuations in the air’s refractive index, causing turbulence and random signal fluctuations at the receiver. To mitigate high path loss and signal scintillation from turbulence, relay-assisted UV optical communication has emerged as an effective solution. Most existing UV relay systems are designed for terrestrial applications, while ground-to-air communications remain underexplored. Unmanned aerial vehicles (UAVs) offer a promising option for mobile air relays due to their high maneuverability, compact design, and low cost. As UAV technology continues to advance, UAV-based communication systems are expected to play a vital role in next-generation wireless networks. UAV-assisted UV communication dynamically optimizes relay positions and establishes flexible NLOS links, which traditional ground relays lack.MethodsIn this paper, we propose a novel framework for analyzing and optimizing a decode-and-forward (DF) relay in UAV-assisted NLOS UV communication systems. The analysis accounts for attenuation losses and atmospheric turbulence. Specifically, the effects of log-normally distributed turbulence on both the source-to-UAV and UAV-to-destination links are evaluated. The probability density function (PDF) of the ground-to-air link is derived to establish closed-form expressions for the end-to-end outage probability and the average bit error rate (ABER) under the DF relay protocol. In addition, we explore the optimal system and channel parameters to enhance the UAV-assisted UV relay system’s performance. 
We evaluate the performance of UAV-assisted NLOS UVC systems, analyze the influence of various system and channel parameters, and provide valuable engineering insights for optimizing UAV-assisted NLOS UVC systems.Results and DiscussionsThe system’s performance is analyzed based on two key metrics: outage probability and ABER. At a power margin of 6 dB, an increase in turbulence intensity from C_n^2=5×10^-15 m^(-2/3) to C_n^2=1×10^-14 m^(-2/3) leads to approximately a fourfold rise in the outage probability for M=1. However, an outage probability of 1.6×10^-6 remains within acceptable performance limits. Interestingly, when atmospheric turbulence intensity rises from C_n^2=5×10^-15 m^(-2/3) to C_n^2=1×10^-14 m^(-2/3), the UAV-assisted system demonstrates improved performance rather than degradation. This is attributed to the UAV’s ability to reduce the effects of turbulence through its flexible positioning (Fig. 11). In addition, the optimal distance between the UAV and the source transmitting node remains nearly constant regardless of changes in turbulence intensity (Fig. 13), indicating that the optimal relay position is unaffected by atmospheric variations.ConclusionsUsing UAVs as relay nodes in UV optical communication systems not only extends communication coverage but also mitigates atmospheric impairments affecting UV signal quality. In this paper, we propose and evaluate a UAV-assisted UV optical communication system using a DF relay under low-altitude turbulence. A log-normal fading model, accounting for both path loss and turbulence-induced fading, is developed. Closed-form expressions for the source-to-destination outage probability and ABER are derived from the PDF of the channel power fading factor. Using these analytical expressions, we investigate the effects of various atmospheric turbulence intensities, system parameters, and channel parameters on the performance of the airborne UV relay system. 
Simulation results show that the proposed system achieves a performance improvement of about 10 dB at an outage probability of $10^{-6}$. The relay position significantly influences system performance, although the optimal relay position remains nearly unchanged as atmospheric turbulence intensity varies.
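
As a rough illustration of how the outage probability of a DF relay link responds to turbulence strength, the following Python sketch assumes that each hop fades log-normally with unit mean and that a hop is in outage when the fade consumes the whole power margin. The function names, the log-amplitude standard deviations, and the simplified two-hop model are assumptions made for illustration; the sketch does not reproduce the closed-form expressions derived in the paper.

```python
import math


def hop_outage(margin_db, sigma_x):
    """Outage probability of one hop under unit-mean log-normal fading.

    Assumed model: h = exp(2X) with X ~ N(-sigma_x**2, sigma_x**2), so E[h] = 1.
    The hop is in outage when h falls below 1 / margin (margin in linear units).
    """
    threshold = 10 ** (-margin_db / 10)
    z = (math.log(threshold) + 2 * sigma_x ** 2) / (2 * sigma_x)
    # Standard normal CDF evaluated at z, written with the complementary error function.
    return 0.5 * math.erfc(-z / math.sqrt(2))


def df_outage(margin_db, sigma_sr, sigma_rd):
    """End-to-end outage of a two-hop DF link: fails if either hop fails.

    Assumes the source-to-relay and relay-to-destination fades are independent.
    """
    p_sr = hop_outage(margin_db, sigma_sr)
    p_rd = hop_outage(margin_db, sigma_rd)
    return 1 - (1 - p_sr) * (1 - p_rd)


if __name__ == "__main__":
    # Hypothetical log-amplitude standard deviations for weaker and stronger turbulence.
    for sigma in (0.15, 0.20, 0.25):
        print(sigma, df_outage(6.0, sigma, sigma))
```

Under these assumptions the end-to-end outage is dominated by the weaker hop, which is consistent with the observation that the relay position strongly influences performance.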

ObjectiveEfficient identification of orbital angular momentum (OAM) modes in vortex beams is critical for enhancing capacity and spectral efficiency in wireless optical communication systems. However, turbulent atmospheric channels pose significant challenges due to phase distortion in vortex beams and the complexity of traditional optical approaches. In this paper, we propose a novel methodology that integrates an enhanced convolutional neural network (CNN)-transformer hybrid model with double-slit interference. The proposed approach enables simultaneous and precise identification of both the magnitude and sign of high-order OAM modes under turbulent atmospheric conditions, offering significant improvements in recognition accuracy and system performance.MethodsTo address the challenges of identifying OAM mode magnitude and sign in turbulent atmospheric environments, we propose a novel method combining an improved CNN-transformer hybrid model with double-slit interference. When Laguerre-Gaussian (LG) beams propagate through turbulent atmospheres and then pass through a double slit, phase distortions produce skewed and twisted interference fringes. These patterns are captured and processed using the proposed CNN-transformer hybrid model, named CACSIV3-Net. The model employs Inception V3 as its backbone and incorporates a coordinate attention module (CAM) to dynamically weight channel relationships and spatial features. In addition, the cross-shaped window transformer (CSWT) is introduced to extract multi-scale features and long-range dependencies, achieving high-precision OAM mode recognition.Results and DiscussionsIn this paper, we propose an improved CNN-transformer hybrid model, CACSIV3-Net, designed to enhance the recognition accuracy of OAM modes in turbulent atmospheric environments. 
To evaluate its performance, we compare CACSIV3-Net with mainstream classification networks (AlexNet, VGGNet, ResNet, and Inception V3) using identical system configurations and hyperparameter settings. Training is conducted on an LG beam double-slit interference dataset across varying atmospheric turbulence conditions. The performance results, illustrated in Fig. 4, show that CACSIV3-Net achieves the highest Top-1 accuracy for OAM modes, reaching 96.45%. This represents improvements of 24.00, 14.25, 11.12, and 5.34 percentage points over AlexNet, ResNet, VGGNet, and Inception V3, respectively. In addition, CACSIV3-Net demonstrates the fastest reduction in average loss within the first 50 epochs and remains converged once the loss approaches 0.1. Comprehensive analysis indicates that CACSIV3-Net offers superior adaptability and higher recognition accuracy for LG beam datasets under turbulence of unknown intensity compared to other networks. To further analyze its components, ablation experiments are conducted by progressively integrating CAM and CSWT to evaluate their influence on OAM mode recognition, with results provided in Fig. 5. As shown in Fig. 5(a), the ROC curve of CACSIV3-Net is closest to the upper-left corner, achieving a micro-averaged area under the curve of 0.79, outperforming Inception V3, Inception V3+CAM, and Inception V3+CSWT. This indicates superior decision-making ability and stability. CACSIV3-Net processes 24, 15, and 7 more images per second than Inception V3, Inception V3+CAM, and Inception V3+CSWT, respectively, reducing the total recognition time by 2.8, 0.9, and 0.4 s, as shown in Fig. 5(b). This demonstrates the higher recognition efficiency of the CACSIV3-Net model. 
The classification performance metrics indicate that incorporating both CAM and CSWT into the Inception V3 model results in optimal performance, with an accuracy of 91.55%, precision of 91.29%, recall of 91.33%, and F1-score of 91.29%, as shown in Fig. 5(c). The confusion matrix shown in Fig. 6 illustrates the prediction performance of CACSIV3-Net across 20 OAM modes, with sparse and low-proportion off-diagonal elements, signifying excellent classification capabilities. Moreover, robustness tests conducted on three newly added test sets under conditions of noise intensity σ=0.1, transmission distance z=2000 m, and beam wavelength λ=850 nm achieve OAM mode recognition accuracies of 80.1%, 85.4%, and 87.95%, respectively, as shown in Table 1 and Fig. 7.ConclusionsIn this paper, we propose an improved CNN-transformer hybrid model integrated with double-slit interference for high-precision OAM mode recognition. By embedding CAM into the Inception V3 backbone and utilizing CSWT, the model captures long-range dependencies and enhances recognition accuracy in turbulent atmospheric environments. The trained model achieves 96.45% accuracy for OAM modes ranging from -10 to +10 at a transmission distance of z=1000 m, an improvement of 4.9 percentage points over the baseline network. In addition, robustness tests are conducted on three newly added test sets under conditions of noise intensity σ=0.1, transmission distance z=2000 m, and beam wavelength λ=850 nm, yielding OAM mode recognition accuracies of 80.1%, 85.4%, and 87.95%, respectively. This method provides a novel and effective solution for high-order OAM mode recognition in turbulent environments, with significant potential for OAM multiplexing communications.
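
To make the reported classification metrics concrete, the following Python sketch shows one common way to obtain accuracy, precision, recall, and F1-score from a multi-class confusion matrix. Macro-averaging over the OAM classes is an assumption here, since the abstract does not state which averaging the authors use, and the function name and the small example matrix are purely illustrative.

```python
def classification_metrics(confusion):
    """Accuracy and macro-averaged precision, recall, and F1-score.

    confusion[i][j] is the number of samples whose true class is i and whose
    predicted class is j. Macro-averaging is an assumption for illustration.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[k][k] for k in range(n))
    precisions, recalls, f1_scores = [], [], []
    for k in range(n):
        true_positive = confusion[k][k]
        predicted_k = sum(confusion[i][k] for i in range(n))  # column sum
        actual_k = sum(confusion[k])                          # row sum
        precision = true_positive / predicted_k if predicted_k else 0.0
        recall = true_positive / actual_k if actual_k else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1_scores.append(f1)
    return {
        "accuracy": correct / total,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1_scores) / n,
    }


if __name__ == "__main__":
    # Hypothetical two-class matrix, not data from the paper.
    print(classification_metrics([[8, 2], [1, 9]]))
```

A confusion matrix with sparse, small off-diagonal entries, as described for Fig. 6, drives all four metrics toward 1.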

ObjectiveFree-space optical communication (FSOC) offers significant advantages in high bandwidth, low latency, electromagnetic interference resistance, and high confidentiality due to its excellent beam characteristics, making it well-suited for long-distance, large-capacity data transmission. However, optical signals inevitably propagate through the Earth’s atmosphere, which significantly influences them. In particular, the scintillation caused by atmospheric turbulence results in received power jitter and inter-symbol interference, leading to a degradation of the signal-to-noise ratio (SNR) and an increase in the bit error rate (BER), which limits the transmission efficiency, stability, and reliability of high-speed optical communication systems. Existing compensation methods often require additional communication feedback links, and for atmospheric turbulent channels with rapidly changing fading characteristics, compensation within the turbulence coherence time is challenging. Utilizing the channel reciprocity property to obtain real-time channel state information can substantially reduce delay, but the actual accuracy of turbulence fading compensation is still limited by device noise and nonlinear effects. In this paper, we propose a real-time scintillation suppression system for atmospheric optical transmission based on fuzzy adaptive control, establishing a correlation model between reciprocal channel state information and optical intensity scintillation. A turbulence scintillation adaptive suppression algorithm is proposed and deployed on a field-programmable gate array (FPGA) platform to improve the pre-compensation of light intensity scintillation at the transmitter. To demonstrate the effectiveness of our approach, we build an experimental system for atmospheric optical transmission scintillation suppression and show that it suppresses amplitude jitter in received optical signals across various atmospheric turbulence environments. 
This represents a breakthrough in stable optical signal transmission technology.MethodsA real-time optical intensity scintillation suppression model is established based on the bidirectional reciprocal channel by leveraging the relationship between channel state information and optical intensity scintillation. By adaptively controlling the transmitted optical power, pre-compensation for optical intensity scintillation at the transmitter is achieved. In this scenario, the optical terminals at both ends of the communication, Alice and Bob, interact with each other. The detector at Alice receives a beacon optical signal from Bob, which is affected by atmospheric turbulence. The system extracts the instantaneous turbulence fading characteristics from the received signal. Based on this, Alice generates an optical power compensation signal to adaptively adjust the transmit power, compensating for the fading the signal will experience in the turbulent channel. The accuracy and real-time performance of the compensation signal generation are crucial for effectively compensating turbulence fading. To address the challenges posed by photoelectric conversion device noise, as well as the nonlinear effects of optical power regulation devices such as optical attenuators and erbium-doped fiber amplifiers, we propose a transmit power adaptive control algorithm to ensure stable control of the devices within the interval over which channel reciprocity holds. The algorithm is implemented on an FPGA, which enables powerful parallel data processing. During each operation cycle, the light intensity jitter signal is filtered and extracted. The optical power compensation value required for steady amplitude transmission control is calculated based on the turbulence fading signal and the feedback control signal. 
The fuzzy PID control algorithm then adjusts the control parameters according to the system state, calculating the optimal compensation signal for the next moment, thus achieving adaptive transmit power control.Results and DiscussionsTo verify the suppression effect of turbulence perturbations, an amplitude jitter suppression experiment based on channel reciprocity is conducted (Fig. 5). The test is set up with bidirectional reciprocity between Alice and Bob, with acquisition cards at both ends sampling at 1 kHz to synchronously capture turbulence fading signals (Fig. 6). The correlation coefficient calculated from these signals shows that the reciprocity of the communication link is maintained above 0.9. To assess the stability of signal amplitude transmission, the received light intensity before and after transmit power adaptive compensation is compared under conditions of maximum and minimum light intensity scintillation (Fig. 7). The results show that after compensation, the received light intensity settles to a nearly constant level, with only minimal jitter due to measurement equipment noise. The experiment measures optical signal amplitude jitter suppression over several hours, with turbulence intensity varying from afternoon to night, and compares the received signal scintillation index before and after compensation (Fig. 9). The results show that before compensation, the received signal power fluctuates between -22 dBm and -31 dBm. At maximum turbulence intensity during midday, the scintillation index reaches 0.6602, while at lower turbulence intensity during the night, the scintillation index drops to 0.0287. The scintillation index is consistently suppressed by more than 16 dB, regardless of changes in turbulence intensity.ConclusionsIn this paper, we investigate a real-time method for suppressing free-space optical signal amplitude jitter caused by atmospheric turbulence. 
Based on bidirectional reciprocity, a real-time optical intensity scintillation suppression model is developed, utilizing the relationship between channel state information and optical intensity jitter. To meet the real-time requirement for turbulence fading compensation, we design a transmit power adaptive control algorithm, which is deployed on the FPGA platform to ensure that signal processing completes within the channel coherence time. The proposed transmit power adaptive system is verified through a field test of wireless laser transmission under varying turbulence intensity. The experimental results demonstrate that the transmit power adaptive compensation significantly reduces the scintillation index of the received optical signal. Specifically, the scintillation index decreases from 0.6602 to 0.0127 under maximum turbulence intensity and from 0.0287 to 0.0002 under minimum turbulence intensity. In addition, the scintillation index is consistently suppressed by more than 16 dB, effectively mitigating the amplitude jitter caused by turbulence and enabling stable transmission under varying turbulence conditions.
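
The quantities reported above can be illustrated with a short Python sketch. It computes the scintillation index with the usual definition (normalized intensity variance), expresses the suppression as a ratio in decibels, and runs a toy reciprocity-based pre-compensation in which the transmit power is scaled by the inverse of a noisy channel estimate. The log-normal fading model, the noise level, and all function names are assumptions chosen for illustration; the sketch does not implement the fuzzy PID controller or the FPGA processing described in the paper.

```python
import math
import random


def scintillation_index(samples):
    """Normalized intensity variance: <I^2> / <I>^2 - 1."""
    mean = sum(samples) / len(samples)
    mean_sq = sum(s * s for s in samples) / len(samples)
    return mean_sq / mean ** 2 - 1.0


def suppression_db(si_before, si_after):
    """Ratio of scintillation indices in dB (negative means suppression)."""
    return 10.0 * math.log10(si_after / si_before)


def simulate_precompensation(n=20000, sigma=0.3, estimate_noise=0.02, seed=1):
    """Toy model: transmit power is set to 1 / h_est, with h_est a noisy fade estimate.

    Assumes a unit-mean log-normal fade h that is identical in both directions
    (perfect reciprocity) and additive Gaussian noise on the estimate.
    """
    rng = random.Random(seed)
    before, after = [], []
    for _ in range(n):
        h = math.exp(rng.gauss(-sigma ** 2 / 2, sigma))
        h_est = max(h + rng.gauss(0.0, estimate_noise), 1e-3)
        before.append(h)         # received intensity with constant transmit power
        after.append(h / h_est)  # received intensity with pre-compensated power
    return scintillation_index(before), scintillation_index(after)


if __name__ == "__main__":
    # Values reported in the abstract: roughly -17.2 dB and -21.6 dB.
    print(suppression_db(0.6602, 0.0127))
    print(suppression_db(0.0287, 0.0002))
    print(simulate_precompensation())
```

In this toy model the residual scintillation after compensation is set by the estimate noise, which mirrors the abstract's remark that compensation accuracy is limited by device noise.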