View fulltext

View fulltext

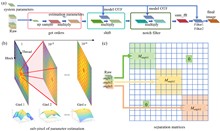

The demand for high-resolution, low-phototoxicity imaging in the life sciences has motivated Cu-3DSIM, a high-fidelity, CUDA-accelerated parallel reconstruction method for 3D structured illumination microscopy (3DSIM). Reconstruction time and quality are both crucial for live-cell imaging. Cu-3DSIM addresses them by using graphics processing unit (GPU) parallel computing to substantially accelerate super-resolution reconstruction. It optimizes storage within the limited GPU memory, enabling accurate reconstruction of full 3DSIM image stacks with reduced computational resource consumption. The method also parallelizes cross-correlation-based parameter estimation, refining the computed illumination frequency vectors to sub-pixel accuracy. Experiments show that Cu-3DSIM outperforms traditional methods, improving reconstruction speed and memory efficiency by an order of magnitude while matching the reconstruction quality of Open-3DSIM. The approach also incorporates HiLo information to suppress background noise and reduce artifacts, yielding clearer reconstructions. These capabilities allow rapid iteration and observation of cellular states, advancing our understanding of cellular structures and dynamics.
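
The abstract does not describe how Cu-3DSIM parallelizes its cross-correlation step. As a rough CPU illustration of the general idea only, the NumPy sketch below computes an FFT-based circular cross-correlation and refines its peak to sub-pixel precision with three-point parabolic interpolation along each axis. The function names are hypothetical, and the actual method runs in CUDA and may use a different refinement scheme.

```python
import numpy as np


def cross_correlation(a, b):
    """Circular cross-correlation of two equally sized 2D arrays via the FFT."""
    spectrum = np.fft.fft2(a) * np.conj(np.fft.fft2(b))
    return np.real(np.fft.ifft2(spectrum))


def _parabolic_offset(f_minus, f_zero, f_plus):
    """Vertex offset of the parabola through three equally spaced samples."""
    denom = f_minus - 2.0 * f_zero + f_plus
    if denom == 0.0:
        return 0.0  # flat neighbourhood: keep the integer position
    return 0.5 * (f_minus - f_plus) / denom


def subpixel_peak(corr):
    """Integer argmax of `corr`, refined to sub-pixel precision per axis.

    Neighbours are taken with periodic wrap-around, matching the circular
    correlation returned by `cross_correlation`.
    """
    rows, cols = corr.shape
    r, c = np.unravel_index(np.argmax(corr), corr.shape)
    dr = _parabolic_offset(corr[(r - 1) % rows, c], corr[r, c], corr[(r + 1) % rows, c])
    dc = _parabolic_offset(corr[r, (c - 1) % cols], corr[r, c], corr[r, (c + 1) % cols])
    return r + dr, c + dc


# Usage: recover a known circular shift between two images.
rng = np.random.default_rng(0)
b = rng.standard_normal((64, 64))
a = np.roll(b, shift=(3, 5), axis=(0, 1))  # a is b shifted by (3, 5) pixels
print(subpixel_peak(cross_correlation(a, b)))  # within half a pixel of (3, 5)
```

Parabolic interpolation is exact when the correlation peak is locally quadratic and cheap to evaluate per peak, which is why it is a common first choice; Gaussian fits or upsampled-FFT refinement are alternatives.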

We present a novel approach for capturing gigapixel-resolution, micron-scale three-dimensional (3D) images of large, complex macroscopic objects using a 9×6 multi-camera array paired with a custom 3D reconstruction algorithm. Our system overcomes the inherent trade-offs among resolution, field of view (FOV), and depth of field (DOF) by capturing stereoscopic focal stacks from multiple perspectives. This yields an effective FOV of approximately 135×128 degrees and surface depth maps with lateral resolution below 40 µm and depth resolution of about 0.5 mm. To produce all-in-focus RGB (red, green, and blue) composites with precise depth, we employ a novel self-supervised neural network that integrates focus and stereo cues, yielding highly accurate 3D reconstructions that are robust to variations in lighting and surface reflectance. We validate the approach by scanning 3D objects, including some with known 3D geometries, and demonstrate sub-millimeter depth accuracy across a variety of scanned objects. The system is a powerful tool for digitizing large, complex forms, capturing near-microscopic detail in both depth maps and high-resolution image reconstructions.
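
The paper fuses focus and stereo cues with a self-supervised network whose details are not given in the abstract. For intuition about the focus cue alone, the sketch below implements a classical depth-from-focus baseline, not the authors' method. It scores each slice of a focal stack with a local Laplacian-energy sharpness measure, picks the sharpest slice per pixel as a depth index, and gathers those pixels into an all-in-focus composite. The function names and the choice of focus measure are assumptions.

```python
import numpy as np


def _laplacian(stack):
    """Discrete 5-point Laplacian over the last two axes (periodic borders)."""
    return (np.roll(stack, 1, axis=-2) + np.roll(stack, -1, axis=-2)
            + np.roll(stack, 1, axis=-1) + np.roll(stack, -1, axis=-1)
            - 4.0 * stack)


def _box3(stack):
    """3x3 box average over the last two axes (periodic borders)."""
    total = np.zeros_like(stack, dtype=float)
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            total += np.roll(stack, (dy, dx), axis=(-2, -1))
    return total / 9.0


def depth_from_focus(stack):
    """Per-pixel sharpest slice and all-in-focus composite of a focal stack.

    stack: array of shape (Z, H, W), one grayscale image per focus setting.
    Returns (depth_index, all_in_focus), both of shape (H, W).
    """
    focus = _box3(_laplacian(stack) ** 2)  # local Laplacian energy per slice
    depth_index = np.argmax(focus, axis=0)  # index of the sharpest slice
    all_in_focus = np.take_along_axis(stack, depth_index[None], axis=0)[0]
    return depth_index, all_in_focus


# Usage: a synthetic stack whose middle slice is the sharp one.
rng = np.random.default_rng(1)
base = rng.standard_normal((32, 32))
blur1 = _box3(base)
blur2 = _box3(blur1)
stack = np.stack([blur1, base, blur2])
depth, aif = depth_from_focus(stack)
```

A depth index becomes a physical depth only through the focal step between slices, which this sketch leaves unspecified. A focus-only estimate also fails on textureless or specular regions, which is one motivation for adding stereo cues.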

Lensless fiber endomicroscopy is an emerging tool for minimally invasive in vivo imaging, in which quantitative phase imaging can serve as a label-free modality to enhance image contrast. However, current phase reconstruction techniques in lensless multi-core fiber endomicroscopy work well for simple structures but struggle with complex tissue, which restricts their clinical applicability. We present SpecDiffusion, a speckle-conditioned diffusion model designed for high-resolution, accurate reconstruction of complex phase images in lensless fiber endomicroscopy. Through iterative refinement conditioned on the speckle data, SpecDiffusion recovers fine structural details, enabling high-resolution, high-fidelity quantitative phase imaging. This capability is particularly advantageous for digital pathology applications such as cell segmentation, where precise and reliable imaging is essential for accurate cancer diagnosis and classification. It opens new perspectives for early and accurate cancer detection using minimally invasive endomicroscopy.
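
The abstract does not specify SpecDiffusion's network, noise schedule, or sampler. The sketch below shows only the generic ancestral sampling loop of a conditional denoising diffusion probabilistic model (DDPM), which is the kind of iterative refinement a speckle-conditioned diffusion model performs. `eps_model`, the linear beta schedule, and the oracle predictor used in the toy check are all hypothetical stand-ins, not the authors' trained model.

```python
import numpy as np


def sample_conditional_ddpm(eps_model, condition, betas, shape, rng):
    """Ancestral DDPM sampling of an image conditioned on `condition`.

    eps_model(x_t, t, condition) must return the predicted noise in x_t.
    betas: 1D array of forward-process noise variances, one per step.
    shape: shape of the image to generate (e.g. the phase map).
    """
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    x = rng.standard_normal(shape)  # start from pure noise x_T ~ N(0, I)
    for t in range(len(betas) - 1, -1, -1):
        eps = eps_model(x, t, condition)
        # Mean of x_{t-1} given x_t and the predicted noise.
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:
            # Simple variance choice sigma_t^2 = beta_t.
            x = mean + np.sqrt(betas[t]) * rng.standard_normal(shape)
        else:
            x = mean  # no noise is added at the final step
    return x


# Toy check with hypothetical stand-ins (not a trained model).
betas = np.linspace(1e-4, 0.02, 50)  # assumed linear noise schedule
alpha_bars = np.cumprod(1.0 - betas)
target = np.linspace(-np.pi, np.pi, 16).reshape(4, 4)  # stand-in phase map


def oracle_eps(x, t, condition):
    # Perfect noise predictor for a known target; ignores the speckle input.
    return (x - np.sqrt(alpha_bars[t]) * target) / np.sqrt(1.0 - alpha_bars[t])


rng = np.random.default_rng(2)
speckle = rng.random((8, 8))  # stand-in speckle pattern
phase = sample_conditional_ddpm(oracle_eps, speckle, betas, target.shape, rng)
print(np.allclose(phase, target))  # True: a perfect predictor recovers the target
```

The oracle only verifies the sampling arithmetic. In a real speckle-conditioned model, a trained network would have to infer the noise, and hence the phase, from the speckle pattern.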

Super-resolution optical fluctuation imaging (SOFI) achieves super-resolution (SR) imaging with a simple hardware configuration while maintaining biological compatibility. However, large field-of-view (FoV) SOFI imaging remains fundamentally limited by extensive temporal sampling demands. Although modern SOFI techniques accelerate acquisition, the cumulant operator still requires fluorophores with a high duty cycle and a high labeling density so that sufficient blinking events are captured, which severely limits practical implementation. To address this, we present a framework that resolves this trade-off in SOFI, enabling millisecond-scale temporal resolution while retaining all the merits of SOFI. The framework, transformer-based reconstruction of ultra-fast SOFI (TRUS), is a novel architecture that combines transformer-based neural networks with physics-informed priors from conventional SOFI frameworks. For biological specimens with diverse fluorophore blinking characteristics, our method reconstructs images from only 20 raw frames and the corresponding widefield images, achieving a 47-fold reduction in raw frames compared with traditional methods that require more than 1000 frames, together with sub-200-nm spatial resolution. To demonstrate the high-throughput SR imaging capability of our method, we perform SOFI imaging of microtubules within a millimeter-scale FoV of 1.0 mm² with a total acquisition time of ∼3 min. These characteristics make TRUS a useful high-throughput SR imaging alternative under challenging imaging conditions.
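
The abstract does not give enough detail about TRUS's network to reproduce it. For context on what it replaces, the NumPy sketch below implements the cumulant step of conventional SOFI for the second-order auto-cumulant, which is the per-pixel covariance of intensity fluctuations at a given time lag. This is the generic textbook estimator, not TRUS. The function name and the default lag of one frame are assumptions.

```python
import numpy as np


def sofi2(stack, lag=1):
    """Second-order SOFI auto-cumulant image.

    stack: array of shape (T, H, W) of raw frames.
    lag:   time lag in frames; lag >= 1 suppresses temporally uncorrelated
           noise, while lag = 0 reduces to the per-pixel temporal variance.
    """
    n_frames = stack.shape[0]
    if not 0 <= lag < n_frames:
        raise ValueError("lag must satisfy 0 <= lag < number of frames")
    fluct = stack - stack.mean(axis=0, keepdims=True)  # delta F(r, t)
    return (fluct[: n_frames - lag] * fluct[lag:]).mean(axis=0)


# Usage: 20 frames, one constant background pixel and one blinking pixel.
frames = np.full((20, 1, 2), 5.0)  # pixel (0, 0): constant background
frames[:, 0, 1] = np.tile([0, 0, 0, 0, 1, 1, 1, 1], 3)[:20]  # pixel (0, 1): blinks
image = sofi2(frames)  # background cumulant is 0, blinking pixel is positive
```

Non-fluctuating background drops out of the cumulant, which is the source of SOFI's contrast. With only 20 frames, however, this temporal estimate is very noisy for realistic blinking statistics, which is why conventional SOFI typically collects far more frames.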