The quest for larger-aperture telescopes with high angular resolution is driven by numerous scientific objectives in fields such as astrophysics and remote sensing. Optical synthetic aperture (OSA) provides a feasible way to form a large-aperture telescope by coherently combining the light collected by several small apertures. Here, we demonstrate a scattering-assisted coherent diffraction imaging (CDI) approach to realize OSA. In our approach, we collect the diffraction pattern of the target object by placing a relatively large scattering layer in front of the aperture lens. Light scattering, traditionally considered a hindrance, is exploited to efficiently capture the object’s high-frequency spatial information. Experimentally, we achieve single-shot full-field imaging from the Fraunhofer regime to the Fresnel regime, with a spatial resolution of 1.74 line pairs/mm at a distance of 40 m. This is equivalent to synthesizing a 5.53 cm aperture telescope using only a 0.86 cm aperture lens, a resolution enhancement of about 6.4 times over the diffraction limit of the receiving aperture. Our approach offers a new pathway for OSA and scattering-assisted optical telescopes.
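
The 6.4-fold figure follows from the fact that the diffraction-limited angle scales as λ/D, so the gain is simply the ratio of aperture diameters. The sketch below assumes the Rayleigh criterion for a circular aperture and an illustrative 532 nm wavelength (neither is stated above); the wavelength cancels in the ratio.

```python
def rayleigh_angle(wavelength_m: float, aperture_m: float) -> float:
    """Angular resolution (rad) of a circular aperture under the Rayleigh criterion."""
    return 1.22 * wavelength_m / aperture_m


# Aperture diameters quoted in the text, in metres.
D_LENS = 0.86e-2    # physical receiving lens
D_SYNTH = 5.53e-2   # equivalent synthesized aperture

# Assumed illustrative wavelength; it cancels out of the ratio below.
WAVELENGTH_M = 532e-9

gain = rayleigh_angle(WAVELENGTH_M, D_LENS) / rayleigh_angle(WAVELENGTH_M, D_SYNTH)
print(f"resolution gain ~ {gain:.2f}x")  # equals 5.53 / 0.86
```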

Seamlessly integrating cameras into transparent displays is a foundational requirement for advancing augmented reality (AR) applications. However, existing see-through camera designs, such as the LightguideCam, often trade image quality for compactness, producing significant spatially variant artifacts from lightguide reflections. To resolve this, we present a physics-informed neural network framework that corrects these spatially varying artifacts for high-fidelity image reconstruction. The method eliminates the need for slow iterative algorithms, achieving a 4,000-fold speedup in computation. Critically, this acceleration is accompanied by a substantial quality gain: an 8 dB improvement in peak signal-to-noise ratio. By correcting complex optical artifacts in real time, our work enables practical deployment of the LightguideCam for demanding AR tasks, including eye-gaze tracking and user-perspective imaging.
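
To make the 8 dB figure concrete: PSNR is logarithmic in the mean squared error (MSE), so a fixed dB gain corresponds to a fixed MSE reduction factor. This is a generic sketch of the standard PSNR definition for images scaled to [0, 1]; it is not the authors' evaluation code.

```python
import numpy as np


def psnr(reference: np.ndarray, estimate: np.ndarray, peak: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB for images scaled to [0, peak]."""
    mse = np.mean((reference.astype(float) - estimate.astype(float)) ** 2)
    return 10.0 * np.log10(peak**2 / mse)


def mse_ratio_for_psnr_gain(gain_db: float) -> float:
    """Factor by which the MSE must drop to raise PSNR by gain_db."""
    return 10.0 ** (gain_db / 10.0)


ref = np.zeros((4, 4))
print(psnr(ref, ref + 0.1))              # MSE = 0.01, i.e. about 20 dB
print(mse_ratio_for_psnr_gain(8.0))      # an 8 dB gain means roughly 6.3x lower MSE
```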

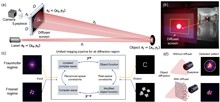

Neural-network-based computer-generated hologram (CGH) methods have greatly improved computational efficiency and reconstruction quality. However, a trained network is no longer suitable once the CGH parameters change. We present a general phase-only hologram (POH) encoding strategy based on a neural network that encodes POHs from complex amplitude holograms for different parameters after a single training. The network incorporates depth-wise separable convolutions, residual modules, and complex-valued channel-adaptive modules, and it is trained on random combinations drawn from a conventional training image dataset, a constructed wavelength pool, and a reconstruction-distance pool. The average peak signal-to-noise ratio/structural similarity index measure (PSNR/SSIM) of POHs encoded by the proposed network reaches 29.19 dB/0.83, improvements of 117.35% and 144.12%, respectively, over the double-phase encoding strategy. The variances of the PSNR and SSIM across different reconstruction distances and wavelengths are increased by 86.83% and 80.65%, respectively, compared with traditional networks in this strategy. This method makes it possible to encode multiple complex amplitude holograms of arbitrary wavelength and arbitrary reconstruction distance without retraining, which facilitates digital filtering and other operations within the CGH generation process for CGH design and debugging.
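
The double-phase baseline mentioned above has a compact closed form. Each normalized complex sample A·exp(iφ) is written as the average of two unit phasors exp(i(φ ± arccos A)), and the two phase maps are interleaved on a checkerboard. The following is a minimal sketch of that classical encoding only. The checkerboard interleaving and the normalization by the maximum amplitude are common choices assumed here, and none of this reflects the proposed network.

```python
import numpy as np


def double_phase_pair(field: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Split A*exp(i*phi) into two phase maps whose unit phasors average to it.

    Amplitude is normalised to [0, 1] first so that arccos is defined.
    """
    amp = np.abs(field)
    amp = amp / amp.max()
    phi = np.angle(field)
    delta = np.arccos(amp)
    return phi + delta, phi - delta


def double_phase_encode(field: np.ndarray) -> np.ndarray:
    """Interleave the two phase maps on a checkerboard to form a phase-only hologram."""
    theta1, theta2 = double_phase_pair(field)
    yy, xx = np.indices(field.shape)
    return np.where((yy + xx) % 2 == 0, theta1, theta2)


rng = np.random.default_rng(0)
field = rng.random((8, 8)) * np.exp(1j * rng.uniform(-np.pi, np.pi, (8, 8)))
poh = double_phase_encode(field)
print(poh.shape, poh.dtype)
```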

Phase contrast microscopy is essential in optical imaging, but traditional systems are bulky and limited to a single phase modulation, hindering visualization of complex specimens. Metasurfaces, with their subwavelength structures, offer compact integration and versatile light field control. Quantum imaging further enhances performance by leveraging nonclassical photon correlations to suppress classical noise, improve contrast, and enable multifunctional processing. Here, we report a dual-mode bright–dark phase contrast imaging scheme enabled by quantum–metasurface synergy. By combining polarization entanglement from quantum light sources with metasurface phase modulation, our method achieves high-contrast, adaptive bright–dark phase contrast imaging of transparent samples with arbitrary phase distributions under low-light conditions. Compared with classical weak-light imaging at equivalent photon flux, quantum illumination suppresses environmental noise, improving the contrast from 0.22 to 0.81. Moreover, quantum polarization entanglement enables remote mode switching, ensuring high-contrast imaging in at least one mode and supporting dynamic observation of biological cells with complex phase structures. This capability is especially valuable where physical intervention is difficult, such as in miniaturized systems, in vivo platforms, or extreme environments. Overall, the proposed scheme offers an efficient, noninvasive solution for biomedical imaging with strong potential for life science applications.
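
For intuition about the two contrast modes, the classical Zernike picture is useful. Shifting only the zero-order (undiffracted) light by ±π/2 turns an invisible phase object into intensity, and the two signs give contrast-reversed bright and dark images. This sketch is purely classical and uses an idealized DC-only phase plate on a synthetic weak phase object. It does not model the quantum illumination or the metasurface.

```python
import numpy as np


def zernike_phase_contrast(phase: np.ndarray, dc_shift: float) -> np.ndarray:
    """Intensity of a pure-phase object after retarding only its zero-order (DC) light.

    For a weak, zero-mean phase object, I ~ 1 + 2*sin(dc_shift)*phase, so shifts of
    +pi/2 and -pi/2 give contrast-reversed ("bright" and "dark") images.
    """
    spectrum = np.fft.fft2(np.exp(1j * phase))
    spectrum[0, 0] *= np.exp(1j * dc_shift)  # idealized phase plate on undiffracted light only
    return np.abs(np.fft.ifft2(spectrum)) ** 2


y, x = np.indices((64, 64))
phase = 0.1 * np.sin(2 * np.pi * x / 16) * np.cos(2 * np.pi * y / 16)  # weak, zero-mean

plain = zernike_phase_contrast(phase, 0.0)         # ordinary bright field: phase invisible
mode_a = zernike_phase_contrast(phase, np.pi / 2)
mode_b = zernike_phase_contrast(phase, -np.pi / 2)
print(np.corrcoef(mode_a.ravel(), phase.ravel())[0, 1],
      np.corrcoef(mode_b.ravel(), phase.ravel())[0, 1])
```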

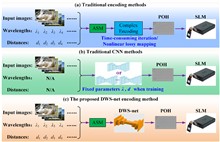

Overcoming the diffraction barrier in long-range optical imaging is a critical challenge for space situational awareness and terrestrial remote sensing. This study presents a super-resolution imaging method based on reflective tomography LiDAR (RTL) that surpasses the traditional optical diffraction limit, achieving 2 cm resolution imaging at a distance of 10.38 km. To address atmospheric turbulence, diffraction limits, and data sparsity in long-range imaging of complex targets, the study adapts the nonlocal means (NLM) algorithm and combines it with a self-developed RTL system to achieve high-precision reconstruction from multi-angle projection data. Experimental results show that the system achieves a reconstruction resolution better than 2 cm for a complex target (the NUDT WordArt model), 2.5 times finer than the 5 cm diffraction limit of a conventional 1064 nm laser optical system. In sparse-data scenarios, the NLM algorithm outperforms traditional algorithms in metrics such as information entropy (IE) and structural similarity (SSIM), suppressing artifacts while maintaining structural integrity. This study presents the first demonstration of centimeter-level tomographic imaging of complex targets at near-ground distances exceeding 10 km, providing a new paradigm for fields such as space debris monitoring and remote target recognition.
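
Nonlocal means replaces each pixel with a weighted average of pixels in a search window. The weights decay with the dissimilarity between the patches surrounding the two pixels, so structure is averaged only with similar structure. The following is a minimal sketch of the basic textbook form applied to a 2D image. Patch size, search size, and the filtering parameter `h` are illustrative assumptions, and the variant adapted for RTL projection data is not reproduced.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def nlm_denoise(img: np.ndarray, patch: int = 3, search: int = 7, h: float = 0.1) -> np.ndarray:
    """Basic pixel-wise nonlocal means for a 2D image (patch and search must be odd)."""
    img = np.asarray(img, dtype=float)
    pr, sr = patch // 2, search // 2
    H, W = img.shape
    padded = np.pad(img, pr + sr, mode="reflect")
    ref = padded[sr:sr + H + 2 * pr, sr:sr + W + 2 * pr]  # image padded by pr
    num = np.zeros((H, W))
    den = np.zeros((H, W))
    for di in range(-sr, sr + 1):
        for dj in range(-sr, sr + 1):
            cand = padded[sr + di:sr + di + H + 2 * pr, sr + dj:sr + dj + W + 2 * pr]
            # Mean squared difference between the patch around each pixel and
            # the patch around its (di, dj) neighbour.
            d2 = sliding_window_view((ref - cand) ** 2, (patch, patch)).mean(axis=(-2, -1))
            w = np.exp(-d2 / h**2)
            num += w * cand[pr:pr + H, pr:pr + W]  # neighbour's centre pixel value
            den += w
    return num / den


rng = np.random.default_rng(0)
clean = np.zeros((32, 32))
clean[:, 16:] = 1.0
noisy = clean + rng.normal(0, 0.1, clean.shape)
denoised = nlm_denoise(noisy, h=0.15)
print(np.mean((noisy - clean) ** 2), np.mean((denoised - clean) ** 2))
```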

Optical coherence refractive tomography (OCRT) addresses the anisotropic resolution of conventional optical coherence tomography (OCT), reducing the detail loss caused by resolution non-uniformity, and shows strong potential across a range of biomedical applications. The full-range OCRT technique eliminates conjugate-image artifacts and further extends the imaging field, enabling large-scale isotropic reconstruction. However, the isotropic resolution achieved by OCRT remains inherently limited by the maximum resolution of the acquired input data in both the axial and lateral dimensions. Enhancing the resolution of the original images is therefore critical for higher-resolution isotropic reconstruction. Existing OCT super-resolution methods often amplify imaging noise during iterative processing, producing reconstructions dominated by noise artifacts. In this work, we present sparse continuous full-range optical coherence refractive tomography (SC-FROCRT), which integrates deconvolution-based super-resolution techniques with the full-range OCRT framework to achieve higher resolution, an expanded field of view, and isotropic image reconstruction. By incorporating the inherent sparsity and continuity priors of biological samples, we iteratively refine the initially acquired low-resolution OCT images to enhance their resolution. This model is integrated into the previously established full-range OCRT framework to enable isotropic super-resolution with an expanded field of view. In addition, the FROCRT technique leverages multi-angle Fourier synthesis to mitigate reconstruction artifacts that may arise from over-enhancement by the super-resolution model. We applied SC-FROCRT to phantom samples, sparse plant tissues, and cleared biological tissues, improving the Fourier ring correlation (FRC) metric by an average factor of 1.41 over FROCRT.
We anticipate that SC-FROCRT will broaden the scope of OCT applications, enhancing its utility for both diagnostic and research purposes.
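
Fourier ring correlation compares two images ring by ring in the frequency domain, giving one normalized cross-correlation value per spatial-frequency band. Resolution is often read where the curve falls below a threshold such as 1/7. This sketch uses integer-radius rings and no windowing. Those are simplifying assumptions and not necessarily the exact FRC settings used in the work above.

```python
import numpy as np


def fourier_ring_correlation(img1: np.ndarray, img2: np.ndarray) -> np.ndarray:
    """FRC between two same-sized images, one value per integer-radius frequency ring."""
    f1 = np.fft.fftshift(np.fft.fft2(img1))
    f2 = np.fft.fftshift(np.fft.fft2(img2))
    ny, nx = img1.shape
    y, x = np.indices((ny, nx))
    radius = np.hypot(y - ny // 2, x - nx // 2).astype(int)  # ring index per pixel
    n_rings = min(ny, nx) // 2
    frc = np.zeros(n_rings)
    for k in range(n_rings):
        ring = radius == k
        num = np.real(np.sum(f1[ring] * np.conj(f2[ring])))
        den = np.sqrt(np.sum(np.abs(f1[ring]) ** 2) * np.sum(np.abs(f2[ring]) ** 2))
        frc[k] = num / den if den > 0 else 0.0
    return frc


rng = np.random.default_rng(1)
a = rng.random((64, 64))
b = rng.random((64, 64))
print(fourier_ring_correlation(a, a)[:3], fourier_ring_correlation(a, b)[5:].mean())
```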

Super-resolution optical fluctuation imaging (SOFI) achieves super-resolution (SR) imaging with a simple hardware configuration while maintaining biological compatibility. However, large field-of-view (FoV) SOFI imaging remains fundamentally limited by extensive temporal sampling demands. Although modern SOFI techniques accelerate acquisition, their cumulant operator requires fluorophores with a high duty cycle and high labeling density to ensure that sufficient blinking events are acquired, which severely limits practical implementation. To address this, we present a framework that resolves this trade-off in SOFI, enabling millisecond-scale temporal resolution while retaining all the merits of SOFI. We name the framework transformer-based reconstruction of ultra-fast SOFI (TRUS), an architecture combining transformer-based neural networks with the physics-informed priors of conventional SOFI frameworks. For biological specimens with diverse fluorophore blinking characteristics, our method enables reconstruction from only 20 raw frames and the corresponding widefield images, a 47-fold reduction in raw frames compared with traditional methods that require more than 1000 frames, with sub-200-nm spatial resolution. To demonstrate the high-throughput SR imaging ability of our method, we perform SOFI imaging of microtubules over a millimeter-scale FoV of 1.0 mm² with a total acquisition time of ∼3 min. These characteristics make TRUS a useful high-throughput SR imaging alternative under challenging imaging conditions.
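
The cumulant operator referred to above is easy to state at second order. For each pixel, it is the time-averaged product of intensity fluctuations, optionally at a time lag. This is why it needs many blinking events to converge. The following is a minimal sketch of the conventional second-order auto-cumulant only, on a toy stack. TRUS itself, which replaces this estimator with a transformer, is not shown.

```python
import numpy as np


def sofi2(stack: np.ndarray, lag: int = 0) -> np.ndarray:
    """Second-order SOFI auto-cumulant of a (T, H, W) image stack.

    With lag=0 this is the per-pixel temporal variance; a lag of one frame is
    often used to suppress temporally uncorrelated shot noise.
    """
    stack = np.asarray(stack, dtype=float)
    fluct = stack - stack.mean(axis=0)
    t = stack.shape[0]
    return np.mean(fluct[: t - lag] * fluct[lag:], axis=0)


frames = np.zeros((20, 2, 2))
frames[:, 0, 0] = np.tile([0.0, 1.0], 10)   # a blinking emitter (toy on/off trace)
frames[:, 1, 1] = 5.0                        # steady, non-fluctuating background
c2 = sofi2(frames)
print(c2)
```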

Lensless fiber endomicroscopy is an emerging tool for minimally invasive in vivo imaging, in which quantitative phase imaging can serve as a label-free modality to enhance image contrast. Nevertheless, current phase reconstruction techniques in lensless multi-core fiber endomicroscopy are effective for simple structures but face significant challenges with complex tissue, restricting their clinical applicability. We present SpecDiffusion, a speckle-conditioned diffusion model tailored to achieve high-resolution and accurate reconstruction of complex phase images in lensless fiber endomicroscopy. Through iterative refinement conditioned on the speckle data, SpecDiffusion effectively reconstructs structural details, enabling high-resolution, high-fidelity quantitative phase imaging. This capability is particularly advantageous for digital pathology applications such as cell segmentation, where precise and reliable imaging is essential for accurate cancer diagnosis and classification. This work opens new perspectives for early and accurate cancer detection using minimally invasive endomicroscopy.

We present a novel approach for capturing gigapixel-resolution, micron-scale three-dimensional (3D) images of large, complex macroscopic objects using a 9×6 multi-camera array paired with a custom 3D reconstruction algorithm. Our system overcomes the inherent trade-offs among resolution, field of view (FOV), and depth of field (DOF) by capturing stereoscopic focal stacks across multiple perspectives, enabling an effective FOV of approximately 135×128 degrees and surface depth maps with lateral resolution finer than 40 µm and depth resolution of about 0.5 mm. To obtain all-in-focus RGB (red, green, and blue) composites with precise depth, we employ a novel self-supervised neural network that integrates focus and stereo cues, yielding highly accurate 3D reconstructions that are robust to variations in lighting and surface reflectance. We validate the approach by scanning 3D objects, including some with known 3D geometries, and demonstrate sub-millimeter depth accuracy across a variety of scanned objects. This provides a powerful tool for digitizing large, complex forms, capturing near-microscopic detail in both depth maps and high-resolution image reconstructions.

The demand for high-resolution, low-phototoxicity imaging in the life sciences has led to the development of Cu-3DSIM, a high-fidelity, CUDA-accelerated parallel reconstruction method for 3D structured illumination microscopy (3DSIM). This method addresses the challenges of reconstruction time and quality, which are crucial for live-cell imaging, by leveraging graphics processing unit (GPU) parallel computing to achieve significant speedups in super-resolution reconstruction. Cu-3DSIM optimizes storage within the limited GPU memory, enabling stack 3DSIM reconstruction with high accuracy and reduced computational resource consumption. The method includes parallel computing for cross-correlation parameter estimation, refining the frequency vector computation to sub-pixel accuracy. Experiments demonstrate Cu-3DSIM’s superior performance over traditional methods, with an order-of-magnitude improvement in reconstruction speed and memory efficiency, while maintaining the quality of Open-3DSIM. The approach also incorporates HiLo information to suppress background noise and reduce artifacts, yielding clearer reconstructions. Cu-3DSIM allows rapid iteration and observation of cellular states, advancing our understanding of cellular structures and dynamics.

As a novel imaging paradigm, single-pixel imaging (SPI) has shown significant potential across various fields. However, enabling real-time imaging requires intricate nonlinear reconstruction algorithms, such as compressive sensing or deep neural networks. In general, the former incurs substantial reconstruction time, and the latter often results in substantial energy consumption. Optical neural networks (ONNs) offer a promising alternative because of the intrinsic alignment between SPI sampling and the fully connected layers of ONNs. Nevertheless, achieving nonlinear reconstruction with ONNs remains challenging, since nonlinear activation functions are still difficult to implement optically. Here, we propose an all-optical ONN architecture with controllable nonlinear order that reduces the reliance of ONNs on nonlinear activation functions. Following the compressive SPI scheme, we demonstrate the fitting capability of the structure on two linear tasks (compressive SPI and linear edge extraction) and two nonlinear tasks (nonlinear edge extraction and handwritten dataset classification). Experimental results show that this structure fits both regression and classification tasks well, even at low nonlinear order.
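
The "intrinsic alignment" between SPI and fully connected layers is that each bucket value is an inner product of one illumination pattern with the scene. A full set of measurements is therefore a single matrix multiply. The sketch below illustrates this with a full Sylvester Hadamard basis, for which linear inversion is exact. The ±1 patterns are an assumption for simplicity (hardware typically realizes them as differential pairs of binary patterns), and this is a linear baseline, not the proposed optical network.

```python
import numpy as np


def sylvester_hadamard(n: int) -> np.ndarray:
    """n x n Hadamard matrix with +/-1 entries (n must be a power of two)."""
    if n < 1 or n & (n - 1):
        raise ValueError("n must be a power of two")
    h = np.array([[1.0]])
    while h.shape[0] < n:
        h = np.block([[h, h], [h, -h]])
    return h


def spi_measure(image: np.ndarray, patterns: np.ndarray) -> np.ndarray:
    """Bucket signals: one inner product of each flattened pattern with the scene."""
    return patterns @ image.ravel()


def spi_reconstruct(buckets: np.ndarray, patterns: np.ndarray, shape: tuple) -> np.ndarray:
    """Linear inversion for a full orthogonal basis: H.T @ y / n."""
    n = patterns.shape[1]
    return (patterns.T @ buckets / n).reshape(shape)


rng = np.random.default_rng(1)
scene = rng.random((8, 8))
H = sylvester_hadamard(64)
recovered = spi_reconstruct(spi_measure(scene, H), H, scene.shape)
print(np.abs(recovered - scene).max())
```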

Noninvasive imaging through strongly scattering media faces a key challenge: reconstructing the object phase spectrum. Speckle correlation imaging (SCI) effectively reconstructs the amplitude spectrum but struggles to reconstruct the phase spectrum stably and accurately, particularly in dynamic scenarios or under background-light interference. In this study, we propose a speckle spectrum autocorrelation imaging (SSAI) approach for complex, strongly scattering scenarios. SSAI uses the spectrum autocorrelation of centroid-aligned speckle images to reconstruct the object phase spectrum independently and stably, markedly reducing interference from medium dynamics and object motion. SSAI then reconstructs the object spectrum by combining this phase spectrum with the object amplitude spectrum recovered from the speckle autocorrelation. In the experimental validation, we compared SSAI and SCI in reconstructing stationary and moving objects hidden behind dynamic media, both with and without background light interference. SSAI not only exhibits stability and fidelity superior to SCI but also functions in scattering scenarios where SCI fails. Furthermore, SSAI can reconstruct size-scaling objects hidden behind dynamic scattering media with high fidelity, showing significant scalability in complex scenarios. We expect this lensless and noninvasive approach to find widespread applicability in biomedical imaging, astronomical observations, remote sensing, and underwater detection.
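
Two of the building blocks named above can be sketched generically. The first is a circular centroid alignment of a speckle image. The second is the speckle autocorrelation computed through the Wiener–Khinchin theorem, whose Fourier transform gives the squared amplitude spectrum. Mean subtraction and circular (periodic) shifts are simplifying assumptions here. The SSAI phase-spectrum step itself is not reproduced.

```python
import numpy as np


def centroid_align(img: np.ndarray) -> np.ndarray:
    """Circularly shift an image so that its intensity centroid lands at the array centre."""
    h, w = img.shape
    y, x = np.indices(img.shape)
    total = img.sum()
    cy, cx = (y * img).sum() / total, (x * img).sum() / total
    shift = (int(round(h // 2 - cy)), int(round(w // 2 - cx)))
    return np.roll(img, shift, axis=(0, 1))


def autocorrelation(img: np.ndarray) -> np.ndarray:
    """Centred autocorrelation via the Wiener-Khinchin theorem: IFFT(|FFT(I - mean)|^2)."""
    f = np.fft.fft2(img - img.mean())
    return np.fft.fftshift(np.fft.ifft2(np.abs(f) ** 2).real)


rng = np.random.default_rng(2)
speckle = rng.random((16, 16))
ac = autocorrelation(speckle)
# The zero-shift value sits at the centre and equals the fluctuation energy.
print(np.isclose(ac[8, 8], np.sum((speckle - speckle.mean()) ** 2)))
```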

Computational imaging enables high-quality infrared imaging using simple and compact optical systems. However, the integration of specialized reconstruction algorithms introduces additional latency and increases computational and power demands, which impedes the performance of high-speed, low-power optical applications such as unmanned aerial vehicle (UAV)-based remote sensing and biomedical imaging. Traditional model compression strategies focus primarily on reducing network complexity and multiply-accumulate operations (MACs), but they overlook the unique constraints of computational imaging and the specific requirements of edge hardware, making them inefficient for computational camera implementation. In this work, we propose an edge-accelerated reconstruction strategy based on end-to-end sensitivity analysis for single-lens infrared computational cameras. Compatibility-based operator reconfiguration, sensitivity-aware pruning, and sensitivity-aware mixed quantization are employed on edge artificial intelligence (AI) chips to balance inference speed and reconstruction quality. The experimental results show that, compared with the traditional approach without hardware feature guidance, the proposed strategy achieves better performance in both reconstruction quality and speed, with reduced complexity and fewer MACs. Our single-lens computational camera with edge-accelerated reconstruction demonstrates high-quality, video-rate imaging in field experiments. This work addresses the practical challenge of real-time edge reconstruction, paving the way for lightweight, low-latency computational imaging applications.

Achieving cellular-resolution insight into organ or whole-body architecture is a cornerstone of modern biology. Recent advances in tissue clearing techniques have revolutionized the visualization of complex structures, enhancing tissue transparency by mitigating light scattering caused by refractive index mismatches and the rapidly varying distribution of scattering elements. However, the field remains constrained by predominantly qualitative assessments of clearing methods, and systematic, quantitative approaches remain scarce. Here, we present the ClearAIM method for real-time quantitative monitoring of the tissue clearing process. It leverages a tailored deep-learning-based segmentation algorithm with a bespoke frame-to-frame scheme to achieve robust, precise, and automated analysis. Demonstrated using mouse brain slices (0.5 and 1 mm thick) and the CUBIC method, our universal system enables (1) precise quantification of dynamic transparency levels, (2) real-time monitoring of morphological changes via automated analysis, and (3) optimization of clearing timelines to balance increased transparency with preservation of structural information. The system enables rapid, user-friendly measurements of tissue transparency and shape changes without the need for advanced instrumentation. These features facilitate objective comparisons of the effectiveness of tissue clearing techniques for specific organs, based on quantifiable values rather than predominantly empirical observations. Our method increases the diagnostic value and consistency of cleared samples, supporting the repeatability and reproducibility of biomedical tests.

Single-pixel imaging (SPI) reconstructs an image from modulated illumination light fields and the corresponding measured light intensities. The imaging speed of SPI is constrained by the refresh rate of the illumination light fields. Fiber laser arrays equipped with high-bandwidth electro-optic phase modulators can generate illumination light fields with refresh rates exceeding 100 MHz, which could improve the imaging speed of SPI. In this study, a Fermat spiral fiber laser array was employed as the illumination source to achieve high-quality, rapid SPI. Compared with rectangular and hexagonal arrays, the non-periodic configuration of the Fermat spiral mitigates periodic artifacts in reconstructed images, thereby enhancing imaging quality. A high-speed synchronous data acquisition system was designed to refresh the illumination light fields at 20 kHz and synchronize them with the light intensity acquisition. We achieved distinguishable images reconstructed by an untrained neural network (UNN) at a sampling ratio of 4.88%. An imaging frame rate of 100 frame/s (fps) was achieved with an image size of 64×64 pixels. In addition, given the potential of fiber laser arrays for high power output, the enhanced speed of this SPI system could facilitate its application in remote sensing.
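
The quoted numbers are mutually consistent. A 4.88% sampling ratio of a 64×64 image is about 200 patterns, and at a 20 kHz refresh rate with one pattern per refresh this gives 100 frames per second. The sketch below checks that arithmetic and also generates element centres on a Fermat (Vogel) spiral with the golden angle. The spiral parameters (golden angle, unit spacing) are assumptions for illustration, since the array's actual layout parameters are not given above.

```python
import math

GOLDEN_ANGLE = math.pi * (3.0 - math.sqrt(5.0))  # about 137.5 degrees


def fermat_spiral(n_elements: int, spacing: float = 1.0) -> list[tuple[float, float]]:
    """Element centres on a Fermat (Vogel) spiral: r = spacing*sqrt(k), theta = k*golden angle."""
    return [
        (spacing * math.sqrt(k) * math.cos(k * GOLDEN_ANGLE),
         spacing * math.sqrt(k) * math.sin(k * GOLDEN_ANGLE))
        for k in range(n_elements)
    ]


def spi_frame_rate(refresh_hz: float, width: int, height: int,
                   sampling_ratio: float) -> tuple[int, float]:
    """Patterns per image and resulting frame rate when each pattern takes one refresh."""
    patterns = round(width * height * sampling_ratio)
    return patterns, refresh_hz / patterns


print(fermat_spiral(3))
patterns, fps = spi_frame_rate(20_000, 64, 64, 0.0488)
print(patterns, fps)  # 200 patterns per image, hence 100.0 frames per second
```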

Deconvolution is a computational technique in imaging to reduce the blurring effects caused by the point spread function (PSF). In the context of optical coherence tomography (OCT) imaging, traditional deconvolution methods are limited by the time costs of iterative algorithms, and supervised learning approaches face challenges due to the difficulty in obtaining paired pre- and post-convolution datasets. Here we introduce a self-supervised deep-learning framework for real-time OCT image deconvolution. The framework combines denoising pre-processing, blind PSF estimation, and sparse deconvolution to enhance the resolution and contrast of OCT imaging, using only noisy B-scans as input. It has been tested under diverse imaging conditions, demonstrating adaptability to various wavebands and scenarios without requiring experimental ground truth or additional data. We also propose a lightweight deep neural network that achieves high efficiency, enabling millisecond-level inference. Our work demonstrates the potential for real-time deconvolution in OCT devices, thereby enhancing diagnostic and inspection capabilities.
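
For comparison, the classical iterative deconvolution whose run time the text cites as a limitation can be sketched in a few lines. Richardson–Lucy alternately re-blurs the current estimate and corrects it multiplicatively. This minimal 1D sketch assumes a known, odd-length Gaussian PSF and noise-free data. It is the traditional baseline, not the proposed self-supervised network.

```python
import numpy as np


def richardson_lucy_1d(observed: np.ndarray, psf: np.ndarray, n_iter: int = 100,
                       eps: float = 1e-12) -> np.ndarray:
    """Classical Richardson-Lucy deconvolution of a nonnegative 1D signal (odd-length PSF)."""
    psf = psf / psf.sum()
    psf_mirror = psf[::-1]
    estimate = np.full(observed.shape, observed.mean(), dtype=float)
    for _ in range(n_iter):
        reblurred = np.convolve(estimate, psf, mode="same")
        ratio = observed / (reblurred + eps)
        estimate *= np.convolve(ratio, psf_mirror, mode="same")  # multiplicative update
    return estimate


x = np.arange(-7, 8)
psf = np.exp(-x**2 / (2 * 2.0**2))           # Gaussian PSF, sigma = 2 samples
truth = np.zeros(64)
truth[[20, 28]] = 1.0                         # two point reflectors
observed = np.convolve(truth, psf / psf.sum(), mode="same")
restored = richardson_lucy_1d(observed, psf)
print(np.mean((observed - truth) ** 2), np.mean((restored - truth) ** 2))
```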

Describing a scene in language is a challenging multimodal task, as it requires understanding diverse and complex scenes and then transforming them into sentences. Among such tasks, video captioning (VC) has attracted much attention from researchers. For machines, traditional VC follows the “imaging-compression-decoding-and-then-captioning” pipeline, in which compression is pivotal for storage and transmission. However, this pipeline has inherent shortcomings, namely information redundancy, which lowers efficiency, and information loss during sampling for captioning. To address these problems, we propose a novel VC pipeline that generates captions directly from the compressed measurement captured by a snapshot compressive sensing camera, and we dub our model SnapCap. Specifically, thanks to signal simulation, we have access to abundant measurement-video-annotation data pairs for our model. In addition, to better extract language-related visual representations from the compressed measurement, we distill knowledge from videos via a pretrained model, contrastive language-image pretraining (CLIP), whose rich language-vision associations guide the learning of SnapCap. To demonstrate the effectiveness of SnapCap, we conduct experiments on three widely used VC datasets. Both qualitative and quantitative results verify the superiority of our pipeline over conventional VC pipelines.

Wavefront shaping transforms disordered speckles into ordered optical foci through active modulation, offering a promising approach for optical imaging and information delivery. However, practical implementation faces significant challenges, particularly because speckles vary dynamically over time, which necessitates fast wavefront shaping systems. This study presents a coded self-referencing wavefront shaping system capable of fast wavefront measurement and control. By encoding both signal and reference light within a single beam to probe complex media, this method addresses key limitations of previous approaches, such as interference noise in interferometric holography, loss of controllable elements in coaxial interferometry, and the computational burden of non-holographic phase retrieval. Experimentally, we demonstrated optical focusing through complex media, including unfixed multimode fibers and stacked ground glass diffusers. With full-field modulation, the system achieved runtimes of 21.90 and 76.26 ms for 256 and 1024 controllable elements, respectively, corresponding to average per-mode times of 85.54 and 74.47 µs and pushing the system to its hardware limits. The system’s robustness against dynamic scattering was further demonstrated by focusing light through moving diffusers with correlation times as short as 21 ms. These results highlight the potential of this system for real-time applications in optical imaging, communication, and sensing, particularly in complex and dynamic scattering environments.
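
Why controlling more modes matters can be seen from the textbook phase-conjugation result. If the field each SLM mode sends to the target is known, setting each phase to cancel it makes all contributions add in phase. The enhancement over the random-speckle background is then about (π/4)N for phase-only control. This sketch assumes a known, circular-Gaussian random transmission-matrix row and is not the coded self-referencing measurement itself. It also checks the per-mode times implied by the quoted runtimes.

```python
import numpy as np

rng = np.random.default_rng(0)
n_modes = 256

# One row of a random transmission matrix: the field each SLM mode sends to the target pixel.
t_row = (rng.normal(size=n_modes) + 1j * rng.normal(size=n_modes)) / np.sqrt(2)


def target_intensity(row: np.ndarray, slm_phase: np.ndarray) -> float:
    """Intensity at the target when each mode carries unit amplitude and the given phase."""
    return float(np.abs(np.sum(row * np.exp(1j * slm_phase))) ** 2)


# Phase conjugation: cancel each mode's phase so all contributions add in phase.
focused = target_intensity(t_row, -np.angle(t_row))
# Expected intensity for uniformly random SLM phases (the speckle background).
background = float(np.sum(np.abs(t_row) ** 2))
enhancement = focused / background
print(f"enhancement ~ {enhancement:.0f} (theory ~ pi/4 * N = {np.pi / 4 * n_modes:.0f})")

# Per-mode time implied by the runtimes quoted in the text.
for runtime_ms, modes in [(21.90, 256), (76.26, 1024)]:
    print(f"{modes} modes: {runtime_ms * 1e3 / modes:.1f} us per mode")
```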

Light field microscopy captures both the spatial distribution and the propagation direction of light, offering new perspectives for biological research. However, microlens-array-based light field microscopy sacrifices spatial resolution for angular resolution, while aperture-coding-based light field microscopy sacrifices temporal resolution for angular resolution. In this study, we propose differential high-speed aperture-coding light field microscopy for observing dynamic samples. Our method employs a high-speed spatial light modulator (SLM) and a high-speed camera to accelerate coding and image acquisition. Additionally, it uses an undersampling strategy to further enhance temporal resolution without compromising the depth of field (DOF) of the light field results, and no iterative optimization is needed in reconstruction. By incorporating a differential aperture-coding mechanism, we effectively reduce the direct current (DC) background, enhancing the contrast of the reconstructed images. Experimental results demonstrate that our method can capture the dynamics of biological samples at a volume rate of 41 Hz, with an SLM refresh rate of 1340 Hz and a camera frame rate of 1340 frame/s, using a 10× objective lens with a numerical aperture of 0.3. Our approach paves the way for high-spatial-resolution, high-contrast volumetric imaging of dynamic samples.

In this study, we propose a ghost imaging method that penetrates dynamic scattering media using a multi-polarization fusion mutual supervision network (MPFNet). MPFNet processes one-dimensional light intensity signals collected under both linear and circular polarization illumination. Its multi-branch fusion architecture extracts multi-scale features and captures contextual information. Additionally, a multi-branch spatial-channel cross-attention module optimizes the fusion of multi-branch feature information between the encoder and the decoder. Fusing the reconstructions from both polarization states yields object images with significantly higher fidelity to the ground truth. Moreover, by leveraging the underlying physical model and using the collected one-dimensional light intensity signals as supervisory labels, our method requires no pre-training, ensuring robust performance even in challenging, highly scattering environments. Extensive experiments in free-space and underwater environments demonstrate that the proposed method holds significant promise for high-quality ghost imaging through dynamic scattering media.
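
The physical model that lets a network use the one-dimensional bucket signal as its own label is the ghost-imaging forward model. Each bucket value is the sum of one illumination pattern weighted by the object. The classical correlation reconstruction then recovers the object from pattern–bucket covariance. This sketch uses random uniform patterns and a toy square object (both assumptions) and shows only that conventional baseline, not MPFNet.

```python
import numpy as np


def correlation_ghost_image(patterns: np.ndarray, bucket: np.ndarray) -> np.ndarray:
    """Second-order correlation G(x, y) = <(B - <B>)(P(x, y) - <P(x, y)>)> over M shots.

    patterns: (M, H, W) illumination patterns; bucket: (M,) single-pixel signals.
    """
    db = bucket - bucket.mean()
    dp = patterns - patterns.mean(axis=0)
    return np.tensordot(db, dp, axes=(0, 0)) / len(bucket)


rng = np.random.default_rng(3)
obj = np.zeros((16, 16))
obj[5:11, 5:11] = 1.0                                  # transmissive square (toy object)
patterns = rng.random((4000, 16, 16))                  # random speckle-like illumination
bucket = np.einsum("mhw,hw->m", patterns, obj)         # forward model: B_m = sum(P_m * O)
ghost = correlation_ghost_image(patterns, bucket)
print(np.corrcoef(ghost.ravel(), obj.ravel())[0, 1])
```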

Confocal microscopy, an advanced imaging technique that increases optical resolution and contrast, has diverse applications ranging from biomedical imaging to industrial inspection. However, the focused energy on the sample can bleach fluorescent substances and damage illuminated tissue, which hinders the observation of natural processes in microscopic imaging. Here, we propose a photonic timestamped confocal microscopy (PT-Confocal) scheme that reconstructs the image from a limited number of photons per pixel. By reducing the optical flux to the single-photon level and timestamping the emitted photons, we experimentally realize PT-Confocal using only the first 10 fluorescent photons. We obtain high-quality reconstructions by processing the limited photons with maximum-likelihood estimation, discrete wavelet transform, and a deep-learning algorithm. PT-Confocal treats signals as a stream of photons and uses the timestamps carried by a small number of photons to reconstruct spatial properties, demonstrating multi-channel and three-dimensional capability in most biological application scenarios. Our results open a new perspective in ultralow-flux confocal microscopy and pave the way for revealing inaccessible phenomena in delicate biological samples or dim living systems.
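
The maximum-likelihood step has a simple form if photon emission at a pixel is modeled as a Poisson process with rate λ. The likelihood of the first k arrival times is λ^k·exp(−λ·t_k), which gives λ̂ = k/t_k, and (k−1)/t_k is its unbiased variant. In this sketch, that Poisson model, the common time origin, and the toy rate are all assumptions. The estimated rate stands in for pixel brightness, and the wavelet and deep-learning stages are not shown.

```python
import numpy as np


def rate_mle(timestamps: np.ndarray) -> float:
    """MLE of a Poisson emission rate from the first k arrival times (measured from t = 0)."""
    ts = np.sort(np.asarray(timestamps, dtype=float))
    return len(ts) / ts[-1]


def rate_unbiased(timestamps: np.ndarray) -> float:
    """(k - 1) / t_k, an unbiased variant of the estimator above."""
    ts = np.sort(np.asarray(timestamps, dtype=float))
    return (len(ts) - 1) / ts[-1]


rng = np.random.default_rng(4)
true_rate = 5.0  # photons per unit time at this pixel (illustrative)
k = 10           # first 10 photons, as in the text
arrivals = np.cumsum(rng.exponential(1.0 / true_rate, size=(20000, k)), axis=1)
estimates = [rate_unbiased(row) for row in arrivals]
print(np.mean(estimates))
```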

Realizing real-time, highly accurate three-dimensional (3D) imaging of dynamic scenes is a fundamental challenge across various fields, including online monitoring and augmented reality. Traditional phase-shifting profilometry (PSP) and Fourier transform profilometry (FTP) methods struggle to balance accuracy and measurement efficiency, while deep-learning-based 3D imaging approaches fall short in speed and flexibility. To address these challenges, we propose a real-time 3D imaging method based on region of interest (ROI) fringe projection and a lightweight phase-estimation network, in which an ROI fringe projection strategy is adopted to increase the fringe period on the tested surface. A phase-estimation network (PE-Net) is presented to ensure both phase accuracy and inference speed, and a modified heterodyne phase unwrapping method (MHPU) enables flexible phase unwrapping for the final 3D imaging outputs. Experimental results demonstrate that the proposed workflow achieves 3D imaging at 100 frame/s with a root mean square (RMS) error below 0.031 mm, providing a real-time solution with high accuracy, efficiency, and flexibility.
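
For reference, this sketch shows the two classical steps that the workflow builds on. Four-step PSP recovers a wrapped phase with atan2. Standard two-frequency heterodyne unwrapping then uses the beat phase of period T₁T₂/(T₂−T₁) to fix the fringe order. The periods, pixel range, and noise-free fringes are illustrative assumptions. This is the textbook heterodyne method, not the modified MHPU or PE-Net.

```python
import numpy as np


def four_step_phase(frames):
    """Wrapped phase from four fringe images shifted by 0, pi/2, pi, 3pi/2."""
    i0, i1, i2, i3 = frames
    return np.arctan2(i3 - i1, i0 - i2)


def heterodyne_unwrap(phi1, phi2, period1, period2):
    """Unwrap phi1 (shorter period) using its beat with phi2 (period2 > period1)."""
    period_eq = period1 * period2 / (period2 - period1)
    phi_eq = np.mod(phi1 - phi2, 2 * np.pi)  # absolute if the field spans < period_eq
    order = np.round((phi_eq * period_eq / period1 - phi1) / (2 * np.pi))
    return phi1 + 2 * np.pi * order


x = np.arange(1, 100, dtype=float)  # pixel positions; field width < period_eq = 110


def make_frames(period):
    true_phase = 2 * np.pi * x / period
    return true_phase, [0.5 + 0.4 * np.cos(true_phase + n * np.pi / 2) for n in range(4)]


true1, frames1 = make_frames(10.0)
_, frames2 = make_frames(11.0)
unwrapped = heterodyne_unwrap(four_step_phase(frames1), four_step_phase(frames2), 10.0, 11.0)
print(np.max(np.abs(unwrapped - true1)))
```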

Endoscopic imaging is crucial for minimally invasive observation of biological tissues. Notably, integrating graded-index (GRIN) waveguides with convolutional neural networks (CNNs) has shown promise for enhancing endoscopic image quality, thanks to the synergy between hardware-based dispersion suppression and software-based image restoration. However, conventional CNNs are typically ineffective against the diverse intrinsic distortions of real-life imaging systems, limiting their use to rectifying extrinsic distortions. This issue is particularly urgent in wide-spectrum GRIN endoscopes, where random variations in equivalent optical length lead to catastrophic imaging distortion. To address this problem, we propose a novel network architecture, the classified-cascaded CNN (CC-CNN), which comprises a virtual-real discrimination network and a physical-aberration correction network, each tailored to a distinct physical source of distortion using prior knowledge. By aligning its processing logic with physical reality, the CC-CNN achieves high-fidelity correction of intrinsic distortions in GRIN systems, even with limited training data. Our experiments demonstrate that complex distortions from multiple random-length GRIN systems can be effectively corrected by a single CC-CNN. This research offers insights into next-generation GRIN-based endoscopic systems and highlights the untapped potential of CC-CNNs designed under the guidance of categorized physical models.

Targeting image and video compression on resource-limited platforms, we propose block-modulating video compression (BMVC), a codec whose ultralow-cost encoder can be implemented on mobile platforms with limited power and computational resources. Accordingly, we also develop two types of BMVC decoders based on deep neural networks. The first decoder is based on the plug-and-play algorithm and is flexible with respect to the compression ratio. The second is a memory-efficient end-to-end convolutional neural network aimed at real-time decoding. Extensive results on high-definition images and videos demonstrate the superior performance of the proposed codec and its robustness against bit quantization.
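
One plausible reading of a "block-modulating" encoder, in the spirit of snapshot compressive imaging, is: split the frame into non-overlapping blocks, multiply each block by its own binary mask, and sum all modulated blocks into a single block-sized measurement, so that the compression ratio equals the number of blocks. The sketch below assumes exactly that; the mask design, block size, and the name `bmvc_encode` are hypothetical and may differ from the paper. The encoder uses only elementwise multiplications and additions, which is consistent with the low-cost claim.

```python
import numpy as np

def bmvc_encode(image, block, masks):
    """Modulate each non-overlapping block x block tile with its own mask and sum the tiles.

    image: (H, W) array with H and W divisible by `block`.
    masks: (num_tiles, block, block) array, e.g. random 0/1 patterns.
    Returns a (block, block) measurement; compression ratio = num_tiles.
    """
    h, w = image.shape
    if h % block or w % block:
        raise ValueError("image size must be a multiple of the block size")
    tiles = (image.reshape(h // block, block, w // block, block)
                  .swapaxes(1, 2)
                  .reshape(-1, block, block))   # tiles in row-major order
    if masks.shape != tiles.shape:
        raise ValueError("need exactly one mask per tile")
    return (tiles * masks).sum(axis=0)

rng = np.random.default_rng(0)
frame = rng.random((64, 64))
masks = rng.integers(0, 2, size=(16, 16, 16))   # 16 tiles of 16 x 16 -> ratio 16
measurement = bmvc_encode(frame, 16, masks)
```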

Pathological examination is essential for cancer diagnosis. Frozen sectioning is the gold standard for intraoperative tissue assessment, but it is hampered by laborious processing steps and often yields slides of inadequate quality. To address these limitations, we developed a deep-learning-assisted ultraviolet light-emitting diode (UV-LED) microscope for label-free, slide-free tissue imaging. Using the UV-based light-sheet (UV-LS) imaging mode as the learning target, we employ a weakly supervised network to generate high-contrast UV-LED images. With our approach, contrast-enhanced UV-LED (CE-LED) images are acquired at 47 s/cm², ∼25 times faster than with the UV-LS system. The results show that this approach significantly enhances UV-LED image quality, revealing essential tissue structures in cancerous samples. The resulting CE-LED offers a low-cost, nondestructive, and high-throughput alternative histological imaging technique for intraoperative cancer detection.
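
The throughput figures can be made concrete with simple arithmetic. The CE-LED rate (47 s/cm²) and the ∼25× speedup come from the text above; the UV-LS rate is only implied by them (about 1,175 s/cm²), and the 2 cm² specimen area is a hypothetical example.

```python
CE_LED_S_PER_CM2 = 47.0                         # stated acquisition rate for CE-LED images
SPEEDUP = 25.0                                  # stated approximate speedup over UV-LS
UV_LS_S_PER_CM2 = CE_LED_S_PER_CM2 * SPEEDUP    # implied UV-LS rate (approximate)

def scan_minutes(area_cm2, s_per_cm2):
    """Acquisition time in minutes for a specimen of the given area."""
    return area_cm2 * s_per_cm2 / 60.0

area = 2.0  # hypothetical specimen area in cm^2
print(f"CE-LED: {scan_minutes(area, CE_LED_S_PER_CM2):.1f} min")  # 94 s -> 1.6 min
print(f"UV-LS:  {scan_minutes(area, UV_LS_S_PER_CM2):.1f} min")   # 2350 s -> 39.2 min
```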

Fourier ptychography (FP) is an advanced computational imaging technique that offers high resolution and a large field of view for microscopy. By illuminating the sample at varied angles in a microscope setup, FP performs phase retrieval and synthetic aperture construction without the need for interferometry. FP's principles can also be applied to far-field scenarios, enabling super-resolution remote sensing through camera scanning. However, successful FP reconstruction critically requires data redundancy in the Fourier domain, which necessitates dozens or hundreds of raw images to reach a converged solution. Here, we introduce a macroscopic Fourier ptychographic imaging system with high temporal resolution, termed illumination-multiplexed snapshot synthetic aperture imaging (IMSS-SAI). In IMSS-SAI, we employ a 5×5 monochromatic camera array to acquire low-resolution object images under three-wavelength illumination, capturing a ptychogram dataset with a high space-bandwidth product in a single snapshot. Using a state-multiplexed ptychographic algorithm, we separate distinct coherent states from their incoherent summations, enhancing the Fourier spectrum overlap for ptychographic reconstruction. We validate the snapshot capability by imaging both dynamic events and static targets. The experimental results demonstrate that IMSS-SAI achieves a fourfold resolution enhancement in a single shot, whereas conventional macroscopic FP requires hundreds of consecutive image recordings. The proposed IMSS-SAI system thus enables resolution enhancement at the camera's frame rate, facilitating real-time imaging of macroscopic targets with diffuse reflectance properties.
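
Fourier-domain redundancy is usually quantified as the overlap between neighboring pupils in the object spectrum. The sketch below computes the overlap fraction of two identical circular pupils using the standard circle-intersection formula; it illustrates the geometry only and is not the IMSS-SAI reconstruction. The function name is hypothetical.

```python
import math

def pupil_overlap_fraction(radius, spacing):
    """Fraction of a circular pupil's area shared with an identical pupil
    whose center is shifted by `spacing` (same units as `radius`)."""
    if spacing >= 2 * radius:
        return 0.0
    r, d = radius, spacing
    lens = 2 * r**2 * math.acos(d / (2 * r)) - (d / 2) * math.sqrt(4 * r**2 - d**2)
    return lens / (math.pi * r**2)

for ratio in (0.0, 0.5, 1.0, 2.0):   # center spacing expressed in pupil radii
    print(ratio, round(pupil_overlap_fraction(1.0, ratio), 3))
```

Under a far-field (Fraunhofer) assumption with cameras at distance z, a lens of diameter D samples a spectral disk of radius D/(2λz), and a camera pitch p shifts that disk by p/(λz). Because adjacent lenses cannot sit closer than their diameter (p ≥ D), the spacing is at least two radii, so neighboring same-wavelength pupils in a snapshot array do not overlap; this is consistent with the need for the illumination multiplexing described above.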

Far-field super-resolution microscopy has unraveled the molecular machinery of biological systems that tolerate fluorescence labeling. Stimulated Raman scattering (SRS) microscopy, by contrast, provides chemically selective, high-speed, label-free imaging by exploiting the intrinsic vibrational properties of specimens. Although various schemes for far-field super-resolution Raman microscopy have been proposed, a technique compatible with imaging opaque biological specimens has so far remained elusive. Here, we demonstrate a single-pixel-based scheme, combined with robust structured illumination, that enables super-resolution in SRS microscopy. The method is straightforward to implement and provides label-free super-resolution imaging of thick specimens, paving the way for probing complex biological systems in which exogenous labeling is challenging.
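
The single-pixel principle itself is simple to sketch: project a sequence of known patterns, record one detector value per pattern, and invert. The example below uses generic Hadamard patterns and an ideal noiseless detector; it is not the paper's SRS scheme and omits the structured-illumination step that provides super-resolution. The function names are hypothetical.

```python
import numpy as np

def sylvester_hadamard(n):
    """n x n Hadamard matrix (entries +/-1) by Sylvester's construction; n must be a power of 2."""
    if n < 1 or n & (n - 1):
        raise ValueError("n must be a power of two")
    h = np.array([[1]])
    while h.shape[0] < n:
        h = np.kron(h, np.array([[1, 1], [1, -1]]))
    return h

def single_pixel_measure(obj, patterns):
    # Each row of `patterns` is one illumination pattern; the detector sums pattern * object.
    return patterns @ obj.ravel()

def single_pixel_reconstruct(y, patterns, shape):
    # Hadamard rows are orthogonal (H @ H.T = n * I), so the inverse is H.T / n.
    return (patterns.T @ y / patterns.shape[0]).reshape(shape)

obj = np.arange(16, dtype=float).reshape(4, 4)   # toy 4 x 4 object
H = sylvester_hadamard(obj.size)
recovered = single_pixel_reconstruct(single_pixel_measure(obj, H), H, obj.shape)
```

In hardware, ±1 patterns are commonly realized as pairs of complementary 0/1 patterns whose detector signals are subtracted.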

The single-photon sensitivity and picosecond time resolution of single-photon light detection and ranging (LiDAR) provide full-waveform measurements from which the three-dimensional (3D) profile of a target can be retrieved and separated from foreground clutter. This capability has made single-photon LiDAR a solution for imaging through obscurants, camouflage nets, and semitransparent materials. However, obstruction by clutter and the limited pixel counts of single-photon detector arrays still make high-quality imaging challenging. Here, we demonstrate a single-photon array LiDAR system combined with tailored computational algorithms for high-resolution 3D imaging through camouflage nets. For static targets, we develop a 3D sub-voxel scanning approach along with a photon-efficient deconvolution algorithm. Using this approach, we demonstrate 3D imaging through camouflage nets with a 3× improvement in spatial resolution and a 7.5× improvement in depth resolution compared with the inherent system resolution. For moving targets, we propose a motion compensation algorithm that mitigates the net's obstructive effects, achieving video-rate imaging of camouflaged scenes at 20 frame/s. More importantly, we demonstrate 3D imaging of complex scenes in various outdoor scenarios and evaluate the advantages of single-photon LiDAR over a visible-light camera and a mid-wave infrared (MWIR) camera. The results point the way forward for high-resolution, real-time 3D imaging of multi-depth scenes.
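
The full-waveform idea can be illustrated with a single pixel's photon-timing histogram: each surface along the line of sight (net, then target) produces its own peak, and a peak at round-trip time t corresponds to range c·t/2. The sketch below uses a noiseless synthetic histogram and a simple local-maximum search; the bin width, peak positions, threshold, and function name are hypothetical, and this is not the paper's photon-efficient deconvolution.

```python
import numpy as np

C = 299_792_458.0  # speed of light (m/s)

def histogram_returns(counts, bin_width_s, threshold):
    """Ranges (m) of local maxima in a photon-timing histogram that exceed `threshold`."""
    peaks = [i for i in range(1, len(counts) - 1)
             if counts[i] > threshold and counts[i] >= counts[i - 1] and counts[i] > counts[i + 1]]
    return [C * (i * bin_width_s) / 2 for i in peaks]   # round-trip time -> one-way range

bins = np.arange(400)
counts = (1.0                                             # flat background
          + 50 * np.exp(-0.5 * ((bins - 200) / 3) ** 2)   # partial return from the net
          + 30 * np.exp(-0.5 * ((bins - 260) / 3) ** 2))  # return from the target behind it
ranges = histogram_returns(counts, 100e-12, threshold=5.0)  # 100 ps bins
net_range, target_range = ranges[0], ranges[-1]
```

Taking the last strong return as the target is the simplest possible rule; real through-net imaging must also cope with Poisson noise and partially occluded pixels.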

Optical fiber bundles frequently serve as crucial components in flexible miniature endoscopes, transmitting images directly from end to end for medical and industrial applications. Each core usually acts as a single pixel, so image resolution is limited by the core size and core spacing. We propose a method that exploits the hidden information in the pattern within each core to overcome this limitation, recovering high-dimensional light field information and additional features of the original image, including edges, texture, and color. Intra-core patterns are mainly related to the spatial angle of the captured light rays and the shape of the core. A convolutional neural network is used to accelerate the extraction of in-core features containing the light field information of the whole scene, to convert these in-core features into real image details, and to enhance invisible texture features and colorize fiber bundle images.
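
The difference between treating each core as one pixel and keeping its intra-core pattern can be shown with a toy bundle image. The sketch assumes core centers are already known (for example, from a calibration image) and uses a fixed square window per core; `core_features` and all values are hypothetical. Two cores with identical mean intensity can still carry different intra-core patterns, which is the kind of information the method exploits.

```python
import numpy as np

def core_features(raw, centers, half):
    """Per-core mean (one pixel per core) and the (2*half+1) x (2*half+1) intra-core patch."""
    means, patches = [], []
    for r, c in centers:
        patch = raw[r - half:r + half + 1, c - half:c + half + 1]
        means.append(patch.mean())
        patches.append(patch)
    return np.array(means), np.stack(patches)

raw = np.zeros((10, 10))
raw[2, 1] = 9.0   # core centered at (2, 2): light lands on its left side
raw[2, 8] = 9.0   # core centered at (2, 7): light lands on its right side
means, patches = core_features(raw, [(2, 2), (2, 7)], half=1)
```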

Structured illumination microscopy (SIM) imposes no special requirements on the fluorescent dyes used for sample labeling and improves resolution up to twofold beyond the optical diffraction limit with low phototoxicity, which makes it well suited to dynamic observation of live samples. However, the traditional SIM algorithm is prone to artifacts because it requires a high signal-to-noise ratio (SNR), and existing deep-learning SIM algorithms still leave room to improve imaging speed. Here, we introduce a deep-learning-based, video-level, high-fidelity super-resolution SIM reconstruction method, termed video-level deep-learning SIM (VDL-SIM), which reaches imaging speeds of up to 47 frame/s and provides a favorable observing experience for users. In addition, VDL-SIM robustly reconstructs sample details under a low light dose, which greatly reduces damage to the sample during imaging. VDL-SIM images faster than existing deep-learning SIM algorithms and achieves higher fidelity at low SNR than existing SIM algorithms, an advantage that is most pronounced relative to traditional algorithms. These characteristics make VDL-SIM a useful video-level super-resolution imaging alternative to conventional methods under challenging imaging conditions.
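
For context, conventional 2D-SIM typically records nine raw frames per super-resolved image: sinusoidal illumination at three orientations, each at three phase shifts. The sketch below generates such patterns, I(x, y) = 1 + m·cos(2π(kx·x + ky·y) + φ); the period, angles, modulation depth, and function name are hypothetical and are not taken from VDL-SIM.

```python
import numpy as np

def sim_patterns(n, period_px, angles_deg=(0.0, 60.0, 120.0), n_phases=3, modulation=1.0):
    """Stack of len(angles_deg) * n_phases sinusoidal illumination patterns, each n x n."""
    y, x = np.mgrid[0:n, 0:n]
    patterns = []
    for theta in np.deg2rad(angles_deg):
        kx, ky = np.cos(theta) / period_px, np.sin(theta) / period_px   # cycles per pixel
        for p in range(n_phases):
            phi = 2 * np.pi * p / n_phases                               # equal phase steps
            patterns.append(1.0 + modulation * np.cos(2 * np.pi * (kx * x + ky * y) + phi))
    return np.stack(patterns)

pats = sim_patterns(64, period_px=8.0)
# Equal phase steps cancel the cosine term, so each orientation sums to uniform illumination.
uniform = pats.reshape(3, 3, 64, 64).sum(axis=1)
```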