High-speed 3D topography measurement technology is important for research and application in virtual and augmented reality, intelligent manufacturing testing, material performance testing, and other fields that require 3D modeling and in-depth analysis of dynamic scenes and processes. With the increasing demand for high-speed dynamic scene measurement and the rapid development of measurement equipment, researchers have gradually shifted their attention from 3D measurements of simple static scenes to measurements of complex dynamic scenes. Based on the requirements of measurement projects, this study reviews research progress in the hardware and algorithms of high-speed 3D measurement technology based on fringe projection. It then compares the advantages and disadvantages of existing technologies in different categories, suggests how to select a method for different measurement tasks, and summarizes the challenges and potential development trends of high-speed 3D measurement technology based on fringe projection.

Computed tomography (CT) is widely used in clinical diagnosis because it visualizes the internal cross-sectional structure of objects well. Because X-ray radiation is harmful to the human body, the dose delivered to patients should be reduced by lowering either the X-ray intensity or the number of scan views. However, low-dose CT images reconstructed from sub-sampled projection data suffer from severe streak artifacts and noise. In recent years, deep learning techniques have developed rapidly, and convolutional neural networks have shown great advantages in image representation and feature extraction, helping to achieve fast, high-quality CT reconstruction from sparse-view or limited-angle projection data. This paper focuses on sparse-view and limited-angle CT reconstruction techniques and reviews the latest research progress of deep learning in CT reconstruction in five directions: image post-processing, sinogram domain pre-processing, joint processing of dual domain data, iterative reconstruction algorithms, and end-to-end mapping reconstruction. Finally, we analyze the technical characteristics, advantages, and limitations of existing deep-learning-based sparse-view and limited-angle CT reconstruction methods and discuss possible future research directions to address these challenges.

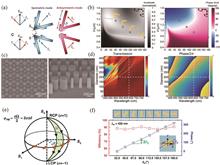

Three-dimensional (3D) imaging enables a detailed numerical description of physical space and is one of the most critical technologies in consumer electronics, autonomous driving, machine vision, and virtual reality. Existing 3D imaging is limited by the physical mechanisms of traditional refractive and diffractive optical elements, so it is difficult to meet performance requirements such as miniaturization, integration, multiple functions, large field of view, large numerical aperture, and high spatial resolution. A metasurface, an intelligent surface composed of subwavelength nano-antenna arrays, can realize artificial control of the amplitude, phase, polarization, and other parameters of the light field. It therefore offers advantages such as small size, high space-bandwidth product, high efficiency, multiple functions, and large field of view, showing potential as a new generation of optical elements for 3D imaging. In this paper, the progress of metasurface-based 3D imaging technology is reviewed. Based on an analysis of the physical mechanism and application advantages of the metasurface, the application and performance of metasurface-based 3D imaging technologies such as structured light, the time-of-flight method, light field imaging, and point spread function engineering are introduced in detail. The challenges and future development directions of metasurface-based 3D imaging technology are summarized and discussed.

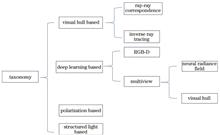

Three-dimensional (3D) reconstruction technology has considerable application prospects in industrial fields such as autonomous driving and the metaverse. The 3D reconstruction of transparent rigid bodies is challenging owing to their complex imaging laws. This review examines the 3D reconstruction technology of transparent rigid bodies, focuses on the methods based on optimized visual hull and deep learning, discusses the principle and different optimization methods of visual hull reconstruction, describes the RGB-D depth completion and multiview reconstruction based on deep learning, presents the current datasets of transparent objects, and finally discusses the possible development directions of the 3D reconstruction technology.

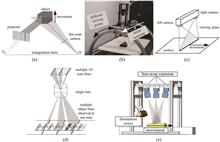

Three-dimensional (3D) shape measurements based on high-density point clouds are currently replacing conventional discrete coordinate measurements and are thus a new development trend in the field of geometric measurement. In addition to their narrow-sense manufacturing applications, the multi-spatial-resolution characteristics of high-density point clouds provide a broad range of applications in the operational monitoring of mechanical equipment or large engineering projects such as high-speed trains, aircraft, and tunnels. However, unlike the 3D shape measurement problem that occurs under static conditions in manufacturing, measuring objects in service imposes new requirements, including in-motion, high-speed, continuous extension of point clouds and high-resolution acquisition. This new problem of extendable surface measurement poses a major challenge to conventional measurement methods. One-dimensional image sensors, represented by line-scan CCD and CMOS, can collect images rapidly and continuously in motion, providing excellent hardware potential for 3D shape measurements that must be high-speed, continuous, and high-density. One-dimensional image sensors have developed steadily and attracted considerable interest in recent years. In this paper, the key technologies for high-resolution shape measurement of extendable surfaces based on one-dimensional images, including parameter calibration, one-dimensional image matching, multi-sensor layout and synchronization, and motion error compensation, are reviewed, and their possible development directions are discussed.

Micro-nano particles, such as micro-nano bubbles, colloidal particles, and microorganisms, exist widely in daily life and the natural environment. Observing the dynamic behavior of such particles and characterizing them accurately and quantitatively can shed light on many core issues in life sciences, medicine, materials science, and environmental science. This paper introduces a digital holographic microscopy technology that can perform real-time, large depth-of-field, label-free, high-precision three-dimensional tracking of multiple particles. Additionally, this paper expounds the working principle and applications of the method and finally discusses its prospects for further development and the challenges faced by similar technologies.

With the rapid development of the precision instrument manufacturing and semiconductor processing industries, the observation and measurement of micro-structure surface profiles has become an important direction of scientific research. Laser scanning confocal microscopy has become popular for three-dimensional (3D) surface topography measurement because of its high resolution, high signal-to-noise ratio, and excellent optical sectioning ability. In this paper, we first introduce the basic principle of confocal microscopy. Various confocal microscopic methods used in 3D surface topography measurement are then reviewed, including different scanning methods, different detection methods, and different spectral-based confocal imaging methods. Finally, the future development of confocal microscopy is discussed.

Drivers usually use in-car displays and portable electronic devices to obtain navigation information. However, shifting focus between near and far distances distracts drivers from the driving environment and affects driving safety. With the advancement of automobile intelligence, the augmented reality head-up display (AR-HUD) projects virtual information at a distance. As a result, virtual information is superimposed on the physical driving environment, which considerably improves driving safety. The present study reviews the development of AR-HUD technology, including single-plane head-up display, multi-plane head-up display, and 3D head-up display. The key optical parameters used to evaluate HUD displays are introduced. The working principles, state-of-the-art performance, and critical challenges of the various technologies are highlighted. Finally, the potential future development of AR-HUD technology is discussed.

Mobile electronic devices are playing an increasingly important role in people's daily lives. Thin and light portable 3D displays have attracted wide attention. Based on the application requirements of mobile information terminals, this paper introduces research progress in four portable 3D display technologies, namely directional backlighting display, compressive light field display, integral imaging display, and directional light field display, and analyzes how to use the display bandwidth effectively according to the application scenarios of portable devices. Finally, the future development of portable 3D display is discussed.

Binocular stereo vision simulates the human visual system to perceive the environment in three dimensions. The parallax between the two images is obtained by stereo matching the rectified left and right images, and the scene depth is then calculated based on the triangulation principle. This area has been a research hotspot in computer vision and has made significant progress in the past few decades. Traditional stereo matching methods use hand-designed features that perform poorly in weakly textured, repetitively textured, and occluded areas. Recently, stereo matching methods based on deep learning have progressed significantly, showing strong application potential. In this review, we conduct a comprehensive survey of this developing field, discuss the advantages and limitations of the different methods, introduce the binocular products currently on the market, and assess the research and application prospects in this field.
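
The triangulation step mentioned above can be illustrated with the standard pinhole relation for a rectified stereo pair, Z = f·B/d. The following is a minimal sketch, not taken from the reviewed work; the function name and the numeric values (focal length, baseline, disparity) are hypothetical.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth of a point from its disparity in a rectified stereo pair.

    Uses the pinhole triangulation relation Z = f * B / d, with the focal
    length f and disparity d in pixels and the baseline B in metres.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px


# Hypothetical values: 1000 px focal length, 0.1 m baseline, 20 px disparity.
example_depth_m = depth_from_disparity(20.0, 1000.0, 0.1)
```

Because depth is inversely proportional to disparity, a fixed matching error of a fraction of a pixel costs more depth accuracy for distant points than for near ones.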

Photometric stereo three-dimensional (3D) reconstruction is a hot topic in the fields of machine vision and photometry. This method is widely used because of its simple equipment, low cost, and high resolution. With the rapid advancement of artificial intelligence and deep learning technology in recent years, the development of photometric stereo technology has entered a new era. This paper reviews the progress in the application of deep learning technology to photometric stereo 3D reconstruction. First, the research background and the basic principles of photometric 3D reconstruction are introduced. Next, various types of photometric stereo 3D reconstruction methods are summarized. Then, the commonly used synthetic and real-captured datasets are briefly introduced. Further, a detailed description of the applications of deep learning technology in photometric stereo 3D reconstruction is provided, wherein the physical model-based photometric stereo technology is transformed into a “data-driven” technology to achieve high prediction accuracy. Finally, the paper analyzes and summarizes the challenges and opportunities for future research in the application of deep learning technology to photometric stereo reconstruction.

The fingertip is one of the parts of the human body from which measurements are most conveniently collected, and it has been a focus of biometric identification research. Because external fingertip biometrics, such as fingerprints, are vulnerable to environmental interference and easy to forge, internal fingertip biometrics have begun to receive attention. Optical coherence tomography (OCT) enables non-invasive three-dimensional imaging of the internal structure of a fingertip. From the measured volume data, secure and stable internal fingertip biometric features, such as internal fingerprints, sweat pores, and sweat glands, have been obtained. Therefore, in the past two decades, the use of OCT has received substantial research attention. This paper presents research progress on OCT in fingertip biometrics acquisition and its applications in biometric identification and anti-counterfeiting. Finally, an analysis of the current technical limitations and development prospects is presented. The findings can provide theoretical support for the sustainable development of this research field.

High-accuracy panoramic three-dimensional (3D) dynamic deformation measurements are of considerable significance for studying the mechanical properties of large or ultra-large structures. As a simple, non-contact, and high-precision full-field deformation measurement technology, three-dimensional digital image correlation (3D-DIC) can provide an effective measurement method for large-scale structural deformation measurement. This paper introduces the basic principles of 3D-DIC measurement and presents key technical advancements in the panoramic deformation measurement of large structures with multiple cameras. These advancements include large-scale speckle fabrication, 3D calibration of large field-of-view, coordinate unification of multi-camera systems, and real-time calibration of camera extrinsic parameters. Furthermore, this paper presents practical applications of high-accuracy 3D dynamic deformation measurements for large structures in civil engineering and aerospace engineering fields, such as the assessment of the cassette structure in seismic shaking table tests, panoramic high-speed deformation measurement of suspension cable dome structures in progressive collapse tests, and panoramic deformation measurement of cabin structures of launch vehicles in load tests. Using high-accuracy 3D dynamic deformation measurements for large structures provides a reliable experimental method for seismic performance analysis, mechanical modeling of large structures, and in-depth study of failure mechanisms.

Speckle projection profilometry (SPP) encodes depth information of a scene by projecting a single random speckle pattern and establishes the global correspondences between stereo images using speckle matching technology, thereby achieving a single-shot three-dimensional (3D) reconstruction. However, it is still difficult to encode a globally unique feature for every pixel in an entire measurement space by projecting only a speckle pattern due to the measured object surface with complex reflection characteristics and the perspective differences between stereo cameras. The resulting mismatching problem leads to the low measurement accuracy of SPP, making it difficult to meet the high-precision measurement requirements of some industrial scenarios. In this study, we propose a speckle structured-light-based 3D imaging technique and its sensor design method using a vertical-cavity surface-emitting laser (VCSEL) projection array. The proposed 3D structured-light sensor integrates three miniaturized speckle projection modules to project a set of speckle patterns with different spatial positions, spatially and temporally encoding the depth information of the measured scene efficiently. In addition, a coarse-to-fine spatial-temporal speckle correlation algorithm is proposed to improve the measurement accuracy and reconstruct the fine shapes of complex objects. Experiments, including precision analysis, 3D model scanning, detection of small target metal parts, and measurement of complex scenes, indicate that the proposed 3D structured-light sensor can realize high-precision 3D measurements at a long distance over a large field of view, which can further be applied to industrial scenarios such as part sorting and robot stacking.

The projection speed of a digital light processing projector is low, which limits the three-dimensional (3D) measurement speed of structured light. To solve this problem, a fringe-structured-light 3D measurement system based on a high-speed light emitting diode (LED) array is proposed, using an LED array with megahertz switching speed as the projection light source. Specifically, the high-speed LED array projects a binary fringe pattern, a sinusoidal fringe is obtained on the surface of the measured 3D object by slightly defocusing the lens of the projection system, and the object's height is calculated. The object is then reconstructed by combining the phase-shifting and multifrequency heterodyne methods. As an experiment, the proposed system was used for 3D measurement of a stepped object with a rotation speed of 3000 r/min at a projection speed of 21000 frame/s. The proposed system can measure a dynamic object at a speed of 6000 Hz, and the measurement accuracy reaches 0.1 mm, which realizes 3D shape reconstruction of high-speed moving objects. In addition, it shows the feasibility of using a high-speed LED array as a projection light source to improve 3D measurement speed to megahertz.

Optical microscopy offers low sample damage and high-specificity imaging and is an indispensable imaging technique in many fields, such as biomedicine, life science, and material chemistry. However, the conventional optical microscope uses parallel light to illuminate the entire sample, so it cannot effectively distinguish the in-focus signal from the defocused background and cannot perform three-dimensional optical sectioning. Hence, a sparse structured illumination three-dimensional sectioning microscopy (SSI-3DSM) technology based on resonant scanning is proposed herein. The sparse structured illumination is rapidly generated by resonantly scanning the focused spot, and three-dimensional optical sectioning of the sample is realized using a multistep phase shift to determine and eliminate the background noise. Compared with scanning wide-field imaging, the proposed method improves the axial resolution by 1.3 times and the signal-to-background ratio by 12 times. Moreover, the proposed method demonstrates stable performance, is cost effective, and can be easily commercialized. It can be combined with super-resolution microscopy imaging techniques such as structured illumination microscopy and single-molecule localization to further improve lateral resolution.

This paper presents a novel 3D measurement method for a light field camera (LFC) in which 3D information of object space is encoded by a microlens array (MLA). The light ray corresponding to each pixel of the LFC is calibrated. Once the matching points from at least two subviews are located with sub-pixel accuracy, the 3D coordinates can be calculated optimally by intersecting the light rays of these points, which are matched through phase coding. Moreover, the proposed method obtains high-resolution results that exceed the subview resolution owing to the virtual continuous phase search strategy. Finally, we combine the LFC and coaxial projection to solve the 3D data loss caused by shadowing and occlusion. Experimental results verify the feasibility of the proposed method, and the measurement error is about 30 μm in a depth range of 60 mm.

Modern industrial part processing can now input 3D computer-aided design models directly into numerical control systems without the need for two-dimensional marked blueprints. This advancement of processing technology has introduced new challenges to workpiece measurement and quality evaluation methods. Profile quality evaluation based on high-precision, dense 3D point cloud models of workpieces has received attention, particularly for the manufacturing of parts with complex structures. However, the high-precision 3D measurement of parts with complex structures presents two challenges. The first is the very high surface finish of metal parts after processing. Actively projected patterns cause strong reflections on the metal surface, resulting in blinding of the camera or in interreflections between complex structures, the latter of which results in aliasing. The second is the subsurface scattering of projected light owing to the translucency of composite materials. These factors induce measurement failure. The existing structured light-active vision 3D reconstruction technology cannot solve the aforementioned problems. Thus, a complex light separation model based on a single-pixel imaging method is established to address the problem of 3D reconstruction under complex illumination without spraying. This method realizes the separation of direct and complex illumination and resolves the aforementioned difficulties. To tackle the problems of the single-pixel imaging method, including its low efficiency and speed and its limited practicability in real-world measurement scenarios, a parallel single-pixel imaging method based on a local region extension method is proposed. Experiments demonstrate that the parallel single-pixel imaging method can solve the problem of 3D reconstruction under the influence of interreflections and subsurface scattering that may occur in practical measurement situations.

Multiscreen splicing can realize integral imaging light field 3D displays with various sizes; however, the 3D source generation process is complex. This study proposes a 3D source rendering platform that can provide sources for the multiscreen splicing realized integral imaging light field 3D display. The obtained 3D images can be reproduced accurately without misalignment. In the process of the 3D source rendering, first, the parameters such as the resolution of an elemental image array are calculated. The Open Graphics Library (OpenGL) is employed to render an initial elemental image array. Second, an elemental image array for the splicing realized integral imaging light field 3D display is obtained by splitting the initial elemental image array, using the projection transform, and splicing the sub-elemental image array. The 3D source rendering program is packaged as a visual 3D source rendering platform based on Qt (Application Development Framework), which supports the input of multiple formats of 3D models, such as .obj and .fbx, as well as the output of an elemental image array with resolution of 16K and above. The experimental results demonstrate that the elemental image array generated using the 3D source rendering platform can realize an accurate 3D image on an integral imaging light field 3D display that is spliced using dual 8K resolution screens. The proposed 3D source rendering platform can meet the 3D source generation needs of the multiscreen splicing realized integral imaging light field 3D display, which can be used during exhibitions and in other fields.

Because of features such as continuous viewpoints and no need for visual aids, integral imaging three-dimensional (3D) display technology has gained widespread acceptance; however, its common phenomenon of visual crosstalk seriously affects the stereoscopic viewing effect. In this study, the effective pixel region of each elemental image that corresponds to the stereoscopic area was examined, and the corresponding crosstalk-pixel distributions were deduced. Then, a crosstalk-free integral imaging 3D display structure with a full stereo viewing area was proposed, and an accurately designed mask array with a gradient aperture was created. On one hand, the mask array transmits the effective pixel light, allowing the light to be imaged onto the main view area and all the levels of the stereoscopic view area, thereby retaining all the stereo viewing areas of the integral imaging system. On the other hand, it blocks the outgoing crosstalk-pixel light, thereby removing crosstalk between adjacent view areas and achieving a completely crosstalk-free integral imaging 3D display over the full stereoscopic view area. The proposed device is straightforward to build, and it will help popularize the integral imaging 3D display technology.

Holographic technology is crucial for realizing a true three-dimensional (3D) display of space suspension. The spatial light modulator (SLM) is currently the only device capable of real-time dynamic holographic true 3D image projection. However, the lack of pixels and resolution limits its application in the field of space suspended true 3D displays. This study examines the real 3D display of space suspension with high resolution and low noise realized using multi-SLM splicing. First, the high resolution hologram with the 360° viewing angle of a 3D object was calculated by Fresnel tomography combined with the spatial coordinate transformation technology. Then, each hologram was divided into four pieces of the image with the same resolution, which were loaded onto four SLMs spliced in an array. After filtering the light beams other than the first order, a complete holographic 3D real image with high information capacity and resolution was reconstructed. Finally, the ultrasonic atomized medium was used for carrying the 3D real image, realizing real-time dynamic space suspension true 3D display. Additionally, this study investigates the noise suppression for the reconstructed image using the time-averaged method, and the experimental results prove that this method can effectively improve the quality of the spatially suspended holographic display image.

In this study, we propose a lensless coded edge enhancement imaging technology based on a spiral zone aperture; the system comprises a spiral zone aperture and an image sensor. A captured image is reconstructed by backpropagation. In backpropagation, isotropic edge enhancement imaging can be realized by taking the intensity value, whereas anisotropic edge enhancement imaging can be realized by taking the real or imaginary part. The anisotropic edge enhancement imaging achieved by taking the real part is derived theoretically and verified, and an initial phase factor is introduced to realize anisotropic edge enhancement imaging with selectable directions. Our numerical simulations and experimental results agree with the theoretical analysis, and a quantitative comparative analysis is performed for the edge enhancement reconstruction results obtained with lensless imaging systems using Fresnel and spiral zone apertures, respectively. The results verify that a spiral zone aperture is more suitable for edge enhancement imaging. The proposed technology can be applied to intelligent recognition, defect detection, and virtual reality tasks.

A laser velocimetry method based on a single-photon array camera is proposed here. This approach can measure the lateral and radial velocities of a target simultaneously at single-photon echo intensity. An indoor array velocimetry lidar system is built to confirm the feasibility of array time-correlated single-photon counting technology for measuring the lateral and radial velocities of moving targets. Background noise in the space and time dimensions is removed using signal preprocessing. The target's time-varying three-dimensional spatial coordinates are identified through centroid and peak detection, and the target's lateral and radial velocities are estimated by least-squares linear fitting of the data. The results demonstrate that the best speed measurement performance is tied to the choice of frame accumulation number. This method can measure the velocity of long-distance moving targets in poor light conditions, and it is also expected to yield a target velocity image in the future, giving technical support for the identification of long-distance complex moving targets.
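
The final estimation step, least-squares linear fitting of position against time, can be sketched as follows. This is an illustrative example only: the function name, frame times, and coordinate samples are hypothetical and are not the measured data of the described system.

```python
def fit_velocity(times_s, positions_m):
    """Slope (m/s) of the least-squares line through (time, position) samples."""
    n = len(times_s)
    if n < 2 or n != len(positions_m):
        raise ValueError("need at least two matching time/position samples")
    t_mean = sum(times_s) / n
    x_mean = sum(positions_m) / n
    s_tt = sum((t - t_mean) ** 2 for t in times_s)
    if s_tt == 0.0:
        raise ValueError("all time stamps are identical")
    s_tx = sum((t - t_mean) * (x - x_mean)
               for t, x in zip(times_s, positions_m))
    return s_tx / s_tt


# Hypothetical target coordinates after centroid and peak detection.
frame_times_s = [0.0, 0.1, 0.2, 0.3, 0.4]
radial_m = [10.0, 10.2, 10.4, 10.6, 10.8]      # moving away at 2 m/s
lateral_m = [0.0, -0.05, -0.10, -0.15, -0.20]  # drifting at -0.5 m/s

radial_velocity = fit_velocity(frame_times_s, radial_m)
lateral_velocity = fit_velocity(frame_times_s, lateral_m)
```

Fitting each coordinate independently treats the motion as uniform over the fitting window, which is the assumption behind a straight-line fit.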

The phase-shifting method is a powerful tool for analyzing the phase information of fringe patterns. This paper presents the fundamental principle of the newly developed spatiotemporal phase-shifting method (ST-PSM) and its applications in non-contact three-dimensional shape measurement. The simulation results show that ST-PSM can significantly reduce random noise, and eliminate the influence of nonlinear response and small dynamic range of image sensor on the measurement results. The experimental results show that ST-PSM can perform stable non-contact shape measurement under extremely low exposure conditions.
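
For context, the conventional temporal N-step phase-shifting calculation (not the ST-PSM variant, whose details are not given here) recovers the wrapped phase at one pixel from N fringe images shifted by 2π/N. The sketch below assumes the intensity model I_k = A + B·cos(φ + 2πk/N); the function names and the simulated values are hypothetical.

```python
import math


def wrapped_phase(intensities):
    """Wrapped phase from N equally spaced phase-shifted samples (N >= 3).

    Assumes I_k = A + B * cos(phi + 2*pi*k/N) for k = 0..N-1.
    """
    n = len(intensities)
    if n < 3:
        raise ValueError("at least three phase steps are required")
    s = sum(i_k * math.sin(2.0 * math.pi * k / n)
            for k, i_k in enumerate(intensities))
    c = sum(i_k * math.cos(2.0 * math.pi * k / n)
            for k, i_k in enumerate(intensities))
    # The sine sum equals -(N/2) * B * sin(phi); the cosine sum equals
    # (N/2) * B * cos(phi), so the background A and modulation B cancel.
    return math.atan2(-s, c)


def simulate_pixel(phase, steps=4, background=0.5, modulation=0.4):
    """Ideal noise-free samples of one pixel under the assumed model."""
    return [background + modulation * math.cos(phase + 2.0 * math.pi * k / steps)
            for k in range(steps)]


true_phase = 1.0
recovered_phase = wrapped_phase(simulate_pixel(true_phase))
```

The result lies in (−π, π], so a separate unwrapping step is still needed before the phase can be converted to height.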

The phase recovery and dephasing of single-frame structured light images are crucial in the field of three-dimensional (3D) imaging. Theoretically, short-time Fourier transform (STFT) can efficiently complete phase recovery under the condition of selecting appropriate parameters; however, the prerequisite of "appropriate parameters" is often challenging to achieve due to the lack of prior information. Herein, a single-frame 3D imaging algorithm of structured light field based on adaptive STFT is proposed using a structured light field system to take full advantage of the characteristics of light field imaging. The suggested technique fuses the structured light field image from three angles of texture, instantaneous frequency, and depth estimation information and further divides the light field image into several regions that meet the STFT constraint. The associated STFT parameters are estimated for each zone using the instantaneous frequency, and then the relative phase is recovered. Next, the phase separation method of the structured light field is used to achieve 3D imaging. The experimental results show that the proposed adaptive STFT algorithm can successfully accomplish the 3D imaging of the measured object.

Three-dimensional (3D) imaging technology obtains massive points (MPs) of a surface in a very short time. In this study, we recognize points on 3D edge contour lines (ECLs) from MPs of a surface. First, the position features of the points on the ECLs, which are called edge contour points (ECPs), are analyzed, and it is proposed to detect ECPs from the vertical section lines (VSLs) of the ECLs. Then, the position of an ECP needs to be calculated based on the middle point of the chamfered arc (MPCA) on a VSL. Considering the distribution character of points on the CA (PsCA), three methods are proposed here to recognize the MPCA: parabola fitting for its vertex method, calculating the accumulated normal angle (AcNA) of each PsCA for the point whose AcNA is closest to the half central angle of the CA, and calculating the approximate arc length (AAL) of each PsCA for the point whose AAL is closest to the half arc length of the CA. Finally, the effectiveness and accuracy of the three methods are verified on several ECLs.
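
The first of the three methods, parabola fitting for the vertex, can be illustrated with a generic least-squares fit of y = a·x² + b·x + c to the points of one section line. This is a sketch under stated assumptions rather than the authors' implementation: the helper names and the sample points are hypothetical, and the points are assumed to be expressed in the 2D plane of the vertical section line.

```python
def solve_linear(matrix, rhs):
    """Solve a small dense system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    m = [row[:] + [value] for row, value in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("system is singular or ill-conditioned")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(m[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def parabola_vertex(xs, ys):
    """Vertex (x, y) of the least-squares parabola y = a*x^2 + b*x + c."""
    basis = [[x * x, x, 1.0] for x in xs]
    # Normal equations (A^T A) p = A^T y for p = [a, b, c].
    ata = [[sum(r[i] * r[j] for r in basis) for j in range(3)] for i in range(3)]
    aty = [sum(r[i] * y for r, y in zip(basis, ys)) for i in range(3)]
    a, b, c = solve_linear(ata, aty)
    if a == 0.0:
        raise ValueError("points are collinear; no vertex exists")
    return -b / (2.0 * a), c - b * b / (4.0 * a)


# Hypothetical section-line points sampled from y = -(x - 1.5)^2 + 2.
section_x = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
section_y = [-(x - 1.5) ** 2 + 2.0 for x in section_x]
vertex_x, vertex_y = parabola_vertex(section_x, section_y)
```

A parabola only approximates a circular arc near its apex, so a fit of this kind is most meaningful when the selected points are roughly symmetric about the middle of the chamfer.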

This paper proposes an objective evaluation model of stereo image visual perception based on convolutional neural network (CNN) and support vector regression (SVR) to solve the issue of multidimensional influencing factors for stereo images and improve the accuracy of prediction results. In this proposed model, the plane saliency map using color and the disparity map based on differences are combined, and the potential salient discomfort regions of visual perception are obtained using threshold segmentation. Then, global features, such as contrast, color, and structural complexity, are extracted using feature extraction along with the local features, such as disparity, texture, and spatial frequency. Finally, the objective evaluation model of multifeature visual perception is constructed by combining CNN and SVR to obtain the final objective prediction value. Experimental results show that the Pearson linear correlation coefficient and Spearman's rank correlation coefficient of the proposed method are higher than 0.87 and 0.83, respectively. In addition, compared with other existing methods, the objective evaluation model proposed in this paper is better on the public dataset, and the prediction results have higher consistency with the subjective evaluation results.

An oscillating harmonic error occurs between the distance measured by an indirect time-of-flight (ITOF) camera and the real distance because of nonideal factors in the optical waveform of the laser transmitter and the tap response of the imaging sensor. This harmonic error degrades the accuracy of distance measurement. This paper analyzes the causes of harmonic error in ITOF cameras and clarifies the relationship between the harmonic error period, the ranging period, and the number of pixel taps. Based on trigonometric series fitting, this work proposes a method that corrects such harmonic errors using a short calibration range and a large calibration step, which improves the calibration efficiency. The effectiveness of the proposed method is verified through ranging experiments with a 66.67 MHz 3-tap ITOF camera. The experimental results show that the average distance measurement accuracy of the principal point pixel is 2.5787 mm, while the average plane fitting error in the plane distance measurement is 4.3876 mm. These results show that the proposed method can effectively reduce the harmonic errors in distance measurements using ITOF cameras.
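
The series fitting mentioned above can be sketched as an ordinary least-squares fit of a truncated sine/cosine series to calibration samples. This is only a minimal sketch under stated assumptions: the error is modeled as a function of the measured distance, the error period `period` is passed in as a known parameter (the relationship derived in the paper is not reproduced here), and all names and numbers are hypothetical synthetic values rather than the paper's data.

```python
import math

def design_row(d, period, order):
    """Basis values [1, cos(w), sin(w), cos(2w), sin(2w), ...] at distance d."""
    row = [1.0]
    for k in range(1, order + 1):
        w = 2.0 * math.pi * k * d / period
        row += [math.cos(w), math.sin(w)]
    return row

def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x

def fit_harmonic_error(distances, errors, period, order=1):
    """Least-squares series coefficients via the normal equations."""
    rows = [design_row(d, period, order) for d in distances]
    n = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(n)] for i in range(n)]
    atb = [sum(r[i] * e for r, e in zip(rows, errors)) for i in range(n)]
    return solve(ata, atb)

def predict_error(d, coeffs, period):
    """Evaluate the fitted series at distance d."""
    order = (len(coeffs) - 1) // 2
    return sum(c * v for c, v in zip(coeffs, design_row(d, period, order)))

# Synthetic calibration: 10 samples, 0.05 m apart, over one assumed error period.
period = 0.5                      # metres, hypothetical
true = [0.002, 0.004, -0.003]     # offset, cosine and sine amplitudes in metres
distances = [0.5 + 0.05 * i for i in range(10)]
errors = [predict_error(d, true, period) for d in distances]
coeffs = fit_harmonic_error(distances, errors, period, order=1)
corrected = 0.73 - predict_error(0.73, coeffs, period)
```

Because the model is periodic, a fit obtained over a single period can be evaluated at any distance, which is consistent with the idea of calibrating over a short range with a coarse step.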

Binocular camera calibration is the foundation of stereo vision research, and its accuracy is the key to achieving precise vision measurement. Camera calibration relies on image corner extraction, but in real applications the detected corner points are often inaccurate because external influences blur the acquired images, which degrades calibration accuracy. To solve the problem of low-quality corner detection at the feature level, an end-to-end algorithm based on super-resolution subpixel corner detection is proposed. First, the blur kernel of a low-resolution image is estimated using the blind super-resolution module, and its features are fused to reconstruct a high-resolution version of the image. Subsequently, the subpixel positions of the corner points are obtained. Finally, a binocular camera is calibrated with high accuracy and tested via ranging experiments. Experimental results show that the proposed subpixel corner detection method based on super-resolution has advantages in real scenarios.

Three-dimensional (3D) reconstruction of asphalt pavement textures based on image processing has the advantages of being fast, comprehensive, and high-resolution. However, the concentrated color distribution of asphalt pavements and the lack of prominent feature points lead to low accuracy in 3D texture detection. To address this problem, we propose a binocular vision technique based on multiscale image fusion. First, we implemented multiscale decomposition of the image via weighted least squares filtering. Next, we fused the multiscale image information with a cross-scale aggregation model to reconstruct the asphalt pavement in 3D, improving the accuracy of the binocular reconstruction. The proposed method was compared with a laser scanner using regional texture parameters. The results show that the regional texture parameters obtained by the proposed method are close to those obtained by the laser scanner.

Single-photon counting imaging technology has demonstrated potential for applications in faint target detection, laser remote sensing, and autonomous driving. To explore its use for obtaining target information in more dimensions, a target attitude acquisition method is proposed and verified. The single-photon counting three-dimensional (3D) images (depth images) of the target in different attitudes are built into a database as a priori information. By calculating the correlation coefficient between each database image and the actual single-photon counting image (unknown attitude) of the target, the most relevant attitude in the database is selected as the actual attitude. A single-photon array detector is used to establish the experimental system, and the target is illuminated by a diverging laser. The target depth images in the database are constructed for attitudes between -60° and 40° at 20° increments. Combining the database depth images with target depth images at attitudes of -45° and 25°, the proposed method is used to estimate the target attitude and to verify its accuracy. For these two attitudes, depth images with photon counts of 10, 50, and 100 are produced to explore how the similarity between the actual attitude of the target and the corresponding attitude in the database depends on the photon count. Multi-axis rotations of the target at 15° and 20° were performed to explore the feasibility of the proposed method when the target attitude changes about multiple axes. To explore the influence of background noise on the estimation success rate, the background noise was varied and 3D imaging of the target was conducted at signal photon counts to background photon counts ratios (SBRs) of 8.13, 4.83, 3.21, and 0.72. Using a wooden man as the target, a database is established at 30° increments, and the wooden man is imaged at -20° and 20° to verify the feasibility of the proposed method for attitude estimation of complex targets. Experimental results show that the proposed method can successfully estimate the actual attitude of the target. Meanwhile, the correlation between the actual attitude of the target and the corresponding attitude in the database increases with the photon count. A high SBR is also conducive to accurate estimation of the target attitude.
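
The database lookup described above can be sketched as follows, assuming the correlation coefficient is the Pearson coefficient computed over all pixels of two equally sized depth images (the abstract does not say which coefficient is used). The function names and the tiny 2x3 depth images are hypothetical illustration data, not measurements from the experiment.

```python
import math

def pearson(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

def estimate_attitude(database, measured):
    """Return the database attitude whose depth image correlates best
    with the measured depth image, together with all scores."""
    flat = [v for row in measured for v in row]
    scores = {
        angle: pearson([v for row in image for v in row], flat)
        for angle, image in database.items()
    }
    best = max(scores, key=scores.get)
    return best, scores

# Hypothetical database: depth images (arbitrary units) keyed by attitude in degrees.
database = {
    -20: [[3.0, 2.0, 1.0], [3.0, 2.0, 1.0]],
    0: [[2.0, 2.1, 2.0], [2.0, 1.9, 2.0]],
    20: [[1.0, 2.0, 3.0], [1.0, 2.0, 3.0]],
}
# Noisy measurement of the target at an unknown attitude.
measured = [[1.1, 1.9, 3.2], [0.9, 2.1, 2.8]]
best, scores = estimate_attitude(database, measured)
```

Since the estimate can only be one of the database attitudes, the angular increment of the database bounds the resolution of this approach.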

This paper proposes an improved shape-from-focus (SFF) algorithm based on wavelet transform to realize rapid 3D surface reconstruction of laser damage via online microscopic imaging. The depth is retrieved point by point through the 3D focus evaluation function, thereby reconstructing the 3D surface of the laser damage on optical elements. Based on the proposed algorithm, a 3D surface reconstruction experiment was performed on the damaged area of a silver mirror surface using the online microscopic imaging device for laser damage. The results show that the definition ratio, sensitivity, and flat fluctuation of the improved algorithm are 2.608, 0.131, and 0.356, respectively, and that the relative error is 1.56% for the identified area with a maximum depth of 169.8 μm. Compared with the traditional method, the definition ratio is increased by approximately 100%, the sensitivity is increased by approximately three times, the relative error of depth measurement is reduced to one-tenth, and the flat fluctuation is about 1.1 times that of the conventional method. The proposed algorithm has already been employed in the development of an online microscopic damage detection system to achieve rapid online reconstruction and measurement of 3D laser damage topography.
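
The point-by-point depth retrieval in SFF can be illustrated with a small sketch: for each image block, a focus measure is evaluated across the focal stack and the stage height of the sharpest image is taken as the depth. The abstract does not specify the wavelet or the focus evaluation function, so the one-level Haar detail energy used here is an assumed stand-in, and the function names, toy images, and heights are hypothetical.

```python
def haar_detail_energy(img, r, c):
    """Energy of the three one-level Haar detail coefficients
    of the 2x2 block whose top-left pixel is (r, c)."""
    a, b = img[r][c], img[r][c + 1]
    d, e = img[r + 1][c], img[r + 1][c + 1]
    lh = (a - b + d - e) / 2.0   # horizontal detail
    hl = (a + b - d - e) / 2.0   # vertical detail
    hh = (a - b - d + e) / 2.0   # diagonal detail
    return lh * lh + hl * hl + hh * hh

def depth_from_focus(stack, heights):
    """For every 2x2 block, return the height of the stack image
    with the largest focus measure."""
    rows, cols = len(stack[0]), len(stack[0][0])
    depth = []
    for r in range(0, rows - 1, 2):
        line = []
        for c in range(0, cols - 1, 2):
            scores = [haar_detail_energy(img, r, c) for img in stack]
            line.append(heights[scores.index(max(scores))])
        depth.append(line)
    return depth

# Toy focal stack: the left block is sharpest in image 0, the right in image 2.
stack = [
    [[0, 9, 4, 5], [9, 0, 5, 4]],
    [[3, 6, 3, 6], [6, 3, 6, 3]],
    [[4, 5, 0, 9], [5, 4, 9, 0]],
]
heights = [0.0, 10.0, 20.0]  # hypothetical stage heights in micrometres
depth = depth_from_focus(stack, heights)
```

Taking the discrete maximum limits the depth resolution to the stage step; practical SFF implementations often interpolate around the peak, which is omitted here for brevity.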

In low-light conditions, the single-photon light detection and ranging (Lidar) technique based on time-correlated single-photon counting (TCSPC) is suited for collecting a three-dimensional (3D) profile of the target. In this paper, we present a rapid 3D reconstruction approach for single-photon Lidar with low signal-to-background ratio (SBR) and few photons, based on a combination of short-duration range gate selection, photon accumulation of surrounding pixels, and a photon efficiency algorithm. We achieve the best noise filtering and 3D image reconstruction by choosing the optimal combined order of these simple methods. Experiments were carried out to validate the various depth estimation algorithms using simulated data and single-photon avalanche diode (SPAD) array data under varying SBR. The experimental results demonstrate that our proposed method can achieve high-quality 3D reconstruction with a faster processing speed compared to the existing algorithms. The proposed technology will encourage the use of single-photon Lidar to suit practical needs such as quick and noise-tolerant 3D imaging.
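
Two of the building blocks named above, accumulating photons from surrounding pixels and selecting a short range gate, can be sketched as below. This is a minimal sketch under stated assumptions, not the authors' pipeline: the order of the steps (pool first, then gate), the sliding-window gate search, and the mean-time depth estimate are all assumptions, and the names and photon timestamps are hypothetical.

```python
C = 299_792_458.0  # speed of light in m/s

def select_gate(timestamps_ps, gate_ps, step_ps):
    """Return the start (in ps) of the gate that contains the most photons.
    Candidate gates start on a grid of step_ps; ties keep the earliest gate."""
    best_start, best_count = 0, -1
    for start in range(0, max(timestamps_ps) + 1, step_ps):
        count = sum(1 for t in timestamps_ps if start <= t < start + gate_ps)
        if count > best_count:
            best_start, best_count = start, count
    return best_start

def estimate_depth(pixel_ps, neighbour_ps, gate_ps, step_ps):
    """Pool photons from a pixel and its neighbours, gate them,
    and convert the mean in-gate time of flight to a distance in metres."""
    pooled = list(pixel_ps) + [t for n in neighbour_ps for t in n]
    start = select_gate(pooled, gate_ps, step_ps)
    inside = [t for t in pooled if start <= t < start + gate_ps]
    tof_seconds = (sum(inside) / len(inside)) * 1e-12
    return start, C * tof_seconds / 2.0

# Hypothetical photon arrival times in picoseconds: one signal photon and
# one background photon per pixel, with the signal clustered near 66.7 ns.
pixel = [66700, 12000]
neighbours = [[66500, 90000], [66900, 30000]]
gate_start, depth = estimate_depth(pixel, neighbours, gate_ps=1000, step_ps=500)
```

Pooling before gating lets a pixel with a single signal photon borrow evidence from its neighbours, at the cost of some spatial smoothing near depth edges.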

A dual-frequency digital Moiré measurement method (DFDM) is proposed for the three-dimensional (3D) shape measurement of an object. The high- and low-frequency fringes are modulated separately along orthogonal directions using different carrier frequencies before being projected onto the measured object. After collecting and demodulating the composite fringe, the digital π phase shift is used to remove the DC component of the demodulated fringes, resulting in high-precision Moiré fringes for calculating the wrapped phase. The unwrapping of the high-frequency wrapped phase is guided by the low-frequency phase to reconstruct the surface of the measured object more faithfully. Compared with existing single-shot digital Moiré profilometry, DFDM effectively removes the DC component of the fringe and calculates the phase more accurately.
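
Two steps of this description lend themselves to a short sketch: cancelling the DC term with a π-shifted copy of the fringe, and using the low-frequency phase to pick the fringe order of the high-frequency wrapped phase. The sketch assumes an ideal fringe model I = a + b cos(φ), a low-frequency phase that is already unambiguous, and a known frequency ratio; the function names and sample values are hypothetical, and the modulation and demodulation of the composite fringe are not covered.

```python
import math

def remove_dc(fringe, fringe_pi):
    """Half the difference between a fringe and its pi-shifted copy.
    With I = a + b*cos(phi) and I_pi = a - b*cos(phi), this leaves b*cos(phi)."""
    return [(p - q) / 2.0 for p, q in zip(fringe, fringe_pi)]

def wrap(phase):
    """Wrap a phase value into the interval (-pi, pi]."""
    return math.atan2(math.sin(phase), math.cos(phase))

def unwrap_with_low_freq(phi_high, phi_low, ratio):
    """Unwrap the high-frequency wrapped phase using the low-frequency phase.
    ratio is f_high / f_low; the fringe order is the nearest integer k."""
    unwrapped = []
    for ph, pl in zip(phi_high, phi_low):
        k = round((ratio * pl - ph) / (2.0 * math.pi))
        unwrapped.append(ph + 2.0 * math.pi * k)
    return unwrapped

# Synthetic check with a frequency ratio of 8 (hypothetical).
ratio = 8
true_high = [0.5, 7.0, 20.0, 40.0]            # absolute high-frequency phase
phi_low = [p / ratio for p in true_high]      # unambiguous low-frequency phase
phi_high = [wrap(p) for p in true_high]       # what the measurement provides
recovered = unwrap_with_low_freq(phi_high, phi_low, ratio)

# DC removal on an ideal fringe with background 100 and modulation 50.
fringe = [100 + 50 * math.cos(p) for p in true_high]
fringe_pi = [100 + 50 * math.cos(p + math.pi) for p in true_high]
ac_part = remove_dc(fringe, fringe_pi)
```

The rounding step tolerates noise in the low-frequency phase only while the scaled error stays below π, so a larger frequency ratio gives finer phase resolution but less margin for a correct fringe order.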