Objective Against a complex ground background, a photoelectric stabilizing device with a single uncooled infrared channel recognizes and tracks targets with low reliability. Methods To address this, the temperature difference between typical ground targets and the background, and the influence of the background environment on infrared imaging, are studied first. On this basis, a dual-channel photoelectric stabilizing device with independent infrared (IR) and television (TV) channels is designed. The feasibility of the composite stabilized tracking device is analyzed by comparing its imaging performance with that of a single-infrared stabilized tracking device. Results and Discussions The background environment influences infrared imaging as follows. In the morning and evening, the temperature difference between the target and the ground is small (less than 5 K), and the infrared image suffers from low target contrast and a low signal-to-noise ratio. Around midday, the target is completely submerged in the background in the infrared image. In the composite stabilized tracking device with infrared and TV channels, the TV channel has little influence on the imaging quality of the infrared channel (the actual light transmission of the infrared channel is reduced by less than 5% in relative terms). 
When ground-background infrared radiation noise is strong, the TV channel image remains clear, and the infrared channel image is clear when the target temperature difference is sufficient, which verifies the feasibility of the overall scheme of the infrared-TV stabilized tracking device. Conclusions The IR and TV channels of the dual-channel photoelectric stabilizing device complement each other well, which can significantly expand the application range of the stabilized tracking device.

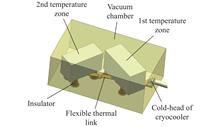

Objective Adjusting the temperature of the 2nd temperature zone of a cryogenic optical system by electrical heating increases the heat load on the cryocooler, raises its demand for electric power and heat dissipation resources, and can even reduce its dependability in space applications. When a cryogenic optical system in a space payload operates on orbit, where electric power and heat dissipation resources on the satellite are limited, a method that accurately controls the temperature of the 2nd temperature zone without adding heat load to the cryogenic optical system is urgently needed. Methods To address these problems, a thermal resistance control method is proposed in this study to adjust the temperature of the 2nd temperature zone of the cryogenic optical system. Based on a dual-temperature-zone model of the cryogenic optical system (Fig.1), a mechanical structure for thermal resistance control, consisting of a screw, a screw sleeve and a heat insulated pad, was designed (Fig.2). A one-dimensional (1D) mathematical model was developed from this mechanical structure and used to investigate the heat balance temperature and heat leakage of the 2nd temperature zone. The effects of material (TC4 and 4J36) and of geometric parameters (length of the screw, height and thickness of the heat insulated pad, and height of the screw sleeve) were investigated, and different vacuum chamber temperatures were considered. Results and Discussions The experimental results show that the temperature fluctuation of the 2nd temperature zone stays within ±0.5 ℃ for 4 consecutive hours, so thermal equilibrium can be considered reached. Different environmental temperatures and different thermal resistances lead to different heat balance temperatures of the 2nd temperature zone, which verifies the feasibility of the thermal resistance method (Fig.5). 
The computed results of 1D mathematical model are in good agreement with the experimental data. The maximum deviation between the computed results and the experiment results is only 1.6% (Fig.6-7), which proved the reliability of the calculation method in this paper. Users can obtain more parameters of thermal resistance based on different temperatures of 2nd temperature zone by using above mathematical model. When the thermal resistance is taken constant, the temperature of 2nd temperature zone increases with increasing the temperature of vacuum chamber, which is result of increasing heat leakage caused by increasing environmental temperature. When the temperature of vacuum chamber is taken constant, the temperature of 2nd temperature zone increases with increasing length of screw, decreasing thickness of heat insulated pad and decreasing thermal conductivity, but heat leakage decreases. When the temperature of vacuum chamber is 253.15 K, the adjustment range of the temperature of 2nd temperature zone is 111.1-185.2 K, and the corresponding heat leakage decreases from 1.75 W to 1.1 W. When the temperature of vacuum chamber is 293.15 K, the adjustment range of the temperature of 2nd temperature zone is 120.9-219.4 K, and the corresponding heat leakage decreases from 2.58 W to 1.57 W (Fig.8-Fig.11). According to the above results, there is no extra heat load on cryogenic optical system with thermal resistance control method. The effect of heat load on 2nd temperature zone with the electrical heating control method and thermal resistance control method is calculated and compared, while the temperature of vacuum chamber is 293.15 K. With the raising of target temperature of 2nd temperature zone, heat load on 2nd temperature zone decreases with thermal resistance control method, but increases with electrical heating control method. 
When the target temperature of the 2nd temperature zone is around 220 K, the heat load on the 2nd temperature zone is 16.8 W with the electrical heating control method, nearly 11 times that with the thermal resistance control method (Fig.12). Conclusions Users can select the thermal resistance parameters they need according to the specified vacuum chamber temperature and 2nd temperature zone temperature. Compared with the electrical heating control method, the thermal resistance control method reduces the cryocooler's demand for electric power and heat dissipation resources. This study may provide a reference for the thermal design of similar cryogenic optical systems in space applications.
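
The qualitative trend reported above (a higher zone temperature together with a lower heat leakage) can be illustrated with a lumped two-resistance analogy. This is only a sketch and not the 1D model of the paper: it assumes the 2nd temperature zone is a single node that receives heat from the vacuum chamber through a fixed resistance `r_leak` and passes it to a colder zone through the adjustable resistance `r_adjust`. The cold-zone temperature and all resistance values are hypothetical.

```python
def balance_temperature(t_env, t_cold, r_leak, r_adjust):
    """Steady-state temperature (K) of a node between two thermal resistances (K/W)."""
    # Heat in equals heat out: (t_env - T) / r_leak == (T - t_cold) / r_adjust.
    return (t_env * r_adjust + t_cold * r_leak) / (r_adjust + r_leak)


def heat_leak(t_env, t_zone, r_leak):
    """Heat (W) flowing from the environment into the zone."""
    return (t_env - t_zone) / r_leak


T_ENV = 293.15   # vacuum chamber temperature quoted in the abstract, K
T_COLD = 80.0    # hypothetical cold-zone temperature, K
R_LEAK = 70.0    # hypothetical environment-to-zone resistance, K/W

for r_adjust in (10.0, 20.0, 40.0, 80.0):   # hypothetical adjustable resistances, K/W
    t_zone = balance_temperature(T_ENV, T_COLD, R_LEAK, r_adjust)
    q = heat_leak(T_ENV, t_zone, R_LEAK)
    print(f"R_adjust={r_adjust:5.1f} K/W  T_zone={t_zone:6.1f} K  leak={q:4.2f} W")
```

In this analogy, raising the adjustable resistance warms the zone and shrinks the temperature difference to the chamber, so the heat that must be removed falls rather than rises.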

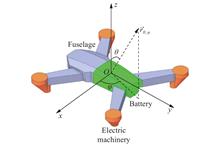

Objective The wide use of small rotorcraft brings new challenges to the safety of high-value targets. At present, there are many researches on the infrared radiation characteristics of fixed-wing aircraft. The optical characteristics of rotorcraft are mainly reflected in infrared/visible light fusion and infrared small target detection, etc. However, there are few theoretical studies on the characteristics of temperature distribution, infrared radiation spectrum and space distribution of rotorcraft. In order to detect and defend small rotorcraft effectively, it is of great significance to study the spatial distribution characteristics of infrared radiation of small rotorcraft.Methods In order to accurately grasp the infrared radiation characteristics of rotorcraft, firstly the geometric model of a small rotorcraft is established (Fig.1), and the model is divided into unstructured grids to generate surface grids (Fig.2), and the area and direction vector of each surface grid are determined. Secondly, temperature measurement and infrared radiation image acquisition are carried out for the rotorcraft at stationary, no-load flight for 10 min and 100 g load flight for 10 min respectively (Fig.3). Finally, based on the Monte Carlo method (Fig.5), and the absorption and reflection of light beams by radiation grid elements are considered, the distribution and law of the middle and far infrared radiation intensity of the rotorcraft under different working conditions and different detection directions are calculated.Results and Discussions According to three different working conditions, the spatial distribution of infrared radiation intensity of the rotorcraft in the band of 3-5 μm and 8-14 μm is calculated (Fig.6). At the zenith angles θ of 78°, 101°, and 160°, the radiation intensity of the rotorcraft presents the peak value. The peak value is the highest at about 78°, and the maximum values are 1.13, 1.28, 1.35 W/sr respectively. 
When the zenith angle θ is 0°, 90° and 180°, the radiation intensity is small. In the horizontal direction, the radiation intensity of the rotorcraft is relatively strong when the circumferential angle φ is about 90° and 270°. The infrared radiation intensity distribution curves of the rotorcraft when stationary and after 10 min of flight with a 100 g load are compared and analyzed (Fig.7-8). When φ is 90°, the radiation intensity increases by about 56.1% in the 3-5 μm band and by about 19.5% in the 8-14 μm band. When θ is 78.3°, the radiation intensity increases by about 53.7% in the 3-5 μm band and by about 19.5% in the 8-14 μm band. Thus, a longer flight time and a heavier load significantly enhance the infrared radiation of the rotorcraft. Conclusions A geometric model of a small rotorcraft is established, and flights and data collection are carried out to obtain the temperature field distribution on the surface of the rotorcraft. On this basis, the Monte Carlo method is used to simulate the radiative transfer of rays, and the mid- and far-infrared radiation intensity distribution of the rotorcraft in different detection directions under different working conditions is obtained. The following conclusions are drawn. The rotor motors and battery are the main infrared radiation sources of the rotorcraft. The longer the flight time and the larger the load, the larger the output power of the motors and battery, the more waste heat is released, and the stronger the infrared radiation of the rotorcraft. The radiation intensity of the rotorcraft peaks at zenith angles θ of 78°, 101° and 160°, and is small at θ of 0°, 90° and 180°. The radiation intensity of the small rotorcraft is about 0.05 W/sr in the 3-5 μm band and about 0.8 W/sr in the 8-14 μm band. 
The radiation intensity of the rotorcraft is mainly concentrated in the long-wave infrared band.
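
Why the long-wave band dominates for a body near ambient temperature can be seen from Planck's law alone. The sketch below integrates blackbody spectral radiance over the two bands named above. It is not the Monte Carlo method of the paper: it assumes an ideal blackbody surface, and the 300 K temperature is an assumption rather than a measured value from the abstract.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def spectral_radiance(wavelength_m, temp_k):
    """Planck spectral radiance of a blackbody, W / (m^2 sr m)."""
    x = H * C / (wavelength_m * K_B * temp_k)
    return 2.0 * H * C ** 2 / wavelength_m ** 5 / math.expm1(x)


def band_radiance(lo_um, hi_um, temp_k, steps=2000):
    """Midpoint-rule integral of the Planck radiance over a band, W / (m^2 sr)."""
    lo, hi = lo_um * 1e-6, hi_um * 1e-6
    width = (hi - lo) / steps
    total = sum(spectral_radiance(lo + (i + 0.5) * width, temp_k) for i in range(steps))
    return total * width


TEMP_K = 300.0  # assumed surface temperature, not a value from the abstract
mwir = band_radiance(3.0, 5.0, TEMP_K)
lwir = band_radiance(8.0, 14.0, TEMP_K)
print(f"3-5 um: {mwir:.2f} W/(m^2 sr), 8-14 um: {lwir:.2f} W/(m^2 sr), ratio {lwir / mwir:.1f}")
```

Hotter components such as motors shift relatively more energy into the 3-5 μm band, which is consistent with the larger percentage increase reported for that band after loaded flight.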

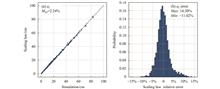

ObjectiveLaser beams propagating in turbulent atmosphere suffer from various factors such as diffraction, turbulence, aberration and jitter, which broaden the beam radius and significantly affect laser applications including laser propagation, communication and imaging. In recent years, researchers have made efforts to develop fast and high-precision methods for calculating far-field effective radius. Scaling laws and artificial intelligence models are commonly used for fast evaluation of laser beam propagation. However, comparison between these two methods is rarely reported. In this paper, the fast calculation method of far-field radius is studied, and the performance of the different models is compared in many aspects, hoping to provide reference for the selection and application of fast calculation methods for evaluation of laser atmospheric propagation.MethodsFirstly, numerical simulation is carried out by using wave optical program, and the scaling index of the scaling law is determined by genetic algorithm. Secondly, three AI (artificial intelligence) models, MLP, LightGBM and FTT, are selected to evaluate the effective radius of the far field, Additionally, two specific improvements are made to the FTT model (Fig.2). Then, the hyperparameters of AI models are determined by using TPE algorithm (Fig.3-4). Finally, three artificial intelligence models are constructed to evaluate the far-field effective radius (Fig.5).Results and DiscussionsWhen the data is divided into 70% training set and 30% test set, the accuracy of the scaling law model and the three AI models are compared in the test set (Fig.1, Fig.6). The results show that the accuracy of the three artificial intelligence models is higher than that of the scaling law model, and the MFTT model has the highest accuracy with mean relative error of 1.36%. The effect of training set sample size on the evaluation accuracy of 4 models was studied (Fig.7). 
From the perspective of changing trend, the scaling law model is relatively stable, while the three AI models change sharply, which indicates that the accuracy of the AI model is more dependent on the amount of modeling data. When the training set ratio is greater than 8%, the accuracy of MFTT begins to be higher than that of the scaling law model, and the accuracy of MFTT is consistently higher than that of the other two models in the three AI models.The generalization ability of each model is compared under different propagation scenarios (Fig.8). The results show that the scaling law model and MFTT model have strong generalization ability, while the LightGBM and MLP model have poor generalization ability. The evaluation speed of each model is compared (Fig.9). The results show that all the models can evaluate the effective radius of the far field faster, in which the scaling law is the fastest.ConclusionsThe far-field effective radius of Gaussian beam propagating through turbulent atmosphere has received much attention in engineering. This paper studies its fast calculation method. Based on the wave optics program, simulation of Gaussian beam propagating through turbulent atmosphere in multiple scenes is carried out, the scaling law model and three artificial intelligence models (LightGBM, MLP, MFTT) are constructed. The accuracy, generalization ability, the influence of the sample size and the speed of calculation of the scaling law model and three artificial intelligence models are compared. The results show that the accuracy of four models is influenced by the sample size of the training set. The accuracy of the scaling law model is the highest under the small sample size, and the accuracy of the MFTT model is the highest under the large sample size. 
When the data are divided into a 70% training set and a 30% test set, the mean relative error of the MFTT model on the test set is 1.36%. MFTT has the best generalization ability, and the scaling law model has the fastest calculation speed. This paper is intended to provide a reference for the selection and application of fast calculation methods for evaluating laser atmospheric propagation.
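
The evaluation protocol described above (a 70%/30% split and the mean relative error on the test set) can be sketched as follows. The function names and the sample radii are hypothetical and serve only to show how the metric is computed; they are not data from the paper.

```python
import random


def mean_relative_error(predicted, reference):
    """Mean of |predicted - reference| / |reference| over paired samples."""
    return sum(abs(p - r) / abs(r) for p, r in zip(predicted, reference)) / len(reference)


def train_test_split(samples, train_fraction=0.7, seed=0):
    """Shuffle a copy of the samples and cut it into training and test parts."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    cut = round(train_fraction * len(shuffled))
    return shuffled[:cut], shuffled[cut:]


# Hypothetical far-field effective radii in metres, for illustration only.
reference = [0.10, 0.20, 0.40, 0.50]
predicted = [0.11, 0.19, 0.40, 0.52]
mre = mean_relative_error(predicted, reference)
train, test = train_test_split(range(100))
print(f"MRE = {mre:.2%}, train = {len(train)}, test = {len(test)}")
```

Repeating the split with a smaller `train_fraction` is one way to reproduce the kind of sample-size study mentioned in the abstract.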

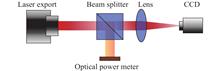

ObjectiveAccurate measurement of beam quality is crucial to the evaluation of laser performance, and compared with the traditional method of measuring and then correcting after long-distance transmission, the beam quality after short-distance transmission is closer to the real situation at the export of the laser. When high-energy laser propagates in the air, as the power increases, the interaction with the air will also become more obvious, mainly reflected in atmospheric turbulence and thermal blooming effects, which will affect the distortion and expansion of the spot shape, leading to a decrease in beam quality. However, current simulations and experiments on high-power large-caliber laser transmission often involve long-distance transmission to obtain target power. After accounting for the effects of atmospheric turbulence and thermal blooming on the transmission path, they then deduce beam quality information for further evaluation. Due to the complexity and randomness of atmospheric turbulence and thermal blooming, as well as the inability to obtain real-time relevant parameters of each point on the transmission optical path, the deduction amount is much greater than the true value of the beam quality under poor atmospheric conditions, resulting in significant errors. Therefore, measuring beam quality directly at the system export, given the short transmission distance, is less affected by nonlinear effects such as atmospheric turbulence and thermal blooming, and thus produces more accurate results.MethodsFirstly, the theories regarding the atmospheric turbulence effect and thermal blooming effect in short-distance transmission were elaborated. Then, we simplified the optical path diagram for measuring beam quality (Fig.1), and established a model simulation based on this optical path diagram, by changing different environmental factors and conducting simulation experiments. 
Using a collimator with a diameter of 700 mm, a charge-coupled device (CCD) at a distance of 20 m received the focused spot, from which the beam quality was calculated. While the beam quality was measured, a temperature pulsation meter recorded the indoor turbulence intensity during the experiment, and methods for reducing the turbulence intensity were analyzed. Results and Discussions For 20 m transmission, the beam quality $ \beta $ factor was severely affected by the turbulence intensity $ C_n^2 $, and the spot shape became significantly distorted and expanded as turbulence increased (Fig.2). The variance of the $ \beta $ factor under different turbulence intensities was analyzed further. The variance did not exceed 0.08% under weak turbulence, was approximately 12.63% under moderate turbulence, and exceeded 100% under strong turbulence (Fig.3). For lateral winds of different speeds, the difference in $ \beta $ factor was within 10%. For different relative air humidities, the difference in $ \beta $ factor did not exceed 15%. For different apertures, the $ \beta $ factor increased with aperture size, and the difference between apertures was about 15% (Fig.4). Under strong turbulence, the difference in $ \beta $ factor between apertures could reach up to 80% (Fig.5). For different powers, the error in the calculated $ \beta $ factor was within 2%. Beam transmission with different initial $ \beta $ factors was also simulated. For a laser system with a beam quality $ \beta $ factor not less than 3, the beam quality measurement deviation was less than 5% when the air turbulence intensity was controlled below $ 1 \times 10^{-14}\;\text{m}^{-2/3} $ (Fig.6). The transmission distance also affected the measured $ \beta $ factor. 
In the moderate turbulence range, the measured $ \beta $ factor is stable for a transmission distance of 2 m, whereas it increases approximately linearly for 20 m (Fig.7). The measured beam quality of the 700 mm caliber collimator (Fig.8) was consistent with the simulation results (Fig.9). The recorded indoor turbulence intensity curves show that both static air and stirred air could reach low turbulence intensity values (Fig.10), which means that in actual measurements the turbulence intensity can be reduced by stirring the air to obtain more accurate values. Conclusions This article introduces research progress in high-power laser beam quality measurement. The effects of atmospheric turbulence and thermal blooming on laser transmission over short distances are discussed separately. After short-distance transmission, atmospheric turbulence has the greater impact on beam quality: as $ C_n^2 $ increases, the $ \beta $ factor increases from 1.0848 to 8.9933. The influence of thermal blooming, humidity and similar factors is minimal. Under weak and moderate turbulence, the difference in $ \beta $ factor for different lateral wind speeds, relative air humidities and powers is within 3%. For different apertures, the difference in $ \beta $ factor is about 15% when $ C_n^2 \leqslant 10^{-14}\;\text{m}^{-2/3} $, and can reach up to 80% under strong turbulence. Actual measurements also verified that stirring the air can reduce the turbulence intensity to a certain value. Therefore, air disturbance along the path from the outlet to the beam splitter must be minimized, which makes stirring the air the more practical option. The deviation of the $ \beta $ factor is within 8% when $ C_n^2 \leqslant 10^{-14}\;\text{m}^{-2/3} $ at a transmission distance of 20 m. 
The beam quality degradation after transmission differs for lasers with different initial $ \beta $ factors: the better the initial beam quality, the more serious the degradation. The results of this paper show that, for a high-power, large-caliber laser system with a beam quality $ \beta $ factor not less than 3, the beam quality measurement deviation is less than 5% when the air turbulence intensity is controlled to $ C_n^2 < 10^{-14}\;\text{m}^{-2/3} $, which provides a solution for measuring beam quality at the exit of large-caliber lasers.
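
One common way to judge whether a given $ C_n^2 $ matters for a 700 mm aperture over 20 m is to compare the aperture with the Fried parameter $ r_0 $. The sketch below uses the standard plane-wave expression for constant $ C_n^2 $ along the path, $ r_0 = (0.423 k^2 C_n^2 L)^{-3/5} $. It is a rough order-of-magnitude aid, not the simulation model of the paper, and the 1.064 μm wavelength is an assumption because the abstract does not state one.

```python
import math


def fried_parameter(cn2, path_m, wavelength_m):
    """Plane-wave Fried parameter r0 (m) for constant Cn^2 along a horizontal path."""
    k = 2.0 * math.pi / wavelength_m
    return (0.423 * k ** 2 * cn2 * path_m) ** (-3.0 / 5.0)


WAVELENGTH = 1.064e-6   # assumed wavelength, m (not given in the abstract)
APERTURE = 0.7          # collimator diameter from the abstract, m
PATH = 20.0             # transmission distance from the abstract, m

for cn2 in (1e-15, 1e-14, 1e-13):
    r0 = fried_parameter(cn2, PATH, WAVELENGTH)
    print(f"Cn2={cn2:.0e} m^-2/3  r0={r0:.3f} m  D/r0={APERTURE / r0:.2f}")
```

Under these assumptions the aperture becomes comparable to $ r_0 $ near $ 10^{-14}\;\text{m}^{-2/3} $, which is in line with the control threshold quoted in the abstract.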

ObjectiveHigh-power lasers are being used in an increasingly wide range of applications. Coherent beam combining (CBC) of multiple lasers represents the most effective method for obtaining high-quality and high-power laser output. Fast and accurate phase control of each laser is crucial for achieving laser CBC, and the stochastic parallel gradient descent (SPGD) algorithm is widely used because of its advantages of parallel control and simple structure. However, the traditional SPGD algorithm faces challenges where the convergence speed and effectiveness are compromised due to fixed gain coefficients and disturbance amplitudes. Specifically, when the gain coefficient or disturbance amplitude is small, the algorithm tends to converge slowly. Conversely, setting a large gain coefficient or disturbance amplitude may cause the algorithm to fall into local optimal solutions. Moreover, as the number of composite beams increases, the required iteration steps for algorithm convergence will significantly rise, failing to meet the iteration time requirements for large-scale CBC systems. Therefore, optimizing the SPGD algorithm is essential to enhance its convergence speed, stability, and scalability. This optimization is a necessary step for realizing large-scale laser CBC.MethodsIn order to improve the issue that the traditional SPGD algorithm converges slowly and tends to fall into local optimal solutions when it is applied to large-scale laser CBC, a staged adaptive gain SPGD (Staged SPGD) algorithm is proposed. This algorithm adaptively adjusts the gain coefficient based on the performance evaluation function, using different strategies to enhance the convergence speed. 
Additionally, a control voltage update strategy with a gradient update factor is introduced to effectively update the control voltage, accelerate convergence, and mitigate instances where the algorithm gets stuck in local extreme values.Results and DiscussionsThe performance of the traditional SPGD algorithm and the proposed algorithm in the 19-laser CBC system is analyzed. The results show that the fixed gain coefficients of the traditional SPGD algorithm significantly impact convergence, with improper gain settings potentially leading to slow convergence or local optima (Fig.3). In contrast, the proposed algorithm significantly improves convergence performance by adaptively adjusting the gain coefficient and reduces sensitivity to the initial gain (Fig.4). Additionally, the random perturbation voltage affects convergence performance; The SPGD algorithm is more sensitive to changes in perturbation voltage, while the proposed algorithm exhibits better robustness within a certain range (Fig.5). The gradient update factor C also significantly impacts the convergence speed of the proposed algorithm, and setting C within a reasonable range (0.1 to 0.4) can substantially enhance algorithm performance (Fig.6). To validate the efficacy of the proposed algorithm's dual optimization strategy, independent simulation experiments were conducted on 19-laser CBC system under different improved operations (Fig.7). The results indicate that the proposed dual optimization strategy can effectively improve the convergence speed and stability of the SPGD algorithm when applied to the laser CBC system. The superiority of the proposed algorithm is assessed by comparing the convergence curve of the algorithm (Fig.8) and the number of iterative steps required for the convergence of the algorithm (Fig.9). 
The results show that, for the evaluation function J to converge in the 19-, 37-, 61- and 91-laser CBC systems, the proposed algorithm always requires fewer iteration steps than the other schemes. It has a clear advantage in convergence speed, and this advantage grows as the number of beams increases. The time required for algorithm iteration (Tab.3) shows that the proposed algorithm improves overall efficiency. The distribution of iteration steps at convergence for each algorithm (Fig.10) shows that the proposed algorithm is highly stable. Applying phase noise of different frequencies and amplitudes to both the Staged SPGD algorithm and the traditional SPGD algorithm (Fig.11) reveals that, as the phase noise frequency, amplitude and number of beams increase, the average J value of the system decreases and convergence performance worsens. However, under the same phase noise condition, the average J of the system is higher after phase control with the Staged SPGD algorithm, which indicates that the proposed algorithm controls the beam phase better and suppresses noise better. Conclusions To address the slow convergence and susceptibility to local extremes of the traditional SPGD algorithm in large-scale laser CBC systems, a staged adaptive gain SPGD algorithm is proposed. The proposed algorithm replaces the fixed gain with a staged adaptive gain driven by the performance evaluation function, which improves the real-time performance of the system. Additionally, a control voltage update strategy with a gradient update factor is introduced to update the control voltage effectively and reduce the probability of getting stuck in a local optimal solution. 
The simulation results show that in the 19-laser array CBC system, compared with the traditional SPGD algorithm, the proposed algorithm improves convergence speed by 36.84%. The algorithm demonstrates better convergence in phase noise environments with varying frequencies and amplitudes, which greatly improves the stability of the algorithm, and the algorithm also has certain advantages in the iteration time. Furthermore, when directly applied to 37-laser, 61-laser, and 91-laser CBC systems, the Staged SPGD algorithm improves the convergence speed by 37.88%, 40.85%, and 41.10%, respectively, compared to the traditional SPGD algorithm. The improvement effect is more significant with the increase of the number of lasers, which indicates that the algorithm has certain advantages in convergence speed, stability and scalability, and has the potential to be extended to large-scale CBC systems.
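
For orientation, the fixed-gain baseline that the paper improves on can be sketched in a few lines. This is a minimal simulation of traditional SPGD in a common two-sided perturbation form, not the Staged SPGD algorithm, whose staging rules and gradient update factor are not specified in the abstract. The metric definition (normalized on-axis combined intensity of unit-amplitude beams) and the gain, perturbation amplitude and step count are assumptions chosen for illustration.

```python
import cmath
import math
import random


def combining_metric(phases):
    """Normalized on-axis combined intensity J in [0, 1] for unit-amplitude beams."""
    field = sum(cmath.exp(1j * p) for p in phases)
    return abs(field) ** 2 / len(phases) ** 2


def spgd(initial_phases, gain=10.0, amplitude=0.1, steps=3000, seed=0):
    """Fixed-gain, two-sided SPGD; returns the control vector and the J history."""
    rng = random.Random(seed)
    n = len(initial_phases)
    control = [0.0] * n
    history = []
    for _ in range(steps):
        # Perturb all channels in parallel by +/- amplitude (Bernoulli signs).
        delta = [amplitude * rng.choice((-1.0, 1.0)) for _ in range(n)]
        j_plus = combining_metric(
            [p + u + d for p, u, d in zip(initial_phases, control, delta)])
        j_minus = combining_metric(
            [p + u - d for p, u, d in zip(initial_phases, control, delta)])
        dj = j_plus - j_minus
        # Gradient-ascent update: move each control along its own perturbation.
        control = [u + gain * dj * d for u, d in zip(control, delta)]
        history.append(combining_metric(
            [p + u for p, u in zip(initial_phases, control)]))
    return control, history


phase_rng = random.Random(1)
phases = [phase_rng.uniform(-math.pi, math.pi) for _ in range(19)]
j_start = combining_metric(phases)
_, j_history = spgd(phases)
j_end = j_history[-1]
print(f"J before: {j_start:.3f}, J after: {j_end:.3f}")
```

Changing `gain` or `amplitude` in this sketch shows the sensitivity to fixed parameters that motivates an adaptive gain: small values converge slowly, and large values make the iteration unstable.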

Significance In addition to information collection, monitoring and rescue missions, aviation optoelectronic turrets can cooperate with other platforms or local sensors to undertake tasks such as target search and target feature analysis, and they have become indispensable equipment for airborne platforms. The aviation optoelectronic turret is based on optoelectronic technology, relies on optical engineering, precision machinery, information processing, automatic control and computer technology, and integrates various optical imaging sensors. By using the physical characteristics of an object's own radiation or reflection spectrum, it detects, recognizes and tracks targets through automatic or manual identification. Its advantages include passive detection, immunity to electromagnetic interference and high-resolution visual imaging. A turret equipped with laser ranging or irradiation functions can achieve precise target positioning and guidance, and such turrets are highly valued by countries around the world. At present, a large number of aviation optoelectronic turrets are fitted to modern aviation platforms and play an important role. Progress First, the development path of aviation optoelectronic turrets is introduced and divided into four stages. First-stage products were mainly used for information collection and had only daytime capability. Typical products include the M65 system of the US AH-1 Cobra helicopter and the M397 system of the French Gazelle helicopter. Second-stage products integrate thermal imagers, lasers and other sensors internally and can perform tasks around the clock. Typical products include the MMS system of the OH-58D Kiowa helicopter and the TADS system of the Apache helicopter. 
At this stage, the products are generally in a two axis, two frame servo configuration and can output black and white imaging simulation videos. The third stage of product integration includes high-resolution infrared thermal imagers, visible light televisions, shortwave infrared televisions, laser rangefinders/irradiators, and other sensors, expanding geographic tracking and positioning capabilities. Generally, a two axis four frame servo configuration is adopted, which improves control accuracy and imaging quality. At present, it has entered the fourth stage of development, with a large number of high-performance turrets emerging. With high control accuracy, strong imaging ability, continuously improving intelligence level, it integrates various types of advanced sensors internally, is capable of executing diverse tasks, and able to complete ultra long range target detection and recognition. Foreign aviation optoelectronic turrets mainly include Euroflight series, Star Safire series, MX series, MTS series, and PV series products, all of which have been loaded in large quantities on aviation platforms. Through comprehensive investigation, the specific models, functions, and detailed technical parameters of foreign aviation optoelectronic turrets were excavated. From the aspects of system design, servo design, structural design, optical technology, new imaging detection technology, image enhancement technology, and artificial intelligence technology, the development trend of aviation optoelectronic turrets is revealed: 1) miniaturization and lightweight. 2) Multi sensor collaboration and modularization. 3) The development direction of servo systems for universal joint support or active damping devices. 4) Development direction of large aperture, long focal length, and large array optical systems and sensors. 
5) Technical directions include infrared image enhancement, visible light image dehazing, multispectral image fusion, image stitching, and image super-resolution reconstruction. 6) Intelligent monitoring, control, and analysis based on artificial intelligence. 7) Application of technologies such as infrared polarization imaging, multispectral imaging, and single photon detection 3D imaging.Conclusions and ProspectsThe development of aviation optoelectronic turrets is flourishing, and in response to the needs of different platforms for turrets, it is necessary to plan the development of aviation optoelectronic turrets from a hierarchical development perspective and an overall perspective. The purpose of this study is to provide some reference for the development and optimization of future aviation optoelectronic turrets. In the future, aviation optoelectronic turrets that achieve ultra-high level servo stability can achieve functions such as detecting, identifying, and locating targets at ultra long distances under typical mission conditions, playing an increasingly important role in executing diverse tasks on aviation platforms.

Objective With the continuous improvement of power system transmission capacity and voltage level, conventional voltage transformers have exposed some fundamental shortcomings, such as poor insulation performance, large volume, electromagnetic resonance, and easy magnetic saturation, which make it difficult for them to meet the needs of smart grid development. The optical voltage transformer (OVT) adopts optical sensing technology, which can overcome these defects and better meet the development needs of China's smart grid, and it has good development prospects. However, OVT still has many unresolved issues. In practical applications, small positional deviations between optical devices may occur due to vibration, unstable connections, and thermal expansion and contraction, resulting in an uneven internal electric field distribution and affecting the measurement results. Current research methods have only reduced the unevenness and coefficient of the internal electric field, and have not improved measurement accuracy when small crystal and optical path offsets occur in the OVT. Therefore, this article conducts electric field simulation on a 110 kV longitudinal modulation OVT structure, analyzes the non-uniformity and coefficient of the internal electric field, and proposes a new dielectric layering and wrapping method for the case in which offset occurs. Methods The sensing unit of the OVT is simulated and analyzed using ANSYS Maxwell. First, the distance between electrodes is determined (Fig.2), and then a layered structure is selected (Fig.3). Next, the packaging material and structure are selected (Tab.5), and finally the thickness of the packaging is determined (Fig.6). By using calcite, which has a relatively high dielectric constant, to divide the voltage across the BGO and wrapping the crystal with AlN, the electric field non-uniformity that arises when BGO with a higher dielectric constant is in contact with SF6 gas with a lower dielectric constant is largely eliminated. 
An experimental setup is then built to verify the simulation results (Fig.9). Results and Discussions The non-uniformity of the electric field inside the OVT sensing unit is mainly caused by direct contact between SF6, which has a relatively small relative dielectric constant (1.002), and BGO, which has a relatively large one (16). Direct contact between the two is avoided by layering with calcite (relative dielectric constant 8.3) and wrapping with AlN (8.8). The resulting OVT sensing unit consists of a Φ10 mm×10 mm BGO crystal in the middle, a Φ10 mm×75 mm calcite layer at each end, and a 0.5 mm thick wrap around it. System simulation shows that the unevenness of the field integration voltage and the coefficient are significantly reduced (Fig.6), and that the errors caused by crystal shift or optical path shift due to external factors are also significantly reduced. Finally, an experimental setup was constructed and signals were collected with a CMOS sensor. The results (Fig.10) present the standard deviation calculated before and after the improvement and under different voltages; the effect of the optimization can be clearly seen. Conclusions This article conducts simulation analysis on a longitudinal modulation OVT with a voltage level of 110 kV. Electrode spacing, the additional medium, and the wrapping medium are analyzed in detail, and the most suitable structure is found to effectively reduce the unevenness of the internal electric field of the crystal. In addition, compared to other studies, this article greatly reduces the errors generated in the case of crystal or optical path offset. 
By improving the internal structure of the OVT system, the electric field unevenness along the crystal axis is reduced from 1.1573 to 1.0088 (Fig.6), the maximum electric field integration error caused by optical path offset is reduced from 0.090% to below 0.008%, and the maximum electric field integration error caused by crystal displacement is reduced from 0.075% to about 0.006% (Fig.8); the improvement is significant. Experiments also demonstrate that the model effectively improves the electric field distribution inside the crystal and reduces the integration voltage error (Fig.10), which further confirms the effectiveness and reliability of the proposed method.
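The voltage-division role of the calcite layers can be illustrated with an idealized one-dimensional series-dielectric model. This is only a sketch under stated assumptions, not the ANSYS Maxwell simulation described above: it ignores fringing fields, the SF6 gas, and the AlN wrap, and the function name `series_voltage_fractions` is hypothetical. In a stack of layers the electric displacement is continuous, so the voltage across each layer is proportional to its thickness divided by its relative permittivity.

```python
def series_voltage_fractions(layers):
    """Return the fraction of the applied voltage dropped across each layer.

    layers: list of (thickness_mm, relative_permittivity) tuples for an
    idealized 1D stack. D is continuous across the stack, so the field in
    layer i scales as 1/eps_i and its voltage as d_i/eps_i.
    """
    weights = [d / eps for d, eps in layers]
    total = sum(weights)
    return [w / total for w in weights]


# Geometry and permittivities quoted in the abstract:
# calcite (75 mm, 8.3) / BGO (10 mm, 16) / calcite (75 mm, 8.3)
layers = [(75.0, 8.3), (10.0, 16.0), (75.0, 8.3)]
fractions = series_voltage_fractions(layers)
for (d, eps), f in zip(layers, fractions):
    print(f"d = {d:5.1f} mm, eps_r = {eps:4.1f}: {100 * f:6.2f}% of applied voltage")
```

Under this simplified model the BGO crystal sees only a few percent of the applied voltage, which is the sense in which the calcite "divides the voltage" of the BGO.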

Objective Fiber optic tweezers are compact, highly integrable, and portable, which makes them advantageous in applications such as chemical analysis, biosynthesis, and drug delivery systems. A single-hole suspended-core fiber naturally integrates a fiber waveguide and a microfluidic channel; applied in fiber optic tweezers, it can not only capture particles but also store, transport, analyze, and detect particles such as cells or drug molecules. However, fiber optic tweezers typically need to be integrated with microchannels or microfluidic technologies to perform multidimensional manipulations such as transportation and sorting. Microfluidic devices are complicated to manufacture, and because microfluidic devices and optical fibers are mutually independent components, the resulting systems have low optical coupling efficiency and a low level of integration. Therefore, a simpler, more efficient, and highly integrated method for particle or cell manipulation and transport is needed. For this reason, this work studies fiber optic tweezer technology based on single-hole suspended-core fibers to address the key issue of particle manipulation by suspended-core fibers with hollow-hole structures. Methods The particle manipulation principle of single-hole suspended-core fiber optical tweezers is analyzed. An analytical model of the tweezers is established from the mechanism of the double-beam focused light field, the analytical calculation method for the optical trapping force is determined, and the characteristics of single-hole suspended-core fibers with symmetric and off-core structures are analyzed. 
A parabolic-shaped single-hole-suspended-core fiber optical tweezers probe is designed, and its simulation model is used to calculate the optical field and optical trapping force, analyze the energy distribution and the characteristics of the optical trapping force, and investigate the specific effects of the hollow aperture, particle size, and core power on the optical trapping force manipulation performance. A parabolic-shaped single-hole-suspended-core fiber probe with a diameter of 9 μm at the tip of the probe was prepared by the CO2 laser melt-drawing cone method with pneumatic pressure control, and an experimental system was constructed to realize the manipulation experiments on polystyrene particles with diameters of 2 μm, 5 μm, and 10 μm.Results and Discussions Simulations using Rsoft's Beamprop module were performed to analyze the optical field intensity distribution of single-hole-suspended-core fiber optical tweezers with different hollow apertures and core powers. The results show that increasing the hollow aperture enhances the light convergence effect (Fig.2). A model was established based on this simulation to investigate the effects of hollow aperture diameter, particle size, and fiber core power on the force on the particles. It was found that, the large aperture facilitates the provision of stable transverse and longitudinal capture points (Fig.4); Large-diameter particles facilitate longitudinal capture, while transverse capture requires appropriately sized particles (Fig.5); And the power of the suspension core has a significant effect on the transverse and longitudinal optical trapping forces, while the bias core has a lesser effect on the optical trapping forces (Fig.6). 
Finally, through the preparation and experimental verification of optical tweezers probes, it was confirmed that this parabolic single-aperture, dual-core, bias-suspended fiber optic tweezers can effectively manipulate particles with diameters of 2 μm, 5 μm, and 10 μm, and performs best on 5 μm particles (Fig.12). Conclusions A parabolic single-aperture, dual-core, bias-suspended fiber probe structure is proposed. The structural parameters of the probe, which significantly affect the optical field and trapping force of the tweezers, are optimized through simulation analysis. The probe is then fabricated and tested, verifying that it can flexibly manipulate particles with diameters of 2 μm, 5 μm, and 10 μm. In particular, it demonstrates excellent capture and ejection ability for 5 μm particles. This optical tweezers probe enhances the integration potential of fiber optic tweezers and brings new perspectives on particle manipulation and sorting technology, which is of great scientific value.

Significance Small object detection holds a crucial position in various industrial fields and everyday life. In remote sensing, it is used to identify and track small objects, providing key support for military reconnaissance and national defense security. For instance, infrared small object detection can detect invasive targets so that subsequent interception measures can be taken against them. In autonomous driving, the system automatically detects objects such as traffic signs, vehicles, pedestrians, and obstacles, which helps it analyze the driving scene in depth and predict the behavior of surrounding objects in order to make appropriate decisions. In public safety and surveillance, small object detection systems can accurately identify and track small objects that are far away or hidden in complex backgrounds, enabling functions such as pedestrian face recognition, vehicle identification, detection of illegal crowd lingering, counting of individuals, and estimation of crowd density. In industrial automation, small object detection is also needed to locate small visible defects on material surfaces. Overall, small object detection technology significantly enhances work efficiency across various sectors, demonstrating its broad application prospects and profound research value. Progress This paper provides a comprehensive review of current deep learning-based small object detection techniques and systematically categorizes, analyzes, and compares existing algorithms. We first outline the definition of small object detection, its challenges, and its application areas. The definition of small objects is elaborated from two perspectives: absolute scale and relative scale. 
We also summarize the main challenges encountered in small object detection, including information compression loss, low signal-to-noise ratio and detectability in complex backgrounds, high sensitivity to minor deviations in bounding boxes, complexity in network structure and optimization, and the scarcity of large-scale small object datasets. Following this, we delve into several key optimization approaches. These include the enhancement of model robustness through data augmentation, the improvement of small object visibility via super-resolution methods, the improvement of detection accuracy through multi-scale information fusion, and the refinement of feature representation using contextual information and large-kernel convolution techniques. Moreover, the discussion extends to anchor-free detection frameworks, DETR technology, and dual-modal strategies for small object detection tailored to particular contexts, with an evaluation of their benefits and drawbacks. We then provide a comprehensive introduction to currently available small object datasets, covering twelve major datasets: DOTA, AI-TOD, DIOR, VisDrone2019, TT100K, BSTID, TinyPerson, CityPerson, WiderPerson, WIDER FACE, BIRDSAI, and MS COCO. These datasets offer a rich resource for research and performance evaluation of small object detection. Further, we conduct a detailed performance evaluation of existing small object detection algorithms on several widely used public datasets, such as MS COCO, DOTA, AI-TOD, TinyPerson, and TT100K. Additionally, we forecast future research directions in this field, identifying four main potential challenges: feature fusion, contextual learning, optimization of large-kernel convolution, and improvements in DETR technology. 
These directions not only illustrate the development trends of small object detection, but also highlight technical challenges that current research needs to overcome, providing guidance and inspiration for future studies. Conclusions and Prospects Small object detection is one of the most critical and fundamental tasks in the field of computer vision, with broad application demands in the real world, such as military reconnaissance, autonomous driving, public safety and surveillance, and robotic vision. Although many algorithms have shown relatively satisfactory performance in specific applications and scenarios, their overall effectiveness, robustness, and speed still need improvement. This paper aims to provide a reference and basis for the development of algorithms through in-depth research and analysis of small object detection technology.
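One of the challenges listed above, the high sensitivity of small objects to minor bounding-box deviations, can be made concrete with a short intersection-over-union (IoU) calculation. The sketch below is illustrative only: the box sizes (6 px and 48 px) and the one-pixel shift are assumed values chosen for the example, not figures from the review.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def iou_after_shift(side, shift):
    """IoU between a square box of the given side and a horizontally shifted copy."""
    return iou((0, 0, side, side), (shift, 0, side + shift, side))


small = iou_after_shift(side=6, shift=1)    # 30 / 42
large = iou_after_shift(side=48, shift=1)   # 2256 / 2352
print(f"6x6 box, 1 px shift:   IoU = {small:.3f}")
print(f"48x48 box, 1 px shift: IoU = {large:.3f}")
```

The same one-pixel localization error costs the small box far more IoU than the large one, which is why IoU-based label assignment and evaluation are harsher on small objects.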

Objective As an advanced measurement tool, 3D reconstruction technology has demonstrated significant application advantages in key areas such as biomedicine, aerospace, and industrial manufacturing due to its non-contact nature, efficient data processing capability, and ability to provide in-depth multi-dimensional analysis. However, as the technology is applied more widely, existing light bar extraction methods face the dual challenges of slow reconstruction speed and large accuracy errors when dealing with objects with complex geometries and under variable environmental noise conditions. These challenges not only affect the accuracy of 3D reconstruction results, but also constrain the efficiency of data processing, limiting the potential application of 3D scanning technology in a wider range of scenarios. To overcome the limitations of existing techniques and enhance the overall performance of 3D scanning technology, this paper proposes an efficient light bar extraction method based on 3D reconstruction (ELE-3D). The ELE-3D method is developed with the goal of significantly speeding up the light bar extraction process while maintaining or even improving the accuracy and reliability of the 3D reconstruction. Specifically, the ELE-3D method aims to accurately extract the centerline of the light bar through advanced image processing techniques and algorithmic optimization, effectively reducing noise interference and increasing data processing speed. Methods Based on an in-depth analysis of the shortcomings of existing light bar extraction techniques, the ELE-3D method is designed to address their limitations in dealing with complex-shaped objects and noise interference. The method first uses the Sobel operator to perform edge enhancement to improve the contrast and clarity of the edges. 
Subsequently, binarization is performed based on the maximum gray value of the image to effectively separate the laser stripes from the background noise. Noise filters with area and aspect ratio thresholds are then applied to refine the extraction process, ensuring that only the most relevant features are preserved. The method also adjusts the laser stripe projection ratio to significantly reduce computational demands. Finally, the ELE-3D method adopts a sub-pixel technique for accurate centerline extraction of the segmented region (Fig.1). Results and Discussions In the experimental analysis of the ELE-3D method, an efficiency test first compares its speed and quality in light bar centerline extraction with the traditional Gray-gravity method and the Steger method. The ELE-3D method significantly outperforms the other two methods, with an average processing time of 64.2 ms, demonstrating excellent extraction speed and stability (Fig.2-3, Tab.1-2). Then, in the robustness validation experiment, Gaussian noise of different intensities is added to the standard image; the ELE-3D method maintains high accuracy in centerline extraction even in a high-noise environment, showing stronger anti-interference ability than the traditional methods (Fig.4). Finally, in the 3D reconstruction accuracy experiments, a standard ball, a doll, and a mannequin are scanned from multiple viewpoints with the ELE-3D method, and the complex shapes and details are clearly captured by the generated point cloud data, which verifies the validity and reliability of the ELE-3D method in practical applications (Fig.5-7, Tab.3-4). 
Taken together, these experimental results show that the ELE-3D method performs well in terms of efficiency, robustness and accuracy, providing solid technical support for the advancement of 3D scanning technology.ConclusionsThe experimental results show that the ELE-3D method has improved the light bar extraction speed by 89.0% compared to the traditional Steger method and by 85.3% compared to the Gray-gravity method, and the running time is stable, showing high efficiency and stability. In terms of robustness, the ELE-3D method can maintain high extraction accuracy under different noise levels, showing good anti-noise performance. Through the multi-view point cloud 3D reconstruction experiments, the ELE-3D method is able to capture rich details on different test objects, such as standard balls, dolls, and mannequins, which proves its high efficiency and accuracy in scanning complex shapes and surfaces. These results not only prove the feasibility of the ELE-3D method, but also lay the foundation for future applications in a wider range of fields, which indicates that the ELE-3D method has a broad application prospect in the field of 3D scanning.
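Two of the steps named in the Methods above, binarization relative to the maximum gray value and sub-pixel centerline extraction, can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' ELE-3D implementation: the Sobel enhancement, the area and aspect-ratio noise filters, and the projection-ratio adjustment are omitted; the relative threshold of 0.5 is an assumed parameter; and a per-column gray-gravity centroid stands in for the unspecified sub-pixel technique. It also assumes a roughly horizontal stripe, so that one center per image column is meaningful.

```python
import numpy as np


def stripe_centerline(img, rel_threshold=0.5):
    """Estimate a sub-pixel stripe center (row coordinate) for every column.

    Pixels below rel_threshold * max(img) are discarded (binarization based
    on the maximum gray value); the remaining pixels are averaged with
    intensity weights (gray-gravity centroid). Columns without any stripe
    pixel yield NaN.
    """
    img = np.asarray(img, dtype=float)
    mask = img >= rel_threshold * img.max()
    weights = np.where(mask, img, 0.0)
    rows = np.arange(img.shape[0], dtype=float)[:, None]
    mass = weights.sum(axis=0)
    centers = np.full(img.shape[1], np.nan)
    valid = mass > 0
    centers[valid] = (weights * rows).sum(axis=0)[valid] / mass[valid]
    return centers


# Synthetic test image: a Gaussian stripe centered on row 20 in the first
# two columns, and an empty third column.
rows = np.arange(41, dtype=float)
profile = 200.0 * np.exp(-((rows - 20.0) ** 2) / (2 * 2.0 ** 2))
image = np.zeros((41, 3))
image[:, 0] = profile
image[:, 1] = profile
centers = stripe_centerline(image)
print(centers)
```

Because the centroid uses only thresholded pixels, stray background values below the threshold do not pull the estimate away from the stripe, at the cost of some bias when the true center falls between pixel rows.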

Significance The advancement of high tech has raised the bar for precision interferometric imaging. In modern optics and biomedicine, label-free imaging techniques, which do not rely on traditional dyes or fluorescent markers, allow for 3D in situ observation and analysis of living cells, thereby promoting the development of quantitative phase microscopy. In the field of optical detection, there is an urgent need for on-site and real-time applications of interferometric systems, such as transient analysis of laser wavefronts, high-speed flow field detection, monitoring and control of adaptive optics, and high-precision optical system aberration analysis. These applications demand a compact, environmentally robust, and transient imaging interferometric system.Progress To address these needs, this paper focuses on years of research in common-path shearing interferometry. The advantage of common-path interferometry is that it overcomes the instability of measurement results caused by environmental disturbances affecting the reference and test paths in dual-beam interferometry. The quadriwave lateral shearing interferometer can achieve transient phase imaging by capturing four sheared wavefronts in two orthogonal shearing directions from a single interferogram. This was enabled by a novel four-wave interferometric sensor (FIS4), composed of a randomly encoded grating and a phase chessboard based on the principle of quadriwave lateral shearing interferometry. The FIS4 interferometric wavefront sensor’s compact structure, which does not require a reference flat due to its self-interfering nature, effectively suppresses environmental vibrations.Conclusions and Prospects This review comprehensively introduces the principles, development history, wavefront reconstruction methods, and the wide applications of the FIS4 interferometric sensor based on quadriwave lateral shearing interferometry. 
With its unique advantages, such as compactness, robustness, high temporal resolution, and compatibility with existing microscopy systems, the FIS4 interferometric wavefront sensor shows broad application prospects in fields like biomedicine, optical measurement, and material characterization. The development of this technology not only provides new research tools for related fields but also opens up new possibilities for interdisciplinary innovation and discovery.

Significance Transparent objects such as glass, lenses, and displays are widely needed and used in different fields, and they must be reconstructed in 3D (three-dimensional) quickly, accurately, and completely in order to objectively describe the object’s profile, inspect the surface quality, and ensure the function of the components. However, due to the specular reflection and refraction characteristics of transparent surfaces, traditional 3D reconstruction methods cannot be directly applied to 3D topography measurements. This paper focuses on non-intrusive 3D reconstruction methods for transparent objects and reviews the main research work of recent years from two aspects: reconstruction based on reflected light and reconstruction based on transmitted light. The advantages, disadvantages, and applicable scenarios of the various techniques are then detailed, so that users can select appropriate reconstruction schemes for transparent objects based on their application requirements and measurement conditions. The paper also looks ahead to future research directions in this field, aiming to give researchers ideas for improving existing methods or exploring new ones. Progress Most non-invasive measurement systems and technologies use cameras to capture deformed patterns that have been reflected or refracted at the surface of transparent objects in order to restore the 3D topography. These patterns carry a wealth of information about the surface shape of the transparent object, but they also contain a lot of detail from the surrounding background introduced by the reflection and refraction of light; that is, the distortion results from the combination of the surface shape, the surrounding background, and the lighting conditions. Researchers have done a lot of work to extract the shape of transparent objects from these patterns. 
Depending on the type of optical transmission mode in the measurement, this dissertation divides it into reflected light-based reconstruction methods and transmitted light-based reconstruction methods.Reflected light-based reconstruction methods include scan-based methods, polarization and its combination with other techniques, and reflective phase measuring deflectometry (RPMD). Transmitted light-based reconstruction methods include: shape from distortion, transmission phase measuring deflectometry (TPMD), stereo vision, etc. The principles and current development status of each method are introduced, along with a summary of their application scenarios, key advantages, and disadvantages. To provide a comprehensive evaluation, these methods are further compared from multiple perspectives, including speed, accuracy, cost, computational complexity, and calibration complexity.Finally, this dissertation summarizes the general situation of non-intrusive 3D reconstruction methods for transparent objects, and looks forward to the possible future development directions.Conclusions and Prospects This dissertation aims to provide an updated and general overview of non-intrusive 3D reconstruction methods for transparent objects. The measurement speed of the scanning method is limited by the way it is scanned on a point-by-point or line-by-line basis. Shape recovery from polarization is characterized by fast detection speed, high accuracy, and easy calibration. The accuracy and speed of RPMD depend on the specific method used to deal with parasitic reflections. TPMD is competitive in wavefront measurement and defect detection. Stereo vision technology, with its high accuracy and low cost, has become a powerful tool for dealing with complex transparent objects. 
Deep learning and multimodal fusion have demonstrated new vitality in the 3D reconstruction of transparent objects, and future developments are expected to increase speed, reduce costs, and enhance environmental adaptability and robustness.

Significance Gastrointestinal cancer represents a major global health challenge and is among the leading causes of death worldwide. By the time symptoms manifest, the disease is often at an advanced stage, making early detection and treatment critical for improving patient survival rates. Endoscopic surveillance is vital in identifying early lesions; however, traditional white-light endoscopy has limitations in accurately detecting early-stage lesions. This is primarily due to its reliance on replicating human vision, which can make it difficult for clinicians to precisely identify target tissue areas requiring resection. Although advanced endoscopic technologies, such as magnifying endoscopy combined with narrow-band imaging (ME-NBI), have improved the visualization of early gastrointestinal cancers, these techniques are still complex, time-consuming, and heavily dependent on the expertise of endoscopists and pathologists. In this context, Light Scattering Spectroscopy (LSS) emerges as a non-invasive and highly sensitive optical technique that has been widely applied for early cancer detection. LSS is particularly effective in detecting subcellular changes in epithelial tissues, which are often the first indicators of malignancy. By capturing single-scattered light and analyzing it through Mie scattering theory, LSS can infer crucial parameters such as cell nucleus size, shape, and refractive index, which are key factors in accurate and early disease diagnosis. The significance of LSS lies in its ability to provide real-time, non-destructive diagnostic information, thereby guiding clinical decisions and improving patient outcomes. However, the clinical application of LSS is currently constrained by interference caused by diffuse scattering from underlying tissues. 
Overcoming these challenges is essential to unlock the full potential of LSS in cancer diagnostics.Progress Over the past two decades, substantial advancements in LSS have focused on improving the accuracy and reliability of this technology. Four experimental techniques have been introduced for extracting single-scattered light, each contributing to the enhancement of LSS in various applications (Fig.4). The first significant development is the coherent interference technique, which combines the depth-resolving capabilities of Optical Coherence Tomography (OCT) with low-coherence interferometry. It excels in obtaining single-scattered signals from specific tissue depths. Despite the system's complexity and the high demand for data processing, this method offers a significant advantage in signal-to-noise ratio, making it particularly suitable for high-resolution imaging scenarios where precise depth information is crucial. Another important technique is the azimuthal technique, which measures the differences in reflected signals at 0° and 90° azimuthal angles. This approach takes advantage of the differences in single-scattering signals from large particles, such as cell nuclei, when viewed from different angles. However, the high demands on optical path design and detector precision present certain challenges in practical applications. The third technique, spatial gating, involves adjusting the source-detector distance to differentiate between shallow and deep tissue scattering signals. Although the design of spatial gating probes is complex, the small size of the probe makes it ideal for applications in confined spaces, such as in the detection of pancreatic cancer. The fourth technique is polarization gating, which leverages the polarization state preservation of single-scattered light to distinguish it from multiple-scattered signals. 
Among these, the Polarized Light Scattering Spectroscopy (PLSS) systems (Fig.21, Fig.23), based on polarization gating, have been the most extensively researched and have shown high early cancer detection rates in clinical settings (Fig.24), demonstrating immense potential for clinical application. However, traditional PLSS techniques rely on the rotation of polarizers or the orthogonal arrangement of two polarizers to capture single-scattered light, which reduces measurement efficiency and increases system complexity. To overcome these challenges, the snapshot PLSS technique was developed by combining polarization spectrum modulation technology with PLSS (Fig.25). This technique uses a spectral modulation polarizer to directly record the single-scattered light from the sample, allowing the system to operate without the need for rotating polarizers. Building on this, the dual-optical-path snapshot PLSS endoscopic system was developed (Fig.26). This system uses a single retarder to modulate the intensity of polarized scattered light, which is then transmitted in intensity form through a multimode optical fiber to the other end of the endoscope. Compared to traditional three-optical-path PLSS endoscopic systems, this system expands the effective sampling area of PLSS and eliminates the problem of signal aliasing from non-overlapping regions (Fig.26(a)-(b)). Integrating this innovative system with advanced computational algorithms has significantly improved the analysis and interpretation of scattering data, enabling more precise identification of early-stage malignancies. Clinical studies using these enhanced systems have shown high sensitivity and specificity, validating the practicality of PLSS in early cancer diagnostics (Fig.27) and providing new tools and methods for early cancer detection.Conclusions and Prospects Recent advancements in LSS underscore its potential in non-invasive cancer diagnostics. 
The introduction of the snapshot PLSS technique has improved the efficiency and accuracy of single-scattered light measurements while simplifying traditional PLSS systems, offering promising solutions for biomedical imaging. However, both traditional PLSS endoscopic systems and the dual-optical-path snapshot PLSS endoscopic system lack image resolution, which can lead to missed diagnoses when cancerous cell signals are masked by healthy cells in the same area. Thus, developing pixel-level PLSS imaging is a crucial research direction. While LSS, particularly when combined with advanced computational methods, shows significant promise, it currently relies on complex mathematical models that may not fully account for tissue morphology and optical properties. Future research should focus on refining these models by incorporating quantitative measurements and more accurate tissue characterization, improving their clinical applicability. Continued efforts are needed to enhance light scattering measurement accuracy and develop robust data processing algorithms. Additionally, integrating LSS with other imaging modalities and creating more user-friendly, portable systems will be vital for widespread clinical adoption.
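The polarization-gating principle described above, in which single-scattered light preserves the incident polarization while multiply scattered light does not, can be sketched as a simple subtraction of the cross-polarized spectrum from the co-polarized one. The example is a minimal sketch under an idealized assumption that is not taken from the review: the diffuse background is fully depolarized and therefore splits equally between the two detection channels. The function name and the synthetic spectra are hypothetical.

```python
import numpy as np


def polarization_gated_signal(i_parallel, i_perpendicular):
    """Estimate the single-scattering spectrum as I_parallel - I_perpendicular.

    Assumes the depolarized (multiply scattered) background contributes
    equally to both channels, so it cancels in the difference.
    """
    i_parallel = np.asarray(i_parallel, dtype=float)
    i_perpendicular = np.asarray(i_perpendicular, dtype=float)
    return i_parallel - i_perpendicular


# Synthetic spectra (arbitrary units) at three wavelengths.
single = np.array([1.0, 2.0, 3.0])       # polarization-preserving signal
diffuse = np.array([10.0, 12.0, 14.0])   # fully depolarized background
i_par = single + 0.5 * diffuse           # co-polarized channel
i_perp = 0.5 * diffuse                   # cross-polarized channel
recovered = polarization_gated_signal(i_par, i_perp)
print(recovered)
```

In real tissue the background is only partially depolarized and single scattering is not perfectly polarization-preserving, so the difference is an estimate rather than an exact separation.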

Significance Since Gauss’s day, the design and development of imaging systems have been continuously dedicated to the iterative improvement and optimization of lenses, aiming to collect light emitted in various directions from a point on the object plane and converge it as perfectly as possible onto a point on the image plane. However, imaging sensors can only capture and record the spatial position information of the light field and lose the angular information, so they cannot perceive the perspective transformation and depth of a three-dimensional scene. To compensate for this deficiency, computational light field imaging technology was born. It records the complete distribution of the radiance by jointly recording spatial position and angle information, breaking through the limitations of classical imaging. It is gradually being applied to fields such as life sciences, national defense security, virtual reality/augmented reality, and environmental monitoring, and has important academic research value and broad application potential. However, light field imaging technology is still jointly constrained by digital imaging devices and image sensors. Because of the limited spatial bandwidth product (SBP) of the imaging system, light field imaging often has to trade off spatial resolution against angular resolution in practical applications, making it difficult to achieve the high spatial resolution of traditional imaging technology. 
Since the birth of the light field imaging technique, a key problem that urgently needs to be solved, and a long-standing hot topic in this field, has been how to endow it with higher degrees of freedom, that is, how to maintain high-resolution imaging while improving temporal and angular resolution, so as to achieve clearer and more stereoscopic imaging. Progress We first review the development history of light field imaging technology and elaborate on the basic concepts of the seven-dimensional full light field (plenoptic) function and the simplified four-dimensional light field. The paper then examines the latest research progress in enhancing the temporal, spatial, and angular resolution of light field imaging, including the application of microlens arrays, phase scattering plates, heterodyne coding, camera arrays, and other methods to the recording of high-speed dynamic three-dimensional scenes; the achievements of techniques such as transfer function deconvolution, prior information constraints, multi-frame scanning, aperture coding, confocal, and hybrid high/low light field imaging in improving spatial resolution; and methods for enhancing angular resolution through depth constraints, sparse prior constraints, and other approaches. In addition, the paper looks ahead to future development directions of light field imaging technology, including the control of high-dimensional coherence in light fields, the integration of artificial intelligence with light field imaging technology, the development of miniaturized and portable light field imaging devices, new imaging mechanisms, the combination of light field imaging and light field display technology, and the potential of light field imaging in special fields. 
Finally, the paper emphasizes the challenges of light field imaging technology in achieving optimal imaging performance, especially the importance of finding an efficient balance among the three key dimensions of temporal, spatial, and angular resolution. With the continuous progress and innovation of technology, light field imaging technology is expected to further break through the limitations of traditional optical imaging, inject new momentum into the advancement of imaging technology, and open up new application prospects in various fields such as life sciences, remote sensing, computational photography, and spectral imaging. Conclusions and Prospects This review examines the current state of development of light field imaging technology and the challenges it faces. Light field imaging still relies on existing two-dimensional sensor devices, which provide only spatial resolution, so angular resolution can be gained only by giving up some spatial resolution. One way to enhance the spatial resolution of light field imaging is to improve the hardware, for example by increasing the pixel resolution of sensors or arranging large-scale camera arrays. Another is to trade temporal resolution for spatial sampling, for instance by employing aperture coding techniques and multi-frame scanning methods. However, directly upgrading hardware resources can lead to increased costs, as well as potential issues with size, weight, and data transmission and processing. Therefore, when adding physical hardware is not desirable, it is necessary to rely on algorithmic innovation, such as optimizing imaging results using prior information and deep learning. This approach is referred to as "punching above one's weight," meaning that performance is improved through algorithms without increasing physical resources. 
However, we also recognize that the development of light field imaging technology still faces challenges on multiple fronts. To achieve broader applications, future research needs to find a better balance between algorithmic innovation, hardware optimization, and cost-effectiveness. In summary, the future of light field imaging technology is promising, and it will continue to serve as an important tool in fields such as biomedical imaging, materials science, and industrial inspection. Through interdisciplinary collaboration and innovative thinking, we believe that more efficient and accurate imaging technologies can be realized, opening up new horizons for scientific research and practical applications.
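The trade-off between spatial and angular resolution described above can be illustrated with a minimal sketch. It assumes an idealized microlens-array (lenslet) light field camera in which every microlens covers exactly `angular_px` × `angular_px` sensor pixels; the function names, the sensor size, and the toy image are hypothetical and not taken from the review.

```python
import numpy as np

def split_resolution(sensor_px, angular_px):
    """Spatial samples per axis that remain when each lenslet
    spends angular_px pixels per axis on angular sampling."""
    if sensor_px % angular_px != 0:
        raise ValueError("sensor_px must be a multiple of angular_px")
    return sensor_px // angular_px

def lenslet_to_subapertures(raw, angular_px):
    """Rearrange a raw lenslet image (H, W) into a 4D light field
    views[v, u, y, x]: one low-resolution view per angular sample."""
    h, w = raw.shape
    ny, nx = h // angular_px, w // angular_px
    # Crop to a whole number of lenslets, then split each axis into
    # (lenslet index, pixel index under the lenslet).
    lf = raw[:ny * angular_px, :nx * angular_px]
    lf = lf.reshape(ny, angular_px, nx, angular_px)
    # Reorder axes from [y, v, x, u] to [v, u, y, x].
    return lf.transpose(1, 3, 0, 2)

# Toy example: a 12 x 12 sensor with 3 x 3 pixels under each lenslet.
raw = np.arange(12 * 12, dtype=float).reshape(12, 12)
views = lenslet_to_subapertures(raw, 3)
print(views.shape)                # 9 views of 4 x 4 spatial samples each
print(split_resolution(4096, 8))  # spatial samples per axis for a larger sensor
```

The total number of samples is unchanged by the rearrangement, which is the fixed-budget constraint in miniature: every additional angular sample per axis divides the spatial sampling along that axis.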

Objective Multimode fiber (MMF) has become one of the important media for short-reach fiber communication because of its high throughput. However, modal dispersion in the MMF produces a seemingly chaotic speckle pattern at the distal end of the MMF, from which the input information cannot be directly decoded using simple intensity detection. Information decoding from the speckle output of the MMF requires accurate characterization of the MMF's multiple-input-multiple-output (MIMO) transmission properties. Currently, popular computational imaging methods for retrieving the mapping functions of MIMO systems include transmission matrix (TM)-based methods and deep learning-based methods, both of which have their advantages and disadvantages. To solve the problem of precise decoding over an MMF, this study explores parallel information transmission over a kilometer-scale MMF based on the inverse transmission matrix (ITM) method. The results of this study could provide new insight for the development of short-reach fiber communication, spatial optical communication, and secure optical communication. Methods This article uses wavefront shaping to accurately measure the TM of the MMF, with a self-interference optical setup used to measure the complex optical field at the distal end of the MMF. Binary information and 256-level grayscale images with a resolution of 32×32 are used as the phase-encoded information of the input wavefront, which is modulated by a digital micromirror device and coupled into the MMF. The ITM method is then used to retrieve the encoded information from the chaotic speckle patterns. If the TM is not a square matrix, the pseudo-inverse of the matrix is used instead. Results and Discussions The experimental results are shown (Fig.3(a)). It can be seen that the ITM method can retrieve the phase-encoded information over the MMF, provided that the TM of the MMF and the complex light field at the output can be precisely measured. 
Comparisons with the experimental results obtained using the scattering-correlation scattering matrix (SSM) method are also shown (Fig.3(b)), where the two methods share the same TM. As can be seen from the retrieval results for uncorrelated random binary information, the highest accuracies of the SSM method and the ITM method are 75.9% and 100%, respectively, when γ = 121, and 64.3% and 100%, respectively, when γ = 64, where γ is the ratio between the output channel number and the input channel number. The high-fidelity reconstruction of 256-level grayscale images and the transmission of high-definition full-color video over the MMF using the ITM method are also experimentally demonstrated (Fig.4-5). These experimental results verify the effectiveness of the ITM method in unscrambling the modal dispersion in the MMF and achieving computational imaging. Conclusions As a high-throughput transmission carrier, MMFs have unique advantages in the field of information transmission. This article experimentally verifies the feasibility and advantages of the ITM method in achieving parallel information transmission over the MMF compared with the SSM method. The results show that high-fidelity decoding can be achieved for uncorrelated random binary information, 256-level grayscale images, and high-definition full-color video. Although precise measurement of the TM of the MMF has been achieved under our experimental conditions, the robustness of the ITM method to a time-varying environment may not be better than that of deep learning-based methods, because a TM measured at a certain moment cannot adapt to the long-term dynamic changes of the MMF. Combining the TM-based methods with the deep learning-based methods is an important future perspective. 
It is anticipated that the results presented in this article could facilitate the development of short-range optical communication, spatially multiplexed optical communication, optically secured and confidential communication, and long-distance optical logic communication. Meanwhile, the verified capability of grayscale image transmission could provide new ideas for practical applications such as holographic imaging, light-field projection, and endoscopic imaging.
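The decoding step of the ITM method, inverting the TM or taking its pseudo-inverse when it is not square, can be sketched numerically as follows. This is a minimal simulation under stated assumptions, not the authors' experimental pipeline: the TM is a random complex Gaussian matrix standing in for a measured one, the bits are encoded as phases 0 and π, and the channel counts, the value of γ, and the noise level are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

n_in = 64             # number of input channels (hypothetical)
gamma = 4             # output/input channel ratio (hypothetical)
n_out = gamma * n_in  # number of output channels

# Stand-in for a measured transmission matrix: random complex Gaussian.
tm = (rng.standard_normal((n_out, n_in))
      + 1j * rng.standard_normal((n_out, n_in))) / np.sqrt(2 * n_in)

# Random binary message, phase-encoded as 0 or pi (assumed encoding).
bits = rng.integers(0, 2, n_in)
e_in = np.exp(1j * np.pi * bits)

# Complex speckle field at the distal end, with a little measurement noise.
noise = 0.01 * (rng.standard_normal(n_out) + 1j * rng.standard_normal(n_out))
e_out = tm @ e_in + noise

# The TM is not square here, so the Moore-Penrose pseudo-inverse is used.
itm = np.linalg.pinv(tm)
e_rec = itm @ e_out

# A recovered phase near pi (negative real part) decodes to bit 1.
bits_rec = (e_rec.real < 0).astype(int)
accuracy = np.mean(bits_rec == bits)
print(f"bit accuracy: {accuracy:.3f}")
```

The sketch relies on the complex output field being available, which is why the text stresses precise measurement of both the TM and the output light field; with intensity-only detection this linear inversion would not apply directly.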

Objective With the rapid development of precision machining and smart manufacturing, there is an increasing demand for multi-dimensional information, including the shapes, deformations, and strains of complex structures like engine components and honeycomb structures, during their production and usage across fields such as aerospace, automotive industries, and industrial testing. The recently emerging digital image correlation-assisted fringe projection technology has demonstrated unique advantages in measuring the shape and deformation of complex structures. However, due to the distinct technical characteristics of these two methods, combining them for shape and deformation measurement presents several challenges in terms of measurement accuracy and efficiency, making it difficult to meet high-resolution and multi-dimensional measurement requirements, thus hindering the further application of this technology. This study reviews a multi-dimensional information sensing technology for complex structures based on fringe projection, developed by the research group in recent years, along with the latest research progress. It systematically revisits the technical challenges encountered when combining the two methods, such as fringe and speckle information interference, destruction of the original texture, and low encoding efficiency, and summarizes the corresponding solutions. Ultimately, it achieves multi-dimensional information sensing of complex structures such as composite woven structures, particle expansion damping structures, and laminated structures. The proposed solution upgrades the traditional fringe projection measurement system into a multi-dimensional information sensing system without additional hardware, enabling simultaneous measurement of 2D texture (T), 4D shape (x, y, z, t), and mechanical parameters related to analytical dimension (deformation and strain). 
This breakthrough overcomes technical barriers to high-resolution, high-precision texture shape reconstruction and deformation-strain analysis of complex structures, and is expected to find applications in the reliability analysis of complex structures and the performance evaluation of composite materials. Methods The multi-dimensional information sensing method based on the FPP system involves creating highly reflective and vivid fluorescent speckles on the surface of the object and then efficiently encoding the pattern using a structured light interlacing method to enhance projection efficiency. High-quality fringe, texture, and speckle information is then separated from the captured images using a general information separation method, providing accurate original data for subsequent deformation analysis. The shape data is matched, and three-dimensional displacement is calculated using DIC. Finally, deformation is further analyzed using chained strain analysis to improve calculation efficiency. This provides a comprehensive technical solution for combining FPP and DIC, suitable for complex dynamic scenes (Fig.6). Results and Discussions The measurement results for woven structures and standard balls show that the strength-chromaticity information separation method outperforms the other two traditional integration methods in phase accuracy and in the speckle quality evaluation functions SSSIG and MIG (Fig.14-15). A comparison with 3D-DIC on the shape reconstruction of honeycomb structures and woven structures shows that the proposed method obtains better shape data for complex regions (Fig.16-17). Additionally, better shape data can further guide sub-region division, especially for sub-regions that are difficult to observe in images and have discontinuities, where depth constraints can be applied to enhance deformation analysis accuracy (Fig.18). 
Finally, compared to 3D-DIC, the multi-dimensional information sensing method based on the FPP system has lower computational time and hardware cost (Fig.19). These comparative experiments verify the superiority of the proposed system. Conclusions The three-dimensional shape measurement technology based on fringe projection has been widely used in industrial inspection, cultural heritage preservation, intelligent driving, and other fields due to its high speed, accuracy, and full-field measurement capabilities. It has become a research hotspot in both scientific and engineering fields. To further enhance the application of fringe projection in the analysis of mechanical properties, researchers have proposed the DIC-assisted FPP technology and conducted extensive research in multiple areas. This study examines key technical issues within existing combined methods, such as the reconstruction of complex, high-noise surface topography, high-quality information separation, and accurate deformation and strain calculation. The proposed methods have been experimentally validated by the research team, showing advantages in information separation capability, handling of complex region topography, analysis of fracture region deformation, computational efficiency, and hardware cost. The multi-dimensional information sensing method based on the FPP system upgrades the existing hardware structure into a multi-dimensional measurement system, breaking through technical barriers in high-precision, high-resolution topography reconstruction and deformation and strain analysis of complex surfaces. The successful application of this technology to complex structures demonstrates its great potential in analyzing complex structures and fracture regions, and it is expected to be widely used in aerospace, the automotive industry, biomedicine, and other fields.
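The step from DIC displacement to strain can be illustrated with a generic sketch. It is not the chained strain analysis used in the study, whose details are not given here; it assumes in-plane displacement fields `u` and `v` sampled on a regular grid and computes the small-strain components by finite differences. The function name, grid size, and synthetic displacement values are hypothetical.

```python
import numpy as np

def small_strain(u, v, dx=1.0, dy=1.0):
    """Small-strain components from in-plane displacements u (along x)
    and v (along y), given on a regular grid indexed [row (y), column (x)]."""
    # np.gradient returns the derivatives along axis 0 (y), then axis 1 (x).
    du_dy, du_dx = np.gradient(u, dy, dx)
    dv_dy, dv_dx = np.gradient(v, dy, dx)
    exx = du_dx                   # normal strain along x
    eyy = dv_dy                   # normal strain along y
    exy = 0.5 * (du_dy + dv_dx)   # tensor shear strain
    return exx, eyy, exy

# Synthetic displacement field: 1% stretch along x, 0.3% contraction along y.
y, x = np.mgrid[0:20, 0:30].astype(float)
u = 0.01 * x
v = -0.003 * y

exx, eyy, exy = small_strain(u, v)
print(exx.mean(), eyy.mean(), exy.mean())
```

Measured displacement fields are noisy, so in practice the displacements are usually smoothed or fitted locally before differentiation; the sketch omits this step for brevity.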