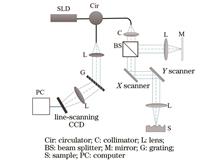

Objective

In conventional spectral domain optical coherence tomography (SD-OCT), depth information is obtained by fast Fourier transform (FFT), which gives an axial resolution typically on the order of 10 μm. Sub-micrometer resolution is achieved by employing broadband light sources. Phase-sensitive SD-OCT (PSSD-OCT) provides nanometer-level precision and can be employed for film thickness measurement, displacement sensing, optical fiber Fabry-Perot sensors, quantitative phase microscopy, and surface profile imaging. Phase wrapping is an inherent issue in optical interference techniques, and various phase unwrapping algorithms have been proposed to enhance the dynamic range. Current approaches typically first calculate a low-precision solution by frequency estimation and then determine the number of phase cycles. However, frequency estimation methods are highly susceptible to noise, which makes them suitable only for interference spectra with a high signal-to-noise ratio (SNR). Synthetic wavelength methods are widely adopted to expand the phase dynamic range. Because the synthetic wavelength is much larger than the wavelength of the light source, these methods extend the dynamic range to the size of the synthetic wavelength. However, phase wrapping still occurs when the measurement range exceeds the synthetic wavelength. To improve the dynamic range of existing synthetic wavelength methods, we propose a high dynamic range synthetic wavelength (HDR-SW) phase unwrapping method. This method removes the phase wrapping limitation and achieves a millimeter-scale dynamic range. It therefore enables displacement measurements with a large dynamic range, high sensitivity, and high speed.

Methods

The experimental system mainly consists of a fiber Michelson interferometer, a superluminescent diode (SLD) light source, and a spectrometer. Light from the SLD is directed into a fiber circulator and is then split into reference and sample beams by a beam splitter.
The beams reflected from the sample and reference arms enter the spectrometer, which has a spectral width of 30 nm and a spectral resolution of 0.0146 nm. Both the reference and sample arms are in free space, and achromatic lenses are used to eliminate the dispersion mismatch between the two arms. First, the synthetic phase is calculated by splitting the interference spectrum into two sub-spectra. Then, the correct integer number of phase cycles is computed from the full-length spectrum and from the half-length spectrum located in the middle of the spectrometer. Combining the demodulation results of the full-length and half-length interference spectra removes the ±1 phase cycle jumps that noise readily causes.

Results and Discussions

The experimental results demonstrate that the HDR-SW method enables high-sensitivity phase demodulation over a large dynamic range. Compared with the linear regression method, the HDR-SW method offers better noise immunity and higher precision [Fig. 2(f)-(i)]. The linear regression method unwraps the phase by comparing the phase differences between adjacent points. At low SNR, this unwrapping may introduce a 2π error and therefore a larger linear fitting error. In contrast, the proposed method directly calculates the unknown number of phase cycles, and combining the results of the full-length and half-length spectra corrects phase cycle jumps. However, when the error in the low-precision solution exceeds λc/2, where λc is the central wavelength, Eq. (7) introduces an error of λc into the high-precision solution. Conventional SD-OCT is frequently used to image multi-layer samples, with FFT employed for optical path demodulation. Because of the inherent frequency resolution limit of the FFT, the FFT method yields lower precision [Fig. 4(b) and (c)].
When the proposed method is applied to multi-layer samples, it is also subject to the frequency resolution limit. The interlayer spacing must be greater than π/Δk, and the interference spectra of the individual layers must be separated by filtering. The theoretical sensitivity of PSSD-OCT depends primarily on the phase sensitivity. In a common-path configuration, the sensitivity of this experimental system reaches the nanometer level. In a non-common-path configuration, environmental vibrations reduce it to tens of nanometers.

Conclusions

Phase wrapping is an inherent issue in optical interference techniques and limits the dynamic range of PSSD-OCT. A large dynamic range synthetic wavelength-based phase unwrapping method is proposed to extend the dynamic range of traditional synthetic wavelength methods. The correct integer number of phase cycles is computed from the full-length interference spectrum and the half-length interference spectrum located in the middle of the spectrometer. Combining the demodulation results of these two spectra eliminates the phase cycle jumps that noise readily causes. Imaging experiments on a step calibration block, a coin, and a circuit board demonstrate that this method enables high-sensitivity displacement demodulation with a large (millimeter-scale) dynamic range.
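The coarse-to-fine logic of synthetic-wavelength unwrapping can be illustrated in a few lines. This is a minimal sketch under stated assumptions and is not the authors' HDR-SW algorithm or their Eq. (7). It assumes that the wrapped phase of each (sub-)spectrum relates to the optical path difference z as φ = 2πz/λ (mod 2π), with effective wavelengths λ1 < λ2 for the two sub-spectra and λc for the central wavelength. It also omits the full-length/half-length consistency check that HDR-SW uses to suppress ±1 cycle jumps. The function names are hypothetical.

```python
import math


def wrap(phi: float) -> float:
    """Wrap a phase into [-pi, pi)."""
    return (phi + math.pi) % (2.0 * math.pi) - math.pi


def synthetic_wavelength(lam1: float, lam2: float) -> float:
    """Synthetic wavelength Lambda = lam1 * lam2 / (lam2 - lam1), assuming lam1 < lam2."""
    return lam1 * lam2 / (lam2 - lam1)


def unwrap_opd(phi1: float, phi2: float, phi_c: float,
               lam1: float, lam2: float, lam_c: float) -> float:
    """Two-step optical path difference estimate from wrapped phases.

    Assumed (hypothetical) model: phi = 2*pi*z/lam modulo 2*pi.
    The coarse step is unambiguous only for |z| < Lambda/2. If the coarse
    estimate is off by more than lam_c/2, the fine result jumps by lam_c.
    """
    big_lambda = synthetic_wavelength(lam1, lam2)
    # Low-precision solution: phi1 - phi2 = 2*pi*z/Lambda (mod 2*pi).
    z_coarse = big_lambda * wrap(phi1 - phi2) / (2.0 * math.pi)
    # Integer number of phase cycles at the central wavelength.
    m = round(z_coarse / lam_c - phi_c / (2.0 * math.pi))
    # High-precision solution from the central-wavelength phase.
    return lam_c * (m + phi_c / (2.0 * math.pi))


if __name__ == "__main__":
    lam1, lam2, lam_c = 0.8375, 0.8525, 0.845  # µm, illustrative values only
    z_true = 20.1234  # µm
    phases = [wrap(2.0 * math.pi * z_true / lam) for lam in (lam1, lam2, lam_c)]
    print(unwrap_opd(*phases, lam1, lam2, lam_c))
```

The rounding step is where noise matters. The integer m is correct only while the synthetic-wavelength estimate stays within half a central wavelength of the truth, which is why a cross-check such as the full-length/half-length comparison described above is needed in practice.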

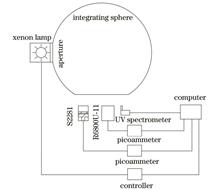

Objective

Ultraviolet detection technology is widely used in military and civilian fields. It plays an important role in missile warning, ultraviolet/infrared composite guidance, measurement of solar ultraviolet radiation intensity, ozone detection, biomedicine, and other areas. In recent years, with the development of ultraviolet optical remote sensing, quantitative research on ultraviolet radiation has become particularly important. As a type of ultraviolet detector, the solar-blind phototube reduces the impact of out-of-band leakage in ultraviolet radiation measurement, thereby improving detection accuracy in the ultraviolet band. Owing to these characteristics, it is often used in the missile approach warning systems of large military platforms. It is also commonly used in corona detection to locate faults quickly and effectively. In Europe and America, it has become a standard detection method in the electric power industry and is widely applied in equipment. Research on solar-blind phototube fabrication has only recently begun in China, and radiometric quantification of its response is one of the key steps toward application. Therefore, given the urgent need for high-precision radiometric calibration in the ultraviolet band and for exploring applications of the solar-blind phototube, its response nonlinearity needs to be studied.

Methods

Methods for studying detector response nonlinearity fall into two categories: direct and indirect. In the indirect method, the responsivity standard is transferred from a standard detector to the detector under test. We study the response nonlinearity of the solar-blind phototube detection system and establish a detector-based standard transfer chain.
For the measurement, we use an integrating sphere illuminated by an external xenon lamp as the radiation source. An adjustable aperture controls the luminous flux entering the sphere. This adjusts the radiance at the sphere exit port while keeping the spectrum consistent during adjustment. As a result, the reference detector and the detector under test can easily be measured synchronously under the same radiation conditions. In traditional measurement methods, the optical power level of the source is selected with a set of neutral density filters, used one at a time or in combination. Such methods require either a dual optical path for synchronous measurement of the reference detector and the detector under test, or a motor that repeatedly exchanges the positions of the two detectors to establish the standard transfer chain. We therefore provide a new approach to studying detector response nonlinearity. Compared with traditional methods, the proposed approach simplifies the optical path, relaxes the stability requirements on the light source, and eliminates errors and drifts caused by non-synchronous measurement. It also reduces sensitivity to environmental and interference factors, improves measurement repeatability and accuracy, and yields more reliable results.

Results and Discussions

We first analyze the spectral stability of the xenon lamp source and find no drift in its relative spectral radiance within 2 h. The peak value (wavelength: 308.558 nm) has a relative standard deviation of 0.254% over the measurement period, indicating little fluctuation.
Then, we propose a method for selecting a reference point, and 0.147 is selected as the reference responsivity based on the relative errors calculated in Table 3. Next, we examine the factors that influence indirect synchronous measurement. Using the correlation between the data measured by the detector under test and by the reference detector, we discuss how light source fluctuations and dark background noise interfere with the linearity measurement. The results show that the response nonlinearity of the detector under test can be studied over a photocurrent range of 2.97×10⁻¹⁰ A to 6.61×10⁻⁸ A. Finally, we use the principle that the transmittance of a neutral density filter is independent of the radiant output of the light source to study the responsivity nonlinearity of the reference detector. Its linearity error is within 0.69% when the response photocurrent exceeds 1.239×10⁻⁹ A, so it can serve as a standard detector with excellent linearity in the ultraviolet band.

Conclusions

We introduce an indirect method that establishes a transfer chain based on a standard detector, and we study the application of the standard detector method in laboratory calibration. Compared with the typical light flux superposition method, this method reduces tedious measurement procedures and the requirements on light source stability. The experiment uses an integrating sphere with an external xenon lamp as the radiation source. This source provides good illumination uniformity and simplifies the optical path compared with the typical dual-optical-path method. The results indicate that, for response photocurrents from 2.97×10⁻¹⁰ A to 6.61×10⁻⁸ A, the linearity error of the solar-blind phototube detection system is within 5.2%, and the nonlinear correction factor ranges from 0.948 to 1.006.
The main factor affecting the nonlinear correction factor is the dark background noise of the detector under test, and the uncertainty of the measurement system is 3.59% (k=2).
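The data reduction for the indirect (standard-detector) method can be sketched briefly. This is a sketch under stated assumptions, not the authors' procedure. It assumes that, for each aperture setting i, the photocurrents of the detector under test and of the reference detector are recorded synchronously, and that the reference detector is linear over the range used. The relative responsivity is taken as r_i = (I_test,i − I_dark,test) / (I_ref,i − I_dark,ref). The value r0 at a chosen reference point plays the role of the reference responsivity, such as the 0.147 selected above. The correction factor is then defined as c_i = r0 / r_i. This definition, the dark-current subtraction, and the function names are hypothetical.

```python
from typing import List, Sequence


def correction_factors(i_test: Sequence[float], i_ref: Sequence[float],
                       ref_index: int, i_dark_test: float = 0.0,
                       i_dark_ref: float = 0.0) -> List[float]:
    """Hypothetical nonlinear correction factors c_i = r0 / r_i.

    r_i is the dark-corrected ratio of test to reference photocurrent at
    level i, and r0 is that ratio at the chosen reference point.
    """
    if len(i_test) != len(i_ref):
        raise ValueError("i_test and i_ref must have the same length")
    ratios = [(t - i_dark_test) / (r - i_dark_ref)
              for t, r in zip(i_test, i_ref)]
    r0 = ratios[ref_index]
    return [r0 / r for r in ratios]


def linearity_errors(factors: Sequence[float]) -> List[float]:
    """Relative linearity error |r_i / r0 - 1| for each level."""
    return [abs(1.0 / c - 1.0) for c in factors]


if __name__ == "__main__":
    # Invented illustrative currents (arbitrary units), not measured data.
    ref = [1.0, 2.0, 4.0]
    test = [0.147, 0.294, 0.6174]
    c = correction_factors(test, ref, ref_index=0)
    print(c, linearity_errors(c))
```

In this formulation, an uncorrected dark background shifts every r_i by a level-dependent amount. This is consistent with the observation above that dark background noise dominates the correction factor at low photocurrents.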

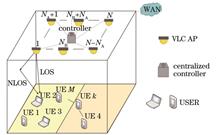

Objective

Indoor visible light communication (VLC) has been widely studied because it provides illumination and secure communication simultaneously. In practical indoor multiple-input multiple-output (MIMO) VLC environments, users usually concentrate in specific work areas, which results in a non-uniform user distribution. As the number of users grows, their tendency to connect to the nearest access point (AP) may overload some APs, a problem that most studies have rarely considered. In this paper, we introduce a backtracking AP assignment scheme using a channel gain weighting model. The scheme balances connections between APs and users, thereby reducing AP load and increasing the system sum rate. However, power allocation with coefficients designed from user channel gains alone cannot meet the communication needs of all users. Moreover, each user is interfered with by other users' signals, so the rate a user can achieve depends on how much power is allocated to those other signals. We therefore adopt a power allocation scheme based on the dimension-by-dimension dynamic sine cosine algorithm (DDSCA), improved with an optimized parameter r1 and an adaptive exploration strategy toward the optimal solution. This scheme redistributes user power so that no user's achievable rate falls below the threshold, which improves overall communication performance.

Methods

In MIMO scenarios, the non-uniform distribution of indoor users degrades communication quality because too many users access some APs. To address this, we propose a joint AP assignment and power allocation scheme that improves the system sum rate. A candidate list ℒ is constructed from the channel gain weight parameters of users and APs, and all APs are divided into subsets.
The performance of the scheme is explored dynamically based on ℒ as users join each AP subset. Power allocation coefficients are designed during this dynamic exploration, and the APs allocate power to users according to these coefficients. The backtracking AP allocation algorithm based on the channel gain weight model then balances the connections between APs and users, and a centralized controller directs the APs to serve the different users. After the optimal AP allocation is obtained, the DDSCA is improved by designing the transformation parameters and an adaptive exploration strategy toward the optimal solution, so that the communication needs of all users are satisfied.

Results and Discussions

For non-uniformly distributed indoor users (Fig. 5), the proposed scheme is compared with other schemes under different assumptions. The results show that the proposed scheme outperforms the other schemes in system sum rate, and its advantage grows as the number of users increases (Fig. 6). Using an improved DDSCA (IDDSCA) for power allocation effectively improves the user satisfaction index. IDDSCA also enables all users to reach the achievable rate threshold (Fig. 9), which increases the average user satisfaction index. The system sum rate (Fig. 7) and the average user satisfaction index (Fig. 10) are examined for receivers with different field-of-view (FOV) angles. A larger FOV angle significantly affects the channel gain and decreases the system sum rate. However, through dynamic AP and power allocation, the proposed scheme effectively mitigates the impact of FOV on both the system sum rate and the user satisfaction index. We also analyze the effect of the maximum AP transmit power on system performance (Fig. 8). As the AP transmit power increases from 6 W to 20 W, the proposed scheme increases the system sum rate by 15.62%. Higher transmit power therefore lets users maintain stable communication while achieving higher rates.

Conclusions

In this paper, we study how to maximize the system sum rate and improve user quality of service in MIMO VLC systems with non-uniformly distributed indoor users. We propose an AP allocation algorithm based on backtracking with the channel gain weight model, so that users select the APs that improve the system sum rate. To improve the sum rate while meeting users' communication requirements, power must be allocated to each user after it accesses an AP. We therefore propose IDDSCA, which allocates power so that every user reaches the achievable rate threshold. We analyze the system sum rate and user satisfaction of the proposed scheme under various environmental assumptions. Simulation results indicate that the improvement from the proposed AP allocation scheme becomes more pronounced as more users are added, and that the improved power allocation scheme effectively increases user satisfaction.
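The paper's IDDSCA (its optimized r1 and adaptive exploration strategy) is not specified in enough detail to reproduce. The sketch below therefore shows only the baseline sine cosine algorithm (SCA), on which DDSCA is presumably built, drawing the random numbers r2, r3, and r4 separately for each dimension. It applies SCA to a toy single-AP power allocation problem. The interference model (every user hears all other users' signals through its own gain), the penalty used to enforce the rate threshold, the gains, and all function names are hypothetical. None of them reproduces the paper's VLC channel or algorithm.

```python
import math
import random
from typing import List, Sequence, Tuple


def rates(p: Sequence[float], g: Sequence[float], noise: float) -> List[float]:
    """Toy per-user rates (bit/s/Hz): user k sees all other users' signals
    as interference through its own gain g[k] (hypothetical model)."""
    total = sum(p)
    return [math.log2(1.0 + gk * pk / (gk * (total - pk) + noise))
            for pk, gk in zip(p, g)]


def fitness(p, g, noise, r_th, penalty=10.0) -> float:
    """Sum rate minus a penalty for each user below the rate threshold."""
    r = rates(p, g, noise)
    return sum(r) - penalty * sum(max(0.0, r_th - rk) for rk in r)


def project(x: Sequence[float], p_max: float) -> List[float]:
    """Clip to non-negative powers and rescale so that sum(x) <= p_max."""
    x = [max(0.0, xi) for xi in x]
    s = sum(x)
    return [xi * p_max / s for xi in x] if s > p_max else x


def sca_power_allocation(g, noise, p_max, r_th, agents=20, iters=200,
                         a=2.0, seed=0) -> Tuple[List[float], List[float]]:
    rng = random.Random(seed)
    k = len(g)
    pop = [project([rng.uniform(0.0, p_max) for _ in range(k)], p_max)
           for _ in range(agents)]
    best = list(max(pop, key=lambda x: fitness(x, g, noise, r_th)))
    best_fit = fitness(best, g, noise, r_th)
    history = [best_fit]
    for t in range(iters):
        r1 = a - t * a / iters  # linearly decreasing conversion parameter
        for i, x in enumerate(pop):
            new = []
            for d in range(k):  # random numbers drawn per dimension
                r2 = rng.uniform(0.0, 2.0 * math.pi)
                r3 = rng.uniform(0.0, 2.0)
                r4 = rng.random()
                trig = math.sin(r2) if r4 < 0.5 else math.cos(r2)
                new.append(x[d] + r1 * trig * abs(r3 * best[d] - x[d]))
            pop[i] = project(new, p_max)
            f = fitness(pop[i], g, noise, r_th)
            if f > best_fit:  # keep the best solution found so far
                best, best_fit = list(pop[i]), f
        history.append(best_fit)
    return best, history
```

A penalty term is the simplest way to push every user above the threshold inside an unconstrained metaheuristic. The paper's own constraint handling may differ.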

Objective

In-band full-duplex (IBFD) communication transmits and receives signals simultaneously in the same frequency band, theoretically doubling spectral efficiency. However, leakage from the transmitter to the receiver causes severe self-interference (SI) that must be eliminated. Conventionally, SI is canceled in the electrical domain with electronic circuits. The electronic bottleneck, however, makes SI cancellation (SIC) of large-bandwidth signals difficult, and tunability is poor. Photonics-assisted SIC methods have been proposed to break this bottleneck. Multipath SI, however, arises from wireless channels and, more severely, from complex multiple-input multiple-output (MIMO) scenarios. To construct reference signals for it, existing photonics-assisted analog SIC schemes employ multiple parallel photonic links and many delay and amplitude tuning devices. Such schemes are complex and struggle to track the rapidly changing multipath channel response of practical wireless systems. Digital-domain processing has been combined with photonics-assisted SIC as an auxiliary means to reduce the complexity of constructing multipath SI references and to meet multipath SIC requirements. In IBFD MIMO systems, however, nonlinear distortion from the power amplifier and crosstalk among transmitting links also change the channel model, in addition to multipath SI. To date, no photonics-assisted SIC scheme has considered inter-channel crosstalk, nonlinear distortion, and multipath effects simultaneously, and this problem urgently needs study.

Methods

In IBFD MIMO communication systems, inter-channel crosstalk, nonlinear distortion, and multipath effects together produce exceptionally complex SI signals.
To eliminate complex SI in large-bandwidth scenarios, we propose a least squares (LS) algorithm-assisted scheme that cancels MIMO nonlinear SI in the optical domain and is followed by digital-domain SIC. A continuous-wave optical carrier is modulated in a dual-drive Mach-Zehnder modulator (DD-MZM) by the received signal and the digitally constructed reference signal. After the optical output of the DD-MZM is detected by a photodetector (PD), the complex SI in the received signal is suppressed. To construct the analog reference signal, the method models the MIMO multipath SI in the presence of inter-channel crosstalk and nonlinear distortion. The model parameters are estimated by the LS algorithm, and the analog reference signal for analog optical-domain SIC is then constructed from the fitted model. We also reduce the order of the LS algorithm and speed up reference construction by setting a threshold and ignoring low-power components of the SI signal, while preserving the analog SIC depth. The scheme uses two SIC steps: digitally assisted analog optical-domain SIC followed by digital-domain SIC. Together, these steps effectively eliminate the complex SI in IBFD MIMO communication systems.

Results and Discussions

The experiment assumes an IBFD MIMO scenario with two transmitting antennas. The SI signal has a center frequency of 1 GHz, a baud rate of 0.5 Gbaud, and a duration of 3.8 μs. Only the dominant third-order nonlinear distortion is considered, and the SI signal from each antenna has seven multipath components. The crosstalk coefficient is first set to 0.1. The filter tap coefficients estimated by the LS algorithm are normalized, and the running time of the LS algorithm is measured as a function of a threshold on these normalized tap coefficients.
When the threshold on the normalized tap coefficients increases from 0 to 0.2, the running time of the algorithm in MATLAB drops significantly, from about 0.25 s to 0.07 s. As the threshold increases, the LS-constructed SI signal becomes less accurate, and the cancellation depth of the analog optical-domain SIC decreases from about 27 dB to about 15 dB. However, after further digital-domain SIC, the overall SIC depth is about 35 dB. This is similar to the depth obtained with a low threshold followed by digital-domain SIC. When the crosstalk coefficient is set to 0.3, raising the threshold again greatly reduces the running time in MATLAB. In this case, the large inter-channel crosstalk implies relatively high multipath SI power. As a result, the analog optical-domain SIC does not degrade significantly as the threshold increases from 0 to 0.2, and its depth remains at about 28 dB. The experimental results show that setting the order of the LS algorithm appropriately for analog optical-domain SIC reduces the order of parameter estimation and the computational complexity, and speeds up construction of the analog reference signals.

Conclusions

We propose and experimentally demonstrate a low-complexity, digitally assisted nonlinear analog optical-domain SIC method for IBFD MIMO communication systems. With this method, the complex SI signal in IBFD MIMO communication systems can be accurately constructed and used for analog optical-domain SIC. When the LS algorithm is used to construct the analog reference signal, low-power components of the SI signal are ignored by setting a reasonable threshold. This reduces the order of parameter estimation and the computational complexity of the LS algorithm, and it speeds up reference construction.
The experimental results show that the proposed method can eliminate the MIMO multipath SI signals with inter-channel crosstalk and nonlinear distortion, and achieve an SIC depth of about 35 dB after analog and digital SIC when the SI signal carrier frequency and baud rate are 1 GHz and 0.5 Gbaud respectively. The proposed method provides a promising solution for the optical domain elimination of complex multipath SI signals in IBFD MIMO communication systems.
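The LS reference-construction step, including the threshold-based order reduction, can be illustrated digitally. The sketch below makes several hypothetical assumptions and covers only the digital estimation, not the optical DD-MZM stage. Each antenna's power amplifier input is its own baseband signal plus a crosstalk copy of the other antenna's signal (u1 = x1 + c·x2). The amplifier adds a third-order term (v = u + a3·u|u|²). Each path passes through a seven-tap multipath channel. Expanding this model gives, per delay, two linear terms and six third-order cross terms in (x1, x2), so the SI is linear in 56 coefficients that LS can estimate. The Gaussian test signals, channel taps, a3, SNR, and function names are invented. Only the crosstalk coefficient of 0.1, the seven multipath components, and the 0.2 threshold echo the text above.

```python
import numpy as np


def delayed(x: np.ndarray, lag: int) -> np.ndarray:
    """Return x(n - lag), zero-filled for n < lag."""
    out = np.zeros_like(x)
    out[lag:] = x[:len(x) - lag]
    return out


def basis(x1: np.ndarray, x2: np.ndarray, n_taps: int) -> np.ndarray:
    """Linear and third-order cross terms of (x1, x2) for every delay."""
    cols = []
    for lag in range(n_taps):
        a, b = delayed(x1, lag), delayed(x2, lag)
        cols += [a, b,
                 a * a * a.conj(), a * a * b.conj(), a * b * a.conj(),
                 a * b * b.conj(), b * b * a.conj(), b * b * b.conj()]
    return np.stack(cols, axis=1)


def ls_fit(phi: np.ndarray, y: np.ndarray) -> np.ndarray:
    theta, *_ = np.linalg.lstsq(phi, y, rcond=None)
    return theta


def thresholded_fit(phi, y, thr):
    """Fit, drop columns with normalized |coefficient| < thr, then refit."""
    theta = ls_fit(phi, y)
    keep = np.abs(theta) / np.max(np.abs(theta)) >= thr
    theta_k = ls_fit(phi[:, keep], y)
    residual = y - phi[:, keep] @ theta_k
    return keep, theta_k, residual


def depth_db(y: np.ndarray, residual: np.ndarray) -> float:
    """Cancellation depth: SI power over residual power, in dB."""
    return 10 * np.log10(np.sum(np.abs(y) ** 2) / np.sum(np.abs(residual) ** 2))


def simulate(n=4000, n_taps=7, crosstalk=0.1, a3=-0.05, snr_db=50.0, seed=1):
    """Hypothetical two-antenna SI with crosstalk, PA nonlinearity, multipath."""
    rng = np.random.default_rng(seed)

    def gauss():
        return (rng.standard_normal(n) + 1j * rng.standard_normal(n)) / np.sqrt(2)

    x1, x2 = gauss(), gauss()
    y = np.zeros(n, dtype=complex)
    for own, other in ((x1, x2), (x2, x1)):
        u = own + crosstalk * other             # inter-channel crosstalk
        v = u + a3 * u * np.abs(u) ** 2          # third-order PA nonlinearity
        h = np.exp(-np.arange(n_taps) / 2.0) * np.exp(
            1j * rng.uniform(0, 2 * np.pi, n_taps))
        y += np.convolve(v, h)[:n]               # multipath channel
    noise_pow = np.mean(np.abs(y) ** 2) / 10 ** (snr_db / 10)
    y += np.sqrt(noise_pow / 2) * (rng.standard_normal(n)
                                   + 1j * rng.standard_normal(n))
    return x1, x2, y
```

Raising the threshold removes the weak cross terms and tail taps, so fewer columns need to be estimated. The refitted residual can only grow, never shrink. The digital-domain SIC stage described above is meant to recover this lost depth.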

Objective

With the rapid growth of internet traffic, demand for large transmission capacity has grown dramatically across all sectors. Given limited device bandwidth and high hardware upgrade costs, typical capacity expansion methods include wavelength division multiplexing (WDM) and mode division multiplexing (MDM). At the same time, signal impairments caused by devices and fiber channels make advanced digital signal processing crucial for high-speed optical fiber communication. With the emergence and development of deep learning, machine learning-based equalization has become a hot topic in optical communications. At present, MDM experiments mainly use intensity modulation with direct detection (IMDD), and most rely on traditional single-channel, single-model linear equalizers for channel compensation. However, as the number of multiplexed mode channels grows and polarization multiplexing is added, the demands on nonlinear equalization increase. We combine WDM, MDM, polarization multiplexing, and advanced digital signal processing to construct a homodyne coherent transmission system based on a multiple-input multiple-output neural network equalizer (MIMO-NNE). We successfully equalize 16 channels of 48 Gbaud 16QAM signals after transmission over 100 km of few-mode fiber (FMF) on six modes: LP01, LP02, LP11a, LP11b, LP21a, and LP21b. The bit error rate (BER) of the MDM-WDM system meets the 15% soft-decision forward error correction (SD-FEC) threshold of 1×10⁻².

Methods

A 16-channel signal with 50 GHz spacing is generated at the transmitter. The channels are divided into odd and even groups, and each group combines the outputs of 8 external cavity lasers (ECLs) as optical carriers.
After transmitter-side digital signal processing (tx-DSP), the high-speed signal is loaded onto an in-phase/quadrature modulator (I/Q MOD) through an arbitrary waveform generator (AWG). Delay lines allow the optical signal modulated by a single modulator to be divided into multiple channels for multiplexing. The odd and even signals are each split in two by a 1×2 optical coupler (OC), decorrelated by a delay line, sent to the polarization multiplexer (PM), and combined by a 1×2 optical coupler. After amplification by an erbium-doped fiber amplifier (EDFA), the signal is split into 6 beams by a 1×6 optical coupler. Delay lines are again used to decorrelate the LP01, LP02, LP11a, LP11b, LP21a, and LP21b channels. After mode multiplexing, the wavelength division multiplexed signal is transmitted over 100 km of FMF. A 6-mode EDFA simultaneously amplifies and compensates the signal in each mode. At the receiver, after the mode demultiplexer, the 6 mode channels pass through a dense wavelength division multiplexing (DWDM) demultiplexer, and an optical switch gates the 6 wavelengths respectively. Finally, the coherent receiver (CR) performs polarization demultiplexing and homodyne coherent reception. A real-time digital storage oscilloscope (DSO) captures the baseband electrical signal for offline DSP. In receiver-side digital signal processing (rx-DSP), precise down conversion (DC) compensates for the system frequency offset. The signals then undergo Bessel filtering, resampling, and Gram-Schmidt orthogonalization (GSOP) to correct IQ imbalance. We also perform clock recovery (retiming) to eliminate timing errors, followed by chromatic dispersion compensation (CDC).
Finally, the MIMO-NNE performs nonlinear channel equalization to compensate for nonlinear impairments, and the BER is calculated.

Results and Discussions

Figure 5 shows the BER of the traditional MIMO-LMS algorithm and the proposed MIMO-NNE algorithm at different 6-mode EDFA currents. The MIMO-NNE algorithm achieves an average BER gain of about 0.02 over the MIMO-LMS algorithm. It also brings the BER of 16QAM transmitted over 100 km below the 1.0×10⁻² SD-FEC threshold. Fig. 6 shows the convergence of the mean square error (MSE) of the MIMO-NNE and MIMO-LMS algorithms for a comparable amount of iteration data, and the MIMO-NNE algorithm converges better than the MIMO-LMS algorithm. As shown in Figs. 7 and 8, the BER differs little across modes and wavelength sub-channels, and the BER of every channel after 100 km transmission is below the 1.0×10⁻² SD-FEC threshold.

Conclusions

In this study, we experimentally build a 6-mode, 16-wavelength, dual-polarization homodyne coherent transmission system. At the receiver, a MIMO-NNE based on multi-label technology is used for channel equalization. Over 100 km, the total system rate reaches 36.864 Tbit/s. With the help of MIMO-NNE, the BER of the MDM-WDM system meets the 15% SD-FEC threshold of 1×10⁻². The experimental results confirm the nonlinear equalization potential of MIMO-NNE for future high-capacity, long-distance transmission systems.
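As a consistency check on the reported total rate, the gross line rate follows from multiplying the number of wavelengths, modes, and polarizations by the symbol rate and the bits per 16QAM symbol (log2 16 = 4). This is our own arithmetic on the parameters stated above. It gives a raw rate before the 15% FEC overhead is removed:

```latex
R = N_\lambda \times N_\mathrm{mode} \times N_\mathrm{pol} \times R_s \times \log_2 M
  = 16 \times 6 \times 2 \times 48\ \mathrm{Gbaud} \times 4\ \mathrm{bit}
  = 36\,864\ \mathrm{Gbit/s} = 36.864\ \mathrm{Tbit/s}
```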

Objective

Sustainable societies increasingly emphasize renewable energy sources, which reflects a shift toward cleaner energy. Solar photovoltaic modules convert solar radiation into electricity to power instruments and devices. Electricity generation is typically increased in one of two ways: using higher-efficiency solar panels or enlarging the deployed area of the solar arrays. However, the former offers limited room for efficiency gains, while the latter significantly increases satellite launch cost. Non-imaging solar compound parabolic concentrators (CPCs) have attracted considerable attention because they operate efficiently and stably, are easy to construct, and are compatible with satellite systems. These properties reduce energy costs and increase the effective payload capacity of satellites. Using solar concentrators on satellites increases the sunlight captured by the solar wings. This raises energy output, reduces weight and volume, improves the stability and durability of the solar panels, and expands the application range of concentrators. With these advantages in mind, we design a truncated compound planar concentrator tailored to the operating characteristics of solar wings. We combine the real-time sun-earth distance, the earth-satellite spatial relationship, and solar radiation theory to develop a model of the solar radiation received by the solar wings. The findings provide useful guidance for the structural design and optimization of solar wings.

Methods

First, after analyzing the shortcomings of traditional S-CPC systems, we design a TMS-CPC surface structure based on the edge-ray tracing principle and model its three-dimensional geometry in software. A scaled-down model is then built by 3D printing to verify the concentrating performance of the TMS-CPC.
In the ground laboratory, parallel laser beams simulate sunlight and enable visual ray tracing through the coupled TMS-CPC system. This allows the concentration process and characteristics of the TMS-CPC acting on "solar rays" to be observed and recorded. Ray tracing simulations are also performed with optical simulation software, and the experimental values are compared with the simulated values to validate the model. Second, a real-time distance model is built from the spatial relationships among the sun, the earth, and the satellite, and the solar radiation received by the solar wing is calculated using spatial radiation theory. Simulations in the Satellite Tool Kit (STK) are used to study how the solar wing receives solar radiation with a congruent concentrating surface.

Results and Discussions

In the laser validation experiment of the solar wing TMS-CPC, factors such as the laser divergence angle, the high reflectivity of the flexible reflective membrane, and 3D printing manufacturing errors all affect the results. Even so, the simulated concentrating performance of the solar wing TMS-CPC agrees closely with the experimental values, with average absolute errors between 0.75 mm and 1.49 mm (Fig. 3). When the incident angle exceeds 6°, the optical efficiency of the TMS-CPC system decreases (Fig. 4). Comparing the theoretical and simulated values of solar radiation on the solar wing and of the satellite exposure characteristics gives average absolute errors of only 0.04 W/m² for the radiation model and 18.2 s for the exposure characteristics (Fig. 7 and Table 1).
We also analyze how the energy flux density on the solar panel surface varies with the incident angle of sunlight in the constructed solar wing TMS-CPC system. When sunlight is incident vertically (0°), the energy flux density on the panel surface is symmetric about the central axis. When sunlight is incident at angles within the acceptance half-angle (0°, 1°, and 2°), the peak energy flux density increases with the incident angle, while the average energy flux density remains constant (Fig. 8). The closer the incident angle is to the acceptance half-angle, the more uniform the energy flux density distribution on the panel surface (Fig. 9). The theoretical peak power generation of the solar wing with TMS-CPC is approximately 87% higher than that of traditional solar wings. Power generation shows the opposite trend to the sun-satellite distance (Fig. 10).

Conclusions

Based on the edge-ray tracing principle, we construct a truncated compound planar concentrator (TMS-CPC). We then combine real-time sun-earth distance calculation, earth-satellite spatial relationships, and solar radiation theory to model solar radiation reception by solar wings. The laser experiment data agree well with the simulation results, which confirms the reliability of the model. The solar wing TMS-CPC extends the acceptance angle range beyond that of the conventional S-CPC, providing sufficient error margin for satellite tracking systems. Notably, within the acceptance half-angle range, the average uniformity index on the solar panel surface reaches 0.615, which greatly enhances the panel's ability to capture solar radiation.
During one orbital cycle, the satellite stays mostly in the sunlit region, which provides favorable conditions for the photovoltaic components of the solar panels and ensures the satellite's long-term stable operation. This reduces energy costs and improves the overall economic benefit of the satellite. Numerical simulations of the power generated by a single solar wing coupled with the TMS-CPC, compared with traditional solar wings, show that the proposed design effectively increases the theoretical power generation.
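The distance dependence behind the sun-satellite trend can be illustrated with a minimal sketch of the inverse-square irradiance term. It assumes a total solar irradiance of 1361 W/m² at 1 AU, a first-order eccentricity approximation of the Earth-Sun distance, and a satellite-Sun distance equal to the Earth-Sun distance (the orbital altitude is negligible at this scale). The function names are hypothetical and this is not the authors' STK-based model: concentration by the TMS-CPC is left out, and eclipse geometry is reduced to a boolean flag.

```python
import math

# Assumed mean total solar irradiance at 1 AU (W/m^2); not a value from the paper.
S0 = 1361.0


def earth_sun_distance_au(day_of_year: int) -> float:
    """Approximate Earth-Sun distance in AU.

    First-order eccentricity model (e ~ 0.01672) with perihelion near day 4.
    The satellite-Sun distance is assumed equal to this value.
    """
    mean_anomaly = math.radians(0.9856 * (day_of_year - 4))
    return 1.0 - 0.01672 * math.cos(mean_anomaly)


def panel_irradiance(day_of_year: int, incidence_deg: float, in_sunlight: bool = True) -> float:
    """Irradiance (W/m^2) on a flat panel: inverse-square law times cosine projection.

    Returns 0 in eclipse or when sunlight arrives from behind the panel.
    """
    if not in_sunlight:
        return 0.0
    cos_i = math.cos(math.radians(incidence_deg))
    if cos_i <= 0.0:
        return 0.0
    r = earth_sun_distance_au(day_of_year)
    return S0 / r**2 * cos_i


if __name__ == "__main__":
    for day in (4, 95, 186):
        print(day, round(earth_sun_distance_au(day), 5), round(panel_irradiance(day, 0.0), 1))
```

Under these assumptions, normal-incidence irradiance near perihelion is about 7% higher than near aphelion, the same inverse relation between received radiation and distance that Fig. 10 reports for power generation.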

Objective
Display technology is essential for obtaining information. Among display technologies, holographic display is regarded as one of the most promising, as it can reconstruct all the information of real or virtual scenes without causing visual fatigue. Color holographic display is an important technology that records and reconstructs both the color and the three-dimensional (3D) information of the original object. Compared with monochrome holograms, color holograms reflect the real appearance of objects more faithfully and therefore have wider applications. In this paper, we propose an iterative method for generating multiplane color phase-only holograms based on time-division multiplexing. The method is based on the Gerchberg-Saxton (GS) algorithm: during hologram recording, amplitude constraints are imposed iteratively on each channel plane. The red (R), green (G), and blue (B) channel information of the color images is recorded in three separate phase-only holograms. During reconstruction, the R, G, and B channels are reconstructed at the same distances and overlap to form the target color images. Reconstruction results for one, three, and five color images are presented. Compared with the depth-division multiplexing (DDM) method, the proposed method improves the quality of the reconstructed color images and effectively avoids crosstalk between channel planes. Numerical simulations and optical reconstructions demonstrate the effectiveness of the proposed method.
Methods
In this study, the red, green, and blue channels of each color image are assigned the same distance during encoding. At the start of recording, the initial hologram of each color channel has a constant amplitude of 1 and a random phase. When the wavefront propagates to the object plane by angular spectrum diffraction, its amplitude is replaced by the amplitude of the object.
The amplitude constraint is relaxed by assigning a small nonzero value to the zero-intensity region of the object plane, and the phase is preserved. The wavefront then propagates back to the hologram plane, where the amplitude is replaced by a constant of 1 while the phase is preserved, and the process is repeated. Eventually, the three color channels are recorded in three separate holograms. During reconstruction, the three color channels are reconstructed at the same set distance, which recovers the original color images. Zero-padding the original images effectively reduces the speckle noise of the reconstructed color images. Because laser speckle often degrades the reconstructed images in optical experiments, we also adopt time averaging: through temporal integration, the intensities reconstructed from multiple holograms are superimposed, which suppresses speckle noise. In the optical reconstruction system, chromatic aberration of the objective lens may give the red, green, and blue channels different image sizes, so we build the optical system with achromatic elements to avoid inconsistent sizes and distances of the reconstructed images.
Results and Discussions
The proposed method performs well in both numerical simulation and optical experiment (Fig. 6). Both the proposed method and the DDM method reconstruct a single-color image well. However, the color image reconstructed by the DDM method shows color deviation, possibly because a single hologram must record the images of all color channels.
Our method reconstructs the original color image well. We use the correlation coefficient as an index of color image reconstruction quality; the correlation coefficients of the proposed method for single and multiple color images are higher than those of the DDM method (Fig. 7). The DDM method is very limited in reconstructing multiplane color images: it must place multiple color channels at different distances when recording a hologram, which is very difficult within a limited computational distance. Crosstalk between channels then inevitably occurs, leading to color deviation. Because reconstructing n color images with DDM means reconstructing n×3 channels, the probability of crosstalk increases greatly. In contrast, when we reconstruct n color images, each of the three holograms reconstructs only n planes. Our method can therefore reconstruct more color images, although the distances between images must be chosen carefully to avoid crosstalk.
Conclusions
In this paper, we propose a phase-only hologram generation method for reconstructing multiplane color images. During recording, the red, green, and blue channels of the color images are recorded in three separate holograms, and the original color images are reconstructed at the set distances. The traditional DDM method must encode the information of multiple color channels in one hologram, which degrades the reconstructed images and causes crosstalk. Our method avoids crosstalk between planes by setting suitable distances between them during recording, and it maintains high quality when reconstructing multiplane color images. The correlation coefficients of the proposed method are significantly higher than those of the DDM method when reconstructing one and three images.
Both numerical simulation and optical experiment results demonstrate the effectiveness of the proposed method.
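The per-channel iterative loop described in the Methods can be sketched in NumPy as follows: angular spectrum propagation, the relaxed object-plane amplitude constraint, the unit-amplitude hologram constraint, and the correlation coefficient used as the quality index. This is a minimal sketch under stated assumptions, not the authors' code: the grid is square, propagation is FFT-based (so the field is treated as periodic), dark target regions receive a constant relaxation value of 0.05, and for several planes the loop visits them sequentially. All function names and parameter values are hypothetical.

```python
import numpy as np


def angular_spectrum(field, z, wavelength, dx):
    """Propagate a square complex field over distance z (m) by the angular spectrum method.

    FFT-based, so the field is treated as periodic; evanescent components are dropped.
    A negative z propagates backward.
    """
    n = field.shape[0]
    fx = np.fft.fftfreq(n, d=dx)
    FX, FY = np.meshgrid(fx, fx, indexing="ij")
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    kz = 2.0 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    H = np.where(arg > 0, np.exp(1j * kz * z), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * H)


def multiplane_gs(targets, distances, wavelength, dx, iters=30, relax=0.05, seed=0):
    """Phase-only hologram for ONE color channel (hypothetical sketch).

    targets: list of amplitude images for this channel, one per plane
    distances: propagation distance (m) of each plane
    relax: small nonzero amplitude imposed where the target is zero (relaxed constraint)
    """
    rng = np.random.default_rng(seed)
    holo = np.exp(2j * np.pi * rng.random(targets[0].shape))  # amplitude 1, random phase
    for _ in range(iters):
        for target, z in zip(targets, distances):  # planes are visited sequentially
            obj = angular_spectrum(holo, z, wavelength, dx)
            amp = np.where(target > 0, target, relax)
            obj = amp * np.exp(1j * np.angle(obj))  # replace amplitude, keep phase
            back = angular_spectrum(obj, -z, wavelength, dx)
            holo = np.exp(1j * np.angle(back))  # amplitude forced back to 1
    return np.angle(holo)


def correlation_coefficient(a, b):
    """Pearson correlation coefficient between two images."""
    a = a - a.mean()
    b = b - b.mean()
    return float((a * b).sum() / np.sqrt((a * a).sum() * (b * b).sum()))


# Demo with hypothetical parameters: one channel (532 nm), one image plane.
N, DX, WL, Z = 64, 8e-6, 532e-9, 0.1
target = np.zeros((N, N))
target[24:40, 24:40] = 1.0
phase = multiplane_gs([target], [Z], WL, DX)
recon = np.abs(angular_spectrum(np.exp(1j * phase), Z, WL, DX))
cc = correlation_coefficient(recon, target)

phase0 = multiplane_gs([target], [Z], WL, DX, iters=0)  # random phase, no iterations
cc_random = correlation_coefficient(np.abs(angular_spectrum(np.exp(1j * phase0), Z, WL, DX)), target)
```

For a color image, the function would be called three times, once per R, G, B channel with that channel's wavelength and the same list of distances, giving the three phase-only holograms that are displayed in turn.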

Objective
The gray level residual (GLR) field is the intensity difference between corresponding voxels in digital volume images acquired before and after deformation. Internal damage in a material typically induces substantial grayscale variations between corresponding voxels, so the GLR field helps reveal the damage location. In finite-element-based global digital volume correlation (DVC), the GLR field serves as the matching quality criterion, is readily calculated, and has been used to characterize the evolution of internal cracks. However, the widely used subvolume-based local DVC, which outputs displacement, strain, and correlation coefficient at discrete calculation points, cannot obtain the GLR directly. Compared with the correlation coefficient and deformation information, the GLR field evaluates matching quality voxel by voxel and therefore visualizes internal damage better. Accurate GLR calculation in local DVC is thus valuable for compensating for its shortcomings in fine matching-quality evaluation and for extending its applications to internal damage observation and localization.
Methods
The GLR field is obtained by subtracting the reference volume image from the deformed volume image after full-field correction. The key to its calculation is to correct the deformed volume image with continuous voxelwise data, including the contrast and brightness correction coefficients and the displacement. In this work, a dense interpolation algorithm based on a finite element mesh is used to estimate the voxelwise data within the volume of interest (VOI): a 3D Delaunay triangulation first generates a tetrahedral element mesh from the discrete calculation points, and the data at voxels inside each tetrahedral element are then determined with the finite element shape functions.
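The dense interpolation step can be sketched with SciPy's `Delaunay` triangulation: for each voxel, `find_simplex` locates the enclosing tetrahedron, and the affine `transform` gives its barycentric coordinates, which are exactly the linear four-node tetrahedral shape functions N1 to N4. The function name, array layout, and demo data are hypothetical; voxels outside the convex hull of the calculation points are left as NaN rather than extrapolated.

```python
import numpy as np
from scipy.spatial import Delaunay


def dense_interpolate(calc_points, calc_values, voxels):
    """Interpolate local-DVC results from scattered calculation points to voxels.

    calc_points: (n, 3) coordinates of the DVC calculation points
    calc_values: (n, k) data at those points, e.g. u, v, w, contrast a, brightness b
    voxels:      (m, 3) voxel coordinates inside the VOI
    Returns an (m, k) array; voxels outside the tetrahedral mesh are NaN.
    """
    tri = Delaunay(calc_points)             # tetrahedral mesh of the calculation points
    simplex = tri.find_simplex(voxels)      # enclosing tetrahedron per voxel (-1 = outside)
    inside = simplex >= 0
    out = np.full((len(voxels), calc_values.shape[1]), np.nan)
    s = simplex[inside]
    T = tri.transform[s]                    # (m_in, 4, 3) affine maps to barycentric coords
    b = np.einsum("mij,mj->mi", T[:, :3, :], voxels[inside] - T[:, 3, :])
    shape_fn = np.column_stack([b, 1.0 - b.sum(axis=1)])  # linear shape functions N1..N4
    out[inside] = np.einsum("mi,mik->mk", shape_fn, calc_values[tri.simplices[s]])
    return out


# Demo on synthetic data: irregular calculation points and a linear displacement field.
rng = np.random.default_rng(1)
corners = np.array([[x, y, z] for x in (0, 40) for y in (0, 40) for z in (0, 40)], dtype=float)
pts = np.vstack([corners, rng.uniform(0, 40, size=(150, 3))])
A = np.array([[0.010, 0.002], [0.020, 0.000], [-0.005, 0.001]])  # hypothetical gradients
c = np.array([0.3, 1.0])
vals = pts @ A + c
g = np.arange(1, 40, 2, dtype=float)
vox = np.stack(np.meshgrid(g, g, g, indexing="ij"), axis=-1).reshape(-1, 3)
dense = dense_interpolate(pts, vals, vox)
```

Because barycentric weights reproduce any linear field exactly, the demo recovers the synthetic linear field at every voxel; real DVC data would only be approximated piecewise-linearly.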
After the voxelwise data of the VOI in the reference volume image are acquired, the corrected deformed volume image can be reconstructed. Because corresponding voxels in the deformed volume image generally fall at subvoxel positions, subvoxel intensity interpolation is required, as in the correlation residual calculation of local DVC. In this work, cubic B-spline interpolation is used to estimate the grayscale of the corrected deformed volume image. In addition, a simulated mode I crack test and a tensile test of nodular cast iron are carried out to verify the feasibility of the local-DVC-based GLR field and its reliability and robustness in damage observation and detection.
Results and Discussions
In the simulated mode I crack test, the uncorrected GLR field retains higher grayscale values than the corrected GLR field even in regions far from the crack (Fig. 7), which hampers damage observation and localization. Contrast and brightness correction is therefore necessary when calculating the GLR field. After threshold processing, the crack plane is clearly detected in the GLR field, and its position is very close to the preset value (Fig. 7). The proposed local-DVC-based GLR thus effectively eliminates the influence of contrast and brightness changes and achieves precise crack localization. The GLR field also provides more information about the damage: in the real test, the crack morphology and orientation can be determined from the slice at y = 40 voxel, and debonding between the nodular graphite and the matrix can be roughly detected (Fig. 10).
It should be noted that the post-processed GLR field reflects only the approximate morphology of the damage and cannot accurately reflect the opening of cracks and debonding, because the interpolation used in displacement correlation may enlarge the damaged region. Nevertheless, the location and morphology of the damage extracted from the GLR field help in understanding the fracture mechanics behavior of nodular graphite cast iron.
Conclusions
A simple and practical method for calculating the GLR field by post-processing local DVC measurements is proposed. It addresses the limitation of existing local DVC in fine matching-quality evaluation. Compared with the correlation coefficient and deformation information, the GLR field not only accurately reflects the location of internal damage but also allows visual observation of internal crack morphology and interface debonding. It has potential for broader applications in visualizing and precisely locating internal damage in materials and structures.
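Once voxelwise displacement and correction coefficients are available, the GLR computation itself is short. The sketch below assumes the linear intensity model corrected(x) = a(x)·g(x + u(x)) + b(x) for the deformed image g, which is a common choice but not confirmed by the text, and follows the stated sign convention (corrected deformed image minus reference). SciPy's `map_coordinates` with `order=3` and its default spline prefilter performs the cubic B-spline interpolation. The demo volumes, shift, and coefficients are hypothetical.

```python
import numpy as np
from scipy.ndimage import map_coordinates


def glr_field(reference, deformed, disp, contrast=1.0, brightness=0.0):
    """GLR = corrected deformed image - reference image.

    disp: (3, *reference.shape) voxelwise displacement (voxels) mapping reference voxel x
          to its deformed position x + u(x)
    contrast, brightness: voxelwise arrays or scalars a(x), b(x) in the assumed model
          corrected(x) = a(x) * g(x + u(x)) + b(x)
    """
    coords = np.indices(reference.shape, dtype=float) + disp
    # order=3 with the default prefilter = cubic B-spline interpolation at subvoxel positions
    warped = map_coordinates(deformed, coords, order=3, mode="nearest")
    return contrast * warped + brightness - reference


# Demo: smooth synthetic volume, rigid subvoxel shift plus a contrast/brightness change.
shape = (32, 32, 32)
u = np.array([1.5, -0.7, 0.3])
a_true, b_true = 0.8, 5.0


def intensity(x, y, z):
    return 100 + 20 * np.sin(2 * np.pi * x / 16) * np.cos(2 * np.pi * y / 20) + 10 * np.sin(2 * np.pi * z / 12)


X, Y, Z = np.indices(shape, dtype=float)
ref = intensity(X, Y, Z)
deformed = (intensity(X - u[0], Y - u[1], Z - u[2]) - b_true) / a_true
disp = np.broadcast_to(u[:, None, None, None], (3, *shape))
glr = glr_field(ref, deformed, disp, contrast=a_true, brightness=b_true)
glr_uncorrected = glr_field(ref, deformed, disp)   # no contrast/brightness correction
inner = (slice(6, -6),) * 3                        # ignore boundary-affected voxels
```

As in the simulated crack test, the uncorrected residual stays far from zero everywhere, while the corrected one is close to zero in undamaged material. Thresholding, for example `np.abs(glr) > t` with a user-chosen `t`, would then give a crude damage mask.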

Objective
Because the number of spectral channels strongly affects the system complexity, the data load, and the temporal and spatial resolution of images, a multispectral color imaging system intended to accurately reproduce the visible spectral reflectance of object surfaces should use a limited number of spectral channels. However, few studies have justified the number of spectral channels statistically. Heuristic or even arbitrary channel configurations undermine the purpose of multispectral color imaging, namely accurate spectral reconstruction and color reproduction. The problem is even more pressing with the emergence of various color imaging sensor modalities, such as those with liquid crystal tunable filters (LCTFs), multispectral filter arrays (MSFAs), and recently developed nanostructured color filters. Previous evidence shows that the spectral transmittances of optimal filter sets for multispectral color cameras can be described by Gaussian curves. We build on a previously proposed multi-objective optimization method for selecting filters with a specified number of channels and systematically explore how to determine the optimal number of spectral channels for typical multispectral color imaging systems with filters modeled by Gaussian functions.
Methods
The workflow for optimizing the number of spectral channels in the multispectral color imaging system is illustrated in Fig. 1. First, we present a systematic theoretical treatment of spectral sensitivity optimization by filter selection for broadband multispectral imaging and of the method for optimizing the channel number, which, to the best of our knowledge, can scarcely be found in the literature published so far.
The highlight of the proposed method is that it extends the previously proposed multi-objective filter selection method to the determination of the optimal channel number. Then, we investigate the optimal number of channels through simulation experiments. With the Munsell spectral reflectance dataset as the spectral imaging target, imaging simulations and reflectance reconstruction are conducted under 10 noise levels using the spectral sensitivity of an actual CCD image sensor, the spectral power distribution of illuminant D65, and filter transmittance curves generated by a Gaussian filter model. This involves 29 virtual multispectral cameras, i.e., channel numbers from 3 to 31.
Results
1) Determination of the optimal number of channels. The serial numbers of the optimal filters and the corresponding accumulative scores under different channel numbers are presented in Table 1. Figure 3 illustrates the concentration index of multi-objective functions (CMFs) under different channel numbers. Figure 4 depicts the performance of the best filter sets in terms of CIEDE2000 and MSE under different channel numbers, and Fig. 5 displays the accumulative scores of the best filter sets' performance over the 29 channel numbers. 2) Characterization of the filter set with the optimal channel number. The optimal number of channels for a multispectral color imaging system is 5 (Fig. 6) when the maximum number of channels is no greater than 8. Figure 7 presents the transmittance curves of the optimal Gaussian filter sets with five and nine channels, and Table 3 presents the characteristics of the optimal five-channel Gaussian filter sets under two different illuminants.
Table 4 compares the performance indices of the optimal five-channel Gaussian filter sets under different illuminants.
Conclusions
Six conclusions can be drawn from the results. 1) For broadband multispectral color imaging, increasing the number of channels does not always improve spectral reconstruction accuracy; a smaller number of spectral channels can simultaneously satisfy the requirements on color difference and spectral reconstruction error. 2) By applying multi-objective optimization within the optimal filter range for each channel, i.e., by extending the concept of CMF, a unique optimal number of channels can be obtained. 3) In general, a higher noise level (i.e., a lower signal-to-noise ratio) leads to worse performance indicators, but individual indicators may vary nonlinearly with noise in different ways. 4) CIEDE2000 is more sensitive to noise than MSE and PSNR, as indicated in Fig. 7, while the latter two are more scattered. 5) Under the multi-objective optimization principles of this study, the optimal number of spectral channels for Gaussian filters with fewer than eight channels is 5 under illuminant D65. Moreover, compared with illuminant A, D65 improves the spectral reconstruction and color reproduction performance of the five-channel Gaussian filters. 6) Optimal filters with the same number of channels may differ under light sources with different spectral distributions, mainly in the geometric characteristics of their transmittance curves, primarily their bandwidths.
Their performance in color reproduction and spectral reconstruction also differs significantly. In brief, the spectral transmittance of the optimal color filter set can be described by a series of Gaussian curves, and the number of spectral channels significantly affects both performance and complexity. We systematically explore how to determine the optimal number of spectral channels for typical multispectral color imaging systems. Modeling the spectral channels of color imaging systems of different physical modalities as Gaussian channels, and then determining the necessary number of color filter channels, would be of great theoretical and practical significance for optimizing multispectral color imaging systems.
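A small simulation harness in the spirit of the Methods makes the pipeline concrete: Gaussian filter transmittances, a camera response model (filter × illuminant × sensor), additive noise, and linear least-squares reflectance reconstruction scored by spectral MSE. Everything here is a stand-in and labeled as such: an equal-energy illuminant replaces D65, a broad Gaussian replaces the measured CCD sensitivity, smooth synthetic spectra replace the Munsell data, the filters are evenly spaced rather than chosen by the multi-objective optimization, and CIEDE2000 and PSNR are omitted. Function names and parameters are hypothetical.

```python
import numpy as np

WL = np.arange(400.0, 701.0, 10.0)  # 31 wavelength samples, nm


def gaussian_filters(centers, fwhm):
    """Gaussian transmittance curves, one row per channel (peak transmittance 1)."""
    c = np.asarray(centers, dtype=float)
    sigma = np.broadcast_to(np.asarray(fwhm, dtype=float), c.shape) / (2 * np.sqrt(2 * np.log(2)))
    return np.exp(-0.5 * ((WL[None, :] - c[:, None]) / sigma[:, None]) ** 2)


def synthetic_reflectances(n, rng):
    """Smooth stand-in spectra (NOT the Munsell data), scaled to [0, 1]."""
    basis = gaussian_filters(np.linspace(400, 700, 6), 80.0)
    refl = rng.random((n, 6)) @ basis
    return refl / refl.max()


def evaluate(k, noise_std, seed=0, n_train=300, n_test=100):
    """Test-set spectral MSE for k evenly spaced Gaussian channels (hypothetical setup)."""
    rng = np.random.default_rng(seed)
    sensor = gaussian_filters([550.0], 250.0)[0]   # stand-in for a measured CCD sensitivity
    illum = np.ones_like(WL)                       # equal-energy stand-in for D65
    M = gaussian_filters(np.linspace(420, 680, k), 50.0) * illum * sensor  # (k, 31)
    refl = synthetic_reflectances(n_train + n_test, rng)
    clean = refl @ M.T                             # camera responses
    resp = clean + rng.normal(0.0, noise_std, clean.shape) * clean.max()  # additive noise
    W, *_ = np.linalg.lstsq(resp[:n_train], refl[:n_train], rcond=None)  # linear reconstruction
    est = resp[n_train:] @ W
    return float(np.mean((est - refl[n_train:]) ** 2))


if __name__ == "__main__":
    for noise in (0.0, 0.01):
        print(noise, [f"{evaluate(k, noise):.2e}" for k in range(3, 9)])
```

Sweeping `k` and `noise_std` in this way reproduces the structure of the experiment (channel number versus noise level), but the numbers it prints say nothing about the paper's optimum of five channels, which depends on the real sensor, illuminant, dataset, and optimized filter selection.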

Objective
The liquid crystal lens is an emerging device whose focus can be tuned electrically without mechanical movement, enabling focusing, zooming, and depth measurement; it is therefore widely employed in fields such as photography, microscopic imaging, and virtual reality. Owing to the anisotropy of liquid crystal materials, a liquid crystal lens modulates only the extraordinary ray (e-ray), while the ordinary ray (o-ray) is not modulated, which reduces image contrast. Polarizing devices can be added to liquid crystal lens imaging systems to remove the o-ray component of the incident light, but a polarizer drastically reduces the optical flux and degrades imaging quality. There are three main polarizer-free imaging techniques. The first uses blue-phase liquid crystals instead of nematic liquid crystals to build the lens; however, blue-phase liquid crystals have small birefringence, a narrow temperature range, and a high driving voltage, and have not yet reached practical use. The second uses multilayer instead of single-layer liquid crystals to modulate both components of the incident light, but the multilayer structure increases device thickness and fabrication cost. The third applies a polarizer-free imaging algorithm; here, it is combined with the unsharp masking model in a new way to acquire high-quality images.
Methods
To reduce the non-ideal low-frequency component introduced by the unmodulated ordinary ray, a liquid crystal lens imaging system without a polarizer captures one focused image and one unfocused image, and the two images are processed together.
Meanwhile, an unsharp masking model for polarizer-free imaging is proposed: it analyzes pixel value changes in the images to estimate the percentage of the o-ray component, and the unfocused and focused images are then combined to obtain a high-contrast image.
Results and Discussions
The optical properties of the liquid crystal lens used in the experiment are examined first. The experimental results show that the optical focal length is linearly related to the optical aberration of an ideal glass lens. Moreover, the aberration of the liquid crystal lens is close to that of an ideal glass lens, giving high imaging quality, so the lens can serve as the focusing unit of the imaging system (Fig. 7). A polarizer is placed in front of the liquid crystal lens to remove the o-ray component of the incident light, and different voltages are applied across the lens to capture the ISO 12233 chart. The fMTF50 is used to characterize the resolving power of the system, evaluate image quality, and determine the optimal operating voltage (Fig. 10). Focused and unfocused images are then captured and processed with a set of preset values, and the unsharp masking model is used for edge detection to check for abnormal "depressions" and "bulges". The polarization direction of the incident polarized light is measured and recorded (Table 1). Plotting the ideal and measured values against the polarization angle shows that the measured values lie close to the ideal curve, indicating that the method obtains the actual values accurately and processes the images effectively (Fig. 14).
Meanwhile, we photograph a real scene and process the image: the image obtained by the polarizer-free liquid crystal lens imaging method is clear, natural, and high in contrast, and the value calculated by the unsharp masking model applies to actual measurements (Fig. 16).
Conclusions
We propose a polarizer-free imaging technique for liquid crystal lenses based on the unsharp masking model and experimentally verify its feasibility. The technique uses the unsharp masking model to estimate the proportion of the o-ray component in ambient light by analyzing changes in image pixel values. This value is then used to process the focused and unfocused images, reducing the non-ideal low-frequency component introduced by the unmodulated o-ray and yielding a natural, high-quality image. In addition, the ratio of the o-ray component is measured under simulated ambient light incidence with different polarization directions, and the experimental results are consistent with the theoretical analysis.
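One way to read the unsharp masking model, stated here as an assumption rather than the authors' exact formulation: if α is the fraction of light in the unmodulated o-ray and the unfocused capture I_u equals the image that the o-ray forms, the focused capture is I_f = (1 − α)S + αI_u, where S is the ideal sharp e-ray image. Solving gives S = I_f + [α/(1 − α)](I_f − I_u), which is an unsharp mask with gain α/(1 − α). Overestimating α produces overshoot ("bulges" and "depressions") at edges, while underestimating it leaves residual blur, which suggests picking the largest α that does not overshoot. The sketch implements that heuristic on a synthetic 1D edge; the function names, grid, tolerance, and blur kernel are hypothetical.

```python
import numpy as np


def restore(i_focused, i_unfocused, alpha):
    """Unsharp-masking restoration under the assumed model
    i_focused = (1 - alpha) * sharp + alpha * i_unfocused."""
    gain = alpha / (1.0 - alpha)
    return i_focused + gain * (i_focused - i_unfocused)


def estimate_alpha(i_focused, i_unfocused, grid=np.linspace(0.0, 0.9, 91), tol=1e-3):
    """Largest alpha on the grid whose restoration shows no edge overshoot
    ("bulge" above the focused image's maximum or "depression" below its minimum)."""
    lo, hi = i_focused.min(), i_focused.max()
    best = 0.0
    for a in grid:
        r = restore(i_focused, i_unfocused, a)
        overshoot = max(r.max() - hi, lo - r.min())
        if overshoot > tol * (hi - lo):
            break  # overshoot grows monotonically once alpha exceeds the true value
        best = float(a)
    return best


# Synthetic 1D edge (hypothetical): sharp e-ray image and Gaussian-blurred o-ray image.
x = np.arange(200)
sharp = (x >= 100).astype(float)
kernel = np.exp(-0.5 * (np.arange(-15, 16) / 5.0) ** 2)
kernel /= kernel.sum()
blurred = np.convolve(np.pad(sharp, 15, mode="edge"), kernel, mode="valid")
alpha_true = 0.5  # unpolarized ambient light splits evenly between e- and o-rays
i_on = (1 - alpha_true) * sharp + alpha_true * blurred
alpha_est = estimate_alpha(i_on, blurred)
```

For unpolarized light (α = 0.5) the gain is 1, so the restoration reduces to 2I_f − I_u; polarized ambient light shifts α and hence the gain, which is why the o-ray proportion has to be estimated.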

Objective
Spectral computed tomography (CT) exploits the differences in the attenuation coefficients of substances across energy channels and shows significant capabilities in material identification and analysis. In particular, photon-counting spectral CT, which substantially suppresses electronic noise and improves resolution, represents the latest technological advance in CT imaging. However, photon starvation, charge sharing, and pulse pile-up cause severe noise in photon-counting spectral CT, which directly degrades image reconstruction quality and hampers the application of the technology. Our research focuses on accurately characterizing the statistical properties of projection data noise in photon-counting detectors, designing precise spectral CT reconstruction algorithms, and suppressing noise.
Methods
First, the statistical noise characteristics of the projection data of photon-counting detectors are analyzed theoretically. The statistical distributions of photon flux and electronic noise in the projection data are considered jointly: the photon flux follows a compound Poisson distribution, approximated by a Gamma distribution, and the electronic noise follows a Gaussian distribution. From these, a theoretical noise distribution model of the projection data is derived with Bayes' formula. Statistical inference is then carried out on this model: on the one hand, the probability distribution of the noise is fitted to actual data; on the other hand, a goodness-of-fit test is conducted on the model.
Finally, time series analysis is used for prediction, and the predicted values are used to restore outliers in the projection data.
Results and Discussions
We derive a rigorous theoretical noise distribution model for photon-counting spectral CT projection data (Eq. 9), whose expression resembles the univariate p-norm distribution. The rationality of characterizing the projection data noise with the univariate p-norm distribution is then analyzed from three perspectives. In fitting the probability distribution of actual data, the proposed univariate p-norm noise model agrees more closely with the data than the Gaussian, Poisson, and Gamma distributions, especially under extremely low photon flux, and gives the best fit (Fig. 2). A goodness-of-fit test on the proposed distribution (Table 1) shows that it is consistent with the various collected datasets, with the best consistency for low-photon-flux datasets. Lastly, restoring outliers with the predicted values yields clear improvements in both visual images (Fig. 4) and quantitative assessments (Table 2). The univariate p-norm distribution thus aptly characterizes the statistical properties of photon-counting spectral CT data. However, its probability density function is difficult to calculate, so, depending on the value of p, it is approximated by a linear combination of Gaussian and Laplace distributions.
Conclusions
We investigate the statistical noise characteristics of photon-counting spectral CT projection data and propose modeling the projection data noise with the univariate p-norm distribution. The distribution is verified by fitting the probability density functions of actual data and by statistical inference tests.
The univariate p-norm distribution characterizes the statistical law of observational errors well; in particular, when photons are insufficient, it fits the actual data distribution best. The statistical probability model of projection data from the photon-counting detection system enables in-depth analysis of system performance and accurate noise simulation in simulation experiments, and provides an accurate objective function for likelihood optimization in statistical iterative reconstruction. By exploring the statistical noise characteristics of projection data in photon-counting detectors, we enrich the theory of X-ray spectral CT imaging systems and provide theoretical support for the design and optimization of multi-spectral image reconstruction.
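For reference, the univariate p-norm distribution is commonly written as f(x) = p^(1 − 1/p) / (2σΓ(1/p)) · exp(−|x − μ|^p / (pσ^p)). Taking this standard form is an assumption here (the paper's Eq. 9 is not reproduced); it coincides with SciPy's generalized normal distribution `scipy.stats.gennorm` with shape β = p and scale p^(1/p)·σ. The sketch evaluates the density, checks that p = 2 and p = 1 recover the Gaussian and Laplace cases, and fits p by maximum likelihood over a grid. `fit_pnorm` is a hypothetical helper; it does not implement the authors' Gaussian-Laplace approximation or their goodness-of-fit test.

```python
import math

import numpy as np
from scipy.optimize import minimize_scalar


def pnorm_pdf(x, mu=0.0, sigma=1.0, p=2.0):
    """Univariate p-norm density
    f(x) = p**(1 - 1/p) / (2 sigma Gamma(1/p)) * exp(-|x - mu|**p / (p sigma**p)).
    p = 2 gives the Gaussian (std sigma); p = 1 gives the Laplace (scale sigma)."""
    x = np.asarray(x, dtype=float)
    c = p ** (1.0 - 1.0 / p) / (2.0 * sigma * math.gamma(1.0 / p))
    return c * np.exp(-np.abs(x - mu) ** p / (p * sigma**p))


def fit_pnorm(data, p_grid=np.arange(1.0, 3.01, 0.05)):
    """Maximum-likelihood fit over a grid of p (hypothetical helper); returns (p, mu, sigma).

    For each p, mu minimizes sum |x - mu|**p, and sigma has the closed-form ML estimate
    sigma**p = mean |x - mu|**p, which also makes the maximized log-likelihood closed-form.
    """
    data = np.asarray(data, dtype=float)
    n = data.size
    best = None
    for p in p_grid:
        res = minimize_scalar(lambda m: np.sum(np.abs(data - m) ** p),
                              bounds=(data.min(), data.max()), method="bounded")
        mu = float(res.x)
        sigma = float(np.mean(np.abs(data - mu) ** p) ** (1.0 / p))
        loglik = n * ((1 - 1 / p) * math.log(p) - math.log(2 * sigma)
                      - math.lgamma(1 / p) - 1 / p)
        if best is None or loglik > best[0]:
            best = (loglik, float(p), mu, sigma)
    return best[1:]


rng = np.random.default_rng(0)
p_gauss = fit_pnorm(rng.normal(0.0, 2.0, 20000))[0]   # Gaussian data: p should be near 2
p_lap = fit_pnorm(rng.laplace(0.0, 1.0, 20000))[0]    # Laplace data: p should be near 1
```

Fitting p to real projection data in the same way shows where the noise sits between the Laplace-like (p near 1) and Gaussian-like (p near 2) regimes.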

Objective
In the precision polishing stage of optical element fabrication, optical interferometry is often employed to measure the surface shape and the transmitted wavefront. Among interferometric methods, shearing interferometry interferes the light wave under test with a spatially displaced copy of itself, so no reference wave is needed. At present, synchronous phase shifting is the interferometric technique with the best vibration resistance: it acquires multiple phase-shifted interferograms simultaneously, from which a phase-shifting algorithm recovers the wavefront under test. Combining shearing interferometry with synchronous phase shifting enables common-path phase-shifting measurement of the phase under test and removes the influence of environmental vibration and air turbulence on the measurement. Research on synchronous phase-shifting shearing interferometry is therefore significant for measuring the transmitted wavefront and surface shape of optical elements. Because wavefront measurements are affected by the surface error of the reference mirror, insufficient use of light energy, environmental vibration, and air turbulence, we propose a synchronous phase-shifting shearing interferometry method based on polarization grating beam splitting, which achieves high-precision measurement of transmitted and reflected wavefronts.
Methods
The proposed method realizes synchronous phase-shifting shearing interferometry with polarization grating beam splitting and can be used to test the transmitted wavefront. The shearing module is a reflective lateral shearing structure composed of a polarization grating, a plane mirror, and a quarter-wave plate.
The polarization grating is a diffractive optical element that splits light selectively according to the polarization state of the incident light. It divides the beam carrying the wavefront under test into two orthogonal circularly polarized beams, which are reflected by the plane mirror back through the polarization grating, where they are sheared laterally again. After passing through the quarter-wave plate, they become orthogonal linearly polarized beams with a certain lateral shear. The phase-shifting module is a synchronous phase-shifting structure composed of a two-dimensional phase grating, a small-aperture diaphragm, and a phase retarder array. The orthogonal linearly polarized beams are diffracted by the two-dimensional phase grating, and the (±1, ±1) diffraction orders are selected by the small-aperture diaphragm. The phase retarder array then introduces the phase shifts, and interference occurs after the beams pass through a linear polarizer. Phase-shifting shearing interferograms in the perpendicular direction are obtained by rotating the polarization grating. Moreover, by adding a module that generates the surface wavefront of the optical element in front of the shearing module, the surface shape of the optical element can also be measured. The x- and y-direction shearing interferograms collected by the CCD are processed with a phase-correlation-based image registration algorithm, the four-step phase-shifting algorithm, and a DCT-based phase unwrapping algorithm to obtain the phase distribution under test. The wavefront under test is then reconstructed with a least-squares wavefront reconstruction algorithm based on differential Zernike polynomials.
Results and Discussions
We build a phase-shifting shearing interferometer based on the polarization grating on a laboratory optical table and measure a lens with a diameter of 25.4 mm and a focal length of 50 mm.
The PV and RMS values of the measured wavefront are 0.5366λ and 0.1519λ, respectively (Fig. 7). Comparison with the results of an SID4 wavefront sensor (Fig. 8) confirms the accuracy of the method, and a repeatability experiment confirms the stability of its results. We then build a surface shape measuring device based on polarization grating synchronous phase-shifting shearing interferometry on the laboratory optical table and measure a concave mirror with a diameter of 25.4 mm and a focal length of 50 mm. The PV and RMS values of the measured wavefront are 0.6044λ and 0.1669λ, respectively (Fig. 13). A comparison with the SID4 wavefront sensor (Fig. 14) and a repeatability experiment again verify the accuracy and stability of synchronous phase-shifting shearing interferometry based on the polarization grating.
Conclusions
A phase-shifting shearing interferometry method based on polarization grating beam splitting is studied for measuring the transmitted wavefront and surface shape of optical elements. The method employs a reflective shearing structure based on polarization grating splitting, with a compact and flexible optical configuration. Compared with a traditional grating, the polarization grating offers very high diffraction efficiency, splits the energy evenly between the two beams, and uses light energy efficiently. Combining shearing interferometry with synchronous phase shifting achieves quasi-common-path phase-shifting measurement of the wavefront under test, which removes the influence of environmental vibration and air turbulence on the measurement.
The shearing interferograms in the x and y directions are registered with a phase-correlation image registration algorithm, and the four-step phase-shifting algorithm and a DCT-based phase unwrapping algorithm are then applied to obtain the shearing phase distribution. The wavefront under test is reconstructed with a least-squares wavefront reconstruction algorithm based on differential Zernike polynomials. The results show that the method is accurate and stable and enables high-precision dynamic wavefront measurement, which is significant for measuring the surface shape and transmitted wavefront of optical elements.
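The DCT-based unwrapping step can be sketched as the unweighted least-squares method of Ghiglia and Romero: the wrapped phase differences define a discrete Poisson equation with Neumann boundaries, and the DCT-II diagonalizes that equation. This is a minimal sketch under that assumption (the abstract does not say whether weights or a different DCT variant were used), and the test phase is hypothetical. The DCT is written as an explicit orthonormal matrix so the example needs only NumPy; for large images `scipy.fft.dctn(..., norm="ortho")` would be the usual choice.

```python
import numpy as np


def dct_matrix(n):
    """Orthonormal DCT-II matrix: C @ x is the DCT-II of x and C.T @ X inverts it."""
    k = np.arange(n)[:, None]
    j = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * k * (2 * j + 1) / (2 * n))
    c[0, :] /= np.sqrt(2.0)
    return c


def wrap(angle):
    """Wrap angles to (-pi, pi]."""
    return np.angle(np.exp(1j * angle))


def unwrap_dct(psi):
    """Unweighted least-squares phase unwrapping via a DCT Poisson solver (Neumann boundaries)."""
    m, n = psi.shape
    dx = np.zeros_like(psi)
    dy = np.zeros_like(psi)
    dx[:, :-1] = wrap(np.diff(psi, axis=1))  # wrapped x-differences, zero past the last column
    dy[:-1, :] = wrap(np.diff(psi, axis=0))  # wrapped y-differences, zero past the last row
    # Divergence of the wrapped gradient = right-hand side of the discrete Poisson equation.
    rho = dx + dy
    rho[:, 1:] -= dx[:, :-1]
    rho[1:, :] -= dy[:-1, :]
    cm, cn = dct_matrix(m), dct_matrix(n)
    rho_hat = cm @ rho @ cn.T
    # Eigenvalues of the Neumann Laplacian in the DCT-II basis.
    ky = np.arange(m)[:, None]
    kx = np.arange(n)[None, :]
    eig = 2.0 * np.cos(np.pi * ky / m) + 2.0 * np.cos(np.pi * kx / n) - 4.0
    eig[0, 0] = 1.0  # placeholder to avoid 0/0; the piston term is undetermined
    phi_hat = rho_hat / eig
    phi_hat[0, 0] = 0.0  # choose a zero-mean solution
    return cm.T @ phi_hat @ cn


# Hypothetical smooth phase spanning several multiples of 2*pi (gradients stay below pi per pixel).
yy, xx = np.mgrid[0:64, 0:80].astype(float)
true_phase = 0.004 * ((xx - 30) ** 2 + (yy - 20) ** 2) + 0.1 * xx
unwrapped = unwrap_dct(wrap(true_phase))
# Least squares recovers the phase up to a constant (piston) offset.
offset_free_error = (unwrapped - unwrapped.mean()) - (true_phase - true_phase.mean())
print(np.abs(offset_free_error).max())
```

When the true phase changes by less than π between neighbouring pixels, the wrapped differences equal the true differences and the solution is exact up to piston; noise or phase residues turn it into a least-squares compromise rather than an exact recovery.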

Objective
Optical transmission materials are an important class of optical materials, widely used in optical displays and optical communication, and their optical properties play a key role in the whole optical system. These properties mainly include optical uniformity, optical thickness, surface shape, striae, and bubbles. The design parameters of an optical parallel plate are strictly controlled: if its optical uniformity, optical thickness, surface shape, or other optical parameters are inconsistent, the wavefront of light passing through the plate is altered, degrading the performance of the optical system. Optical uniformity, thickness, and surface shape are therefore important performance indicators of optical materials for high-precision optical systems. To address the slow speed, low efficiency, and small measurement range of existing methods for measuring the optical parameters of parallel plates of different thicknesses, we propose a wavelength phase-shifting interferometry method based on characteristic polynomials.
Methods
Combining the two-step absolute measurement method with a theoretical study of multi-surface interference, we design a weighted multi-step wavelength phase-shifting algorithm based on characteristic diagrams and characteristic polynomial theory, which is used to extract the surface shape, optical thickness variation, and optical uniformity of the plate. The evaluation function of the phase-shifting algorithm and its Fourier representation are used to show the algorithm's immunity to errors, and the algorithm is finally compared with the OPL algorithm.
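The characteristic polynomial theory referred to here is, we assume, Surrel's formalism: an N-sample algorithm computes S = Σ c_k I_k with complex weights c_k, its characteristic polynomial is P(x) = Σ c_k x^k, and with nominal phase step δ and ζ = e^{iδ} the m-th fringe harmonic enters S multiplied by P(ζ^m). The algorithm rejects harmonic m when P(ζ^m) = 0, and a double root at ζ^{-1} additionally makes it insensitive to phase-step miscalibration. The sketch applies this check to the textbook four-step algorithm, not to the 77-step algorithms of this work, whose weights are not given in the abstract.

```python
import numpy as np


def char_poly(weights):
    """Return P(x) = sum_k weights[k] * x**k for complex sampling weights."""
    coeffs = np.asarray(weights, dtype=complex)[::-1]  # np.polyval wants the highest power first
    return lambda x: np.polyval(coeffs, x)


# Four-step algorithm with delta = pi/2: S = I0 - 1j*I1 - I2 + 1j*I3 = 2B*exp(1j*phi).
c4 = [1, -1j, -1, 1j]
P = char_poly(c4)
zeta = np.exp(1j * np.pi / 2)

# |P(zeta**m)| is the gain applied to harmonic m of the fringe signal.
# m = -3 passes with gain 4, so the four-step algorithm is sensitive to the third harmonic.
gains = {m: abs(P(zeta**m)) for m in range(-3, 4)}
print({m: round(g, 6) for m, g in gains.items()})

# Roots of P: 1 (rejects DC), -1 (rejects the second harmonic), -1j = zeta**-1
# (removes the conjugate term m = -1). The root at zeta**-1 is simple, so there is
# no first-order immunity to phase-step miscalibration.
roots = np.roots(np.asarray(c4, dtype=complex)[::-1])
print(roots)

# The phase follows from arg(S) because P(zeta) = 4 is real and positive.
phi = 0.7
frames = [1.0 + 0.5 * np.cos(phi + k * np.pi / 2) for k in range(4)]
S = sum(c * frame for c, frame in zip(c4, frames))
print(np.angle(S))
```

Designing a longer algorithm in this framework amounts to placing roots of P(x) at the powers of ζ that must be rejected (harmonics, other fringe frequencies) and choosing their multiplicity to gain tolerance to step errors.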
The weighted multi-step wavelength phase-shifting algorithm based on characteristic polynomial theory is designed as follows. First, the measured plate is placed in an interference cavity, and 77 interferograms are recorded by wavelength-tuning phase-shifting interferometry. In each interferogram, the region where the interfering beams overlap contains six groups of first-order interference fringes. Characteristic diagrams are designed from these interferograms, and the corresponding characteristic polynomials are written. Each characteristic polynomial is expanded, and the sampling amplitudes are obtained by solving the resulting equations together with the relative frequency amplitudes of the target information. Substituting these quantities into the phase calculation formula gives the phase, which is unwrapped and de-tilted to obtain the corresponding wavefront. The plate is then removed and the empty cavity is measured; the cavity phase is likewise unwrapped and de-tilted to obtain the cavity wavefront. Finally, the optical uniformity of the parallel plate is obtained by substituting the two wavefronts into the calculation formula.
Results and Discussions
The proposed method measures the optical uniformity of thin parallel plates of different thicknesses quickly and with high precision.
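In wavelength-tuning phase shifting, each pair of reflecting surfaces produces its own fringe frequency, because the phase step per frame is 2πDΔν/c for an optical path difference D and an optical-frequency increment Δν; this is what allows the six fringe groups in each interferogram to be separated. A weighted N-sample algorithm then acts as a tuned filter on the intensity sequence at each pixel. The sketch below uses 77 samples to match the frame count above but substitutes Hann weights for the characteristic-polynomial-derived weights, which the abstract does not list; the fringe frequencies, amplitudes, and phases are hypothetical.

```python
import numpy as np


def weighted_phase(samples, f_target, weights):
    """Phase (referenced to sample 0) of the component at f_target cycles per sample.

    Computes S = sum_k w_k * I_k * exp(-2j*pi*f_target*k) and returns arg(S).
    With real, non-negative weights, a target component a*cos(phi + 2*pi*f_target*k)
    contributes (a/2) * exp(1j*phi) * sum(w); the other components leak in only
    through the sidelobes of the weight spectrum.
    """
    k = np.arange(len(samples))
    s = np.sum(weights * samples * np.exp(-2j * np.pi * f_target * k))
    return np.angle(s)


# Hypothetical intensity sequence at one pixel: a background plus six fringe groups,
# each with its own frequency (cycles per frame, proportional to its path difference).
n_frames = 77
k = np.arange(n_frames)
freqs = np.array([0.08, 0.13, 0.20, 0.27, 0.33, 0.40])
phases = np.array([0.3, -1.2, 0.9, 2.0, -2.5, 1.4])
amps = np.array([0.5, 0.4, 0.6, 0.3, 0.4, 0.2])
signal = 2.0 + sum(a * np.cos(p + 2 * np.pi * f * k) for a, p, f in zip(amps, phases, freqs))

estimate = weighted_phase(signal, f_target=0.20, weights=np.hanning(n_frames))
print(estimate, phases[2])  # the estimate should lie close to the true phase 0.9
```

The residual error here comes from spectral leakage of the neighbouring fringe groups; an algorithm designed with characteristic polynomials instead places exact zeros at those frequencies, which is the advantage the abstract claims over generic windowing.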
For the 77-step phase-shifting algorithm and the OPL algorithm, the PV and RMS errors are on the order of 10⁻⁸, and the PV errors of the surface shape and optical thickness variation are within λ/100. The PV and RMS errors of the calculated optical uniformity of the parallel plate are on the order of 10⁻⁷, and the PV errors of the surface shape and optical thickness are within λ/100. The results of the two methods are essentially consistent, and the measurement accuracy for the 40 mm parallel plate is slightly higher than that for the 5 mm plate (Table 4). However, the 77-step algorithm is far more computationally efficient and faster than the OPL algorithm because it requires far fewer interferogram samples (Table 5).
Conclusions
We study a multi-step wavelength phase-shifting algorithm based on characteristic polynomial theory. Building on conventional phase-shifting algorithms and characteristic polynomial theory, three sets of 77-step algorithms are designed for the three measurement targets: the surface shape, optical thickness variation, and optical uniformity of parallel plates. The evaluation function of each algorithm is also plotted to show its sensitivity and immunity to errors. The measurement results show that the 77-step phase-shifting algorithm suppresses harmonic errors, phase-shifting errors, and the errors arising from their coupling. The algorithm also requires fewer interferograms, giving high detection efficiency, balances computational efficiency with high precision, and is suitable for measuring the optical parameters of parallel plates of different thicknesses. It thereby addresses the drawbacks of existing algorithms: the large number of interferograms required, heavy computation, only partial error compensation, and sensitivity to harmonic frequency mismatch or deviation.
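The claim that a well-designed algorithm suppresses phase-shifting errors can be checked numerically by miscalibrating the phase step. The sketch below is a stand-in under stated assumptions: it compares the textbook four-step algorithm with Hariharan's five-step algorithm, which is known to cancel a linear phase-step error to first order, rather than the 77-step algorithms of this work; the 5% step error is hypothetical.

```python
import numpy as np


def frames(phi, step, offsets):
    """Ideal fringe samples I = A + B*cos(phi + o*step), with A = 1 and B = 0.5."""
    return [1.0 + 0.5 * np.cos(phi + o * step) for o in offsets]


def four_step(phi, step):
    """Four-step algorithm; exact only when step == pi/2."""
    i0, i1, i2, i3 = frames(phi, step, range(4))
    return np.arctan2(i3 - i1, i0 - i2)


def hariharan_five_step(phi, step):
    """Hariharan five-step algorithm with samples at offsets -2..2 around phi."""
    i1, i2, i3, i4, i5 = frames(phi, step, range(-2, 3))
    return np.arctan2(2 * (i2 - i4), 2 * i3 - i1 - i5)


phi = np.linspace(-np.pi, np.pi, 721)
step = 1.05 * np.pi / 2  # hypothetical 5 % phase-step miscalibration
max_err = {}
for name, algorithm in [("four-step", four_step), ("five-step", hariharan_five_step)]:
    err = np.angle(np.exp(1j * (algorithm(phi, step) - phi)))  # wrap the error to (-pi, pi]
    max_err[name] = np.abs(err).max()
print(max_err)
```

With exact π/2 steps both algorithms are exact. Under miscalibration the four-step error grows linearly with the step error, while the five-step error is only second order, which is the behaviour an evaluation-function plot of phase-shift sensitivity is meant to reveal.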

Objective
Digital image correlation (DIC), also known as digital speckle correlation, is a non-contact optical measurement method that obtains the deformation of a region of interest by correlating two digital images of the specimen recorded before and after deformation. DIC mainly consists of an integer-pixel displacement search followed by an iterative sub-pixel displacement calculation. Commonly used sub-pixel methods include surface fitting, the gray-gradient method, the Gauss-Newton (G-N) method, the Newton-Raphson (N-R) method, and the inverse compositional Gauss-Newton (IC-GN) method. Among these, the N-R and G-N methods are second-order nonlinear optimization methods that converge quickly to the optimal solution. However, in the G-N method, when the Hessian matrix is nearly singular (close to non-positive definite), the error in inverting it grows and the final solution can be wrong. This inversion error also grows when the speckle texture is weak and the deformation is large, in which case the integer-pixel search cannot provide an accurate initial estimate and the calculation eventually fails. Because inverse compositional algorithms are computationally more efficient than these methods, we use them to compute sub-pixel displacement fields of deformed speckle images and explore several variants.
Methods
The inverse compositional diagonal approximation algorithm and the inverse compositional Dog-Leg algorithm, which are used in image matching, are applied to the displacement-field calculation of speckle images, and the parameter update strategy of the inverse compositional Levenberg-Marquardt algorithm is simplified.
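The G-N, Levenberg-Marquardt, and Dog-Leg update rules differ only in how they turn the Hessian H and gradient g of the correlation criterion into a parameter increment. Below is a minimal sketch of the textbook Gauss-Newton, Levenberg-Marquardt (with Marquardt's diagonal scaling), and Powell dog-leg steps on a hypothetical, nearly singular 2 × 2 Hessian of the kind a weakly or one-directionally textured subset produces. It does not reproduce the diagonal approximation of IC-Diag or the simplified IC-LM update of this work, which the abstract does not specify; the damping λ = 1 and trust radius 1 are arbitrary choices.

```python
import numpy as np


def gauss_newton_step(H, g):
    """Gauss-Newton increment: solve H dp = -g (ill-conditioned when H is nearly singular)."""
    return -np.linalg.solve(H, g)


def lm_step(H, g, lam):
    """Levenberg-Marquardt increment with Marquardt's scaling: (H + lam*diag(H)) dp = -g."""
    return -np.linalg.solve(H + lam * np.diag(np.diag(H)), g)


def dogleg_step(H, g, radius):
    """Powell's dog-leg increment restricted to a trust region of the given radius."""
    p_gn = gauss_newton_step(H, g)
    if np.linalg.norm(p_gn) <= radius:
        return p_gn  # full Gauss-Newton step fits inside the trust region
    p_sd = -(g @ g) / (g @ H @ g) * g  # Cauchy point along steepest descent
    if np.linalg.norm(p_sd) >= radius:
        return -radius * g / np.linalg.norm(g)  # truncated steepest-descent step
    d = p_gn - p_sd  # walk from the Cauchy point toward p_gn until the boundary
    a, b, c = d @ d, 2.0 * (p_sd @ d), p_sd @ p_sd - radius**2
    tau = (-b + np.sqrt(b * b - 4.0 * a * c)) / (2.0 * a)
    return p_sd + tau * d


# Hypothetical nearly singular Hessian (intensity varying almost only along one direction).
H = np.array([[1.0, 0.999], [0.999, 1.0]])
g = np.array([1.0, -1.0])
steps = {
    "GN": gauss_newton_step(H, g),
    "LM": lm_step(H, g, lam=1.0),
    "dog-leg": dogleg_step(H, g, radius=1.0),
}
for name, step in steps.items():
    print(name, np.linalg.norm(step))
```

The Gauss-Newton step blows up along the poorly constrained direction, while damping (LM) and a trust region (dog-leg) keep the increment bounded, which is the motivation the Objective gives for looking beyond plain G-N.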
Compression deformation experiments on simulated and real speckle images are used to evaluate the three algorithms in terms of convergence speed, convergence frequency, and computation speed. The convergence frequency is evaluated under different displacements and Gaussian noise levels, and the convergence speed and computation speed are evaluated for different subset window sizes, with and without Gaussian noise. Finally, the three algorithms are used to measure the deformation of a rubber block, and the results are compared with those of the open-source software DICe.
Results and Discussions
In the simulated speckle deformation experiments, the first-order algorithms show almost the same convergence speed and final accuracy, and for simple rigid-body translation they converge faster and more accurately than the second-order algorithms. Among the second-order algorithms, convergence speed and final accuracy generally decrease in the order IC-LM2, IC-DogLeg2, IC-Diag2, IC-GN2. The first-order algorithms also have a higher convergence frequency than the second-order algorithms. When the displacement is less than five pixels, all the algorithms successfully calculate the displacement at every point of interest (POI), and the convergence frequency gradually decreases as the deformation increases. Among the second-order algorithms, convergence frequency decreases in the order IC-Diag2, IC-DogLeg2, IC-LM2, IC-GN2. As the subset window size increases, the convergence radius of the algorithms gradually increases; the convergence frequencies of IC-GN2, IC-DogLeg2, and IC-LM2 become nearly equal, while IC-Diag2 gradually overtakes the others.
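IC-GN is the baseline against which the variants are compared. A minimal translation-only sketch, assuming a zero-order (pure translation) shape function, the ZNSSD criterion, bilinear interpolation, and a synthetic Gaussian speckle pattern; the displacement (1.3, -0.7) px, subset size, and initial guess are hypothetical. Because the Hessian is built from reference-subset gradients, it is computed once outside the loop, which is where the inverse compositional method gains its efficiency; for a pure translation the inverse compositional update W(p) ∘ W(Δp)⁻¹ reduces to p ← p - Δp.

```python
import numpy as np


def speckle(shape, centers, sigma, shift=(0.0, 0.0)):
    """Synthetic speckle image: Gaussian spots, all translated by shift = (u, v) pixels."""
    yy, xx = np.mgrid[0:shape[0], 0:shape[1]].astype(float)
    img = np.zeros(shape)
    for cx, cy in centers:
        img += np.exp(-((xx - cx - shift[0]) ** 2 + (yy - cy - shift[1]) ** 2) / (2 * sigma**2))
    return img


def bilinear(img, x, y):
    """Bilinear interpolation of img at sub-pixel coordinates (x, y); no bounds checking."""
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    dx, dy = x - x0, y - y0
    return ((1 - dx) * (1 - dy) * img[y0, x0] + dx * (1 - dy) * img[y0, x0 + 1]
            + (1 - dx) * dy * img[y0 + 1, x0] + dx * dy * img[y0 + 1, x0 + 1])


def ic_gn_translation(ref, cur, x0, y0, half, p0, tol=1e-4, max_iter=50):
    """IC-GN with a translation-only warp and the ZNSSD criterion; returns (p, iterations)."""
    ys, xs = np.mgrid[y0 - half:y0 + half + 1, x0 - half:x0 + half + 1]
    grad_y, grad_x = np.gradient(ref)
    f = ref[ys, xs]
    # Steepest-descent images: for a translation, dW/dp is the identity.
    J = np.stack([grad_x[ys, xs].ravel(), grad_y[ys, xs].ravel()], axis=1)
    H = J.T @ J  # depends only on the reference subset, so it is computed once
    f_mean = f.mean()
    f_norm = np.sqrt(((f - f_mean) ** 2).sum())
    p = np.asarray(p0, dtype=float)
    for iteration in range(1, max_iter + 1):
        g = bilinear(cur, xs + p[0], ys + p[1])
        g_mean = g.mean()
        g_norm = np.sqrt(((g - g_mean) ** 2).sum())
        residual = (f - f_mean) - (f_norm / g_norm) * (g - g_mean)
        dp = -np.linalg.solve(H, J.T @ residual.ravel())
        p = p - dp  # inverse compositional update for a pure translation
        if np.linalg.norm(dp) < tol:
            break
    return p, iteration


rng = np.random.default_rng(0)
centers = rng.uniform(0, 64, size=(200, 2))
ref = speckle((64, 64), centers, sigma=2.5)
cur = speckle((64, 64), centers, sigma=2.5, shift=(1.3, -0.7))
p, n_iter = ic_gn_translation(ref, cur, x0=32, y0=32, half=12, p0=(1.0, -1.0))
print(p, n_iter)  # p should be close to (1.3, -0.7)
```

The LM, Dog-Leg, and diagonal-approximation variants keep this structure and change only the line that computes `dp`; a first- or second-order shape function would enlarge `J` to 6 or 12 columns.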
Among the first-order algorithms, IC-DogLeg and IC-LM have a slightly higher convergence frequency than IC-GN and IC-Diag. Computation speed decreases in the order IC-GN, IC-LM, IC-DogLeg, IC-Diag, and all the algorithms slow down as the displacement increases. Computation also slows as the subset window grows, because more pixels in the subset take part in the calculation. In the rubber-block deformation experiment, both the proposed algorithms and DICe successfully calculate the displacement and strain fields. In the large-deformation experiment, where the maximum deformation exceeds 100 pixels, DICe has difficulty accurately calculating the displacement and strain fields in some regions, whereas the three algorithms still succeed, making them more suitable for large-deformation measurement.
Conclusions
Different sub-pixel iterative algorithms perform differently in image displacement measurement. We apply the inverse compositional diagonal approximation algorithm and the inverse compositional Dog-Leg algorithm to displacement measurement with digital image correlation, simplify the parameter update strategy of the inverse compositional Levenberg-Marquardt algorithm, and compare the three algorithms through compression deformation experiments on simulated and real speckle images. In the simulated speckle experiments, the algorithms differ in convergence speed, convergence frequency, and computation speed.
In the real experiments, their accuracy for small deformations is similar to that of the inverse compositional G-N method, and their convergence radius for large deformations is larger.
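Every sub-pixel iteration starts from an initial guess that must fall inside the algorithm's convergence radius, and in DIC that guess usually comes from the integer-pixel search mentioned in the Objective. A brute-force ZNCC search is sketched below under hypothetical settings (random test image, integer shift applied with `np.roll`, subset half-width 10, search range ±10 px). Its cost grows with (2·search + 1)² times the number of subset pixels, so large deformations such as the case above 100 pixels need either a wide search or a better initial-guess strategy.

```python
import numpy as np


def zncc(a, b):
    """Zero-normalized cross-correlation of two equal-size subsets (1.0 means a perfect match)."""
    a = a - a.mean()
    b = b - b.mean()
    return float((a * b).sum() / np.sqrt((a * a).sum() * (b * b).sum()))


def integer_search(ref, cur, x0, y0, half, search):
    """Brute-force integer-pixel search; returns (u, v, best ZNCC) for the subset at (x0, y0)."""
    f = ref[y0 - half:y0 + half + 1, x0 - half:x0 + half + 1]
    best = (-np.inf, 0, 0)
    for v in range(-search, search + 1):
        for u in range(-search, search + 1):
            g = cur[y0 + v - half:y0 + v + half + 1, x0 + u - half:x0 + u + half + 1]
            best = max(best, (zncc(f, g), u, v))
    return best[1], best[2], best[0]


rng = np.random.default_rng(1)
ref = rng.random((96, 96))
cur = np.roll(ref, shift=(-4, 7), axis=(0, 1))  # cur[y, x] = ref[y + 4, x - 7]: u = 7, v = -4
u, v, score = integer_search(ref, cur, x0=48, y0=48, half=10, search=10)
print(u, v, round(score, 6))  # expected: 7 -4 1.0
```

ZNCC is insensitive to linear changes in brightness and contrast between the two images, which is why it is a common choice for this coarse stage before a ZNSSD-based sub-pixel refinement.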