Ptychography is a recently developed phase-retrieval technique in which an illumination probe is scanned across a specimen with a scanning step smaller than the probe diameter, so that adjacent illuminated areas overlap. Through iterative calculation, the complex-amplitude distributions of the probe and the specimen can be reconstructed simultaneously. Ptychography is a lensless phase imaging technique with theoretically diffraction-limited resolution. Initially, the performance of ptychography was limited by its basic assumptions. With the progress of related research in recent years, its characteristics have gradually been understood and the technique has matured. Ptychography is now applied to phase imaging, wavefront diagnostics, and optical metrology with visible light, X-rays, and electrons. This paper discusses key factors that affect the reconstruction process and accuracy, including multiple modes, scanning errors, light-spot errors, distance errors, and non-negligible specimen thickness, and summarizes the development of key techniques that help overcome these factors.

High-performance imaging of large-scale dynamic scenes is essential to vision intelligence. The light field is described by the plenoptic function, which gives the amount of light flowing in every direction through every point in 3D space. By recording this high-dimensional light signal, light field imaging can accurately perceive complex dynamic environments and support the understanding and decision-making of intelligent systems. Computational light field imaging, built on the light field and its plenoptic representation, combines computation, digital sensors, optical systems, and intelligent lighting, thereby uniting hardware design with software computing power. This technique breaks through the limits of the classical imaging model and the digital camera, establishes relationships among the spatial, angular, spectral, and temporal dimensions of light, realizes coupled perception, decoupled reconstruction, and intelligent processing, and enables multi-dimensional, multi-scale imaging of large-scale dynamic scenes. Light field imaging plays a vital role in fields such as life science, industrial inspection, national security, unmanned systems, and VR/AR, attracting broad interest from both academia and industry. Because the high-dimensional data are sampled discretely, light field imaging faces a trade-off between spatial resolution and angular resolution. How to reconstruct the light field from sparsely sampled data has therefore become a fundamental problem in computational light field imaging and its applications. Meanwhile, because perceiving light field signals involves high-dimensional data, light field processing faces a conflict between effective data perception and computational efficiency. How to replace traditional two-dimensional visual perception with the light field as a high-dimensional means of information acquisition, and how to combine it with intelligent information processing to achieve efficient intelligent perception, remain major challenges for industrial applications of light field imaging. In this paper, we conduct a thorough literature review of the devices and algorithms of computational light field imaging, including the representation and theory of the light field, light field signal sampling, and light field reconstruction with super-resolution in the spatial and angular domains.

In recent years, deep learning (DL) has been widely used in computational imaging (CI) and has achieved remarkable results, making it a research hotspot in this field. To give an in-depth understanding of how DL-based CI works, this paper introduces the basic theory and implementation steps of DL as well as its applications in scattering imaging, digital holography, and computational ghost imaging, demonstrating its effectiveness and advantages. Typical applications of DL in CI are summarized and compared, and the prospects of DL-based CI methods are discussed.

In recent years, optical imaging has moved from traditional intensity and color imaging into the era of computational optical imaging. Computational optical imaging, founded on geometric optics, wave optics, and other theory, establishes an accurate forward mathematical model of the whole image formation process, in which the scene is imaged through the optical system and then sampled by a digital detector. Computational reconstruction then yields a high-quality image together with other high-dimensional information that cannot be accessed directly with traditional methods, such as phase, spectrum, polarization, light field, coherence, refractive index, and three-dimensional profile. However, the actual imaging performance of a computational imaging system is limited by the “accuracy of the forward mathematical model” and the “reliability of the inverse reconstruction algorithm”. In addition, the unpredictability of the real physical imaging process and the complexity of solving high-dimensional ill-posed inverse problems have become bottlenecks to further development of the field. The rapid development of artificial intelligence and deep learning in recent years has opened a new door for computational optical imaging. Unlike the “physics-driven” models on which traditional computational imaging methods are based, deep-learning-based computational imaging is a “data-driven” approach. It not only solves many problems once considered very challenging in this field, but also achieves remarkable improvements in information acquisition ability, imaging functions, and key performance indexes of imaging systems, such as spatial resolution, temporal resolution, and detection sensitivity. This review first briefly introduces the current status and latest progress of deep learning in computational optical imaging. Then, the main problems and challenges currently faced by deep learning methods in this field are discussed. Finally, future developments and possible research directions are outlined.

Restoration of scattered optical imaging is among the most important research topics in optical imaging. Various techniques have been proposed for the restoration of imaging in different scattering environments. The deconvolution-based method using speckle correlations is a promising technique achieving high image quality, fast recovery speed, and ease of integration. Herein, we briefly review the current progress of speckle correlation imaging. After discussing the principle of optical memory effect and deconvolution, we introduce new physical characteristics of the point spread functions (PSF) and their applications to image recovery processes, concluding with indirect methods for obtaining the PSF. Finally, a new concept called plenoptics is introduced. Studies on the plenoptics of light fields are expected to provide more information in more complex scattering environments and realize specific applications of scattering optical imaging technique in biology, medicine, ocean, military, and daily life.

In practical optical imaging, light scattering cannot be avoided. Conventional optical imaging technologies, however, are not very effective at overcoming the wavefront distortion and image degradation caused by scattering. Recent research has provided considerable evidence that imaging technologies that fully exploit scattering can image through scattering media or other complex media and can exhibit super-resolution characteristics. This paper clarifies the basic principle of scattering imaging, focuses on the research progress of existing methods for imaging through scattering media and related technologies, and discusses current issues in scattering imaging. Finally, potential research directions of scattering imaging are outlined.

Early applications of compressive sensing in optical imaging focused on spatial compressive imaging. In recent years, an increasing number of compressive imaging systems have employed a detector array instead of a single detector to collect the measurements. Moreover, the scope of compressive imaging has expanded from two-dimensional spatial imaging to three-dimensional ranging, high-speed imaging, multispectral imaging, ghost imaging, and holographic imaging. Herein, we analyze in detail recent work on high-resolution compressive imaging, compressive sensing ranging, and temporal high-speed compressive imaging, summarize research progress on measurement (sensing) matrix design in spatial compressive imaging, discuss the challenges and future development opportunities, and review the applications of compressive sensing in multispectral imaging, ghost imaging, and holographic imaging. Furthermore, we summarize the improvements in reconstruction performance obtained by applying deep learning to compressive imaging.
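
As a minimal illustration of the sparse-recovery step that underlies compressive imaging, the sketch below recovers a sparse signal from fewer random measurements than unknowns using orthogonal matching pursuit (OMP). OMP is chosen here only for brevity; the works reviewed above use a variety of sensing matrices and reconstruction algorithms. The function names, the Gaussian sensing matrix, and the problem sizes are illustrative assumptions and are not taken from any reviewed system.

```python
import numpy as np


def omp(A, y, k):
    """Greedy recovery of a k-sparse x from y = A @ x (orthogonal matching pursuit)."""
    n = A.shape[1]
    support = []
    coef = np.zeros(0)
    residual = y.copy()
    for _ in range(k):
        # Pick the column most correlated with the current residual.
        correlations = np.abs(A.T @ residual)
        if support:
            correlations[support] = 0.0  # never select the same column twice
        support.append(int(np.argmax(correlations)))
        # Least-squares fit on the selected columns, then update the residual.
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
    x_hat = np.zeros(n)
    x_hat[support] = coef
    return x_hat


def demo(n=128, m=80, seed=0):
    """Hypothetical test case: 3 nonzeros, m < n Gaussian measurements, no noise."""
    rng = np.random.default_rng(seed)
    x = np.zeros(n)
    idx = rng.choice(n, size=3, replace=False)
    x[idx] = [2.0, -1.5, 1.0]
    A = rng.standard_normal((m, n)) / np.sqrt(m)  # assumed sensing matrix
    return x, omp(A, A @ x, k=3)
```

Calling `demo()` returns the true signal and its estimate from 80 measurements of a 128-element vector. In a real system the sensing matrix is constrained by the modulator hardware, which is why its design is treated as a separate research topic.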

Ghost imaging (GI) is a novel imaging technique that, unlike conventional imaging techniques, extracts image information via high-order correlation of light-field fluctuations. Compared with conventional imaging techniques, GI offers advantages such as high sensitivity, super-resolution ability, and resistance to scattering, which have led to its wide study in recent years in remote sensing, multi-spectral imaging, thermal X-ray diffraction imaging, and other fields. With these developments, mathematical theory and methods play an increasingly prominent role in GI. For example, based on compressed sensing (CS) theory, we can optimize the sampling mode of a GI system, design the image reconstruction algorithm, and analyze the quality of the reconstructed image. In this paper, we discuss a few interesting mathematical problems in GI, including preconditioning, optimization of light fields, and phase retrieval. Studying these problems can help enrich the theory of GI and promote its practical applications.
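
The correlation principle can be sketched numerically. The example below simulates computational ghost imaging with known random illumination patterns and a single bucket signal, and reconstructs the object from the second-order correlation between the two. The object, the uniform random patterns, and all function names are assumptions made for illustration; no noise model or CS-based optimization is included.

```python
import numpy as np


def ghost_image(patterns, bucket):
    """Per-pixel second-order correlation <I * B> - <I> <B>."""
    patterns = np.asarray(patterns, dtype=float)
    bucket = np.asarray(bucket, dtype=float)
    # Average of bucket-weighted patterns over all shots.
    mean_ib = np.tensordot(bucket, patterns, axes=(0, 0)) / bucket.size
    return mean_ib - bucket.mean() * patterns.mean(axis=0)


def simulate(size=16, n_patterns=6400, seed=0):
    """Hypothetical experiment: a bright square seen through random patterns."""
    rng = np.random.default_rng(seed)
    obj = np.zeros((size, size))
    obj[4:12, 4:12] = 1.0
    patterns = rng.random((n_patterns, size, size))
    # The bucket detector records only the total transmitted intensity per shot.
    bucket = (patterns * obj).sum(axis=(1, 2))
    return obj, ghost_image(patterns, bucket)
```

The reconstruction quality of this plain correlation estimate improves only slowly with the number of patterns, which is one motivation for the preconditioning and sampling-optimization problems discussed in the abstract.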

The near-field region is confined within a subwavelength range from the surface of an object. Evanescent waves exist in the near field, and by utilizing their interaction with matter, high-resolution near-field imaging as well as highly sensitive detection of physical changes of a specimen within the near-field region can be realized. Near-field imaging and measurement methods based on total internal reflection (TIR) and surface plasmon resonance (SPR) have a wide range of applications in many fields. By combining digital holography with these near-field measurement methods, accurate, dynamic, and full-field measurements of the phase distribution of a light wave reflected from the near-field region can be achieved. This article mainly reviews near-field imaging approaches and measurement applications based on TIR digital holography and SPR holographic microscopy.
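
To make the "subwavelength range" concrete, the following sketch evaluates the standard textbook expression for the 1/e intensity penetration depth of an evanescent wave under TIR, d = λ / (4π √(n₁² sin²θ − n₂²)). The function name and the example values (a glass-water interface at 532 nm) are assumptions for illustration and do not come from the reviewed work.

```python
import math


def penetration_depth(wavelength, n1, n2, theta_deg):
    """1/e intensity decay depth of the evanescent wave, in the units of wavelength.

    n1: refractive index of the dense (incident) medium
    n2: refractive index of the rarer medium
    theta_deg: incidence angle in degrees, must exceed the critical angle
    """
    s = n1 * math.sin(math.radians(theta_deg))
    if s <= n2:
        raise ValueError("incidence angle is not beyond the critical angle")
    return wavelength / (4.0 * math.pi * math.sqrt(s * s - n2 * n2))


if __name__ == "__main__":
    # Assumed example: glass (1.515) to water (1.33), 532 nm, 70 degrees.
    print(penetration_depth(532.0, 1.515, 1.33, 70.0))
```

For these assumed values the depth is well below the wavelength, which is why only changes very close to the interface affect the reflected phase.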

Optical imaging technology has greatly expanded the limits of human vision and improved people's ability to observe and understand the real world. The more optical information we can acquire about an object, the better we can understand it. Digital holography is an imaging technique that allows the three-dimensional information of a sample to be encoded and recorded in the form of a two-dimensional hologram. By recording the interference pattern generated by the superposition of the object wave, which carries the object information, and the reference wave, multiple reconstruction modalities such as image recovery, phase imaging, and optical sectioning can be realized digitally. Optical scanning holography (OSH) is a unique digital holography technique that uses active two-dimensional (2D) scanning to image a three-dimensional (3D) object and a single-pixel detector to capture the complete wavefront information of the 3D object. By using optical heterodyne detection to demodulate the signal, a complex hologram is recovered. This review surveys recent progress in the OSH technique, covering three types of advancement. The first concerns the performance of the optical system, such as spatial resolution improvement and scanning-time reduction, achieved with specially designed hardware and algorithms based on the characteristics of a two-pupil imaging system. The second relates to reconstruction modalities, such as sectioning and 3D imaging, where high-quality images are reconstructed by improving and optimizing the holographic reconstruction algorithms based on the principles of computational imaging. The third is the development of various research topics related to the OSH technique. This paper also discusses the potential of OSH and presents some topics for future investigation.

Calibration of the intrinsic and extrinsic parameters of a camera as well as the lens distortion parameters is a crucial step in many camera-based vision applications. Further, ensuring the simple operation of the calibration process and the accuracy of the calibration results is critical. The calibration process of the line-scan cameras is more complicated when compared with that of the area-scan cameras. Herein, the imaging geometric model and the lens distortion model, which are suitable in case of line-scan cameras, are described; subsequently, the general calibration procedure of the line-scan camera is summarized. Furthermore, the calibration methods that have been previously proposed in the literature based on static imaging and dynamic scanning imaging are classified and analyzed, and their characteristics are briefly reviewed.

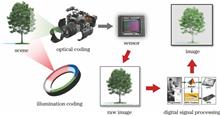

Coded photography acquires coded, coupled scene information by modulating the illumination, optical path, and sensor, and then applies decoding computation and image signal processing to achieve high-precision scene reconstruction. It thereby breaks the limitations of traditional optical imaging models and physical devices and effectively improves the information transmission efficiency of the imaging system. With continuous innovation in imaging components such as light sources, optical elements, and sensors, and with the emergence of new imaging methods, coded photography has developed rapidly and has broad application prospects. This paper reviews the progress of coded photography, with emphasis on three aspects: illumination coding, optical path coding, and sensor coding.

Pulsar navigation requires the precise measurement of pulsars. Pulsar X-ray radiation has an extremely short coherence time, and satellite detectors receive only an ultralow photon flux. Therefore, intensity-correlated detection should be used to obtain pulsar information under ultralow photon flux. To this end, we conducted an experimental study of intensity-correlated interferometry using a simulated visible-light source. We obtained second-order interference fringes and the corresponding angular diameter. We analyzed how coincidence counting affects the measurement errors and how the spatial and temporal resolutions of the detection system affect intensity-correlated interferometry. The results provide a basis for determining the hardware parameters for X-ray intensity-correlated detection of pulsars.

In this study, light field imaging theory and three-dimensional (3D) particle tracking velocimetry (PTV) are combined to evaluate a 3D flow field using a single camera. A relation between the depth and the optimal refocusing coefficient is derived based on Gaussian optics and the similarity principle. Subsequently, the light field calibration and flow field measurement systems are established. A depth calibration method is proposed based on the theoretical model of light field imaging. Compared with the Taylor polynomial fitting method, the proposed method is shown to be more robust. An all-in-focus image is obtained based on the principle of maximum sharpness. The particles in the all-in-focus image are located using a corner detection algorithm based on the minimum eigenvalue, and the 3D velocities of the particles are then obtained using 3D PTV. A processing flow is established for the light field images and applied to flow field measurement over a backward-facing step. The results show that light-field-imaging-based PTV can reconstruct the volumetric flow field.
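
The particle-positioning step can be illustrated with a small sketch of minimum-eigenvalue corner detection (commonly known as the Shi-Tomasi criterion): the smaller eigenvalue of the local gradient structure tensor is large only where the intensity varies in all directions, as it does around a particle image. The window radius, the synthetic Gaussian particle, and the function names are assumptions for illustration and are not the authors' implementation.

```python
import numpy as np


def box_sum(img, radius):
    """Sum over a (2r+1) x (2r+1) window, using wrap-around shifts for brevity."""
    out = np.zeros_like(img)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            out += np.roll(img, (dy, dx), axis=(0, 1))
    return out


def min_eigenvalue_map(img, radius=3):
    """Smaller eigenvalue of the 2x2 structure tensor at every pixel."""
    gy, gx = np.gradient(np.asarray(img, dtype=float))
    a = box_sum(gx * gx, radius)
    b = box_sum(gx * gy, radius)
    c = box_sum(gy * gy, radius)
    # Closed-form smaller eigenvalue of [[a, b], [b, c]].
    return 0.5 * (a + c) - np.sqrt(0.25 * (a - c) ** 2 + b ** 2)


def locate_particle(img, radius=3):
    """Return (row, col) of the strongest minimum-eigenvalue response."""
    score = min_eigenvalue_map(img, radius)
    return np.unravel_index(np.argmax(score), score.shape)


def synthetic_particle(shape=(32, 32), center=(12, 20), sigma=1.5):
    """Hypothetical particle image: one Gaussian spot on a dark background."""
    yy, xx = np.mgrid[0:shape[0], 0:shape[1]]
    r2 = (yy - center[0]) ** 2 + (xx - center[1]) ** 2
    return np.exp(-r2 / (2.0 * sigma ** 2))
```

For example, `locate_particle(synthetic_particle())` should land on or next to the spot center. A practical pipeline would additionally threshold the response and apply non-maximum suppression to detect many particles at once.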

In light field imaging, the resolution of the extracted views and the accuracy of the data are difficult to achieve simultaneously. To solve this problem, a nonperiodic view extraction algorithm is proposed herein. First, the microlens array sampling principle is analyzed, and the center offset of each microlens is calculated. To analyze the range of the view angle, the projection area of each microlens is calculated by synthesizing multiple viewpoints. Finally, nonperiodic extraction of pixel blocks is used to form a view. A Lytro camera is used to obtain light field data, and the nonperiodic algorithm is used to extract 2×2 and 3×3 pixel blocks to form views. Compared with the traditional method, the proposed method improves the resolution by factors of 2×2 and 3×3 while also improving the image quality.

Disordered speckles generally occur when a laser beam passes through a scattering medium. Based on wavefront phase modulation, we apply a continuous sequential algorithm combined with four-step phase shifting to modulate the wavefront phase of the incident laser so that the light focuses at the target position after passing through the scattering medium. The experiments primarily investigate the focusing of the laser through strongly scattering samples of different thicknesses and examine the influence of the scattering-medium thickness on the intensity enhancement factor at the focal point. The experimental results show that, as the thickness of the scattering medium increases, both the size of the focal spot and the intensity enhancement factor at the target position decrease.
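
The optimization loop described above can be sketched in simulation. For each modulator segment, the target intensity is recorded at four phase steps (0, π/2, π, 3π/2); because the intensity varies as A + B cos(δ − φ), the optimal phase follows from two intensity differences. In the continuous sequential variant the optimal phase is applied immediately before moving to the next segment. The random transmission coefficients, segment count, and function names below are assumptions for illustration, and measurement noise is ignored.

```python
import numpy as np

PHASE_STEPS = np.array([0.0, 0.5 * np.pi, np.pi, 1.5 * np.pi])


def focus_intensity(t, phases):
    """Intensity at the target for transmission coefficients t and segment phases."""
    return np.abs(np.sum(t * np.exp(1j * phases))) ** 2


def optimize(t, n_passes=3):
    """Continuous sequential optimization with four-step phase shifting."""
    phases = np.zeros(t.size)
    for _ in range(n_passes):
        for n in range(t.size):
            # Measure the target intensity at four phase steps of segment n.
            i = []
            for step in PHASE_STEPS:
                trial = phases.copy()
                trial[n] = step
                i.append(focus_intensity(t, trial))
            # I(d) = A + B cos(d - phi): recover phi and apply it immediately.
            phases[n] = np.arctan2(i[1] - i[3], i[0] - i[2])
    return phases


def random_medium(n_segments=64, seed=1):
    """Hypothetical scattering medium: complex Gaussian transmission coefficients."""
    rng = np.random.default_rng(seed)
    re = rng.standard_normal(n_segments)
    im = rng.standard_normal(n_segments)
    return (re + 1j * im) / np.sqrt(2.0)
```

Comparing `focus_intensity(t, optimize(t))` with `focus_intensity(t, np.zeros(t.size))` for `t = random_medium()` shows the gain at the target. In this idealized model the optimized intensity approaches the upper bound (Σ|tₙ|)²; in an experiment, noise and the finite persistence of the medium reduce the achievable enhancement.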

In tube-excited X-ray fluorescence computed tomography (XFCT) imaging, the detection angle is closely related to the background noise, detection sensitivity, signal-to-noise ratio, and other parameters. Accordingly, we use Geant4 to simulate a polychromatic tube-excited XFCT imaging system with a multi-pinhole collimator and investigate the effect of the detection angle on the signal-to-noise ratio and the reconstructed image quality. For different tube voltages, mass fractions of the gold nanoparticle solution, and diameters of the region of interest, the signal-to-noise ratio is calculated by simulating the detection of the X-ray fluorescence projection data and the scattering noise at four angles: 60°, 90°, 120°, and 150°. The simulation results reveal that the signal-to-noise ratio and the reconstructed image quality can be effectively improved by increasing the detection angle. In most circumstances, the highest signal-to-noise ratio is obtained when the detection angle is set to 120°.

To address the limited hardware storage and power budget in infrared remote-sensing ship detection and the inadequate precision of bounding-box outputs in target detection, a lightweight segmentation network with pixel-level output, TRS-Net (ternary residual segmentation network), is proposed. We apply the encoder-decoder structure of image segmentation to ship detection to obtain pixel-level output. We then binarize the 32-bit floating-point parameters to compress the network model and propose a binary segmentation network (BS-Net). To counter the poor detection accuracy of BS-Net, we introduce residual connections and propose a binary residual segmentation network (BRS-Net). Furthermore, exploiting the sparsity of the neural network, we introduce ternary parameters and propose a ternary segmentation network (TS-Net); combining ternary parameters with residual connections, we then propose the ternary residual segmentation network (TRS-Net) to further improve the detection performance. Using a long-wave infrared camera developed in-house, we acquire infrared images of ships, build the datasets, and compare and analyze the results of the four networks. The results demonstrate that the detection precision, recall rate, F1-score, and intersection-over-union of TRS-Net are 88.73%, 83.34%, 85.95%, and 75.36%, respectively. Furthermore, the model size is reduced to one-sixteenth of its original size. Therefore, the proposed TRS-Net has practical engineering value for real-time infrared ship detection.
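
The reported figures can be cross-checked with a short calculation. The sketch below computes the F1-score from precision and recall, derives the intersection-over-union under the assumption that all three metrics come from the same pooled pixel counts (so that 1/IoU = 1/P + 1/R − 1), and evaluates the storage ratio under the assumption that each ternary parameter occupies 2 bits instead of 32. These assumptions and the function names are ours; the abstract does not state how the metrics or the model size were computed.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2.0 * precision * recall / (precision + recall)


def iou_from_precision_recall(precision, recall):
    """IoU = TP / (TP + FP + FN), valid when P and R share the same pixel counts.

    With P = TP / (TP + FP) and R = TP / (TP + FN):
    1 / IoU = 1 / P + 1 / R - 1.
    """
    return 1.0 / (1.0 / precision + 1.0 / recall - 1.0)


def compression_ratio(bits_full=32, bits_quantized=2):
    """Storage ratio when each parameter shrinks from bits_full to bits_quantized."""
    return bits_full / bits_quantized


if __name__ == "__main__":
    p, r = 0.8873, 0.8334  # precision and recall reported in the abstract
    print(f"F1  = {100 * f1_score(p, r):.2f}%")
    print(f"IoU = {100 * iou_from_precision_recall(p, r):.2f}%")
    print(f"size ratio = 1/{compression_ratio():.0f}")
```

Under these assumptions the computed values agree with the reported F1-score, intersection-over-union, and one-sixteenth model size, which suggests the four metrics are mutually consistent.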

In this study, we propose a method to interpolate the data missing from disparity holes in binocular-vision phase matching by using the reliable phase data obtained with a single camera. In the calibration stage, the measurement system only needs the phase and height data of a plane as a reference. An implicit phase-height mapping is then established from the phase and height differences between the valid three-dimensional point cloud around the hole and the reference plane, and the missing point cloud is reconstructed and interpolated using the reliable single-camera phase data. Interpolating the missing point cloud data of a standard sample yields a reconstruction accuracy of 0.07 mm. Furthermore, binocular measurement and hole interpolation are performed on a facial mask and a gourd model, and the results show that the proposed method can appropriately interpolate the missing point cloud data in occluded areas.

In this study, an improved convolutional-neural-network-based object detection method for remote sensing images is proposed that integrates a rotation region proposal network with the Faster R-CNN network. The aim is two-fold: 1) to realize rapid and precise detection of objects in remote sensing images; 2) to address the problems caused by rotated objects. Unlike mainstream target detection methods, the proposed method introduces a rotation factor into the region proposal network and generates proposal regions with different orientations, targeting the variable orientation and relative aggregation of most targets in remote sensing images. Adding a convolution layer before the fully connected layer of the Faster R-CNN network reduces the feature parameters, enhances classifier performance, and helps avoid over-fitting. Compared with state-of-the-art object detection methods, the proposed algorithm can combine the features extracted by the convolutional neural network in the rotation region proposal network with multi-scale features. As a result, a significant improvement in remote sensing image object detection is achieved.

A method to dynamically refocus a single image is presented. By combining deep-learning-based light field synthesis with geometry-based circle-of-confusion rendering, it simulates the light field refocusing effect. In the proposed method, the depth map is estimated and converted into disparity, and the circle-of-confusion diameter is then calculated at different depths to resample the pixels. Two neural network structures are designed, supervised by the multi-view and refocused images of a light field camera. Experiments are conducted on multiple datasets and real scenes. Compared with other techniques, the proposed method shows superior visual performance and evaluation indicators at an acceptable computational cost, with the peak signal-to-noise ratio and structural similarity index reaching 34.55 and 0.937, respectively.
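
The geometric part of such rendering can be illustrated with the standard thin-lens circle-of-confusion formula, c = A · |d − d_f| / d · f / (d_f − f), where A = f/N is the aperture diameter, d the object depth, and d_f the focus distance. The sketch below evaluates it over a depth map; the lens parameters and function name are assumptions for illustration, and the paper's learned components are not reproduced.

```python
import numpy as np


def coc_diameter(depth, focus_depth, focal_length, f_number):
    """Thin-lens circle-of-confusion diameter on the sensor.

    All lengths share one unit (e.g. millimeters). depth may be a scalar
    or a NumPy array such as a per-pixel depth map.
    """
    depth = np.asarray(depth, dtype=float)
    aperture = focal_length / f_number  # aperture diameter A = f / N
    magnification = focal_length / (focus_depth - focal_length)
    return aperture * np.abs(depth - focus_depth) / depth * magnification


if __name__ == "__main__":
    # Assumed example: 50 mm lens at f/2 focused at 2 m, depths from 1 m to 4 m.
    depth_map = np.array([[1000.0, 2000.0], [3000.0, 4000.0]])
    print(coc_diameter(depth_map, focus_depth=2000.0, focal_length=50.0, f_number=2.0))
```

Pixels at the focus distance get a zero-diameter blur, and the blur grows with distance from the focal plane. Changing `focus_depth` and re-rendering is what produces the refocusing effect.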

When performing high-resolution imaging using a single-photon compressive technique, a long imaging time is required owing to numerous measurements and a large number of image-reconstruction calculations. We demonstrate a sampling-and-reconstruction-integrated residual codec network, namely SRIED-Net, for single-photon compressive imaging. We use the binarized fully connected layer as the first layer of the network and train it into a binary-measurement matrix to directly load onto the digital micromirror device for efficient compressive sampling. The remaining layers of the network are used to quickly reconstruct the compressed sensing image. We compare the effects of the compressive sampling rate, measurement matrix, and reconstruction algorithm on imaging performance through a series of simulations and system experiments. The experimental results show that SRIED-Net is superior to the current advanced iterative algorithm TVAL3 at a low measurement rate and that its imaging quality is similar to that of TVAL3 at a high measurement rate. It is superior to current deep-learning-based methods at all measurement rates.

Inspecting the coplanarity of the pins of an integrated circuit (IC) is an important step in ensuring IC mounting quality. In this paper, a coplanarity inspection method for IC pins based on a single image is proposed. First, a relationship model involving the camera, light source, and measured surface is constructed on the basis of a monocular vision system. Then, the light intensity calibration method for a light-emitting diode (LED) ring-structured light source is described, and the relevant parameter of the measured IC pin material is obtained experimentally. Further, a method for estimating the height information based on the constructed relationship model between light intensity and image grayscale is presented. Finally, the three-dimensional surface morphology of the IC pins and their solder joints is recovered using the height information. In comparison with the actual measurement results for IC pins and their solder joints, the experimental results obtained in this work show that the height measurement error is within ±0.08 mm and the relative error is within -2.6%, which verifies the effectiveness of the proposed method.

This study uses a B-spline surface error-fitting function of two variables, reflected light intensity and depth, with additive weights to correct the depth error related to weak light intensity, which arises from low reflectivity or long distance of the target scene. In contrast to traditional B-spline surface error fitting, the improved model uses weight parameters to optimize some of the control points of each surface to obtain a more accurate error-fitting surface. The average correction error is less than 2 mm, which is nearly three times the accuracy of the traditional model. Furthermore, an additive weight parameter is added to each control point, which ensures that the parameter matrices of the control points and the additive weights can be obtained in a single step during camera parameter calibration, overcoming the issue that a multiplicative weight matrix cannot be obtained by the least squares method. Moreover, considering that the harmonic-related error also depends on depth, its correction is unified into the proposed correction method.

In this study, a regularization method for point spread function (PSF) estimation and image reconstruction that accounts for multiple blur factors in image degradation is proposed for wide-field polarization-modulated microscopic imaging. An adaptive regularization model based on a variable exponential function is used to estimate the PSF, which is degraded during the blurring process by the fitting deviation of the polarization-angle modulation curve, the degradation of the optical system, and the discrete under-sampling of the charge-coupled device (CCD). The aim is to fully utilize the content features of the acquired image, adaptively select a variable regularization norm, and effectively suppress both the staircase effect of total variation regularization and the poor edge preservation of Tikhonov regularization. An optimized Split-Bregman iterative algorithm is adopted in the solution process, which ensures the estimation accuracy while reducing the computational complexity. Experimental results show that the proposed method can effectively estimate the degraded PSF and improve the noise robustness of image reconstruction.

A coded aperture spectral imaging system uses a spatial light modulator to encode target information. It then maps the signal onto a two-dimensional detector array, forming spatially and spectrally aliased information, from which a spectral data cube can be reconstructed using a suitable reconstruction algorithm. In this study, we propose a multi-slot combination coding method that encodes in only one direction to improve the coding efficiency. This approach is chosen because dispersion occurs in only one direction. Compared with the two-dimensional random coding method currently in use, it simplifies the mathematical model and its analysis and reduces the coding complexity, while yielding identical reconstruction results. Further, the switching characteristics of a liquid crystal light valve are used in the coding. The spectral imaging system is assembled by incorporating a PGP (prism-transmission grating-prism) beam-splitting component. Experiments at different sampling rates are conducted, and highly accurate recovery results are obtained. The demonstrated feasibility of the proposed coding method may inform the design of future coded spectral imaging systems.

To address the overlapping and defocusing of images with blurred diffuse speckles, a synthetic-aperture occlusion removal algorithm using a microlens array (MLA) is proposed. In this study, a single light-field imaging system with an MLA is designed to acquire the original image data. The synthetic aperture method is used to focus digitally and thereby recognize occluding objects in a scene. A threshold on the gray variance value is set to distinguish an occluding object from the target object. Once recognized, the occluding object is removed, and the target object is extracted by refocusing. The experiment shows that the no-reference image quality assessment value is improved by at least 18.83% compared with that of other similar algorithms. The proposed method not only improves the image quality but also provides 3D information about the scene, and it yields a target image with high contrast and a high signal-to-noise ratio.

By using an incoherent scattering density function, defined in the statistical sense, to satisfy the sparsity prior, compressive holography of diffuse objects can achieve tomographic reconstruction of diffuse objects from multiple speckle patterns while avoiding speckle and crosstalk among defocused images in different planes. In this paper, the single-wavelength illumination condition is extended to red, green, and blue wavelengths, and a new compressive holographic tomography method for color diffuse objects is proposed. A tomography model of diffuse objects under multi-wavelength illumination is established, and decompressive inference is used to effectively separate the three-dimensional incoherent density functions of different planes. The numerical simulation results show that the method can achieve compressive tomographic reconstruction of color diffuse objects from multiple two-dimensional color speckle patterns and effectively suppress the speckle effect and crosstalk among defocused images in different planes.