Objective The characteristics and laws of atmospheric turbulence in the atmospheric boundary layer over the ocean region are studied, which can be employed to guide the parameter setting of the adaptive optical system. Therefore, the influence of turbulence is greatly reduced, and the imaging quality and the performance of the photoelectric system are improved to meet the engineering application. In this method, the effects of temperature, humidity, and wind velocity on the calculation results are fully considered, and the physical phenomena that produce optical turbulence effects are fully captured. Additionally, the ultrasonic anemometer array has the advantages of high spatial and temporal resolution and a high degree of automation, which greatly improves data continuity and reliability. Finally, continuous all-weather measurement can be carried out over long periods, which effectively overcomes the limitations of high labor costs and sensitivity to weather conditions. Methods Based on the multi-layer ultrasonic measurement experiment in the tropical South China Sea, the ultrasonic wind velocity in three directions in the air is measured, and the velocity structure constant is obtained. In addition, the refractive index structure constant is calculated by combining the refractive index gradient affected by temperature and humidity. Firstly, according to the fluctuation relations of atmospheric refractive index with temperature, humidity, and pressure, the relationships of refractive index structure constant with potential temperature structure constant, humidity structure constant, and temperature-humidity correlation structure constant are obtained. At the same time, the velocity structure constant obtained from Tatarskii theory and the relationship between the energy dissipation rate and the velocity structure constant are discussed. 
Secondly, based on the relationship between atmospheric refractive index and density, the major large-scale refractive index gradients are removed to ensure consistency and maintain the basic properties of the gradient origin, i.e., turbulent mixing. The expression of the turbulent refractive index gradient is obtained through the high frequency (small scale) fluctuation in the refractive index gradient which determines the refractive index structure constant. Results and Discussions 1) This paper verifies the feasibility and reliability of the method by analyzing and comparing the 144-day raw data (Fig. 3). The correlation analysis between the ultrasonic anemometer array calculation method and the ultrasonic single point virtual temperature estimation method is shown in Fig. 6. The Spearman correlation coefficient (R) reaches 0.96113; a fitting slope of 0.95096 is obtained through the least squares linear fitting, and the intercept is 0.48645. The results of the ultrasonic single point virtual temperature estimation method and the ultrasonic anemometer array calculation method are shown in the horizontal and vertical coordinates respectively. The results indicate that both methods can reflect the daily variation of turbulence in the real atmosphere. The result of the ultrasonic anemometer array estimation method is at times larger than that of the ultrasonic single point virtual temperature estimation method, and the consistency of the two methods also fluctuates slightly. However, the trends are the same and highly correlated, which demonstrates the feasibility of estimating the refractive index structure constant with the ultrasonic anemometer array method. 2) The effects of temperature, humidity, and wind velocity on the calculation results are fully considered, and the physical phenomena that produce the optical turbulence effect are fully captured (Table 3). 
The correlation coefficients between the estimated temperature and the temperature structure constant are 0.98, 0.8, 0.7, and 0.6, respectively. However, the correlation between the estimated results of the ultrasonic anemometer array and the velocity structure is very low, and the correlation coefficient is close to 0. The correlation coefficients with relative humidity, virtual temperature, temperature gradient, and wind shear are 0.8, 0.8, 0.5, and 0.4, respectively. In conclusion, the all-day virtual temperature exerts a major influence on the calculation results, in which the humidity affects the results by affecting the ultrasonic virtual temperature. In addition, the influence of the dynamic factors on the calculation results cannot be ignored, and it further shows the comprehensiveness and superiority of the estimation method of the ultrasonic anemometer. Notably, the dependence of the refractive index structure constant on temperature-related parameters such as ultrasonic virtual temperature, temperature gradient, and relative humidity is lower at night than during the day and is negatively correlated at night. The correlation with the average wind velocity and wind shear of the dynamic factor parameters increases significantly. Conclusions 1) The correlation analysis shows that the average correlation coefficient is 0.85 with the highest value of 0.99 and the lowest value of 0.71, which is compared with the 174-day results of the ultrasonic anemometer array. By error analysis, the average ΔlgCn2 is 0.3. 2) Through the analysis of the influence factors of the two estimation methods at night, the correlation between the refractive index structure constant and the temperature-related parameters decreases by 68% on average, and the correlation between the refractive index structure constant and the wind speed-related parameters increases by 59% on average.
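
The velocity structure constant mentioned in the Methods can be illustrated with a minimal sketch. It assumes the standard two-thirds law for the inertial subrange, D(r) = C² r^(2/3), and two simultaneous series from sensors a distance r apart. The function name and the sample values are hypothetical and are not taken from the experiment.

```python
def structure_constant(series_a, series_b, separation_m):
    """Estimate C^2 = <(a - b)^2> / r^(2/3) from two simultaneous series.

    Assumes the separation r lies inside the inertial subrange, where the
    two-thirds law for the structure function holds.
    """
    if len(series_a) != len(series_b) or not series_a:
        raise ValueError("series must be non-empty and of equal length")
    if separation_m <= 0:
        raise ValueError("separation must be positive")
    # Mean squared difference between the two sensors (the structure function)
    mean_sq_diff = sum((a - b) ** 2 for a, b in zip(series_a, series_b)) / len(series_a)
    # Normalise by r^(2/3) to obtain the structure constant
    return mean_sq_diff / separation_m ** (2.0 / 3.0)


# Hypothetical wind-speed samples (m/s) from two levels of an anemometer array
upper = [3.10, 3.25, 2.95, 3.40]
lower = [3.00, 3.05, 3.05, 3.20]
cv2 = structure_constant(upper, lower, separation_m=1.0)
print(f"C_v^2 = {cv2:.4f} m^(4/3) s^-2")
```

The same function applies to any scalar series (for example temperature), which is how a temperature structure constant could be formed from the same array.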

Objective Cloud base height (CBH) is a crucial cloud parameter affecting the water cycle and radiation budget of the earth-atmosphere system. Additionally, CBH has a great impact on aviation safety. Low CBH often leads to a decrease in visibility, which poses a great threat to flight safety. Therefore, it is meaningful to acquire accurate CBH for related scientific research and meteorological services. It is valuable but challenging to use satellite passive remote sensing data to retrieve CBH. Some cloud products such as cloud top height (CTH) and cloud optical thickness (COT) are often used in previous research on CBH retrieval, from which two ideas to retrieve CBH can be summarized. The first idea employs independent methods to obtain CBH for each type of cloud, and the second one directly retrieves CBH using cloud products of satellites without regarding cloud types. At present, there are no CBH products of FY-4A. Therefore, a CBH retrieval method for FY-4A is introduced in this paper. According to the two ideas mentioned above, two schemes of CBH retrieval are designed, which are compared to find the more suitable idea to retrieve CBH for FY-4A and to provide reference for subsequent development of FY-4A CBH products. Methods A CBH retrieval method based on ensemble learning is proposed in this paper. CTH, COT, and cloud effective radius (CER) from FY-4A are used. Additionally, CBH and cloud types from CloudSat are employed for their widely recognized data quality. First, data of FY-4A and CloudSat are matched spatiotemporally and are divided into training data, validation data, and test data. Second, CBH retrieval models are built based on two ensemble learning algorithms, random forest (RF) and gradient boosting tree (GBT). Two schemes of CBH retrieval are designed in this paper. In the first scheme, matched data are divided into eight types according to the eight cloud types of CloudSat. 
For each type of cloud, two retrieval models are built based on RF and GBT using training data and validation data through ten-fold cross validation. The optimal model is selected according to the models' results on test data. In the second scheme, retrieval models are built without regarding cloud types. Training data of the eight cloud types are combined together. Validation data and test data are processed similarly. The three data sets are used to obtain the RF model and GBT model, and to select the optimal retrieval model. Finally, the optimal scheme and model of CBH retrieval for FY-4A are selected according to the models' performance. Results and Discussions Root mean squared error (RMSE), mean absolute error (MAE), correlation coefficient (R), and mean relative error (MRE) are used to evaluate models' performance. In the first scheme, the GBT model is the optimal retrieval model for Cirrus (Ci), Altostratus (As), and Altocumulus (Ac). The RF model is the optimal retrieval model for Stratus/Stratocumulus (St/Sc), Cumulus (Cu), Nimbostratus (Ns), deep convective cloud (Dc), and multilayer cloud (Multi). In the second scheme, the GBT model is the optimal retrieval model. The models of the two schemes are compared on test data with 129515 samples. Overall, the retrieval model of the first scheme outperforms that of the second scheme. Specifically, RMSE of the model in the first scheme is 1304.7 m, MAE is 898.3 m, R is 0.9214, and MRE is 63.93%. For the eight types of clouds, RMSE, MAE, R, and MRE of the model in the first scheme are also superior to those of the model in the second scheme. Although the first scheme can obtain better results, the retrieval model of the first scheme still needs to be improved in the future. For example, the performance of the retrieval model for Dc is considerably worse than that for other types of clouds. Additionally, the paper discusses how to apply the proposed method to practice. First, level 1 data (i.e. 
reflectance and brightness temperature) and level 2 data (i.e. CTH, COT, and CER) of FY-4A can be used to acquire the eight cloud types according to a cloud type classification model proposed by Yu et al. Second, according to the cloud type classification results, the retrieval models of the first scheme can be adopted to retrieve CBH for the eight types of clouds respectively. Conclusions CBH is a critical cloud parameter, but there are no CBH products of geostationary meteorological satellites currently. Thus, a CBH retrieval method for FY-4A based on ensemble learning is introduced in this paper. Two schemes of CBH retrieval are designed, and corresponding CBH retrieval models are built based on two ensemble learning algorithms, namely, RF and GBT. Data of CTH, COT, and CER from FY-4A are used in this paper. The first scheme employs eight independent models to retrieve CBH for eight types of clouds (i.e. Ci, As, Ac, St/Sc, Cu, Ns, Dc, and Multi) respectively. Specifically, for Ci, As, and Ac, the GBT model is used to retrieve CBH. For the other five types of cloud, the RF model is used to retrieve CBH. The second scheme uses a GBT model to retrieve CBH without regarding cloud types. CBH from CloudSat is used to evaluate the results of the two schemes, and the retrieval model of the first scheme outperforms that of the second scheme. For the eight types of clouds, the retrieval model of the first scheme also obtains better results.
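
The four evaluation metrics named above can be sketched as follows. This is an illustration only: the abstract does not give formulas, so MRE is assumed here to be the mean of |error| / reference and R the Pearson correlation coefficient. The function name and the sample heights are hypothetical.

```python
import math


def evaluate(retrieved, reference):
    """Return RMSE, MAE, Pearson R, and MRE for paired CBH values in metres."""
    n = len(reference)
    if n < 2 or n != len(retrieved):
        raise ValueError("need at least two paired samples")
    errors = [r - t for r, t in zip(retrieved, reference)]
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    mae = sum(abs(e) for e in errors) / n
    # Assumed definition: relative error taken against the reference value
    mre = sum(abs(e) / t for e, t in zip(errors, reference)) / n
    mean_r = sum(retrieved) / n
    mean_t = sum(reference) / n
    cov = sum((r - mean_r) * (t - mean_t) for r, t in zip(retrieved, reference))
    var_r = sum((r - mean_r) ** 2 for r in retrieved)
    var_t = sum((t - mean_t) ** 2 for t in reference)
    corr = cov / math.sqrt(var_r * var_t)
    return {"rmse": rmse, "mae": mae, "r": corr, "mre": mre}


# Hypothetical reference (CloudSat-like) and retrieved CBH values in metres
reference = [1000.0, 2000.0, 4000.0, 8000.0]
retrieved = [1100.0, 1900.0, 4200.0, 7800.0]
metrics = evaluate(retrieved, reference)
print(metrics)
```

Because MRE divides by the reference height, low clouds with small CBH can dominate it, which is one plausible reason a relative error can look large even when RMSE and R are reasonable.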

Objective As one of the spaceborne detection schemes with the strongest comprehensive aerosol capability at this stage, the polarization crossfire (PCF) strategy is developed in China. It is composed of the particulate observing scanning polarimeter (POSP) and the directional polarimetric camera (DPC) and has been carried by China's Gaofen 5-02 and the Chinese Atmospheric Environmental Monitoring Satellite (DQ-1), which were launched in 2021 and 2022, respectively. To explore the detection ability of the POSP based on PCF in the ultraviolet (UV) band for aerosol layer height (ALH), we study the sensitivity of ALH with the synthetic data in the UV and near-UV bands and further assess the impact of different conditions on the information content and posterior error of ALH. It is expected that our findings can be helpful for the retrieval algorithm development of ALH. Methods Optimal estimation (OE) theory and information content analysis are employed in this study. OE provides statistical indicators such as the averaging kernel matrix and the degrees of freedom for signal (DFS), which can represent how much information on the retrieved parameters we can obtain from the satellite measurements. Therefore, combined with the forward modeling of specific satellite sensor observations, information content analysis is used to provide support for satellite sensor design and retrieval algorithm development. The advantage is that the retrieval capability can be quantified without the development of true inversion. Additionally, it provides top-level physics-based guidance on algorithm design. Firstly, the unified linearized vector radiative transfer model (UNL-VRTM) is used as the forward model to calculate the normalized radiance and polarized radiance at the top of the atmosphere (TOA), as well as the Jacobians of TOA results with respect to corresponding parameters. 
Then, the DFS and posterior error are introduced to quantify the information content of ALH from the aspects of the intensity and polarization measurements, respectively. Under the assumption of different surface types, aerosol models, and different typical observation geometry cases, the sensitivity analysis results for different situations can be finally obtained. Results and Discussions We analyze the sensitivity variation of ALH with the scattering angle at the solar zenith angle of 40°. The results show that a smaller scattering angle (within 90° to 140°) of the satellite observation geometry is accompanied by higher sensitivity of Stokes parameter I to scale height H (Fig. 10). After that, we choose a fixed observation geometry to calculate the DFS under different schemes. The research shows that the DFS of the bare soil is lower than that of the vegetation surface in the band of 380 nm (Table 6). Generally, the surface reflectance has more impact on the information content of H in terms of POSP measurements than aerosol optical properties, which leads to the lowest information content over bare soil. Meanwhile, with the addition of multi-band measurements and constraints of polarization information, the DFS of ALH is significantly improved (Fig. 11). Different cases indicate that the addition of intensity and polarization measurements for the retrieval of ALH at 380 nm and 410 nm can improve the H information effectively, and the posterior error of the ALH retrieval is also reduced by 5-30 percentage points (Fig. 13). It is shown that the polarization measurement in the UV band has a good constraining effect on the posterior error of H. In addition, with the addition of intensity and polarization information in the near-UV band of 410 nm, the posterior error is further reduced by 7-10 percentage points, and the measurements particularly improve the retrieval of ALH when the H value is low (Fig. 
14). Conclusions The UV and near-UV bands are important sources of information content for ALH in satellite remote sensing. Compared with the case only using intensity observations at 380 nm, the addition of polarization detection in the same band can provide an extra DFS of 0.06-0.26 for the retrieval of ALH. Meanwhile, the posterior error is reduced by 5-30 percentage points. Combined with near-UV detection information at 410 nm, the posterior error for the retrieval of ALH is further reduced by 7-10 percentage points. In particular, the retrieval of ALH at low scale height is improved. In addition, the sensitivity of observation information to ALH decreases gradually with the increase in the corresponding scattering angle ranging from 90° to 140°. Moreover, the bare soil case with aerosols dominated by the coarse mode provides less information content on ALH than the vegetation surface case with aerosols dominated by the fine mode. Although ALH information between the two types of aerosols shows some distinctions because of their different single-scattering optical properties, the dependence of information on surface types and the impact of polarization measurements generally appear similar. The information content analysis shows that the potential capability of the POSP instrument is good over various surface types and aerosol models on the basis of the use of spaceborne PCF strategy.
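
How DFS and posterior error respond to extra measurements can be sketched for the simplest optimal estimation case: a single retrieved parameter (here the scale height H) with independent measurement errors. Under these assumptions the averaging kernel is a scalar and equals the DFS. The function name, Jacobian values, and uncertainties are hypothetical; the study itself uses UNL-VRTM Jacobians and a full state vector.

```python
def dfs_and_posterior_sigma(jacobians, measurement_sigmas, prior_sigma):
    """Scalar optimal estimation for one parameter.

    jacobians          : dy_i/dH for each measurement
    measurement_sigmas : 1-sigma error of each measurement (uncorrelated)
    prior_sigma        : 1-sigma a priori uncertainty of H
    Returns (DFS, posterior 1-sigma uncertainty of H).
    """
    # Information contributed by the measurements: sum of (k_i / sigma_i)^2
    measurement_info = sum((k / s) ** 2 for k, s in zip(jacobians, measurement_sigmas))
    prior_info = 1.0 / prior_sigma ** 2
    posterior_variance = 1.0 / (measurement_info + prior_info)
    dfs = measurement_info / (measurement_info + prior_info)
    return dfs, posterior_variance ** 0.5


# Hypothetical case 1: intensity only at one band
dfs_i, sigma_i = dfs_and_posterior_sigma([0.5], [1.0], prior_sigma=1.0)
# Hypothetical case 2: intensity plus polarization at the same band
dfs_ip, sigma_ip = dfs_and_posterior_sigma([0.5, 0.5], [1.0, 1.0], prior_sigma=1.0)
print(f"intensity only:        DFS={dfs_i:.3f}, posterior sigma={sigma_i:.3f}")
print(f"intensity + polarized: DFS={dfs_ip:.3f}, posterior sigma={sigma_ip:.3f}")
```

In this scalar form, DFS = 1 - (posterior variance / prior variance), so any added measurement with a non-zero Jacobian raises the DFS and lowers the posterior error together.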

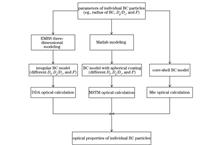

Objective Black carbon (BC) aerosols strongly absorb solar radiation in the atmosphere and directly or indirectly influence regional and global climate. However, the shape and mixing structures of BC particles are complex, and their optical properties are largely unquantified. Previous studies have used several numerical simulation tools to analyze the optical properties of BC particles, while the mixing structures of BC models in these studies are still quite different from those of the real individual BC particles in the atmosphere. In addition, most studies use only one numerical simulation tool to calculate the optical properties of BC particles. Therefore, the differences in optical results from different numerical simulation tools are still uncertain. In this study, a novel three-dimensional (3D) modeling tool, namely, Electron-Microscope-to-BC-simulation (EMBS), is applied to construct realistic 3D BC models. The EMBS can construct optical models of particles with arbitrary shapes and structures and can be applied in discrete dipole approximation (DDA). Then the influence of complex shapes and mixing structures of atmospheric BC particles on optical characteristics can be estimated. The absorption intensity Eabs, single scattering albedo (SSA), and absorption cross section Cabs of individual BC particles with different fractal dimensions (Df = 1.8 and Df = 2.6) and mixing structures are calculated by three numerical simulation methods, including DDA, multi-sphere T-matrix (MSTM), and Mie theory. The numerical simulation results obtained by different methods are compared, and the reasons for the difference in the results of different numerical simulation tools are analyzed. Methods In this study, the EMBS is used to construct BC particle models with different fractal dimensions (Df = 1.8 and Df = 2.6) and mixing structures. The BC models from the EMBS are applied by the DDA. 
The Eabs, SSA, and Cabs of BC particles constructed by the EMBS are calculated by the DDA method and then compared with the results from MSTM and Mie methods (Fig. 1). The parameters of individual BC particles (e.g., the radius of BC, Dp/Dc, F, etc.) are identical for the three methods. Each BC aggregate consists of 100 monomers with a radius of 20 nm. The coating thickness Dp/Dc and embedded fraction F are in the range of 1.5-2.7 and 0.10-1.00, respectively. The wavelength of incident light λ is 550 nm. The complex refractive index of BC is m=1.85+0.71i, and that of the BC coating is m=1.53+0i. This study assumes that particles are randomly oriented in the atmosphere, and 1000 incident light directions are used for each particle. The Mie method corresponds to the core-shell BC model and is conducted by the BHCOAT program. Results and Discussions For BC particles with loose structures (Df = 1.8) and compact structures (Df = 2.6), the Eabs of MSTM model is more sensitive to embedded fraction, while that of DDA model is more sensitive to the coating thickness (Fig. 4). The SSA of DDA and MSTM methods increases with the increase in the coating thickness, and that of DDA method is smaller than that of MSTM method (Fig. 5). In addition, the SSA of DDA and MSTM methods decreases with the increase in F, but the sensitivity of both models to F is not high (Fig. 5). The optical properties calculated by DDA and MSTM methods are still different when the parameters (such as Dp/Dc, F, and fractal dimension) are consistent. The results of this study prove that there are indeed obvious differences between DDA and MSTM in the simulation of optical properties of individual BC particles. The model shape and mixing structures of BC models for DDA method are more flexible, while MSTM has limitations in constructing models. On the one hand, there is the influence of the shape of BC models. 
For the bare BC model without coating, the relative deviation caused by different shapes of BC aggregates is large. The relative deviations of Cabs and Qabs are 13% and 9%, respectively (Fig. 6). For the fully embedded BC model, the relative deviations of Eabs, SSA, and Cabs reach 20%, 7%, and 23%, respectively (Fig. 7). On the other hand, the relative position of BC aggregate and coating results in a relative deviation of 2%-4% (Fig. 8). The BC models used by the MSTM method are quite different from the real atmospheric BC particles, so the deviation generated by the simulation may be larger than that of the DDA. Conclusions It is found that the Eabs results of MSTM method are more sensitive to the embedded fraction, while those of DDA method are more sensitive to the coating thickness. The difference between the two methods mainly results from two factors: 1) differences in the shape of BC aggregates and coating in DDA and MSTM methods lead to relative deviations of Eabs and Cabs of up to 20% and 23%, respectively; 2) the relative position and shape of the coating can produce a relative deviation of 2%-4%. Due to the differences in BC model shapes and structures between DDA and MSTM methods, the optical simulation results may differ greatly.
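
The derived quantities compared above can be written out in a few lines. This sketch assumes the usual definitions, which the abstract does not state: SSA as scattering over extinction, Eabs as the ratio of the coated particle's absorption cross section to that of the bare BC core, and relative deviation as |a - b| / b. All function names and cross-section values are hypothetical.

```python
def single_scattering_albedo(c_sca, c_abs):
    """SSA = C_sca / C_ext, with C_ext = C_sca + C_abs."""
    return c_sca / (c_sca + c_abs)


def absorption_enhancement(c_abs_coated, c_abs_bare):
    """Assumed definition of Eabs: coated over bare absorption cross section."""
    return c_abs_coated / c_abs_bare


def relative_deviation(value, reference):
    """Relative deviation of one method's result from another's."""
    return abs(value - reference) / reference


# Hypothetical cross sections (arbitrary but consistent units) from two solvers
dda = {"c_sca": 1.0, "c_abs_coated": 3.0, "c_abs_bare": 2.0}
mstm = {"c_sca": 1.2, "c_abs_coated": 3.6, "c_abs_bare": 2.0}

eabs_dda = absorption_enhancement(dda["c_abs_coated"], dda["c_abs_bare"])
eabs_mstm = absorption_enhancement(mstm["c_abs_coated"], mstm["c_abs_bare"])
ssa_dda = single_scattering_albedo(dda["c_sca"], dda["c_abs_coated"])
print(f"Eabs (DDA)  = {eabs_dda:.2f}")
print(f"Eabs (MSTM) = {eabs_mstm:.2f}")
print(f"SSA  (DDA)  = {ssa_dda:.2f}")
print(f"Eabs relative deviation = {relative_deviation(eabs_mstm, eabs_dda):.0%}")
```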

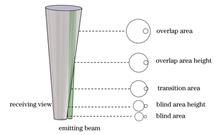

Objective Lidar has been widely used in the field of atmospheric detection with the advantages of high spatial-temporal resolution and high detection sensitivity. The overlap factor of a lidar system arises from the incomplete overlap between the laser beam and the field of view of the receiver, which results in the distortion of the received backscattered signal in the near-field range. The accurate observation of aerosol optical parameters near the ground is important for the monitoring of the atmospheric environment, air quality, and atmospheric visibility. The overlap factor at a certain distance is defined as the ratio of the beam energy entering the receiving field of view to the actual backscattering energy. In general, the overlap factor is estimated by either theoretical methods or experimental methods. The theoretical methods are to calculate the overlap factor according to the structural parameters of the lidar system. However, some parameters are often rather difficult to accurately obtain in practice or theory, such as the performance of the optical elements, the beam divergence angle, and the beam direction. The experimental methods are to calculate the overlap factor with the experimental observation data. Some use the deviation between the Raman solution and the Fernald solution of a backscattering coefficient to calculate the overlap factor. The main limitation is that the Fernald method of aerosol backscattering coefficient requires the assumption of lidar ratio or boundary conditions, which will introduce great errors. Besides, the experimental methods strongly depend on the accurate estimation of atmospheric conditions. 
Thus, it is necessary to propose a stable algorithm for overlap factors to correct signals and aerosol optical parameters in the near-field range. Methods An experimental method for the overlap factor of Raman-Mie scattering lidar is proposed in this paper, which is applicable to lidar systems equipped with a Raman scattering channel. The method is based on the Raman inversion method for aerosol optical parameters. By analyzing the inversion characteristics of aerosol optical parameters, it is found that in a transition area, the aerosol backscattering coefficient is not influenced by the overlap factor, while the aerosol extinction coefficient is influenced greatly. In the Raman inversion method, the aerosol extinction coefficient and backscattering coefficient are retrieved independently without the assumption of lidar ratios. Thus, the lidar ratio profile can be obtained in a complete overlap area. According to the retrieved optical parameters, the overlap area height is determined preliminarily, and then the lidar ratio in the transition area is assumed to be equal to that at the overlap area height. The product of the aerosol backscattering coefficient and the lidar ratio is used to preliminarily correct the missing signal of the aerosol extinction coefficient in the transition area. The Raman scattering signal is derived from the inverse equation of the aerosol extinction coefficient, and then the preliminarily corrected Raman scattering signal is forward modeled. The overlap factor is obtained by dividing the experimental observed Raman signal by the forward modeled Raman signal. The blind area, transition area, and overlap area are distinguished according to the overlap factor profile. The Raman scattering and Mie scattering echo signals and the aerosol optical parameters in the near-field range are corrected, respectively. For the transition area, the definition of the overlap factor is used for signal correction. 
For the blind area, the slope consistency method is used to supplement the signal, that is, the slope of the standard atmospheric model is used to linearly estimate the signal. Results and Discussions The atmospheric observation experiment is carried out with an independently developed Raman-Mie scattering lidar system. For a single set of experimental observation data, the aerosol extinction coefficient and backscattering coefficient are retrieved, respectively. The overlap factor profile is obtained, and then the echo signals and aerosol optical parameters are corrected, respectively. The estimated extinction coefficient on the ground by lidar is compared with that observed via a visibility meter to verify the correctness of the algorithm. For the observation data from an experiment lasting for 4 hours, the time-height-intensity (THI) diagrams of the aerosol extinction coefficient before and after the correction by an overlap factor are given (Fig. 5). The corrected aerosol extinction coefficient below about 0.6 km can be obtained, which can obviously reflect the stratification structure and spatial-temporal variations of atmospheric aerosols near the ground. The estimated aerosol extinction coefficients of long-term observations on the ground are compared with those simultaneously observed via a visibility meter, and they show good consistency with a regression coefficient R up to 0.993 (Fig. 7). The measured overlap factors are compared with those calculated with the theoretical method, and the relative biases are analyzed separately. The error of overlap factors can be controlled within ±8%. Conclusions The error of the proposed method is calculated and analyzed. The results show that the proposed method can accurately calculate the overlap factor profile of the Raman lidar system. After correction by an overlap factor, the signal profile in the transition area and the estimated linear signal in the blind area can be obtained. 
The improved method is of great significance for the correction and supplement of near-field signals with lidar.
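
The final steps of the method (ratio of observed to forward-modeled Raman signal, then splitting the profile into blind, transition, and overlap areas) can be sketched as below. The thresholds used to separate the three areas are hypothetical choices for illustration, as are the function names and signal values; the abstract does not specify how the areas are delimited numerically.

```python
def overlap_profile(observed, modeled):
    """Overlap factor per range bin: observed Raman signal / modeled Raman signal."""
    return [o / m for o, m in zip(observed, modeled)]


def classify(overlap, blind_max=0.05, full_min=0.95):
    """Label each bin; both thresholds are illustrative assumptions."""
    labels = []
    for value in overlap:
        if value <= blind_max:
            labels.append("blind")
        elif value < full_min:
            labels.append("transition")
        else:
            labels.append("overlap")
    return labels


def correct_transition(observed, overlap, labels):
    """Divide by the overlap factor in the transition area only.

    Blind-area bins are returned as None because they need a separate
    estimate (the abstract uses a slope consistency method there).
    """
    corrected = []
    for signal, factor, label in zip(observed, overlap, labels):
        if label == "transition":
            corrected.append(signal / factor)
        elif label == "overlap":
            corrected.append(signal)
        else:
            corrected.append(None)
    return corrected


# Hypothetical range-corrected Raman signals for five near-field bins
observed = [0.0, 2.0, 6.0, 9.8, 10.0]
modeled = [10.0, 10.0, 10.0, 10.0, 10.0]
factors = overlap_profile(observed, modeled)
labels = classify(factors)
corrected = correct_transition(observed, factors, labels)
print(labels)
print(corrected)
```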

Results and Discussions To validate the retrieved SO2 VCD results, we compare the SO2 VCD from EMI-Ⅱ with that from TROPOMI at the La Palma volcano on 27, 29, and 31 October 2021. The SO2 VCD from EMI-Ⅱ shows similar spatial distributions to those of the SO2 VCD from TROPOMI (Fig. 4) with R of 0.891, 0.901, and 0.915 (Fig. 5), respectively. In addition, the SO2 VCD from EMI-Ⅱ is also compared with that from TROPOMI in the region of the Tonga submarine volcano from 14 to 18 January 2022 (Fig. 6), and the SO2 VCD from EMI-Ⅱ is found to have similar spatial distributions to those of the SO2 VCD from TROPOMI. The SO2 plume is transported from Tonga to Australia, which corresponds to the wind field results from a hybrid single-particle Lagrangian integrated trajectory (HYSPLIT) model (Fig. 7). However, the SO2 VCD from EMI-Ⅱ is lower than that from TROPOMI in the grid with a high SO2 SCD, which is mainly because the a priori profile of TROPOMI is different from that of EMI-Ⅱ in the radiative transfer model. According to the calculated SO2 VCD and wind field data, the fluxes of SO2 on 14 and 15 January 2022 in the region of Tonga submarine volcano are 345.83 and 504.85 t/s, respectively. Objective The SO2 slant column density (SCD) of environmental trace gases monitoring instrument Ⅱ (EMI-Ⅱ) from China is first retrieved using differential optical absorption spectroscopy (DOAS). The air mass factor (AMF) look-up table of SO2 is established using the SCIATRAN radiative transfer model. The vertical column density (VCD) is obtained after destriping. With the La Palma volcano at the end of October 2021 as an example, the SO2 VCD obtained by retrieval from EMI-Ⅱ data is consistent with that from TROPOspheric monitoring instrument (TROPOMI) with the correlation coefficients (R) of 0.89, 0.90, and 0.92. In addition, the retrieved SO2 VCD in the region of Tonga submarine volcano is also compared with that from TROPOMI. 
The EMI-Ⅱ results show similar spatial distributions to those of the TROPOMI results, and the transmission process (from the east to the west) of the SO2 plume is monitored. With the wind field data, this paper calculates the fluxes of SO2 generated from the eruption of the Tonga submarine volcano on 14 and 15 January 2022. The results of the paper show that EMI-Ⅱ can yield reliable SO2 VCD in volcanic regions via retrieval and realize the early warning of global volcanic eruptions. SO2 not only affects human health (e.g., respiratory diseases) but also is closely related to climate and environment (e.g., acid rain). Its oxidation may lead to the formation of aerosols and photochemical smog. SO2 is an important indicator of air quality and is closely associated with volcanic eruptions. The SO2 VCD can provide a data basis for tracing the SO2 pollution caused by industrial emissions and early warning signals for volcanic eruptions around the world. Therefore, it is extremely important to obtain the daily global SO2 VCD. In this study, we report the SO2 VCD results in volcanic regions from EMI-Ⅱ and validate the retrieved results with those from TROPOMI. In addition, the fluxes of SO2 from the eruption of the Tonga submarine volcano are calculated, which may help clarify the dynamics of magma degassing. We hope that our results can contribute to the development and global validation of the EMI-Ⅱ SO2 VCD. Methods The SO2 SCD is calculated using the QDOAS software with the DOAS method. DOAS retrieves the concentrations of trace gases from their characteristic absorption and the measured optical intensity, based on the Lambert-Beer law. Then, the corresponding SO2 AMF of the EMI-Ⅱ is calculated using the established AMF look-up table, which is simulated in the SCIATRAN radiative transfer model. The SO2 VCD is then obtained from SCD and AMF. 
We use spatial filtering following the Fourier transform method to remove obvious stripes caused by the irradiance calibration error when retrieving the SO2 VCD from EMI-Ⅱ. The fluxes of SO2 from satellite-based measurements can be calculated using the above method. For the Tonga submarine volcano, the effect of distance can be ignored for the long lifetime of the stratospheric SO2 plume. Conclusions In this paper, the SO2 VCD is retrieved from EMI-Ⅱ and validated in volcanic regions. With the La Palma volcano and the Tonga submarine volcano as examples, the SO2 VCD from EMI-Ⅱ presents similar spatial distributions to those of the SO2 VCD from TROPOMI. In addition, the transmission process of SO2 plume in a volcanic region can be monitored using the retrieved SO2 VCD from EMI-Ⅱ. The results of this study confirm that EMI-Ⅱ can monitor SO2 in volcanic regions and realize the early warning of global volcanic eruptions. This paper is of great importance for the development and global validation of SO2 VCD from EMI-Ⅱ.
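
The chain from measured intensity to vertical column can be illustrated with a deliberately simplified sketch. It assumes a single absorber and a plain Lambert-Beer law, I = I0 exp(-σ · SCD), which is not the full DOAS fit performed by QDOAS (that fit uses differential cross sections, several absorbers, and a polynomial baseline). The conversion VCD = SCD / AMF is the standard relation. The function names, cross section, and AMF value are hypothetical.

```python
import math


def scd_single_absorber(i0, i, cross_section_cm2):
    """Slant column (molecules/cm^2) from Lambert-Beer for one absorber."""
    return math.log(i0 / i) / cross_section_cm2


def vertical_column(scd, amf):
    """VCD = SCD / AMF; the AMF would normally come from a look-up table."""
    if amf <= 0:
        raise ValueError("air mass factor must be positive")
    return scd / amf


# Hypothetical absorption cross section and slant column
sigma = 1.0e-19        # cm^2 per molecule, illustrative value only
true_scd = 3.0e16      # molecules/cm^2
i0 = 1.0
i = i0 * math.exp(-sigma * true_scd)   # simulated measured intensity

scd = scd_single_absorber(i0, i, sigma)
vcd = vertical_column(scd, amf=1.5)    # AMF of 1.5 is a made-up example
print(f"SCD = {scd:.3e} molecules/cm^2")
print(f"VCD = {vcd:.3e} molecules/cm^2")
```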

Results and Discussions The shipboard sun-photometer has been successfully developed to meet the performance indexes, and long-term observation has been carried out in Bohai Bay. The experimental results show that the daily average aerosol optical thickness in Bohai Bay is mostly concentrated in the range of 0.1-0.3. Although human-made pollution has increased in recent years, the frequency distribution of the daily average Ångström index in Bohai Bay is basically Gaussian, which indicates that the sea area is not heavily polluted and the atmosphere remains relatively clean (Fig. 7). The aerosol in Bohai Bay shows a bimodal spectrum structure, and there are significant differences between August, September, and November. In August and September, the atmosphere is dominated by small particles, while in late autumn (November) it is dominated by large particles. The average Ångström index of the two periods is about 1.1 and 0.5, respectively (Fig. 6). Objective Aerosols play an important role in the balance of the earth's atmospheric radiation budget due to their complex composition and increasing particle concentration. The sun-photometer is an effective measurement device for remote sensing of atmospheric parameters by measuring solar spectral radiation and is widely employed in ground-based remote sensing of aerosols. However, the sea covers a large area. In the research on the optical characteristics of aerosols on earth and climate change, there is an urgent need for a device that can measure the optical characteristics of aerosols with high accuracy on a shipboard platform to compensate for the lack of atmospheric data at sea. Methods The traditional sun-photometer has difficulty tracking the sun on a moving shipboard platform and therefore cannot meet the needs of sea aerosol observation. The research group develops a novel shipboard sun-photometer with a new tracking method. The sun can be tracked on a shipboard platform with an accuracy of better than 1'. 
This new sun-photometer could obtain radiation information in nine spectral bands of 400, 440, 532, 550, 780, 870, 940, 1050, and 1064 nm at once. The aerosol information of the sea area can be obtained by direct solar radiation remote sensing on the mobile platform.The instrument adopts a two-segment image tracking method instead of the traditional four-quadrant tracking method. Firstly, the fish-eye imaging system is employed to obtain the whole sky image, and the coarse tracking of the sun is completed. Then, the precision tracking imaging system is leveraged to improve the resolution of the solar image to improve the tracking accuracy. The development process of the shipboard sun-photometer is introduced in detail, including the instrument's two-dimensional turntable, image tracking system, and measurement optical path. The function and working flow of each main structure of the instrument are described in detail with the two-dimensional turntable of the shipboard sun-photometer as the starting point. After that, the theoretical tracking accuracy of the image tracking system is calculated in detail by image processing technology and can reach 0.744' to meet the tracking accuracy requirements of offshore measurement. Finally, a spectrometric measurement system is introduced based on the integrated design of spectrometric measurement systems. The structure and operation flow of the spectrometric measurement system are analyzed specifically, and the influence of stray light and detector saturation on the measurement results is thoroughly considered with corresponding improvement approaches.ConclusionsThe Langley calibration method and the improved Langley method are adopted to calibrate the bands without atmospheric molecular absorption and water vapor absorption, respectively. 
The 550 nm aerosol optical thickness and Ångström index measured by the shipboard platform sun-photometer are compared with the measurement results of the POM-01 MK III marine sun-photometer. The diurnal variation trends of the 550 nm aerosol optical thickness are similar, the determination coefficient is 0.968, and the average relative measurement error is 4.83%. The Ångström index has an average relative measurement error of 2.55%. The reliability and stability of the shipboard sun-photometer are verified, and the optical properties of other atmospheric parameters can be further retrieved via the radiation information of visible and near-infrared bands. This instrument enriches the technical means for measuring the parameters of aerosol optical characteristics at sea and lays a solid experimental technical foundation for the research on space remote sensing, climate change, and atmospheric environment.
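The Ångström index and the relative measurement error quoted above can be illustrated with a minimal sketch. It assumes the standard two-wavelength Ångström relation (aerosol optical thickness proportional to wavelength to the power of minus alpha); the function names and the optical thickness values are hypothetical and are not taken from the instrument's processing chain.

```python
import math


def angstrom_index(aot_1, aot_2, wavelength_1_nm, wavelength_2_nm):
    """Two-wavelength Angstrom index: alpha = -ln(tau1/tau2) / ln(lambda1/lambda2)."""
    return -math.log(aot_1 / aot_2) / math.log(wavelength_1_nm / wavelength_2_nm)


def relative_error_percent(measured, reference):
    """Relative error of a measurement against a reference instrument, in percent."""
    return abs(measured - reference) / abs(reference) * 100.0


# Hypothetical optical thickness values at the 440 nm and 870 nm bands.
alpha = angstrom_index(0.30, 0.15, 440.0, 870.0)
print(f"Angstrom index: {alpha:.3f}")

# Hypothetical comparison of one reading against a reference reading.
print(f"Relative error: {relative_error_percent(0.21, 0.20):.2f}%")
```

A larger index corresponds to a stronger wavelength dependence of the optical thickness, which is why values near 1.1 are read as small-particle dominance and values near 0.5 as large-particle dominance.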

ObjectiveMarine aerosol is a significant part of atmospheric aerosols, which has an important impact on the changes in marine meteorology, such as visibility and precipitation. Marine aerosols also play a vital role in Earth's energy budget, atmospheric environment, and climate change as they can directly scatter and absorb solar and Earth's radiance and indirectly modify cloud properties. In studies of the size distribution of marine aerosols, micron aerosols are the object of analysis in most cases. There are few studies based on the aerosol mode, and the research on submicron particles with a particle size of less than 1000 nm is scarcely reported. However, submicron particles in the atmosphere perform a crucial role in aerosol formation processes such as gas-to-particle conversion and the formation of cloud condensation nuclei. It is of great significance to study the temporal and spatial variation characteristics of the number concentration, particle size, and component distribution of submicron aerosols. This can help grasp the evolution of particle size distribution in the air mass from land to sea and improve the understanding of the formation and evolution process of marine aerosols.MethodsA navigation observation lasting 12 days is conducted in the northern South China Sea by a Chinese research vessel "Shenkuo" from June 8 to June 20, 2019. The particle number concentrations of submicron aerosols with a diameter of 14-680 nm are measured by scanning mobility particle sizer (SMPS) placed on the right frontal side of the ship. The conventional meteorological data (temperature, humidity, and atmospheric pressure) on the sea surface mainly comes from the automatic meteorologic station onboard the ship. In addition to the data collected on site, the atmospheric reanalysis dataset (MERRA-2) provides data on the sea surface, such as wind speed, wind direction, and aerosol composition. 
In this paper, the HYSPLIT model is used to simulate the trajectory of the continental air mass during a cold front. After correcting the discrete data and eliminating the polluted data caused by ship discharge, we analyze the temporal and spatial change in the number concentration and size distribution. The size distribution spectra are fitted on the basis of the nucleation mode, Aitken mode, and accumulation mode with the log-normal function. The influence of a cold front encountered during the voyage on the number concentration, particle size, and component distribution is discussed.Results and DiscussionsThe meteorologic process of a cold front is found through the combination of the data from the shipboard automatic meteorologic station and the meteorological reanalysis dataset. When a cold front is encountered, the wind speed, specific humidity, and temperature all decrease significantly, and the wind direction changes from southwest to northeast (Fig. 5). Therefore, the aerosols before and after the cold front are divided into marine aerosols and continental aerosols polluted by the Taiwan Island. The differences in aerosol number concentration, particle size, and component distribution before and after the cold front are compared. It can be seen from the aerosol particle size distribution (Fig. 8) that the number concentrations of the contaminated continental aerosols (? and ? in Fig. 8) are higher than the marine background level, that is, the level of marine aerosols (? and ? in Fig. 8) before the cold front. On June 15 and June 16, the peak number concentration of aerosols appears in the nucleation mode, which means that there are more new particles in the aerosols at this time, and they are in a polluted state. It can be seen from the changes in aerosol components (Fig. 7) that except for the decrease in the proportion of sea salt (SS), the proportions of other components increase on June 15 and June 16, especially the sulfate component (SO4). 
The increase in the total number concentration and nucleation-mode number concentration of continental aerosols and the increase in the proportions of SO4 and other components may be due to the air mass, impacted by Taiwan Island, carrying sulfate, organic carbon, and other components into the observation sea area.ConclusionsFirstly, with the increase in offshore distance, the total number concentration of marine aerosols gradually decreases from the coastal level (6812 cm⁻³) to the background level (1745 cm⁻³). Compared with the nearshore area, the air over the open sea is cleaner: the proportion of the nucleation-mode number concentration gradually decreases (2.35%), the proportion of the Aitken mode remains stable (52.70%), and the proportion of the accumulation mode increases (44.95%). Secondly, the fitted spectra show that 62.15% of the median size distributions are single-peak, and 36.27% are double-peak, which agrees with the log-normal distribution. The median size distributions show the double-peak mode along the coast with a peak value of about 200 cm⁻³, but display the single-peak mode with a total number concentration between 60 cm⁻³ and 100 cm⁻³ on the open sea. As the offshore distance grows, the average geometric particle size of the main modes of particle size spectra increases, and the peak number concentration decreases. Finally, the aerosol samples obtained before the cold front are only affected by the ocean, and the number concentration of marine aerosols is lower. The SS component is the main component (94.33%), and the particle size distribution presents a single-peak characteristic, with the peak appearing in the accumulation mode, which reflects the characteristics of the background marine aerosol. After the cold front passes, the aerosol is affected by the polluted air mass from Taiwan Island. 
The SO4 proportion in continental aerosols is significantly increased (44.73%), and the particle size distribution presents a double-peak characteristic in the nucleation and accumulation modes.
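The multi-mode log-normal description used for the fitted size spectra can be sketched as follows. This is an illustrative evaluation of the standard log-normal mode function, not the fitting procedure of the paper; the mode parameters (number concentration, geometric mean diameter, geometric standard deviation) are hypothetical values chosen only to resemble nucleation, Aitken, and accumulation modes.

```python
import math


def lognormal_mode(dp_nm, n_total, dg_nm, sigma_g):
    """dN/dlog10(Dp) of a single log-normal mode.

    n_total : mode number concentration (cm^-3)
    dg_nm   : geometric mean diameter (nm)
    sigma_g : geometric standard deviation (dimensionless, > 1)
    """
    log_sigma = math.log10(sigma_g)
    z = (math.log10(dp_nm) - math.log10(dg_nm)) / log_sigma
    return n_total / (math.sqrt(2.0 * math.pi) * log_sigma) * math.exp(-0.5 * z * z)


def size_spectrum(dp_nm, modes):
    """Sum of log-normal modes evaluated at diameter dp_nm."""
    return sum(lognormal_mode(dp_nm, n, dg, sg) for n, dg, sg in modes)


# Hypothetical (N, Dg, sigma_g) triples for nucleation, Aitken, accumulation modes.
modes = [(200.0, 18.0, 1.5), (900.0, 50.0, 1.8), (700.0, 180.0, 1.6)]

for dp in (14.0, 50.0, 180.0, 680.0):
    print(f"Dp = {dp:6.1f} nm  dN/dlogDp = {size_spectrum(dp, modes):8.1f} cm^-3")
```

Integrating each mode over log10(Dp) returns its number concentration, so the fitted N values of the three modes give the mode proportions reported in the conclusions.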

ObjectiveQuantum communication uses the quantum state as an information source to effectively transmit the information that the quantum state carries. It has the advantages of high security, high transmission speed, and large communication capacity and is thus a hotspot in the current communication field. However, when an optical quantum signal is transmitted underwater, it is inevitably affected by environmental factors, resulting in the degradation of transmission performance. Sea ice is one of the important factors that affect the transmission of optical quantum signals underwater. It is composed of freshwater ice crystals, brine bubbles, and bubbles containing salt. When an optical quantum signal is transmitted underwater, the bubbles, brine bubbles, and other microbial particles in sea ice absorb and scatter the optical signal, seriously interfering with the transmission of the signal and resulting in reduced communication performance. The propagation, reflection, and absorption of optical quantum signals in sea ice are affected by the particles and sol organics condensed in the sea ice. However, the influence of sea ice on the performance of underwater quantum communication channels has rarely been reported. Therefore, it is of great significance to analyze the extinction characteristics of sea ice as a whole according to the absorption and scattering characteristics of each component of sea ice and study the influence of sea ice with different density and salinity on link attenuation, channel utilization, and the bit error rate and bit rate of a quantum key distribution system.MethodsSea ice is composed of bubbles, brine bubbles, and other particles. To study the influence of sea ice on the performance of underwater quantum communication channels, this paper analyzes the absorption and scattering characteristics of each component of sea ice. 
Subsequently, on the basis of the absorption and scattering characteristics of sea ice with different density and salinity, it explores and simulates the relationships of sea ice parameters with the extinction coefficient. Then, according to the extinction characteristics of sea ice with different density and salinity, a constant incident wavelength is adopted, and the relationships of sea ice parameters with link attenuation and channel utilization are determined and simulated experimentally. Finally, the paper examines the effects of sea ice with different density and salinity on the bit error rate and bit rate of a quantum key distribution system and implements data simulation. The theoretical analysis and simulation results can provide a reference for the design of underwater quantum communication in sea ice environments.Results and DiscussionsUnder the same incident wavelength, the extinction coefficient of sea ice increases with sea ice density and sea ice salinity, and it is more markedly affected by the change in sea ice salinity (Fig. 2). When the transmission distance is short and sea ice salinity is small, the link attenuation caused by sea ice is also small. As the transmission distance of the optical quantum signal and sea ice salinity increase, link attenuation increases rapidly (Fig. 4). As sea ice density rises, the extinction effect on the quantum state of the light becomes more obvious, which leads to a decrease in channel utilization (Fig. 5). Since the scattering of light by sea ice changes the polarization of photons constituting the qubit and causes bit errors, the bit error rate of the underwater quantum system increases with sea ice salinity (Fig. 8). The bit rate of the key distribution system is affected by sea ice salinity and transmission distance. When sea ice salinity is small and transmission distance is short, the system bit rate changes slowly. 
When sea ice salinity is large and transmission distance is long, the attenuation of the optical quantum signal is serious, and the value of the bit rate decreases rapidly (Fig. 10).ConclusionsAccording to the extinction characteristics of sea ice, this paper determines the relationships of sea ice density and sea ice salinity with link attenuation, channel utilization, and the bit error rate and bit rate of the quantum key distribution system. Furthermore, it comparatively analyzes the influence of sea ice on the performance of underwater quantum communication under different parameters. The simulation results show that the link attenuation and the bit error rate of the underwater quantum key distribution system tend to increase as transmission distance and sea ice salinity or sea ice density increase. Moreover, the utilization rate of the quantum communication channel and the system bit rate decrease to varying degrees. In comparison, the change in sea ice salinity interferes more strongly with communication quality and influences the channel parameters more saliently. Therefore, the effects of sea ice density and sea ice salinity on the quantum state of light, especially the effects of sea ice salinity, must be fully considered when underwater quantum communication is conducted.
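The dependence of link attenuation on the extinction coefficient and transmission distance can be illustrated with a minimal sketch. It assumes a simple Beer-Lambert exponential decay, which may differ from the channel model used in the paper; the extinction coefficients and distances are hypothetical.

```python
import math


def transmittance(extinction_per_m, distance_m):
    """Beer-Lambert transmittance T = exp(-c * d) for extinction coefficient c."""
    return math.exp(-extinction_per_m * distance_m)


def link_attenuation_db(extinction_per_m, distance_m):
    """Link attenuation in dB: -10 * log10(T) = 10 * log10(e) * c * d."""
    return 10.0 * math.log10(math.e) * extinction_per_m * distance_m


# Hypothetical extinction coefficients standing in for low and high salinity ice.
for label, c in (("low salinity", 0.5), ("high salinity", 1.5)):
    for d in (1.0, 3.0):
        print(f"{label:13s} d = {d:.0f} m  T = {transmittance(c, d):.4f}  "
              f"attenuation = {link_attenuation_db(c, d):.2f} dB")
```

Under this assumption the attenuation in dB grows linearly with both the extinction coefficient and the distance, so a higher-salinity link degrades faster as the distance increases.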

Results and Discussions The linear polarization of different oil spills is different, but the general law remains the same. When the incident zenith angle is unchanged and the relative azimuth is 180°, the linear polarization tends to increase first and then decrease with the increase in the observed zenith angle (Fig. 6). When the incident zenith angle is constant, the degree of linear polarization also tends to increase first and then decrease with the increase in the observed zenith angle (Fig. 10). The degree of linear polarization rises before it declines as the relative azimuth grows, and it reaches the maximum at the relative azimuth of 180°. The linear polarization degree is high in the relative azimuth angle range of 120°–240° and low otherwise (Fig. 7). In addition, the improved pBRDF model of oil spills has high confidence accuracy, which is more than 80% (Tables 2-5).The relationships between the linear polarization and the incident zenith angle, observation zenith angle, relative azimuth, and wavelength are studied. The results indicate that the incident zenith angle, observation zenith angle, relative azimuth, and wavelength have an impact on the linear polarization of oil spills. The linear polarization increases slightly with the increase of wavelength in the visible light range, but the linear polarization of the observation zenith angle and relative azimuth barely changes relative to that of the incident zenith angle. The linear polarization tends to increase first and then decrease as the relative azimuth rises, and the peak appears at the relative azimuth of 180°. At this time, the same trend of linear polarization holds as the observation zenith angle and the incident zenith angle grow, and the contrast is high. When the relative azimuth is 0°, the linear polarization registers the smallest when the incident zenith angle is equal to the observed zenith angle. 
The construction of the pBRDF model for oil spills on the sea surface and the investigation of the influence of the incident zenith angle, observation zenith angle, relative azimuth, and wavelength on linear polarization are conducive to realizing the accurate detection of oil spills on the sea surface and can provide a reference in this regard. The model and experimental scheme will be further optimized to study the influence of temperature on the linear polarization of oil spills and build a more accurate model.ObjectiveOil spill pollution has caused great harm to the marine environment and human society. Accurate identification of marine oil spills can help formulate oil spill treatment strategies and assess disaster losses. Optical characteristics, essential characteristics of marine targets, can reflect the physical and chemical properties, geometric surface, and other characteristic information of marine targets from the aspects of wavelength (frequency), energy, phase angle, polarization state, scattering or radiation characteristics, etc. In addition to the information exploited by intensity, spectrum, and multi-angle detection methods, the reflected radiation of the detected target also carries polarization information. When the light wave interacts with the surface of seawater, oil film, and other media, the polarization characteristics of the reflected light wave will change. Polarization detection can penetrate fog to a certain extent, which can weaken the influence of sea fog, significantly improve the contrast between target and background, and weaken or even eliminate the influence of sun glint. However, research on the polarization detection mechanism and the modeling of marine oil spills is still insufficient, which restricts the understanding of polarization characteristics and affects practical applications. 
Therefore, it is necessary to study the polarization detection mechanism of marine oil spills and build a theoretical model to improve the marine detection ability.MethodsOn the basis of the Priest and Germer (P-G) theory, this study comprehensively considers specular reflection, diffuse reflection, and volume scattering, optimizes the traditional model for oil spills on the sea surface, and proposes a polarized bidirectional reflection distribution function (pBRDF) model for oil spills on the sea surface that includes the scattering part. Then, it tests the polarization characteristics of five different oil spill targets (i.e., engine oil, crude oil, diesel oil, kerosene, and gasoline) in the rough water surface environment. By the comparison of the experimental data with the simulations, the visible light polarization characteristics of different oil spills are obtained, and the correctness of the model is verified.ConclusionsAccording to the microfacet theory, this paper comprehensively considers specular reflection, diffuse reflection, and volume scattering, optimizes the traditional model for oil spills on the sea surface, and proposes a pBRDF model of oil spills on the sea surface that includes the scattering part. The model can reduce the error caused by considering only specular reflection. The comparison with the experimental data shows that the fitted curve is in good agreement with the measured data, and the model built in this paper is accurate.
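The degree of linear polarization discussed in this abstract can be computed from intensity measurements behind a linear polarizer. The sketch below assumes the common four-angle scheme (0°, 45°, 90°, 135°) and the standard Stokes-parameter definitions; the measurement setup of the paper is not described at this level, and the intensity values are hypothetical.

```python
import math


def stokes_from_polarizer_intensities(i0, i45, i90, i135):
    """Linear Stokes parameters (S0, S1, S2) from four polarizer orientations."""
    s0 = 0.5 * (i0 + i45 + i90 + i135)  # total intensity
    s1 = i0 - i90                       # horizontal vs vertical preference
    s2 = i45 - i135                     # +45 deg vs -45 deg preference
    return s0, s1, s2


def degree_of_linear_polarization(i0, i45, i90, i135):
    """DoLP = sqrt(S1^2 + S2^2) / S0, between 0 (unpolarized) and 1."""
    s0, s1, s2 = stokes_from_polarizer_intensities(i0, i45, i90, i135)
    return math.hypot(s1, s2) / s0


# Hypothetical intensities for a partially polarized reflection from an oil film.
dolp = degree_of_linear_polarization(0.80, 0.55, 0.30, 0.55)
print(f"DoLP = {dolp:.3f}")
```

Repeating this calculation over a grid of observation zenith angles and relative azimuths gives the kind of angular DoLP curves that the model is compared against.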

SignificanceWind field is an important parameter characterizing the dynamic characteristics of the earth's atmospheric system, and it serves as basic data necessary for business work and scientific research in fields such as weather forecasting, space weather, and climatology.The wind field measurement based on satellite remote sensing is not limited by geographical conditions. It can determine the intensity and direction information of the atmospheric wind field at different altitudes by monitoring the motion state of ocean waves, clouds, aerosols, and atmospheric components. It can not only obtain the observation data of ocean, desert, and polar regions, which are difficult to be collected by conventional methods, but also obtain the profile information of the wind field along the height distribution.As one of the main techniques in atmospheric wind field measurement, passive optical remote sensing has the characteristics of high accuracy, large altitude coverage, and small resource occupation. 
Great progress in the past half century has been made, and various wind measurement technologies have been developed such as atmospheric motion vectors, infrared hyperspectral analysis of water vapor, wind imaging interferometer, and Doppler modulated gas correlation, which can realize wind field measurement in an altitude ranging from 1 km near the surface to 300-400 km and form a reliable verification and capability complementation with active wind field measurement technologies such as lidar and microwave.In order to promote the development of spaceborne passive optical remote sensing for measuring atmospheric wind fields, it is necessary to summarize and discuss the existing research progress and future development trends, so as to provide a reference for the development of future passive optical remote sensing detection technology for atmospheric wind field and the task planning in atmospheric wind field detection.ProgressThis review focuses on two types of spaceborne optical passive techniques for wind field measurement based on atmospheric motion vector monitoring and atmospheric spectral Doppler shift detection. The fundamental theories, basic inversion methods, and the progress of research and application of representative payloads of various passive wind field detection technologies are summarized (Table 4).The atmospheric motion vector detection technology relies on cloud map observation to realize wind field detection. It has the characteristics of high spatial resolution and high detection accuracy and can obtain meter-level and precise wind field data at a sub-kilometer scale. 
However, limited by its detection technology mechanism, its detection altitude and efficiency are also significantly restricted.Infrared hyperspectral wind field measurement technology is based on infrared images of specific water vapor spectral channels and profile data to track the movement of characteristic image targets at specific altitudes to invert atmospheric wind speed, which is used for troposphere wind measurement, with high vertical resolution and profile data, and it is less affected by the cloud. Compared with those of the cloud-derived motion vector (CMV) technology, its measurement accuracy and horizontal spatial resolution of wind speed and direction need to be improved. However, as infrared hyperspectral loading and wind field inversion algorithms develop, infrared hyperspectral wind field measurement technology will become an important technology for troposphere wind.The wind field interferometer obtains the interferogram of the fine atmospheric spectrum from the limb observation, inverts the Doppler frequency shift of the atmospheric spectrum through the intensity position or phase change in the interferogram, and then realizes the measurement of the atmospheric wind field. The spaceborne application of this technology began in the late 1960s, and three technical systems have been developed, namely, Michelson interferometer, Fabry-Pérot interferometer, and Doppler asymmetric spatial heterodyne interferometer. The detection altitude covers most of the atmosphere including the stratosphere, mesosphere, and thermosphere. 
It features continuous profile detection capability, vertical resolution with an order of kilometers, and horizontal spatial resolution with an order of 100 km, and the highest peak accuracy of wind speed measurement has reached 3 m/s.The Doppler modulated gas correlation technology modulates and filters the incident spectrum through a molecular filter with its composition the same as the target atmospheric composition, so as to realize the frequency shift detection of the atmospheric spectrum and the detection of the wind. Compared with traditional spaceborne wind field measurement technologies, it has the advantages of high horizontal resolution, small size, light weight, and low power consumption and has a good application prospect in the field of small satellite network observation. At present, the technology is still in the stage of technical verification and application testing, and it is expected to further improve the vertical resolution of the limb observation, but the space for improving the effective horizontal resolution is limited.Conclusions and ProspectsThrough the technical research and payload application in the past 20 to 30 years, China's spaceborne passive optical atmospheric wind field detection technology is gradually narrowing the gap with the international leading level. However, in general, the spaceborne atmospheric wind field detection capability based on passive optical remote sensing still has problems such as discontinuous altitude profile coverage, incomplete local coverage of middle and high level wind field data, and limited spatial resolution of high level wind field data. In the future, the accuracy and resolution of profile data products for tropospheric wind field elements should be improved, and the gaps in China's middle and upper level atmospheric wind field observation data in terms of global scale should be filled. 
In addition, as China's planetary scientific research and deep-space exploration plans develop, wind field detection for the atmospheres of Mars, Jupiter, and other planets is also an important direction for the development of wind measurement technology based on passive optical remote sensing.
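The link between a spectral Doppler shift and the line-of-sight wind speed, which underlies the interferometer and gas correlation techniques reviewed above, can be sketched with the non-relativistic Doppler relation. The rest wavelength of 557.7 nm and the function names are assumptions made for illustration only; real retrievals work on interferogram phase or intensity rather than on a directly measured wavelength.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def doppler_shift_nm(rest_wavelength_nm, line_of_sight_wind_m_s):
    """Wavelength shift for a given line-of-sight speed (positive = receding)."""
    return rest_wavelength_nm * line_of_sight_wind_m_s / SPEED_OF_LIGHT_M_S


def line_of_sight_wind_m_s(rest_wavelength_nm, observed_wavelength_nm):
    """Line-of-sight wind from the shift: v = c * (lambda_obs - lambda_0) / lambda_0."""
    return SPEED_OF_LIGHT_M_S * (observed_wavelength_nm - rest_wavelength_nm) / rest_wavelength_nm


rest_nm = 557.7  # assumed emission line, for illustration only
for wind in (3.0, 50.0, 100.0):
    shift = doppler_shift_nm(rest_nm, wind)
    print(f"wind = {wind:6.1f} m/s  shift = {shift:.3e} nm")
```

The shifts are many orders of magnitude smaller than the wavelength itself, which is why the reviewed instruments measure them through interferometric phase changes or molecular filters rather than by resolving the line position directly.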

ObjectiveBecause panchromatic satellite images have sub-meter spatial resolution, the imaging process is easily affected by atmospheric scattering, absorption, and the adjacency effect under low atmospheric visibility, which blurs the edges of image objects, reduces image quality, and seriously affects the accuracy of quantitative remote sensing applications. Before a panchromatic satellite image is applied, atmospheric correction should be carried out to improve image quality. At present, conventional atmospheric correction software cannot correct panchromatic satellite images, so digital image processing methods are often used to improve their quality. However, digital image processing methods often introduce noise and excessive enhancement while improving the image quality. Therefore, it is urgent to develop an atmospheric correction method suitable for panchromatic satellite images that eliminates the influence of the atmosphere and the surrounding environment on the entrance pupil signal of the target pixel, recovers the real surface information, and improves image quality in quantitative remote sensing applications of panchromatic satellite images.MethodsTaking the panchromatic satellite image of GF-2 as an example, this paper develops an atmospheric correction method for panchromatic satellite images by using the atmospheric radiative transfer model and the exponential decay point spread function. This method is simple to calculate and fully considers the influence of atmospheric parameters (parameters of aerosol, water vapor, ozone, and other absorbing gases), spatial resolution, and the adjacency effect between background pixels and target pixels on the entrance pupil signal of target pixels, which further improves the image quality while preserving the fidelity of the panchromatic satellite image information. 
As an important evaluation index of an optical satellite imaging system, the modulation transfer function (MTF) can comprehensively and objectively characterize the sharpness of the image edge and the expression degree of spatial details, and its value can directly reflect the quality of imaging. Therefore, in order to comprehensively evaluate the quality of panchromatic satellite images after atmospheric correction, the traditional image quality evaluation indexes (clarity, contrast, edge energy, and detail energy) and MTF are simultaneously adopted in this paper to comprehensively and fully evaluate the atmospheric correction results.Results and DiscussionsThe atmospheric correction method for panchromatic satellite images developed in this paper is used to correct the GF-2 panchromatic satellite images of Baotou calibration site under two atmosphere conditions: clean atmosphere and polluted atmosphere. The results show that whether the atmospheric conditions are polluted or clean, the visual effect of the corrected panchromatic satellite images has been improved, the contours of ground objects become clear, the texture information is more abundant, and the recognition of ground objects has also been significantly improved. For high resolution panchromatic satellite images, the atmospheric correction method ignoring the adjacency effect can only improve the image brightness, but does not improve image clarity much. Especially in the case of air pollution, the edge of ground objects in the corrected image is still relatively fuzzy, which is not conducive to the visual interpretation of the image and the extraction of ground objects contour. This further proves that adjacency effect correction is essential for high resolution panchromatic satellite images. 
By comparing the quality evaluation parameters of each image before and after correction, it can be seen intuitively that the clarity increases by at least 155%, the contrast increases by at least 115%, the edge energy increases by at least 247%, the detail energy increases by at least 204%, and MTF increases by at least 169%.ConclusionsBased on the 6SV radiative transfer model, the atmospheric correction method developed in this paper combines the atmospheric point spread function based on the exponential decay model, and fully considers the influence of atmospheric parameters (parameters of aerosol, water vapor, ozone and other absorbing gases), spatial resolution, and the spatial distance between background pixels and target pixels on the adjacency effect. It can effectively remove the influence of the atmosphere and the surrounding environment on the entrance pupil signal of the satellite payload during panchromatic imaging, recover the true surface information in the imaging area that is masked by atmospheric influence, and substantially improve the quality of panchromatic satellite images under low atmospheric visibility. The evaluation of the corrected panchromatic satellite images shows that, compared with the traditional image quality evaluation indexes, MTF better reflects the improvement in sub-meter panchromatic satellite image quality brought by the adjacency effect correction, which highlights the indispensability of the adjacency effect correction in the atmospheric correction of panchromatic satellite images. At the same time, the trend of the MTF curve and the level of its values can reflect the spatial acuity of the image and the strengths and weaknesses of the image quality more comprehensively and objectively. Therefore, the MTF index is recommended to be included in the image quality evaluation system when sub-meter satellite images (such as panchromatic satellite images) are evaluated.
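The role of an exponential decay point spread function in the adjacency effect can be illustrated with a small sketch. It assumes a radially symmetric weight exp(-r / r0) normalized to unit sum; the actual functional form, decay length, and window size used in the paper are not given in the abstract, so every parameter below is hypothetical.

```python
import math


def exponential_psf_kernel(radius_px, decay_length_px):
    """Normalized (2r+1) x (2r+1) kernel with weights exp(-distance / decay_length)."""
    size = 2 * radius_px + 1
    kernel = [
        [math.exp(-math.hypot(i - radius_px, j - radius_px) / decay_length_px)
         for j in range(size)]
        for i in range(size)
    ]
    total = sum(sum(row) for row in kernel)
    return [[w / total for w in row] for row in kernel]


def adjacency_blurred_value(window, kernel):
    """PSF-weighted mean of a reflectance window: the signal mixed into the center pixel."""
    return sum(w * k for w_row, k_row in zip(window, kernel) for w, k in zip(w_row, k_row))


kernel = exponential_psf_kernel(radius_px=1, decay_length_px=1.0)

# Hypothetical 3 x 3 reflectance window: a bright target on a dark background.
window = [[0.1, 0.1, 0.1],
          [0.1, 0.5, 0.1],
          [0.1, 0.1, 0.1]]
print(f"center weight = {kernel[1][1]:.3f}")
print(f"mixed signal  = {adjacency_blurred_value(window, kernel):.3f} (true value 0.5)")
```

The mixed signal falls between the target and background reflectance, which is the contrast loss that the adjacency effect correction is meant to undo.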

ObjectiveVortex beams carry orbital angular momentum and have a phase factor exp(ilθ), where l is the topological charge number and θ is the azimuthal angle. Theoretically, l can take any integer value, and different orbital angular momentum modes are mutually orthogonal. Therefore, in optical communication, the orbital angular momentum can be used for information transmission and exchange or multiplexed to improve communication capacity. However, vortex beams are affected by turbulence when transmitting in atmospheric turbulence, which results in the distortion of their spiral phase and causes inter-mode crosstalk and reduced communication reliability. Many studies focus on compensating the phase distortion of vortex beams, with adaptive optics systems commonly used. However, such methods require multiple iterations, converge slowly, and easily fall into local minima. In recent years, convolutional neural networks have attracted extensive attention in various fields due to their powerful image processing capabilities. Therefore, in this paper, convolutional neural networks are used to extract atmospheric turbulence information from the distorted light intensity distribution and correct the distortion. This deep learning-based compensation method can correct more accurately and faster than adaptive optics systems. In view of this, convolutional neural networks are employed herein for the phase prediction of atmospheric turbulence to achieve the phase compensation of Laguerre-Gaussian (LG) beams and improve modal detection accuracy and communication reliability.MethodsIn this paper, a novel convolutional neural network, i.e., deep phase estimation network, is constructed to achieve the prediction of turbulent phases. With this proposed deep network, a mapping between light intensity and turbulent phase caused by atmospheric turbulence is established. 
Two strategies are used for learning and predicting the turbulent phase: one uses a Gaussian beam as the probe beam, and the other makes a direct prediction from the information-carrying LG beam without a probe beam. In the target plane, the turbulent phase is predicted from the intensity, and the field is corrected with the predicted phase. The inputs of the networks in the two schemes are the intensity profiles of a Gaussian beam and an LG beam, respectively, and the output is the corresponding predicted phase of atmospheric turbulence. The deep phase estimation network extracts features from the input light intensity profile by down-sampling in the encoder, learns the atmospheric turbulence feature parameters by up-sampling in the decoder to reconstruct the equivalent atmospheric turbulence phase screen, and finally outputs the result. After training on a large number of samples, the proposed network structure achieves good prediction results at a transmission distance of 500 m. In addition, five sets of intensity profiles with different turbulence intensities are used to test and verify the network and to show that it has strong generalization ability. The compensation is then achieved by loading the inverse of the predicted phase onto the distorted beam.

Results and Discussions
In this paper, we construct a deep phase estimation network consisting of 15 convolutional layers, 3 deconvolutional layers, and 3 skip connections (Fig. 6) using an encoder-decoder architecture, which can achieve phase prediction at long transmission distances. At a transmission distance of 500 m, after the network is trained with distorted beams at different turbulence intensities, it predicts turbulence phase screens that agree closely with the simulated ones in tests at five different turbulence intensities (Fig. 7).
The prediction results are evaluated by calculating the mean square error between them and the simulation results, and it is found that the network can effectively extract turbulence information and has strong generalization ability (Table 2). The beam phase correction is achieved by loading the inverse of the predicted phase onto the distorted beam, and the intensity profile (Fig. 8) and phase (Fig. 10) are corrected to a large extent. The mode purity of the corrected beam is greatly improved, and the mean square error of the intensity image is significantly reduced (Table 3).

Conclusions
The results show that the deep phase estimation network constructed in this paper can predict the phase accurately. Once trained, it automatically learns the mapping between the input light intensity distribution and the turbulent phase and outputs the predicted phase. The phase compensation of the vortex beam is achieved based on the predicted phase. The compensation effects of the two schemes, with and without a Gaussian probe beam, are investigated separately, and both are effective in correcting the distorted phase. Both can predict the turbulent phase accurately in tests at five different turbulence intensities. After compensation, the mode purity of the beam exceeds 95%, and the mean square error between the compensated light intensity image and that of the source plane is significantly reduced. Even in the case of $C_n^2 = 1 \times 10^{-13}\ \mathrm{m}^{-2/3}$ and a transmission distance of 500 m, the mode purity is improved from 20.5% to 95.2% and 96.8% for the two schemes, respectively, after compensation with the phase predicted by the deep phase estimation network, and the mean square error also decreases significantly. In summary, the prediction results of the proposed network model are reliable, and the compensation performance is good.
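
The ideas of mode orthogonality, mode purity, and compensation with the inverse of a predicted phase can be illustrated with a minimal sketch. It is a deliberately simplified assumption, not the paper's simulation: the field is sampled only on a single ring in azimuth, the "turbulence" is a hypothetical smooth phase function, and the prediction is assumed to be perfect (in the paper it would come from the network).

```python
import cmath
import math

def ring_mode(l, n=256):
    """Sample the azimuthal phase factor exp(i*l*theta) at n angles on a ring."""
    return [cmath.exp(1j * l * 2 * math.pi * k / n) for k in range(n)]

def overlap(a, b):
    """Normalized power overlap |<a, b>|^2 / (<a, a> <b, b>) of two sampled fields."""
    inner = sum(x.conjugate() * y for x, y in zip(a, b))
    norm_a = sum(abs(x) ** 2 for x in a)
    norm_b = sum(abs(y) ** 2 for y in b)
    return abs(inner) ** 2 / (norm_a * norm_b)

def apply_phase(field, phase):
    """Multiply each field sample by exp(i * phase)."""
    return [f * cmath.exp(1j * p) for f, p in zip(field, phase)]

n = 256
l = 3  # topological charge of the transmitted mode (arbitrary choice)
ideal = ring_mode(l, n)

# Hypothetical smooth phase distortion standing in for a turbulence phase screen.
distortion = [
    1.5 * math.sin(2 * math.pi * k / n) + 0.8 * math.cos(4 * math.pi * k / n)
    for k in range(n)
]
distorted = apply_phase(ideal, distortion)

# Assume a perfect phase prediction, then load its inverse onto the distorted beam.
predicted = distortion
compensated = apply_phase(distorted, [-p for p in predicted])

purity_before = overlap(ideal, distorted)    # fraction of power left in mode l
purity_after = overlap(ideal, compensated)   # restored by the inverse phase
crosstalk_ideal = overlap(ring_mode(l, n), ring_mode(l + 1, n))  # orthogonal modes
```

With an imperfect prediction, the residual phase `distortion - predicted` would remain on the beam, so `purity_after` would fall below 1 by an amount that depends on the prediction error.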

Objective
The working environment of aerial cameras is complex. During the acquisition of aerial remote sensing images, the optical system is defocused by external factors such as ground elevation difference, temperature, and air pressure, so the obtained images are not clear enough. Sharpness detection methods based on image processing perform the detection through spectrum analysis of the high-frequency information in aerial remote sensing images. Taking advantage of the high processing speed of computers, they complete the sharpness detection of aerial remote sensing images in real time and have therefore become the main approach to sharpness detection both in China and abroad. However, weak characteristic areas such as oceans, grasslands, and deserts, which cover more than half of the earth, contain little high-frequency information in aerial remote sensing images. When conventional image-based methods are used for sharpness detection in such areas, the error rate is high. According to the characteristics of the overlapping areas between two images, a method of aerial camera image sharpness detection based on a digital elevation model (DEM) is proposed. This method introduces a high-precision DEM and exploits the fact that, in the acquired weak characteristic areas, two successive aerial remote sensing images share overlapping areas. The aerial imaging model is modified by minimizing the re-projection error, and the sharpness is detected according to the offset of feature points in the weak characteristic overlapping areas. The method makes up for the inability of image processing-based sharpness detection methods to detect sharpness in weak characteristic areas and expands their applicability.

Methods
In this study, an aerial imaging model and feature point matching are used to obtain image sharpness parameters.
Firstly, a DEM is used to provide the elevation data of the ground objects in the aerial imaging model. The sum of the re-projection errors of all pixels in the overlapping areas is taken as the re-projection error function. By minimizing the re-projection error, the relative error coefficients of the various influencing factors are obtained and used to modify the aerial imaging model. Then, according to the characteristics of the overlapping areas between the two images, the geographical information of the scenery in the overlapping areas is treated as common knowledge shared by both images. The modified aerial imaging model is used to implement the feature point matching algorithm. In addition, according to the error between these feature matching points and the matching points of the scale-invariant feature transform (SIFT) algorithm, the change in the orientation elements of the aerial camera is calculated. Finally, the change in the principal distance is used as the sharpness detection result. Through the focal plane driving device of the aerial camera, the focal plane can be quickly adjusted to an appropriate position to obtain aerial remote sensing images with sufficient sharpness.

Results and Discussions
In the experiment, aerial remote sensing images of the weak characteristic areas obtained by the aerial camera are transmitted to an image processing computer, and DEM images with millimeter-level accuracy, processed by the computer in advance, are introduced. The SIFT algorithm is used to extract the features of the weak characteristic images in the overlapping areas, and the change in the principal distance is calculated from the offset of feature points. Finally, the corresponding mechanical structure is adjusted according to the change in the principal distance, and aerial remote sensing images with sufficient sharpness are obtained.
In this experiment, we select a second frame with insufficient sharpness and a preceding frame with sufficiently clear feature points in multiple groups of overlapping areas to verify the sharpness detection effect of the proposed algorithm between images with different sharpness (Fig. 5 and Fig. 6). In areas with abundant ground scenery, 15 repeated experiments are carried out using different sharpness detection algorithms. The root-mean-square error of the sharpness detection parameters of the proposed algorithm is 15.8 μm (Table 1). After 15 repeated experiments in areas with scarce ground scenery, the root-mean-square error of the sharpness detection parameters of the proposed algorithm is 16.3 μm. Classic sharpness detection algorithms such as the Roberts algorithm and the proposed algorithm are applied to weak characteristic areas, and 15 experiments are repeated. The sharpness detection curves are shown in Fig. 7. The root-mean-square error of the sharpness detection parameters in the weak characteristic areas calculated by the proposed algorithm is 16.275 μm (Table 2), which meets the actual engineering accuracy requirements of aerial cameras. This shows that the proposed algorithm has engineering application value.

Conclusions
To meet the application requirements of aerial cameras in military reconnaissance and topographic mapping, it is necessary to obtain clear aerial remote sensing images in real time. The key to obtaining a clear image is precise sharpness detection. To solve the problem of aerial camera imaging sharpness detection in weak characteristic areas, the characteristics of the overlapping areas between two images are analyzed, and a DEM-based method of image sharpness detection for aerial cameras is proposed. Based on DEM data, the aerial imaging model is optimized by minimizing the re-projection error.
According to the geographical information of the scenery in the overlapping areas of the preceding and following frames of aerial remote sensing images, the change in the principal distance of the following frame relative to the preceding frame is calculated to obtain the sharpness detection result. In repeated experiments, the root-mean-square error of the sharpness measurement in areas with few features is 16.275 μm, which is within the half focal depth of the aerial camera optical system (19.2 μm). The accuracy meets the actual engineering accuracy requirements of aerial cameras, and the proposed algorithm has engineering application value.
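
The link between feature point offsets and the change in principal distance can be illustrated with a minimal sketch. It assumes an ideal pinhole model, which is a simplification and not the paper's modified aerial imaging model: a small change df in the principal distance f scales every image coordinate by (1 + df/f), so df can be estimated by least squares from the offsets between model-predicted and observed feature points. The nominal principal distance, the point coordinates, and the function names are hypothetical; only the 16.275 μm and 19.2 μm figures come from the text.

```python
def estimate_principal_distance_change(predicted, observed, f_nominal):
    """Least-squares estimate of df for observed = predicted * (1 + df / f_nominal).

    predicted, observed: lists of (x, y) image coordinates relative to the
    principal point, in the same length unit as f_nominal.
    """
    num = 0.0
    den = 0.0
    for (px, py), (ox, oy) in zip(predicted, observed):
        dx, dy = ox - px, oy - py      # offset of this feature point
        num += px * dx + py * dy       # projection of the offset on the radial direction
        den += px * px + py * py
    return f_nominal * num / den

def within_half_focal_depth(rmse_um, half_focal_depth_um=19.2):
    """Acceptance check: detection error must not exceed the half focal depth."""
    return rmse_um <= half_focal_depth_um

# Hypothetical example: nominal principal distance 100 mm, true change 0.05 mm.
f_nominal_mm = 100.0
true_df_mm = 0.05
predicted_pts = [(10.0, 5.0), (-8.0, 12.0), (15.0, -7.0), (-3.0, -9.0)]
scale = 1.0 + true_df_mm / f_nominal_mm
observed_pts = [(x * scale, y * scale) for x, y in predicted_pts]

df_est_mm = estimate_principal_distance_change(predicted_pts, observed_pts, f_nominal_mm)
meets_requirement = within_half_focal_depth(16.275)  # RMSE reported in the text
```

With real data the observed points would also contain matching noise and residual model errors, so the estimate would be approximate and more matched points would reduce its variance.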