ObjectiveModulation instability is a crucial phenomenon in the study of nonlinear dynamics, where an unstable system results in the destruction of its original states, accompanied by the rapid growth of small perturbation instabilities. The Bose-Einstein condensate serves as an ideal platform for exploring modulation instability due to its precise experimental control over the system's nonlinear dynamics. Therefore, studying modulation instabilities holds profound significance in comprehending the nature of Bose-Einstein condensate systems. In this paper, we reveal that spin-orbit coupling can always introduce modulation instability into a kind of specific state. We call it spin-orbit-coupling-induced modulation instability. The states are specific as they are zero-quasimomentum states. We find that there exist four different zero-quasimomentum states, and we classify them as no-current-carrying states and current-carrying states according to whether the states carry current or not. In literature, modulation instability of the no-current-carrying states has been investigated. The current-carrying states are unique due to their current originating from spin-orbit coupling, and their existence is unstable due to nonlinearity. We find that all these zero-quasimomentum states are modulationally unstable in all parameter regimes. The consequence of such modulation instability is the formation of complex wave structures.MethodsThe properties of modulation instability and the corresponding nonlinear dynamic images are primarily investigated using Bogoliubov de Gennes (BdG) instability analysis and the split step Fourier method. BdG instability analysis is a widely employed technique for analyzing instability in the study of superfluidity and Bose-Einstein condensates. It primarily examines the system's stability and its response to perturbations by solving nonlinear eigenvalue equations. By diagonalizing the BdG Hamiltonian matrix, the eigenvalues can be obtained. The eigenvalues of the matrix may be complex due to the non-Hermitian nature of the BdG Hamiltonian. If one or more complex numbers exist in the eigenvalues, the state becomes unstable. Consequently, any imposed disturbance experiences exponential growth, leading to the instability of the state. In addition, the split step Fourier method is commonly used for handling time evolution. The underlying principle of this method is to separate the terms of the system Hamiltonian and process them individually. The key step involves employing distinct treatments for the nonlinear and linear terms of the equation to be solved.Results and DiscussionsInitially, we investigate the case of g>g12 and observe that the system exhibits a four-band modulation instability image in Fig. 1. Among these bands, the two branches positioned near the lower quasi-momentum region are referred to as the primary modulation instability band, while the two branches near the higher quasi-momentum region are known as the secondary modulation instability band. Notably, it is determined that identical chemical potentials of the two current-carrying states yield the same modulation instability image. Furthermore, we perform calculations to ascertain the nonlinear dynamic images (Figs. 2 and 3). The investigation reveals that the density evolution of the two components follows similar ways, exhibiting trends of movement in both positive and negative directions along the x-axis. As time progresses, both components undergo chaotic oscillations. In the quasi-momentum space, distinct motion trends and reversal symmetry are observed between the two components. After a certain period of evolution, significant separation occurs. This phenomenon arises from the modulation instability being predominantly influenced by different modulation instability bands at various stages. Initially, the primary modulation instability band dominates, while in later stages, the secondary modulation instability band takes control. Ultimately, the system tends to approach the quasi-momentum space of the secondary modulation instability band, leading to chaotic propagation. Simultaneously, we also examine the scenario where g<g12 and observe that the system's modulation instability image consists of only two bands (Fig. 4): the primary modulation instability band. This disappearance of the secondary modulation instability band occurs as the repulsive interaction between the components intensifies, causing the two unstable branches to merge. Following a nonlinear dynamic analysis (Figs. 5 and 6), we observe that the motion trends become less pronounced due to the absence of the secondary modulation instability band. Nevertheless, in this case, the two components still exhibit distinct motion patterns and maintain reverse symmetry. The reason behind this phenomenon remains consistent with the previous situation. However, since there are only two branches of modulation instability, the system consistently resides near the quasi-momentum space of the main modulation instability band once the wave function enters chaotic oscillation.ConclusionsWe delve into the examination of modulation instability and its consequential dynamic patterns in one-dimensional two-component Bose-Einstein condensates with spin-orbit coupling. The study reveals the existence of four distinct zero momentum states within the system, where two of them carry currents while the remaining two do not under specific conditions. It should be noted that the generation of these four states is not solely determined by spin-orbit coupling; however, the presence of spin-orbit coupling does impact the modulation instability of the system. Previous research predominantly focuses on the zero quasi-momentum state without current carrying, neglecting the investigation of the zero quasi-momentum state with current carrying. We specifically explore the modulation instability of current-carrying zero momentum states. The findings indicate that in the presence of Rabi coupling, when the intra-component interaction surpasses the inter-component interaction, the modulation instability image manifests four branches, consisting of two main modulation instability bands and two secondary modulation instability bands. Conversely, when the intra-component interaction is lower than the inter-component interaction, the modulation instability image presents only two branches. We also establish a correlation between modulation instability and the nonlinear dynamic evolution of the system. Additionally, the presence of modulation instability can trigger the emergence of intricate patterns.

ObjectiveFiber Fabry-Perot tunable filters (FFP-TF) controlled by piezoelectric ceramics are prone to temperature drift in fiber Bragg grating (FBG) sensing systems. During the long-term measurement process, FFP-TF will cause continuous drift of the output wavelength, which will adversely damage the FBG sensing system's measurement accuracy. At the moment, FFP-TF temperature drift compensation primarily entails adding hardware calibration modules to the FBG sensing system, such as the reference grating method, F-P etalon method, gas absorption method, and composite wavelength reference method. Although these technologies can efficiently adjust for temperature drift, they greatly increase the system's cost and complexity. As a result, utilizing software approaches to compensate for temperature drift in FFP-TF is a practical and low-cost method. However, most contemporary temperature drift compensation approaches based on artificial intelligence technologies neglect temperature drift data's temporal features. In fact, the fresh sample has a higher impact on the prediction outcomes of the following data than the old sample. As a result, this work extensively addresses the impact of temporal features on temperature drift compensation when processing temperature drift and other highly time-dependent data. A tunable filter temperature drift compensation approach with time weight is suggested based on the AdaBoost-SVM algorithm and time weight.MethodsWe use FBG0 as the reference grating and the other three FBGs as sensing gratings, and each sensing grating is modeled individually. The temperature-related values of the experimental environment are chosen as the model's input features in this investigation. Furthermore, because the wavelength drift errors of each FBG in the FFP-TF output spectrum have a high correlation, we use the drift of the reference grating as an input feature of the dynamic compensation model to compensate for the lack of accurate temperature information in the F-P cavity. The significant link between the temperature drift sequence data before and after is taken into account in full by this investigation. The idea of time weight is introduced in the process of modeling the temperature drift of FFP-TF to assign various temporal attributes to each sample. After that, temperature drift samples are modeled using support vector machines (SVM) as weak learners, and several SVM learning models are integrated using the AdaBoost framework. In the integrated prediction process, the time attribute of samples has an impact on the update of sample weights in addition to the prediction performance of each model. Multiple temperature change modes have been used to validate the aforementioned procedure.Results and DiscussionsFirst, the temperature drift compensation results of the proposed algorithm are compared with the conventional AdaBoost-SVM algorithm for three transmission gratings in the 2 ℃ narrow changing temperature environment experiment of cooling and heating (Table 3). Secondly, in the 15 ℃ cooling amplitude experiment, the temperature drift compensation results of the proposed algorithm are compared with the traditional AdaBoost-SVM algorithm for three transmission gratings. The experimental results show that the maximum temperature drift compensation error of the traditional AdaBoost-SVM algorithm is 10.83 pm, while the maximum temperature drift compensation error of the AdaBoost-SVM based on time weight is reduced to 7.04 pm. The results show that the classic AdaBoost-SVM algorithm's maximum error is approximately 11.57 pm, whereas the maximum error of the AdaBoost-SVM based on time weight is only approximately 4.05 pm. The strategy suggested in this research, however, outperforms unoptimized machine learning methods in terms of superior stability, stronger reliability, and higher prediction accuracy (Table 4). The aforementioned findings show that the method suggested in this article may successfully determine samples' temporal properties, allowing for more reasonable sample weight allocation, a decrease in model performance fluctuations, and an increase in model accuracy.ConclusionsFirst, the high link between the temperature drift sequence data before and after is thoroughly taken into account in this article. The ratio of new and old samples is altered by applying various new weights at various time points, which makes the distribution of sample weights more logical and enhances the model's performance. The experiment next establishes a nonlinear model between the filter surface temperature and output drift error using the spectral locations of three reference gratings as input features. Experiments are carried out on two datasets with different temperature change patterns, and the results reveal that the first dataset does not fully comply with the more important rule of closer samples in general time series proposed in this article due to the short-term fluctuation of temperature changes, so the performance improvement of the model is not significant; the temperature change in the second dataset demonstrates a monotonic cooling trend with apparent gradients, which is more consistent with the more important principles of closer samples, and the performance gain is more significant. Unlike typical hardware techniques, the method suggested in this paper does not require any additional hardware, resulting in a novel approach to temperature drift compensation of tunable filters.

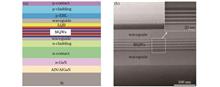

ObjectiveAlthough visible light communication has become a research hotspot, its development continues to focus on how to improve the transmission rate, transmission distance, and equipment utilization. The development of metal organic chemical vapor deposition technology leads to micro-light-emitting diodes (micro-LEDs) and other high-performance Ⅲ‑nitride devices. Compared with the white commercial LEDs, the micro-LED has the advantage of high modulation bandwidth, high brightness, and low coherence in the visible light communication (VLC). A variety of optical wireless transmissions using multiple quantum well LEDs or photodetectors has recently been reported. Considering that miniature high-speed visible light communication using LEDs is a potential complementary technology for dual-functional wireless communication network towards 6G, we propose that GaN-based multiple quantum well (MQW) diodes on the silicon substrate can simultaneously emit and detect light, which in practice can perform transmitting devices and receiving devices simultaneously in the VLC.MethodsBased on the schematic of the cross-sectional structure of the InGaN/AlGaN diodes, we design and fabricate a Si-substrate micro-ring light-emitting diode (MR-LED) using a standard semiconductor process. We begin by evaluating the photoelectrical performance and communication performance of MR-LED. The optoelectronic characteristics of the MR-LED including I-V relation, electroluminescence, and the response curve of the LED are measured by an Agilent Instrument B1500A source meter and an Oriel Instrument IQE-200 B quantum efficiency system. Subsequently, for characterizing the communication performance, we propose out-of-plane visible light communication where a Hamamatsu C12702-11 photodiode module detects spatial modulated light emission by MR-LED, and the MR-LED pluses its light in coded pseudorandom binary sequence signals or carries image information. The photogenerated electron-hole pairs lead to an induced photocurrent when we employ a 375 nm and 20 mW laser beam to illuminate the MR-LED. We extract the signals detected by MR-LED. When the diode is turned on with external illumination, the measured current is a summation of the driving current and photocurrent. In this situation, the diode simultaneously emits and detects light. When appropriately biased and illuminated, the induced photocurrent is distinguishable from the driving current. We can then extract the photocurrent signal for analysis and implement a spatial full-duplex communication system.Results and DiscussionsAccording to the photoelectrical performance of MR-LED, the turn-on voltage of the diode is 2.8 V, and the dominant EL peak is measured at approximately 379.4 nm and an injection current of 5 mA. The overlap area between the luminescence spectrum and the detection spectrum of the MR-LED is 20 nm, which proves that the communication system of simultaneous light transmission and light reception is feasible from an optical point of view (Fig. 4). The MR-LED is observed to provide a -3 dB frequency response exceeding 66.8 MHz, and thus is suitable for high-speed VLC. The external photodiodes detect the spatial light emission to convert the photos back into electrons at a rate of 150 Mbit/s. The KEYSIGHT DSOS604A digital storage oscilloscope shows resolved eye diagrams at the rate of 150 Mbit/s (Fig. 5). In optical wireless image transmission systems, MR-LED emits signals carrying the image information. The signal received by the photodetector is amplified and then restored in MATLAB, and an eye diagram is displayed on the oscilloscope (Fig. 6). As a receiver, the MR-LED based on negative voltage of -2 ,-4,and -6 V detects the 375 nm laser modulated light signal. The received signal amplitude is around 150, 280, and 350 mV respectively. Therefore, the higher negative bias voltage loaded on the MR-LED leads to better detection performance of the MR-LED. When biased at 4.15 V, the diode as a receiver operating in the simultaneous emission-detection mode can still receive different frequency laser signals. As the frequency of the external light signal increases, the amplitude of the received signal is distorted when the MR-LED is emitting light. The amplitude of the received signal increases from 38.8 mV to 110.8 mV as the Vbias rises from 3.85 V to 4.15 V (Fig. 7). Above the turn-on voltage of 2.8 V, the increase in the biased voltage slightly influences the amplitude of the received signals. The results show that the MQW-diode can sense light in either the detector or emitter mode, indicating the possibility of spatial full-duplex communication using visible light.ConclusionsWe propose, fabricate, and characterize GaN-based MQW diodes with micro-ring geometry. Due to the spectral overlap between the emission and absorption spectra, a multifunctional MR-LED allows light emission and detection simultaneously. As a transmitter, the MR-LED demonstrates out-of-plane data transmission at 150 Mbit/s using on-off keying modulation. The optical wireless transmission of image data is also implemented by software processing. As a receiver, whether illuminated or not, the MR-LED can detect free-space optical signals under different bias voltages. The realization of space full-duplex communication shows that the multi-functional MR-LED can reduce material costs and processing costs in a miniature high-speed VLC system.

ObjectiveDuring shale gas exploitation, a distributed optical fiber acoustic sensing system (DAS) based on phase-sensitive optical time domain reflectometry (Φ-OTDR) is a commonly employed solution for monitoring microseismic waves generated during hydraulic fracturing operations. Signal-to-noise ratio (SNR) is an important parameter reflecting the performance of the Φ-OTDR system, and obtaining microseismic signals with good SNR is the basis for monitoring the fracturing effect of shale gas. However, due to the thermal noise and scattering noise of the photodetector, the phase noise and frequency drift of the laser, and the environmental noise, the SNR of the Φ-OTDR system will deteriorate, resulting in difficult vibration localization and distorted phase signal obtained by demodulation. The solution to this problem is essential for broad applications of Φ-OTDR systems in the engineering field.MethodsTo improve the SNR of vibration signals measured by Φ-OTDR systems, we propose a vibration signal denoising method based on variational mode decomposition (VMD) and mutual information (MI). The in-phase orthogonality (I/Q) demodulated phase signal is further processed, and the number of VMD layers K is determined by the scaling index calculated by detrended fluctuation analysis (DFA). The process of the DFA method is as follows. First, the input noisy signal is decomposed into K(K=1,2,3…) IMF components by VMD, and then the scaling index of each mode is estimated by DFA. The relationship between the number of decomposition layers K and the scaling index is K=argmaxKnum(α1:K≥θ)=J,K=1,2,3,⋯, where the parameter Jis determined by the scaling index of the input noisy signal. When the value of K is determined, the MI between the IMF components generated by the K-layer VMD and the input noisy signal is calculated. The mean value of the normalized MI of each component and the input signal is taken as the threshold value. Additionally, when the normalized MI of a component and the input signal is greater than this threshold value, the component is considered to be a correlated mode, otherwise it is a non-correlated one. The distortion and noise of the phase signal are suppressed by discarding the non-correlated modes determined by the MI method.Results and DiscussionsA coherent detection Φ-OTDR system is set up to verify the denoising effect of the VMD-MI method. The 500 Hz single-frequency vibration signal (Fig. 6) and the 500, 1000, and 1500 Hz multi-frequency vibration signals (Fig. 10) are processed by VMD, wavelet denoising (Wavelet), empirical mode decomposition (EMD), and complete ensemble empirical mode decomposition with adaptive noise (CEEMDAN). For the 500 Hz single-frequency vibration signal, the number of decomposition layers K is determined as 4 by the DFA method, and the MI between each component and the original phase signal is calculated (Fig. 7) to determine IMF3 as the correlated mode to be retained, and the remaining components are discarded as non-correlated modes. For the original phase signal with the SNR of 18.34 dB (Fig. 6), the proposed method improves the SNR to 41.45 dB, which is significantly better than the 18.46, 34.87, and 38.60 dB of the Wavelet, EMD, and CEEMDAN methods, respectively (Fig. 8). For the multi-frequency vibration signals of 500, 1000, and 1500 Hz, the number of decomposition layers K is determined to be 7 by the DFA method, and the IMF3, IMF4, IMF5, and IMF6 are determined to be correlated modes and the remaining components are non-correlated modes by the MI method (Fig. 9). Meanwhile, the noise reduction is reduced by discarding the non-correlated modes. For the original phase signal with SNR of 18.82, 20.38, and 17.41 dB, the proposed method improves the SNR to 32.28, 33.77, and 30.68 dB respectively, significantly better than Wavelet, EMD, and CEEMDAN methods (Fig. 10).ConclusionsThe DAS system based on Φ-OTDR is a promising detection device in the microseismic monitoring of shale gas fractures. The SNR is an important criterion to evaluate the quality of the detection signal, and enhancing the SNR is significant to improve the overall sensing performance of the DAS system. We propose a method to improve the SNR of Φ-OTDR based on VMD. The DFA method is adopted to determine the appropriate number of decomposition layers, and the correlated modes are selected and retained by calculating the MI between the components obtained from VMD and the original phase signal to achieve noise removal. The experimental results show that the VMD-MI algorithm is significantly better than Wavelet, EMD, and CEEMDAN in improving the SNR of 500 Hz single-frequency vibration signal, and 500, 1000, and 1500 Hz multi-frequency vibration signals. This proves the effectiveness and superiority of the proposed method in improving the measurement performance of the Φ-OTDR system. Meanwhile, this method can help acquire high-fidelity microseismic information in microseismic monitoring of shale gas.

ObjectiveSurface-enhanced Raman scattering (SERS) is a powerful analytical tool that can provide molecular vibrational fingerprint information. Due to its unique analytical advantages, SERS has been widely applied in various fields such as biomedical research, environmental monitoring, and food analysis. During the development of SERS technology, silver nanoparticles have often been used as SERS substrates due to their excellent localized surface plasmon resonance properties. However, their susceptibility to oxidation poses a significant limitation in the construction of nano-probes for practical applications. In contrast, gold nanoparticles have better chemical stability and lower biotoxicity but relatively weaker Raman signal enhancement capability. Therefore, bimetallic SERS substrates combining gold and silver are characterized by high sensitivity and stability. Compared with traditional substrates, tapered optical fibers not only have the advantages of in-situ detection and remote signal transmission but also have great potential for enhancing laser reflection and improving light collection capability, which is beneficial for collecting weak Raman signals and achieving lower detection limits. Therefore, we proposed a simple and cost-effective bimetallic tapered optical fiber SERS probe. This probe utilized a light-induced method to deposit gold and silver nanoparticles on the surface of the tapered optical fiber, providing high sensitivity and good stability.MethodsThe bimetallic tapered optical fiber SERS probe was prepared by using a light-induced method in this study. First, the tapered fiber was prepared by using the fiber fusion tapering machine. After clamping the processed fiber onto the fiber holder, the fiber fusion tapering machine was started to initiate the tapering process. Once the machine stopped operating, two tapered optical fibers were obtained. Second, gold and silver nanoparticles with an approximate diameter of 50 nm were prepared by using a chemical reduction method. Finally, the bimetallic tapered optical fiber SERS probe was prepared through the light-induced method. The laser beam emitted by a helium-neon laser was coupled into the tapered optical fiber. Subsequently, the tapered end was immersed in a mixed solution of Ag sol and Au sol (volume ratio of 1∶1, both 0.3 mL) for 60 seconds. Then, the tapered end was moved from the solution to the air and kept in that position for 90 seconds while the laser was still operating. This process was repeated 15 times [Fig. 1(a)]. At the end of the entire procedure, the bimetallic tapered optical fiber SERS probe was obtained. The surface morphology of the tapered optical fiber was characterized by scanning electron microscopy (SEM), and the performance of the optical fiber probe was tested by a confocal Raman spectrometer.Results and DiscussionsIn this study, SEM analysis [Fig. 2(a1) and (a2)] of the prepared samples revealed a uniform distribution of metal nanoparticles on the fiber surface, exhibiting a mostly monolayer arrangement. The relative mass percentages of Ag and Au elements were found to be 2.36% and 9.21%, respectively [Fig. 2(b) and (c)]. The average particle size of the metal particles on the sample was 49 nm, with an average gap of 6.8 nm [Fig. 2(e1) and (e2)]. In the paper, Rhodamine 6G (R6G) was selected as the analyte molecule to evaluate the SERS performance of the prepared samples. The bimetallic tapered optical fiber SERS probe was immersed in R6G solutions with concentrations ranging from 10-8 to 10-10 mol/L for three minutes respectively. After removal from the solution and natural drying, Raman signal detection was performed, and the obtained enhancement factor (AEF) for the samples reached 2.07×108. To demonstrate the capability of detecting non-single molecule analytes, the samples were immersed in a mixed solution of 10-6 mol/L R6G and 10-4 mol/L crystal violet (CV) for three minutes. After removal from the solution and natural drying, the detection was conducted, and the results indicated that the unique Raman peaks of different probe molecules could be detected in the mixed solution [Fig. 6(b)]. To demonstrate the excellent stability of the bimetallic tapered optical fiber SERS probe, it was placed for different durations of 24 hours, 48 hours, 72 hours, and 96 hours. Afterward, a comparison was made by using Raman testing of 10-7 mol/L R6G. The results indicated that the sample exhibited good stability (Fig. 7).ConclusionsIn this study, to enhance the enriched density of metal nanoparticles on the surface of optical fibers and improve the stability of the Raman-enhancing properties of the optical fiber SERS composite structure, we proposed a bimetallic (gold and silver) tapered optical fiber SERS probe structure. First, gold and silver nanoparticles with uniform morphology were prepared using the chemical reduction method. Then, the bimetallic particles were enriched on the tapered optical fiber using light-induced methods. The prepared optical fiber SERS probe exhibited excellent experimental performance. In this study, the lowest detected concentration of R6G was as low as 10-10 mol/L, and the enhancement factor was 2.07×108; compared with single-metal silver optical fiber SERS probes, the stability of the bimetallic sample was improved by seven times (after 96 hours). The bimetallic tapered optical fiber SERS probe is expected to be applied in in-situ and remote detection in the future. The next research direction is to explore the key process of controllable double metal modified optical fiber, so as to further optimize the detection sensitivity and stability of the sample.

ObjectiveThe rapid evolution of bionic flexible tactile sensors is driven by the overarching goal of emulating human tactile perception to augment robots' perceptual acuity. Conventional electric sensing paradigms grapple with a myriad of challenges, including elevated manufacturing costs and susceptibility to signal interference. Meanwhile, due to the small size, strong flexibility, and high sensitivity, optical sensing modalities are pushing micro/nano fibers (MNFs) into the spotlight. Domestically, the Zhejiang Lab is at the forefront of developing various MNF-based sensors, enabling single/dual-modal detection for applications in human-machine interaction and physiological parameter monitoring. Nevertheless, the challenge of balancing sensitivity and operational range remains unresolved in current methods, compounded by susceptibility to wear-related issues. Thus, we introduce a micro/nano fiber-based flexible tactile sensor unit inspired by fingertip skin microstructures (FIMF). By simulating the biological microstructures and tactile conduction mechanisms of fingertip skin, FIMF achieves the detection of mechanical stimuli and object feature recognition. The advanced sensor structure and functional attributes are significant for applications in flexible bionic devices and advanced robotics technology.MethodsFirstly, the proposed flexible tactile sensing unit FIMF is inspired by the microstructure of fingertip skin and is achieved by embedding an MNF between two layers of polydimethylsiloxane (PDMS) films. The structure is further enhanced by introducing two layers of elastic resin annular ridges on the surface, each with varying stiffness. This design aims to replicate the intricate microstructure of biological fingertip skin and its underlying tactile conduction mechanism. Subsequently, we delve into the influence of PDMS film thickness and the dimensions of the annular ridges on the tactile pressure response of the FIMF sensor. Based on meticulous simulation results, the optimal sensor parameters are identified with a PDMS film thickness of 50 µm, an upper annular ridge thickness of 0.2 mm, and a lower annular ridge thickness of 0.4 mm. Furthermore, we extensively examine the FIMF sensor's response to diverse tactile stimuli, including static and dynamic pressure, and vibrations. Finally, the FIMF's ability to discern object hardness and surface textures is investigated by employing a synergistic approach integrating the mechanical finger's travel distance and the FIMF force feedback to discern object hardness characteristics. Meanwhile, we conduct waveform analysis of transmitted intensity changes over time to perceive and compute object texture. The pursuit of further insight into different textures is accomplished by the application of short-time Fourier transform (STFT) to extract frequency domain features.Results and DiscussionsThe experimental findings underscore that the devised FIMF inspired by the microstructures of fingertip skin presents an amalgamation of wide-ranging dynamic detection capabilities and high sensitivity. Remarkably, it boasts response and recovery times of less than 100 ms, providing the sensor with the capacity to swiftly discern mechanical stimuli (Fig. 7). Furthermore, the sensor exhibits exceptional robustness and elevated static/dynamic stability, which is a testament to the robust encapsulation of its diverse structural layers (Fig. 8). Expanding its sensing range is proven instrumental in significantly enhancing the sensitivity for minute pressure ranges (0-2 N), thereby achieving an enhancement of approximately fourfold compared to recently reported MNF tactile sensors. A pivotal facet arises from the microstructure integration to amplify tactile mechanical stimuli and translate them into MNF deformations. This innovative approach does not need to employ tapering processes that would require reducing the MNF diameter to below 2 µm, which not only streamlines manufacturing but also augments the overall structural resilience (Table 1). In object hardness/texture perception, the FIMF divulges a pertinent trait that the transmitted intensity diminishes with the escalating hardness. This phenomenon arises because stiffer objects induce greater forces and stresses during contact, thus culminating in a more conspicuous attenuation of optical intensity (Fig. 9). The FIMF employs a spatial frequency-based characterization for discerning object texture, and the texture wavelength is derived by dividing the sliding speed by the dominant frequency. Additionally, the STFT of the transmitted light intensity signal provides a richer depiction of intensity fluctuations over time. During scans across regular surface patterns, the light intensity signal engenders periodic motifs at frequencies below 10 Hz. Notably, the number and positioning of these motifs amplify in tandem with increased scanning speeds in the temporal domain (Fig. 11).ConclusionsWe propose a novel micro-nano optical fiber flexible tactile pressure sensor inspired by the fingertip skin microstructure. This sensor combines force sensing with object hardness/texture detection capabilities. The sensor's force conduction performance is enhanced by bionic design to offer a wide detection range (0-16 N), high sensitivity (20.58% N-1), short response time (86 ms), extended lifespan, and low cost. By demonstrating its functionality, we directly connect this soft sensor to a robotic manipulator, enabling it to differentiate between soft and hard objects, perceive object textures, and measure gripping forces. Consequently, this sensor is suitable for robotic gripping operations. Thus, the proposed sensor possesses structural and functional features reminiscent of human fingertip skin and has promising potential for applications in bionic artificial skin and advanced robotics technology.

ObjectiveDue to its non-invasive, high-resolution, and real-time imaging capabilities, optical scanning microscopy has been widely applied in biomedicine, physical chemistry, and materials science to realize three-dimensional imaging detection of cells, tissue analysis, and microstructure samples. Beam scanning systems play an important role in optical microscopy. When scanners are leveraged for scanning and imaging, it is necessary to add a relay system composed of a scan lens and tube lens between the scanner and the objective lens. As a result, the beam does not deviate from the rear pupil of the objective lens to ensure the imaging quality. However, traditional relay systems adopt lenses design, and their inherent defects can cause optical aberration, which affects the resolution of optical microscopy imaging systems. Commercial scan lens and tube lens are optimized to suppress aberrations, but they feature high price, large volume, and long focal length, making them unsuitable for miniaturized design occasions. The relay system composed of doublet lenses can be miniaturized according to requirements, but it is difficult to effectively suppress aberrations, especially under large beam scanning angles. The utilization of parabolic reflectors to form a relay system can effectively eliminate the chromatic aberration, but the coma aberration of the reflection system is difficult to eliminate. This is the same as the lens relay system, which requires a one-to-one correspondence of the focal position and high installation accuracy. Therefore, how to design aberration-free beam scanning systems with miniaturization and simple structures is still an important problem facing optical microscopy imaging technology.MethodsAiming at the large size, large aberration, and high alignment accuracy of the relay systems in existing beam scanning systems, we propose a dual two-dimensional (2D) MEMS mirror beam scanning method. This method adopts two 2D MEMS mirrors to realize beam telecentric scanning. One mirror replaces the scan lens and tube lens in the traditional relay system to avoid the introduction of aberrations, reduce the system size, and finally design an aberration-free beam scanning system with miniaturization and simple structures. There is a one-to-one correspondence between the scanning angle of the scanning beam and the tilt angle of the MEMS mirrors. By controlling two MEMS mirrors to cooperate with different tilt angles, this method can make the excitation beam arrive the rear pupil of the objective lens at different angles to complete the 2D lateral scanning.Results and DiscussionsTo obtain the relationship between the angle of the scanning beam and the deflection angle of the two MEMS mirrors, we build a mathematical model of the dual 2D MEMS mirror scanning system, and analyze it in detail. First, the relationship between the tilt angle of the MEMS mirror and the beam scanning angle is analyzed. Under different d/l ratios (with dbeing the distance between two MEMS mirrors,and l being the distance between the second MEMS mirror and the rear pupil of the objective), the tilt angle of the MEMS mirror and the beam scanning angle have different relations. It is found that the dual 2D MEMS mirror scanning system can achieve a large angle beam scanning by adjusting the d/lvalue when the deflection angle of the first MEMS mirror is constant (Fig. 4). Additionally, the values of d and l can be selected arbitrarily, which is flexible in design and convenient for system miniaturization. Then, the aberrations of the traditional relay system and the dual 2D MEMS mirror scanning system are analyzed by Zemax optical design software, and their performances are compared. According to the simulation results, the dual 2D MEMS mirror scanning system avoids the introduction of aberrations and has better imaging quality than the traditional relay system (Figs. 6-7). Meanwhile, the system structure is simple, easier to adjust, and can avoid the influence of installation error on the system (Fig. 10). Finally, based on this method, a miniaturized confocal scanning microscope is constructed, and the step sample is utilized to obtain the height and period information of the sample (Fig. 13), which verifies the feasibility of the method.ConclusionsWe propose a dual 2D MEMS mirror beam scanning method, which leverages a 2D MEMS mirror instead of the traditional relay system to design an aberration-free beam scanning system. In addition, the dual 2D MEMS mirror structure has no requirements for the focus position, making it convenient for miniaturization design and installation. A dual 2D MEMS mirror scanning confocal microscope is constructed based on this method, and the feasibility of this method is verified by testing standard step samples. This method provides a new beam scanning approach for optical microscopy, which is of great significance for optical microscopic systems with strict aberration requirements such as confocal microscopes, two-photon microscopes, optical coherence scanning microscopes, and chromatic confocal microscopes.

ObjectiveThe action of implementing computational photography for targets out of the field of view (FOV) such as behind the barrier by a reflective relay surface is defined as non-line-of-sight (NLOS) imaging. NLOS technology has a promising future in the fields of medicine, road safety, and scientific research due to its ability to expand the human's FOV in scenarios where a direct view is impossible to obtain by devices or human eyes. The present NLOS technology mostly includes transient imaging, range-gated imaging, and passive pattern imaging, and they are mostly dedicated to Lambert reflector relay surfaces. However, the materials in common scenarios are usually non-Lambertian reflectors, whose scattering characteristics are random and diverse, with different degradation characteristics of NLOS imaging results. The traditional methods always have complex system structures, slow imaging speeds, and high costs. We propose a new NLOS method based on the time of flight (TOF) camera, which requires lower maintenance costs, indicates higher availability than the transient imaging and range-gated imaging, and can also realize 3D reconstruction compared with the passive pattern. The NLOS 3D imaging can be achieved for the relay surface with non-Lambertian scattering characteristics by the proposed method to provide theoretical references and an experimental basis for the application.MethodsThe NLOS images collected by the reflective relay surface suffer from serious degradation of which the process is directly determined by the optical scattering characteristics of the relay surface. The following theoretical hypothesis is provided by analyzing the propagation method of the target optical signal (Fig. 1). The degraded images are formed from convolution between the clear image and the relay surface with scattering characteristics. As a result, reconstruction for clear images can be realized through the deconvolution of degraded images if scattering characteristics of the relay surface are obtained. Depth imaging can be achieved for the TOF camera by calculating the phase differences between the incoherently modulated illumination and the returned light signal from targets. The phase difference calculation relies on the signal intensity, and the relay surface scatters the optical signal and degrades the depth image. Therefore, the implementation method of the reconstruction algorithm is as follows. First, scattering characteristics of the relay surface are obtained with the genetic algorithm (GA) through clear and degraded images respectively of the given target. Second, clear images are reconstructed through deconvolution for degraded images of other targets based on the above scattering characteristics. Finally, the pixel value of the depth image for the TOF camera is the floating number which is different from common 2D images. Thus, most of the traditional imaging method evaluation is unsuitable for depth evaluation. A kind of difference image is employed for subjective evaluation and mean square error (MSE) for objective evaluation.Results and DiscussionsWe put forward an NLOS depth imaging method based on the TOF camera. The scattering characteristics of the relay surface should be solved first to realize the reconstruction through deconvolution. However, the scattering characteristics of the relay surface are completely unknown in the NLOS mathematical model. For any of two different materials (Fig. 5), several different scales of matrices are adopted to express them in the algorithm, while the GA calculates matrix value by the fitness function [Eq. (8)]. The results are approximate numerical values of scattering characteristics of the relay surface (Fig. 7), and the 2D matrix with minimum fitness is set to be the deconvolution kernel. Then reconstruction is conducted for degraded NLOS depth images of the unknown target by Lucy-Richardson (LR) deconvolution. The experimental results reveal the effectiveness and feasibility of the algorithm both from subjective and objective evaluation (Figs. 8 and 9), but the results are not always convergent without complete expression of scattering characteristics in the 2D matrix. Although the solving process takes a long time, the calculated matrix of scattering characteristics can be applied. However, the GA-LR algorithm provides a low-cost NLOS 3D imaging technique, which can be directly applied to practical scenarios if the scattering characteristics database of common materials is established in advance. Therefore, the proposed method both provides theoretical guidance and an experimental basis for NLOS imaging, and also provides solutions to practical problems, with economic significance.ConclusionsBased on a TOF camera, we propose a 3D imaging method for NLOS targets. By analyzing the optical phenomena, we put forward a hypothesis that the degraded 3D image results from the clear 3D image convolution with some unknown scattering process, where the unknown scattering process is caused by the relay surface. Therefore, the GA is adopted to solve the approximate optical model of the relay surface, and then the LR deconvolution algorithm is to perform the 3D reconstruction of NLOS targets. The experimental results show that the NLOS targets are well reconstructed from both subjective and objective aspects. In practical applications, after the approximation model of the relay surface is determined, this algorithm can quickly capture the NLOS target and perform 3D imaging when a TOF camera is utilized, which indicates high application significance. In the future, deep neural networks may be leveraged to fit the degradation process to realize reconstruction.

The proposed method adopts a Fourier single-pixel imaging algorithm that includes a differential operation to enable the suppression of out-of-focus background noise. However, due to the utilization of single-pixel imaging, the measurement number of the proposed method is more than that of the traditional multi-mode microscopic imaging method. Through taking the experimental results in our paper as an example and employing Intel(R) Core(TM) i7-10700 CPU @ 2.90 GHz, 32.0 GB memory, it takes about 4 min to reconstruct four multi-mode images with a pixel count of 850×850, and the calculation time depends on the image number of pixels. Therefore, the proposed method is not suitable for dynamic imaging scenes.ObjectiveIn the manufacturing of micro-nano devices such as semiconductor chips, their morphology characterization is helpful for manufacturing process evaluation and defect detection. To obtain complete information on the sample to be tested, one has to use bright field microscopes and dark field microscopes for joint characterization of multiple imaging modes. However, the existing methods to achieve multi-mode imaging need to change the experimental device or adopt a different imaging system, which leads to different fields of view of the acquired multi-mode images and is not conducive to comprehensively analyzing the samples to be tested by synthesizing the multi-mode imaging results. Therefore, it is necessary to develop multi-mode microscopic imaging technology to deal with the above problems. For example, microscopes based on LED array light source and multi-mode microscopic imaging technology using spatial light modulators are utilized to perform different filtering in the spectral plane of traditional microscopes.MethodsOur paper proposes a multi-mode microscopic imaging technology based on the single-pixel imaging principle. It employs wide-field structured light to encode the spatial information of the sample and then leverages each pixel of the camera as a single-pixel detector to reconstruct an Ariy image. Different points on the Airy disk image correspond to different orders of signals diffracted by different object points. Therefore, by designing different digital pinholes to extract the values at different positions on the Airy disk image and arranging them according to the camera pixel coordinates, multi-mode images can be reconstructed, such as bright field images, bias images, and dark field images.Results and DiscussionsTo design the digital pinhole to extract the signals of different diffraction orders of the sample, we should calibrate the conjugate relationship between the camera and the pixels of the spatial light modulator. We adopt an affine matrix to represent the correspondence between the camera and the spatial light modulator, and the calibrated reprojection error is shown in Fig. 7. After the affine matrix is calibrated, digital pinholes can be generated according to the calibrated affine matrix. The proposed method is employed to perform multi-modal imaging on a circuit chip and obtain the multi-modal results shown in Fig. 10. However, due to the directionality of digital pinholes, the reconstructed multimodal results are also anisotropic. To obtain isotropic multi-mode results, we design digital pinholes with the same radius and different directions as shown in Fig. 12 to obtain multiple images and then synthesize these images to obtain isotropic results. The isotropic multi-mode results are shown in Fig. 13. We have also verified through the resolution board experiment (Fig. 14) that the contrast of the bright field images reconstructed by extracting the bias signals is higher than that of the bright field images constructed by extracting the zero-frequency signals.ConclusionsOur paper proposes a multi-mode microscopic imaging technique based on the single-pixel imaging principle. It adopts each pixel of the camera as a single-pixel detector to reconstruct an Airy disk image, and the values at different positions in the Airy disk image represent different orders of signals diffracted by different object points. The experimental results show that this technology does not need to change the experimental device or replace different microscopes. By designing different digital pinholes, the light intensity values at different positions can be extracted from the single-pixel reconstructed Airy disk images. These light intensity values correspond to different orders of diffraction signals from different object points. By arranging these extracted signals according to the camera pixel coordinates, images of different modes can be reconstructed, such as bright field imaging, bias imaging, and dark field imaging. The fields of view of these multi-mode images are the same, which is conducive to the comprehensive analysis and acquisition of the complete shape characteristics of the samples. As a new computational imaging method, the proposed method is expected to be applied to the offline characterization of micro-nano devices.

ObjectiveThe diagnosis technology of laser-driven inertial confinement fusion (ICF) is an essential research direction to promote the development of controllable nuclear fusion. Specifically, the velocity interferometer system for any reflector (VISAR) is the most extensively employed diagnostic device of ICF, and it is adopted to record one-dimensional wavefront information with picosecond time resolution generated by ICF. However, the information is only confined to the velocity changes of a line on the target surface and cannot provide two-dimensional (2D) velocity field information of all locations on the target surface. A new type of 2D-VISAR diagnosis system is obtained by combining the compressed ultra-fast photography (CUP) system which can implement 2D ultra-fast imaging with line-VISAR and is applied to ICF diagnosis. The compressed 2D images with high time resolution are obtained, and the 2D stripes of time-varying shock waves are obtained by the inversion algorithm. However, the current mainstream inversion algorithms are readily affected by their regularization parameters, with unstable imaging effects. Therefore, we propose a compressed image inversion algorithm based on variable-accelerated generalized alternating projection (GAP) to optimize the CUP-VISAR inversion effect.MethodsWe put forward a novel CUP-VISAR compressed image inversion algorithm. First, considering the strong low-rank and gradient sparsity characteristics of 2D fringe images, low rank (LR) regularization and total variation (TV) regularization are employed as the prior information of image processing, and the problem is transformed into an optimization problem based on double prior constraints of LR and TV. The GAP algorithm is utilized as an iterative solution framework to decompose the objective optimization problem into two sub-problems, and TV and LR are extended to the sub-problems respectively to give full expression to the synergistic effect of double prior constraints. Finally, considering the influence of error accumulation in iterative GAP under chaotic images, the structure of the algorithm is optimized and improved, and the variable-accelerated processing is proposed to reduce iterative error accumulation.Results and DiscussionsIn the simulation experiment, the shock wave velocity recorded by line-VISAR is extracted to generate a 2D simulation image (Fig. 3). Furthermore, the 2D-VISAR simulation fringe image is extended in the time dimension as the original data (Fig. 4), which is encoded, chopped and compressed to obtain a 2D image with 60 compressed frames (Fig. 6). The inversion effect is simulated and contrasted in noise-free and noisy environments. The results show that compared with the existing algorithms, the average peak signal-to-noise ratio of the proposed algorithm is increased by 11.0 dB and the average structural similarity is increased by 11.4% in the case of no noise (Fig. 8). In the case of noise, the algorithm has stable inversion effect and sound anti-noise ability (Table 1 and Fig. 9). In the real experiment, the experimental optical path is set up (Fig. 10), the CUP-VISAR branch system is adopted to obtain coded images and 2D compressed images, and the line-VISAR branch system is leveraged to obtain 1D shock wave velocity data as the experimental control group (Fig. 11). In the CUP system, the DMD coding aperture is 8×8, the stripe camera slit is entirely opened (about 5 mm), and the temporal resolution is 200 ns. Pulse width of the probe is 5 ns, the image detection frame rate is 5 frames/ns, and the pixel size of the compressed image is 349×788, with the number of compressed frames being 25. The results show that compared with the actual compressed images, the proposed algorithm can still invert the 2D shock wave periphery with clear contour (Figs. 12 and 13). The inversion results are transformed into line-VISAR images and the one-dimensional shock wave velocity is extracted for comparison. Compared with that of the line-VISAR shock wave velocity, the maximal relative error of the inversion results of the proposed algorithm decreases from 20.38% to 11.66%, with a reduction of 8.72% (Fig. 15).ConclusionsIn the proposed CUP-VISAR compressed image inversion algorithm, we introduce LR and TV regularization terms according to the characteristics of fringe images and build a double prior constraint optimization model to promote piecewise smoothing and preserve image features and details. Then, we utilize the GAP framework to solve the optimization model iteratively. Finally, we propose a variable-accelerated method to enhance the anti-noise ability of the algorithm for addressing the error accumulation problem caused by noise factors in GAP iteration. The experimental results show that the proposed algorithm performs well in subjective visual and objective evaluation parameters for the inversion quality of CUP-VISAR compressed images. This means that the algorithm can retain image structure details and smooth characteristics, with sound denoising performance, which verifies the feasibility of the proposed algorithm in CUP-VISAR.

ObjectiveWith the vigorous development of technology in optics and semiconductors, transparent devices with smooth surfaces such as high-precision optical glass are widely employed in semiconductor and other fields. During grinding and polishing, optical glass inevitably produces a large number of scratches, pockmarks, bubbles, pollution particles, microcracks, and other defects in the subsurface. Micron/nanoscale subsurface defects will reduce the physical properties of transparent samples such as optical components, and seriously affect the development of processing and manufacturing technologies in optics and semiconductors. How to detect the subsurface defects of transparent optical components with high precision and provide key parameters for the high-precision preparation of transparent optical components has become an urgent problem in optical inspection. Subsurface defect detection technologies include destructive and non-destructive ones. Destructive detection technologies are simple to operate, and can intuitively and effectively observe the detection results, but they will make the test defects and the actual defects different. Therefore, the existing subsurface defect detection methods mainly focus on non-destructive detection technologies, including total internal reflection microscopy (TIRM), optical coherence tomography (OCT), and laser confocal scanning microscopy (CLSM), but these detection technologies cannot take into account both resolution and detection speed. Through-focus scanning optical microscopy (TSOM) is a model-based optical computational imaging method that can achieve non-contact, non-destructive, and fast measurement of three-dimensional nanostructures. TSOM features high sensitivity, simple hardware system, and sensitivity to nanoscale size changes, and it is not limited by the optical diffraction limit and can conduct online detection. To quickly and non-destructively detect subsurface defects of transparent samples, we propose a new method for detecting micronscale defects in the subsurface of optical components by TSOM and explore it in detail.MethodsThe incident light from the halogen lamp source is irradiated to the subsurface of the sample. Scattering occurs where a defect exists and the scattering light is imaged by the objective lens to the CCD target detector. This method is based on traditional light microscopy and equipped with a high-precision piezoelectric ceramic displacement stage to control the Z movement of the sample, with the movement positioning accuracy of 1 nm. A series of optical images of the subsurface defects are obtained at a certain range of defocus positions from above to below the focus point by scanning along the propagation direction of the light field (Z direction). The images series are stacked according to spatial positions to form an image cube (TSOM cube). Then, the image cube is sliced along the Z direction to generate the TSOM image. The TSOM image is processed through data analysis algorithms to obtain three-dimensional information such as size, shape, and position of micronscale and nanoscale structures, and the target is located by the maximum gray value.Results and DiscussionsThe method can be adopted to detect and locate micronscale defects (Fig. 5). As the refractive index of scattered light is different in different materials, compensation and correction of the refractive index are necessary to obtain the actual depth of the defects (Fig. 7). According to the refraction law, the compensation and correction formula for the refractive index can be derived [Eq. (1)]. After TSOM scanning, the actual depth of the subsurface defects can be calculated based on Eq. (1). Experimental comparison and simulation (Fig. 10) show that larger subsurface defects exhibit volume effects. The position of the maximum light intensity corresponding to the defect in TSOM scanning is point p at the intersection of the radius parallel to the optical axis and its tangent (Fig. 11). To accurately determine the depth from the sample surface to the center of the defect, we should add the defect radius to the depth calculated in the TSOM scanning. After the radius correction, the average depth of the defect is 2000.3 μm, with a standard deviation of 2.4 μm and a relative standard deviation of 0.12%. Compared with other measurement methods, the depth deviation is 1.8 μm (Table 2).ConclusionsThe TSOM method can be employed to detect micronscale subsurface defects in transparent glass and locate the defect depth with a relative standard deviation of up to 0.12%. Theoretically, when the absolute depth of subsurface defects is reduced to hundred-microns, the standard deviation of subsurface defect location is only sub-microns. When TSOM is utilized to locate the depth of subsurface defects at the micrometer scale, it is necessary to compensate and correct the refractive index to further improve the accuracy of defect depth location. When the size of the subsurface defect is large, both the simulation and experiment show that the scattering light intensity distribution of TSOM is affected by the volume effect of the defect itself, which has an important influence on the depth of locating the center of micronscale defects.

ObjectiveWith the rapid development of construction and operation scale of infrastructure such as bridges and tunnels, structural safety is becoming increasingly important. Structural health monitoring is a vital issue in structural safety, operation, and maintenance. Displacement monitoring is one of the most fundamental and routine tasks in structural health monitoring. Among various displacement measurement methods, the contact displacement measurement method is conceptually straightforward to implement. However, it requires specific environmental conditions for accurate measurements. The non-contact displacement measurement methods (e.g., level gauges and total stations) have widespread applications in engineering. However, they cannot satisfy the measurement requirement of long-span structures due to the large-scale range and high accuracy requirements. For deformation monitoring of long linear structures like bridges and tunnels, the displacement-relay series camera network method has been proven to be effective. With the increasing number of camera stations, the accumulated error can be caused due to uncertain factors such as feature extraction errors and model simplifications. It is a challenge to reduce the accumulated error of the camera network. Hence, this study aims to suppress the accumulated error effect, consequently enhancing the measurement accuracy of the camera network.MethodsWe first conduct the theoretical analysis to demonstrate the solution conditions for the displacement-relay series camera network method. Before the simulation study, the basic network configuration is defined. Then, the accumulated error effect of the camera network is investigated through numerical simulations. According to the simulation results, we propose a method based on error coefficients to reduce the accumulated error of the camera network. The error coefficient consists of the condition number of the measurement matrix and the station number of the network. Finally, we provide a direct characterization of network measurement errors to enable the investigation of cumulative effects resulting from displacement transmission errors in the camera network. The influence of benchmarks and survey marks' positions, and their numbers on the measurement accuracy of the displacement-relay camera network is thoroughly analyzed. Based on the analysis results, the camera network configuration is optimized, and an optimal distribution pattern for camera stations and mark points is advised. Finally, the feasibility of the proposed method is verified by field experiments.Results and DiscussionsWe initially discuss the fundamental principle of displacement-relay series camera network and the necessity of benchmarks through the derivation of Eqs. (5)-(10), which also paves the way for extending the theoretical model to a complex camera network. In Section 3.1, the accumulated error effect of the camera network is investigated by integrating the theoretical foundation and formula derivation from Section 2. Subsequently, a detailed discussion of the error transmission effect and error suppression method is carried out through numerical simulations for the basic configuration of the series camera network. Next, the influence of network composition parameters on the transmission error is investigated (Figs. 5 and 6). The transmission errors for the distribution positions of all benchmarks and survey marks are studied (Figs. 7 and 10). A theoretical model that reflects the transmission error of the camera network is proposed by introducing the error coefficient as an evaluation index and leveraging the highly linear correlation between the measurement error and the error coefficient [Eq. (18)]. Finally, the proposed error reduction method is verified by the observation data obtained from a long-span cable-stayed bridge.ConclusionsWe focus on the mechanism and suppression methods of transmission error in the displacement-relay series camera networks. The results show that the displacement transmission link of dual-head cameras requires at least two benchmarks. There is a positive correlation among the number of camera stations, settlement amplitude, pitch angle variation, and network transmission error, while there is a negative correlation between the number of measurement marks and network transmission error. The proposed design method of camera network error suppression based on error coefficient can guide network configuration optimization. The measurement error of the camera network is highly correlated with the defined error coefficient, and the smaller error coefficient leads to a smaller measurement error. The camera stations should be placed at benchmarks to suppress accumulated errors. Replacing the mark points at the benchmarks with camera stations shows that the error suppression effect can reach over 60%, but the error suppression effect will weaken as the number of camera stations increases. The actual bridge verification results indicate that the measurement error is suppressed by 69.13%. The mark points are advised to be placed at camera stations to suppress accumulated errors. The simulation results show that this suppression method can optimize the transmission error of the basic configuration network from 2.88 to 1.01 mm. The actual bridge verification results show that the camera error is suppressed from 10.14 to 3.07 mm.

ObjectiveIn recent years, with the development of computer vision, image processing, data fusion, and other technologies, visual measurement has been widely applied in various fields of modern industry. The inertial measurement unit (IMU) has fast response speed, good dynamic performance, and high short-term accuracy, which can well improve the robustness of visual positioning in complex industrial environments represented by large-scale equipment manufacturing sites such as shipbuilding and aerospace. However, traditional filtering-based visual-inertial fusion algorithms maintain the fusion weights of visual and inertial information unchanged. When visual observation conditions are poor, traditional algorithms will greatly reduce the accuracy of visual-inertial positioning. Therefore, in order to solve the problems of low accuracy, poor adaptability, and low robustness caused by poor visual observation conditions in complex industrial environments, we propose a visual-inertial adaptive fusion algorithm based on measurement uncertainty. To address the situation of poor visual observation conditions, we dynamically adjust the data fusion weights of the visual sensor and inertial sensor by analyzing the measurement uncertainty of visual positioning. It can greatly improve measurement accuracy while enhancing measurement robustness.MethodsIn order to complete the real-time assembly and positioning tasks of large-scale and complex equipment such as spacecraft and ship hulls, we use a wearable helmet as the carrier, combined with immersive measurement technology, and calibrate the visual-inertial system by using a three-axis precision turntable. Loosely coupled filtering is used to fuse visual and inertial information, and real-time global pose estimation of the surveyor is obtained. In this paper, the measurement uncertainty of visual positioning based on the implicit function model is analyzed. The global control point position error and the image point extraction error are taken as the input of the uncertainty propagation model, and the measurement uncertainty of visual positioning is obtained as the output. Then, the error state extended Kalman filter (ESKF) is used to achieve visual-inertial fusion localization. Updating the state of ESKF relies on the covariance matrix of observation information, which directly affects the accuracy of ESKF. We also use cameras to provide observation information, but the visual positioning results are often greatly affected by the measurement environment. When the observation condition is poor, the accuracy of visual positioning decreases, and the observation confidence in ESKF does not match the measurement uncertainty of visual positioning, resulting in the inability of ESKF to achieve optimal estimation. In order to adapt to different visual observation conditions, we establish an adaptive filtering fusion positioning model. The observation noise covariance matrix in the ESKF model is represented by the measurement uncertainty of visual positioning, so the fusion weights of visual and inertial information in the ESKF model are adaptively adjusted. When the measurement uncertainty of visual positioning is small, which means that visual positioning is accurate, the Kalman gain is large, increasing the influence of camera observation on ESKF results. When the measurement uncertainty of visual positioning is large, which means that visual positioning is inaccurate, the Kalman gain is small, reducing the influence of camera observation on ESKF results.Results and DiscussionsWe use a T-mac pose measurement system of the laser tracker and a precision three-axis turntable to experimentally verify the positioning accuracy of the proposed fusion positioning algorithm (Fig. 3). In the process of system movement, the visual positioning uncertainty is solved according to the implicit function model (Fig. 4), and it is substituted for the covariance matrix of observation information of ESKF model to obtain the results of the proposed method. In the actual measurement, the relative pose between T-mac and helmet measurement system remains fixed, but due to measurement errors, the results obtained are not fixed. The standard deviation is used to measure the dispersion degree of relative pose to evaluate the pose measurement accuracy of the helmet measurement system. Compared with the results obtained by pure visual positioning and traditional ESKF (Fig. 6), when the measurement uncertainty of visual positioning is small, and the visual observation condition of the proposed method is good (Table 2), the standard deviation of each axis angle is less than 0.04°, and the standard deviation of each axis position is less than 2 mm. All three methods can get good positioning results. When the measurement uncertainty of visual positioning is large, and the visual observation condition is poor (Table 3), the positioning results of pure visual positioning and traditional ESKF have a significant deviation. In addition, by using the proposed method, the standard deviation of each axis angle is less than 0.2°, and the standard deviation of each axis position is less than 7 mm. Compared with the traditional ESKF method, the standard deviation of the three-axis angle of the proposed method decreases by 46.4% and 28.7% except for the X-axis, and the standard deviation of the three-axis position decreases by 66.4%, 60.4%, and 43.7%ConclusionsThe industrial environment is complex, so it is difficult to ensure that visual observation is always in good condition. Pure visual positioning and traditional ESKF methods require good visual observation conditions to obtain accurate pose estimation. The visual-inertial adaptive fusion algorithm based on measurement uncertainty proposed in this paper can provide better pose fusion results than pure visual positioning and traditional ESKF methods under poor visual observation conditions. The proposed method adjusts the weight of camera observation information in a timely manner, better adapts to different observation conditions, enhances the positioning robustness of the system, and improves the accuracy of filtering-based visual inertial positioning measurement by solving the measurement uncertainty of visual positioning, so it meets the needs of visual-inertial positioning in complex industrial environments.

ObjectiveTraditional vehicle-mounted supporting platforms have large shaking amounts and are difficult to meet the requirements of high and non-landing measurement accuracy of the vehicle-mounted optoelectronic theodolite. We design a novel supporting platform with the truss skinned structure based on a discrete topology optimization method with considering the demand for high stability, light weight, and easy manufacturing. The vehicle-mounted theodolite characterized by stronger mobility, faster, and more convenient deployment processes, has been the major trend in test ranges. The supporting platform provides a new measurement reference for vehicle-mounted theodolite. Therefore, the stability of the supporting platform is an important factor enabling theodolite to achieve high-accuracy measurement. Due to the limited size, weight conditions, and dynamic characteristics of theodolite, the platform stability is consistently low. Generally, the shaking amount is over 40″, even up to hundreds of arc seconds. Some appropriate correction methods can be employed to improve the pointing accuracy of the theodolite, but the timeliness is limited. As a result, it is necessary to design a kind of supporting platform featuring high stiffness, good dynamic characteristics, and light weight.MethodsA truss discrete topology optimization method is adopted to design the supporting platform. The platform frame is established according to its basic shape, and the design domain and non-design domain of the structure are also determined according to the finite element grids. The solid isotropic material with penalization (SIMP) interpolation model is adopted in the topology optimization. The minimum flexibility is set as an objective function and the volume fraction as a constraint. The topology optimization layout is then obtained (Fig. 3). Based on the above topology optimization results, a detailed model of the optimized platform system, which consists of theodolite, platform, and lifting legs, is developed for simulation (Fig. 5). The platform truss structure is discretized by the truss element. The theodolite has a mass of 30000 N, and the maximum angular accelerations of 20 (°)/s2, which are set as the static load and dynamic load in the analysis respectively. The static and model properties of the supporting platform are simulated successfully, and the supporting platform is also manufactured. The stability experiment is then carried out.Results and DiscussionsSimulations are conducted to determine the stability of the optimized platform. The mass of the optimized platform is reduced by 411.1 kg to ensure the support stiffness and dynamic characteristics (Table 1). The deformations of the optimized platform under gravity loading are obtained (Fig. 6). The maximum deformation is 0.142 mm, which occurs on the position fixed by the theodolite. The surface tilt of the position is 3.9″. The static deformations under the torques in the direction of the length, width, and height of the platform are also acquired (Fig. 7). The maximum amount of platform shaking is 4.3″, with the sound performance of the platform to resist torque load. The first four vibration mode shapes for the platform system are obtained (Fig. 8). The first order frequency is 19.2 Hz. Square steel tubes are welded to the trusses. The upper and lower platform surfaces are fitted with metal skins for protection and as mounting bases for theodolite and legs. The platform weighs 2000 kg, with the length of 3150 mm, width of 1830 mm, and height of 300 mm. We also set up the experimental apparatus, which consists of the theodolite, the platform, four lifting legs, a dual-axis collimator, and a collimator target (Fig. 9). The theodolite works on the platform and each lifting leg is mounted separately on all four corners of the platform. The legs utilize servo motors and CAN communication technology to achieve automatic leveling with the help of a program-controlled computer. The theodolite does sinusoidal motion at the set angular accelerations from 0.5 (°)/s2 to 20 (°)/s2. The response accelerations for the basis of theodolite are 0.008-0.55 m/s2 (Figs. 11 and 12). The maximum amplitude is 0.22 m/s2 when the frequency is 21.7 Hz. There is no obvious resonance response that affects the tracking performance of the theodolite. The shaking amplitude of the platform is measured by an inclination sensor, with a maximum amount of 7.2″. The pointing error of vehicle-mounted optoelectronic theodolite is also measured, with an accuracy of 13.8″ at azimuth and 14.9″ at pitch. The supporting platform has a high support stability.ConclusionsThe stability of the vehicle-mounted supporting platform is an important factor enabling theodolite to achieve high measurement accuracy. In our paper, a novel supporting platform with the truss skinned structure is designed based on the discrete topology optimization method. The mass of the optimized platform is reduced by up to 26.5% to ensure the support stiffness and dynamic characteristics. The stability experiment of the supporting platform is carried out. The response accelerations for the basis of theodolite are 0.008-0.55 m/s2 in the whole angle acceleration range from 0.5 (°)/s2 to 20 (°)/s2. The peak response acceleration appears from 20 Hz to 21 Hz. There is no obvious resonance response that affects the tracking performance of the theodolite. The shaking amplitude of the platform is measured by an inclination sensor. The maximum amount of platform shaking is 7.2″. The supporting platform has a high support stability and has been applied to a vehicle-mounted photoelectric theodolite. The real-time and non-landing pointing accuracy is better than 15″. It is suitable for vehicle-mounted optoelectronic theodolite to achieve high and non-landing measurement accuracy.