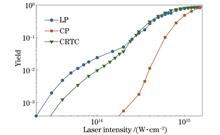

ObjectiveThe interaction of atoms, molecules, and laser fields can generate many interesting nonlinear phenomena in the research on strong field physics. Among them, non-sequential double ionization (NSDI) has become a research hotspot. In the past, people mainly studied phenomena related to NSDI in the monochromatic laser field. With the continuous development of laser technology, a combined electric field has been applied to the study of NSDI for atoms and molecules. The electric field is composed of two circularly polarized (CP) laser fields with fixed frequency and is also called two-color CP laser field. At present, the counter-rotating two-color circularly polarized (CRTC) laser field is widely applied in research on enhancing the NSDI yield due to its special electric field structure. In recent years, studies have shown that the CRTC laser field is beneficial to increase the NSDI yield for O2. However, for triatomic molecules with more nuclei, whether the CRTC laser field can still increase the NSDI yield is an unexplored question. The dynamics of linear triatomic molecules (CO2) in the linearly polarized (LP) laser field and CP laser field have been studied, but there are few studies on the CO2 dynamics in CRTC laser fields. Therefore, we compare and analyze the NSDI yield for CO2 driven by intense laser fields, and further complement the research on the electron dynamics process in NSDI of linear triatomic molecules.MethodsThe method we adopt is based on the classical ensemble method for solving the time-dependent Newton equation. This method has been widely employed in the study of strong laser fields and atomic-molecular interactions. The NSDI electron dynamics of atomic molecules are simulated through the classical ensemble method in the following three steps. First, a stable initial ensemble is obtained. Second, the laser field components are added to the time-dependent Newton equation, and the initial ensemble is substituted to obtain the final coordinates and momentum distribution of the electrons. Third, the data with double ionization is screened. The initial ensemble is mainly obtained by the following ways. At first, the spatial positions of two electrons are given by the Gaussian random matrix, the total potential energy of two electrons is calculated, and the coordinates of the potential energy less than the total energy are filtered. Then the total kinetic energy is obtained by subtracting the total potential energy from the total energy, and the total kinetic energy is randomly assigned to the electrons to obtain their momentum and coordinates. Finally, the coordinates and momentum of two electrons are substituted into the time-dependent Newton equation without the laser field for a period of time, and then a stable initial system synthesis is obtained. The LP laser field we leverage has a wavelength of 1200 nm, the CP laser field has a wavelength of 1200 nm, and the CRTC laser field is a combination of two circularly polarized laser beams with wavelengths of 1200 nm and 600 nm.Results and DiscussionsFirst, we calculate the NSDI yield for CO2 in LP, CP, and CRTC laser fields for various laser field intensities (Fig. 1). The results show that the yield of CO2 molecules under the CRTC laser field is higher than that under the LP laser field when the laser field is higher. However, the opposite results are obtained when the laser field intensity is lower. Since the knee structure doesn't occur in the yield curve under the action of the CP laser field, it is not discussed in our paper. Then, we calculate the electron return energy diagram based on the main time distribution of the recollision (Fig. 3). The return energy diagram can help us derive the reason for the intersection of the CO2 yield curves. Second, we investigate the factors affecting the CO2 NSDI under the action of intense laser fields. By comparing the single ionization rate and double ionization rate of CO2 in the LP laser field and CRTC laser field (Fig. 4), we can conclude that the main factors affecting the CO2 NSDI are the laser intensity and laser field type. Third, we explore the electron dynamics process for CO2NSDI in the areas with lower laser intensity and higher laser intensity respectively. The results show that under lower laser intensity, the NSDI yield driven by the LP laser field is higher than that driven by the CRTC laser field because of the lower suppression barrier (Fig. 5). However, when the laser intensity is higher, the suppression barrier will be distorted and then the main factor affecting the NSDI yield is the structure of the laser field in this case. As the CRTC has a three-lobed structure (Fig. 7) which helps to increase the number of electrons undergoing recollision, the NSDI yield in the CRTC laser field is higher than that in the LP laser field.ConclusionsOur paper investigates the NSDI yield for linear triatomic molecular (CO2) driven by LP, CP, and CRTC laser fields. The results indicate that the NSDI yield in the CRTC laser field is lower than that in the LP laser field under lower laser intensity. This is because the interaction between the laser field and the molecular coulomb potential forms a suppression barrier, and the suppression potential in the CRTC laser field is higher than that in the LP laser field. As a result, the ionization of the second electron in the CRTC laser field is limited. However, when the laser intensity is higher, the yield in the CRTC laser field is higher than that in the LP laser field. This is because with the increasing laser intensity, the molecular coulomb potential is distorted, and then the molecular structure almost no longer exerts an effect on the ionization rate, which is largely influenced by the laser field structure. The CRTC laser field is characterized by a special three-lobed structure, and it can help to increase the number of returning electrons and the electron recollision possibility. Therefore, the CO2 yield is higher under the action of the CRTC laser field. We further complement the study of the NSDI electron dynamics process of linear triatomic molecules driven by intense laser fields, and our results also provide references to improve the NSDI yield of molecules in experiments.

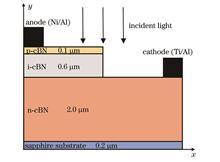

ObjectiveIn recent years, there have been many studies on the preparation of high-quality hexagonal boron nitride (hBN) materials and the application of hBN ultraviolet (UV) photodetectors. Cubic boron nitride (cBN) has a higher band gap compared with hBN [cBN: (6.4±0.5) eV, hBN: (5.9±1.0) eV], and a higher hardness and melting point, which makes cBN-based UV photodetectors more advantageous. However, on one hand, due to a large number of spontaneous defects inside cBN and the non-uniform process, which result in poor doping efficiency of the prepared devices; on the other hand, different doped impurities exhibit different optical and electrical properties, both making the poor performance of the detectors. Additionally, different photodetector structures such as pin, APD, and heterostructure can also bring about performance differences. Silvaco TCAD software is based on a series of physical models and physical equations that rely on well-established solid-state and semiconductor physics theories or on some empirical formulas to accurately predict the electrical, thermal, and optical results of semiconductor devices. Meanwhile, mesa pin photodetectors feature low dark current and high internal quantum efficiency. Therefore, a numerical model of cBN-based mesa structured pin photodetector is built by Silvaco TCAD software, and the effects of different doping concentrations and thicknesses of the cBN layer on photocurrent, dark current, and internal quantum efficiency of this model are calculated.MethodsThe numerical calculation model of cBN-based mesa-structured pin is built by Silvaco TCAD software (Fig. 2). As the intrinsic layer is n-type by default in the undoped case, it is replaced by a n-type cBN with a doping concentration of 1×1015 cm-3 and a thickness of 0.6 μm, and p-type and n-type background carrier concentrations are set as 1×1014 cm-3 and 1×1019 cm-3 with thicknesses of 0.1 μm and 2.0 μm respectively. Based on the constant low-field mobility model (conmob), parallel electric field-dependent mobility model (fldmob), Auger recombination, Shockley-Reed-Hall (SRH) recombination, and basic semiconductor equations of Poisson's equation, carrier transport equations, and carrier continuity equations, the effects of doping concentrations of each layer and thicknesses of each layer on the photocurrent, dark current and internal quantum efficiency are simulated and calculated by the "control variate" method. Firstly, the spectral response of the initial structure is obtained in the deep UV band (Fig. 3), which indicates that the device has a strong response to deep ultraviolet. Secondly, on this basis, the doping concentrations of p-type, i-type, and n-type layers are varied to analyze the changes in performance parameters and select the better doping concentration values with sound device performance. Finally, the thicknesses of p-type, n-type, and intrinsic layers are changed to analyze the performance and select better values.Results and DiscussionsThe doping concentration of p-type rises, and the photocurrent, dark current, and internal quantum efficiency firstly increase and then decrease (Figs. 4-6). This is because the concentration of holes in the p-type region is higher and the probability of recombination increases, resulting in fewer electron-hole pairs generated by photoexcitation. The photocurrent and dark current decrease with the increasing doping concentration of the i-type layer, while internal quantum efficiency is hardly affected (Figs. 7-9). The possible reason is that the intrinsic layer is replaced by a n-type layer and the rising electron concentration increases the recombination probability, leading to the decreased photocurrent. The dark current decreases with the rising doping concentration which enhances the built-in electric field. The dark current increases with the increasing doping concentration of the n-type layer (Fig. 11), but the photocurrent and internal quantum efficiency decrease with it (Figs. 10 and 12). The possible reason is that the heavy doping concentration of the n-type layer increases the diffusion current inside the region, which causes decreased photocurrent and internal quantum efficiency and increased dark current. The photocurrent and internal quantum efficiency decrease while the dark current increases with the rising thickness of the p-type layer (Figs. 13-15). Many photogenerated carriers will be absorbed by the p-type layer, which is too thick to allow carriers to diffuse into the electric field region and form photocurrent. The dark current increases with the thickness of the intrinsic layer while the internal quantum efficiency decreases with it (Figs. 17 and 18). Differently, the thicker intrinsic layer thickness leads to a smaller photocurrent at low bias,but the photocurrent is positively correlated with thickness at high bias(Fig. 16). Thus, the effect of bias voltage on photocurrent should be considered. An increase in the n-type layer thickness increases the photocurrent but causes a little decrease in the internal quantum efficiency, without clear regularity of thickness and dark current (Figs. 19-21). The increasing thickness of the n-type layer means rising light absorption area, and the rising volume/area ratio decreases the recombination. Those could be the possible factors for the photocurrent increase, which causes the current crowding phenomenon, then local heat concentration, and higher thermal energy obtained by electrons. Finally, Auger recombination is enhanced to reduce the internal quantum efficiency.ConclusionsThe increase in the doping concentration of the p-type layer makes all the parameters increase first and then decrease, but the overall change has little effect. The increasing doping concentration of the i-type layer decreases the photocurrent and dark current and has little effect on the internal quantum efficiency. The rising doping concentration of the n-type layer makes the photocurrent and internal quantum efficiency increase, and the dark current greatly decreases to around 10-20 A. Increasing the thickness of the p-type layer exerts almost no effect on the dark current, but decreases the photocurrent and internal quantum efficiency. The rise in intrinsic layer thickness will increase the dark current and decrease the internal quantum efficiency. The photocurrent change with the thickness of the intrinsic layer may also be controlled by the bias voltage. The larger thickness of the n-type layer leads to larger photocurrent, but it has little effect on the dark current and internal quantum efficiency. Since there are no defects in the material during the simulation and the impurities are uniformly distributed, the calculation results are ideal. However, the actual experimental preparation of the device is influenced by the process factors, and the various defects introduced in the material and the non-uniform distribution of impurities make the actual value worse than the simulated calculation value. Finally, the doping concentrations of p-type, i-type, and n-type layers are set as 1×1017 cm-3, 1×1015 cm-3, and 1×1015 cm-3, and the thicknesses of p-type layer, i-type layer, and n-type layer are 0.1 μm, 0.8 μm, and 2.2 μm respectively, the performance obtains the photocurrent is 3. 910×10-8 A, with a maximum dark current of 8.177×10-20 A and internal quantum efficiency of 98.565%.

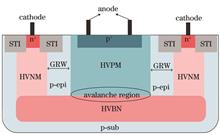

ObjectiveOwing to outstanding advantages such as small size, low power consumption, and high time resolution, direct time of flight (dTOF) detectors have attracted great interest in numerous fields, including automotive driving, facial recognition, augmented and virtual reality (AR/VR), and 3D imaging. Such systems exploit a fast on-chip time-to-digital converter (TDC) in conjunction with single-photon avalanche diode (SPAD) to measure the TOF value, which can achieve high interference immunity and wide dynamic range. Currently, they are rapidly developing towards the direction of low-cost and high integration density compatible with the silicon-based process. However, some problems still exist in practical applications, such as the low safety threshold of human eyes and mutual restriction between resolution and dynamic range. To this end, we implement a near-infrared single-photon dTOF detector with high sensitivity, high time resolution, and wide dynamic range based on 0.18 μm Bipolar-CMOS-DMOS (BCD) technology.MethodsThe detector is mainly composed of a TDC circuit, 16 SPADs, and an analog front-end (AFE) circuit that is coupled with each SPAD (Fig. 3). The integrated SPAD device (Fig. 1) adopts a new structure with a deep high-voltage p well (HVPW)/buried n+ junction as avalanche multiplication region to significantly improve the near-infrared photon detection probability (PDP). A certain distance is set aside between the high-voltage n well (HVNW) and HVPW, and is the guard ring width (dGRW). The virtual guard ring formed by the low doping p-type epitaxial layer helps lower the dark count noise. The proposed SPAD devices with dGRW is 0.5, 1.5, 2.5, and 3.5 μm respectively are simulated by technology computer-aided design (TCAD) based on 0.18 μm BCD technology to study the influence of dGRW on device performance (Fig. 2). The simulation results show that the device can work normally at dGRW=2.5 μm, and the high avalanche field can be obtained to ensure a higher detection probability for near-infrared photons. Meanwhile, the low field in the guard ring region can avoid the dark count noise caused by the carriers generated at the shallow trench isolation (STI) interface. In the readout circuit, the AFE circuits are directly connected with the SPADs to cut off avalanche current and generate narrow pulses. These narrow avalanche pulses are combined by OR tree into one signal which is fed into TDC as a stop signal. Furthermore, a three-step hybrid TDC consisting of the coarse counter, fine counter, interpolator, and phase-locked loop (PLL) is designed to obtain high time resolution and wide dynamic range (Fig. 4). The PLL is a third-order type-Ⅱ loop and the voltage-controlled oscillator (VCO) is composed of a four-stage ring oscillator to offer four-channel multi-phase clocks (P1, P2, P3, and P4) with low jitter, low phase noise, and uniform phase distribution. The fine counter adopting an asynchronous counter can not only count the clock number of P1 but also generate a lower frequency clock to drive the coarse counter. The coarse counter driven by a lower frequency clock adopts a synchronous counter with a linear feedback shift register structure, which can easily expand the dynamic range by increasing the number of counter bits. The start/stop interpolator employs D flip-flop and transmission gate (TG) to latch the state of four-channel multi-phase clocks when the rising edge of the start and stop signal arrives, achieving a high resolution which is 1/8 period of the clock P1.Results and DiscussionsThe proposed dTOF detector is fabricated in the 0.18 μm BCD technology and its electrical and optical properties are verified. The I?V characteristic of the SPAD is firstly measured with avalanche breakdown voltage of around 42.5 V, which matches well the TCAD simulation results (Fig. 7). DCR measurement results (Fig. 8) show that the DCR variation with temperature is not obvious and the overall level is lower than 1 kHz when the temperature is below 60 ℃. More importantly, the data demonstrates excellent performance of 200 Hz at 24 ℃ and 5 V excess bias voltage (Vex). The PDP measurements (Fig. 9) reveal that the PDP reaches a peak of 43.3% (600 nm) at Vex=5 V. Additionally, due to the deep avalanche region, there is a higher response sensitivity for near-infrared photons (780-940 nm), and a PDP of 7.6% is obtained at 905 nm. The measurement is performed by the external triggering to evaluate the dTOF readout circuit performance. Driven by a 30 MHz clock, the dTOF readout circuit can achieve a high resolution of 130 ps and a dynamic range of 258 ns (Fig. 11), with a differential nonlinearity (DNL) and integral nonlinearity (INL) less than ±1 LSB (1 LSB=130 ps) respectively (Fig. 12). In addition, the precision of the proposed detector has also been evaluated by carrying out almost 1000 consecutive single-shot measurement for different fixed TOF values. The measured results show that the statistic histogram of the fixed TOF (80 ns) presents Gaussian distribution and the peak histogram data matches well with the actual TOF (Fig. 13).ConclusionsA near-infrared single-photon dTOF detector with high sensitivity, high time resolution, and wide dynamic range is implemented by the 0.18 μm BCD technology. The test results show that at Vex=5 V, the PDP peak of the SPAD reaches 45%, the near-infrared PDP at the 905 nm wavelength is larger than 7.6 %, and the dark count rate (DCR) is as low as 200 Hz. Furthermore, the TDC circuit driven by multi-phase clocks with low jitter, low phase noise, and uniform phase distribution achieves a high resolution of 130 ps and a dynamic range of 258 ns with excellent linearity. The proposed dTOF detector features a high safety threshold for human eyes, high sensitivity, low noise, and high linearity, which is suitable for the application of low-cost and high-precision lidar systems.

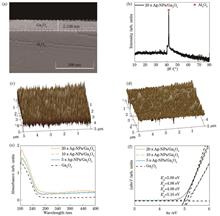

ObjectiveThanks to the low false alarm rate and high signal-to-noise ratio, deep ultraviolet (DUV) photodetector (PD) shows great application potential in ozone hole detection, high-voltage electric fire alarm, stealth bomber, and missile alarm. Gallium oxide (Ga2O3) is one of the most ideal materials for DUV PDs due to its suitable and tunable bandgap (4.5-5.3 eV), simple preparation process, and high stability. Nowadays, many studies focused on crystalline Ga2O3 film DUV PDs, but the lattice mismatch and strict growth parameters during the preparation put forward higher requirements for substrate materials and growth equipment. Compared with crystalline Ga2O3, amorphous Ga2O3 films have low preparation requirements and are easier to generate larger photocurrents due to the promotion of carrier separation by intrinsic defects. However, amorphous Ga2O3 is prone to higher dark current due to more defects. It is necessary to introduce noble metal Ag nanoparticles (Ag-NPs) to improve the photodetection performance of amorphous Ga2O3. On one hand, the formation of Schottky barriers between Ag-NPs and Ga2O3 films helps reduce the dark current of amorphous Ga2O3. On the other hand, the surface plasmon vibration of Ag-NPs can enhance the absorption of Ga2O3to UV light. Additionally, Ag-NPs can generate a large number of hot carriers under UV light to allow hot electrons with sufficient energy to overcome the Schottky barriers. We provide a feasible approach to realize DUV PDs with low dark current and high photo-to-dark current ratio (PDCR).MethodsAmorphous Ga2O3 films are grown on sapphire substrates by the facile radio frequency (RF) magnetron sputtering technology. The sputtering is carried out at room temperature for 70 min with chamber pressure and Argon flow rate of 1 Pa and 20 sccm respectively. The obtained Ga2O3 films are cut into four parts, and three of them are continuously sputtered with Ag-NPs on the Ga2O3surface by direct-current (DC) magnetron sputtering. The sputtering time is 5, 10, and 20 s respectively. The obtained samples are labeled as 5 s Ag-NPs/Ga2O3, 10 s Ag-NPs/Ga2O3, and 20 s Ag-NPs/Ga2O3. Finally, the four samples obtained previously are annealed in a tube furnace for 2 h at an annealing temperature of 200 °C. The crystal structure of the sample is characterized by X-ray diffractometer (XRD), the cross-section morphology of the sample is by scanning electron microscope (SEM), and the surface morphology of the sample is by atomic force microscope (AFM). Additionally, the absorption spectrum features Q6 ultraviolet-visible (UV-Vis) spectrophotometer, and the low-pressure mercury lamps with wavelengths of 254 nm and 365 nm are employed as the ultraviolet light source. A pair of cylindrical metal indium (In) with a diameter of 1 mm and a spacing of 1 mm is pressed on the surface of samples as electrodes, and the current-voltage (I-V) characteristics and transient response curve (I-t) of the PDs are measured by a B1505A power device analyzer.Results and DiscussionsAFM results confirm the introduction of Ag-NPs on the surface of amorphous Ga2O3 films, and the surface mean square root (RMS) roughness of the Ag-NPs/Ga2O3 film after sputtering Ag nanoparticles for 20 s is significantly increased from 0.218 to 6.390 nm [Figs. 1 (c) and 1(d)]. Meanwhile, the absorption of Ga2O3 to UV light also increases obviously after sputtering Ag-NPs [Fig. 1 (e)]. 20 s Ag-NPs/Ga2O3 presents a lower dark current than amorphous Ga2O3 due to the Schottky junction formed between Ag-NPs and Ga2O3 films, which further forms a potential barrier and reduces the dark current [Fig. 2 (a)]. Under the irradiation of 254 nm UV light, Ag-NPs/Ga2O3 films exhibit a higher photocurrent than amorphous Ga2O3. In particular, the photocurrent of 20 s Ag-NPs/Ga2O3 at 5 V is 18.8 times higher than that of amorphous Ga2O3[Fig. 2 (b)]. This may be due to the enhanced scattering of UV light by the plasmonic vibrations of the Ag-NPs on the surface of Ga2O3 films, thus leading to enhanced absorption of UV light by Ga2O3 and an increase in the photocurrent of the Ag-NPs/Ga2O3 PD. Additionally, the Ag-NPs vibration may generate a large number of hot carriers to have enough energy to cross the Schottky barriers between Ag-NPs and Ga2O3 films, which leads to an increase in the photocurrent of the PD. At this point, the PDCR is as high as 5.9×105, the rejection ratio (254 nm/365 nm) is 1.6×104[Fig. 2 (c)], and the responsivity is 36.1 mA/W [Fig. 3 (c)], with the detectivity of 2×1014 Jones and external quantum efficiency of 17.7% [Fig. 3 (d)]. Meanwhile, both the amorphous Ga2O3 detector and the Ag-NPs/Ga2O3 detector have short response time (Fig. 4).ConclusionsIn summary, the Ag-NP composite amorphous Ga2O3 film DUV PD is prepared by a room temperature magnetron sputtering technology. The PD exhibits an excellent photodetection performance. Under 5 V voltage, the dark current of the detector is as low as 94 fA and the PDCR is as high as 5.9×105, and the rejection ratio (254 nm/365 nm) is 1.6×104, with the responsivity of 36.1 mA/W, detectivity of 2×1014 Jones, and external quantum efficiency of 17.7%. This is not only attributed to the plasmonic vibration of Ag-NPs under UV light, which scatters more incident light into the Ga2O3 film layer to enhance the UV light absorption of the Ga2O3 films, but also to the generation of a large number of hot carriers by Ag-NPs under UV light. These hot carriers enable the hot electrons to overcome the Schottky barriers formed by Ag-NPs and Ga2O3 films, which brings about a significant increase in the PD photocurrent. In addition, the formation of the Schottky barriers between Ag-NPs and Ga2O3 films helps reduce the dark current in the amorphous Ga2O3. This study implies that the introduction of noble metal nanoparticles provides a viable solution to DUV PDs with low cost, dark current, and high PDCR.

ObjectiveThe emergence of new data services and the rapid development of cloud computing have put forward urgent demands for improving transmission capacity in optical interconnection networks. The capacity of traditional single-mode fibers is difficult to enhance due to the nonlinear Shannon limit. Space division multiplexing (SDM) technology has caught extensive attention for its ability to reach maximum capacity. Few-mode fibers are a typical design using SDM, and the mode crosstalk that occurs during the few-mode fiber transmission is a major problem. The multiple-input multiple-output (MIMO) digital signal processing technology is usually introduced at the receiver to solve this problem. However, as the number of guided modes increases, the system complexity will grow nonlinearly, resulting in significant power consumption. Therefore, the communication system should be simplified by suppressing the mode coupling from the root, which is separating the adjacent eigenmodes to the maximum extent. The polarization-maintaining few-mode fibers with special structures can improve the capacity and ensure a higher mode separation degree. However, the previous fiber designs ignore the contradiction between the lower-order mode separation and the higher-order mode separation. Thus, we take this as a breakthrough point to introduce concentric-circular stress-applying region, improving this contradiction relationship and increasing the effective refractive index difference between adjacent modes. Meanwhile, the polarization characteristics, mode effective area, wavelength dependence, mode dispersion, bending resistance, and other indicators are considered to yield better transmission performance and reliability.MethodsTo adapt to the development of optical interconnection networks with short-distance and large-capacity transmission, we propose a concentric-circular stress-applying region-assisted panda polarization-maintaining few-mode fiber. The prominent feature of this fiber is that this concentric-circular region is set around the elliptical-ring core. Since the core mainly determines the guided mode number, parameter optimization is first performed on the semi-major axis (bx) and semi-minor axis (by) of the elliptical-ring core. Subsequently, the concentric-circular stress region with a lower refractive index is introduced between the core and the cladding to improve the effective refractive index difference between the higher-order modes and ensure the separation of fundamental modes. Comparison conducted on optical fibers with the same parameters without concentric-circular stress regions or stress regions of other shapes indicates that the concentric-circular stress region has an excellent ability to separate degenerate modes. Additionally, frequency sweeping is conducted at 1530-1570 nm to investigate the modal wavelength dependency and mode dispersion of the fiber. Finally, the beam propagation method (BPM) is adopted to simulate the fiber bending, and the bending resistance is analyzed. Our study provides ideas for the design of short-distance and large-capacity optical fibers.Results and DiscussionsThrough the design and optimization of the fiber (Fig. 1), the results show that the proposed optical fiber can transmit 10 modes stably (Fig. 6). The introduction of concentric-circular stress-applying region in the structure can enhance the effective refractive index difference between higher-order modes by nearly an order of magnitude, balancing the lower-order mode separation and the higher-order mode separation (Fig. 4). The minimum effective refractive index difference between adjacent modes reaches 2×10-4 at 1550 nm (Table 1) and not less than 1.8×10-4 over the C-band. At 1530-1570 nm, mode dispersion is not higher than |-55.0219| ps·nm-1·km-1 (Fig. 7) and can be further reduced by increasing the semi-minor axis of the fiber core to better adapt to the short-distance transmission. In addition, the bending resistance of the fiber is analyzed. The results indicate that when the bending radius is no less than 9.5 cm, none of the 10 modes will be leaked into the cladding and the maximum bending-induced loss is in the order of 10-7 dB/m.ConclusionsWe put forward a panda polarization-maintaining few-mode fiber with concentric-circular stress-applying region. The effects of elliptical-ring core size and concentric-circular stress region size on the effective refractive index of 10 modes and the effective refractive index difference between adjacent modes are studied. Numerical results show that by optimizing the parameters, this structure can separate the degenerate modes transmitted in the fiber. The mode characteristic changes before and after the introduction of the concentric-circular stress region are analyzed. It is proven that this low refractive index stress region can significantly improve the effective refractive index difference between higher-order modes. The bending resistance of the fiber is sound, with small mode dispersion. In addition, the fiber structure is expected to further increase the number of guided modes by changing the size of the core and other parts. The element of the concentric-circular stress-applying region is also suitable for designing other fiber structures. Our research has application value in the future optical interconnection transmission systems to provide a new idea for the development and design of polarization-maintaining few-mode fibers.

ObjectiveSound source localization (SSL) technology is vital in a wide range of applications such as smart robots, unmanned aerial vehicle (UAV) detection, and unmanned driving. Acoustic sensor arrays are the main solution to SSL. However, with the development of small devices, it is difficult for these arrays to simultaneously satisfy the requirements of miniaturization and high precision. Inspired by small animals' auditory organs, bio-mimetic acoustic vector sensors are an alternative to acoustic sensor arrays. The parasitic fly Ormia ochraceainspires mechanical coupling between two membranes with an interaural phase difference (IPD) gain. Bio-mimetic acoustic vector sensors based on mechanical coupling inherit the IPD gain function. The gain effect of the current bio-mimetic acoustic vector sensors is limited to around the eigenfrequency. Meanwhile, electrical sensors are highly susceptible to extreme environments such as strong electromagnetic and high temperatures, while fiber-optic sensors can endure these conditions. We propose a flywheel-like fiber-optic Fabry-Perot (F-P) acoustic vector sensor for wide-range IPD gain based on the diaphragm coupling gain principle. We hope that the diaphragm-coupling fiber-optic F-P acoustic vector sensor can achieve the IPD gain of several kilohertz frequency ranges, adapting to ambiguous sound source direction in extreme environments.MethodsThe flywheel-coupling diaphragm is simplified to a two-degree-of-freedom (2-DOF) mass-spring-dashpot system with two shape modes of rocking mode and bending mode. COMSOL Multiphysics is employed to analyze diaphragm vibration characteristics and the structure parameters of the diaphragm are optimized based on the simulation results. The flywheel-coupling structure on stainless steel sheet is produced by ultraviolet laser etching technology. The adjoint spokes of two flywheel vibration units naturally couple to form a simplified intermembrane bridge coupling structure. The vibration units combined with individual fiber form independent fiber-optic F-P sensing units. The displacement of the vibration units changes the light intensity of the F-P sensing units detected by the intensity demodulation system. The intensity demodulation contains a tunable laser, 1×2 fiber splitter, optical circulators, photoelectric detectors, and data acquisition card (Fig. 8). The operating wavelength is determined in a common linear region of two sensors. The real-time IPD calculation is acquired by a phase-sensitive detection algorithm, and the incident angle of the sound wave is localized based on the IPD. The uncoupling two-sensor array is simultaneously subjected to SSL experiments to contrast with the flywheel-coupling acoustic vector sensor.Results and DiscussionsThe proposed sensor has a wide frequency range of IPD gain. The rocking mode and bending mode eigenfrequency is simulated as 7.2 kHz and 7.6 kHz. The simulation results exhibit a significant gain in the frequency range of 5 kHz to 7.4 kHz, with a maximum gain of 4.5 at 7.2 kHz (Fig. 4). The experimental results are in good agreement with simulations conducted in COMSOL Multiphysics (Fig. 10). The measured eigenfrequency is 7.2 kHz and 7.6 kHz with a slight discrepancy. The sensitivities of the sensing units are S1=0.24 V/Pa@7.6 kHz and S2=0.21 V/Pa@7.6 kHz. Two-dimensional planar SSL in -90°-90° based on IPD cues is achieved (Fig. 11). The experiment results from 5 kHz to 7.4 kHz present a wide frequency range IPD gain with a maximum gain of 5.05 at 7.2 kHz (Fig. 12). Cavity length and fiber end face inclination affect the spectrum of each sensing unit (Fig. 7). As a result, sensor consistency is difficult to achieve due to unavoidable processing errors. Since the phase-sensitive-detection algorithm is affected by noise, low signal-noise-ratio (SNR) signals may incur high localization errors. Both experimental and simulation results characterize that the sensor has a wide frequency range of IPD amplification effect.ConclusionsWe propose a flywheel-like fiber-optic F-P acoustic vector sensor for wide-range IPD gain based on the diaphragm coupling gain principle. The proposed flywheel-coupling diaphragm has two vibration modes of rocking and bending. The corresponding eigenfrequencies of 7.2 kHz and 7.6 kHz are calculated by COMSOL Multiphysics. The sensor has an obvious IPD amplification effect from 5 kHz to 7.4 kHz in the frequency ranges. The maximum sensitivity and gain are acquired at 7.2 kHz in the simulation. Cavity length and fiber end face inclination affect the spectrum of each sensing unit, limiting the SSL accuracy based on interaural intensity difference. Our paper applies a phase-sensitive-detection algorithm to obtain the phase difference between the two signals in real time. However, the method does not apply to low SNR signals. Meanwhile, the algorithm accuracy is affected by DC components, harmonics, and other factors. Finally, the scheme based on IPD is chosen and a flywheel-coupling diaphragm fiber-optic F-P acoustic vector sensor is fabricated. The first-order eigenfrequency is measured at around 7.2 kHz. The structure achieves SSL with IPD gain in the frequency range from 5 kHz to 7.4 kHz, compared with an uncoupling fiber-optic F-P acoustic sensor array. The measured maximum gain factor of 5.05 is better than the simulation results. The maximum line size of the proposed sensor is smaller than the wavelength of the test acoustic wave to realize a miniaturized acoustic vector sensor with a simple structure and easy processing. The detection method using optical principles can be applied to satisfy SSL needs in extreme environments.

ObjectiveVortex beams, distinguished by their unique spiral wavefront structure, phase singularity, and orbital angular momentum, offer possibilities for enhancing system performance. Notably, vortex beams with different modes are spatially orthogonal, enabling their use in orbital angular momentum multiplexing for augmenting the channel capacity and spectral efficiency. Moreover, demultiplexing these beams at the receiving end provides an additional boost to system capabilities.This paper focuses on the application of coherent detection technology to a bi-directional slant path optical wireless communication system, employing orbital angular momentum multiplexing. This approach eliminates the need for image recognition steps, such as diffraction interference, thereby reducing errors introduced during the process. It analyzes the impact of the topological charge, altitude, and transmission distance on the performance of a vortex optical multiplex communication system. By incorporating an adaptive optics system for uplink and downlink correction, we can minimize crosstalk between modes, leading to improved detection sensitivity and channel capacity.MethodsThe architecture of the orbital angular momentum multiplexing coherent detection system for optical wireless communication using bi-directional slant transmission is illustrated in Fig. 1. Fig.2 presents a schematic view of the system's transmitting and receiving ends. At the transmitting end, the source signal laser is split into four beams using a 1×4 coupler. The signal for each channel undergoes external modulation after series-to-parallel conversion and is then transformed into a vortex beam, with topological charges of 1, 2, 3, and 4, through spiral phase plates. These channels are then combined with a 4×1 coupler for coaxial transmission. At the receiving end, a 1×4 coupler divides the beam into four channels, each mixed with local oscillator vortex beams with corresponding topological charges. Following balanced detection, the electric signal is recovered, demodulated, and then converted back into signals through parallel-to-series conversion, enabling signal transmission from the source to the end. The uplink and downlink employ a single adaptive optics system, located near the downlink receiver, for correction. This system's working principle is depicted in Fig. 3. Given the reversibility of the transmission link and the reverse superposition of the wavefront, the distortion of the uplink signal wavefront at the transmitting antenna is conjugate with the wavefront distortion of the downlink received by the receiving antenna. This feature allows for post-correction of the downlink and pre-correction of the uplink.Results and DiscussionsFig. 4 illustrates the coherent gains of signal and local oscillator light with varying topological charges after mixing. These gains include uplink, downlink, corrected, and uncorrected scenarios. When the topological charge of the signal light (ls1) is 1, and the topological charges of the local oscillator (LO) light (llo) are 1 and 2, the corresponding coherent gains stand at 0.867 and 0.156, respectively. Atmospheric turbulence, which is most potent near the surface, induces wavefront distortion in vortex light, reducing coherence between signal and LO light, and affecting their orthogonality. As a result, the downlink's correction effect is superior to that of the uplink. Fig. 8 reveals the bit error rate of each channel and the system's bit error rate under varying transmission distances. With increasing transmission distances, the wavefront distortion caused by strong turbulence in the uplink exceeds the adaptive optics' correction capability. Insets in Fig. 8 show the uncorrected and corrected light intensity and phase distribution after uplink and downlink transmission. The uplink utilizes pre-correction processing, leading to a larger corrected spot diameter compared with the uncorrected one. Wavefront correction does not influence the light intensity distribution, so no differences are observed in the light intensity distribution before and after downlink correction.ConclusionsThe study concludes that atmospheric turbulence can trigger mode crosstalk during vortex optical multiplexing transmission, and extending the transmission distance heightens the system's bit error rate. At the same transmission distance, mode crosstalk becomes more pronounced as the topological load increases. Adaptive optics is typically apt for phase compensation in weak turbulence conditions, with the correction effect of the downlink more obvious than that of the uplink. The vortex beam orbital angular momentum multiplexing coherent detection significantly enhances the system's detection sensitivity and channel capacity. These findings apply to coherent detection communication involving multiple orbital angular momentum multiplexing with an expanded multiplexing interval.

ObjectiveTo solve the nonlinear impairment during transmitting high-order quadrature amplitude modulation (QAM) signals, we propose a high-order QAM transmission system based on Delta-Sigma modulation (DSM). With the commercialization of 5G networks, the number of mobile internet traffic terminals has surged to increase the demand for fronthaul network transmission rates. At present, the mobile fronthaul network interface common public radio interface (CPRI) usually adopts 15-bits sampling width, which means that at least 15 times of oversampling is required, which results in low spectral efficiency (SE). To this end, researchers proposed a fronthaul network based on DSM technology. Under ten times of oversampling, one-bit quantized DSM can provide a signal-to-noise ratio (SNR) of about 33 dB and support 1024QAM mobile fronthaul. Therefore, facing ever-increasing traffic demands, we study the transmission performance of broadband DSM signals to provide certain references for the future design of high-speed fronthaul networks based on DSM technology.MethodsTo study the transmission performance of broadband DSM signals, we carry out the transmission experiment of 100 Gbaud DSM signal. For the power fading caused by chromatic dispersion that often occurs in intensity modulation/ direct detection (IM/DD) systems, we have chosen the O-band transmission. For the insufficient bandwidth for the transmission system, we employ pre-equalization technology to address the high-frequency fading caused by narrow-band channels. We adopt the on-off-keying (OOK) signal as the training sequence and carry out 100 Gbaud optical back-to-back (BTB) transmission. Then, the frequency domain corresponding to the tap coefficient in the steady state of the constant modulus algorithm (CMA) in the digital signal processing (DSP) algorithm is taken as the inverse response of the channel. Afterward, the finite impulse response (FIR) is generated according to the tap coefficients of CMA. Finally, the generated FIR filter is utilized to filter the transmission signal after two-up-sampling to complete the pre-equalization. Through O-band transmission and pre-equalization technology, the transmission of 100 Gbaud DSM signals is successfully realized.Results and DiscussionsFor the transmission of 50 Gbaud DSM signals, the corresponding experimental results are shown in Fig. 4. In the case of 25 km transmission, when the received optical power (ROP) is -2 dBm, the DSM-OOK signal can realize the error-free transmission. In the case of 50 km fiber transmission, error-free transmission is realized when ROP is 0 dBm. Compared with BTB transmission, the transmissions of 25 km and 50 km introduce 2 dB and 4 dB of power penalty respectively. For the transmission of 100 Gbaud DSM signals, the corresponding experimental results are shown in Fig. 6. When the ROP is 2 dBm, the BER of the DSM-OOK signal after BTB transmission and 10 km transmission is 10-5 and 3×10-5 respectively. However, in the case of 15 km transmission, the conventional DSP algorithm can only achieve BER of 10-4. Therefore, by adding post-filtering (PF) and maximum likelihood sequence estimation (MLSE) algorithm after the traditional DSP, the BER is successfully reduced to 10-5. The final experimental results are summarized in Table 1.ConclusionsThis study realizes the 50/15 km transmission of 50/100 Gbaud DSM signals on the O-band IM/DD link by utilizing one-bit quantized DSM and pre-equalization technology. Thanks to one-bit quantization DSM with SNR of 33 dB, the system supports up to 4096QAM signal transmission that meets the soft decision threshold of 4.0×10-2, and the EVM of 1024QAM signal meets the EVM standard of 2.5% for mobile fronthaul. In the transmission of 50 Gbaud DSM signals, the 1024QAM mobile fronthaul with a rate of 5×10×127/256=24.8 Gbit/s on 50 km fiber is achieved. In the transmission of 100 Gbaud DSM signals, by utilizing PF and MLSE, the 1024QAM signal mobile fronthaul with a rate of 10×10×127/256=49.6 Gbit/s on 15 km fiber is realized. In addition, the system supports a maximum net bit communication rate of 12×10×127/256×0.75=44.6 Gbit/s. The broadband DSM signal transmission system demonstrated in the experiment provides a solution for high-order QAM signal transmission in the IM/DD link and also provides references for future design of high-speed mobile fronthaul approaches based on DSM technology.

ObjectiveIn the case of a non-line-of-sight azimuth transmission system based on polarization-maintaining fiber, aligning the output light passing through the fiber with the photoelectric conversion receiver is necessary to increase the extremely small diameter of the outgoing light after transmission through the fiber. A beam-expanding system can be introduced to solve this problem, and the azimuth transmission can be achieved in a non-line-of-sight condition. Because the classical refractive beam spreading system is composed of lenses, the influence of lenses in polarization transmission determines the accuracy of the azimuth transmission. However, general studies of the lens focused on the influence of polarization states of incident light and lacked analyses regarding lens parameters and beam-expanding systems comprising lens groups. In the non-line-of-sight azimuth transmission system, the influence of lenses on polarization transmission is key to introducing the beam-expanding system. This system has broad application prospects in many fields, including spacecraft docking in space stations, tunneling engineering, and high-precision instrument measurement.MethodsIn this study, the analysis related to lenses is based on the Jones matrix principle and the Fresnel equation. First, the Jones vector is used to characterize the incident polarized light. Second, the incident light gets refracted after passing through the lens and the Jones vector relationship between the incident and refracted light is calculated using the Jones matrix with respect to the lens. Subsequently, the Jones matrix with respect to the lens can be characterized using the amplitude transmission ratio, which is the ratio between the angle of incidence and angle of refraction. These angles are derived based on the Fresnel equation. Finally, the geometric relationship between the light and lens is analyzed using the ray-tracing method to determine the angle of incidence and refraction. Using the Galileo beam expanding system as an example, the process of polarization transmission with respect to the lens is analyzed in detail and the equation of the deflection angle comprising lens parameters is derived. The influence of the lens parameters on polarization azimuth deflection is simulated and verified via experiments.Results and DiscussionsIn this study, the influencing factors with respect to the linearly polarized light using lenses are divided into three categories: first, the polarization state of the incident light; second, the refractive effect of lens spheres; and third, the material properties of lenses. The polarization-azimuth-deflection equation comprising the lens parameters was obtained based on the study of the lens parameters and beam-expanding system comprising the lens group (Eq. 12). Simulations and experiments conducted herein show that the polarization azimuth deflection is inversely related to the radius of curvature of the lens. When the curvature radius of the lens increases, the polarization azimuth deflection decreases and tends to zero (Fig. 5 and Fig. 11). The polarization azimuth deflection is squared with the incident light radius. When the radius of incident light increases, the polarization azimuth deflection increases, and when the incident radius tends to zero, the polarization azimuth deflection tends to zero (Fig. 6 and Fig.12). The central thickness of the lens is linearly related to the polarization azimuth deflection; that is, when the center thickness of the lens increases, the polarization azimuth deflection increases (Figs. 7 and 13). Furthermore, the polarization azimuth deflection is squared with the refractive index; that is, when the refractive index increases, the polarization azimuth deflection increases (Fig. 8).ConclusionsBased on Fresnel equations and Jones matrixes, this study analyzes the influence of lens parameters on polarization transmission, which is mainly reflected in the polarization azimuth deflection of polarized light. Using the Galileo beam spreading system as an example, the geometric relationship of light in the beam spreading system is analyzed via the ray-tracing method. Then, the polarization azimuth deflection equation comprising the lens parameters is derived. Subsequently, the influence of curvature radius, center thickness, the radius of incident light, and refractive index on the polarization azimuth deflection are simulated, and the principle is analyzed. The results of our study show that the curvature radius is inversely related to the polarization azimuth deflection; that is, when the curvature radius decreases, the polarization azimuth deflection increases, and when the curvature radius tends to approach ∞, the polarization azimuth deflection tends to be zero. Meanwhile, the center thickness is linearly related to the polarization azimuth deflection; that is, when the center thickness increases, the deflection angle increases. The incident light radius is squarely related to the polarization azimuth deflection; that is, when the incident light radius increases, the polarization azimuth deflection increases, and when the incident light radius tends to be zero, the polarization azimuth deflection also tends to be zero. The refractive index is squared with the deflection angle; that is, when the refractive index increases, the polarization azimuth deflection also increases, and when the refractive index tends to zero, the polarization azimuth deflection tends to be zero. This study provides a reference for the introduction of the beam-expanding system in the non-line-of-sight azimuth transmission system based on the polarization-maintaining fiber.

ObjectivePerimeter security technologies, such as electronic fences and tension networks, are currently outperformed by the phase-sensitive optical time domain reflectometer (Φ-OTDR). Φ-OTDR, known for its antielectromagnetic interference, high concealment, and large monitoring range, provides efficient large-scale monitoring at a reduced cost. Moreover, it not only can locate intrusion events but also identify event types when combined with signal recognition methods. These unique attributes make it valuable for perimeter security applications. Existing identification methods for perimeter intrusion signals are predominantly reliant on machine learning and deep learning techniques. However, machine learning methods require a high level of expert knowledge and their classification efficacy heavily depends on the chosen combination of features and classifiers. Furthermore, the currently available deep learning methods suffer from inadequate learning ability for time-series signals and require complex calculations. To address these challenges, we propose a deep learning recognition model that incorporates a multiattention mechanism. This model was designed to enhance the extraction of critical signal features and improve network learning capabilities. We used the DAS system to gather signals from climbing, knocking, trampling, and no intrusion events, to validate the effectiveness of our proposed method. We also contrasted the recognition rate and efficiency of various deep learning models and assessed the differential impacts of machine learning and deep learning for large sample multiclassification issues.MethodsWe first extracted the time-domain waveform of the vibration signal using a signal demodulation technique and then employed a mobile difference method to locate the intrusion event. Following this, we introduced a multiattention temporal convolutional network (MATCN) recognition model, which provides the collected vibration signals directly for identification. This model utilized the channel attention mechanism to optimize the residual module, thereby enabling the selective learning of crucial information from different feature channels. Moreover, we employed the leaky rectified linear unit (Leaky ReLU) to mitigate the issue of neuron death during convolution and to enhance the model's robustness. Furthermore, we incorporated a temporal attention mechanism to help the network identify critical information-laden time slices. We determined the depth of the MATCN based on number of stacked layers in the residual module, informed by the changes in the validation sample's loss function value during training. We conducted ablation experiments to validate the proposed strategy's effectiveness. We also compared MATCN with other typical networks for timing signal recognition tasks, including long short-term memory networks (LSTM), convolutional layers incorporated into long short-term memory networks (CNN-LSTM), and temporal convolutional networks (TCN). An early stop mechanism was added during the network training process to prevent model overfitting. We compared the iteration speed, training epoch, and recognition rates of the different deep learning models. Lastly, we contrasted the recognition effects of MATCN and machine learning methods using two feature sets: zero crossing rate, kurtosis, energy entropy, and approximate entropy; zero crossing rate, kurtosis, skewness, and permutation entropy. These features were combined with common classifiers such as random forest (RF), K-nearest neighbor (KNN), and support vector machine (SVM) for recognition. We compared the recognition effectiveness of different feature group-classifier combinations.Results and DiscussionsWe devised performance comparison experiments for different deep learning models, employing LSTM, CNN-LSTM, TCN, and MATCN to process the same training and validation samples. The network training effectiveness is evaluated by comparing the iteration time, number of epochs, training time, and recognition rate of validation samples throughout the training process for each network (Fig. 13, Table 2). Network performance was assessed based on the recognition rate of each event and the testing time for nontraining samples (Table 3). The results demonstrate that although the iteration speed of MATCN is marginally slower than that of TCN, MATCN require less training time to converge, resulting in the highest overall training efficiency. Moreover, the recognition rate of MATCN for nontraining samples reaches 98.50%, and the recognition time is a mere 0.53s, thus outperforming LSTM and CNN-LSTM. Machine learning methods were also employed to identify the same training and nontraining samples, revealing that the recognition efficacy of machine learning relies heavily on feature extraction and classifier selection. The highest recognition rate achieved by machine learning is 88.67%, falling short of MATCN and even LSTM, thereby underlining the advantages of deep learning for large sample multiclassification problems (Fig. 14).ConclusionsTo address the issue of high expert reliance in machine learning and inadequate learning ability in deep learning for critical time-series signal features in optical fiber perimeter security pattern recognition, we propose a MATCN-based optical fiber perimeter signal recognition model. This model considers the temporal sequence of vibration signals and combines channel and temporal attention mechanisms to extract critical information from various angles. It enhances network learning capability and employs Leaky ReLU to mitigate neuron death during the convolution process, thereby boosting the model's robustness. The recognition results for the four signals indicate that the recognition rate of MATCN for nontraining samples attains 98.50%, thus surpassing LSTM and CNN-LSTM. Furthermore, MATCN outperforms machine learning in handling large sample multiclassification problems. The proposed model can selectively learn critical information across different channels and time slices, facilitating precise and efficient identification for perimeter intrusion signals.

ObjectiveBirefringence is a key parameter to judge whether polarization-maintaining fiber can maintain polarization state, which has research significance. The birefringence of traditional single-mode fibers is very sensitive to subtle changes in the external environment, and two orthogonal polarization modes in fibers are easy to couple. Generally, polarization-maintaining fibers have strong birefringence, and two orthogonal polarization modes with different propagation constants are not easy to couple. The birefringence caused by external environment changes is far less than that of the fiber itself. Therefore, polarization-maintaining fibers have good polarization-maintaining ability and resistance to external interference, with a wide application prospect in optical fiber sensing, optical components, optical fiber communication, and other fields. We design a high birefringence elliptical-core pseudo-rectangle polarization-maintaining fiber with both shape birefringence and stress birefringence. The shape birefringence depends on the ellipticity of the core, while the stress birefringence depends on the pseudo-rectangle structure in the stress region, which has a simple structural design. The structural parameters are optimized by numerical simulations, and the birefringence of the designed fiber is nearly doubled compared with that of the traditional panda-type polarization-maintaining fiber. The designed high birefringence fiber will be helpful to practical engineering and provide the possibility for the sensing of high birefringence polarization-maintaining fibers.MethodsWe study the structure design and birefringence characteristics of numerical simulations in elliptical-core pseudo-rectangle polarization-maintaining fibers. Firstly, the birefringence characteristics of the circular-core pseudo-rectangle polarization-maintaining fiber model are studied and compared with the existing research results to verify the correctness of the proposed polarization-maintaining fiber model. Then, based on the circular-core pseudo-rectangle polarization-maintaining fiber model, the changes in core ellipticity and birefringence characteristics are studied when the core ellipticity changes from less than 1 to more than 1. Then, the von Mises stress distribution and stress-induced birefringence distribution in the x direction of the cross section of the elliptical-core pseudo-rectangle polarization-maintaining fiber are analyzed by simulation software. The influence of the length and width of the stress zone on the birefringence of elliptical-core pseudo-rectangle polarization-maintaining fiber is studied and compared with that of circular-core pseudo-rectangle polarization-maintaining fiber. Next, we research the relationship between the effective refractive index and birefringence of the core fundamental modes in the x and y polarization directions at different wavelengths. Finally, the designed birefringent fibers in other references at home and abroad in recent years are compared.Results and DiscussionsWhen the ellipticity of the designed elliptical core rectangular polarization-maintaining fiber is less than 1, the mode birefringence decreases with the increasing short semi-axis a of the core [Fig. 4(a)]. When the ellipticity of the core is greater than 1, the mode birefringence increases with the rising long semi-axis a of the core [Fig. 4(b)]. The relationship between elliptical-core pseudo-rectangle polarization-maintaining fiber and circular-core pseudo-rectangle polarization-maintaining fiber with the distance between the core and the stress zone is compared and analyzed. As the distance between the core and the stress zone increases, the birefringence decreases significantly (Fig. 5). The functional relationship between the birefringence of elliptical-core pseudo-rectangle polarization-maintaining fiber and circular-core pseudo-rectangle polarization-maintaining fiber is studied respectively. With the increasing length of stress zone, the mode birefringence of the two types of polarization-maintaining fibers tends to rise, but the birefringence is quite different [Fig. 7(a)]. With the increase in the width of the stress zone, the basic trend of the mode birefringence of the two types of polarization-maintaining fibers is the same, with a faster growth rate and smaller birefringence difference [Fig. 7(b)]. Additionally, the birefringence of the designed fiber reaches 8.0794×10-4 at the wavelength of 1550 nm [Fig. 8(b)], which is nearly doubled compared with that of the traditional panda-type polarization-maintaining fiber. Thus it has a good polarization-maintaining ability.ConclusionsWe design an elliptical-core pseudo-rectangle polarization-maintaining fiber based on shape birefringence and stress birefringence, which has an elliptical core and two symmetric rectangular stress regions. The influence of the size of the rectangular stress region and the core ellipticity on the birefringence of the polarization-maintaining fiber is studied by numerical simulations. When the ellipticity changes from less than 1 to more than 1, the birefringence increases with better polarization-maintaining ability. Under other conditions unchanged, the width of the stress zone required by elliptical-core pseudo-rectangle polarization-maintaining fiber should be smaller than that of circular-core pseudo-rectangle polarization-maintaining fiber to obtain the same birefringence, which indicates a smaller area of stress zone. By optimizing the parameters, the birefringence of elliptical-core pseudo-rectangle polarization-maintaining fiber at 1550 nm is 8.0794×10-4, which is nearly double that of the traditional panda-type polarization-maintaining fiber. Additionally, the relationship between birefringence and wavelength of the proposed fiber in the C communication band (1530-1565 nm) is also studied. The proposed elliptical-core pseudo-rectangle polarization-maintaining fiber has a simple structure and potential applications in optical fiber communication and sensing.

ObjectiveDepth estimation is an important research topic in the field of computer vision, which is used to perceive and reconstruct three-dimensional (3D) scenes using two-dimensional (2D) images. Estimating depth based on a focal stack is a passive method that uses the degree of focus as a depth clue. This method has advantages including small imaging equipment size and low computational cost. However, this method relies heavily on the measurement of image focus, which is considerably affected by the texture information related to a scene. Measuring the degree of focus accurately in regions with poor lighting, smooth textures, or occlusions is difficult, leading to inaccurate depth estimation in these areas. Previous studies have proposed various optimization algorithms to increase the accuracy of depth estimation. These algorithms can generally be classified into three categories: designing satisfactory focus-measurement operators, optimizing the focus-measurement volume data to correct errors, and using all-in-focus images for guided filtering of the initial depth map. However, numerous factors, including scene texture, contrast, illumination, and window size, can affect the performance of focus-measurement operators, resulting in erroneous estimates in initial focus-measure volume data, resulting in inaccurate depth estimation. Effectiveness of the methods that optimize an initial depth map heavily depends on the accuracy of the initial depth map. Because the initial depth values may be estimated incorrectly owing to insufficient illumination, introducing considerably valid information to improve depth estimation through postprocessing is difficult. Therefore, intermediate optimization methods are ideal for improving the accuracy of a depth map. To solve the problem of inaccurate depth clues in regions showing weak texture and occlusion, this study proposes a novel method based on 3D adaptive-weighted total variation (TV) to optimize focus-measure volume data.MethodsThe proposed method consists of two key parts: 1) defining a structure consistency operator based on the prior geometric information related to different dimensions between the focal stack and focus-measure volume data, which is used to locate the depth boundary and area with high reliable depth clues to increase the accuracy of depth optimization; 2) incorporating the prior geometric information related to the scene hidden in the 3D focal stack and focus-measure volume data into the 3D TV regularization model. The structure of the image is measured using pixel-gradient values. Gradient jumps in the focal stack reflect changes in physical structure, while those in the focus-measure volume data reflect changes in focus level. When the physical structure and focus level exhibit considerable variations at the same position, the structure is consistent and corresponds to an area with reliable depth change. By measuring the structural consistency between the focal stack and focus measure, we can determine the positions exhibiting reliable depth clues and guide the optimization process related to the focus-measure data highly accurately. The traditional 2D TV optimization model has some edge-preserving ability while performing denoising. However, when the noise-gradient amplitude exceeds the edge-gradient amplitude, this model faces a dilemma between balancing denoising and preserving edge details. Based on the guided filtering method, the edge information of a reference image is used to denoise the target image, effectively resolving the dilemma. This leads to a weighted TV optimization model; however, when applying guided filtering to 2D images, the optimization information that can be introduced is limited. Therefore, we attempt to extend this method to a 3D image field. A weighted 3D TV regularization model can balance denoising and edge-preserving abilities high effectively owing to the rich information in 3D data. Herein, the process of optimizing the focus-measure data is modeled as a 3D weighted TV regularization method, and the adaptive weight is determined based on structural consistency.Results and DiscussionsFirst, an analysis is conducted on the selection of model parameters. We observe that adjusting these parameters can considerably impact the performance of the proposed algorithm, thereby optimizing the accuracy of depth estimation. Second, herein, a detailed analysis is conducted on the impact of structural consistency during the optimization process and the problems that may arise because of focusing solely on texture information for optimization. A comparative analysis is also performed with the introduction of 3D structural consistency. Finally, the proposed algorithm is tested on simulated and real image sequence datasets and the results are compared with those from two other methods: mutually guided image filtering (MuGIF) and robust focus volume (RFV). The proposed method computes 3D structural consistency, which is an additional dimension of information, as opposed to the MuGIF method, which uses consistent structural guidance filtering on inputs from all-in-focus and depth maps. The RFV method uses focal stacks to guide focus measure for optimizing depth estimation in 3D. Compared with the RFV method, the proposed method considers the property issues related to focal stack and focus measure and uses their consistent structure to guide optimization. Furthermore, three evaluation metrics are used to analyze and validate the three algorithms with respect to simulated data. The experimental results demonstrate that the proposed method exhibits better performance than the other methods, providing more accurate information for correcting the focusing measure process through 3D structural consistency. The proposed method not only preserves edge information but also preserves texture information with high accuracy and reduces errors in depth estimation.ConclusionsFocal stack contains physical color information of a scene, while focus measure contains textural and geometric structure information of the scene. In this study, we propose a method for measuring the structural consistency between the two to effectively locate the depth discontinuities. A structural consistency weighted TV model enhances the ability of the model to preserve edge information while avoiding the introduction of color information into a depth map. Thus, effectively addressing the problem of loss of depth clues related to focal-stack depth estimation in regions with weak texture and occlusion and increasing the accuracy of depth reconstruction. The computation of the L1 model of TV is relatively easy; however, this computation suffers from local distortion. Using highly advanced regularization terms may further improve the reconstruction effect. Future research needs to consider ways of incorporating increased data and investigating methods to improve the regularization term during the optimization process.

ObjectiveOptical coherence tomography (OCT) is employed as a safe and effective diagnostic tool for a variety of ophthalmic diseases due to its high resolution and non-invasive imaging, which is regarded as the "gold standard" in ophthalmic disease diagnosis. However, various kinds of noise, especially speckle noise, seriously affect the quality of retinal OCT images to reduce the contrast and resolution, which makes it difficult to segment and measure retinal sublayer thickness at the pixel level. Therefore, it is of significance to reduce the noise of retinal OCT images and retain structural details such as layering and edges of the images to the greatest extent. The deep learning-based noise reduction method shows advantages in image quality, especially in preserving edge details. However, for in vivo imaging, it is difficult to obtain a large number of multi-frame registration ground truth images, which affects the performance of the supervised learning method. Therefore, the realization of unsupervised denoising independent of ground truth images is vital in the clinical diagnosis of eye diseases.MethodsWe propose an unsupervised deep residual sparse attention network (DRSA-Net) based on the Noise2Noise training strategy for retinal OCT image denoising. DRSA-Net consists of local sparse attention block (LSAB), depth extraction block (DEB), global attention block (GAB), and residual block (RB). The TMI_2013OCT dataset publicly provided by Duke University is selected and preprocessed, and a total of 7800 Clean-Noisy and Noisy-Noisy image pairs are obtained. The proposed DRSA-Net is compared with the classical deep learning denoising networks U-Net and DnCNN from two aspects of qualitative visual evaluation and quantitative numerical evaluation and is also compared with the traditional BM3D algorithm. Then the denoising effects of three convolutional neural networks under supervised learning and unsupervised learning strategies are compared. Finally, generalization ability tests and network module ablation experiments are performed based on another public retinal OCT image dataset.Results and DiscussionsThe results of unsupervised training denoising (Fig. 3) show that the built model has better denoise and intra-layer fine structure preservation ability for retinal OCT images. U-Net-N2N tends to destroy the details and boundary of layers and introduces some fuzzy structures among layers. DnCNN-N2N brings degradation of layer boundary and blurring of the outer limiting membrane. The comparison between the results of supervised training and unsupervised training (Fig. 4) indicates that when ideal ground truth images cannot be provided, the denoised images of the supervised learning model have more noise, while the unsupervised learning model has a higher denoise degree and can provide clearer structures and edge information. The denoising numerical evaluation results of supervised learning and unsupervised learning (Table 1) show that compared with the original noise images, the supervised learning and unsupervised learning models realize great improvement in various evaluation indexes of the images. Additionally, compared with the traditional block matching algorithm BM3D, the denoising algorithm based on deep learning reduces the denoising time by two orders of magnitude. High-quality noise reduction of OCT images can be achieved within 1 s, and the proposed algorithm can get ahead of most evaluation indexes regardless of what kind of training strategy is adopted. The test results of generalization ability of unsupervised learning (Fig. 5) show that our proposed model has better generalization ability among different datasets, and can obtain a cleaner background than ground truth in terms of background denoise. In terms of structural information retention, it has clearer interlayer structures and more uniform layers. The results of ablation experiments on different modules of the denoising network proposed (Table 3) indicate that the combination of LSAB+DEB+GAB+RB is better in various evaluation indexes, which fully demonstrates the contribution of each module in the network structure to high-quality noise reduction.ConclusionsWe put forward an unsupervised depth residual sparse attention denoising algorithm independent of ground truth images to solve the noise interference in retinal OCT images and the difficulty of acquiring high-quality multi-frame average images in in vivo imaging. The attention mechanism is combined with sparse convolution kernel to complete the information mining between data efficiently and fully, and the Noise2Noise training strategy is adopted to complete the high-quality training with noise images, which achieves a high level of noise reduction and preserves the multi-layer structure information of retinal OCT images. The traditional denoising algorithm and the classical deep learning network are compared and analyzed from the visual evaluation and numerical evaluation including PSNR, SSIM, EPI, and ENL respectively. The denoising effect of supervised learning and unsupervised learning and the experimental results of the generalization ability test on the public retinal OCT image dataset show that the proposed noise reduction algorithm yields good results in various evaluation indexes and has strong generalization. Compared with supervised learning, unsupervised learning can still obtain better noise reduction performance under insufficient data sets.