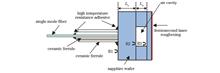

Objective

Pressure monitoring in high-temperature environments is essential in aerospace, chemical smelting, and the petroleum and power industries. Optical fiber sensors are passive, immune to electromagnetic interference, resistant to high temperature, and compact, so they can measure various parameters in high-temperature and other harsh environments. The optical fiber extrinsic Fabry-Pérot interferometer (EFPI) is a preferred technical approach to pressure measurement and has two typical structures: diaphragm-based and diaphragm-free. A diaphragm-free sensor relies on the refractive index of the gas in an open cavity changing linearly with ambient pressure. It can therefore measure only gas pressure, and its sensitivity is strongly affected by temperature. Diaphragm-based optical fiber EFPI pressure sensors made of various materials have been widely used for pressure measurement, and their operating temperature is determined mainly by the material of the pressure-sensing diaphragm. For example, silica-based EFPI pressure sensors are limited by softening of the glass diaphragm at high temperature, and their long-term operating temperature is generally below 800 ℃. With a melting point of 2045 ℃ and a wide spectral transmission range, sapphire is an ideal material for ultra-high-temperature optical fiber sensors. To measure pressure in high-temperature harsh environments, we propose and experimentally demonstrate a sapphire Fabry-Pérot (F-P) interferometer that operates at high temperature over a large pressure range. The sensor is fabricated by directly bonding three sapphire wafers: a sapphire substrate, a sapphire wafer with a through hole, and a pressure-sensitive sapphire wafer.

Methods

First, a femtosecond laser is used to slice the sapphire wafer.
The sapphire wafer is fixed on a six-axis micro-positioning stage, and the laser power is set to 30 mW with an attenuator. The laser beam is focused perpendicularly onto the wafer surface by a plano-convex lens with a focal length of 100 mm, and the stage scans the beam across the wafer at 5 mm intervals. Second, a through hole is machined in a 175 μm thick sapphire wafer. The laser power is set to 5 mW, the beam is focused by a 20× objective, and the laser scans the center of the wafer until the inner disk detaches. Third, the outer surface of the sapphire diaphragm is roughened. The laser power is reduced to 1 mW so that the diaphragm is roughened while its thickness changes as little as possible, and the surface is scanned line by line with a spacing of 50 μm. Finally, to improve the stability of the sensor at high temperature and high pressure, a direct bonding process for the sapphire wafers is designed. After RCA cleaning, the wafers are immersed in 85% (mass fraction) H3PO4 solution to remove residual oxides from the surface and then in diluted H2SO4 solution to form a hydrophilic layer. The wafers are bonded after being held at 1300 ℃ for 20 h, and pressure test systems are set up to investigate the pressure response of the sensor.

Results and Discussions

Figure 4(a) shows the EFPI interference signal collected by a white-light interferometric demodulator with a center wavelength of 1550 nm, and Fig. 4(b) shows its frequency spectrum. The reflection spectrum results from three-beam interference. The second peak is the F-P signal formed by the two surfaces of the sapphire substrate (R1, R2) and is used to measure temperature.
The third peak is the F-P signal formed by the front surface of the sapphire substrate and the front surface of the pressure-sensing sapphire diaphragm (R1, R3) and is used to measure pressure. The dominant frequency components are extracted with a Gaussian window, and the interference signals of the two cavities are recovered by inverse Fourier transform. The optical cavity length is then calculated by demodulating the phase of each interference signal. Ultra-high-pressure tests show that the pressure sensitivity of the sensor is 0.1253 μm/MPa over 0-30 MPa and that the sensor does not leak at 45 MPa. The sensitivity increases slightly with temperature, reaching 0.1322 μm/MPa at 700 ℃. As Fig. 9 shows, the resolution of the optical cavity length measurement is about 1.5 nm. Combined with the room-temperature pressure sensitivity, this gives a pressure resolution of 12 kPa (1.5 nm ÷ 0.1253 μm/MPa ≈ 0.012 MPa) for the ultra-high-pressure measurement system, i.e., a relative resolution of 0.04% FS (full scale) over 30 MPa.

Conclusions

In this study, we propose an optical fiber pressure sensor based on sapphire wafers processed by femtosecond laser. Sapphire wafers with through holes and roughened pressure-sensitive sapphire wafers are fabricated by femtosecond laser micromachining. Experimental results show that the sensor measures pressure over 25-700 ℃ and over a wide range of 0-30 MPa, and that it neither breaks nor leaks under an ultra-high pressure of 45 MPa. Because it withstands ultra-high pressure and high temperature and is intrinsically safe, the sensor can address the problem of in-situ pressure testing in harsh high-temperature, high-pressure environments.

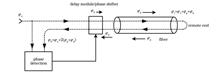

Objective

Antenna array technology overcomes the aperture limitation of single-antenna detection and improves microwave detection capability several- to dozens-fold, which is regarded as a revolutionary change in microwave detection. Stable transmission of optically carried microwave signals over fiber links enables long-distance distribution of microwave signals with the delay (or phase) stability that antenna arrays require, and it plays an irreplaceable role in new-generation microwave measurement technology based on multi-antenna coordination. The core problem of this technology is that the transmission delay fluctuates with environmental factors such as temperature variation and mechanical vibration. The key is therefore to measure and compensate for fiber delay variation accurately, so that the signal delivered to the remote end remains stable in delay or phase.

Methods

For a given phase-detection error δφ, the corresponding delay error is δτ = δφ/(2πf), so increasing the signal frequency f improves the accuracy of transmission delay measurement; it also weakens the influence of other noise sources. Transmission stability can therefore be improved by increasing the signal frequency (Fig. 2). Stable transmission first requires detecting the phase variation of the high-frequency signal with high sensitivity, so we propose a phase detection method based on dual optical and electrical heterodyne mixing (Fig. 3). At the local end, the RF signal to be transmitted is electrically frequency-shifted and modulated onto an optical carrier to generate a reference. This reference is optically mixed with the signal that is optically frequency-shifted at the remote end and returned to the local end, and the resulting intermediate-frequency (IF) signals are detected by a low-speed photodiode. Finally, the two IF signals are mixed electrically to obtain the phase variation of the returned signal, which represents the transmission delay variation.
This method significantly improves receiving sensitivity and phase detection precision. High-precision control of the phase of high-frequency microwave signals, or of the optical delay, is another key technique for stable transmission. To improve phase control precision in phase-stable transmission, we put forward a high-frequency phase control method based on single-sideband modulation: single-sideband modulation adds the phase of a MHz-level IF signal to the high-frequency signal, so the phase of the high-frequency signal can be controlled accurately by controlling the IF signal. To achieve high-precision optical delay control in time-stable transmission, we adopt a cascaded optical delay control method, in which a high-precision (fs-level), small-range (ps-level) piezoelectric fiber stretcher is connected in series with a medium-precision (ps-level), large-range (ns-level) motorized tunable delay line, achieving high delay compensation accuracy, speed, and range.

Results and Discussions

Based on the above transmission scheme and key techniques, we build phase-stable and time-stable optically carried microwave transmission systems. In the phase-stable system, the transmitted signal frequency is 100 GHz, and phase detection by dual optical and electrical mixing and phase control by single-sideband modulation are adopted (Figs. 6 and 7). At an averaging time of 10000 s, the frequency stabilities after 100, 120, 140, and 160 km of transmission are 1×10⁻¹⁷, 1.2×10⁻¹⁷, 4×10⁻¹⁷, and 6×10⁻¹⁷, respectively (Fig. 8). The Allan deviation keeps decreasing linearly with increasing averaging time, indicating excellent long-term stability. The corresponding root mean square (RMS) time jitter values are 33, 37, 52.5, and 62 fs, respectively.
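The Allan deviation quoted above is computed from time-error (phase) samples of the transmitted signal. The following is a minimal sketch of the standard overlapping Allan deviation estimator in Python with NumPy. The function name and the constant-drift test signal are illustrative assumptions, and this is not the authors' analysis code.

```python
import numpy as np


def overlapping_adev(x, tau0, m):
    """Overlapping Allan deviation at tau = m * tau0.

    x    : time-error (phase) samples in seconds, taken every tau0 seconds
    tau0 : sampling interval in seconds
    m    : averaging factor (integer >= 1)
    """
    x = np.asarray(x, dtype=float)
    n = len(x)
    if m < 1 or n < 2 * m + 1:
        raise ValueError("need m >= 1 and at least 2*m + 1 samples")
    tau = m * tau0
    # Second differences of the time error with spacing m:
    # x[i + 2m] - 2 x[i + m] + x[i] for every admissible start index i.
    d2 = x[2 * m:] - 2.0 * x[m:n - m] + x[:n - 2 * m]
    return float(np.sqrt(np.mean(d2 ** 2) / (2.0 * tau ** 2)))
```

A constant fractional-frequency drift D gives the known result σ_y(τ) = Dτ/√2, which makes a convenient check. For white phase noise the estimate falls roughly as 1/τ, which is the kind of steady decrease with averaging time described for Fig. 8.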
In the time-stable system, the transmitted signal frequency is 25.00 GHz, and phase detection by dual optical and electrical mixing and delay control by cascaded optical delay lines are used to stabilize the fiber transmission delay (Figs. 9 and 10). Over 21 km, the phase variation of the 25.00 GHz signal measured by a vector network analyzer is below 0.09° (RMS) within 3800 s, corresponding to a time error of 10 fs (RMS), since (0.09°/360°) × (1/25 GHz) = 10 fs. The high-precision optical delay control greatly improves the stability of the transmission system.

Conclusions

Stable transmission of optically carried microwave signals is currently the most effective means of signal synchronization in antenna array systems. After analyzing the relationship between transmission stability and signal frequency, we propose increasing the signal frequency to achieve high transmission stability. High-sensitivity phase detection and high-precision phase and delay control are realized by dual optical and electrical mixing, single-sideband modulation, and cascaded optical delay control. Based on these techniques, we demonstrate phase-stable and time-stable transmission systems. In phase-stable transmission, the frequency stability of the 100 GHz signal after 160 km reaches 6×10⁻¹⁷ at 10000 s averaging, with a time jitter of 62 fs. In time-stable transmission, the RMS time jitter is 10 fs within 3800 s over 21 km. Our study provides an effective technical approach to signal synchronization in multi-antenna cooperative microwave measurements such as antenna array systems.
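Two pieces of arithmetic behind the time-stable results can be checked with a short Python sketch: converting an RF phase error into a time error, τ = φ/(360°·f), and dividing a delay correction between the two cascaded actuators. The split rule, the 1 ps coarse step, and the 5 ps fine range are hypothetical values chosen to match the ps-level precision and ps-level range stated above. The actual control loop is not described in the text.

```python
def phase_to_time_fs(phase_deg, freq_hz):
    """Time error (fs) equivalent to an RF phase error (degrees) at freq_hz."""
    return phase_deg / 360.0 / freq_hz * 1e15


def split_delay_ps(delay_ps, coarse_step_ps=1.0, fine_range_ps=5.0):
    """Split a delay correction between two cascaded actuators (hypothetical values).

    The motorized delay line (coarse step, large range) takes the nearest multiple
    of its step; the piezoelectric fiber stretcher (fine, small range) takes the
    residual, which must fit inside its +/- fine_range_ps / 2 window.
    """
    coarse = round(delay_ps / coarse_step_ps) * coarse_step_ps
    fine = delay_ps - coarse
    if abs(fine) > fine_range_ps / 2:
        raise ValueError("residual exceeds the fiber stretcher range")
    return coarse, fine


# 0.09 deg RMS at 25 GHz corresponds to about 10 fs RMS.
print(phase_to_time_fs(0.09, 25e9))
# The same 0.1 deg phase error is a 4x smaller time error at 100 GHz than at 25 GHz.
print(phase_to_time_fs(0.1, 100e9), phase_to_time_fs(0.1, 25e9))
# A 1234.56 ps correction: 1235 ps on the delay line, -0.44 ps on the stretcher.
print(split_delay_ps(1234.56))
```

The second print illustrates the frequency-scaling argument in the Methods: a fixed phase-detection error maps to a smaller delay error at a higher carrier frequency.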

Objective

In optical communication, measuring and analyzing high-speed optical signals is essential for developing high-speed optical communication devices, equipment, and systems. At present, time-domain measurement of high-speed optical signals commonly relies on optoelectronic hybrid broadband oscilloscopes, whose signal-processing circuits limit the bandwidth to about 90 GHz and which require complex clock synchronization circuitry. These oscilloscopes are also not transparent to signal rate and modulation format, have complex system architectures, and are expensive. To overcome this electronic bottleneck, we develop an optical sampling oscilloscope prototype based on optical-domain sampling. The oscilloscope uses a software synchronization algorithm, and its measurable signal bandwidth reaches the THz level without high-speed photodetectors, which relaxes the bandwidth requirements of the clock synchronization circuit and subsequent processing circuits and thus overcomes the electronic bottleneck. However, the software synchronization algorithm we adopted previously, based on the chirp z-transform (CZT), is computationally complex, which limits processing speed. To improve signal processing efficiency and the practicality of the instrument, a less complex software synchronization algorithm suitable for optical sampling oscilloscopes is needed.

Methods

In general, after the optical signal is down-converted by asynchronous optical sampling with a fixed frequency offset, an eye diagram reconstruction algorithm based on software synchronization can be used to measure parameters of high-speed optical data signals, including eye diagrams, constellation diagrams, and signal statistics (Fig. 2).
The key to the whole software-synchronized eye diagram reconstruction algorithm is to obtain accurately, from the sampled digital signal, the equivalent sampling time step Δt of the asynchronous down-converted optical sampling. To reduce the complexity of software synchronization, and building on the CZT-based method previously proposed by our group, we put forward a software synchronization method based on the zoom fast Fourier transform (ZoomFFT). The algorithm has two steps: coarse synchronization based on the FFT and fine synchronization based on ZoomFFT (Figs. 4-6). After FFT coarse synchronization, ZoomFFT refines the spectrum near the peak of the amplitude spectrum to locate the peak frequency more accurately, from which a more accurate Δt is obtained for fine synchronization. Because the low-pass filter in ZoomFFT is replaced with time-domain averaging, ZoomFFT has lower computational complexity than CZT.

Results and Discussions

First, we measure four-level pulse amplitude modulation (PAM4) and quadrature phase-shift keying (QPSK) signals at different rates with the optical sampling oscilloscope prototype. For PAM4, signals at 6.259 GBaud and 9.696 GBaud are transmitted. For comparison with the down-converted samples, a high-speed broadband digital sampling oscilloscope with a sampling rate of 50 GSa/s and a bandwidth of 20 GHz oversamples the PAM4 signals at both rates. The eye diagrams measured by the software-synchronized optical sampling oscilloscope agree well with those from the oversampling broadband oscilloscope (Figs. 8-9). For QPSK, signals at 10 GBaud and 20 GBaud are transmitted.
Using measurements from an Agilent real-time oscilloscope as a reference, the software-synchronized optical sampling oscilloscope adaptively measures the eye diagrams and constellation diagrams of QPSK signals at different symbol rates (Figs. 11-12). We also investigate the effect of background noise in the prototype by measuring the Q value while varying the input optical power. A 3 dB decrease in Q corresponds to a reduction of about 10.3 dB in input optical power, so the influence of background noise is small (Fig. 14). Notably, the proposed ZoomFFT-based software synchronization algorithm reduces complexity by 68.8% compared with the CZT algorithm.

Conclusions

Building on our previous CZT-based software synchronization algorithm, we propose a software synchronization algorithm based on ZoomFFT, which reduces complexity by 68.8% compared with the CZT algorithm. With the developed optical sampling oscilloscope prototype, optical PAM4 signals at 6.259 GBaud and 9.696 GBaud and optical QPSK signals at 10 GBaud and 20 GBaud are measured. The results, compared with those of a broadband electrical sampling oscilloscope with a sampling rate of 50 GSa/s and a bandwidth of 20 GHz, verify that the optical sampling oscilloscope can adaptively measure intensity-modulated and phase-modulated signals at different rates. We also investigate the effect of background noise in the optical sampling oscilloscope: the measured Q factor decreases by 3 dB only when the input optical power drops by 10.3 dB, indicating that the influence of background noise is small.
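The two-step FFT/ZoomFFT peak search described in the Methods can be illustrated with a minimal NumPy sketch. It rests on several assumptions: the sampled record contains one dominant spectral line whose frequency is what the synchronization needs; the low-pass filter of ZoomFFT is replaced by averaging non-overlapping blocks of `decim` samples, as the text describes; and the conversion from the refined peak frequency to Δt is omitted because it depends on the sampling configuration. The function names and parameters are hypothetical.

```python
import numpy as np


def coarse_peak(x, fs):
    """Coarse step: peak of the plain FFT magnitude (positive frequencies)."""
    x = np.asarray(x, float) - np.mean(x)
    spec = np.abs(np.fft.rfft(x))
    spec[0] = 0.0  # ignore any residual DC
    k = int(np.argmax(spec))
    return k * fs / len(x), fs / len(x)  # peak frequency, FFT bin width


def zoom_fft_peak(x, fs, f_center, half_span, decim, n_fft):
    """Fine step: ZoomFFT around f_center with block averaging as the low-pass.

    1) mix f_center down to 0 Hz, 2) average non-overlapping blocks of `decim`
    samples (time-domain averaging acts as a crude low-pass plus decimation),
    3) take a zero-padded FFT of the decimated record, 4) search |f| <= half_span.
    """
    x = np.asarray(x, float) - np.mean(x)
    n = np.arange(len(x))
    baseband = x * np.exp(-2j * np.pi * f_center * n / fs)
    usable = (len(baseband) // decim) * decim
    y = baseband[:usable].reshape(-1, decim).mean(axis=1)
    spec = np.abs(np.fft.fft(y, n_fft))
    freqs = np.fft.fftfreq(n_fft, d=decim / fs)
    spec[np.abs(freqs) > half_span] = 0.0  # stay near the coarse estimate
    return f_center + freqs[int(np.argmax(spec))]
```

With `n_fft` equal to the record length, the zoomed grid spacing is `fs / (decim * n_fft)`, i.e. `decim` times finer than the plain FFT bin. The extra work is one complex mix, one block average, and one FFT, which is the kind of saving the text attributes to replacing the low-pass filter with time-domain averaging.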

Significance

With the rapid development of cloud computing, the Internet of Things, and 5G, global network traffic has grown exponentially. This surge in traffic within and between data centers drives an ever-increasing demand for high-speed, high-performance optical fiber transmission systems for short- and medium-reach links. Currently, intensity-modulation and direct-detection (IMDD) systems employing four-level pulse amplitude modulation (PAM4) are the primary solution for cost-sensitive short- and medium-reach transmission. IMDD systems feature a simple structure, low power consumption, and low cost. However, they use only the amplitude dimension of the optical carrier to carry information and leave the other optical dimensions untapped. In addition, the limited receiver sensitivity of IMDD systems becomes a challenge when higher-order modulation formats are used to improve spectral efficiency. Coherent detection systems carry information on the polarization, phase, and amplitude of the optical carrier and therefore offer higher receiver sensitivity and spectral efficiency. However, applying them to short- and medium-reach scenarios brings challenges, including higher system complexity, higher power consumption of the digital signal processing (DSP) chips used in coherent receivers, and the need for a high-performance narrow-linewidth laser as the local oscillator (LO). These factors limit the adoption of coherent detection in such scenarios. To this end, researchers have explored simplified coherent schemes for new-generation short- and medium-reach transmission systems, including self-homodyne coherent detection (SHCD) and differential self-coherent detection (DSCD).
These schemes balance system performance and complexity: they offer higher receiver sensitivity than IMDD systems with lower complexity and cost than standard coherent detection. Among them, the SHCD scheme has attracted considerable attention. An SHCD system splits the laser power at the transmitter between the transmitted signal and a remote LO, eliminating the need for a narrow-linewidth LO laser at the receiver. This allows SHCD systems to use uncooled large-linewidth lasers while retaining high receiver sensitivity, and extensive research has been devoted to advancing the scheme. The DSCD scheme, based on a differential modulation format, provides an alternative. It demodulates the signal self-coherently using the relative phase between adjacent symbols. A notable advantage is its high tolerance to laser linewidth, which removes the need for an LO and for carrier phase recovery at the receiver. Consequently, large-linewidth lasers can be used for coherent detection, reducing system cost while improving receiver sensitivity. Unlike SHCD, DSCD also avoids the performance degradation caused by mismatched transmission paths of the signal and the remote LO. Our analysis shows that in systems where receiver electrical noise is the dominant impairment, the theoretical performance of DSCD is equivalent to that of SHCD, and that DSCD outperforms SHCD in systems dominated by optical noise from optical amplifiers. DSCD technology therefore provides a promising solution for high-speed, high-performance optical fiber transmission systems.
Its advantages include high receiver sensitivity, low implementation cost, and low power consumption, making it an appealing choice in the field.

Progress

We review and compare three optical transmission schemes in terms of receiver sensitivity, implementation complexity, and performance in optical power-limited and optical signal-to-noise ratio (OSNR)-limited regimes: IMDD with PAM4, SHCD with quadrature phase shift keying (QPSK), and DSCD with differential quadrature phase shift keying (DQPSK). In recent years, IMDD systems have struggled to increase transmission rates, while SHCD systems have gained attention as a low-cost, high-performance solution. Sowailem's group at McGill University demonstrated a bidirectional SHCD scheme using optical circulators for short-reach systems. Deming Liu's group at Huazhong University of Science and Technology presented an SHCD system using a large-linewidth distributed feedback (DFB) laser as a downstream transmission solution for optical access networks. Ming Tang's group at Huazhong University of Science and Technology demonstrated real-time 400 Gbit/s bidirectional SHCD transmission for data center interconnects using low-cost uncooled large-linewidth DFB lasers. However, practical SHCD systems still face challenges. Bidirectional transmission of signals and remote LOs requires additional optical circulators in SHCD transceivers (Table 2), and the sensitivity of SHCD systems to transmission path differences increases with laser linewidth (Fig. 4). In contrast, DSCD systems are highly tolerant of laser linewidth and unaffected by transmission path differences. In optical power-limited systems, the DSCD-DQPSK system performs comparably to the SHCD-QPSK system with the optimal power splitting ratio (Fig. 6) and significantly better than the IMDD-PAM4 system (Fig. 7). In OSNR-limited systems, optical noise inevitably degrades the remote LO, which affects the optimal laser power splitting ratio (Fig. 8) and the receiver sensitivity of the SHCD system (Fig. 9). A narrowband optical filter on the remote LO can remove part of this noise and improve performance, but at additional cost. By contrast, in OSNR-limited systems the DSCD-DQPSK system achieves better receiver sensitivity than the SHCD-QPSK system without an additional narrowband optical filter.

Conclusions and Prospects

Both SHCD and DSCD schemes significantly improve receiver sensitivity compared with IMDD, at the price of higher DSP complexity and power consumption for coherent detection. In addition, SHCD systems face challenges from transmission path differences and noise in the remote LO, and addressing them increases implementation cost. Further reducing DSP power consumption, system complexity, and cost is therefore an important direction for future research on simplified self-coherent schemes. Nevertheless, compared with IMDD-PAM4 and SHCD-QPSK systems, the proposed DSCD-DQPSK system is inherently advantageous and promising for short- and medium-reach optical fiber transmission.
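The linewidth tolerance of DSCD comes from detecting the phase difference between adjacent symbols, so the laser phase common to both symbols largely cancels. The toy NumPy model below is an idealized digital-domain equivalent of a self-coherent DQPSK receiver, not the receiver studied here. It models only Wiener laser phase noise (no amplifier or electrical noise, no dispersion), and the linewidth-symbol-period product of 1e-4 (for example, a 1 MHz linewidth at 10 GBaud) is an assumed value.

```python
import numpy as np


def dqpsk_link(n_symbols=10000, linewidth_times_ts=1e-4, seed=0):
    """Toy DSCD-DQPSK link impaired only by laser phase noise.

    Returns (transmitted data symbols 1..N-1, symbols recovered by
    differential detection). Symbol 0 serves as the phase reference.
    """
    rng = np.random.default_rng(seed)
    data = rng.integers(0, 4, n_symbols)
    # Differential encoding: information sits in the phase change between symbols.
    tx_phase = np.cumsum(data) * (np.pi / 2)
    # Wiener laser phase noise: per-symbol increment variance 2*pi*linewidth*Ts.
    sigma = np.sqrt(2 * np.pi * linewidth_times_ts)
    laser_phase = np.cumsum(rng.normal(0.0, sigma, n_symbols))
    rx = np.exp(1j * (tx_phase + laser_phase))
    # Differential (self-coherent) detection: multiply by the conjugate of the
    # previous symbol so that the slowly varying laser phase drops out.
    z = rx[1:] * np.conj(rx[:-1])
    decided = np.mod(np.round(np.angle(z) / (np.pi / 2)), 4).astype(int)
    return data[1:], decided
```

Raising `linewidth_times_ts` by a few orders of magnitude eventually produces symbol errors, because the phase drift between adjacent symbols then approaches the π/4 decision margin. No LO or carrier phase recovery appears anywhere in the model.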

Significance

Holographic three-dimensional (3D) display technology can reconstruct the wavefront of 3D objects and provide all the depth cues required by human eyes, which has made it a research hotspot in 3D display. Unlike optical holography, computer-generated holography simulates hologram recording numerically and uses a refreshable spatial light modulator instead of holographic recording material as the hologram carrier. These characteristics make computer-generated holography an ideal technology for real-time holographic 3D display, with broad application prospects in military navigation, industrial manufacturing, medicine, education, and entertainment. At present, the development of real-time holographic 3D display is hindered by the huge data volume of 3D objects, the insufficient modulation capacity of spatial light modulators, and the limited display performance of holographic 3D display systems. To overcome these problems, researchers have made many innovations in both algorithms and hardware.

Progress

We review the progress of real-time holographic 3D display. First, the basic principle and development history of holography are outlined. Next, fast calculation methods for computer-generated holograms (CGHs) and wavefront coding methods for current spatial light modulators are introduced in detail. Then, the contribution of deep learning to real-time holographic 3D display is discussed, and some typical holographic display systems are introduced. Finally, the future development of real-time holographic 3D display is discussed. Fast calculation methods can be classified into algorithm optimization and hardware acceleration. Algorithm optimization mainly simplifies the computation and removes redundant calculation in traditional methods, including point-based, polygon-based, and layer-based methods.
Hardware acceleration mainly speeds up CGH calculation by designing fast algorithms adapted to the hardware platform and by optimizing hardware system architectures. Wavefront coding methods for current spatial light modulators fall mainly into iterative and non-iterative methods. Iterative methods compute the desired phase-only hologram by iterating between the image plane and the hologram plane, or over pixels in the hologram plane, and are therefore time-consuming. Non-iterative methods analytically convert the diffracted complex wavefront into a distribution with constant amplitude. Compared with iterative methods, non-iterative methods are more efficient and better suited to real-time holographic 3D display. In recent years, deep learning has also been introduced into computer-generated holography. A trained neural network performs CGH calculation and wavefront coding, showing great potential for real-time holographic 3D display. Furthermore, with advances in algorithms, devices, and systems, holographic display systems are developing toward large size, large field of view, and real-time color display.

Conclusions and Prospects

Real-time holographic 3D display is the ultimate goal of holographic 3D display. Although there is still a long way to go, there is great potential for further development in both software (algorithms) and hardware (devices and systems). Holographic 3D displays are expected eventually to achieve real-time display, gradually enter the market and daily life, and bring revolutionary changes to our lives.
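As an example of the iterative class described above, the classic Gerchberg-Saxton algorithm alternates between the hologram plane and the image plane, imposing a phase-only constraint in one and the target amplitude in the other. The sketch assumes Fraunhofer (far-field) propagation modeled by a single 2D FFT and uses a hypothetical square target. It is a textbook baseline, not any specific method surveyed in the review.

```python
import numpy as np


def gerchberg_saxton(target_amp, n_iter=50, seed=0):
    """Phase-only hologram for a far-field (Fourier-plane) target.

    Returns the hologram phase and the image-plane amplitude error
    measured at the start of each iteration.
    """
    rng = np.random.default_rng(seed)
    target = np.asarray(target_amp, float)
    # Scale the target so its energy matches a unit-amplitude hologram;
    # norm="ortho" makes the FFTs energy-preserving.
    target = target * np.sqrt(target.size / np.sum(target ** 2))
    holo = np.exp(1j * 2 * np.pi * rng.random(target.shape))
    errors = []
    for _ in range(n_iter):
        image = np.fft.fft2(holo, norm="ortho")         # hologram -> image plane
        errors.append(np.linalg.norm(np.abs(image) - target))
        image = target * np.exp(1j * np.angle(image))   # impose target amplitude
        back = np.fft.ifft2(image, norm="ortho")        # image -> hologram plane
        holo = np.exp(1j * np.angle(back))              # phase-only constraint
    return np.angle(holo), np.array(errors)
```

With the orthonormal FFT, the recorded image-plane error does not increase from one iteration to the next (the error-reduction property of this alternating-projection scheme). Each iteration still costs two full-size FFTs, which is why iterative coding is slow for real-time use.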

Significance

Infrared thermal imaging is widely used in military and civilian fields, and real-time digital image processing, a key link in infrared imaging systems, has become an important part of thermal imaging research in China and abroad. With the development of infrared focal plane arrays (IRFPAs) and intelligent image processing, new thermal imaging modes and corresponding image processing methods have continued to emerge and have achieved effective results.

Progress

We review our team's research on, and applications of, new thermal imaging modes and their image processing technologies.

1) A non-uniformity correction (NUC) method combining scene-based temporal high-pass filtering and spatial low-pass filtering, namely ITHP & GM, is proposed. It effectively corrects the "water streak" non-uniformity noise that existing algorithms fail to handle, and it has been ported to an FPGA hardware platform and applied in a cooled mid-wave infrared thermal imaging camera.
2) Division-of-focal-plane infrared polarizer arrays with four-polarization and three-polarization-plus-one-intensity layouts are designed and fabricated, and they are coupled to and imaged with a cooled MW-IRFPA core (320 pixel×256 pixel, 25 μm) and an uncooled LW-IRFPA core (640 pixel×512 pixel, 17 μm). A correction model for front-polarizer-based polarization thermal imaging systems is proposed, which effectively reduces the radiation and reflection effects of the polarizer in the optical path.
3) An overclocked high dynamic range (HDR) thermal imaging experimental system based on a 256×256 long-wave IRFPA is developed and combined with a cascaded HDR image fusion method (multi-integration-time image fusion followed by detail enhancement). Real-time HDR thermal imaging with a delay of less than 40 ms is achieved, so targets can be observed and identified normally against strongly radiating backgrounds such as the sun, decoy flares, and flames.
4) A bionic compound-eye thermal imaging mode with partially overlapping fields of view of four apertures and four/five apertures is proposed. A four-aperture bionic compound-eye thermal imaging experimental system based on 640×480 and 80×80 uncooled IRFPAs is built; it achieves variable-spatial-resolution imaging that combines large-field-of-view search with high-resolution imaging of the central field of view, and it verifies the characteristics and effectiveness of bionic compound-eye thermography.
5) The TIV-Net algorithm for converting thermal infrared images into visible color images is proposed. A dataset of 75000 image pairs is built, and real-time processing at no less than 20 Hz is achieved on platforms such as vehicles and drones.
The results show that deep learning can convert day and night thermal images into natural-color visible-light images in real time, which effectively improves the day-and-night situational awareness of human observers using thermal imagers.

Conclusions and Prospects

We review our team's research progress in new thermal imaging modes and image processing technologies, including the NUC method combining scene-based temporal high-pass and spatial low-pass filtering, the correction model for division-of-focal-plane infrared polarizer arrays for infrared polarization imaging, the front-polarizer-based polarization thermography system, the overclocked HDR thermal imaging experimental system with a long-wave IRFPA, the bionic compound-eye thermal imaging mode and its experimental system with partially overlapping fields of view of four apertures and four/five apertures, and the TIV-Net algorithm converting thermal infrared images into visible color images. These studies have achieved innovative technical breakthroughs, some of which have already been applied; they show broad application prospects and point to important directions for further research.
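The idea behind the first NUC item, combining temporal high-pass and spatial low-pass filtering in a scene-based estimate, can be illustrated with a generic sketch. The internals of ITHP & GM are not given here, so this is not that algorithm. It is a hypothetical minimal estimator that treats the spatially high-frequency part of each frame, averaged recursively over time, as the fixed-pattern offset. The box filter, `alpha`, `k`, and the column-stripe noise used in the check are all assumptions.

```python
import numpy as np


def box_blur(img, k=9):
    """k x k box filter with reflect padding (k odd); a stand-in spatial low-pass."""
    pad = k // 2
    kernel = np.ones(k) / k

    def smooth(v):
        return np.convolve(v, kernel, mode="valid")

    out = np.pad(np.asarray(img, float), pad, mode="reflect")
    out = np.apply_along_axis(smooth, 0, out)  # filter down each column
    out = np.apply_along_axis(smooth, 1, out)  # then along each row
    return out


class TemporalHighPassNUC:
    """Generic scene-based offset correction (hypothetical, not ITHP & GM).

    The fixed-pattern offset is estimated as a recursive temporal average of
    the spatially high-pass part of each frame and subtracted from the frame.
    """

    def __init__(self, shape, alpha=0.05, k=9):
        self.offset = np.zeros(shape)
        self.alpha = alpha  # temporal smoothing factor (hypothetical value)
        self.k = k          # spatial box size (hypothetical value)

    def correct(self, frame):
        frame = np.asarray(frame, float)
        high_spatial = frame - box_blur(frame, self.k)
        self.offset = (1.0 - self.alpha) * self.offset + self.alpha * high_spatial
        return frame - self.offset
```

Fine scene detail that stays still for many frames would also be absorbed into this offset estimate (ghosting). This is one reason practical scene-based NUC methods add safeguards beyond this simple combination.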

Significance

In recent years, advances in computing software and hardware have enabled artificial intelligence (AI) models to approach or surpass human performance on perception tasks. However, to develop mature AI systems that comprehensively understand the world, models must be able to generate visual concepts rather than merely recognize them, because creation and customization require a thorough understanding of high-level semantics and of every detail of each generated object.

From an applied perspective, AI models capable of visual understanding and generation will significantly promote progress across many industries. For example, visual generative models can be used to colorize and restore old black-and-white photos and films; enhance and remaster old videos in high definition; synthesize real-time virtual anchors, talking faces, and AI avatars; add special effects to personalized videos on short-video platforms; stylize users' portraits and input images; and composite movie special effects and render scenes. Research on the theories and methods of image and video generation therefore has significant theoretical value and industrial application value.

Progress

We first provide a comprehensive overview of existing generative frameworks, including generative adversarial networks (GANs), variational autoencoders (VAEs), flow models, and diffusion models, as summarized in Fig. 5. A GAN obtains a good generator through adversarial training, in which a generator and a discriminator compete with each other. A VAE consists of an encoder and a decoder and is trained by variational inference so that the decoded distribution approximates the real data distribution.
A flow model composes a family of invertible mappings with a simple prior to build an invertible transformation between the real data distribution and the prior distribution. Unlike GANs and VAEs, flow models are trained directly by maximum likelihood estimation. Recently, diffusion models have emerged as a powerful class of visual generative models, achieving state-of-the-art synthesis results on visual data. A diffusion model decomposes image generation into a sequence of denoising steps starting from a Gaussian prior. Because it avoids adversarial training, its training is more stable, and it has been successfully deployed in large-scale pre-trained generation systems.
We then review recent state-of-the-art advances in image and video generation and discuss their merits and limitations; Fig. 6 gives an overview of image and video generation models and their classification. Work on pre-trained text-to-image (T2I) generation studies how to pre-train a T2I foundation model on large-scale datasets. Among these T2I foundation models, Stable Diffusion has become a widely used backbone for image/video customization and editing owing to its impressive performance and scalability. Prompt-based image editing methods use a pre-trained T2I foundation model, e.g., Stable Diffusion, to edit a generated or natural image according to input text prompts. Because large-scale, high-quality video datasets are hard to collect and the computational cost is high, research on video generation still lags behind image generation. Following the success of text-to-image diffusion models, some works, e.g., Video Diffusion Models, Imagen Video, VIDM, and PVDM, train a video diffusion model from scratch on enormous amounts of video data to obtain a video generation foundation model analogous to Stable Diffusion.
Another line of work uses pre-trained image generators, e.g., Stable Diffusion, to provide a content prior for video generation and learns only the temporal dynamics from video, which significantly improves training efficiency.
Finally, we discuss the drawbacks of existing image and video generative methods, such as misalignment between input prompts and generated images/videos, propose feasible strategies for improving these models, and outline promising future research directions. These contributions help advance visual generative modeling and realize the potential of AI systems to generate visual concepts.
Conclusions and Prospects
With the rapid evolution of diffusion models, artificial intelligence has shifted significantly from perception to creation. AI can now generate perceptually realistic and harmonious data and even supports visual customization and editing based on input conditions. In light of this progress, we offer a prospect for the potential future form of AI: equipped with both perceptual and cognitive abilities, AI models could build their own open worlds, enabling people to realize the idea of "what they think is what they get" without being constrained by real-life conditions. In such an open environment, for example, training AI models would no longer be restricted by data collection, which would reshape many existing machine learning paradigms; techniques such as transfer learning (domain adaptation) and active learning may become less important. AI might achieve self-interaction, self-learning, and self-improvement within the open world it creates, ultimately reaching higher levels of intelligence and profoundly transforming human lifestyles.
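The denoising-diffusion formulation summarized above rests on a fixed forward noising process whose marginals have a closed form. A minimal sketch in the style of DDPM (Ho et al.), assuming a linear variance schedule from 1e-4 to 0.02 over 1000 steps (common defaults, not values from this paper): with ᾱ_t = ∏(1 − β_s), a noisy sample is x_t = √ᾱ_t·x_0 + √(1 − ᾱ_t)·ε, and the denoising network (not shown) is trained to predict ε from x_t and t. Generation then runs the learned denoiser backward, starting from pure Gaussian noise.

```python
import math
import random
from typing import List, Sequence, Tuple


def linear_beta_schedule(num_steps: int = 1000, beta_start: float = 1e-4,
                         beta_end: float = 0.02) -> List[float]:
    """Per-step noise variances beta_t, increasing linearly."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas: Sequence[float]) -> List[float]:
    """alpha_bar_t = prod_{s<=t} (1 - beta_s): how much signal survives to step t."""
    alpha_bars, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        alpha_bars.append(prod)
    return alpha_bars


def q_sample(x0: Sequence[float], t: int, alpha_bars: Sequence[float],
             rng: random.Random) -> Tuple[List[float], List[float]]:
    """Draw x_t ~ q(x_t | x_0) in one shot; also return the noise (the regression target)."""
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, noise)]
    return xt, noise
```

Because ᾱ_t shrinks toward zero, x_T is almost pure noise, which is why sampling can start from a Gaussian prior.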

Significance
Single object tracking (SOT) is one of the fundamental problems in computer vision and has received extensive attention from researchers and industry worldwide owing to its important applications in intelligent video surveillance, human-computer interaction, autonomous driving, military target analysis, and other fields. Given a video sequence and the initial state of the target in the first frame (usually a bounding box), an SOT method must predict the location and size of the target in subsequent frames accurately and in real time. Unlike object detection, tracking does not restrict the target to any predefined category, and tracking scenes are complex and diverse, involving challenges such as target scale changes, occlusion, motion blur, and target disappearance. Tracking targets accurately, robustly, and in real time is therefore an extremely challenging task.
Mainstream tracking methods fall into three categories: discriminative correlation filter (DCF)-based methods, Siamese network-based methods, and Transformer-based methods. The accuracy and robustness of DCF methods fall far short of practical requirements, and with advances in deep learning hardware, their advantage of running in real time on mobile devices no longer holds. Meanwhile, deep learning has developed rapidly in recent years as computing performance and dataset sizes have grown; deep learning theory, deep backbone networks, attention mechanisms, and self-supervised learning have all strongly driven the development of tracking methods. Deep learning-based SOT methods can exploit large-scale datasets for end-to-end offline training to achieve real-time, accurate, and robust tracking.
We therefore provide an overview of deep learning-based object tracking methods.
Several reviews of tracking methods already exist, but they do not cover Transformer-based tracking methods. Building on existing work, we therefore introduce the latest achievements in the field. Unlike existing reviews, we divide tracking methods into two categories by architecture, namely Siamese network-based two-stream methods and Transformer-based one-stream methods, and analyze both architectures in detail, focusing on their principles, components, limitations, and development directions. Because datasets are the cornerstone of method training and evaluation, we also summarize the mainstream deep learning-based SOT datasets, describe the evaluation methods and metrics used on them, and summarize the performance of various methods on these datasets. Finally, we analyze future trends in video object tracking from a macro perspective to provide a reference for researchers.
Progress
Deep learning-based tracking methods can be divided by architecture into Siamese network-based two-stream methods and Transformer-based one-stream methods. The essential difference is that a two-stream method uses a Siamese (weight-shared) backbone for feature extraction and a separate module for feature fusion, whereas a one-stream method uses a single-stream backbone for both feature extraction and fusion.
The Siamese network-based two-stream method formulates tracking as a similarity-matching problem between the target template and the search region and consists of three basic modules: feature extraction, feature fusion, and a tracking head.
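The similarity-matching formulation can be illustrated with the cross-correlation used in SiamFC-style trackers. A minimal sketch, assuming single-channel 2D feature maps and an identity stand-in for the shared backbone (a real tracker applies the same CNN weights to both inputs and correlates multi-channel features); the function names are hypothetical. The template features slide over the search-region features, and the peak of the response map gives the target position.

```python
from typing import Callable, List, Tuple

FeatureMap = List[List[float]]


def identity_backbone(x: FeatureMap) -> FeatureMap:
    """Stand-in for the weight-shared backbone (hypothetical)."""
    return x


def cross_correlation(search: FeatureMap, template: FeatureMap) -> FeatureMap:
    """Valid 2D cross-correlation: slide the template over the search features."""
    sh, sw = len(search), len(search[0])
    th, tw = len(template), len(template[0])
    response = []
    for i in range(sh - th + 1):
        row = []
        for j in range(sw - tw + 1):
            row.append(sum(search[i + a][j + b] * template[a][b]
                           for a in range(th) for b in range(tw)))
        response.append(row)
    return response


def track(search_img: FeatureMap, template_img: FeatureMap,
          backbone: Callable[[FeatureMap], FeatureMap] = identity_backbone) -> Tuple[int, int]:
    """Two-stream matching: same backbone on both inputs, fuse by correlation, take the peak."""
    response = cross_correlation(backbone(search_img), backbone(template_img))
    _, i, j = max((v, i, j) for i, row in enumerate(response) for j, v in enumerate(row))
    return i, j
```

The returned (row, column) indexes the response map; a full tracker maps it back to image coordinates and lets the tracking head refine scale and box size.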
In this pipeline, a weight-shared two-stream backbone extracts features from the target template and the search region separately; the two feature sets are fused for information interaction and passed to the tracking head, which outputs the target position. In subsequent improvements, feature extraction has moved from shallow to deep networks, feature fusion from coarse to fine, and the tracking head from complex to simple, and performance in complex backgrounds has gradually improved.
The Transformer-based one-stream method first splits the target template and the search frame into patches and flattens them into token sequences. These patch features are combined with learnable position embeddings and fed into a Transformer backbone that performs feature extraction and feature fusion simultaneously. Because fusion continues throughout the backbone, the network outputs target-specific search features. Compared with two-stream networks, one-stream networks are structurally simple and require no task-specific prior knowledge; such task-independent networks facilitate general-purpose architectures for multiple tasks. Pre-training further improves one-stream methods: experimental results show that models pre-trained with masked image modeling improve tracking performance.
Conclusions and Prospects
One-stream tracking methods, with their simple structure and strong learning and modeling capability, represent the trend of future tracking research. Meanwhile, collaborative multi-task tracking, multi-modal tracking, scenario-specific tracking, and unsupervised tracking methods are in strong demand for applications.
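The core of the one-stream design, joint feature extraction and fusion, can be sketched as a single self-attention step over the concatenated template and search tokens. This is an illustrative sketch under simplifying assumptions: one head, identity query/key/value projections, and position embeddings supplied by the caller instead of learned ones; real one-stream trackers stack many Transformer layers with learned weights. Because every search token can attend to template tokens inside the backbone, the returned search features are already target-specific.

```python
import math
from typing import List, Optional, Tuple

Vector = List[float]
Image = List[List[float]]


def patchify(img: Image, p: int) -> List[Vector]:
    """Split an image (sides divisible by p) into non-overlapping p x p patches, flattened."""
    h, w = len(img), len(img[0])
    return [[img[i + a][j + b] for a in range(p) for b in range(p)]
            for i in range(0, h, p) for j in range(0, w, p)]


def softmax(xs: Vector) -> Vector:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def one_stream_layer(template: Image, search: Image, p: int,
                     pos: Optional[List[Vector]] = None) -> Tuple[List[Vector], List[Vector]]:
    """Joint self-attention over [template tokens; search tokens].

    Returns the search-token outputs and their attention rows (over all tokens).
    """
    t_tokens, s_tokens = patchify(template, p), patchify(search, p)
    tokens = t_tokens + s_tokens
    if pos is not None:  # position embeddings (learned in practice; given here)
        tokens = [[v + e for v, e in zip(tok, pe)] for tok, pe in zip(tokens, pos)]
    d = len(tokens[0])
    outputs, attn = [], []
    for q in tokens:
        # Scaled dot-product scores against every token: extraction and fusion in one step.
        w = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens])
        attn.append(w)
        outputs.append([sum(wi * v[c] for wi, v in zip(w, tokens)) for c in range(d)])
    n_t = len(t_tokens)
    return outputs[n_t:], attn[n_t:]
```

A search patch that resembles the template assigns it a larger attention weight, which is the mechanism that lets a one-stream backbone fuse template information without a separate fusion module.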

Significance
This study reports several typical advances in three categories of computational imaging techniques based on multidimensional optical field manipulation: speckle imaging, spatial and temporal compressive imaging, and compressive computational spectral imaging. Existing problems and future research prospects are also analyzed and discussed.
High-quality imaging through scattering media has crucial applications in biomedicine, astronomy, remote sensing, traffic safety, etc. Photons from an object that travel through a scattering medium can be classified as ballistic, snake, or diffusive photons according to how far they deviate from their initial propagation directions. Ballistic photons keep their initial directions and retain undistorted object information. By gating ballistic photons, optical coherence tomography, multiphoton microscopy, and confocal microscopy have successfully imaged objects hidden behind scattering media. However, in a strongly scattering medium, all incident photons become diffusive after multiple scattering events and form a speckle pattern, so techniques based on gated ballistic photons fail to image hidden objects. Speckle imaging was developed to overcome this limitation. It involves three main steps: establishing a physical model of speckle formation; measuring and statistically analyzing the speckle light field; and computationally reconstructing the hidden object.
An imaging system with high spatial and temporal resolution can capture rich spatial and motion details of fast-moving scenes. Improving spatial and temporal resolution depends on hardware performance, including high-resolution, low-noise detector arrays and good optical design.
However, owing to limitations in semiconductor and manufacturing technologies, high-performance detectors are difficult and costly to manufacture. In addition, the huge volume of data collected by such imaging systems places strict demands on readout circuits and back-end data processing platforms, and the general push toward system miniaturization conflicts with these high-performance requirements. Hence, imaging performance cannot be further improved through hardware alone. Compressive imaging, built on compressed sensing theory and advances in computer science, performs signal encoding and compression simultaneously. Combined with back-end reconstruction algorithms, it greatly improves imaging system performance and is widely used in various imaging applications.
Spectral imaging combines imaging and spectroscopy and can therefore acquire the spatial and spectral information of an object simultaneously. Compared with traditional imaging, it has the notable advantage of sensing information from a multidimensional optical field. Analyzing spectral images yields highly detailed target information that aids target recognition as well as substance detection and classification. With the development of compressed sensing theory, a new computational imaging technique termed coded aperture snapshot spectral imaging (CASSI) was proposed and has since become an active research topic in imaging. CASSI integrates optical modulation, multiplexed detection, and numerical reconstruction algorithms to address the complex imaging systems, low data-acquisition efficiency, and limited resolution of traditional snapshot spectral imaging technologies.
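The CASSI measurement process described above (coded aperture, dispersion, integration on the detector) can be written as a short forward model. A minimal sketch of a single-disperser CASSI, assuming an ideal binary coded aperture and a dispersion shift of exactly one detector column per spectral band; the function name and the one-pixel shear are illustrative assumptions, and real systems require calibrated masks and dispersion curves. An H×W×K cube collapses to a single H×(W+K−1) snapshot, which is why reconstruction is an underdetermined inverse problem that needs a prior.

```python
from typing import List

Cube = List[List[List[float]]]  # cube[row][col][band]
Mask = List[List[float]]        # mask[row][col], values in {0, 1}


def cassi_forward(cube: Cube, mask: Mask) -> List[List[float]]:
    """Single-disperser CASSI snapshot: y[i][j + k] += mask[i][j] * cube[i][j][k]."""
    h, w, bands = len(cube), len(cube[0]), len(cube[0][0])
    y = [[0.0] * (w + bands - 1) for _ in range(h)]  # detector widened by the spectral shear
    for i in range(h):
        for j in range(w):
            for k in range(bands):
                # Every band sees the same code; band k is then shifted by k columns
                # and all bands are summed on the detector.
                y[i][j + k] += mask[i][j] * cube[i][j][k]
    return y
```

With an all-open mask and a uniform cube, each detector row shows the ramp produced by overlapping sheared bands; a coded mask makes the overlaps distinguishable, which reconstruction algorithms exploit.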
In the future, CASSI could play an important role in agriculture, the military, biomedicine, and other fields, enabling fast and accurate spectral imaging with intelligent perception capability.
Progress
The speckle correlation imaging method proposed by Bertolotti et al. introduced the concept of speckle imaging: they analyzed the autocorrelation of speckle images captured under different laser illumination angles and then achieved noninvasive object reconstruction by phase retrieval. Katz et al. simplified the system by using incoherent illumination and achieved reconstruction from a single speckle image. Since then, speckle imaging has progressed substantially in accuracy and scene applicability, in expanding the field of view and depth of field, and in decoding the optical field parameters of objects, becoming a highly active topic in computational imaging. This study introduces our main results on key speckle imaging technologies, including recursion-driven bispectral imaging of dynamic scattering scenes, learning to image and track moving objects through scattering media via speckle differences, and imaging through scattering media under ambient-light interference.
Developing high-resolution detectors in the infrared band is considerably harder than in the visible band, so we focused on compressive imaging in the infrared. We introduce the optical hardware systems and reconstruction algorithms for spatial and temporal infrared compressive imaging, together with our related research.
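The block compressive imaging idea can be illustrated by its measurement model: the image is tiled into B×B blocks, and each flattened block x_b is projected by the same M×B² measurement matrix Φ (M < B²), giving y_b = Φx_b. A minimal sketch, assuming a seeded random ±1 matrix as a hypothetical stand-in for the coded modulation patterns (the actual patterns and block size of the system are not given here). Reconstructing each block independently is one source of the block artifacts that block-based systems must suppress.

```python
import random
from typing import List

Matrix = List[List[float]]


def random_sign_matrix(m: int, n: int, seed: int = 0) -> Matrix:
    """Hypothetical +/-1 measurement matrix (M x N), scaled by 1/sqrt(M)."""
    rng = random.Random(seed)
    scale = m ** -0.5
    return [[scale * rng.choice((-1.0, 1.0)) for _ in range(n)] for _ in range(m)]


def block_measure(img: Matrix, phi: Matrix, b: int) -> List[List[float]]:
    """Apply the same phi to every flattened b x b block (image sides divisible by b)."""
    h, w = len(img), len(img[0])
    measurements = []
    for i in range(0, h, b):
        for j in range(0, w, b):
            block = [img[i + a][j + c] for a in range(b) for c in range(b)]  # raster order
            measurements.append([sum(p * x for p, x in zip(row, block)) for row in phi])
    return measurements
```

The sampling ratio is M/B² per block, so a 2×4 Φ on 2×2 blocks keeps half of the pixel count as measurements.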
We set up a mid-wave infrared block compressive imaging system (Fig. 9) and discuss the results obtained, including reducing the block effect, removing stray light, suppressing nonuniformity (Fig. 10), and improving real-time performance (Fig. 11). For back-end processing of the measured data, we review traditional methods and propose several deep learning-based reconstruction algorithms. For spatial compressive imaging, we designed Meta-TR, which combines meta-attention and a Transformer (Fig. 12), as well as a multiframe reconstruction network named Joinput-CiNet (Fig. 13). We also introduced a new version of a 3D-TCI network for temporal reconstruction (Fig. 14). The spatial–temporal compressive imaging method, which combines temporal and spatial compression, is briefly discussed (Fig. 16).
We also review relevant studies on compressive computational spectral imaging, covering the development of color-coded apertures and the use of recent Transformer networks to improve reconstruction quality, and summarize our own research. First, we proposed an optical-axis-shift CASSI system based on a digital micromirror device, which effectively suppresses off-axis aberration (Fig. 17). Second, based on an established dual-disperser CASSI system (Fig. 18), we proposed a 3D coded convolutional neural network for hyperspectral image classification (Fig. 19). Next, we proposed a hexagonal blue-noise complementary coded aperture (Fig. 20) and a spatial-target adaptive coded aperture (Fig. 21) to improve the perceptual efficiency of CASSI systems.
Finally, to improve the quality of reconstructed spectral images, we proposed a fast alternating minimization algorithm based on sparsity and deep image priors (Fama-SDIP) (Fig. 22).
Conclusions and Prospects
We have achieved notable results in three categories of computational imaging techniques based on multidimensional optical field manipulation: speckle imaging, spatial and temporal compressive imaging, and compressive computational spectral imaging. However, these techniques still face numerous challenges in practical applications, including compact system design, system assembly and error calibration, coded aperture fabrication, fast and accurate reconstruction of optical fields, and lightweight network design. In the future, combining micro-/nano-optics with computational imaging mechanisms could further improve the manipulation capability of imaging systems, and artificial intelligence could broaden the practical applications of imaging systems.
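The speckle-correlation principle of Bertolotti et al. and Katz et al. reviewed above can be sketched in one dimension. Within the optical memory-effect range, the camera image is approximately the object convolved with a speckle point-spread function, I = O ∗ S; its autocorrelation is then (O ⋆ O) ∗ (S ⋆ S), and because S ⋆ S is sharply peaked, I ⋆ I approximates the object autocorrelation plus a background. By the Wiener–Khinchin theorem, this autocorrelation equals the inverse Fourier transform of the power spectrum |F{I}|², and that Fourier magnitude is what a phase-retrieval algorithm (not shown) uses to recover O. The sketch uses a naive DFT and circular boundaries for brevity; both are simplifying assumptions.

```python
import cmath
from typing import List, Sequence, Tuple


def dft(x: Sequence[complex]) -> List[complex]:
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum: Sequence[complex]) -> List[complex]:
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def circular_convolve(obj: Sequence[float], psf: Sequence[float]) -> List[float]:
    """Memory-effect imaging model (1D, circular): I = O * S."""
    n = len(obj)
    return [sum(obj[t] * psf[(s - t) % n] for t in range(n)) for s in range(n)]


def autocorrelation_via_power_spectrum(image: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Wiener-Khinchin: circular autocorrelation = IDFT(|DFT(I)|^2). Returns (autocorr, power)."""
    power = [abs(v) ** 2 for v in dft(image)]
    return [v.real for v in idft(power)], power


def autocorrelation_direct(image: Sequence[float]) -> List[float]:
    """Reference definition: R[s] = sum_t I[t] * I[(t + s) mod n]."""
    n = len(image)
    return [sum(image[t] * image[(t + s) % n] for t in range(n)) for s in range(n)]
```

In practice the FFT replaces the naive DFT, and the background term from S ⋆ S is subtracted before phase retrieval.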

Objective
With the widespread use of infrared imaging guidance in offensive and defensive precision-guided weapons, hardware-in-the-loop simulation technology for infrared imaging guidance has developed rapidly. Laboratory missile flight tests can significantly reduce the cost of field testing, which underlines the importance of developing hardware-in-the-loop simulation test systems for infrared imaging guidance. Infrared scene projection is one of the key technologies in such hardware-in-the-loop simulation. Infrared scene projection systems are mainly used to reproduce various optical targets, backgrounds, and optical interference in different infrared bands. Today's optical detection systems are complex, multi-spectral, and high-resolution, with high frame rates and high dynamic range, and some even sense scene distance information. The main sensors include missile seekers, forward-looking infrared (FLIR) systems, target trackers, and automatic target recognition devices. Although infrared scene projection technology has advanced significantly in recent years, it still cannot meet the performance requirements for testing these complex optical detection systems, so complex infrared scene projection systems need to be studied for different optical detection applications.
Methods
Multi-spectral complex infrared scene projection is the key technology of hardware-in-the-loop simulation systems for infrared imaging guidance, and its technical characteristics limit the overall performance of the entire simulation system.
The main technical approaches include resistor arrays, photothermal image conversion arrays, digital micromirror devices (DMDs), liquid crystal spatial light modulators, infrared LED arrays, phase change material arrays, tunable-emissivity semiconductor screens, quantum dot down-conversion chips, photoluminescent phosphor materials, and photonic crystals. We review the development history of multi-spectral complex infrared scene projection technology, introduce the implementation principles of typical technologies, discuss the relative advantages and disadvantages of each, and summarize the work of major research institutions in China and abroad. Finally, the performance parameters of these technologies are compared.
Results and Discussion
By projection mechanism, current infrared scene projection systems fall into two categories: radiation type and modulation type. The radiation type includes resistor arrays and photothermal image conversion arrays; the modulation type includes DMDs and liquid crystal spatial light modulators. Other technologies are also introduced, such as infrared LED arrays, phase change material arrays, tunable-emissivity semiconductor screens, quantum dot down-conversion chips, photoluminescent phosphor materials, and photonic crystals. Resistor arrays and photothermal image conversion arrays can provide both mid-wave and long-wave infrared scenes. DMDs and infrared LED arrays can only generate mid-wave infrared scenes, but they achieve frame rates above 200 Hz.
Conclusions
Some of the infrared scene projection devices discussed in this paper have been used in hardware-in-the-loop simulation test systems, and others are under development. Resistor arrays from SBIR, DMDs from TI, and infrared LED arrays from the University of Delaware have been applied in hardware-in-the-loop simulation test systems.
Domestically developed technologies based on resistor arrays, photothermal image conversion arrays, DMDs, and other devices have also been adopted in hardware-in-the-loop simulation test systems for testing infrared systems. Each technology discussed here has its own characteristics, and together they can provide most of the functions required in current optical guidance hardware-in-the-loop simulation experiments. This study can serve as a reference when selecting solutions for testing infrared systems in specific applications.
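Whether a projector must cover the mid-wave or the long-wave band matters because the in-band radiance that a thermal (radiation-type) emitter produces depends strongly on both band and temperature. A minimal sketch using Planck's law for an ideal blackbody, assuming unit emissivity and taking 3–5 μm and 8–12 μm as the mid-wave and long-wave bands (conventional band edges, not values from this paper); real resistor-array pixels have emissivity below 1 and fill factors that scale these numbers.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
KB = 1.380649e-23    # Boltzmann constant, J/K


def spectral_radiance(wavelength_m: float, temp_k: float) -> float:
    """Blackbody spectral radiance L(lambda, T) in W / (m^2 sr m)."""
    x = H * C / (wavelength_m * KB * temp_k)
    # expm1 keeps precision when x is small (long wavelengths / high temperatures).
    return 2.0 * H * C ** 2 / wavelength_m ** 5 / math.expm1(x)


def band_radiance(lo_um: float, hi_um: float, temp_k: float, steps: int = 2000) -> float:
    """In-band radiance in W / (m^2 sr), integrated with the midpoint rule."""
    lo, hi = lo_um * 1e-6, hi_um * 1e-6
    dl = (hi - lo) / steps
    return dl * sum(spectral_radiance(lo + (i + 0.5) * dl, temp_k) for i in range(steps))
```

For example, comparing `band_radiance(3, 5, T)` with `band_radiance(8, 12, T)` shows that a 300 K scene is far brighter in the long-wave band, while mid-wave radiance rises much faster with temperature, so the emitter temperature range a projector needs differs by band.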

Significance
A hyperspectral image consists of tens or even hundreds of contiguous spectral bands for each spatial position of the target scene, so each pixel contains the complete spectral profile of that position. Owing to its high spectral resolution and its integration of imaging and spectroscopy, hyperspectral imaging has become a powerful tool for obtaining multi-dimensional, multi-scale information, with important applications in precision agriculture, mineral identification, water quality monitoring, gas detection, food safety, medical diagnosis, and other fields.
Owing to limitations of existing devices, materials, and fabrication processes, traditional hyperspectral imaging still suffers from a trade-off between high spatial resolution and high spectral resolution, as well as large data volume and high redundancy in practical applications. Computational imaging has brought new ideas to traditional hyperspectral imaging and given rise to a new research field: hyperspectral computational imaging. It uses system-level imaging methods to relate target scenes to observations through more flexible sampling and jointly optimizes the front-end optical system and the back-end processing system, thereby fundamentally overcoming the limitations of traditional hyperspectral imaging and achieving high-dimensional, high-resolution acquisition of hyperspectral information.
Numerous hyperspectral computational imaging systems based on various theories and methods now exist, and systems based on compressive sensing theory are a key branch.
Compressive sensing (CS) theory allows a signal to be acquired at a rate far below the Shannon–Nyquist rate, solves the resulting underdetermined problem using a sparsity prior on the signal, and recovers the original high-dimensional signal with high accuracy. Compressive hyperspectral computational imaging obtains spectral images of the target scene by applying reconstruction algorithms to the compressive projections recorded on the detector, significantly improving system performance without changing the characteristics of the system components.
For compressive hyperspectral computational imaging, designing the computational model is a crucial scientific challenge. The coded aperture snapshot spectral imager (CASSI) is a classical model in which scene information is projected onto the detector through a coded aperture and a dispersive element, after which a reconstruction algorithm recovers the original data cube. However, because of the limited performance of the dispersive element and the detector, CASSI can obtain only a limited number of spectral bands, which makes high-spectral-resolution detection difficult. Moreover, because the reconstruction problem is severely underdetermined, reconstruction quality leaves much room for improvement. To address these problems, our team proposed compressive hyperspectral computational imaging via spatio-spectral coding, which achieves super-resolution in both the spatial and spectral dimensions and effectively resolves the trade-off between high spatial and high spectral resolution. Our team has also carried out a series of studies to improve reconstruction quality and to expand the dimensionality of the acquired information, achieving high-quality acquisition of high-dimensional, high-resolution hyperspectral data cubes.
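The sparsity-based recovery step of compressive sensing can be illustrated with the iterative shrinkage-thresholding algorithm (ISTA), which minimizes ½‖Ax − y‖² + λ‖x‖₁. This is a generic textbook sketch, not the team's reconstruction algorithm: it assumes the signal is sparse in the canonical basis and uses the step size 1/‖A‖_F², a safe (if conservative) bound on 1/L, where L is the largest eigenvalue of AᵀA; with such a step the objective never increases. Practical hyperspectral reconstructions use sparsifying transforms, accelerated variants, or learned priors instead.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def soft_threshold(v: float, t: float) -> float:
    """Proximal operator of t * |v|: shrink toward zero by t."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0


def objective(A: Matrix, y: Sequence[float], x: Sequence[float], lam: float) -> float:
    r = [sum(a * xi for a, xi in zip(row, x)) - yi for row, yi in zip(A, y)]
    return 0.5 * sum(v * v for v in r) + lam * sum(abs(xi) for xi in x)


def ista(A: Matrix, y: Sequence[float], lam: float,
         iters: int = 300) -> Tuple[List[float], List[float]]:
    """Minimize 0.5*||Ax - y||^2 + lam*||x||_1; returns (x, objective history)."""
    m, n = len(A), len(A[0])
    step = 1.0 / sum(a * a for row in A for a in row)  # 1/||A||_F^2 <= 1/L
    x = [0.0] * n
    history = []
    for _ in range(iters):
        residual = [sum(A[i][j] * x[j] for j in range(n)) - y[i] for i in range(m)]
        grad = [sum(A[i][j] * residual[i] for i in range(m)) for j in range(n)]  # A^T (Ax - y)
        # Gradient step on the data term, then shrinkage for the sparsity prior.
        x = [soft_threshold(x[j] - step * grad[j], step * lam) for j in range(n)]
        history.append(objective(A, y, x, lam))
    return x, history
```

With A equal to the identity, the minimizer is simply the soft-thresholded measurement, which makes a convenient sanity check.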
This research has laid a solid foundation for bringing hyperspectral computational imaging toward practical applications. It is therefore important to summarize the background of compressive hyperspectral computational imaging and the work on compressive hyperspectral computational imaging via spatio-spectral coding, which may give researchers new ideas for exploring new architectures and promote the development of hyperspectral computational imaging technology.
Progress
First, the research background and basic concepts of hyperspectral computational imaging are outlined. Then, the current state of compressive hyperspectral computational imaging systems is summarized, and two classical forms and their improved designs are detailed: the coded aperture snapshot spectral imager and systems derived from it, and liquid crystal-based hyperspectral computational imaging systems and systems derived from them. Next, the compressive hyperspectral computational imaging technique via spatio-spectral coding proposed by our team is highlighted, including its system composition, mathematical and theoretical models, and latest progress. Our team has worked on coded aperture design and reconstruction algorithm optimization (Fig. 13) to improve reconstruction quality, and on acquiring polarization information (Fig. 14) to expand the information dimensions acquired by the system. Finally, future research trends in compressive hyperspectral computational imaging via spatio-spectral coding are discussed.
Conclusions and Prospects
Compressive hyperspectral computational imaging technology has broad application prospects.
We review compressive hyperspectral computational imaging, including its basic principles, representative systems, and key technologies, to provide background knowledge for researchers entering the field. Compressive spectral computational imaging via spatio-spectral coding can overcome the trade-off between high spatial resolution and high spectral resolution, and it has made progress in improving reconstruction quality and expanding information dimensions, so it is expected to address more scientific and engineering challenges. Future work will continue on optimizing the system's optical design, applying deep learning algorithms to reconstruction, using adaptive compressive sensing theory to improve imaging quality, and adding temporal and depth dimensions, so as to promote the practical and industrial development of hyperspectral computational imaging systems.

Objective
Detected targets differ in polarization characteristics because of their different states, radiation spectra, sizes, and shapes, and the transmission environment of soot and haze and the variable light-field interference are complex. To address this, we establish a target multispectral polarization model and a polarization characteristic transmission model, develop an optimal design method for micro-nano grating polarizer arrays, propose the principle and schemes of high-resolution imaging detection instruments, and propose a novel deep network that solves the polarization image fusion problem through a self-learning strategy. We aim to use multispectral polarization imaging to overcome complex optical field interference, limited visibility range, and insufficient classification ability of optical imaging under harsh conditions.
Methods
We study polarization imaging detection methods and multispectral information fusion technology and propose an overall scheme for a multispectral polarization imager. We distill the scientific methods involved: the theory and model of target polarization characteristics under complex optical fields, micro-nano grid focal-plane polarization devices, optimization and testing of a multispectral full-polarization imaging system, and polarization image processing based on polarization difference characteristics. We also analyze the key technologies and solutions: modeling and testing the generation and transmission of target polarization characteristics, optimizing metal micro-nano grating polarization elements with a high extinction ratio, optical design of the multispectral polarization imaging and test system, and multispectral polarization information processing.
This work lays the foundation for developing a multispectral polarization imaging detection test prototype that meets the practical requirements of visual assistance and guidance in optical imaging.
Results and Discussions
We establish the target multispectral polarization model and the polarization characteristic transmission model, which reveal the multispectral polarization behavior of typical targets and the laws of multispectral polarization transmission in haze and dusty weather, providing a theoretical basis for multispectral polarization information processing.
We develop an optimal design method for micro-nano grating polarizer arrays. To remedy the defect of current division-of-focal-plane polarization imaging devices, which have only micro-nano linear gratings and cannot analyze circular polarization, we propose a new chiral micro-nano circular grating mechanism. We also analyze the physical origin of the low extinction ratio in division-of-focal-plane polarization imaging, identify the physical mechanisms that produce a high extinction ratio in linear gratings, and discuss how deviations in fabrication parameters affect the extinction ratio. Combining micro-nano linear and circular gratings, we study a new full-polarization, high-extinction-ratio division-of-focal-plane imaging device, laying the technical foundation for multispectral, multi-dimensional polarization high-resolution imaging detection instruments.
To address the short detection range, low detection sensitivity, and narrow environmental applicability of existing instruments under haze and smoke, we propose the principle and scheme of a multispectral polarization high-resolution imaging detection instrument, together with a multi-optical-path imaging optical system designed for the large light-energy loss in polarization imaging.
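The role of the extinction ratio in division-of-focal-plane (DoFP) polarimetry can be made concrete with the standard linear-Stokes model of a 0°/45°/90°/135° micro-polarizer superpixel. A minimal sketch, assuming each micro-polarizer is a partial linear polarizer with principal transmittances normalized so that t_max + t_min = 1 and extinction ratio ER = t_max/t_min, which gives the diattenuation d = (ER − 1)/(ER + 1); inter-pixel crosstalk and circular polarization (S3, which needs a circular analyzer such as the chiral gratings above) are ignored, and the function names are hypothetical. A finite ER scales S1 and S2 by d, so an uncorrected degree of linear polarization (DoLP) is underestimated by the same factor.

```python
import math
from typing import Dict, Tuple

ANGLES = (0.0, 45.0, 90.0, 135.0)


def diattenuation(extinction_ratio: float) -> float:
    """d = (ER - 1) / (ER + 1); an ideal polarizer (ER = inf) has d = 1."""
    if math.isinf(extinction_ratio):
        return 1.0
    return (extinction_ratio - 1.0) / (extinction_ratio + 1.0)


def pixel_intensity(s0: float, s1: float, s2: float, angle_deg: float,
                    extinction_ratio: float) -> float:
    """Intensity behind a partial linear polarizer at angle_deg (t_max + t_min = 1)."""
    d = diattenuation(extinction_ratio)
    two_theta = math.radians(2.0 * angle_deg)
    return 0.5 * (s0 + d * (s1 * math.cos(two_theta) + s2 * math.sin(two_theta)))


def stokes_from_superpixel(i: Dict[float, float], extinction_ratio: float = math.inf
                           ) -> Tuple[float, float, float, float, float]:
    """Recover S0, S1, S2, DoLP, and AoP (radians) from the four analyzer intensities."""
    d = diattenuation(extinction_ratio)
    s0 = 0.5 * (i[0.0] + i[45.0] + i[90.0] + i[135.0])
    s1 = (i[0.0] - i[90.0]) / d    # dividing by d corrects for the finite extinction ratio
    s2 = (i[45.0] - i[135.0]) / d
    dolp = math.hypot(s1, s2) / s0
    aop = 0.5 * math.atan2(s2, s1)
    return s0, s1, s2, dolp, aop
```

Calling `stokes_from_superpixel` without the extinction ratio reproduces the ideal-polarizer formulas, which is a quick way to see how much DoLP a low-ER array loses.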
We achieve breakthroughs in optical system design based on polarization aberration correction and multispectral polarization calibration, which significantly improve the instrument's imaging and detection ability in haze and dusty environments.

We propose a novel deep network that solves the polarization image fusion problem through a self-learning strategy. The network consists of an encoder, a fusion layer, and a decoder: the feature maps extracted by the encoder are fused and then fed into the decoder to generate the fused image. Given multidimensional polarization images, the network extracts effective features from the source images and fuses them into an information-rich polarization image for subsequent high-level image applications.

Conclusions

To address the problems of "cannot see clearly", "cannot see far", and "cannot recognize" in optical detection under harsh environments and meteorological conditions, we put forward a multispectral polarization imaging detection technology and expect breakthroughs in three aspects: 1) in device development, new division-of-focal-plane imaging devices with a high extinction ratio should be fabricated by improving the design method of circular micro-nano gratings and the substrate material and structure of micro-nano gratings; 2) in system design, breakthroughs are required in key technologies such as the multi-channel polarization-preserving optical system and high-transmittance, polarization-preserving optical coatings, as well as in the high spatial and temporal matching of instrument information acquisition; 3) in information processing, studying the polarization characteristic model and the polarization transmission model of targets is important.

Adverse environmental and weather conditions not only strongly affect optical imaging detection but also seriously threaten aircraft landing safety and other areas.
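The abstract does not specify the rule used in the fusion layer between the encoder and decoder. As an illustration only, the sketch below implements a simplified L1-norm activity-weighted fusion of encoder feature maps, a rule used in DenseFuse-style auto-encoder fusion networks; it is not claimed to be the proposed network's fusion layer. The random arrays stand in for encoder outputs, and the function name `l1_norm_fusion` and its `eps` parameter are hypothetical.

```python
import numpy as np

def l1_norm_fusion(features, eps=1e-12):
    """Fuse feature maps from several source images.

    features: array of shape (num_sources, channels, height, width), e.g. encoder
    outputs for intensity, DoLP, and AoP images (assumed inputs).
    Each pixel's weight is proportional to the L1 norm of that source's feature
    vector across channels (its "activity").
    """
    activity = np.abs(features).sum(axis=1)                  # (num_sources, H, W)
    weights = activity / (activity.sum(axis=0) + eps)        # normalized per pixel
    fused = (weights[:, None, :, :] * features).sum(axis=0)  # (channels, H, W)
    return fused, weights

rng = np.random.default_rng(0)
feats = rng.normal(size=(3, 16, 8, 8))  # stand-in for encoder outputs of 3 polarization images
fused, w = l1_norm_fusion(feats)
print(fused.shape)
```

In a full network the fused feature maps would then be passed to the decoder; learned or attention-based fusion layers are common alternatives to this fixed rule.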
Fog and low visibility prevent aircraft from landing properly, grounding or delaying flights with significant economic and social impacts. At present, to approach and land under low visibility, pilots mostly rely on ground and airborne navigation facilities to guide the aircraft to the runway along the specified flight path, mainly including the instrument landing system (ILS), the microwave landing system (MLS), satellite navigation (GPS), laser landing systems, and visual landing systems. Under extremely severe conditions, such as the absence of radar guidance or ground indication, the aircraft cannot obtain effective and safe landing guidance through these methods. Therefore, developing visual aids for aircraft landing under adverse conditions is of great significance, as they can improve landing safety in smoke, sea fog, and low-illumination environments.

Significance

As an important precision optical measurement technology, optical interferometry is known for its wavelength-level measurement accuracy. As industry demands higher measurement accuracy, measurement methods are also required to cover an increasingly wide sensing range. Additionally, because of disturbances from human traffic, construction, and other factors, measurement methods that are insensitive to environmental changes are urgently needed. These industrial demands drive the development of optical interferometry, and many interferometric testing techniques have emerged. One of them is lateral shearing interferometry, a common-path technique that features a wide detection range and low requirements on environmental stability and on the coherence of the light source. As a result, it has a variety of applications.

Lateral shearing interferometry originated from the Ronchi test in 1923 and thus has a history of about one hundred years. It can be divided into double-beam and multi-beam lateral shearing interferometry. Double-beam lateral shearing interferometry needs at least two interferograms with different shear directions to recover the full wavefront phase, because each interferogram contains only the wavefront phase information along its shear direction. Multi-beam lateral shearing interferometry resolves this problem: the wavefront is replicated into multiple wavefronts propagating at different angles simultaneously, and a multi-beam lateral shearing interferogram forms in their overlapping region on the observation plane. Therefore, each interferogram contains the phase differences between the wavefronts in multiple shear directions.
Thus, a single interferogram suffices to recover the wavefront under test through phase extraction, phase unwrapping, and wavefront reconstruction, which greatly improves measurement efficiency and makes real-time detection of instantaneous wavefronts possible.

In multi-beam lateral shearing interferometry, the incident light wave must be replicated into multiple light waves simultaneously, which requires obtaining the mutually interfering working wavefronts while removing unwanted higher-order diffracted light; the key lies in the design of the beam-splitting element. Early multi-beam lateral shearing interferometers used prisms, whose working surfaces have demanding machining requirements, as beam splitters; gratings were later adopted to reduce the machining difficulty and improve the accuracy of the directions of the interfering wavefront vectors. Compared with other traditional interferometric methods, this technique greatly simplifies the whole measurement system. Quadri-wave lateral shearing interferometry (QWLSI) is characterized by high accuracy, large dynamic range, high resolution, and strong anti-interference ability. For example, owing to its simple system, QWLSI based on a randomly coded hybrid grating theoretically requires only a two-dimensional grating and a CCD. It is therefore a research focus of many institutions in China and abroad. In recent years, QWLSI has been applied to aberration detection of lithography objectives, surface shape measurement of aspheric elements, and wavefront sensing of large-aperture segmented telescopes. However, higher measurement accuracy still calls for a series of improvements, and summarizing the completed work helps guide future work.

Progress

We outline the developments of our research team in several areas of QWLSI. First, we describe the beam splitter design in QWLSI.
Based on the randomly coded grating designed by Zhejiang University, our research group designs a globally randomly coded hybrid grating that better suppresses unwanted higher-order diffracted light, as well as a phase-only grating based on a global random coding constraint with low fabrication difficulty and high light transmittance. Second, we present our work on interferogram processing. For interferogram preprocessing, we study an extension technique suited to two-dimensional QWLSI interferograms to reduce the measurement error caused by boundary effects. For phase extraction, we study the virtual-grating phase-shifted Moiré fringe method, which resolves the large edge errors and weak noise immunity of the existing Fourier transform method, and we thoroughly investigate the algorithm's phase extraction accuracy and spectral leakage. For phase unwrapping, our team studies a parallel phase unwrapping technique that speeds up interferogram processing and significantly improves efficiency. Additionally, we study algorithms that use the differential wavefront phases of QWLSI in multiple directions to reconstruct the wavefront, improving the accuracy and noise immunity of wavefront reconstruction. Third, we investigate QWLSI errors, including the fabrication error of the grating and its installation and adjustment errors. The quantitative results on the machining error tolerance of the grating and on the influence of installation and adjustment errors on measurement accuracy provide technical support for installing and adjusting QWLSI. Finally, we introduce the basic QWLSI device built on the research into these key technologies and report its absolute measurement accuracy.

Conclusions and Prospects

QWLSI with gratings as beam splitters has recently been a research subject of relevant academic institutions in China and abroad.
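To make the phase-extraction and phase-unwrapping steps concrete, here is a one-dimensional sketch of the classical Fourier transform method, i.e. the baseline that the Progress section compares against, not the virtual-grating phase-shifted Moiré method. A QWLSI interferogram carries the differential phase on spatial carriers; here a single carrier of frequency `f0` and a synthetic phase `phi` are assumed, and `phi` is chosen periodic so that edge errors do not arise. The function name `fourier_transform_phase` and its `band` parameter are hypothetical.

```python
import numpy as np

def fourier_transform_phase(intensity, carrier, band=0.5):
    """Recover the modulating phase of a carrier fringe pattern (1D Fourier transform method).

    carrier: carrier frequency in cycles per record length.
    band: half-width of the sideband filter, as a fraction of the carrier frequency.
    """
    n = intensity.size
    spectrum = np.fft.fft(intensity)
    freqs = np.fft.fftfreq(n, d=1.0 / n)  # cycles per record length
    # Keep only the +1 sideband around the carrier; drop the DC term and the -1 sideband.
    mask = np.abs(freqs - carrier) < band * carrier
    analytic = np.fft.ifft(spectrum * mask)
    x = np.arange(n) / n
    wrapped = np.angle(analytic * np.exp(-2j * np.pi * carrier * x))  # remove carrier tilt
    return wrapped, np.unwrap(wrapped)

n, f0 = 1024, 64
x = np.arange(n) / n
phi = 6.0 * np.sin(2 * np.pi * x)  # synthetic phase; peak 6 rad > pi, so it wraps
fringes = 1.0 + 0.8 * np.cos(2 * np.pi * f0 * x + phi)
wrapped, unwrapped = fourier_transform_phase(fringes, f0)
print(np.abs(wrapped).max() <= np.pi, np.max(np.abs(unwrapped - phi)))
```

Replacing the periodic `phi` with a non-periodic one (for example a tilt plus defocus) introduces errors near the ends of the record, one source of the edge errors that the extension technique and the phase-shifted Moiré method are meant to reduce.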
Our research team has built a basic QWLSI device based on studies of the key technologies. To expand the application of QWLSI in related fields, we will study the essential technologies for increasing the amplitude and spatial frequency of the wavefront distortions that QWLSI can detect.
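The Significance section notes that a single shear direction records only the phase differences along that direction, while multi-beam shearing captures several directions at once. The sketch below illustrates both points with a one-pixel cyclic shear on a periodic grid; that assumption lets a simple FFT-based least-squares integrator (a standard technique, not necessarily the team's reconstruction algorithm) recover the wavefront exactly up to piston. The names `shear_differences` and `reconstruct_from_shears` are hypothetical.

```python
import numpy as np

def shear_differences(w, shift=1):
    """Differential wavefronts for lateral shears along x (axis 1) and y (axis 0)."""
    dx = np.roll(w, -shift, axis=1) - w  # w(x + s, y) - w(x, y), cyclic
    dy = np.roll(w, -shift, axis=0) - w  # w(x, y + s) - w(x, y), cyclic
    return dx, dy

def reconstruct_from_shears(dx, dy):
    """Least-squares wavefront from one-pixel cyclic shear differences (piston set to 0)."""
    ny, nx = dx.shape
    ax = np.exp(2j * np.pi * np.fft.fftfreq(nx))[None, :] - 1.0  # transfer function of x-shear
    ay = np.exp(2j * np.pi * np.fft.fftfreq(ny))[:, None] - 1.0  # transfer function of y-shear
    denom = np.abs(ax) ** 2 + np.abs(ay) ** 2
    denom[0, 0] = 1.0  # piston is unobservable; avoid division by zero
    w_hat = (np.conj(ax) * np.fft.fft2(dx) + np.conj(ay) * np.fft.fft2(dy)) / denom
    w_hat[0, 0] = 0.0
    return np.fft.ifft2(w_hat).real

n = 64
y, x = np.mgrid[0:n, 0:n] / n
w_true = np.cos(2 * np.pi * x) + 0.5 * np.sin(4 * np.pi * y) + 0.2 * np.cos(2 * np.pi * (x + y))

# An aberration that varies only along y leaves no trace in the x-shear difference.
dx_only, _ = shear_differences(0.5 * np.sin(4 * np.pi * y))
print(np.abs(dx_only).max())

# With shears in both directions the wavefront is recovered (mean removed).
w_rec = reconstruct_from_shears(*shear_differences(w_true))
print(np.abs(w_rec - (w_true - w_true.mean())).max())
```

Real QWLSI shears are usually several pixels and the pupil is not periodic, so practical reconstructors must handle boundaries and non-unit shear; this sketch only shows why two orthogonal difference maps are enough in principle.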

Significance

Imaging photoplethysmography (IPPG) offers high cost-effectiveness, simple equipment operation, and continuous, automatic measurement and observation of subjects. It has become an important means of deeply understanding the optical properties of biological tissue and exploring the pathological mechanisms of complex cardiovascular diseases. IPPG is developed on the basis of traditional single-point photoplethysmography (PPG). After light interacts with skin tissue, the diffusely reflected light carries the pulsation information of the heart and causes tiny changes in skin color; IPPG uses imaging equipment to record these changes as continuous images. Video and image processing technologies then extract vital signs such as pulse, heart rate, and heart rate variability from the video stream. IPPG can reveal small dynamic changes in biological tissue during physiological and pathological processes. Therefore, it can help us better understand basic life activities and enable highly sensitive diagnosis and high-precision quantitative characterization of diseases. IPPG is applicable to large-scale clinical detection, daily physical health monitoring, and other scenarios, and it is a research hotspot in daily human physiological status monitoring in the new era of medical health.

Progress

In recent years, owing to the continuous improvement of imaging sensor resolution and signal processing technologies, IPPG has made great progress in detecting human physiological parameters, such as heart rate, respiratory rate, and heart rate variability, and in diagnosing diseases, such as arterial disease, arterial stiffness and aging, and chronic microcirculation disease.
However, for further monitoring and classification diagnosis of complex cardiovascular diseases, IPPG still faces several challenges: pathological feature analysis is lacking, the optical feature parameters are complex, and it is unclear how the pathological mechanisms of different cardiovascular diseases manifest in pulse waves. Therefore, we summarize the basic research and applications of existing IPPG and further explore its optical and pathological mechanisms, which is important for guiding the future development of IPPG.

Based on a comprehensive survey of the relevant literature on IPPG monitoring of human physiological parameters in China and abroad over the past 20 years, together with our long-term research, we first introduce the optical principle of IPPG. Then, we analyze new methods for processing IPPG signals in video images and for improving the signal-to-noise ratio (Table 1), including a series of mainstream methods for selecting imaging sites in different skin areas and for handling motion artifacts and blur. Finally, we introduce the clinical applications of IPPG in detail, mainly including heart rate extraction under adaptive focal length, living-skin detection, blood oxygen saturation measurement under visible light (Fig. 7), fatigue monitoring, psychological stress evaluation, and analysis of the pathological mechanisms of IPPG signals in cardiovascular diseases (Fig. 9).

Conclusions and Prospects

IPPG is gradually developing toward miniaturization and intelligence. Its monitoring indicators and accuracy are continuously improving, giving it important research significance and application value in biomedical research, clinical medicine, and daily health monitoring.
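As one example of the signal-to-noise-improvement methods surveyed (Table 1 is not reproduced here, so this is not necessarily one of its entries), the sketch below implements the chrominance-based (CHROM) projection of de Haan and Jeanne on spatially averaged RGB traces and compares it with the raw green channel. The synthetic traces, the assumed pulse color signature (0.33, 0.77, 0.53), the 2 Hz intensity-only motion component, the mean skin color, and the 0.7 to 4 Hz heart-rate band are all illustrative assumptions; the helper names are hypothetical.

```python
import numpy as np

def bandpass(signal, fps, lo=0.7, hi=4.0):
    """Zero out spectral content outside [lo, hi] Hz (simple FFT band-pass)."""
    spectrum = np.fft.rfft(signal - signal.mean())
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fps)
    spectrum[(freqs < lo) | (freqs > hi)] = 0.0
    return np.fft.irfft(spectrum, n=signal.size)

def dominant_bpm(signal, fps, lo=0.7, hi=4.0):
    """Frequency of the largest spectral peak inside the band, in beats per minute."""
    spectrum = np.abs(np.fft.rfft(signal - signal.mean()))
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fps)
    in_band = (freqs >= lo) & (freqs <= hi)
    return 60.0 * freqs[in_band][np.argmax(spectrum[in_band])]

def chrom_pulse(rgb, fps):
    """CHROM pulse signal from mean skin RGB traces of shape (3, frames)."""
    r, g, b = rgb / rgb.mean(axis=1, keepdims=True)  # temporal normalization
    xs = bandpass(3.0 * r - 2.0 * g, fps)
    ys = bandpass(1.5 * r + g - 1.5 * b, fps)
    alpha = xs.std() / ys.std()
    return xs - alpha * ys

fps, seconds = 30, 10
t = np.arange(fps * seconds) / fps
pulse = 0.01 * np.sin(2 * np.pi * 1.2 * t)          # 72 bpm blood-volume pulse
motion = 0.05 * np.sin(2 * np.pi * 2.0 * t)         # intensity change common to all channels
signature = np.array([0.33, 0.77, 0.53])[:, None]   # assumed pulse color signature
base = np.array([180.0, 120.0, 100.0])[:, None]     # assumed mean skin RGB
rgb = base * (1.0 + signature * pulse + motion)

print("green:", dominant_bpm(rgb[1], fps), "CHROM:", dominant_bpm(chrom_pulse(rgb, fps), fps))
```

In this synthetic case the green channel locks onto the stronger 2 Hz intensity variation (120 bpm), whereas the CHROM combination largely cancels intensity changes that are equal in all normalized channels and recovers the 72 bpm pulse.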
As an effective tool for early diagnosis of diseases and individualized precision medicine, IPPG still needs in-depth and detailed exploration to promote its further development in academic and engineering fields.
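The basic IPPG principle described in the Significance section (record skin color changes as video, then extract heart rate with image and signal processing) can be sketched as follows: average the green channel over a skin region of interest in every frame, then take the strongest spectral peak in a plausible heart-rate band. The synthetic video, the fixed rectangular ROI, the 0.7 to 4 Hz band, and the helper names `roi_trace` and `heart_rate_bpm` are assumptions; practical systems add face or skin detection, motion compensation, and detrending.

```python
import numpy as np

def roi_trace(frames, roi):
    """Mean green value inside a rectangular ROI for each frame.

    frames: array (num_frames, height, width, 3) in RGB order; roi: (top, bottom, left, right).
    """
    top, bottom, left, right = roi
    return frames[:, top:bottom, left:right, 1].mean(axis=(1, 2))

def heart_rate_bpm(trace, fps, lo=0.7, hi=4.0):
    """Heart rate from the dominant spectral peak of the trace within [lo, hi] Hz."""
    spectrum = np.abs(np.fft.rfft(trace - trace.mean()))
    freqs = np.fft.rfftfreq(trace.size, d=1.0 / fps)
    in_band = (freqs >= lo) & (freqs <= hi)
    return 60.0 * freqs[in_band][np.argmax(spectrum[in_band])]

# Synthetic 10 s video at 30 frame/s: a 1.2 Hz (72 bpm) color change buried in pixel noise.
rng = np.random.default_rng(0)
fps, num_frames = 30, 300
t = np.arange(num_frames) / fps
frames = np.full((num_frames, 32, 32, 3), 120.0)
frames[..., 1] += 0.5 * np.sin(2 * np.pi * 1.2 * t)[:, None, None]
frames += rng.normal(0.0, 2.0, size=frames.shape)

trace = roi_trace(frames, roi=(4, 28, 4, 28))
print(heart_rate_bpm(trace, fps))
```

Averaging over the ROI is what makes the sub-noise-level color change usable: the per-pixel noise is reduced roughly by the square root of the number of pixels before the spectral peak is picked. The frequency resolution here is 0.1 Hz (6 bpm), so longer windows or interpolation are needed for finer heart-rate estimates.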