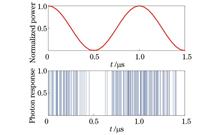

ObjectivesDue to the high sensitivity of the single-photon detector, an ultra-narrow band filter is needed to ensure the normal operation of the detector under the influence of strong background light noise in the daytime. Therefore, the anti-background noise ability of photon heterodyne detection is crucial. In addition, the dark count and post-pulse effect of the single-photon detector will lead to a false count and thus reduce the signal-to-noise ratio (SNR). Therefore, new methods are required to improve the SNR of photon heterodyne detection. In the earlier research on photon heterodyne detection, most scholars focused on signal processing for denoising. They proposed new signal processing methods to denoise echo signals and rarely made an effort to improve the detection system or change the photon counting mode. As current signal processing has limited ability to improve the SNR of photon heterodyne detection, this study applies the coincidence counting mode to photon heterodyne detection. This mode relies on multi-channel detection and filters out random optical noise at the photon counting terminal to improve the SNR.MethodsIn this study, a photon heterodyne velocity measurement system with two-channel coincidence counting is constructed by simulation. After frequency beating, light waves of heterodyne signals are evenly divided into two parts and sent into two single-photon detection channels separately. A two-channel coincidence counting mode is adopted at the single-photon detection end, and different coincidence gate widths are set for the system according to different situations. The two-channel coincidence counting system will automatically select the channel 1 as the main channel and the other channel as the slave channel and create a time window (the size of which can be set) centered on the main channel. When both channels have photon counting in this window, an effective coincidence will be generated, and the coincidence counting results within a certain collection time will be given at last. When the signal photon is detected in both channels within the coincidence time, it is judged to be an effective signal. In this way, the arrival time series of the photon can be obtained. Compared with the case of single-channel photon counting, the anti-noise capability of the system is greatly improved, and effective echo signals can be extracted under strong background noise.The traditional filtering method of photon heterodyne detection is to preliminarily process the photon response sequence to produce the cumulative photon histogram, perform the first-order filtering of the cumulative photon histogram curve, and process the filtering results as FFT to obtain the spectrum diagram. In this study, the first-order filtering algorithm adopts the moving average filtering method and compares three methods, namely, single-channel free-running mode, post-photon cumulative moving average filtering, and two-channel coincidence counting mode. The simulation results show that the power spectrum SNR of the intermediate frequency (IF) signal in the two-channel coincidence counting mode is significantly higher than that in the single-channel free-running mode and the first-order filtering.Furthermore, the variation laws of the power spectrum SNR of IF signals with the increase in the number of signal photons are studied under two counting modes. In addition, four factors such as local-oscillator light intensity, background noise, IF, and detection duration that affect the performance of photon heterodyne detection are investigated.Results and DiscussionsAccording to the simulation, the SNR of both the single-channel free-running mode and dual-channel coincidence counting mode gradually increases with the increase in the number of photons. However, as the number continues to grow, the SNR rises slowly and gradually reaches saturation (Fig. 7). When the ratio of local light intensity to signal light intensity is less than 1, the SNR changes most significantly with the increase in the number of signal photons, and when the number grows to 5 Mcps (Mcps represents the counts multiplied by 106 per second), the saturation state cannot be reached. When the ratio is equal to 1, the SNR is higher than that when the ratio is less than 1. This is because when the number of photons in the local oscillator is equal to the number of photons in the signal, the total number of photons is higher than the case when the ratio is less than 1, and the proportion of noise photons decreases. Hence, the SNR increases. However, a greater ratio of local light intensity to signal light intensity does not lead to better results. As can be seen from the figure, if the ratio is equal to 5, the SNR of the IF signal is higher than that of 1, but if the ratio is less than 3, it can be inferred that as the ratio of local light intensity to signal light intensity gradually increases, the system's SNR increases before it declines. The peak is reached near the ratio of 3 (Fig. 8). Stronger background noise means a lower SNR. In addition, the photon number of the saturated signal is different under different background noises. Stronger background noise is accompanied by a larger photon number of the saturated signal. When the background noise is 0.5 kcps (kcps represents the counts multiplied by 103 per second), the number of signal photons gradually becomes saturated at about 3 Mcps, but when the background noise is 2 kcps, the number of signal photons is close to saturation at 5 Mcps. Under different background noises, the saturation SNR achieved is also inconsistent: stronger background noise indicates a lower saturation SNR (Fig. 9). IF has a steeper change in the early fitting curve of the influence of SNR and reaches a peak when the number of signal photons is 3 MHz. As the number continues to increase, the SNR of IF slightly declines and gradually becomes stable. Although the span of IF is from 0.5 to 7 MHz, the four curves are concentrated, and it is difficult to separate them or even have crossover parts in some line segments. Therefore, the IF signal frequency, that is, the speed of the moving target, has little influence on the SNR of the system (Fig. 10). As the detection duration becomes longer, the SNR increases significantly. When the detection duration increases from 0.05 to 0.10 ms, the saturation SNR increases by about 3 dB, while when it increases from 0.15 to 0.20 ms, the saturation SNR only increases by about 1 dB. The time parameters such as the dead time and coincidence window of single-photon detection are generally of the order of ns. Thus, although the detection duration only increases by 0.05 ms each time, it has already exceeded four orders of magnitude. Therefore, longer detection duration means more photons detected and more significant cumulative effects of the photon-density distribution law, and a greater contribution of IF signal distribution to the total number of photons indicates a higher SNR (Fig. 11).ConclusionThe results show that the proposed method has significant advantages over single-channel detection. In coincidence counting, part of the background noise and dark count noise can be filtered out to improve the SNR. This study provides a new idea for the application of coincidence counting and also renders guidance for the construction of a photon heterodyne detection system with two-channel coincidence counting in subsequent experiments.

ObjectivePhotodetectors can convert incident light into electric signals and are widely used in many fields such as image sensing, optical communication, environmental monitoring, and biological detection. In recent years, all-inorganic metal halide perovskite CsPbIBr2 has been concerned in photoelectric detection due to its high light absorption coefficient, high charge carrier mobility, and low defect density. On the one hand, CsPbIBr2 film is susceptible to ambient humidity, so it is not usually prepared under an atmospheric environment but in glove boxes by methods such as spin coating. On the other hand, on account of uncontrolled nucleation during crystallization, CsPbIBr2 film has poor morphology and crystallinity, which results in weak photoelectric characteristics and instability of its photodetector. In order to overcome these problems, the morphology, crystallinity, and water/oxygen resistance of CsPbIBr2 thick film can be improved by additives and interface layer strategies. In this study, we employ an interfacial seed layer modification strategy under an atmospheric environment with relative humidity (RH) below 90% to prepare one high-quality CsPbIBr2 thick film with high crystallinity, excellent interfacial contact, and stable structure. We hope that our findings can help fabricate low-cost, high-performance, and long-lasting photodiode-type CsPbIBr2 photodetectors under an atmospheric environment.MethodsCsPbIBr2 thick films with controllable thicknesses in the range of 0.5-100 μm are prepared under an atmospheric environment with RH below 90% by pneumatic spraying. Prior to spraying, the interfacial seed layers are formed on the substrates by spin-coating followed by annealing. During spin-coating, the density distribution of the interfacial seed layers is realized by controlling the concentration of precursor solution. In this strategy, the interfacial seed layers act as the nucleating points for crystal growth, which improve the crystallization of the thick films in preparation processing. The morphology and the phase structure of the thick films are analyzed by scanning electron microscopy (SEM) and X-ray diffraction. Compared with the thick film without an interfacial seed layer, these films with interfacial seed layers have high crystallinity, excellent interfacial contact, and stable structure. In order to assess the effect of interfacial seed layers on the optical properties of the thick films, these thick films are investigated by absorption, photoluminescence (PL), and time-resolved PL spectra. In order to verify the feasibility of the thick films for photodetection, their photodiode-type photodetectors of Au/ITO/CsPbIBr2/Au are fabricated and measured. The I-V and response time curves of the photodetectors are examined under laser excitation of 405 nm. In order to characterize the long-term stability, tracing measurements on the on-off ratio of the devices are made, and the naked-eye photographs of the corresponding thick films are recorded.Results and DiscussionsCompared with the control film with small-size crystal grains and a large number of holes on the surface, the modified films by introducing interfacial seed layers exhibit large-size crystal grains and dense morphology [Fig. 1(a)]. From the cross-sectional SEM images, the improved interfacial contacts between the modified films and the substrates lead to columnar growth features [Fig. 1(b)]. The modified films show a preferred orientation on the (110) diffraction plane, especially for 0.3 mol/L, which is consistent with the SEM results [Fig. 1(c)]. Once seed layers are inserted between the thick films and the substrates, the absorption coefficients and PL peak intensities increase significantly in the whole visible range, and the fluorescence lifetime increases from 0.95 ns to 4.49 ns (Fig. 2). The dark current from the control device to the modified devices decreases from 2.05×10-7 to 5.70×10-10 A, while the on-off ratio significantly increases from 490 to 1.8×104 [Fig. 3(a)]. By fitting the I-V curves under light illumination, it is proved that the modified device of 0.3 mol/L has a stronger light response (n=0.87) and larger response range (RLD=80 dB) than the control device (n=0.60 and RLD=34 dB) [Fig. 3(b)-(e)]. The rising and falling time (ton and toff) from the control device to the modified devices decreases from 38 μs to 9 μs and from 110 μs to 13 μs, respectively [Fig. 3(f)]. After lasting 60 days, the modified device of 0.3 mol/L still retains a high on-off ratio of 1.5×104, which is 83% of the initial on-off ratio, and the naked-eye photographs of the thick films do not change significantly (Fig. 4).ConclusionsIn this study, under an atmospheric environment with RH below 90%, high-quality CsPbIBr2 thick films are prepared by pneumatic spraying via an interfacial seed layer modification strategy. In this strategy, the interfacial seed layer acts as the nucleating points for crystal growth, which results in the improvement of the crystallinity, interfacial contact, and structural stability of the CsPbIBr2 thick films. Furthermore, the introduction of interfacial seed layers has no significant effect on the optical band gaps of CsPbIBr2 thick films, ranging from 2.10 eV to 2.12 eV. It is worth noting that the absorption coefficient of visible light and the PL intensity are enhanced significantly, and meanwhile the fluorescence lifetime is increased (from 0.95 ns to 4.49 ns). The photodiode-type CsPbIBr2 photodetector (p-n CsPbIBr2-ITO) shows a low dark current (5.70×10-10 A) and possesses high-performance photodetection parameters, namely high on-off ratio (1.8×104) and microsecond-level response times (9 μs and 13 μs). Moreover, the unpackaged CsPbIBr2 photodetector is strongly resistant to water and oxygen under an atmospheric environment with RH below 90%, which is 83% of the initial on-off ratio after lasting 60 days. These results can provide an effective way to prepare low-cost, high-performance, long-lasting, and stable photodiode-type CsPbIBr2 photodetectors under an atmospheric environment.

ObjectiveIn fields such as laser processing, surface ablation, and medical testing, flat-topped beams with uniform energy distribution are more valuable than Gaussian beams directly output by lasers. Diffractive optical elements (DOEs) are widely used as beam-shaping devices with the advantages of simple structure, high design degree of freedom, accessible mass production, and wide range of material selection. Differing from traditional refractive devices, DOEs use surface relief microstructures to adjust the amplitude or phase of the wavefront, thereby tuning the distribution of the output beam. DOEs have high requirements for the wavelength, waist width, eccentricity, beam quality, and other conditions of the input beam. When these requirements are not met, the output light spot will deviate from the design results, which affects actual use. Unlike other tolerance constraints, the effect of beam quality on DOEs cannot be directly calculated from the diffraction propagation of coherent light fields, and the effect of input beam coherence must be considered. However, few studies have discussed the influence of beam quality on DOEs. In this work, we study the output results of Gaussian shell-model (GSM) beams with different beam qualities passing through the DOE and propose a new method for designing DOEs with GSM beam shaping.MethodsIn this work, an improved G-S algorithm, namely, the symmetric iterative Fourier transform algorithm (SIFTA), is used to design flat-topped DOEs. By introducing a signal window, this algorithm can obtain a highly uniform output light spot while ensuring output efficiency. With the DOE designed by SIFTA as a standard, the influence of beam quality is studied. The multimode laser can be approximately described by a GSM beam. The transmission of the GSM beam through a DOE is typically described by using a cross-spectral density (CSD) function, which involves a 4-order Fourier transform and results in significant computational complexity. In order to simplify the calculation, the mode decomposition of CSD is used to study the output light spot of the DOE under GSM beams. The coherent-mode representation uses coherent Hermite-Gaussian modes to express the CSD and requires only a limited number of modes to obtain accurate solutions. Similarly, the random-mode representation uses random modes that conform to statistical relationships to represent the CSD and reduce computational complexity. We also use variable substitution to simplify CSD transmission and find that the DOE output can be directly separated into a convolution of coherent and noncoherent parts.Results and DiscussionsBy using the mode decomposition method, the output light spot distributions of GSM beams with different beam qualities passing through the DOE are calculated (Fig. 3). It can be seen that as the M2 factor increases, the size of the flat-topped area of the output light spot gradually decreases until it deteriorates to a Gaussian-type light spot, and the flat-topped shaping effect of the DOE fails. The flat-topped DOE has a high requirement for beam quality. When the M2 factor increases to 1.5, the output result already has significant deformation. The same conclusion can be obtained using the convolutional representation of the GSM beams (Fig. 4). Only when the width of the convolution kernel is much smaller than the spot size D of the DOE will the convolution result approach the designed output light spot. Therefore, for a given DOE, the applicable maximum M2 factor is related to the output spot size D and the input beam size w0, and they can be expressed as M2=1+πDw0/αλf21/2. Specifically, α is the proportional coefficient between the output spot size and the maximum convolution kernel width; λ is the wavelength; f is the focal length. In addition, the convolution property of DOE output is similar to the image blurring effect caused by the point spread function (PSF) in optical imaging systems. Therefore, methods in photolithography systems can be applied to the design of DOEs with GSM beam shaping. A modified coherent output target pattern is obtained by directly adding serifs to the original target shape, and then a DOE is designed using traditional coherent algorithms. Figure 5 shows an example of designing a DOE with GSM beam shaping using the proximity correction method. The output light spot of the designed DOE under the GSM beam can well match the target pattern. Moreover, the DOE with GSM beam shaping effectively improves the coherent noise phenomenon.ConclusionsIn this work, the SIFTA is used to design an 8-order flat-topped DOE with a square light spot. Using the methods of coherent-mode representation and random-mode representation, the output results of GSM beams with different beam qualities passing through the DOE are studied. It is found that increasing beam quality will lead to a reduction in the size of the flat-topped area of the output light spot and make the DOE ineffective. It is shown that the output light spot is a convolution of coherent and noncoherent parts, and the convolution contribution of noncoherent parts is the cause of light spot degradation. The relationship among the applicable maximum M2 factor of flat-topped DOEs, output spot size, and input beam size is given, which provides a basis for laser selection in practical application. A design method of DOEs with GSM beam shaping is presented, which is expected to achieve the application of DOEs in lasers with low beam quality.

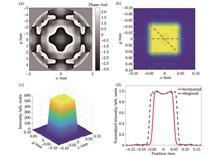

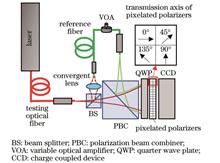

ObjectiveFiber lasers have been widely used in industry, national defense, and other fields, with the advantages of compact structure, high efficiency, and flexible energy transmission. Beam quality is one of the most important parameters of the fiber laser and directly determines the performance and propagation effects of the laser. Various beam quality parameters have been proposed so far, such as factor M2, Strehl ratio, barrel power, and diffraction limit multiples. In all these parameters, factor M2 is a relatively perfect evaluation parameter of the laser beam quality and can reflect both the near-field and far-field characteristics of the laser. With the increase in the laser power, thermal accumulation and nonlinear effects in the laser can cause dynamic changes in the beam quality. Dynamic measurement of factor M2 is beneficial to reveal the physical mechanism of mode field changes of the laser and control the real-time distribution of the laser mode field. Therefore, it has great significance to the design, manufacture, and application of lasers.MethodsIn this study, we propose a dynamic measurement method of factor M2 of a fiber laser using coaxial interferometry. The far-field complex amplitude of the laser under test is determined by the spatially phase-shifted coaxial interferograms. The intensity distributions of the laser at different places in the vicinity of the laser near-field are then obtained through angular spectrum transmission and lens transformation. Factor M2 of the laser is finally determined by fitting the beam diameters at different places. Our new method has a compact structure that avoids manufacturing and assembly errors caused by optical lenses. Compared with off-axis holographic technology, the method described in this study can obtain fast, high-precision, and complex amplitude information with high resolution and realize the fast and accurate measurement of laser beam quality factor M2 factor.Results and DiscussionsIn order to verify the feasibility of the factor M2 measurement method, the measured results of lasers from different fibers are compared with those measured by the commercial factor M2 measurement system (BEAM SQUARED). Two experimental setups are shown in Fig. 7. The fiber laser under test is collimated by an infinitely conjugated microscope objective lens and then reflected into the commercial factor M2 measurement system through two aluminized mirrors, as shown in the dashed box of Fig. 7. Through comparison, our coaxial interferometer in the solid line frame of the figure is more compact. It should be noted that to ensure the consistency of the laser under test in the two measurement systems, we only measure the beam quality of the P-light in the dashed box.Figure 8 shows the factor M2 results of the output from 630-HP fiber (Nufern), 1060-XP fiber (Nufern), and SMF-28e fiber (Corning) with two different systems shown in Fig. 7. The three fibers can transmit 1, 6, and 10 modes in a single polarization direction, respectively. For each fiber laser, we repeat the measurement three times and use their average values as the results. Figure 7 also compares the light intensity at the waist position with different methods. For 630-HP fiber, the measurement result of the proposed method is Mx_c2=1.09 and My_c2=1.03, and that of the commercial BEAM SQUARED is Mx_m2=1.04 and My_m2=1.04. The average measurement error of M2 is 0.028. For 1060-XP fiber, the measurement result of the proposed method is Mx_c2=1.16 and My_c2=1.46, and that of the commercial BEAM SQUARED is Mx_m2=1.10 and My_m2=1.58. The average measurement error of M2 is 0.065. For SMF-28e fiber, the measurement result of the proposed method is Mx_c2=1.95 and My_c2=2.53, and that of the commercial BEAM SQUARED is Mx_m2=2.06 and My_m2=2.45. The average measurement error of M2is 0.043. In the verification experiment of the beam quality, in order to avoid the system error introduced by building the measurement system repeatedly, we only move the fiber to be tested to switch the system. However, when the measurement system is switched, the attitude of the optical fiber will be slightly changed, which will change the output mode field of the laser and thus cause the measurement error of M2 of the beam to be measured. The error is more obvious when the number of modes is large, and excessive modes will lead to mode coupling, which is also the reason for the poor repeatability of multiple measurements of SMF-28e in the above experimental results.ConclusionsWe propose a new method for the dynamic measurement of beam quality factor M2 of a fiber laser by using far-field coaxial interferometry. The far-field complex amplitude of the laser under test is determined by the spatially phase-shifted coaxial interferograms. The intensity distributions of the laser at different places of free space are then obtained through angular spectrum transmission and lens transformation. factor M2 of the laser is finally determined by fitting the beam diameters at different places. In the experiment, we have measured factor M2 of the laser output from fibers with different core diameters at 633 nm. The results are consistent with those determined by the commercial beam quality instrument. In addition, it takes 0.02 s to complete the factor M2 calculation of lasers by the proposed method, which is more than two orders of magnitude faster than that of the commercial instrument. Our new method has a compact structure that avoids manufacturing and assembly errors caused by optical lenses. The method provides a technical means for the quality detection and state monitoring of few-mode fibers and their devices and is conducive to the design, manufacture, and development of fiber lasers and their fiber devices.

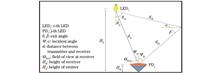

ObjectiveIn an indoor visible light communication (VLC) system, it is indispensable to optimize the uniformity of the system, ensuring the flatness of illumination and the fairness of communication. On the one hand, uniform received illuminance can provide a more comfortable lighting environment, which is also the primary purpose of indoor lighting sources. On the other hand, uniform received optical power can improve the communication quality and fairness of the VLC system. However, the layout and configuration parameters of the lighting source will directly affect the uniformity of the light signals. The existing schemes often optimize the lighting source layout based on the top of the room without considering the deployment height of the lighting source and tend to employ a sequential optimization scheme to improve the lighting source layout and power allocation. Besides, little research is conducted on simultaneous optimization. Therefore, it is extremely important to optimize the uniformity of illuminance and received power of the system in view of the uneven distribution of light signals in the indoor VLC system.MethodsGiven the above problems, a fast whale optimization algorithm (FWOA) based on a fusion improvement strategy is proposed to simultaneously optimize the indoor lighting source layout and power allocation in this study. Considering the lighting source layout and power distribution of the LED deployment height, we adopt a simultaneous optimization scheme to achieve the optimal LED position layout and uniformity of indoor light signals. At the same time, as there may be a wide range of search and long optimization time in simultaneous optimization, the whale optimization algorithm (WOA) is introduced from the perspective of swarm intelligence. The convergence speed and global optimization ability of the algorithm are further improved through the fusion improvement strategy. The specific improvement design is as follows. First, to solve the problem of insufficient convergence speed of the WOA, we employ reverse learning to optimize the initialization stage of the whale algorithm. Second, although the existing knowledge of LED position layout and power distribution scheme facilitates algorithm convergence, the primary stage of the algorithm has not been greatly improved. Therefore, the coefficient matrix is adjusted so that the optimization stage enters the local search more quickly to accelerate the convergence process of the WOA. Third, entering the local search too early will induce the algorithm to fall into the local extremum search condition. For the problem of the algorithm falling into local optimum, a global perturbation search mechanism is added to better balance the search ability of the algorithm.Results and DiscussionsAfter the simultaneous optimization of 16 LED layout models (Fig. 3), five different LED lighting source optimization schemes are selected for comparison (Table 5), and the performance indicators after optimization are listed. The results show that compared with the previous optimization schemes, the illuminance uniformity of the proposed optimization scheme has been improved by 7.39% to 109.03%, and the quality factor of received power has been improved from 5.25 to 12.23, an increase of nearly 133%. After simultaneous optimization of lighting source layout and power distribution, the factor further increases to 15.12. The simultaneous optimization scheme and the proposed FWOA have excellent optimization performance. In addition, the optimal layout (Fig. 6) and received power distribution (Fig. 7) of the system are explored when the number of LEDs is 14, 12, 9, and 6 respectively. It is found (Table 6) that in different scenarios, a better balance between system energy and performance can be achieved by selecting the appropriate number of LEDs. In addition, by introducing the FWOA, the time for simultaneous optimization is also greatly reduced, and the calculation time is shortened to less than 1 h, which verifies the superior performance of the proposed algorithm in terms of convergence speed and optimum search.ConclusionsIn the indoor VLC system considering one reflection, we propose a FWOA based on the fusion improvement strategy and realize the optimal distribution of LEDs by simultaneously optimizing the lighting source layout and power distribution model of the LED deployment height. The research results show that compared with the traditional optimization models, the uniformity of the received power, illuminance, and signal-to-noise ratio (SNR) of the optimized distribution model is better with excellent communication fairness. Besides, the distribution model of different numbers of LEDs in the room is also studied. The results show that as the number of LEDs increases, the system performance exhibits a positive convergence characteristic. By increasing the number of LEDs, the optimized lighting source layout and received power can improve system performance. However, this growth relationship converges when the number of LEDs increases to 12. Then, increasing the number of LEDs can no longer significantly improve system performance, and will in turn increase system energy consumption and optimization time. Therefore, the performance and energy efficiency of the system can be better balanced by selecting the appropriate number of LEDs. This study can provide a valuable reference for the application of VLC in indoor rooms of different sizes.

ObjectiveThe optical manipulation of particles is important in biomedicine, physics, and optics. Given the rapid development of the field of micromanipulation, there is considerable demand for improved functionality of optical tweezers. Currently, conventional optical tweezers and fiber optic optical tweezers can achieve limited particle transport without moving the probe. This limitation may have certain operational and analytical implications in the measurement of the angular frequency of cells for malaria diagnosis using optical capture techniques. Thus, there is a requirement to perform research on controlled and stable particle capture using fiber optic optical tweezers without moving the fiber and reciprocal axial transport between different capture sites. Certain researchers proposed multiple cases of reciprocal particle transport using fiber optic optical tweezers such as mode multiplexed tweezers and dual fiber optical tweezers. Most mode multiplexing tweezers use misaligned fusion to generate LP01 and LP11 modes in single-mode fibers, and these modes can realize the reciprocal motion of particles without moving the fiber. These tweezers have special requirements for shaping the fiber tip and cannot achieve reciprocal motion over long distances owing to the formation of two focal positions. Dual fiber optical tweezers are used to change the force balance of particles by adjusting the magnitude of the optical power emitted from both fiber ends so as to achieve particle capture and reciprocal motion. However, this method is a complex experimental setup, involves cumbersome operation, and has a limited particle transport distance owing to the two fiber ends. Therefore, in this study, a new fiber optic optical tweezer device is proposed that enables stable particle capture and the controlled manipulation of motion distance and velocity without moving the fiber optic probe.MethodsA new optical tweezer is proposed using the balance of solution evaporation force and optical force in which an appropriate amount of blue butyl glue is glued to each of the four corners of the slide, and then a coverslip is placed on the blue butyl glue to form the sample chamber. Here, the height of the port where the fiber optic probe is placed should be higher than the height of the port on the opposite side while the remaining two sides should be in parallel. Such an arrangement increases the contact area between the solution and air at the port where the fiber optic probe is placed, thus increasing the evaporation force of the port. Then, the configured sample suspension is injected into the sample chamber using a syringe. The liquid does not flow out of the sample chamber when the solution fills it owing to the tension between the surface molecules of the solution and sample chamber. The solution will drive the particles to the fiber side owing to the effect of evaporation force, thus providing a force opposite to the optical force. When the optical force and evaporation force of the solution reach equilibrium, a stable capture of particles can be achieved. Moreover, the output power of the fiber probe can be periodically changed by modulating the driving current of the laser. When the optical force is more than the solution evaporation force, the particles will move far away from the fiber tip, but when the optical force is less than the solution evaporation force, the particles will be pulled to the fiber tip. The distance and speed of particle movement can be controlled by adjusting the amplitude and period of the modulation signal. Finite element analysis was used to analyze the optical field distribution at the tip of the fiber and the magnitude of the force on these particles.Results and DiscussionsThe experimental and simulation results demonstrate that the proposed method can achieve stable capture of polystyrene spheres and repeatable axial reciprocal transport without moving the fiber. When the driving current of the laser increases, the peak of the particle motion curve increases as the modulation current of the laser gradually increases, moreover, the motion distance of blob increases. The slope of the particle motion curve increases as the modulation current increasing, indicating that the particle motion is faster. The motion distance of the particle is linear with the modulation current. Moreover, the laser light source with 980 nm used in the experiment can effectively reduce the photothermal effect during particle manipulation and avoid the damage caused during particle transport. As a controllable all-fiber integrated device, the method proposed in this study enhances the functionality and flexibility of the optical manipulation method, providing a potential technical support for its application in the fields of micromanipulation and biomedical research.ConclusionsIn this study, a new single-fiber optical tweezer based on current modulation is proposed to achieve stable particle capture by adjusting the magnitude of the evaporation force of the solution. Moreover, the driving current of the laser is modulated to change the output power periodically and change the force of the particle so as to achieve the controlled manipulation of particle transport distance and transport speed. In this study, a simulation model is built to analyze the force situation of the particles during the motion, and the mechanism of the periodic reciprocating motion of the particles is provided. The experimental results demonstrate that stable particle capture and reproducible axial reciprocal transport are achieved with polystyrene microspheres and yeast cells as target particles without moving the optical fiber. Moreover, the correspondence between the modulation current of the laser and the particle motion distance are analyzed, the particle motion curves under different driving currents are plotted, and the particle motion distance is demonstrated to be linearly related to its modulation current as presented in the fitting equation. As a controllable all-fiber integrated device, the proposed method extends the application possibilities of single-fiber optical tweezers.

ObjectiveIn the past three decades, Brillouin optical time domain reflectometry (BOTDR) has attracted widespread attention from researchers and has been applied to health and safety monitoring in various engineering structures. BOTDR based on short-time Fourier transform (STFT) performs signal processing on the broadband signal of the Brillouin scattering spectrum. The acquisition time of the broadband signal is shorter than that of the frequency sweep system, and therefore system response is swifter. Spatial resolution and frequency resolution are two important performance parameters of the STFT-BOTDR system. The former, spatial resolution, is proportional to optical pulse width and related to the form and length of the window function. The latter is related to the signal-to-noise ratio of the electrical signal, step length of frequency, center frequency of the Brillouin gain spectrum, and full width at half maximum. The two resolutions correlate with each other. Meanwhile, the computing time of the STFT is related to the set parameters of frequency step length and of the sliding window. As a result, improving the system frequency resolution will increase the system's computing time. How can we optimize the photoelectric design and improve the efficiency of the demodulation algorithm under the current system of typical BOTDR to obtain highly enhanced spatial resolution by using economic optical pulses of common width (instead of using narrow pulses and other costly photoelectric modules)? The solution to the question is essential to the extensive and large-scale application of BOTDR in the engineering field.MethodsWe propose a maximum-seeking method based on the BOTDR system, which realizes the rapid positioning of frequency shift and the enhancement of system spatial resolution based on fast Fourier transform (FFT) and STFT. The maximum-seeking method based on the equal division FFT process first performs FFT processing on the time-domain signal and linear fitting and then maximum-seeking processing on the spectrum within the frequency range of 100 MHz on both sides of the Brillouin center frequency. Then, by using the judgment Eq. (5), it determines whether there is temperature variation or strain information and then continuously divides the time-domain signal. Finally, it selects the corresponding length of the time-domain signal to determine the temperature variation or strain frequency shift range, thus realizing the rapid positioning of frequency shift and reducing the system's operation time. The maximum-seeking method based on STFT first processes the time-domain signal with STFT to construct a three-dimensional Brillouin gain spectrum and then builds a Brillouin frequency shift distribution through the maximum-seeking method. The Brillouin frequency shift curve is corrected by using the judgment Eq. (7) in different situations, determining the length of the short-distance temperature variation or strain segment, thereby improving the system's spatial resolution.Results and DiscussionsIn the experiment, we design a BOTDR system based on STFT and quickly locate the heated fiber in a section of 130 m the fiber of 2 km under test. We use the spectrum constructed with equal division FFT (Fig. 6) to determine the position of temperature occurrence based on whether the frequency shift peaks appear in each segment of the spectrum. The traditional STFT-BOTDR system detects fiber temperature variation data of 130 m in 12800 groups of data, with a system operation time of 482 s. By using the maximum-seeking method based on equal division FFT, the system operation time for detecting the temperature variation information of 130 m is reduced to 68 s, which is 1/8 of the original time. The calculation speed is much improved. At the same time, to verify the enhancement of spatial resolution by using the maximum-seeking method based on STFT, we design test fiber 2 (Fig. 7) with heating section temperatures set at 40 °C and 50 ℃. Under the condition of setting the probe light pulse width to 100 ns, we use the traditional peak search algorithm and the maximum-seeking method to process the constructed Brillouin frequency shift distribution (Fig. 9). From the experimental data of Brillouin frequency shift distribution (Fig. 9), it can be seen that after using the STFT-based maximum-seeking method, the system's spatial resolution is optimized from 12.8 m to 1.2 m under the heating section at 40 °C and from 4.6 m to 0.6 m under the heating section at 50 ℃.ConclusionsWe propose a new method to achieve rapid frequency shift positioning and spatial resolution enhancement in the BOTDR by using the maximum-seeking method. By continuously dividing the original signal and performing FFT processing, the maximum-seeking method processes the two-dimensional Brillouin gain spectrum to determine the position range of temperature variation or strain segments, reducing the system's computing time. At the same time, the three-dimensional Brillouin gain spectrum obtained from STFT is processed by using the maximum-seeking method to construct Brillouin frequency shift distribution, reducing the minimum detectable temperature variation or strain segment length and enhancing the system's spatial resolution. In the experiment, an STFT-based BOTDR system is designed. By using the maximum-seeking method based on equal division FFT, the heated fiber of 130 m in the test fiber of 2 km is quickly located, reducing the system's operation time to 1/8 of the original and improving calculation speed. Simultaneously, under the condition of setting the probe light pulse width to 100 ns, a spatial resolution of 0.6 m is achieved on the test fiber of 2 km. The experimental results show that this method can further improve the performance of existing STFT-BOTDR systems without sacrificing other sensing performance parameters. By using the maximum-seeking method based on STFT, the sub-meter level spatial resolution is achieved. Compared with the traditional BOTDR system, the STFT-BOTDR system based on the maximum-seeking method has faster detection speed and better spatial resolution in engineering applications. Besides, this method helps to obtain higher system performance under limited system cost, making it easier for low-cost and high-precision BOTDR systems to be used in larger quantities at construction sites, bridges, and other occasions, thereby accelerating the engineering and large-scale application of distributed fiber optic sensing technology.

ObjectiveIn response to the trade-off of spatial-angular resolution in light field data acquisition due to data flux limitations, we propose a neural radiance field-based method to achieve high-quality light field super-resolution in the angular domain. The occlusion, depth variations, and background interference make super-resolution in the angular domain a challenging task, and it is difficult to express the rich details of the texture. In order to address these issues, many solutions are proposed in terms of novel view synthesis based on explicit and implicit scene geometry. However, both explicit and implicit scene geometry methods generate new viewpoint images of the scene from the geometric features of the scene, which are prone to problems such as noise interference and difficult reconstruction of textural details. Therefore, we propose neural radiance field-based light field super-resolution in the angular domain to reconstruct densely sampled light fields from sparse viewpoint sets, which can avoid errors and noises that may be introduced during image acquisition and improve the accuracy and quality of subsequent three-dimensional (3D) reconstruction.MethodsBy training the neural network with the light field data, the neural radiance field captures the complete scene information, even for novel viewpoints, and thus enhances the scene representation performance. In order to achieve this, a multilayer perceptron is utilized to express a five-dimensional vector function that describes the geometry and color information of the 3D model. The image color is then predicted using volume rendering. The light field is subsequently represented by a neural radiance field, and dense sampling of the angular dimension is achieved by adjusting the camera pose in the light field to obtain new perspectives between the sub-aperture images. This approach overcomes the limitations of prior techniques, including occlusion, depth variation, and background interference in light field scenes. Additionally, the input variable is mapped to the Fourier features of that variable by positional encoding, effectively addressing the challenge of fitting to the high-frequency textural information of the scene.Results and DiscussionsWe propose the neural radiance field-based light field super-resolution in the angular domain by representing the light field by the neural radiance field. The main advantage of the proposed method over the selected experimental methods, such as local light field fusion (LLFF) and light field reconstruction using convolutional network on EPI (LFEPICNN) is that the proposed method is based on the neural radiance field to implicitly represent the light field scene, which can fit an accurate implicit function for the high-resolution four-dimensional light field and accurately represent the light field scene with complex conditions. The experimental results show that the super-resolution method based on the neural radiance field proposed can improve the angular resolution from 5×5 to 9×9. The peak signal to noise ratio (PSNR) is improved by 13.8% on average, and the structural similarity (SSIM) is improved by 9.19% on average (Table 1 and Table 2).ConclusionsWe propose a novel method of neural radiance field-based light field super-resolution in the angular domain. By representing the light field with the neural radiance field, the new perspective images between sub-aperture images are generated to achieve the dense sampling of the angular dimension. In the implicit representation of the scene, position encoding is utilized to map the input variables to their Fourier features to address the problem of difficult fitting for high-frequency information. Experiments on the HCI simulated light field dataset show that the proposed method achieves the best results in several super-resolution metrics and significantly outperforms other methods. Experimental results on the Stanford light field real dataset demonstrate the effectiveness of the method. Overall, the super-resolution method is not only able to deal with occlusions, depth variations, and background interference but also to obtain high-quality reproduction of rich textural details. In the future, the proposed method will be used for real-time rendering and scene reconstruction of large scenes. As a new paradigm for scene representation, neural radiance fields provide new ideas and methods for computational imaging of light fields, and we will further combine scenes' geometric and physical information to improve computational imaging performance and scene representation performance.

ObjectiveThe phase problem is encountered and required to be solved in many optical applications, such as optical metrology, adaptive optics, and biomedical imaging. This problem arises because optical detectors can only record the amplitudes of light beams, and the phases of light beams are missed. However, the transparent object, such as a living cell, does not affect the amplitude of a light beam passing through it, except for a phase shift, and phase imaging is the only way to acquire the structure information of transparent objects. Although the Zernike phase contrast microscope can convert a phase shift of the light beam passing through a transparent object, it is not quantitative and only effective for small phase shifts. Quantitative phase imaging (QPI), which is a label-free and powerful technique for providing quantitative information of transparent objects, attracts growing interest in biomedical applications. Up to now, the mainstream techniques for QPI are digital holography microscopy and phase retrieval. Digital holography microscopy, an interferometric technique, is highly accurate but extremely sensitive to the environment. Phase retrieval can recover the input phase from intensity-only measurements, but it has a stagnation problem and a limited dynamical range. These drawbacks have greatly limited the application of phase retrieval. In this study, a QPI technique based on both wavefront segmentation by a microlens array and multiplane phase retrieval is proposed for achieving QPI of phase objects with a large dynamic range. This technique has the characteristics of high accuracy, fast convergence speed, and large dynamic range, which can be a potential technique for QPI of phase objects in biomedical imaging.MethodsThe proposed method for QPI of a phase object is based on both wavefront segmentation by a microlens array and multiplane phase retrieval. In order to acquire QPI of a phase object with a large dynamic range, the proposed method imposes three constraints on the light field passing through the phase object. The first one is wavefront segmentation, which divides the input wavefront into small ones by a microlens array. The second one involves multiple intensity distributions recorded at different diffraction planes along the axial direction of the microlens array. Due to the abundant information provided by intensity maps at different diffraction distances, phase retrieval algorithms typically converge quickly. The third one is to employ multiple illuminations at different wavelengths. In order to acquire an unwrapped phase imaging of a phase object by the proposed approach, three steps need to be performed: firstly, recording multiple diffraction intensity distributions near the focal plane of the microlens array under different illumination wavelengths; secondly, retrieving the phase of the phase object using multi-plane phase retrieval at different wavelengths, respectively; finally, unwrapping the phase of the phase object using the retrieved phases at the synthetic wavelength. A series of numerical experiments are performed to evaluate the performance of the proposed method. Four different types of aberrations (the phase of a microlens array, complex random combination wavefronts, peak functions, and cell slices) are selected as the phase to be measured for exploring the versatility of the proposed method under the illumination wavelengths of 640 nm and 685 nm. Then, phase retrieval of wavefronts with different peak-to-valley (PV) values is performed to verify the large dynamic range of the proposed method. At last, the convergence of the proposed method is compared with that of the classical phase retrieval algorithm.Results and DiscussionsThe numerical experiments for retrieving four different types of phases show that the proposed method can recover phases of phase objects quickly and accurately (Figs. 5, 6, 7, and 8), which indicates that the proposed method is an effective way for QPI of phase objects. Using the proposed method, phase retrieval of a wavefront with a PV value exceeding 3 μm is achieved under the illumination wavelengths of 640 nm and 685 nm, nearly 5 times one illumination wavelength, indicating that the method covers a large dynamic range (Fig. 9 and Table 1). Furthermore, the comparison of convergence speed shows that the convergence of the proposed method is always better than that of the classical phase retrieval algorithm (Fig. 10 and Table 2).ConclusionsIn this study, a QPI technique for phase objects based on both wavefront segmentation by a microlens array and multiplane phase retrieval is proposed. This technique requires the recording of the intensity distribution maps of different diffraction distances near the focal plane of the microlens array under two different illumination wavelengths. The recorded intensity distribution maps are used to recover the digital complex light field passing through the phase object by multi-plane phase retrieval algorithm. The retrieved digital complex light field phases at different wavelengths are used to calculate the phase image of the phase object at a synthetic wavelength. In the numerical simulation experiments, the QPI for different types of phase objects with different PV values is achieved. It shows that this technique is powerful and efficient for QPI and serves as a promising technique for QPI of phase objects.

ObjectiveIn the vision-guided robot operation, it is necessary to acquire the 3D image of the target in the scene dynamically when there is relative motion between the target and the vision system. Binocular stereo vision can obtain images of the left and right cameras synchronously and quickly and has better dynamic adaptability compared with the 3D imaging methods characterized by scanning, such as laser radar and linear scanning structured light technology. The binocular stereo vision technology assisted by active speckle projection illumination enhances the texture information of the target surface and improves the matching accuracy of corresponding points of the left and right images. Thus, it is a simple and effective approach. At present, the research on the binocular stereo vision system with active speckle projection applied in the air is mature relatively, and many commercial products have been developed, such as the Kinect system of Microsoft, the Vic-3D measurement system of Correlation Solutions, and the Q-400 system of Dantec in Germany. However, when this technology is applied in the water, there are problems such as the pinhole model failure, unsatisfied matching conditions of polar constraints, and the image degradation caused by the underwater environment absorption and scattering of left and right images of the projected speckle. This will affect the matching accuracy of the corresponding points and the underwater 3D imaging effect.MethodsWe rebuild an underwater binocular vision imaging model that actively projects speckle patterns based on the 4D parameter representation of light. The influence of the speckle pattern on the matching accuracy of underwater binocular corresponding points is analyzed based on MATLAB 2015b. The experimental device of underwater binocular vision dynamic 3D imaging system with active speckle projection is mainly composed of a speckle pattern projector and two cameras. The employed speckle pattern projector is a projector. The speckle pattern generated by the computer is projected on the underwater target by the projector, and the left and right cameras synchronously and quickly shoot the underwater moving object with the speckle pattern on the surface. According to the principle of binocular stereo vision, the 3D image of the underwater target is calculated.Results and DiscussionsThe simulation results of the relationship between speckle pattern and matching accuracy of underwater binocular imaging are shown in Fig. 6. With the rising speckle size, the maximum matching error first decreases and then increases. When the speckle size is between 3 pixel and 15 pixel, the maximum matching error is less than 0.7 pixel, and when the speckle size is 9 pixel, the matching accuracy is the highest. This is because when the speckle density is constant, too large or too small speckle is not conducive to matching, and the appropriate size of the speckle can enhance the matching clues of the corresponding points of the left and right images. Fig. 7 shows the influence of speckle density on the matching accuracy when the speckle size is 9 pixel. With the increase in speckle density, the maximum matching error first decreases and then increases. When the speckle density is 1.5% to 3.5%, the matching error is less than 0.6 pixel, and when the speckle density is 2%, the matching accuracy is the highest. Fig. 8 presents that the maximum matching error gradually increases with the decreasing object distance (from 2400 mm to 3600 mm), but the maximum matching error is still less than 1.1 pixel. This method has high matching accuracy. In addition, the purpose of the underwater experiment is to investigate the underwater dynamic 3D imaging error of the established experimental device. The experimental scenario is shown in Fig. 10. The projector is connected to the computer, the speckle density generated by the computer is 2%, and the speckle size is 9 pixel. The projector projects the speckle pattern diagonally downward on the underwater target surface. The underwater target is a standard ball that is suspended by a string at about 3 m in front of the experimental device. As shown in Fig. 11, the standard ball swings on the parallel plane of two cameras, the starting angle is about 50°, and the ball can swing freely when it is released at zero initial speed. It has different instantaneous linear speeds at different positions and has the maximum instantaneous speed at the lowest point. The maximum instantaneous speed is about 1.2 m/s. The 3D point cloud can be calculated based on the captured left and right images. Fig. 13 shows the 3D point cloud images at the corresponding positions in Fig. 12. The PolyWorks software is adopted to fit the obtained 3D point cloud into a sphere (picture-in-picture in Fig. 13). The diameter of the sphere and coordinates of the center of the sphere are obtained (data in the upper right corner of picture-in-picture), and the dynamic measurement error is obtained by comparing the diameter of the standard sphere. The dynamic imaging experiment has been operated on many times. The diameter error of the standard ball at the lowest point and the standard deviation of the measured result are shown in Table 3. The experimental results show that the standard deviation of dynamic measurement error at the maximum instantaneous speed of the standard ball is 2.4 mm with a sound dynamic 3D imaging effect.ConclusionsWe conduct a study on the underwater binocular stereo vision dynamic imaging technology based on the active speckle projection, analyze the influence of active speckle pattern projection on the matching accuracy of corresponding points of underwater binocular stereo vision, and establish the experimental device of underwater binocular vision dynamic 3D imaging system based on active speckle projection. The experimental results indicate that the underwater binocular stereo vision technology with the active speckle projection has a sound dynamic 3D imaging effect, and the dynamic measurement error is within the static error determined by the structure and system parameters of the binocular stereo vision experimental device.

ObjectiveAs the demand for materials with excellent mechanical properties is increasing in scientific research and engineering, determining how to accurately measure the global displacement field of materials in mechanical experiments has become an important scientific research issue. Digital image correlation (DIC) algorithm is a non-contact optical method for measuring global speckle displacement fields based on visible light, which is widely used in experimental mechanics and engineering fields. It has the advantages of low measurement costs, high precision, high sensitivity, strong anti-interference ability, and global measurement. However, traditional DIC algorithm cannot meet the requirements of real-time measurement in practical applications, which greatly limits the development and promotion of this method. With the rapid development of deep learning in computer vision, deep learning methods gradually come into use in DIC algorithm. Thanks to the efficient calculation by general processing unit (GPU) devices, the deep learning-based method for measuring the speckle displacement field can more easily achieve real-time online calculation. Although the method is much faster than the traditional one, the model cannot accurately measure the complex large deformation displacement field in practical applications due to the incomplete dataset. Hence, this work aims to construct a more realistic and comprehensive speckle image dataset with a large deformation displacement field and propose a fast and high-precision deep learning model to measure the displacement field of speckle images with large deformation.MethodsA large number of different types of speckle images is obtained in various ways (Fig. 1) to construct a speckle image dataset with a large deformation displacement field in line with the actual situation. These speckle images are obtained from real experiments and computer simulations under different parameter combinations (Table 1). Then, a composite deformation composed of translation, stretching, compression, rotation, Gauss, shear, and other basic deformations is used to define the random displacement field. Finally, a speckle-image displacement-field dataset with a maximum displacement of 16 pixel and large deformation in line with the actual deformation is produced. In terms of the deep learning network model, a fast and high-precision network model DICNet (Fig. 5) for measuring the speckle images with a large-deformation displacement field is built upon the improvement on UNet. DICNet introduces a convolutional block attention module to increase the efficiency of feature extraction and fusion, uses depthwise separable convolution to replace some ordinary convolutional layers, and increases the convolution kernel size of some convolutional layers. It improves the displacement-field measurement accuracy and reduces the number of parameters of the network model. At the network training stage, a combination of the global shape loss function and global absolute loss function is proposed to improve the convergence speed and accuracy of the model.Results and DiscussionsNetwork selection experiments are conducted to prove that UNet is a rational basic network model for measuring the large-deformation displacement (Table 2). It has higher measurement accuracy of the displacement field, a smaller number of parameters, and faster inference speed. The DICNet proposed in this work is compared with the traditional DIC algorithm and the latest deep learning methods on the self-built dataset, and the performance of these methods in the measurement task of the large-displacement displacement field is comprehensively compared in terms of three indicators, i.e., the root-mean-square error (RMSE), the standard deviation, and mean time (Table 3). The results show that the measurement accuracy of the deep learning method is better than that of the traditional method. The RMSE of DICNet on the training set and the validation set is 0.056 pixel and 0.055 pixel, respectively, which is 67%-70% lower than that of other existing methods and about 39% lower than that of the original UNet network. On the test set, DICNet still has the smallest RMSE and the most stable performance (Table 4). The experiments of DICNet are also conducted on the public DIC challenge dataset (Fig. 8). The results show that the measurement results of the proposed method are highly consistent with those of the traditional algorithms, which indicates that the proposed method still has good generalization performance on the public dataset.ConclusionsThis work proposes a displacement field measurement method for speckle images with complex large deformation. This method uses the convolutional block attention module and depthwise separable convolution to improve the UNet network for measuring large deformation displacement fields. To train the model, this work constructs a dataset containing multiple types of speckle images and complex large-deformation displacement fields in line with the real situation and proposes a new loss function. This method is compared with traditional DIC algorithm and the latest deep learning methods on the self-built dataset and public dataset separately. The results show that the measurement results of DICNet are highly consistent with those of other methods, and the method in this work achieves the highest average accuracy with the smallest number of model parameters. The measurement speed of the displacement field is far higher than those of traditional methods, which can meet the actual real-time measurement requirements of a large deformation displacement field. The source code and network pre-trained weights of this study are available at https://github.com/donotbreeze/Large-deformation-measurement-method-of-speckle-image-based-on-deep-learning. The dataset is available athttps://pan.baidu.com/s/1KzC9g_GIkvMnGFumDYGyBA?pwd=fd5x.

ObjectiveAt present, optical elements are almost always employed in the utilization and development of a wide variety of optical instruments. Due to improper handling during processing, scratches can appear on the surface of optical elements. Scattered light from surface scratches can reduce the beam quality, increase system noise, and reduce contrast, thereby affecting the performance and normal operation of the entire optical system. Therefore, the detection of surface scratches on optical elements is significant. As the existing light scattering methods can only detect the surface scratches of optical elements, the CCD or CMOS sensor can only receive the light field distribution formed by the scattering of surface scratches, from which the two-dimensional size of the surface scratches can be obtained. However, the depth information of the scratches cannot be detected directly. Since up to 80% of the surface information such as depths and shapes of surface scratches is characterized by phase information, we propose to apply the angular spectrum iterative algorithm and transport of intensity equation (TIE) + angular spectrum iterative algorithm to the scattering method for detecting the depth of surface scratches on optical elements. Finally, a scattered light field acquisition optical path is put forward to detect the depths of surface scratches on optical elements.MethodsIn the detection of surface scratch depths, the angular spectrum iterative algorithm and transport of intensity equation (TIE) are applied to the detection of surface scratch depths by scattering method. The scratch depths can be obtained from the reconstructed surface scratch phase distribution by the phase modulation characteristics of surface scratches. In the simulation section, the forward and reverse propagation relationship models between the optical element surface and the CMOS receiving surface are built by the angular spectrum transfer function. Based on this model, the scattered light field distributions of surface scratches with different shapes are obtained. Then, the angular spectrum iterative algorithm and TIE+angular spectrum iterative algorithm are adopted to reconstruct the scratch phases. The reconstruction process of the angular spectrum iterative algorithm is to select a random phase as the initial phase of the CMOS receiving surface and iterate repeatedly between the two surfaces. Additionally, the calculated value is replaced with the amplitude value of the initial simulated scattered light field intensity and the unit amplitude of the optical element surface until the defined error reaches the preset precision or the set maximum number of iterations. The phase distribution of scratches on the surface of optical elements can be obtained, and the depths of scratches can be calculated by the modulation characteristics of the surface scratches to the phase. TIE+angular spectrum iterative algorithm is similar to the reconstruction process of the angular spectrum iterative algorithm, which means that the initial random phase is replaced by the phase calculated by TIE. Finally, the effectiveness of the two reconstruction algorithms is evaluated from the strength error, correlation coefficient, and relative root mean square error. In the experimental section, the scattered light field acquisition device is built and the scattered light field distribution on the surface of the optical element is received by the CMOS detector. At the same time, the reconstructed scratch distribution on the surface of the optical element is reconstructed by the above two reconstruction algorithms, and then the surface scratch depth size is calculated. Finally, the reconstruction results of the two algorithms are compared with the detection results of the white light interferometry, and the relative errors of the two algorithms are calculated.Results and DiscussionsIn the simulation section, scratch distribution and scattering field distribution of three different shapes, which are square scratch, triangular scratch, and oval scratch, are first simulated (Figs. 4 and 5). Then the scratch scattering field distribution is employed as the initial input of the angular spectrum iterative algorithm and TIE+angular spectrum iterative algorithm respectively to reconstruct the phase distribution of scratches on the surface of optical elements. The depth information of surface scratches is obtained based on the phase modulation characteristics of surface scratches (Figs. 6 and 7). Finally, we evaluate the effectiveness of the two algorithms from the strength error, correlation coefficient, and relative root mean square error. From the perspective of the intensity error evaluation, the number of iterations is set as 5000. The rising number of iterations leads to decreasing intensity error. Compared with the angular spectrum iterative algorithm, TIE+angular spectrum iterative algorithm has a smaller intensity error and faster convergence speed (Fig. 8). From the evaluation of the correlation coefficients, the correlation coefficients of both reconstruction algorithms are greater than 0.9 and the reconstructions are both highly correlated. However, the TIE+ angular spectrum iteration algorithm has a greater correlation coefficient and a higher degree of correlation compared to the angular spectrum iteration algorithm. From the evaluation of the relative root mean square error, the relative root mean square error of the TIE+angular spectrum iterative algorithm is 5.2%-5.3%, and that of the angular spectrum iterative algorithm is 5.8%-6.6%. The simulation results show that the scratch depth reconstructed by TIE+angular spectrum iterative algorithm is more accurate. In the experimental section, the scattered light field distribution of scratches on the surface of optical elements is collected experimentally, and the scratch depth on the surface of optical elements is reconstructed through the angular spectrum iterative algorithm and TIE+angular spectrum iterative algorithm (Fig. 12). Finally, the reconstructed results are compared with those of the white light interferometry, and the relative error range of the angular spectrum iterative algorithm is 1.35%-4.21%. The relative error range of the TIE+angular spectrum iterative algorithm is 0.90%-3.73%. The experimental results indicate that the scratch depth reconstructed by TIE+angular spectral iteration algorithm is more accurate.ConclusionsIn this paper, we apply the angular spectrum iteration algorithm and TIE+angular spectrum iteration algorithm to the surface scratch depth detection of optical elements by scattering method. During the experiment, only one image of the optical element surface light field distribution needs to be collected, which is employed as the initial input of two reconstruction algorithms to reconstruct the phase information of the scratch. Then the depth information of the scratch is calculated according to the modulation characteristics of the surface scratch to the phase. Compared with the angular spectrum iterative algorithm, TIE+angular spectrum iterative algorithm has a smaller scratch depth reconstruction error, faster convergence speed, higher reconstruction accuracy, and better reconstruction effect.

ObjectiveWith the progress of optical communication technology, optoelectronic devices are developing toward low power consumption, large data bandwidth, and high integration. The electro-optic modulator (EOM), a key optoelectronic device, plays a vital role in connecting the electric and optical fields, where the on-chip integration, high efficiency, low power consumption, and large bandwidth are always the crucial development directions of EOMs. Up to now, lithium niobate (LN) is still one of the most ideal materials for electro-optic modulation due to its excellent properties of wide transparent windows, strong Pockels effect, as well as stable physical and chemical features. However, the currently used EOMs are based on the bulk LN material, and the key modulation waveguides are formed by titanium diffusion or proton exchange on the bulk LN. Therefore, the formed waveguides have a low refractive index contrast (?n≈0.02), which leads to a large waveguide size required to well confine the optical mode, and the EOM footprint is also relatively large inevitably. Recently, the thin-film lithium niobate (TFLN) wafer has been fabricated by the smart cutting process and made available by several commercial companies. The TFLN wafer not only inherits some excellent material properties of LN but also has a high refractive index contrast (?n≈0.8), a feature considerably beneficial for shrinking the device footprint and making the on-chip compact integration available. In general, the TFLN-based EOMs can be divided into two types. One performs etching on the TFLN wafer to form the required waveguide, and the other deposits other high refractive index materials atop or below the TFLN wafer to form the waveguide, where the TFLN wafer does not need to be etched. By comparison, the etching-free TFLN scheme can reduce the fabrication difficulty. Therefore, we focus on the etching-free TFLN structure and propose a heterogeneously integrated EOM using embedded filling layers.Methods The structure of the proposed device is divided into three partsthe structural design of the modulation waveguide, the electrode structure, as well as the coupling structure between the modulation region and the input/output waveguides. The silicon nitride (SiNx) modulation waveguide is under the TFLN, and a layer of BCB is filled between them to reduce the half-wave-voltage length product (VπL) and optical loss. On this basis, we employ such structure as the interference arms in a Mach-Zehnder interferometer (MZI) waveguide structure, where the modulation electrodes are arranged as a ground-signal-ground (G-S-G) configuration. The modulation electrodes are deposited on the TFLN, and a SiO2 layer is sandwiched in between as an isolating layer to further reduce the optical loss, microwave loss, and the refractive index of the effective mode. Additionally, we propose an inverted stepped TFLN structure to achieve efficient coupling between input/output waveguide and modulation waveguide. Finally, we simulate and analyze the proposed structure using tools of COMSOL Multiphysics and FDTD Solutions to demonstrate its high-speed modulation performance.Results and DiscussionsThe BCB layer is filled between bottom SiNx modulation waveguide and TFLN. We simulate the influence of different thicknesses of the BCB layer and the SiO2 layer on VπL and the optical loss of the device. Results show that the proposed structure can effectively reduce VπL and optical loss (Fig. 2). At the same time, we optimize the electrode gap, and the optimum VπL of the device is 1.77 V·cm (Fig. 4). Further, we fill the SiO2 layer between modulation electrode and TFLN layer. The filled SiO2 layer can not only further reduce the optical loss (Fig. 3) and microwave loss (Fig. 5) of the device but also contribute to the index matching (Fig. 7). The high-speed analysis shows that the 3 dB modulation bandwidth of our proposed modulator is 140 GHz (Fig. 8). Finally, we design an inverted stepped thin-film structure, which can reduce the refractive index mismatch of the effective mode between SiNx waveguide region and SiNx-LN hybrid region. The simulation results show that the single-ended coupling loss of this structure is 0.73 dB (Fig. 9).ConclusionsIn this paper, we propose a heterogeneously integrated EOM based on TFLN. The modulation waveguide is formed by the bottom SiNx and top TFLN that are sandwiched by a BCB layer. The modulation electrodes are deposited on the TFLN, and a SiO2 layer is sandwiched in between as an isolating layer, which contributes to the index matching and the reduction in optical loss and microwave loss. Further, we construct an MZI-based EOM, where an inverted stepped thin-film structure is proposed to achieve the efficient coupling between input/output waveguide and modulation waveguide. After the high-speed matching design and optimization of the proposed electro-optic modulator, we obtain a VπL of 1.76 V·cm and a 3 dB bandwidth of 140 GHz in a modulation length of only 5 mm, and the single-ended coupling loss is reduced from 1.23 dB to 0.73 dB. Given these characteristics, we believe the proposed device structure could be applied in the large-bandwidth design of the TFLN-based EOM and would boost the development of TFLN-based photonic integrated devices.