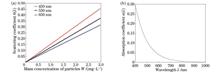

**Objective** Underwater quantum communication is of great significance to seabed exploration and global communication, and its quality is affected by the complex and changeable marine environment. Stable, high-quality transmission of optical quantum signals ensures that information is transmitted accurately. During underwater quantum communication, marine environmental factors such as seawater molecules, algal suspended particles, and marine sediment particles inevitably attenuate the communication link. However, the effect of marine non-pigment agglomerated particles on the performance of underwater quantum channels has not yet been studied. Non-pigment suspended particles in seawater mainly include suspended sediment particles, mineral particles, and excreta of marine organisms. During underwater quantum communication, collisions between non-pigment agglomerated particles and light quantum signals attenuate the optical signals, which attenuates the communication link and reduces the reliability of underwater quantum communication. The simulation results in this study are expected to provide a reference for the design and optimization of quantum communication systems in the marine environment.

**Methods** Marine non-pigment suspended particles are agglomerated particle systems formed by multiple single particles. After linear superposition approximation treatment, marine non-pigment agglomerated particles of different sizes can still be regarded as equivalent spherical particles. Firstly, Mie scattering theory and the Gordon model are used to analyze the absorption and scattering characteristics of marine non-pigment agglomerated particles. Then, a model relating the mass concentration of marine non-pigment agglomerated particles to link attenuation is built, and simulation experiments are carried out. 
After that, a physical model for the quantum security key generation rate under the influence of marine non-pigment agglomerated particles is constructed, and a simulation experiment is conducted for the prepare-and-measure quantum key distribution system. For quantum communication networks based on entangled states, the influence of marine non-pigment agglomerated particles on the establishment rate of underwater quantum channels is analyzed. In addition, the capacity of the amplitude damping channel in an environment containing marine non-pigment particles is calculated.

**Results and Discussions** For the same wavelength, the Mie scattering coefficient of marine non-pigment agglomerated particles is proportional to the mass concentration; for the same mass concentration, a shorter incident wavelength means a greater scattering coefficient. Moreover, the absorption coefficient of marine non-pigment agglomerated particles is exponentially related to the incident wavelength (Fig. 1). The link attenuation decreases as the wavelength of the incident light signal increases, and the larger the mass concentration of marine non-pigment agglomerated particles, the more pronounced this decrease (Fig. 2). When the optical signal wavelength is constant, the link attenuation rises with the mass concentration of non-pigment agglomerated particles (Fig. 3). The generation rate of the quantum security key grows with the wavelength of the incident light signal and drops as the mass concentration of marine non-pigment agglomerated particles increases (Fig. 4). The establishment rate of quantum channels is greatly affected by fidelity. For the same fidelity, the establishment rate increases with the wavelength of the incident optical signal, but the overall upward trend is gentle (Fig. 6). 
The capacity of the amplitude damping channel shrinks as the mass concentration of marine non-pigment agglomerated particles and the transmission distance increase (Fig. 9). Overall, a longer incident wavelength indicates better quality of underwater quantum communication when only marine non-pigment agglomerated particles are considered.

**Conclusions** In the present study, the scattering and absorption characteristics of non-pigment agglomerated particles are analyzed according to the Mie scattering theory and the Gordon model. For different wavelengths of optical signals, models relating the mass concentration of marine non-pigment agglomerated particles to the link attenuation, the generation rate of the quantum security key, the channel establishment rate, and the capacity of the amplitude damping channel are constructed and simulated. The simulation results show that for quantum communication using 510-nm optical signals, the link attenuation increases from 2.562 to 13.100 when the mass concentration of non-pigment agglomerated particles increases from 0 to 3 mg/L. When 580-nm signals are used, the mass concentration of non-pigment agglomerated particles is 1.2 mg/L, and the transmission distance increases from 0 to 2 km, the generation rate of the quantum security key decreases from 2.17×10⁻⁴ to 8.30×10⁻⁵. When 540-nm optical signals are used for underwater quantum communication and the fidelity increases from 0.60 to 0.99, the quantum channel establishment rate drops from 93.61 to 7.39 pairs/s. For underwater quantum communication with 580-nm optical signals, when the mass concentration of non-pigment agglomerated particles is 1.8 mg/L and the transmission distance increases from 0.5 to 10 km, the channel capacity decreases from 0.726 to 0.040. 
When the transmission distance is greater than 7 km, and the mass concentration is greater than 2.1 mg/L, the capacity of the amplitude damping channel is less than 0.069, and the communication efficiency is extremely low.
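
The dependence of link attenuation on mass concentration and distance described above can be illustrated with a short Python sketch. It assumes a Beer-Lambert link whose beam attenuation coefficient grows linearly with particle mass concentration, in line with the stated proportionality of the scattering coefficient. The function names and the coefficients `c_water` and `k_particle` are hypothetical placeholders, not values from the study.

```python
import math


def attenuation_coefficient(mass_conc_mg_per_l, c_water=0.05, k_particle=0.3):
    """Beam attenuation coefficient (1/m) at a fixed wavelength.

    c_water and k_particle are illustrative placeholders (assumptions),
    standing in for the values a Mie/Gordon-type model would supply.
    """
    return c_water + k_particle * mass_conc_mg_per_l


def transmittance(c_per_m, distance_m):
    """Fraction of optical power surviving the path (Beer-Lambert law)."""
    return math.exp(-c_per_m * distance_m)


def link_attenuation_db(c_per_m, distance_m):
    """Link attenuation in dB for attenuation coefficient c over the path."""
    return 10.0 * math.log10(math.e) * c_per_m * distance_m


if __name__ == "__main__":
    for conc in (0.0, 1.0, 2.0, 3.0):
        c = attenuation_coefficient(conc)
        print(conc, link_attenuation_db(c, distance_m=10.0))
```

Under these assumptions the attenuation in dB is linear in both concentration and distance, which reproduces the qualitative upward trend reported for Fig. 3 but not the specific figures quoted in the conclusions.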

**Objective** As the primary means of exploring and transmitting ocean information, the acquisition and analysis of underwater video images have become a research hotspot in recent years. To solve the problems of color shift, low contrast, and blurred edge details caused by the absorption and scattering of light propagating underwater, researchers have developed enhancement-based and restoration-based methods to clarify underwater images. Enhancement-based methods, such as histogram equalization, white balance, and wavelet transform, apply digital image processing techniques to improve image quality in the spatial or transform domain. Recovery-based methods restore image clarity by solving the underwater imaging model. The main methods include improving the dark channel prior (DCP), fitting the background light scattering component, and suppressing inhomogeneous illumination. However, the above-mentioned enhancement methods do not consider the physical propagation properties of underwater light, resulting in localized over-enhancement of the images and poor subjective evaluation. The underwater imaging model adopted in the recovery methods ignores the difference in transmittance between the direct attenuation component and the back scattering component, resulting in poor robustness of the model. In addition, during the parameter solution, the complex underwater environment tends to interfere with the correct estimation of the background light and transmittance parameters by these methods. Therefore, this paper builds a more robust underwater imaging model. 
To reduce the interference of image distortion with the parameter solution, the original image is preprocessed with red channel compensation, and the model is solved on the preprocessed image to restore the underwater image.

**Methods** Based on the traditional underwater imaging model, this paper investigates the parameter dependence of transmittance in the direct attenuation component and the back scattering component and builds a dual transmittance underwater imaging model. In solving the model, firstly, a red channel compensation algorithm that exploits the pixel correlation among the three channels is designed to preprocess the image and reduce the interference of color distortion with the parameter solution. Then, based on the quadtree hierarchical search algorithm, three background light candidates are obtained by searching with smoothness, color difference, and luminance features, and the background light values are selected for the color channels according to the input image's luminance and edge intensity. The transmittance of the back scattering component is obtained by improving the dark channel prior and adding a saturation component refinement, and degradation-free pixel points are employed to obtain the direct component transmittance. Finally, the recovered image is obtained by inversely solving the imaging model and applying histogram stretching that satisfies the Rayleigh distribution to eliminate the effect of inhomogeneous illumination on imaging.

**Results and Discussions** The test dataset is classified by imaging scene into color distorted images, fogged images, and images with artificial light, and the classified images serve as the research objects. First, an ablation study is performed to verify the proposed model's validity. The results in Fig. 
8 show that the dual transmittance can more accurately describe the underwater light attenuation characteristics, and the incident light attenuation term can improve the overall light-dark contrast of the image. Then, an underwater color correction experiment with a color plate (Figs. 9 and 10) is carried out and compared with common underwater image restoration algorithms. Figs. 9 and 10 indicate that the proposed method accurately restores the colors of both the grayscale blocks and the color blocks. Finally, three sets of classified images are selected for testing (Figs. 11, 12, and 13), and the results of all methods are evaluated by the UIQM, NIQE, and entropy metrics. The experimental results show that the proposed method can not only accurately correct the color distortion of the images in different scenes but also restore the detailed information more accurately, with precise edge contours.

**Conclusions** This study builds a robust underwater imaging model with dual transmittance to address the color shift, blurred details, and low contrast of images obtained in different underwater scenes. A red channel compensation algorithm is proposed to preprocess the source images, and the model is then solved on the preprocessed image to restore the underwater image. The subjective and objective evaluation results show that, compared with common underwater image clarification methods, the proposed method performs better in terms of color balance and restores detail information more realistically when applied to images collected from different underwater scenes. In this paper, building the dual transmittance underwater imaging model plays a crucial role in image recovery. Before solving the model, the preprocessing step improves the parameter estimation accuracy but reduces the operational efficiency of the algorithm. 
It will be essential to work in the future on how to perform the noise reduction process for underwater images more quickly and accurately.
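
The inverse solution of a dual transmittance model can be sketched in a few lines of Python. The abstract does not state the model equation, so this sketch assumes the per-channel form I = J·t_D + B·(1 − t_B), where t_D is the direct-component transmittance, t_B the back scattering transmittance, and B the background light. The function names and the lower bound `t_min` are hypothetical, and the paper's parameter estimation steps (quadtree search, improved dark channel prior) are not reproduced here.

```python
def degrade_pixel(scene, background, t_direct, t_backscatter):
    """Forward model (assumed form): I = J * t_D + B * (1 - t_B)."""
    return scene * t_direct + background * (1.0 - t_backscatter)


def restore_pixel(observed, background, t_direct, t_backscatter, t_min=0.1):
    """Invert the assumed model for one channel value in [0, 1].

    t_min is a hypothetical floor that keeps the division stable where
    the direct transmittance is close to zero.
    """
    backscatter = background * (1.0 - t_backscatter)
    scene = (observed - backscatter) / max(t_direct, t_min)
    # Clip to the valid intensity range.
    return min(1.0, max(0.0, scene))


if __name__ == "__main__":
    observed = degrade_pixel(0.6, background=0.3, t_direct=0.5, t_backscatter=0.7)
    print(restore_pixel(observed, background=0.3, t_direct=0.5, t_backscatter=0.7))
```

Setting `t_direct` equal to `t_backscatter` reduces this to the traditional single-transmittance model, which makes the role of the second transmittance easy to test in an ablation.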

**Objective** Atmospheric turbulence causes laser scintillation, beam wander, beam spreading, and angle-of-arrival fluctuation. The atmospheric coherence length (r0) proposed by Fried is a parameter related to the wavefront-phase structure function and an important parameter for characterizing the intensity of atmospheric optical turbulence. Hence, ground-based in-situ measurement of r0 is of great significance for studying the optical wave propagation effect in the atmosphere. The differential image motion monitor (DIMM), a traditional method of measuring r0, has been widely studied because it avoids measurement errors caused by vibration of the observation and tracking equipment and by unstable tracking. However, for long-distance optical turbulence measurement, DIMM needs a power supply and working staff to keep the light beacon images in the center of the CCD image sensor. In this study, the original passive beacon is converted into an active illumination beacon to realize single-ended optical turbulence measurement inspired by DIMM. This lidar-style r0 measurement still faces the uncertainty of reflecting media in atmospheric turbulence. Therefore, this paper proposes a fold-path r0 measurement method based on laser-active illumination of a 3M reflective film. With the help of a large-area 3M film, the observed area and distance can be enlarged, and the laser source and CCD imaging system can be integrated into one single-ended unit. With this new experimental layout, the results of the fold-path r0 measurement can help validate the fold-path optical wave propagation model and explore long-range single-ended optical turbulence telemetry.

**Methods** First, the theory of atmospheric coherence length on the fold-path link is summarized. 
Then, all-day laser-active illumination imaging data are analyzed by switching between the active illumination beacon and a conventional 650 nm laser beacon for the measurement of r0. In addition, comparative experiments between the traditional DIMM and the fold-path DIMM are conducted on a 1.1 km optical propagation link. The system is mainly composed of the YSL-SC-PRO-M fiber laser, a 3M microcrystal prism array, a beam expander system, a Meade ACF14F8 telescope (the receiver's aperture is Φ=355 mm, and its sub-aperture is Φ=120 mm), and an Allied Vision GT 1920 CCD. One DIMM device is switched between the different beacons to measure r0, which eliminates the measurement error caused by using different DIMM devices. Echo beam wander experiments are carried out on the same 1.1 km optical link, with an atmospheric coherence meter recording the atmospheric optical turbulence conditions, to validate the jitter-based turbulence inversion methods for the active illumination beacon. A CMOS camera (with a resolution of 1936 pixel×1096 pixel) coupled with a telescope (Φ=200 mm, f=1200 mm) is utilized to record the laser speckle images. A 10 nm optical filter is set up in front of the camera to reduce the sky background radiance. All the above units are mounted on a sturdy platform. A square 1 m 3M film pasted on a flat carbon fiber board is placed at a certain distance perpendicular to the laser beam. After that, turbulence-degraded laser speckle images are obtained by the fold-path imaging method, and the multi-day laser-active illumination imaging data are analyzed.

**Results and Discussions** A comparative statistical analysis of the fold-path DIMM and traditional DIMM measurement results is shown in Fig. 3. The data of the two methods exhibit good consistency, and fold-path DIMM can also reveal the turbulence strength variation at the transition moment. 
The comparison with another traditional DIMM measurement result shows that the deviation between the results obtained by the two methods is about 2.5%. The measurement result of fold-path DIMM is slightly smaller than that of traditional DIMM (Fig. 4), which means the laser speckle reflected by the prisms embedded in the film has been severely degraded. Thus, the backward transmitted laser speckle should be treated as non-coherent, unlike the coherent beacon employed in traditional DIMM. The comparison of Cₙ² inversion by centroid drift with DIMM for unfocused and focused beams indicates that the results of the focused beam are consistent with those of the DIMM measurement (Figs. 5 and 6). The coefficient of determination R² under the unfocused condition is 0.88, while that under the focused condition is 0.94. Therefore, this paper presumes that a large laser divergence angle causes part of the laser energy to miss the 3M film, so that it is not reflected and does not reach the receiving CMOS sensor.

**Conclusions** In this paper, the 3M reflective film is regarded as a reflective surface with a certain correlation length and root-mean-square height. Because of its high reflectivity, fine particles, uniform reflection, and ease of being spliced into a large-area array, the 3M reflective film is employed as a cooperative target for laser-active illumination imaging. Compared with the results of the traditional DIMM, those of fold-path DIMM have an average deviation of 2.5% throughout the day, which is attributed to the backward transmitted laser speckles being non-coherent. The relative position of the laser emission system and the telescope receiving system is fixed, which simplifies the aiming of the experimental optical path. The results show that the coefficient of determination of the image motion method is ca. 0.94, which allows the system to be further simplified for the telemetry of optical turbulence. 
Additionally, this transceiver method can eliminate the influence of platform vibration. Combined with a pulsed laser and a high-sensitivity camera, it can be applied to measure the atmospheric coherence length at different distances. Relevant experimental results are conducive to validating the fold-path optical wave propagation model and exploring long-range single-ended optical turbulence telemetry.
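
For orientation, the conversion from differential image motion to r0 in a conventional one-way DIMM can be sketched as follows. The sketch uses the widely quoted longitudinal relation of Sarazin and Roddier, σ² = 2λ²·r0^(−5/3)·(0.179·D^(−1/3) − 0.0968·d^(−1/3)), with D the sub-aperture diameter and d the sub-aperture separation. This is an assumption about the processing, not the fold-path expression derived in the paper, and the 0.25 m separation in the usage example is a hypothetical value.

```python
def _geometry_factor(aperture_m, separation_m):
    """Longitudinal DIMM geometry term for sub-aperture D and separation d."""
    return 0.179 * aperture_m ** (-1.0 / 3.0) - 0.0968 * separation_m ** (-1.0 / 3.0)


def dimm_variance(r0_m, wavelength_m, aperture_m, separation_m):
    """Longitudinal differential image motion variance (rad^2) for a given r0."""
    geometry = _geometry_factor(aperture_m, separation_m)
    return 2.0 * wavelength_m ** 2 * r0_m ** (-5.0 / 3.0) * geometry


def r0_from_variance(variance_rad2, wavelength_m, aperture_m, separation_m):
    """Invert the relation above to estimate r0 (m) from a measured variance."""
    geometry = _geometry_factor(aperture_m, separation_m)
    return (2.0 * wavelength_m ** 2 * geometry / variance_rad2) ** (3.0 / 5.0)


if __name__ == "__main__":
    # 650 nm beacon and 120 mm sub-apertures as in the text; separation assumed.
    var = dimm_variance(0.05, 650e-9, aperture_m=0.12, separation_m=0.25)
    print(var, r0_from_variance(var, 650e-9, 0.12, 0.25))
```

Because the estimate depends only on the difference of the two image centroids, common-mode motion from mount vibration cancels, which is the property the abstract relies on.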

**Objective** Atmospheric visibility is an important and widely used indicator of atmospheric quality and is of great significance for daily life and traffic safety. According to the observation path, visibility can be defined as horizontal visibility or slant-range visibility. In some cases, slant-range visibility may differ from horizontal visibility to some degree. In civil aviation, horizontal visibility or ground visibility is normally measured by a ground-based instrument called a transmissometer. Reported horizontal visibility is employed for air traffic management. However, slant-range visibility is more important for pilots during flights. Thus far, slant-range visibility has not been applied in air traffic management because of the absence of feasible methods for deriving or measuring it. The space-borne lidar CALIOP carried by the sun-synchronous satellite CALIPSO is capable of obtaining the atmospheric properties along the vertical direction, and therefore, it is a good candidate for deriving the slant-range visibility. We aim to develop an effective method of deriving slant-range visibility with high accuracy based on the aerosol data provided by CALIPSO and the theory of atmospheric radiative transfer.

**Methods** We derive the slant-range visibility for North China from the aerosol data provided by the CALIPSO satellite and the SBDART atmospheric radiative transfer model. Firstly, the aerosol optical properties are characterized by the optical depth, single scattering albedo, and scattering phase function provided by the CALIPSO aerosol products. They are used to solve the atmospheric radiative transfer equation with the SBDART model. As a result, the spatial sky background radiance is obtained. The target-background brightness contrast is then obtained and adopted to determine the slant-range visibility based on the visibility definition. 
Consequently, the atmospheric layer is determined for the desired brightness contrast. Finally, the δ-two-stream approximation is utilized to estimate the sky background radiation with high spatial resolution within the specific atmospheric layer. The slant-range visibility is hence derived with high accuracy.

**Results and Discussions** The radiance on the ground obtained by the proposed method has a relative difference of 12.3% from that given by the MERRA-2 dataset (Fig. 7). The results show that the sky background radiance obtained by the proposed method is accurate and can be applied for deriving the slant-range visibility. The slant-range visibility obtained with and without the δ-two-stream approximation is compared (Table 1). The results show that the visibility under the two conditions differs significantly for low-visibility weather and small observation pitch angles, while the two tend to agree as the atmospheric visibility and pitch angle increase. When the slant-range visibility is less than 1 km, the average relative error between the slant-range visibility with and without the δ-two-stream approximation is about 15.0%, while the average relative error is about 6.2% when the slant-range visibility is less than 10 km. For low-visibility weather and small pitch angles, the slant-range visibility obtained by the proposed method is expected to have higher accuracy because the sky-background radiance is derived with higher spatial resolution. For comparison, slant-range visibility is also computed from an empirical expression. For low-visibility weather, the two sets of results correlate well, with a correlation coefficient of 0.928 (Fig. 10). 
Meanwhile, when the slant-range visibility is less than 1 km, the average relative error of the slant-range visibility retrieved by this method is about 7.1% compared with that of the empirical expression. Nevertheless, the empirical expression is valid only for air-to-ground observation angles smaller than 15° (Table 2). The proposed method is suitable for deriving slant-range visibility with high accuracy over a wide range of observation angles.

**Conclusions** We propose a new method of deriving slant-range visibility based on the aerosol products provided by the CALIPSO satellite and the atmospheric radiative transfer model SBDART. The δ-two-stream approximation is introduced for the first time in solving the atmospheric radiative transfer equation using the SBDART model. As a result, the sky-background radiance is obtained with high spatial resolution, which enables us to derive the slant-range visibility with high accuracy. We derive the slant-range visibility of North China. The results show that in certain circumstances the slant-range visibility obtained by the proposed method has good consistency with that obtained without employing the δ-two-stream approximation, and the average relative error between them is about 15.0%. This is also the case when the slant-range visibility derived by the proposed method is compared with that obtained by the empirical expression. When the slant-range visibility is less than 1 km, the average relative error of slant-range visibility is about 7.1%, which verifies the reliability of the slant-range visibility calculation results. This indicates that the proposed method also has excellent performance and solves the problems of the standard SBDART model under small pitch angles. Additionally, the proposed method exhibits higher accuracy of slant-range visibility and may be applied to a wide range of weather and observation conditions.
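
The idea of determining visibility from a contrast threshold along a slanted path can be illustrated with a much simpler model than the one used in the study. The Python sketch below assumes Koschmieder-type contrast decay through horizontally uniform layers, so the apparent contrast falls as exp(−τ) with the slant optical depth τ, and it ignores the variation of sky background radiance that the paper obtains from SBDART. The function name, the layer representation, and the 0.02 contrast threshold are assumptions for illustration.

```python
import math


def slant_visibility(layers, pitch_deg, threshold=0.02):
    """Slant range (m) at which contrast transmittance falls to `threshold`.

    layers: list of (thickness_m, extinction_per_m) ordered from the ground up.
    pitch_deg: elevation angle of the line of sight above the horizon.
    Returns None if the threshold is not reached within the given layers.
    """
    target_tau = -math.log(threshold)
    sin_pitch = math.sin(math.radians(pitch_deg))
    tau = 0.0
    slant_range = 0.0
    for thickness, sigma in layers:
        path = thickness / sin_pitch  # slant path length inside this layer
        if sigma > 0.0 and tau + sigma * path >= target_tau:
            # Threshold is crossed inside this layer.
            return slant_range + (target_tau - tau) / sigma
        tau += sigma * path
        slant_range += path
    return None


if __name__ == "__main__":
    profile = [(100.0, 0.01), (10000.0, 0.001)]  # hypothetical extinction profile
    for pitch in (15.0, 45.0, 90.0):
        print(pitch, slant_visibility(profile, pitch))
```

For a single uniform layer this reduces to the familiar horizontal relation V = ln(1/0.02)/σ, so any difference between slant and horizontal visibility in this sketch comes entirely from the vertical extinction profile.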

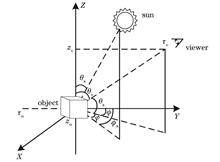

**Objective** The risk of spacecraft damage caused by space debris is increasing. The ground-based laser space-debris removal (GBLSDR) is an effective method for removing centimeter-scale space debris in the low-earth orbit region. However, the problem of high-power laser beam propagation through the atmosphere will be encountered by the GBLSDR. For the application of GBLSDR, the beam power is well above the critical power of the self-focusing effect in the atmosphere. Until now, several studies have been carried out to analyze the influence of the self-focusing effect in the atmosphere on the beam quality at the debris target. It is shown that the intensity at the debris target decreases because of the self-focusing effect in the atmosphere. It is found that uniform irradiation at the debris target may be achieved because of the phase modulation caused by the self-focusing effect in the inhomogeneous atmosphere. In addition, the influence of the beam spatial coherence and the beam order on the self-focusing effect in the inhomogeneous atmosphere is also studied. However, these studies are restricted to the steady-state self-focusing effect in the inhomogeneous atmosphere, and they fail to consider the quasi-steady-state self-focusing effect. It is known that a pulsed beam is more suitable for the application of GBLSDR than a continuous wave (CW) laser beam. When the response time of media to the field is much shorter than the pulse width, the self-focusing effect can be called the quasi-steady-state self-focusing effect. Therefore, it is important to study the influence of the quasi-steady-state self-focusing effect in the inhomogeneous atmosphere on the beam quality at the target surface for the application of GBLSDR.

**Methods** In general, under the standard paraxial approximation, the propagation of a high-power laser beam from the ground through the atmosphere to space orbits can be described by the nonlinear Schrödinger equation. 
In addition, the B integral is an important characteristic parameter to quantitatively describe the beam quality degradation due to the self-focusing effect. Based on the B integral of a high-power laser beam propagating vertically from the ground through the atmosphere to the debris target, the beam propagation model can be simplified as two stages, i.e., nonlinear propagation in the homogeneous atmosphere and linear propagation in a vacuum. According to the simplified beam propagation model, the influence of the quasi-steady-state self-focusing effect of a partially coherent light pulse (PCLP) propagating in the inhomogeneous atmosphere on the beam quality at the target surface is studied analytically.

**Results and Discussions** In this study, the analytical expressions [Eq. (9), Eq. (10), and Eq. (12)] for the beam width, curvature radius, and actual focal length of a PCLP propagating from the ground through the atmosphere to the space orbit are derived, respectively. It is shown that the focal shift will take place because of the quasi-steady-state self-focusing effect, which results in an increase in the spot size on the debris target (Fig. 1). In order to suppress the quasi-steady-state self-focusing effect, the quasi-steady-state and steady-state modification methods are proposed. Furthermore, the analytical expression [Eq. (13)] for the modified focal length of the quasi-steady-state and steady-state modification methods is derived, and the applicable condition of the modified focal length is given [Eq. (15)]. It is shown that the spot size on the space-debris target decreases under the quasi-steady-state and steady-state modification methods, and a smaller spot size on the space-debris target can be achieved by the quasi-steady-state modification method (Fig. 
5).

**Conclusions** In order to reduce the spot size and increase the laser intensity on the space-debris target (i.e., improve the beam quality on the target), the influence of the quasi-steady-state self-focusing effect of a PCLP propagating in the inhomogeneous atmosphere on the beam quality at the target surface is studied analytically in this study. The analytical expressions for the beam width, curvature radius, and actual focal length of a PCLP propagating from the ground through the atmosphere to the space orbit are derived, respectively. The focal shift takes place because of the quasi-steady-state self-focusing effect, which results in an increase in the spot size on the debris target. The quasi-steady-state and steady-state modification methods are proposed to suppress the quasi-steady-state self-focusing effect. It is found that a smaller spot size on the space-debris target can be achieved by the quasi-steady-state modification method. However, in practice, the steady-state modification method is easier to perform than the quasi-steady-state modification method.
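
As a rough illustration of the B integral mentioned in the methods, the Python sketch below evaluates B = (2π/λ)·∫ n2(z)·I dz for a vertical path. It assumes that the nonlinear index decays exponentially with altitude, n2(z) = n2_ground·exp(−z/h), and that the beam intensity stays constant along the path; both are simplifications, and all numerical values in the usage example are illustrative placeholders rather than parameters from the study.

```python
import math


def b_integral(wavelength_m, intensity_w_m2, n2_ground, scale_height_m,
               path_m, steps=10000):
    """Trapezoid-rule B integral for n2(z) = n2_ground * exp(-z / h)."""
    k = 2.0 * math.pi / wavelength_m
    dz = path_m / steps
    total = 0.0
    for i in range(steps + 1):
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight * n2_ground * math.exp(-i * dz / scale_height_m)
    return k * intensity_w_m2 * total * dz


def b_integral_closed_form(wavelength_m, intensity_w_m2, n2_ground,
                           scale_height_m, path_m):
    """Analytic result for the same exponential profile, used as a check."""
    k = 2.0 * math.pi / wavelength_m
    decay = 1.0 - math.exp(-path_m / scale_height_m)
    return k * intensity_w_m2 * n2_ground * scale_height_m * decay


if __name__ == "__main__":
    # Placeholder values (assumptions): 1064 nm beam, 1e11 W/m^2,
    # n2 of order 1e-23 m^2/W at ground level, 6 km scale height.
    args = (1.064e-6, 1.0e11, 4.0e-23, 6.0e3, 1.0e5)
    print(b_integral(*args), b_integral_closed_form(*args))
```

With an exponential profile almost all of the nonlinear phase accumulates within the first few scale heights, which is consistent with treating the remainder of the path as linear propagation in a vacuum.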

**Objective** The mesosphere-lower thermosphere (MLT) region is an important space region in the earth's atmosphere. As a significant parameter of atmospheric thermodynamics in the MLT region, the temperature is of great academic significance and application value. Since it is not affected by weather and geographical conditions, satellite-borne temperature detection can perform all-weather and long-term observation on a global scale. Thus, it has become an important detection method to obtain the three-dimensional distribution and spatio-temporal evolution of the mid-upper atmospheric temperature. Previous satellite payloads, such as the wind imaging interferometer (WINDII) and the high-resolution Doppler interferometer (HRDI) on the UARS satellite, and the sounding of the atmosphere using broadband emission radiometry (SABER) on the TIMED satellite, have made contributions to the detection of the mid-upper atmospheric temperature field. However, detection of the MLT region still suffers from problems including incomplete space coverage and low detection accuracy. In October 2019, the Michelson interferometer for global high-resolution thermospheric imaging (MIGHTI) on NASA's ionospheric connection (ICON) explorer measured the radiation intensity of the O2-A band through five discrete wavelength channels and obtained three years of continuous observation data. Based on the onion peeling algorithm and the theory of the O2-A band airglow spectrum, this paper retrieves the atmospheric temperature profile in the 92-140 km area via the O2-A band airglow radiation intensity measured by MIGHTI. 
In addition, systematic comparisons with the observation results of the SABER satellite, the simulation data of the NRLMSIS-00 atmospheric model, and the MIGHTI temperature product obtained by the ICON team using an optimization algorithm are conducted to verify the rationality of MIGHTI temperature retrieval.

**Methods** The relative radiation intensity of each spectral line in the O2-A band airglow follows the Boltzmann distribution and is therefore affected by temperature. MIGHTI samples the O2-A band signal through five channels. The signal strength in the B and D channels increases with rising temperature, whereas the signal strength in channel C decreases. The ratio of signal channels with different temperature responses is independent of the emission rate and changes monotonically with temperature. Therefore, the atmospheric temperature can be accurately retrieved by measuring the ratio of channel signal strengths. The relative radiance of the O2-A band along the line of sight, obtained from the limb-viewing observation of MIGHTI, is processed by the onion peeling algorithm to obtain the relative intensity of the target layer. Then, from the relative intensity of the target layer in channels B, C, and D, the atmospheric temperature profile is retrieved by combining it with the functional relationship between temperature and the channel strength ratio calculated from the MIGHTI instrument parameters.

**Results and Discussions** To evaluate the rationality and reliability of the MIGHTI temperature retrieval results obtained by the onion peeling algorithm, this paper compares them with the measured data of SABER and the simulation data of the atmospheric model NRLMSIS-00. 
The results show that MIGHTI temperature retrieval is in good agreement with SABER at 92-100 km, and the temperature distribution of MIGHTI is basically consistent with that of the empirical model in the altitude range below 130 km, which shows the overall retrieval reliability of MIGHTI on a global scale. According to the characteristics of annual mid-upper atmospheric temperature changes, the ratio of the temperature detected by MIGHTI and SABER to the model temperature is calculated for one day in each of the four seasons. In the altitude range of 92-100 km, the temperature ratio profiles of MIGHTI and SABER are similar and very close to 1, which indicates that the MIGHTI temperature retrieval is reasonable in this altitude range. The retrieval is also compared with the temperature profile obtained by the optimization algorithm adopted by the ICON team to further evaluate the rationality of the onion peeling algorithm for retrieving MIGHTI temperature. Within the height range that can be retrieved by the optimization algorithm, the temperature values retrieved by the two algorithms agree within ±5%, which further verifies the rationality of the temperature retrieval by the onion peeling algorithm.

**Conclusions** The O2-A band airglow measured by MIGHTI is processed by the onion peeling algorithm, and the atmospheric temperature distribution in this region is calculated. By comparing with the observation results of the SABER satellite, the NRLMSIS-00 atmospheric model data, and the MIGHTI temperature products obtained by the ICON team using the optimization method, the paper verifies the reliability and rationality of MIGHTI temperature retrieval. By measuring the shape of the O2-A band airglow radiation spectrum, MIGHTI can detect the atmospheric temperature profile between 92 and 140 km, which covers the MLT area effectively. The minimum temperature error is 1 K at 90 km, and the maximum temperature retrieval error is 13 K at 140 km.
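
The onion peeling step can be illustrated with a generic Python sketch for limb-viewing geometry. It assumes spherically symmetric shells with uniform emission, an optically thin atmosphere, and lines of sight tangent at the lower boundary of each shell; the function names and the three-shell example are hypothetical, and the code is not the MIGHTI processing chain.

```python
import math

R_EARTH_KM = 6371.0


def path_lengths(boundaries_km):
    """Upper-triangular matrix L[i][j]: path length (km) of line of sight i
    inside shell j. boundaries_km lists the n+1 shell boundaries in
    ascending altitude; line of sight i is tangent at boundaries_km[i]."""
    radii = [R_EARTH_KM + h for h in boundaries_km]
    n = len(radii) - 1
    lengths = [[0.0] * n for _ in range(n)]
    for i in range(n):
        tangent = radii[i]
        for j in range(i, n):
            outer = math.sqrt(radii[j + 1] ** 2 - tangent ** 2)
            inner = math.sqrt(radii[j] ** 2 - tangent ** 2)
            lengths[i][j] = 2.0 * (outer - inner)  # both sides of the tangent point
    return lengths


def onion_peel(radiances, boundaries_km):
    """Recover per-shell emission from limb radiances, starting at the top
    shell and peeling inward by back-substitution."""
    lengths = path_lengths(boundaries_km)
    n = len(radiances)
    emission = [0.0] * n
    for i in range(n - 1, -1, -1):
        above = sum(lengths[i][j] * emission[j] for j in range(i + 1, n))
        emission[i] = (radiances[i] - above) / lengths[i][i]
    return emission


if __name__ == "__main__":
    bounds = [92.0, 96.0, 100.0, 104.0]
    truth = [3.0, 2.0, 1.0]
    L = path_lengths(bounds)
    measured = [sum(L[i][j] * truth[j] for j in range(3)) for i in range(3)]
    print(onion_peel(measured, bounds))
```

Applying the same inversion separately to each channel yields per-layer channel intensities, and their ratio can then be mapped to temperature through the instrument-specific relationship described in the methods.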

Objective: A rough target is an object whose surface is rough relative to the wavelength of the light beam. When light is incident on a rough target, the beam is scattered, and the receiving end records an intensity pattern of alternating bright and dark regions, which is called a speckle pattern. The echo scattering characteristics of the light field are key to the future integration of underwater laser communication and detection. In ocean detection technology, underwater scattering technology, and underwater wireless optical communication technology, the transmission characteristics of beams through oceanic turbulence and the echo characteristics of beams through the rough surface play a crucial role in turbulent conditions. The physical characteristics of the rough target surface (surface roughness, coherence length, deformation degree, motion velocity, and rotation velocity) strongly influence the echo characteristics. Current studies mainly adopt the fractal method, wavelet transform, deep learning, and other methods to process the laser speckle pattern collected at the receiving end, so that the surface roughness, deformation degree, translation velocity, and rotation velocity of the rough target can be identified. Current underwater detection technology mainly relies on lasers, but the laser loss during detection is serious, which limits the rough target information carried by the received speckle. Since the vortex beam features a hollow intensity distribution, a helical phase, and orthogonality of orbital angular momentum (OAM), it can carry more information than the Gaussian beam. Laguerre-Gaussian (LG) beams are typical vortex beams with significant advantages in light scattering and target recognition. At present, the scattering characteristics of light beams through rough targets are studied from two aspects. 
The first is the speckle characteristics of light beams through the rough surface in free space, and the second is the speckle characteristics of light beams through the rough surface in atmospheric turbulence. The propagation theory of vortex beams through oceanic turbulence is very mature, but the scattering characteristics of vortex beams through a Gaussian random rough surface in weak oceanic turbulence are rarely studied. Methods: The spatial coherence property is a part of the echo scattering property and reflects the coherence of the echo field between two points in space. The spatial complex degree of coherence of the beam is utilized to represent the spatial coherence property of the echo field. The spatial distribution of the speckle is related to the surface coherence length of the rough target. We build a double-path transmission model of an LG beam through a Gaussian random rough surface in weak oceanic turbulence by referring to the scattering characteristics of vortex beams through the rough surface in atmospheric turbulence. Based on the generalized Huygens-Fresnel diffraction principle, the intensity of the echo speckle field of LG beams reflected by a rough surface with Gaussian distribution in oceanic turbulence is derived. The influences of the light source parameters of the LG beam, the oceanic turbulence intensity, and the surface roughness on the complex degree of coherence of the speckle field are investigated. Results and Discussions: The effects of light source parameters, oceanic turbulence, and rough target surface parameters on the complex degree of coherence of the echo speckle field are analyzed numerically. Figs. 4-10 show that the complex degree of coherence decreases with the increasing topological charge, waist radius, and wavelength of LG beams, decreases with the increase in oceanic turbulence intensity, and rises with the increasing coherence length of the rough surface. 
Additionally, when the coherence length of the rough surface is larger than that of a spherical wave propagating in oceanic turbulence, the complex degree of coherence does not change significantly. This shows that the influence of the rough surface on the complex degree of coherence is much smaller than that of oceanic turbulence. Conclusions: This study is based on the generalized Huygens-Fresnel diffraction principle and on the relative advantage of vortex beams in suppressing turbulence effects, which results from their helical phase. The analytical expression of the scattering intensity of LG beams through the rough surface in oceanic turbulence is derived, and the theoretical expression of the complex degree of coherence of the scattered field at the receiving end is obtained. The results indicate that the complex degree of coherence decreases with the increasing topological charge, waist radius, and wavelength of the LG beam, decreases with the rising oceanic turbulence intensity, and rises with the increasing coherence length of the rough surface. However, when the coherence length of the rough surface is larger than that of the spherical wave propagating in oceanic turbulence, the complex degree of coherence does not change significantly. This shows that the influence of the rough surface on the complex degree of coherence is much smaller than that of oceanic turbulence. The analytical expressions derived in this paper for the light field of LG beams scattered by the rough surface and for the complex degree of coherence of the echo field provide a theoretical basis for underwater target detection.
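
For orientation, the spatial complex degree of coherence discussed in this abstract is conventionally defined as the normalized two-point correlation of the field. The expression below is the textbook form in generic notation, not the paper's derived result: the field symbol $U$, the receiver-plane positions $\boldsymbol{\rho}_1$ and $\boldsymbol{\rho}_2$, and the ensemble average $\langle\cdot\rangle$ over the turbulence and the rough surface are assumed symbols.

```latex
\mu(\boldsymbol{\rho}_1, \boldsymbol{\rho}_2)
  = \frac{\left\langle U(\boldsymbol{\rho}_1)\, U^{*}(\boldsymbol{\rho}_2) \right\rangle}
         {\sqrt{\left\langle \lvert U(\boldsymbol{\rho}_1) \rvert^{2} \right\rangle
                \left\langle \lvert U(\boldsymbol{\rho}_2) \rvert^{2} \right\rangle}},
\qquad 0 \le \lvert \mu \rvert \le 1 .
```

With this normalization, $\lvert\mu\rvert = 1$ corresponds to a fully coherent pair of points and $\lvert\mu\rvert = 0$ to a fully incoherent pair, which is the sense in which the abstract reports the coherence as decreasing or rising with each parameter.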

Objective: Vehicle exhaust contains gases such as NH3 and CO2 and is becoming an essential source of air pollution and the greenhouse effect. Intracavity absorption gas sensing technology based on a fiber ring laser has many advantages that make it well suited to real-time detection of toxic and harmful gases in environmental protection. However, when a gas sensing system based on a thulium-doped fiber laser is applied to quantitative analysis of mixed gas, the gas detection accuracy is often affected by cross interference caused by overlapping spectral absorption lines of the component gases and by a nonlinear shift caused by changes in temperature and pressure at the experimental site. As a small-sample machine learning method, the support vector machine (SVM) based on statistical theory has high accuracy and good generalization ability. It can be combined with infrared spectrum analysis to build a mixed gas volume fraction regression prediction model and correct nonlinear interference, thus greatly improving the accuracy and reliability of quantitative gas analysis. Methods: In this paper, an active intracavity gas sensing system based on a thulium-doped fiber laser is built to collect the absorption spectrum data of NH3 and CO2 gases. The system is mainly divided into an adjustable light source (part A), a sensing part (part B), a data acquisition and processing part (part C), and a gas distribution part (part D). Before the gas spectrum is collected, sufficient nitrogen is introduced into the gas chamber to eliminate the interference of water vapor and CO2 in the gas distribution instrument. The experiment is carried out at normal pressure (0.1 MPa), and the sampling rate of the acquisition card is 20 kHz; 20 groups of data are collected, with 12 samples in each group. Before the model is built, the spectral data should be preprocessed to reduce the impact of background noise and improve the signal-to-noise ratio. 
However, excessive preprocessing should be avoided so that important spectral information is not lost. We preprocess the spectral data by denoising, baseline correction, and smoothing. To improve the modeling speed, principal component analysis (PCA) is employed to project the original gas absorption spectrum data, through a linear transformation, into a lower-dimensional space and obtain the principal components corresponding to the maximum variance. These principal components replace the feature values of the original data, which reduces the data dimension and prevents the correlation between variables from affecting the extraction of these components and the prediction accuracy of the regression model. The standard particle swarm optimization (PSO) algorithm converges fast and has a short optimization time, but it suffers from premature convergence, low accuracy of the optimal solution search, and low efficiency in later iterations. Therefore, we propose an improved algorithm, adaptive mutation particle swarm optimization (AMPSO). By introducing an adaptive mutation operator, the updated particle positions are randomly mutated so that particles can enter other regions of the solution space to continue searching, which improves the ability of the swarm to jump out of local optima and avoids premature convergence. The optimal parameter combinations obtained by the AMPSO algorithm for the NH3-SVM model and the CO2-SVM model are input into the support vector machine to obtain the corresponding volume fraction regression models. The prediction results for the training set samples and test set samples of the NH3-SVM model and the CO2-SVM model are shown in Fig. 8. 
The determination coefficient R2 is adopted to evaluate the fit between the predicted volume fraction and the set volume fraction. Results and Discussions: Although the optimization time of the standard PSO algorithm is the shortest, premature convergence makes its mean square error large and the regression prediction of the model poor. The mean square error of the grid search method is close to that of the AMPSO algorithm, and both errors are small. However, since the grid search method is a non-heuristic algorithm, each optimization needs to traverse all points in the grid, resulting in a long optimization time. Compared with the other two algorithms, the AMPSO algorithm obtains the best mean square error within a moderate optimization time and is therefore more efficient. When regression predictions on the volume fraction of the training set samples are conducted, the mean square errors between the set and predicted volume fractions of the NH3-SVM model and the CO2-SVM model are 0.000087 and 0.000128 respectively, and the determination coefficients R2 are 0.9997 and 0.9999 respectively. When volume fraction regression prediction for the test set samples is carried out, the mean square errors between the set and predicted volume fractions of the NH3-SVM model and the CO2-SVM model are 0.000088 and 0.000170 respectively, and R2 is 0.9998. Conclusions: An active intracavity gas sensing system based on a thulium-doped fiber laser is built to collect the absorption spectrum data of NH3 and CO2 gases. The volume fractions of NH3 and CO2 predicted by the regression model are in good agreement with the actual volume fractions, with small errors. The built AMPSO gas volume fraction regression model has high prediction accuracy and can be applied for mixed gas volume fraction regression prediction.
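
The idea of adding an adaptive mutation operator to PSO can be sketched in a few lines of Python. This is an illustrative sketch only, not the AMPSO variant used in the paper: the mutation rule (a probability that grows as the spread of personal-best fitness shrinks), the hyperparameter values, and the objective `toy_cv_error` (a hypothetical stand-in for the cross-validation error of an SVM over two log-scaled parameters) are all assumptions.

```python
import random


def ampso(objective, bounds, n_particles=20, n_iter=100,
          w=0.7, c1=1.5, c2=1.5, p_max=0.1, seed=0):
    """Minimize `objective` over box `bounds` with PSO plus random mutation."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=pbest_val.__getitem__)
    gbest, gbest_val = pbest[g][:], pbest_val[g]

    for _ in range(n_iter):
        # Assumed adaptation rule: a small fitness spread means the swarm has
        # clustered, so the mutation probability rises towards p_max.
        mean = sum(pbest_val) / n_particles
        spread = sum((v - mean) ** 2 for v in pbest_val) / n_particles
        p_mut = p_max / (1.0 + spread)

        for i in range(n_particles):
            for d, (lo, hi) in enumerate(bounds):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
                # Mutation: occasionally throw the updated coordinate elsewhere.
                if rng.random() < p_mut:
                    pos[i][d] = rng.uniform(lo, hi)
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


def toy_cv_error(params):
    """Hypothetical error surface with its minimum at (1, -2)."""
    log_c, log_gamma = params
    return (log_c - 1.0) ** 2 + (log_gamma + 2.0) ** 2


best_params, best_error = ampso(toy_cv_error, [(-3.0, 3.0), (-5.0, 1.0)])
```

In a real workflow the objective would train and cross-validate an SVM regressor for each candidate parameter pair, which is far more expensive than this toy surface; that cost is why the abstract compares optimization time as well as mean square error.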

Objective: In the satellite coherent laser communication system, the signal modulation format is mainly phase-shift keying (PSK), and the receiver is not compatible with the coherent reception of on-off keying (OOK). To address the problem that the satellite coherent optical communication receiver is incompatible with multiple modulation formats, a coherent communication receiver compatible with OOK and binary PSK (BPSK) is built experimentally. At a communication rate of 1 Gbit/s, when the modulation format is OOK and the signal optical power is -54.6 dBm, the bit error rate (BER) is 10⁻³, which is 3.3 dB from the shot noise limit; when the modulation format is BPSK and the signal optical power is -57.95 dBm, the BER is 10⁻³, which is 4.2 dB from the shot noise limit. The multi-system compatible coherent receiver shares a common structure with much of the existing coherent receiver hardware and has high receiving sensitivity. It verifies the feasibility of the multi-system compatible technology of satellite coherent laser communication and is of great significance. Methods: As the current mainstream satellite communication modulation formats, BPSK and OOK will coexist for a long time. Therefore, this paper experimentally builds a coherent receiver compatible with OOK and BPSK. Firstly, the optimal local oscillator optical power of the balanced detector used in this experiment is measured by the experimental setup shown in Fig. 4. When the optical modulation format of the signal is OOK, the in-phase (I) and quadrature (Q) signals are first combined into a complex signal, then the modulus of the complex signal is calculated, and finally a threshold decision is applied to recover the data. When the optical modulation format of the signal is BPSK, the real-time carrier phase difference is calculated by the complex digitization and IQ arctangent method, and the baseband signal can be obtained after the carrier recovery of the BPSK signal. 
The demodulation of the overall signal relies on offline processing, and Fig. 8 shows the specific flow of offline processing. Results and Discussions: Based on the experiment in Fig. 4, we set 12.8 mW as the optimal optical power of the local oscillator in this experiment. When the optical frequency difference between the local oscillator and the signal is small, the high-speed oscilloscope is used to demodulate the OOK signal. The recovered baseband signal and eye diagram are shown in Fig. 7. When the signal optical power is close to the shot noise limit, the BER is calculated through offline processing. When the signal optical modulation format is BPSK, the I and Q signals are directly collected for offline processing, after which the baseband signal is recovered and the BER is calculated. In this experiment, the communication rates of OOK and BPSK are both 1 Gbit/s. With the multi-system compatible coherent detection technology applied, the receiving sensitivity of the OOK signal is 3.3 dB away from the shot noise limit. The main reasons are as follows. The responsivity of the detector used is 0.85 A/W, whereas the ideal responsivity is 1.25 A/W when the quantum efficiency is 1 in the 1550 nm band, which causes a loss of 1.67 dB. The output of the optical 90° bridge enters the detector fiber through a connection flange, which causes a loss of 0.3 dB. The remaining loss of 1.33 dB may be caused by the following factors: energy lost due to the transmittance of the detector window, imperfect heterodyne efficiency, and ADC quantization loss. With the help of the multi-system compatible coherent detection technology, the receiving sensitivity of the BPSK signal is 4.2 dB away from the shot noise limit, which is 0.9 dB higher than the distance between the receiving sensitivity of the OOK signal and the shot noise limit. 
This additional loss may be caused by phase-locking errors that arise from inaccurate phase difference calculation at the low signal-to-noise ratio associated with extremely low signal optical power. Conclusions: In this paper, a multi-system compatible coherent detection device for satellite laser communication is built experimentally, and the corresponding demodulation algorithm and offline processing method are given. When the communication rate is 1 Gbit/s and the BER is 10⁻³, the receiving sensitivity of the multi-system compatible coherent detection device is only 3.3 dB away from the shot noise limit for the OOK signal and only 4.2 dB away from the shot noise limit for the BPSK signal, which realizes high-sensitivity and multi-system coherent reception. It is worth mentioning that an advantage of coherent OOK is that in the PSK coherent communication system, when the carrier recovery algorithm or the phase-locked loop cannot achieve carrier synchronization for some reason, the coherent reception of OOK can be used as an important alternative method, with only a slight loss of sensitivity. In addition, multi-system compatibility is not limited to OOK and BPSK. For quadrature PSK (QPSK) modulated signals, we only need to change the square operation of eliminating the communication term to the fourth power operation. After carrier synchronization, both I and Q signals become baseband data. Multi-system compatible coherent reception can greatly improve the flexibility and interactivity of satellite laser communication networks in the future, so it is of great significance.
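
The loss budget quoted for the OOK case can be checked with a short Python calculation. The sketch assumes the standard photodiode relation R = ηqλ/(hc) for the ideal responsivity and a 10·log10 power ratio for the responsivity penalty; the function and variable names are illustrative, while the 0.85 A/W, 0.3 dB, and 3.3 dB figures are taken from the abstract.

```python
import math

PLANCK = 6.62607015e-34            # Planck constant, J*s
LIGHT_SPEED = 2.99792458e8         # speed of light, m/s
ELECTRON_CHARGE = 1.602176634e-19  # elementary charge, C


def ideal_responsivity(wavelength_m, quantum_efficiency=1.0):
    """Photodiode responsivity in A/W for a given quantum efficiency."""
    return quantum_efficiency * ELECTRON_CHARGE * wavelength_m / (PLANCK * LIGHT_SPEED)


def to_db(power_ratio):
    """Express a power ratio in decibels."""
    return 10.0 * math.log10(power_ratio)


r_ideal = ideal_responsivity(1550e-9)       # close to the 1.25 A/W quoted above
responsivity_loss_db = to_db(r_ideal / 0.85)  # measured responsivity: 0.85 A/W
flange_loss_db = 0.3                        # connection flange loss from the text
total_gap_db = 3.3                          # OOK distance from the shot noise limit
residual_db = total_gap_db - responsivity_loss_db - flange_loss_db

print(f"ideal responsivity : {r_ideal:.3f} A/W")
print(f"responsivity loss  : {responsivity_loss_db:.2f} dB")
print(f"unexplained residual: {residual_db:.2f} dB")
```

The residual is the part of the gap that the abstract attributes to window transmittance, imperfect heterodyne efficiency, and ADC quantization.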

Objective: Optical wireless coherent communication employs an optical hybrid to mix the signal light with the local oscillator light and balanced detectors to complete photoelectric conversion. The optical fiber hybrid has been widely applied in optical wireless coherent communication systems due to its advantages such as high integration and compact structure, so it is necessary to efficiently couple spatial light into the optical fiber. Adaptive optical technology can improve the coupling efficiency from spatial light into optical fiber by correcting the distorted wavefront, and it is applied to optical wireless coherent communication systems. The existence of non-common optical path aberration between the wavefront sensing branch and the coupling branch leaves a distorted wavefront in the communication branch after the adaptive optical system closes the loop. The stochastic parallel gradient descent algorithm used to correct the non-common optical path aberration tends to fall into a local optimum, and the phase difference algorithm employed to correct the non-common optical path aberration is only applicable to imaging systems. We propose a reverse transmission calibration algorithm to measure the non-common optical path aberration for the initial calibration of adaptive optical systems in optical wireless coherent communication. Methods: The adaptive optical system in wireless optical coherent communication is shown in Fig. 1. After the laser beam is transmitted through the atmospheric turbulence, the beam is fully reflected by the deformable mirror. The reflected beam is divided into two collimated beams with a power ratio of 1:1 by the beam-splitter. The transmitted beam falls on the wavefront sensor to monitor the current distorted wavefront. The reflected beam is converged by a coupling lens and directly coupled into a single-mode fiber for optical hybrid, coherent detection, and communication. 
Reverse transmission means sending the same beam from the receiver to the transmitter. A laser beam identical to the transmission source is connected to the coupling optical fiber, as shown in Fig. 2. At the same time, the deformable mirror command in this state is cleared so that the deformable mirror is in a completely flat reflection state. The beam output by the coupling fiber is reflected by the beam-splitter and then reflected by the deformable mirror directly into the wavefront sensor to measure the wavefront information. At this time, the measured wavefront information includes both the wavefront phase that can maximize the coupling efficiency and the non-common optical path aberration. The coupling efficiency can be improved when the measured wavefront information is converted to the closed-loop control of adaptive optics. Results and Discussions: The local oscillator light in the optical wireless coherent communication system is connected to the coupled single-mode optical fiber by the reverse transmission calibration algorithm. The peak-to-valley value of the non-common optical path aberration (Fig. 7) measured by the wavefront sensor is 3.71 μm, with a root mean square value of 1.34 μm. This error is enough to exert a significant impact on coupling efficiency. When the wavefront information is converted into the adaptive optical closed-loop control, the coupling efficiency increases from the initial 9.04% to 45.21% in the closed loop (Fig. 8). The self-noise inside the wavefront sensor causes some synaptic data in the wavefront slope measurement and wavefront reconstruction, but does not significantly affect the fluctuation of coupling efficiency (Fig. 8). In turbulent environments, the coupling efficiency increases from 19.72% in the uncorrected state to 36.93% in the closed-loop state (Fig. 13), and that in complex environments increases from 3.91% in the uncorrected state to 9.13% in the closed-loop state (Fig. 16). 
This shows that with the increase of communication distance, the influence of atmospheric turbulence on the adaptive optical correction effect is more significant than that of the non-common optical path aberration. Conclusions: Based on the reversibility principle of optical paths, we propose a reverse transmission calibration algorithm to measure and correct non-common optical path aberration for adaptive optical fiber coupling systems in optical wireless coherent communication. This algorithm converts the non-common optical path aberration into closed-loop control and improves coupling efficiency while correcting the distorted wavefront phase. Compared with conventional stochastic parallel gradient descent algorithms, this scheme will not fall into the local optimum and will not be affected by the external turbulent environments. It can also assist in the position alignment of optical paths, and it is simple, feasible, and easy to realize in engineering. The results provide a reference of practical value for the optical fiber coupling technology of the optical wireless coherent communication system.

Objective: Clear microscopic images of algae are the basis of accurate identification. However, the microscopic images of algae located outside the depth of field are blurred due to the limited depth of field of the high-power microscope. On the one hand, some algal cells are large or filamentous in morphology. For example, the length of Anabaena sp. can reach hundreds of microns, so the depth extent of the algal cells can easily exceed the depth of field of the microscope during microscopic imaging, and the area outside the depth of field in the microscopic image is blurred due to defocus. On the other hand, the length of algal species with small cell size such as Scenedesmus sp. is only about seven microns, and the depth distance between multiple algal cells in the same field can easily exceed the depth of field of the microscope, which results in blurred algal cells in the collected microscopic algal images. Therefore, it is of great value to collect multi-focus microscopic images of the same field at different heights of the microscope stage and fuse them with a multi-focus image fusion method, so as to obtain clear images with panoramic depth. Methods: In this paper, the focus area, defocus area, and background area of the microscopic images of the algal cell are detected, and then the multi-focus microscopic images are fused by using a spatial domain image fusion method. First, Laplacian energy and guided filtering are used to measure the local focus degree of algal cell images, and the focus area of microscopic algal cell images is determined after binarization, as shown in Eq. 4. Because the area where the algal cell is located can be detected by the S channel of the HSV color space of the microscopic algal cell image, the defocus area of the microscopic image can be detected by combining the S channel with the focus area. The remaining parts are defined as background areas. 
Then the multiple microscopic images are fused in the spatial domain (Eq. 8); in other words, the output pixel value is selected from the focus area with the larger focus degree. The defocus area does not participate in the fusion, and the average value of the background area is taken as the fused output, so as to realize the spatial domain fusion of the multi-focus microscopic algal cell images. Results and Discussions: One microscopic image of algal cells is acquired each time the precision displacement stage moves 1 μm in the direction of the depth of field. Anabaena sp., Scenedesmus sp., and Pediastrum sp. are used as experimental objects. The multi-focus microscopic images of Anabaena sp., Scenedesmus sp., and Pediastrum sp. are continuously acquired by moving the stage 7, 7, and 15 μm in the direction of the depth of field, respectively. There are different clear areas and defocus areas in each microscopic image due to the limited depth of field of the microscope. The fusion effects of the wavelet transform, Laplacian pyramid, and pulse coupled neural network (PCNN) methods are compared with the proposed method in terms of subjective vision and objective quantitative evaluation. It can be seen from Fig. 5 and Fig. 6 that the proposed method can better transfer the focus area in the source image to the fusion image in subjective vision and has a better fusion effect. In terms of objective quantitative evaluation, Table 1 shows the edge information retention, spatial frequency, and average gradient of the fused images of Anabaena sp. (0.3529, 8.9654, and 0.0055), Scenedesmus sp. (0.3778, 7.0558, and 0.0023), and Pediastrum sp. (0.2940, 1.5445, and 0.0005), respectively, which are better than those of the compared methods. 
The proposed method effectively fuses the multi-focus microscopic images of algae and provides a method for obtaining the microscopic images of algae with panoramic depth. Conclusions: To solve the problem of image blurring caused by the defocus diffusion effect in obtaining microscopic algal cell images, a spatial-domain multi-focus image fusion method is proposed in this paper. Laplacian energy and guided filtering are used to detect the focus area of microscopic images, and the obvious color characteristics of algal cell images are used to detect the defocus area by combining the S channel of the HSV color space with the focus area. Then, the output image is selected according to the focus degree of the focus area in the spatial domain image fusion process. The experimental results show that the proposed fusion method can effectively fuse multi-focus microscopic images of algal cells. The fused image has better clarity, and the edge information of the source image is more effectively transmitted to the fused image. This work proposes a new method for obtaining microscopic images of algal cells with panoramic depth and provides technical support for the development of automatic monitoring instruments for algal cells.
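
The core selection rule of spatial-domain multi-focus fusion, choosing each output pixel from the source image with the larger local focus measure, can be sketched in plain Python. This is a deliberately reduced illustration, not the proposed method: it uses only a windowed energy of the Laplacian as the focus measure and omits the guided filtering, binarization, S-channel defocus detection, and background averaging described above. Images are assumed to be grayscale lists of rows, and all function names are hypothetical.

```python
def laplacian(img):
    """4-neighbour Laplacian; border pixels are left at zero."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            out[y][x] = (img[y - 1][x] + img[y + 1][x]
                         + img[y][x - 1] + img[y][x + 1] - 4.0 * img[y][x])
    return out


def laplacian_energy(img, radius=1):
    """Sum of squared Laplacian values in a (2*radius+1)^2 window per pixel."""
    lap = laplacian(img)
    h, w = len(img), len(img[0])
    energy = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += lap[yy][xx] ** 2
            energy[y][x] = total
    return energy


def fuse(images):
    """Pick each pixel from the image with the highest local focus measure."""
    energies = [laplacian_energy(im) for im in images]
    h, w = len(images[0]), len(images[0][0])
    fused = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            best = max(range(len(images)), key=lambda k: energies[k][y][x])
            fused[y][x] = images[best][y][x]
    return fused


# Synthetic pair: each image is sharp (checkerboard) on one half, flat on the other.
SIZE = 6
sharp = [[float((x + y) % 2) for x in range(SIZE)] for y in range(SIZE)]
left_focused = [[sharp[y][x] if x < 3 else 0.5 for x in range(SIZE)] for y in range(SIZE)]
right_focused = [[0.5 if x < 3 else sharp[y][x] for x in range(SIZE)] for y in range(SIZE)]
fused = fuse([left_focused, right_focused])
```

On this synthetic pair the fused result reproduces the sharp pattern across the whole frame; real micrographs would need the smoothing and region detection steps that the sketch leaves out.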

Objective: Ship detection plays an important role in military and civilian fields such as defense security, dynamic port monitoring, and maritime traffic management. With the rapid development of space remote sensing technologies, the number of high-resolution optical remote sensing images is increasing exponentially, which lays the data foundation for research on ship detection techniques. Meanwhile, detection systems are required to be both real-time and accurate to match the growth rate of the number of remote sensing images. Traditional methods for object detection are mainly accomplished by the construction of mathematical models or the use of object saliency. However, most of these algorithms rely on the prior knowledge of experts and have certain limitations, so they cannot cope with complex and variable backgrounds or with multimodal and heterogeneous objects. Recent years have seen the rapid development of deep learning technology. The object detection method based on convolutional neural networks (CNNs) is widely used because of its strong learning ability and high detection accuracy. Currently, mainstream object detection models based on deep learning are mainly divided into two categories, i.e., two-stage networks and single-stage networks. In general, two-stage network detection has high accuracy but is difficult to deploy on embedded devices due to a large amount of computation and huge time consumption. The YOLO series of single-stage detection algorithms has received extensive attention and application due to its simple network structure and its balance of detection accuracy and detection speed. However, due to the poor computing power and limited memory resources of embedded devices, it is difficult to directly apply single-stage detection models to embedded devices to detect objects in real time. 
Hence, we expect to deploy a high-performance model to detect ships in optical remote sensing images on equipment terminals with limited resources and space, and to achieve a lightweight ship detection network for complex remote sensing scene images that promotes the practical deployment of the model. Methods: As the existing lightweight object detection algorithms based on deep learning have low detection accuracy and slow detection speed for ships in complex remote sensing scene images, a lightweight real-time ship detection algorithm, STYOLO, is proposed for embedded platforms. The algorithm uses YOLOv5s as the basic framework. First of all, considering the high memory access costs in the backbone network, the efficient network architecture ShuffleNet v2 is used as the backbone network to extract image features, which reduces memory access costs and improves network parallelism. Secondly, the Slim-neck feature fusion structure is used as the feature enhancement network to fuse the detailed information in the lower-level feature maps and enhance the feature response to small objects. In addition, the coordinate attention mechanism is applied in the multi-scale information fusion region to strengthen object attention and thus improve the ability to detect difficult samples and resist background interference. Finally, a learning strategy combining cross-domain and in-domain transfers is proposed to reduce the difference between source and target domains and improve the transfer learning effect. Results and Discussions: After 100 training iterations with ShuffleNetv2-YOLOv5s, YOLOv5s, MobileNetv3-YOLOv5s, and YOLOv5n on the same test and validation sets, all the evaluated metrics have good performance (Fig. 11), which verifies the effectiveness of the proposed algorithm. On the basis of the YOLOv5s framework, ShuffleNet v2 is used as the backbone network, and Slim-neck is used as the feature enhancement network; the two detection models are trained by cross-domain transfer learning. 
Compared to the YOLOv5s model, the lightweight model has reduced the detection accuracy, the number of floating-point operations, and the number of parameters by 2.12 percentage points, 62.02%, and 62.05% (Table 2), respectively. To improve the detection accuracy of difficult samples and the ability to counter background interference, we employ the coordinate attention mechanism at the intersection of different information scales in the feature enhancement network. Compared with the results of the detection model without the coordinate attention mechanism, the mAP of the proposed algorithm is improved by 4.94 percentage points, and the number of parameters is raised by 0.75% (Table 3). When different attention mechanisms are applied at the intersection of different information scales in the feature enhancement network, it is found that the model with the coordinate attention mechanism has the highest mAP of 90.46% at a shrinkage rate of 32, an increase of 4.94 percentage points (Table 4). A learning strategy that combines the cross-domain transfer with the in-domain transfer is proposed to reduce the discrepancy between source and target domains and improve transfer learning. The mAP of the proposed algorithm with the above learning strategy is 94.33%, which is 3.87 and 14.17 percentage points higher than that with the training methods of cross-domain transfer learning and in-domain transfer learning, respectively (Table 5). The proposed algorithm is compared with ShuffleNetv2-YOLOv5s, YOLOv5s, MobileNetv3-YOLOv5s, and YOLOv5n on desktop computers and the Jetson Nano terminal. The proposed algorithm achieves a good trade-off between detection speed and detection accuracy in the optical remote sensing ship detection task, and the overall performance is good (Table 6 and Fig. 
12). Conclusions: To address the problem that existing lightweight object detection algorithms cannot achieve real-time, accurate detection of ships in complex remote sensing scenes, we propose STYOLO, a lightweight real-time algorithm for detecting ships in optical remote sensing images on embedded platforms. Compared to current mainstream detection algorithms used in embedded systems, STYOLO effectively improves detection speed while maintaining high accuracy. On the Jetson Nano terminal, it has a detection speed of 102.8 frames/s, which is approximately 2.21 times faster than YOLOv5s, 1.36 times faster than ShuffleNetv2-YOLOv5s, 1.70 times faster than MobileNetv3-YOLOv5s, and 1.50 times faster than YOLOv5n. The mAP reaches 94.33%, which is 2.7 percentage points higher than YOLOv5s, 4.19 percentage points higher than ShuffleNetv2-YOLOv5s, 7.27 percentage points higher than MobileNetv3-YOLOv5s, and 24.61 percentage points higher than YOLOv5n. These results meet the requirements of accurate and real-time detection of ships in optical remote sensing images on embedded platforms. In ship detection tasks on remote sensing images, visible-light images are easily degraded by the natural environment, which weakens the target features and makes it difficult to improve the accuracy of the algorithm. Hence, improving the accuracy of weak object detection by fusing infrared and visible images is a key research direction.
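The comparison figures quoted above can be turned into implied baseline values with a few lines of arithmetic. The sketch below assumes that "k times faster" means a ratio of frame rates and that each percentage-point gap is a plain difference from the 94.33% score. The printed values are derived from the abstract's numbers under those assumptions and are not measurements reported by the authors. The names `STYOLO_FPS`, `SPEEDUP`, `GAIN_PP`, and `implied_baselines` are illustrative.

```python
# Figures quoted in the abstract above (Jetson Nano terminal).
STYOLO_FPS = 102.8      # detection speed of STYOLO in frames per second
STYOLO_SCORE = 94.33    # accuracy score of STYOLO in percent

# Assumption: "k times faster" is read as a ratio of frame rates.
SPEEDUP = {
    "YOLOv5s": 2.21,
    "ShuffleNetv2-YOLOv5s": 1.36,
    "MobileNetv3-YOLOv5s": 1.70,
    "YOLOv5n": 1.50,
}

# Accuracy gaps in percentage points, as quoted in the abstract.
GAIN_PP = {
    "YOLOv5s": 2.7,
    "ShuffleNetv2-YOLOv5s": 4.19,
    "MobileNetv3-YOLOv5s": 7.27,
    "YOLOv5n": 24.61,
}


def implied_baselines():
    """Return {model: (frames per second, score in percent)} implied by the quoted figures."""
    return {
        name: (
            round(STYOLO_FPS / SPEEDUP[name], 1),
            round(STYOLO_SCORE - GAIN_PP[name], 2),
        )
        for name in SPEEDUP
    }


for name, (fps, score) in implied_baselines().items():
    print(f"{name}: about {fps} frames/s, {score}%")
```

The same subtraction applied to the 3.87-point gain over cross-domain transfer learning gives 90.46%, which agrees with the mAP quoted for the coordinate attention model in Table 4.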

Objectives: A receiving array composed of single-photon avalanche diodes (SPADs) can effectively improve the sensitivity of the receiving end, which has important application value in remote detection and imaging. For moving targets in the air, it is difficult to obtain stable target echoes because of the limited single-pixel acquisition time. In particular, over long distances, the laser energy attenuates significantly during channel transmission, which reduces the number of echoes detected by the SPAD array during imaging. Because the detector does not fully accumulate the echoes, the imaging results lack target image features, and target recognition becomes more difficult. To detect such targets accurately, target recognition methods must make full use of the limited echo information. Image reconstruction can improve the image quality to a certain extent and thereby improve the system's ability to recognize the measured target. However, image reconstruction requires a long processing time, which makes it difficult to meet the real-time requirements of moving target monitoring. For timely and effective identification of moving targets in the air, the detection system should be able to process image information quickly. Methods: Under array imaging with low resolution, few features, and serious noise interference, traditional image processing methods and contour processing methods can hardly ensure timeliness and accuracy because of the large amount of data and the long processing time. When the weak imaging result of the SPAD array is used directly for target recognition, high-quality reconstruction of the target shape and texture is not required. This effectively reduces the data requirements of image reconstruction and the complexity of the algorithms and is of great significance for real-time monitoring of long-distance moving targets in the air. 
For a common low-altitude aircraft, the deformation rate is far lower than the displacement change during movement, and thus it is not necessary to recognize the target synchronously during target tracking. Therefore, the following solution is proposed: the detection process is divided into two parts, i.e., target tracking and target recognition. On the basis of target positioning and tracking in a single imaging frame, multiple imaging frames are used for target recognition to reconcile the trade-off between recognition effect and processing speed. Based on these considerations, this paper proposes a recognition method based on clustering analysis and optical flow features. Results and Discussions: The method proposed in this paper can accomplish real-time tracking and recognition of moving targets in the air without any a priori information (Figs. 1-2). Considering the complexity of the motion of airborne flying targets, it is necessary to represent three-dimensional motion information with a two-dimensional optical flow field. If the dimensions are reduced by directly removing the depth information, multiple targets can overlap. Therefore, this paper uses the projection method of aperture imaging to convert motion information to optical flow information (Figs. 3-5). To verify the effectiveness of the proposed method, this paper obtains more effective classification criteria through the statistics and analysis of the optical flow angle data of "low, slow, and small" targets and verifies the feature recognition results of optical flow angles according to the change in the optical flow modulus (Fig. 8). After the imaging frames with abnormal moduli are removed, the experimental statistical results of the overall optical flow angle vector are consistent with the theoretical analysis results (Fig. 9). 
In target classification, this method uses the essential motion characteristics of the flying target, so it is free from the interference of various types of shape camouflage and has a wide application scope. Conclusions: Under array imaging with low resolution, few features, and serious noise interference, traditional target recognition methods can hardly achieve both real-time detection and accurate target recognition because of the massive data to be processed and the long processing time. To alleviate the conflict between timeliness and accuracy, this paper proposes an optical flow feature recognition method based on the flight characteristics of different targets, which overcomes the recognition difficulty caused by poor array imaging. Due to limited time, this paper only conducts experiments and analysis on typical targets such as fixed-wing UAVs, rotary-wing UAVs, and birds. In the future, the optical flow recognition method is expected to be extended to more targets such as airships, balloons, and gliders to demonstrate its general applicability in long-range aerial target detection. As hardware processing capability improves, image feature recognition will have more advantages in real-time target detection, which should be the focus of future research.
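The conversion from three-dimensional motion to optical flow features described above can be sketched as follows. This is a minimal illustration that assumes an ideal pinhole (aperture imaging) camera with the optical axis along z. The focal length, the function names, and the median-based rule for discarding frames with abnormal moduli are all hypothetical choices made for this example, not the criteria used in the paper.

```python
import math
from statistics import median


def project(point, focal_length=1.0):
    """Pinhole projection of a 3D point (x, y, z) with z > 0 onto the image plane."""
    x, y, z = point
    return (focal_length * x / z, focal_length * y / z)


def flow_vector(p_prev, p_next, focal_length=1.0):
    """Optical flow between two frames: difference of the projected positions."""
    u0, v0 = project(p_prev, focal_length)
    u1, v1 = project(p_next, focal_length)
    return (u1 - u0, v1 - v0)


def modulus_and_angle(flow):
    """Return the optical flow modulus and the angle in degrees, in (-180, 180]."""
    du, dv = flow
    return math.hypot(du, dv), math.degrees(math.atan2(dv, du))


def drop_abnormal_frames(flows, tolerance=3.0):
    """Keep flows whose modulus is at most `tolerance` times the median modulus.

    The threshold rule is an assumption made for illustration only.
    """
    moduli = [math.hypot(du, dv) for du, dv in flows]
    limit = tolerance * median(moduli)
    return [f for f, m in zip(flows, moduli) if m <= limit]


# A target at 10 m depth moving 1 m along x between two frames.
flow = flow_vector((0.0, 0.0, 10.0), (1.0, 0.0, 10.0))
print(modulus_and_angle(flow))
```

Statistics of the angles that survive the modulus filter over several frames would then serve as the classification feature, in line with the tracking-then-recognition split described in the abstract.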

Objective: Unmanned aerial vehicles (UAVs) show extraordinary superiority on the battlefield thanks to high mobility, low cost, and the absence of crew casualties, which has changed the form of modern war and made unmanned intelligent warfare mainstream. For example, using UAVs for reconnaissance provides helpful information for commanders on the battlefield, which has excellent intelligence support value and operational command function. High-precision, real-time target localization is a necessary condition for UAV reconnaissance and target strikes. The airborne electro-optical platform is equipped with high-resolution imaging equipment to recognize the target at a long distance so that the ground target can be tracked and measured without entering the target area. However, under limited observation conditions, such as large inclination angles and small rendezvous angles, the traditional localization method can no longer meet the accuracy requirement. At present, most target localization methods that aim to improve accuracy under limited observation conditions fall into two basic types: one is based on image matching, and the other is based on geometry. Under a large inclination angle, however, the localization method based on image matching is seriously affected by the perspective transformation. Moreover, limited observation conditions make the design matrix ill-conditioned, and the condition number of the least squares problem becomes very large, which seriously affects the accuracy of the geometric localization method. Therefore, improving the target localization accuracy under limited observation conditions is of great significance. Methods: The laser range finder has high measurement accuracy. 
It is not affected by limited observation conditions such as a large inclination angle and a small rendezvous angle, which gives laser ranging tremendous application value in the field of ground target localization. To improve localization accuracy under limited observation conditions, this paper proposes a global optimization method for ground target localization based on the platform's location and laser ranging. In this method, first, the weighted error equation in the earth-centered, earth-fixed (ECEF) coordinate system is established from the continuous observation data of the static ground target. Second, the nonlinear problem is transformed into an eigenvector problem. Third, all stationary points are found by enumerating seven eigenvectors. Finally, the actual location of the ground target is determined according to the actual situation. The key step of this method is the transformation of the nonlinear problem into an eigenvector problem through data centering, singular value decomposition, and other steps. This not only finds the global optimal solution of the equation accurately without iteration or an optimization procedure but also improves the efficiency and stability of ground target localization. Results and Discussions: The ground target localization system is mainly composed of an airborne electro-optical platform, an integrated global positioning system (GPS) and inertial navigation system (INS), and a laser range finder. The platform flies in a circle over the ground target, obtaining the UAV's longitude, latitude, and height in the World Geodetic System 1984 (WGS-84) coordinate system, as well as the distance between the UAV and the static ground target at different times. 
The simulation results based on the Monte Carlo method show that the localization accuracy is affected by the location error of the airborne electro-optical platform, the distance error of laser ranging, and the amount of continuous observation data (Fig. 5, Fig. 7, and Fig. 8). Furthermore, the flight test results show that the proposed method is feasible and effective under limited observation conditions. The target localization error is less than 30 m when the platform is 5 km away from the target and the observation angle is 66.42°. Moreover, the computation time stays within 10 ms when the number of continuous observations does not exceed 300 (Table 3 and Table 4). Conclusions: For limited observation conditions such as large inclination angles and small rendezvous angles, this paper proposes a global optimization method to improve the accuracy of ground target localization. The corresponding measurement model and weighted error model are set up from the location information provided by the integrated navigation system and the distance information provided by the laser range finder. A fast closed-form solution of the nonconvex optimization problem is obtained by deriving equivalent eigenvectors. The simulation and flight experiment results show that, compared with the traditional localization method under limited observation conditions, the proposed method offers high localization accuracy, computational efficiency, and robustness, which is of great significance to the reconnaissance and attack of targets on the battlefield.
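To make the measurement model concrete, the sketch below localizes a static target from platform positions and laser ranges. It is not the eigenvector-based global solver described in the abstract. It swaps in a simpler, well-known linearized least squares baseline that subtracts the first squared-range equation from the others. Positions are assumed to be Cartesian coordinates in meters (for example ECEF), and the names `solve_linear` and `locate_target` are hypothetical.

```python
import math


def solve_linear(a, b):
    """Solve a small dense system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate geometry: the linear system is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def locate_target(platform_positions, ranges):
    """Estimate the target position from platform positions and measured ranges.

    Each range gives |x - p_i|^2 = d_i^2. Subtracting the first equation yields
    the linear relation 2 (p_i - p_0) . x = |p_i|^2 - |p_0|^2 - d_i^2 + d_0^2,
    which is solved in the least squares sense through the normal equations.
    """
    p0, d0 = platform_positions[0], ranges[0]
    norm0 = sum(c * c for c in p0)
    rows, rhs = [], []
    for p, d in zip(platform_positions[1:], ranges[1:]):
        rows.append([2.0 * (pc - p0c) for pc, p0c in zip(p, p0)])
        rhs.append(sum(c * c for c in p) - norm0 - d * d + d0 * d0)
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * v for r, v in zip(rows, rhs)) for i in range(3)]
    return solve_linear(ata, atb)


# Synthetic, noise-free example with platform positions that are not coplanar.
target = (100.0, 200.0, 0.0)
platform = [
    (0.0, 0.0, 1000.0),
    (1000.0, 0.0, 1200.0),
    (0.0, 1000.0, 900.0),
    (1000.0, 1000.0, 1100.0),
    (500.0, -500.0, 1300.0),
]
ranges = [math.dist(p, target) for p in platform]
print(locate_target(platform, ranges))
```

When all platform positions lie in one plane, as in a level circular flight, the differences p_i - p_0 span only two dimensions and this linearized system becomes singular, so the baseline raises `ValueError`. That degeneracy is specific to the linearization used here and illustrates why such geometries call for a nonlinear formulation like the one the abstract describes.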