Phase-sensitive optical time-domain reflection (Φ-OTDR) technique has played a critical role in the field of pipeline intrusion monitoring. Identifying and locating intrusion events is a key topic in this field. While neural network-based solutions have been proposed frequently in recent years, a majority of them neglect the location of the events, resulting in ongoing manual labor in practical engineering applications. Based on the investigation of pipeline intrusion event identification, an automatic event recognition and location method is proposed. The proposed method is based on the concept of target detection , and the spatio-temporal diagram of 1 s time and 4 km spatial distance is used as the input of the target detection network. As such, max-min normalization, bandpass filtering, and data augmentation are employed as preprocessing methods to realize the location and identification of intrusion events at the same time. The experiment demonstrates that the proposed method can achieve an average recall of 82.9% and a precision of 70.4% in three types of events, including surface beating, surface digging, and human jumping, which can basically meet most industrial requirements.

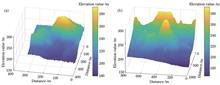

Terrain matching algorithms use terrain features to aid navigation. To improve navigation accuracy of the terrain matching algorithm, this study proposes a terrain matching algorithm based on the morphological enhanced histogram of oriented gradients (EHOG). The proposed algorithm performs morphological closed operations to preprocess the real-time elevation map (REM) obtained by the interferometric synthetic aperture radar (InSAR), thereby obtaining the EHOG features. Then, it converts the terrain matching into the matching of the HOG feature descriptors. Euclidean distance between eigenvectors is used as a measure of similarity. In the matching process, we have adopted a three-step optimization matching search strategy that combines rough matching, smaller matching, and fine matching to improve the algorithm's real-time performance. Experimental results show that, compared to the unenhanced HOG algorithm and the traditional gradient cross-correlation algorithm, the proposed algorithm has better matching accuracy and noise resistance. Simultaneously, it has shown strong robustness and practicality and is well suited for InSAR terrain matching.

In recent years, the classification of hyperspectral images (HSI) based on deep learning has attracted extensive attention in various fields. The number of HSI spectral bands is large and information redundancy is high, which increases calculation complexity. However, a lack of training samples can easily lead to overfitting of model training and reduce classification accuracy. To improve the classification accuracy and reduce the training time, this study proposes a fast dense connection network based on a three-dimensional convolutional neural network (3D-CNN) with double branch and double attention mechanism for HSI classification. In this study, the original data is dimensionless using principal component analysis (PCA) to reduce redundant information. Then, a double efficient channel attention (ECA) mechanism with a double branch dense connection structure and a fast Fourier transform (FFT) is used. It not only ensures the model's classification accuracy but also increases the model's training speed. We conducted experiments on multiple public hyperspectral datasets, and the performance evaluation indices are overall classification accuracy (OA), average classification accuracy (AA), and Kappa coefficient. The experimental results show that the proposed method can improve the classification accuracy and significantly reduce the training time and testing time.

Face pose estimation is widely used in the fields of face recognition, human-computer interaction,and facial expression analysis. One of the important indicators is the accuracy of face pose estimation. Aiming at the problem of the deflection angle in the acquired three-dimensional (3D) face model, a 3D face pose estimation algorithm based on symmetry plane is proposed. According to the zygomorphy of the face, the symmetry plane is extracted, and then the symmetry contour is calculated. The symmetry plane is aligned with the yoz coordinate plane to obtain the first posture adjustment result. The vector formed by the two ends of the symmetry contour is adjusted to be parallel to the y coordinate axis to obtain the second posture adjustment result. The pose estimation results are obtained by two pose adjustments. The accuracy of the algorithm is evaluated by the β of the face model rotating around the y-axis. 12 face point cloud models with different yaw angles are generated based on the BJUT-3D face database,and they are used as the test data set for the experiment. The experimental results demonstrate that the mean absolute error of β is less than 0.55° in the range from -30° to 30°. Compared with other face pose estimation algorithms based on feature points, the proposed algorithm has higher accuracy.

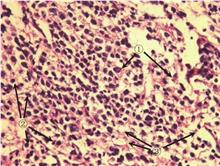

Peripheral neuroblastic tumors (pNT) are common extracranial malignant solid tumors in children, and its main prognostic evaluation is based on differentiation degree of neuroblastic tumor and mitosis-karyorrhexis index (MKI). At present, the calculation of MKI is mainly done manually by pathologists, which is a cumbersome process with a large workload. The computer image processing algorithm is used to identify pathological mitotic neuroblasts (PMN) and neuroblasts (NEU) in pathological slice images, and assist pathologists in counting, which can reduce doctors' repetitive work and improve doctors' work effectiveness. The mathematical morphology local minimum mark (H-minima) is used to modify the gradient amplitude, and the improved watershed algorithm is used to identify and count NEU. The experimental results show that, compared with the gold standard of pathologists, the average accuracy rate of the proposed algorithm for NEU recognition is 94.2%, and the average over-segmentation rate is 2.79%. From the perspective of chromaticity components, the average recognition accuracy of PMN cytoplasmic regions is 81.66%, and the average error rate of MKI value is 0.031%.

Aiming at the problems of accuracy and efficiency of military personnel image segmentation in multiple complex environments, we propose an encoder-decoder network based on improved dense atrous convolution and serial attention modules. First, we add the dense atrous convolution module in the U-shaped encoder-decoder network to improve the network's ability to segment multiscale targets and reduce parameter amounts. Second, we add the serial attention module in the U-shaped encoder-decoder network, enabling the network to focus more on the important features in the image. Finally, convolution after each downsampling in the encoder structure of the U-shaped encoder-decoder network is improved to reduce the parameter amounts. The experimental results on the multiple environments camouflage dataset show that the mean intersection of the union of the proposed network is 2.27 percent, 4.93 percent, and 10.46 percent higher than U-Net, SegNet, and FCN-8s, respectively. The parameter amounts are significantly reduced, improving the effectiveness of the network for military personnel segmentation in multiple complex environments.

To address the security risks associated with drone "abuse", aiming at the high complexity of the existing deep learning-based drone target detection algorithm, which results in lengthy model trainings, large computing resources, limited input image size, and slow detection speed, a lightweight level drone target detection (DTD-YOLOv4-tiny) algorithm is proposed. The proposed algorithm is based on YOLOv4-tiny, and we optimized the Anchor box using the K-means++ clustering algorithm, added the detection head of the 52×52 size feature map to expand the scope of the algorithm for small targets, and combined it with the ShuffleNetv2 lightweight backbone network, and the reorg_layer downsample and sub-pixel upsample methods were used to optimize the Backbone, Neck, and Head of the YOLOv4-tiny algorithm. Eventually, we obtained the DTD-YOLOv4-tiny with a model size of 1.4 MB and a floating-point calculation (GFLOPs) of 1.1, which is a lightweight detection technique. The experiments demonstrate that the DTD-YOLOv4-tiny detection model does not limit the image input size, while ensuring low computational resource occupation and high real-time detection. Simultaneously, the algorithm with reduced parameters can also maintain accuracy when facing the original large-scale image. When using 960×540 size image as input on the Drone-vs-Bird 2017 dataset, the average precision (AP) @50 of the proposed algorithm achieved 95%, and the detection speed on the RTX2060 graphics card attained 113 frame/s;when using 1920×1080 size image as input on the TIB-Net dataset, the AP@50 of the proposed algorithm achieved 85.1%, and the detection speed on the RTX2080Ti graphics card attained 119 frame/s.

One of the important tactics used by the public security bureau in a criminal investigation is to combine the related surveillance video and shoeprints on the spot to identify criminal suspects. However, the low-level automation of such a method is so labor-intensive and time-consuming,which limits its application. Therefore, this paper proposes an object detection method based on the YOLOv4 algorithm to realize the automatic detection of pedestrian shoes in surveillance video. According to the characteristics of the pedestrian shoe area, first, the K-means clustering algorithm is used to determine the scale of the anchor box and confirm its quantity; second, an appropriate detection layer was selected based on the datasets in this paper to improve the learning of shoe features; finally, a multifeature fusion method is used and the adjusted spatial pyramid pooling structure is transferred into the pruned network to improve the learning ability of the model. The experimental results demonstrate that the training weight of the YOLOv4_shoe algorithm proposed is only 39.56 MB, which is approximately one-sixth of the original model; and its mean average precision reaches 97.93%, which is 2.07% higher than that of the original YOLOv4 model.

Foreground ships can be quickly and effectively detected from sea background with the help of salient detection technology. As a result, saliency-analysis-based ship detection algorithms have received extensive research attention. However, obtaining accurate ship detection results influenced by irregular background noise, such as waves, clutter, and wakes, on the sea surface is challenging. A robust background-estimation-based salient ship detection algorithm has been proposed to solve the aforementioned problem. First, the input image is clustered into a set of superpixels, and the deep feature representation of each superpixel is extracted from a deep convolutional neural network. Then, a background noise estimation algorithm is proposed to effectively suppress the influence of background noise on ship detection and it is integrated into the solution framework of hierarchical cellular automata. Finally, the salient ship detection results can be obtained according to the difference in the feature description of various pixels in the stereo neighborhood space. Qualitative and quantitative experimental results demonstrate that the proposed algorithm could effectively enhance the salient ship detection effect under complex backgrounds.

In the current mainstream medium and long wave color fusion algorithms, except a few color mapping algorithms take the difference features of the source images as the starting point, others such as color transfer algorithms often lose more difference and detail features. However, mainstream color mapping algorithms have some problems such as color bias, narrow color gamut, and difficulty in adapting to various application scenarios. In this paper, the above problems are studied. In the color space based on a hue saturation color plane, a theoretical model of helical line mapping based on difference features is established, and a fusion algorithm is proposed based on this theoretical model. The actually collected medium and long wave dual-band images are simulated and verified. The simulation results show that the proposed algorithm is suitable for most complex scenes and can fully highlight the difference components of infrared mid-wave images. The proposed algorithm has wider color gamut distribution, better visual effect, and better objective index evaluation, and has low computational complexity and high real-time performance. Finally, the embedding of the algorithm is completed on the embedded platform. The results show that the algorithm has the premise of engineering application.

The difficulty of cross-modality person re-identification task is to extract more effective modal shared features. To solve the problems, this paper proposes a multi-loss joint cross-modality person re-identification method based on attention mechanism. Firstly, the attention model is embedded in the ResNet50 network, preserving the details. Secondly, the feature is divided into six local features to make the network focus on local deep information and enhance the representation ability of the network. Finally, the extracted local feature column vectors were normalized by batch processing, and the cross-entropy loss and improved hetero-center loss were used for joint supervised learning to accelerate the model convergence and improve the model accuracy. The proposed method achieves an average accuracy of 56.82% and 75.44% in the SYSU-MM01 and RegDB datasets, respectively. The experimental results show that the proposed method can effectively improve the accuracy of cross-modality person re-identification.

In the three-dimensional reconstruction technology, stereo matching is a key step. Aiming at the problem that local stereo-matching algorithms have poor matching effects in areas with weak texture and discontinuous depth and are easily disturbed by noise, a local stereo-matching algorithm based on multi-feature fusion is proposed. The traditional Census transform is improved to make it more robust to noise and is fused with color features and gradient features for cost calculation; the multiscale guided filtering algorithm is used for cost aggregation, and the disparity map is obtained through disparity calculation and optimization. The experimental results on the Middlebury dataset show that the proposed algorithm has strong antinoise ability, and the matching accuracy is further improved when compared with the current excellent local stereo-matching algorithms.

Segmentation of surveillance video into motion segments is the basis and premise of video synopsis. The existing video segment segmentation methods are complex in their implementation and computationally intensive, which has a detrimental effect on the real-time performance of video synopsis. To address these issues, a motion segment segmentation method of surveillance video based on the spatio-temporal flow model is proposed. The proposed technique only sparsely samples the boundary pixels of the video surveillance area to create the video spatio-temporal profile. On this basis, background modeling is used to extract the targets from the spatio-temporal profile. A spatio-temporal flow model for moving objects entering and exiting the visual surveillance area is subsequently constructed. Finally, the model is modified according to the moving target's feature matching, and the accumulative spatio-temporal flow curve of the video is obtained, from which the motion segments are then segmented. Experimental results show that the presented method not only ensures video segmentation accuracy but also dramatically increases the speed of video motion segment segmentation.

The detection using traditional features from accelerated segment test (FAST) algorithm showed the existence of clustering corner phenomenon and the threshold value depended on artificial determination. Further, the detection using image matching algorithm showed that the matching and binocular vision measurement accuracies are low. In this paper, we proposed a binocular vision measurement method using improved FAST and binary robust independent elementary features (BRIEF). First, the FAST algorithm was used to extract the feature points and simplify the detection template. Next, the adaptive threshold was used to extract the feature points, which are described using the improved BRIEF, and the descriptor was formed by comparing the gray average of the neighborhood of a pixel. Then, it was based on the Hamming distance to complete the match. Finally, we adopted the gray gradient method to obtain the subpixel coordinates of the matching points. The three-dimensional spatial coordinates of the matching points were calculated using the principle of parallax and triangulation, to complete the size measurement of the measured object. From the experimental results, the corner points detected using the improved FAST are more uniform with regard to corner detection, verifying that the proposed method effectively improves the matching accuracy compared with other algorithms. Besides, the minimum relative error of measurement of proposed method is 0.45%, which satisfies the measurement requirements.

To address the problem of image dehazing distortion caused by inaccurate estimation of atmospheric light value and transmission in existing image dehazing algorithms, an improved image dehazing algorithm based on dark channel prior and haze-line prior is proposed. First, we compute the global relative haze concentration of the image using the relationship between haze concentration and the difference in brightness and saturation in HSV space and combine the high-pixel value corresponding to the dark channel map to set the weight coefficient that can automatically select the appropriate atmospheric light value. Second, we use the rough transmittance value obtained by the dark channel prior to correct the maximum radius transmittance in each haze-line, and then introduce a tolerance parameter to optimize the transmittance of bright pixels. Next, fast guiding filtering is introduced to further optimize the transmittance maps. Finally, the final haze-free image based on the atmospheric scattering model is obtained. The experimental results show that the image dehazing algorithm proposed in this research outperforms the current algorithms in terms of subjective visual effect and objective data.

When a convolutional neural network performs real-time image semantic segmentation, processing large blocks of pixels with small color changes leads to computational spatial redundancy. Also, the accuracy of feature extraction using lightweight networks is low. We designed a real-time semantic segmentation network using the improved MobileNet v3 and lightweight OctConv high-frequency (OTCH-L) module to mitigate both problems. First, the hard-swish activation function was used to compensate for the accuracy of the lightweight network MobileNet v3. Then, we proposed an improved MobileNet v3 feature extraction network. Furthermore, we designed the OTCH-L module based on the octave convolution to solve the problem of spatial redundancy and reduce the computational size of the model while ensuring accuracy. The models were trained and verified on the Pascal VOC2012 and VOC2007 datasets, respectively. The experimental results show that the segmentation speed of the proposed model reaches 25.94 frame/s, and the mean intersection over union (MIoU) reaches 70.34%. Compared with the mainstream semantic segmentation models, such as SegNet, PSPNet, and DeepLab v3 plus, our proposed model significantly enhances the segmentation speed while maintaining segmentation accuracy.

This paper proposes a point cloud registration algorithm based on normal distribution transform (NDT) and feature point detection to address the shortcomings of traditional iterative closest point (ICP) algorithms, such as a large amount of calculation, low efficiency, and ease of being affected by the initial pose of the point cloud. The algorithm employs a “coarse and fine” registration strategy. First, the point cloud is preprocessed; thereafter, the NDT algorithm is used to coarsely register the processed point cloud for providing a more ideal initial pose for fine registration. Next, the 3D-Harris feature point detection algorithm is used to extract the point cloud feature points. Finally, the ICP algorithm is used to finely register the point cloud set after the feature point extraction to obtain an optimal solution. The simulation results show that when compared to the traditional algorithm, the algorithm used in this paper improves the efficiency and the accuracy of point cloud registration.

A three-dimensional coordinate extraction method for the circle center, based on the random Hough transform, is proposed, aiming at the problem that the central three-dimensional coordinates of the circular target cannot be obtained directly from the two-dimensional image. The random Hough transform method can quickly detect and locate the feature points of the collected circular target image's circular center. Further, according to the binocular vision algorithm, the two-dimensional coordinates of the obtained feature points are stereo matched, and finally the three-dimensional coordinate information of the center of the circular target is obtained. The proposed method is mainly used in the field of oil drilling for an intelligent single drill pipe positioning joint operation. The experimental results for drill pipe joint image positioning show that, when compared with the traditional Hough transform method, the calculation time of the random Hough transform method is greatly reduced and the efficiency of feature point detection can be significantly improved. Simultaneously, when combined with a binocular vision algorithm, it can accurately extract the three-dimensional coordinates of the center of the circular target with high precision and good stability.

Example learning is an effective single-image super-resolution reconstruction technique. The key function of this technique is determining how to establish the mapping relationship between high- and low-resolution images. Especially, when dealing with complex and diverse natural images, several studies have shown that it is difficult to reconstruct ideal high-resolution images using a single regression model. Therefore, this study uses the A+ algorithm as a starting point and proposes a super-resolution algorithm based on the theory of Boosting ensemble learning that can adapt to various types of natural images by continuously enhancing the complementarity of the regression model. First, the Boosting scheme is used to train multiple sets of complementary subregressors. Then, all sets of subregressors are merged to generate an integrated model with stronger generalization ability and better reconstruction performance. Finally, a cascaded residual regression strategy and a coarse-to-fine technique are used to gradually synthesize high-resolution images to further improve the image quality. The proposed method was compared with four state-of-the-art examples of learning-based super-resolution methods using five standard datasets. The experimental results show that the proposed method can reconstruct high-quality images with clearer edges and richer texture details.

The rise in popularity of three-dimensional video has led to an extensive usage of depth image-based rendering (DIBR) technology in entertainment, military, education, and other fields. Image quality assessment of DIBR technology has received a lot of attention in recent years because it is one of the main technologies of virtual viewpoint synthesis. The quality of the synthesized image and video is critical to the successful application of the associated technology. Thus, based on the statistical features, this paper proposes a no-reference quality assessment model for DIBR images. First, the texture distortions of DIBR images are detected by Benford's law, and then variation of discrete cosine transform (DCT) coefficients and the statistical characteristics of the natural scene are extracted. Finally, the prediction scores are obtained by training the extracted features using support vector regression (SVR). The proposed method is highly consistent with human subjective evaluation, according to the experimental results on three public image datasets: IVC, IETR, and MCL-3D.

Detecting a decenter or tilt is a key problem in aligning an off-axis telescope system. Therefore, this paper proposes a second-order sensitivity matrix method. When the ranges of decenter and tilt are from -3 mm to 3 mm and from -3 mrad to 3 mrad, respectively, the relation curves between the Zernike coefficient and misalignment are drawn. All their goodness of fit is larger than 0.99. Based on these curves, two sensitivity matrixes are solved and verified using simulation experiments. The results are as follows: The root mean squares (RMSs) of the x-axis decenter and y-axis decenter are 0.0023 mm and 0.0043 mm, respectively, and the RMSs of x-axis tilt and y-axis tilt are 0.0177 mrad and 0.0031 mrad, respectively. The simulation results show that this method is more precise than the traditional sensitivity matrix method.

Due to the lack of large scale technology of infrared focal plane arrays and the conflict between the wide field-of-view and high resolution for a single aperture infrared detection system, an infrared imaging system with wide field-of-view and high resolution based on compression sensing was designed. The system consists of the objective lens and the relay lens. Firstly, the imaging objective can image a scene with wide field-of-view and high resolution. Secondly, the relay lens images the image surface of a single image modulated by a spatial light modulator. Finally, the scene image received by detector arrays can be restored by image reconstruction algorithm. The design results show that the working wavelength of the system is 3.8‒4.8 μm, and the objective lens has large numerical aperture of F/1.999. The field-of-view is ±16°, and the pixel number can reach 1280×1024. The pixel number of the relay lens is 640×512 with a good matching with the detector array. Comparing to the current single aperture infrared detection system, the designed system has the characteristics of the wide field-of-view, high resolution, and simple and compact structure, and has wide potential application in the field of aerial remote sensing.

Space-based optical monitoring system is a critical component of situational awareness. By analyzing the photoelectric imaging process of the target under the starry sky scene, the space-based space target scene imaging simulation system is designed based on surface target imaging, and a method is proposed for increasing the sampling range of secondary reflected light according to the bidirectional reflectance distribution function (BRDF) properties of the materials used on the satellite surface. Using the reflection distribution of solar panel material and polyimide film, the light concentration areas with vertex angles of 10° and 15° were determined for Monte Carlo sampling. The target imaging module includes two modes: real-time and high-quality. When the real-time imaging mode is selected, the designed system directly contacts the OpenGL pipeline for rendering and obtains an imaging frequency of over 35 Hz. When the high-quality imaging mode is enabled, the image is captured using the improved twice-sampled ray-tracing technique. The imaging effect is accurate, as is the shadow display. The imaging and monitoring of space targets are simulated by using the designed system against a background of stars, which can serve as a certain reference for the design of a space-based optical camera.

Gait recognition is a type of noncontact remote biometric recognition technology used for identity recognition based on the walking patterns of distant pedestrians. Aiming at the problem of poor recognition effect in infrared human gait recognition using convolutional neural network (CNN), the long and short term memory network (LSTM) is used to cover the image after wearing according to the proportion of human height, so that the network can focus on extracting the change characteristics of legs and the time dimension characteristics of each infrared human gait cycle. Therefore, a new gait recognition model is developed. In the CASIA C infrared gait database provided by the Chinese Academy of Sciences, the experimental test was carried out on the data after the preocclusion processing of the wearing part, and recognition accuracy of the proposed model was higher than that of convolutional neural network model. The experimental results indicated that using LSTM for gait recognition considerably enhanced the recognition accuracy when some features were unavailable.

Photoacoustic tomography is a noninvasive medical imaging technology. It has many advantages over other imaging methods and provides a new imaging idea for early diagnosis of tumors. The analysis and denoising of photoacoustic signals can improve the signal-to-noise ratio (SNR) and imaging quality of imaging systems. Thus, an intelligent denoising algorithm for photoacoustic signals is proposed. First, the adaptive white-noise complete set empirical mode decomposition was used to decompose the photoacoustic signal. Second, the wavelet threshold denoising method was used to complete the high-frequency denoising of the specific mode photoacoustic signal. Finally, the preprocessed photoacoustic signal was reconstructed sparsely by K-singular value decomposition to realize the intelligent denoising of photoacoustic signals. The simulation and experimental results show that when compared with other denoising algorithms, the SNR and root mean square error (RMSE) of the image obtained by the proposed algorithm improved. It can effectively remove the noise and artifacts in the three-dimensional tumor phantom photoacoustic reconstruction image and retain the edge information of the image. The proposed intelligent denoising algorithm can adaptively complete denoising according to the characteristics of noisy photoacoustic signals and achieve better denoising effects, which can be used as an auxiliary method for photoacoustic imaging.

The images captured in a hazy environment are always prone to blurring. To restore the hazy images, a transmission function estimation algorithm based on the RGB color space ellipsoid model is proposed. First, the gray values of pixels in a neighborhood of a hazy image were mapped to RGB color space, and the aggregation state of pixel gray values were fitted by an ellipsoid model. Furthermore, all vectors in the ellipsoid were projected onto the atmospheric light vector to estimate the transmission function for each pixel. Finally, the transmission function is optimized and combined with atmospheric light vector estimation to restore the hazy images. Experimental results indicate that the proposed transmission function estimation algorithm, which is based on the RGB color space ellipsoid model, can effectively restore the hazy images.

The existing multiexposure fusion methods generally only determine the weight image according to the characteristics of each image. The fusion image has some problems, such as partial information loss and unclear details, especially during strong light in the image, producing nonideal fusion result. To solve these problems, a multiexposure image fusion method based on the entire sequence of image feature weights is proposed. First, the method determines the local brightness weight reflecting the importance of the brightness of each pixel in the image itself, the global brightness weight reflecting the importance of the brightness of each pixel in the whole sequence of the image, and the gradient weight reflecting the importance of the local gradient of each pixel in the whole sequence of the image; then the fused image is obtained according to the weight. Multiexposure image sequences containing various scenes were selected as the experimental data. The results show that the average multiexposure fusion structure similarity (MEF-SSIM) of the proposed method reaches 0.980, the average information entropy reaches 7.652, while the average running time is only 1.34 s. Compared with traditional methods and deep learning methods, the fusion image obtained by the proposed method has clear details, rich information, natural performance, more similar to the visual effect of the human eye, the fusion effect is better.

Aiming at the micro-video popularity prediction, we propose a micro-video popularity prediction model with a bidirectional deep encoding network. The model considers both multi-modal fusion and unimodal supervision modeling, and integrates them into a bidirectional deep encoding network. The multi-modal fusion module uses modal relevance to solve problems such as data missing and dimensional differences among original features to obtain a more comprehensive feature representation. The unimodal supervision module uses modal differences to supervise multi-modal feature fusion. Via joint training of multi-modal fusion and unimodal supervision tasks, the consistency and difference of multi-modal information are fully learned to improve the generalization ability of the algorithm. The experiments on the public NUS dataset have proved the effectiveness and superiority of our proposed algorithm.

Dual-channel contrast prior (Dual-CP) simulates contrast using the difference between the bright channel and the dark channel of an image, and it achieves good results in the blind restoration of blurred images. However, in practical applications, the values of the bright channel and the dark channel of an image are not distributed on 1 and 0 as theoretically researched. This paper proposes a blind image restoration algorithm that combines Dual-CP, L0 regularization strength, and gradient prior, wherein an effective optimization algorithm is derived using semi-quadratic splitting method to solve the nonconvex L0 minimization problem. Experiments demonstrate that the proposed method has better intuitive description recovery capabilities, and on the benchmark dataset presented by Levin et al., Köhler et al., and Lai et al., the average peak signal-to-noise ratio increased by 2.1051 dB, 1.1273 dB, and 0.4491 dB, respectively, and the average structural similarity increased by 0.1302, 0.0599, and 0.0158, respectively.

This study implements a feature extraction based on piecewise linear Morse theory to solve the problems of incomplete feature recognition in traditional surface feature extraction algorithms, complex topological relationship construction, and difficulty in topological simplification. In addition, it proposes a new feature importance measurement index for topological simplification. Firstly, critical points are identified to form critical lines, which generates Morse-Smale complex to complete the construction of surface topological features. Thereafter, a new feature importance metric is proposed using the average eigenvalue of Morse-Smale complex to backcalculate the eigenvalues of critical points, making a double evaluation of the feature importance of this complex. Finally, the simplification and the expression of the topological features of the surface are realized based on the metric. The experimental results show that the surface features are extracted and a good topology structure is constructed. The feature extraction point cloud compression rate reaches 36.29%, and the topology simplified point cloud compression rate reaches 70.73%. This considerably reduces the massive point cloud data and eliminates redundant topology, laying a good data foundation for the subsequent expression and the application of surface point clouds.

Occlusion is an essential factor that often leads to the failure of object tracking. Improving antiocclusion performance of the algorithm has been a research hotspot in tracking. First, this paper analyzes why occlusion easily leads to tracking failure. Furthermore, the importance of constructing a strong discriminant and robust object model to improve the antiocclusion performance of the tracking algorithm and an effective scheme to improve the antiocclusion performance of the target model are discussed. Then, based on the utilization information type of constructing object model, the representative methods with better antiocclusion performance are divided into three categories on the basis of effective feature, state estimation, and stable spatiotemporal informations. Further, the antiocclusion idea scheme, suitable occlusion scene, pros and cons, and improvement schemes of object tracking algorithm based on Kalman filter, particle filter, local spatial information, time context information, and spatiotemporal context information are analyzed in detail. Finally, through performance comparison with the tracking performance of different types of methods in occlusion scenarios, the antiocclusion effectiveness of the object model construction scheme is analyzed. The application and development direction of learning semantic information lightweight network design, scene context prediction, and bionic vision mechanism are presented.

Developing information acquisition equipment, such as lidar, has made intelligent target detection increasingly important. Recently, there has been an increasing need for an effective and intelligent target detection technology to realize the intelligent detection and recognition of pedestrians, vehicles, and other targets, and improve the intelligence level of unmanned driving, urban management, and other applications. Thus, to solve the lack of information in the use of light detection and ranging (LiDAR) for 3D target detection, this paper proposes a multimodal information fusion-based multitarget detection algorithm. The network model comprises three modules: LiDAR point cloud data processing module, 2D image data processing module, and information fusion and detection module. The first two extracted the point cloud and RGB image features, respectively, whereas the information fusion and detection module merged the three- and two-dimensional feature maps according to the corresponding positions to mitigate the lack of information in the monomodal data and achieve the complementarity of the feature level. The fused feature map generated the target detection frame using the three- and two-dimensional area generation networks and adopted the post-fusion strategy to fuse the detection frames of both modes to obtain the final target detection result. KITTI and VOC2007 datasets were used for evaluation and analysis. Experimental results demonstrated the superiority of the proposed algorithm.

The algorithm based on a 3D label can obtain a more accurate sub-pixel disparity map in the stereo matching problem. To overcome the random initialization of 3D labels, we proposed a label initialization approach based on superpixel structure and triangulation. An initial 3D label is generated by triangulating the feature points retrieved from the superpixel boundary. To increase the efficiency of the graph cut approach in iterative optimization of 3D labels, we conduct optimization on the superpixel structure, adding the hypothesis of current label state throughout the iteration to expand the label candidates, which improves label search efficiency. Experiments on the Middlebury2014 dataset demonstrate that the proposed approach has a lower average error rate (8.31%) than the LocalExp algorithm (8.39%), and the average processing time for single image is ~70% that of the LocalExp algorithm.

In view of the heavy task, low efficiency, and high cost of existing airport bird-repelling systems, we designed a laser bird-repelling robot system composed of a camera, dual-axis mirror, laser, video processing, and servo controls. The flying bird stabilized sighting algorithms in the system consists of target detection, target tracking, and servo control algorithm. To solve these drawbacks, we applied a simple and effective nearest neighbor classifier in the tracking process to confirm the tracking target for improving tracking stability and mitigating the lack of target loss judgment in the classical kernelized correlation filter (KCF) tracking algorithm. Furthermore, we replaced the concatenated histogram of oriented gradient (HOG) features in the original KCF algorithm with a feature map of approximate size in the middle layer of the depth network to solve the weak discrimination of the HOG feature in the KCF method. This strategy enhances the discrimination of target features while avoiding extracting depth features block by block. Experimental results show that the proposed laser bird-repelling robot system accurately stimulates and interferes with flying birds, and its detection performance and tracking performance outperform other algorithms. The proposed system serves as an effective and safe stimulus signal bird-repelling scheme in airports.

In this study, foreground occlusion occurs in the application of camera measurement due to the complex structure of objects or a complex scene environment, causing the target to be lost and the measurement to fail. An occlusion target position measurement approach based on array synthetic aperture imaging is developed using synthetic aperture imaging and image processing technology. The overall concept is to obtain a de-occluded image using a synthesis approach, identify the object to be measured in the image, and finally reconstruct the information to be measured. As the marker point approach is widely used in position observation in camera measurement, this study examines the gray distribution of typical markers from a synthetic aperture imaging process, summarizes the applicability of the existing recognition approaches under occlusion conditions, proposes a marker point appropriate for the special gray-scale distribution of synthetic images, and verifies the feasibility and the accuracy of the corresponding identification approach. Thereafter, the static and dynamic measurement experiments are conducted using the approach proposed in this study. The results show that the proposed occlusion target position measurement approach based on array synthetic aperture imaging can solve the challenges of marker points loss and information recognition missing due to foreground occlusion in complex structure measurement and complex environment measurement. The proposed approach offers a novel means to enhance the accuracy of camera measurement in related applications.

The automatic recognition of motion features of quadruped working could be widely used in animal bionics,behavior recognition, disease prediction, and individual identification recognition. In this paper, based on the computer vision technology and deep learning method, an automatic extraction method for the gait parameters of quadruped walking is established. At first, the walking image frames of quadrupeds can be obtained from the collected quadruped walking videos by using the video frame decomposition technology. Next, the moving object can be extracted via the improved semantic segmentation model DeeplabV3+. Then, according to the characteristic analysis of quadruped walking gait, the detection and matching of the motion corners can be realized based on the distance from the center point to the contour of the object. Finally, a method based on the distance from four limb motion corners to a fixed reference point is established to effectively solve the problem of quadruped motion feature parameter extraction. The experimental results show that the proposed method can give better results for the motion corner detection of quadrupeds. The maximum error is 32, 27, and 19 pixel in the motion corner detection for rhino, buffalo, and alpaca, respectively. The corners of four limbs match accurately. The results also show that the calculation error of the gait cycle and gait frequency is less than 2%, the gait sequence output is correct, and the maximum calculation error of the stride length is 2.85%.

The attitude angle parameter of the unmanned aerial vehicle (UAV) is crucial for determining its flight performance. In the standard measurement method, the various sensors of the UAV device generated considerable data errors due to the influence of the UAV. As the existing visual measurement methods are affected by light and have limited recognition accuracy of marking spots, a method for UAV attitude angle measurement based on laser projection and vision is proposed. The three-dimensional position of the marker point in the world coordinate system is determined via the total station data, and the laser transmitter mounted on UAV emits lasers toward the front, left, and right sides of the screen, while the camera captures and calculates the displacement of the laser point on the screen in real time. Through the geometric relationship between the laser and marking points and the Rodrigues rotation formula, the attitude angle of the UAV is determined. The experimental results indicate that the calculation error of the attitude angle of the proposed method is below 0.3°, which meets the accuracy criteria for the UAV attitude angle measurement.

To address the issues of low extraction accuracy, inadequate data representation, and complex algorithm in urban landscape tree extraction, a new algorithm for extracting landscape trees from Light Detection and Ranging (LiDAR) data is proposed, which is based on the marked point process with spatial feature constraints. The proposed method removes nonground points from the point cloud data and constrains the marked point process model by the density of the crown point cloud of the trees. First, the triangulation network processing densification filtering algorithm is used to separate the ground and nonground points from the LiDAR point cloud data. Second, for nonground point data, the process model of spatial distribution identification points of landscape tree is defined and the geometry of landscape tree in ground projection area is described by a circle. The geometric model of tree crown projection area is defined, and the elevation distribution model is built using the elevation distribution characteristics of landscape tree and nontree area data points. Accordingly, an elevation constraint model is built based on the spatial density characteristics of the crown point cloud of the trees. The landscape tree extraction model is established using Bayesian theory integrating the above models, and the extraction model is simulated using the Reversible Jump Markov Chain Monte Carlo algorithm. Finally, the optimal landscape tree extraction results are obtained based on the maximum a posteriori probability criterion. The experimental results show that the proposed method’s overall accuracy of the landscape tree extraction is high and the overall extraction rate and accuracy are greater than 90%. It can also achieve high accuracy for the landscape tree extraction results of complex scenes with high recognition difficulty.

The purpose of optical image anomaly detection is to train the model only with normal samples and detect abnormal samples that deviate from the normal law. To solve the universal reconstruction and low-quality interference problems in the generation-based anomaly detection algorithm, a new image anomaly detection algorithm is proposed based on the autoencoder network. First, the latent features are transformed into continuous and discrete features, namely block descriptive and hash features. Hash features have binarization characteristics; it can avoid under-sampling of latent space, thereby the problem of universal reconstruction can be effectively solved. Second, Based on the coupling relationship of discrete-continuous features, the graph shrinkage method is used to establish the block similarity matrix which constructs the association between hash and description features. Then the interblock reconstruction method is proposed to ensure high-quality reconstruction of the image and solving the problem of low-quality interference. Experiments on the international public dataset, MVTec AD, prove that the accuracy of the proposed algorithm is better than the present anomaly detection algorithms.

This study proposes an adaptive filtering stereo matching algorithm that fuses edge features to improve the problem of fuzzy matching results at the edge of the images. Moreover, a cost calculation method that fuses multiple features is proposed by introducing the image neighborhood pixel information and image edge features into the Census transform and combining the advantages of the gradient transform algorithm. Then, an edge weight factor is introduced to improve the effect of aggregation. The guided filtering is improved by setting an adaptive edge weight. Finally, the final disparity map is obtained through an optimization process. The proposed algorithm is compared with other algorithms on the Middlebury test set. The results show that the proposed algorithm is very effective in matching the effect at the edge. It also shows that the proposed algorithm has a stronger antinoise performance than other algorithms.

The existing general detection methods still have the problem of high missing rate in small target detection. To improve the detection rate of the head, the ResNet DenseNet MDC (Mixed Dilated Convolution) YOLOv3 (RDM-YOLOv3) target detection network is proposed on the basis of YOLOv3. Firstly, the feature extraction network DarkNet-53 of YOLOv3 is improved, and a feature extraction network RD-Net based on ResNet and DenseNet is proposed to extract more semantic information. Then, a mixed dilated convolution structure is constructed by sampling the feature layers using dilated convolution with different dilated rates to improve the sensitivity to small targets. Using RDM-YOLOv3 to compare with other methods on Brainwash dataset and HollywoodHeads dataset, the AP (Average Precision) values reached 93.1% and 86.8%, respectively. The experimental results are better than that of other methods, and the performance of small target detection is significantly improved.

To reveal the law of features change of metal surface topography during fatigue damage, we collected and transformed the three-dimensional surface topography information of Q235 carbon steel specimens into grayscale images at each fatigue damage stage. Then, these images were decomposed and reconstructed using fast discrete shear wave transform to obtain subimages containing roughness, waviness, and shape error. Furthermore, the gray-level co-occurrence matrix was used to describe the characteristics of the subimages' roughness. The variation rules of four characteristic parameters, namely, energy, correlation, contrast, and homogeneity, were obtained for the fatigue damage process. In addition, a series of decomposition layers were taken for multiresolution analysis, and the effects of different decomposition layers on the values of the above-mentioned characteristic parameters were compared and analyzed. Results show that the increasing fatigue cycles decrease the energy and homogeneity values and increase contrast values. The above characteristic values are related to the selection of extraction directions. Before fatigue fracture, the energy and homogeneity values suddenly increase, whereas the contrast value sharply decreases. Thus, a support vector machine classification model was constructed based on the three features, including contrast, energy, and homogeneity, and was used for fatigue damage state assessment of components.

Photoacoustic imaging technology (PAT) usually regards the absorption coefficient of biological tissue to light as a scalar. However, most biological tissues are anisotropic, and the absorption coefficients of light with different polarization states differ, limiting the use of photoacoustic imaging technology in some clinical diagnosis and treatment with polarization requirements. Based on this, a photoacoustic computed tomography (PACT) probe with an adjustable polarization angle is designed, and a polarization PACT system is built to provide an imaging basis for intraoperative polarization tissue detection. Non-polarization maintaining fiber guides and transmits the excitation light. It is polarized by a polarizer after a series of lens shapings, and the polarization angle of the excitation light is adjusted by a half-wave plate to excite the acoustic signal. In the experiment, through multiple polarized photoacoustic imaging experiments on polarizers with different optical axis directions and different depths in scattering media, the optical axis direction detection and depth imaging of polarizers are successfully realized. Furthermore, the structural information of the bovine tendon is successfully extracted by using the excitation light of two orthogonal polarization angles to the photoacoustic image of the bovine tendon, and the imaging ability and anisotropy detection performance of the designed handheld polarized photoacoustic imaging probe and polarization pact system are verified. It is expected to provide an imaging basis for the intraoperative diagnosis and treatment of anisotropic biological tissues (such as nerves and tendons).

Aiming at the complex and changeable morphological structure of retinal blood vessels, this study proposes a retinal blood vessel segmentation algorithm based on multidirectional filtering to solve the problem that the intersection and extension in blood vessel images are not easy to segment. First, the green channel of the retinal blood vessel image is selected by using histogram equalization, median filter denoising, top hat transformation, and other methods for image enhancement. Then, multidirectional Cake filtering is performed on the enhanced image. The filtered results are fused to weaken the noise in the background and enhance the contrast between the blood vessel and background. Finally, the vector field divergence method is used to extract the threshold, and the image is segmented to obtain the final retinal vessel segmentation result. The algorithms are tested on the public DRIVE and STARE datasets. The experimental results show that the proposed algorithm is simple and effective and adapts to the complex and changeable characteristics of retinal blood vessel scale information. The proposed algorithm can also handle the junction of complex blood vessels and has higher sensitivity, shorter execution time, and higher efficiency than other algorithms.

Aiming at the problem of small target recognition and segmentation, a network model based on bilateral fusion (BFNet) is proposed, which has a dual-branch structure. One has a narrow channel and a shallower structural layer, which focuses on the connections between adjacent pixels. The other introduces two modules, such as receptive field block (RFB) and dense fusion block (DFB), which have wider channels and deeper structural layers and can obtain high-level semantic context information. It is then represented by a guide aggregation layer that fuses the features of the two branches. Three open medical segmentation datasets are used to evaluate the performance of the proposed algorithm. The experimental results show that the proposed algorithm is superior to existing medical image segmentation algorithms in the segmentation task of polyps and skin lesions. Especially, in the automatic polyp detection Kvasir-SEG dataset, the average Dice and average cross ratio of the proposed algorithm reached 92.3% and 86.2% respectively, which are both higher than the existing algorithms.

To improve the efficiency of photovoltaic (PV) power forecasting, the method of feature fusion combined with improved temporal convolutional network (TCN) is proposed. The correlation coefficient approach is utilized to examine the time series features, and the effective input for feature fusion is calculated. To increase the accuracy of generating power forecasting, the TCN expansion parameters and connection modes are adjusted. The proposed method is evaluated on two different power plant data sets in South China, and it is compared to the classical algorithms LSTM, GRU, 1D-CNN, and TCN, as well as diverse weather samples. The results reveal that the approach described in this paper achieves a decisive coefficient of 0.982 and outperforms other algorithms in terms of fitting ability. The training time of the model is only 30 s, and the prediction efficiency is greatly improved.

This paper proposes a three-dimensional (3D) circular hole recognition algorithm based on the fusion of point cloud normal and projection to solve the problems of poor extraction accuracy and the incomplete edge point extraction of the existing 3D circular hole recognition algorithms. Firstly, k-dimensional tree(KD-tree) assists in establishing the spatial topological relationship of the point cloud. Secondly, K-NearestNeighbor(KNN) is used to search the k neighborhood points closest to the point. The point greater than the threshold is determined as the boundary point by defining the distance threshold. Finally, the fusion of point cloud normal and projection are combined to realize the distinction between feature points and noise points at the edge of the point cloud, and the 3D circular hole features of the point cloud data are extracted. The experimental results show that the algorithm can effectively realize point cloud edge extraction and 3D circular hole recognition.

When remote sensing satellites capture images during movement, the high-speed motion of the satellite camera causes motion blur in the remote sensing images. To solve this problem, this paper proposes a new deblurring algorithm based on the remote sensing image sequence with different integration time obtained in one shooting process. This algorithm used contour edge information extracted from short integration time images to guide the estimation of the blurred kernel of the adjacent long integration time images. Furthermore, the algorithm simplified the complexity of the single blurred kernel estimation through the idea of segmented solution. Additionally, the algorithm used the convolution operation to reconstruct the segmented blurred kernel, which greatly improved the accuracy of the blurred kernel estimation. Experimental results show that the proposed algorithm effectively improves the accuracy of blurred kernel estimation and significantly improves the clarity of blurred remote sensing images after deblurring.

Light detection and ranging (LiDAR) offers the advantages of high-ranging accuracy, negligible environmental influence, and all-day operation, making it suitable for unmanned ship obstacle detection. Since LiDAR point cloud is nearly dense and far sparse, the grid's size has a direct effect on the accuracy of obstacle detection based on a grid map. In this study, a variable size grid map with a linear increase of grid size is established. The grid is divided and clustered using the height difference discrimination method and eight-neighborhood connected component marking approach, and the obstacle information is extracted using a box model, yielding a more accurate obstacle detection result. The proposed method can effectively solve the problems of grid division and poor clustering effect in traditional approaches for the detection of small and medium fishing boats near shore, according to the results of a real ship experiment conducted at sea that compared the processing results of the proposed method with the traditional approach. The proposed method provides more accurate and real-time detection of obstacles at sea, as well as data support for unmanned ship obstacle avoidance.

Existing segmentation methods based on deep learning focus only on the global or local feature extractions of the point cloud; they ignore the shape information and semantic features between points. We propose a multifeature fusion dynamic graph convolutional neural network based on spatial attention to solve the challenges mentioned above. First, on the basis of edge geometric feature extraction, the low-dimensional geometric features of point cloud are mapped to the high-dimensional feature space to obtain the rich shape information. The multilayer perceptron is used to extract the global high-dimensional features of points. Then, a spatial attention mechanism is introduced to extract contextual semantic features between points. Finally, geometric features and high-level semantic information are effectively fused to enrich the representation of global and local features. The proposed network is tested on several public datasets. Experimental results show that the proposed network has achieved superior performance in object classification, part segmentation, and semantic segmentation.

Aiming at the problem that it is difficult for remote sensing data to achieve both high spatial and spectral resolution, a quadtree-based adaptive block area-to-point regression Kriging method (QAATPRK) is proposed to fuse the panchromatic (PAN) and multispectral (MS) data of GF-1.The proposed method is based on the area to point regression Kriging method, where the whole image is segmented into several independent fusion units and fused, splicing the results. For each individual fusion unit, spatial information of high-resolution PAN images were used for regression modeling and the residuals were treated by the regression Kriging method. The proposed method is compared with the Principal Component Analysis (PCA) method, wavelet transform method, Intensity-Hue-Saturation and Gram-Schmidt (IGS) method, and DenseNet. Root mean square error (RMSE), structure similarity (SSIM), universal image quality index (UIQI), relative global-dimensional synthesis error (ERGAS), and spectral angle mapper (SAM) demonstrate that the fusion image quality of the proposed method is the best and the spectral properties of the MS image are maintained.

To address the issue of large error when using horizontal 2D midline to extract the cross-sections of large longitudinal slope tunnel, an effective method for extracting the 3D axis and cross-sections of the tunnel is proposed. First, the 2D boundary points of the tunnel are extracted using boundary grid and angle constraints. Then, the boundary points are fitted using iterative least squares with multi-conditional constraints to extract horizontal and lateral midline points. Next, the lateral midline points are orthographically projected on the vertical curved surface containing the horizontal midline to obtain the 3D axis points. Finally, the infinitesimal method is used to calculate the normal plane equation corresponding to the 3D axis points and a point cloud with a certain thickness on both sides of the normal plane is projected onto this plane to extract tunnel cross-sections. The experimental results show that the proposed method can extract the 3D axis and cross-sections of the tunnel point cloud with high accuracy. Furthermore, the cross-sections extraction error is less than 3.06%; thus, the proposed method is applicable for straight and curved tunnels with varying longitudinal slopes.

We propose ResUNet+, an enhanced multiscale features residual U-shape network, to address issues in the extraction of small and irregular buildings from remote sensing images using the ResUNet, such as low segmentation accuracy and rough boundaries. Based on the ResUNet architecture, the squeeze and excitation module is used in the encoder to improve the network’s ability to learn effective features, and the atrous spatial pyramid pooling module is selected as the last layer of the encoding network to obtain context information of buildings at various scales. We evaluate the proposed ResUNet+ and compare it with SE-UNet, DeepLabv3+, DenseASPP, and ResUNet semantic segmentation networks on two commoly used public datasets: the WHU Aerial Imagery Dataset and INRIA Buildings Dataset. The results of the experiments show that ResUNet+ outperforms other networks in terms of pecision, recall, and F1-score. The segmentation results also show that RseUNet+ excels at extracting buildings of various sizes and irregular shapes.

Optogenetics uses optical technologies to control brain neural activity, providing important techniques and developing contemporary neuroscience. Because the noninvasive penetration depth of light is restricted in biological tissue, traditional optogenetics implants an invasive optical fiber, resulting in the inability to guarantee the spatial precision of light stimulation. Recently, with the advancement of optical technology, precision optogenetics has gradually emerged. Precision optogenetics primarily uses a deep-penetration optical system with a high spatiotemporal resolution, single-cell precision neuromodulation capabilities, and real-time detection capabilities for subcellular precision neuronal cluster activity. In this study, we analyzed and discussed the technical principles, optical path construction, and system optimization of precision optogenetics. Finally, we look forward to the future developments and applications of precision optogenetics by discussing the technical limitations and possible solutions.

In recent years, there has been an increase in the number of people diagnosed with thyroid cancer. Thyroid cancer mortality can be considerably reduced by early detection of thyroid nodules. Ultrasound is usually the first choice for thyroid imaging. This paper systematically summarizes the thyroid nodule diagnosis algorithm of convolutional neural network (CNN) for ultrasonic images based on the relevant literature published at home and abroad in recent years. The main content includes the application of CNN in the three aspects of thyroid nodule region extraction, benign and malignant classification, and calcification recognition. To provide a clearer reference to researchers, the basic design idea, network architecture form, related improvement purpose, and method of each algorithm are described. Finally, the algorithms for thyroid nodule diagnosis based on CNN are summarized and analyzed, and future research hotspots and related challenges are discussed.

Reflectance transformation imaging is a type of digital image acquisition technology, which has the characteristics of interactive arbitrary light distribution, multimode image reconstruction, and subtle three-dimensional feature presentation. This study mainly introduces the basic principle and development of the reflectance transformation imaging technology, analyzes its application in the cultural heritage and forensic science field, and presents the potential application of this technology in the forensic science field.

In view of the defects of traditional test methods, such as large consumption of edible oil, cumbersome operation, and long time consumption, a new idea of fast nondestructive test of edible oil was put forward. In the experiment, five kinds of oil samples including mixed oil samples were selected. The laser induced fluorescence system built in the experiment was used to collect 500 groups of data, 400 groups of spectral data were randomly selected as the training set, and the remaining 100 groups of spectral data were used as the test set. After comparison, the stack autoencoder algorithm with better performance was selected to extract the features of the obtained fluorescence spectral data, and then the extreme learning machine was used for classification and recognition. Finally, the edible oil samples measured at different time were used to verify the generalization of model. The experimental results show that, under the recognition model constructed in this paper, the sample test network time is only 0.2 ms, and the classification accuracy can reach 100%. The sample test network used to validate the new sample can also achieve good classification effect, the classification is fast, and the accuracy is high. That is to say, the model established in this paper is reliable, and it can also realize fast nondestructive test of edible oil types while ensuring accurate identification.

The moisture content of millet is an important indicator to measure the quality of millet. To detect millet moisture content, the two-dimensional correlation spectra of samples with different moisture contents are studied with millet moisture content as the external disturbance factor. First, obtain the near infrared spectra of 60 samples, and then, using four different preprocessing methods, create a partial least square regression (PLSR) model of sample moisture content based on the full-band spectra. Following the comparison, it is determined that the model with no preprocessing has the best effect. The calibration set coefficient of determination (Rc2) is 0.9460, root mean square error (RMSEC) is 0.49%, prediction set coefficient of determination (Rp2) is 0.9391, and root means square error (RMSEP) is 0.63%. Further, taking the moisture content of millet as the external disturbance factor, the spectral data of different moisture content gradients of millet is analyzed by two-dimensional correlation spectroscopy, and the wavelengths of the six autocorrelation peaks of the two-dimensional correlation synchronization spectrum are selected, and 1083, 951, 868, 1314, 1675, and 1865 nm are selected as the characteristic wavelength. Based on this, a millet moisture content prediction model is developed. The wavelength variable is reduced and the model is simplified when compared to full-spectrum data modeling. The correction set's coefficient of determination (Rc2) is 0.952, and the root mean square error (RMSEC) is 0.60%. The prediction set's coefficient of determination (Rp2) is 0.897, and the root mean square error (RMSEP) is 0.63%. The results show that two-dimensional correlation near infrared spectroscopy can predict millet moisture content and extract the characteristic wavelength, which provides a foundation for the design of a special millet moisture detector based on discrete wavelength components.