As the concept of the metaverse gains popularity, wearable near-eye display devices for virtual and augmented reality (VR/AR) have received considerable attention. An ideal VR/AR headset must integrate the display, sensors, and processor into a compact form factor so that people can wear it comfortably for long durations while enjoying an immersive experience and friendly human-computer interaction. Visual comfort is therefore a crucial design concept for next-generation VR/AR equipment. Among the many display technologies that can provide three-dimensional visual effects, holographic display can provide real and natural three-dimensional images that include all three-dimensional depth cues. Meanwhile, owing to its diffraction imaging characteristics, holographic display has unique advantages in correcting human visual aberrations and maintaining compact overall dimensions, which makes it a potentially ideal technical solution for near-eye display equipment. In this review, the latest developments in holographic near-eye display technology are investigated and summarized from the perspective of visual comfort. First, the human visual system is introduced in the context of visual perception. Then, research on holographic near-eye displays is comprehensively reviewed with respect to eyebox, field of view, speckle noise, hologram generation algorithms, and full-color display. Finally, the potential application scenarios of holographic near-eye display technology are summarized and discussed.

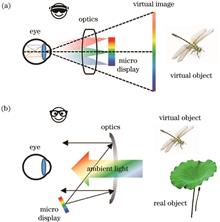

As next-generation innovative technologies emerging after computers, smartphones, and the Internet, virtual reality (VR) and augmented reality (AR) are changing the way we perceive and communicate with the world. VR/AR head-mounted displays have flourished in recent years, creating an increasingly urgent need for high-resolution, high-brightness miniature displays and for compact, lightweight near-eye display optical systems. Metasurfaces are new ultra-thin optical components in which subwavelength nanostructures are arranged on a two-dimensional plane. With electromagnetic wave modulation capabilities beyond those of traditional optical devices, they are accelerating the development of VR and AR devices toward miniaturization and light weight. We first briefly introduce the basic principle of VR/AR display technology and review its development history. We then focus on the design principles, performance characteristics, and application methods of metasurfaces and metalenses in optical systems for VR/AR near-eye displays, and introduce the role and effects of metasurfaces in micro-display devices. In addition, we present the micro- and nano-fabrication technologies related to metasurfaces and methods for large-area mass fabrication. Finally, we summarize metasurface-based VR/AR display technology and give an outlook on its development prospects.

Augmented reality display devices are wearable smart devices that significantly enhance the experience of the real world by superimposing virtual information on the real field of view; they therefore have very strict requirements for device size and weight. Micro- and nano-optical elements, whose thickness or feature size is on the nanometer or micron scale, provide new ways to reduce system size and volume while possessing powerful optical field modulation capabilities. This paper first reviews the principles and modulation mechanisms of several typical micro- and nano-optical elements, then discusses the technical paths and applications of these elements in augmented reality devices, and finally looks into future developments.

Augmented reality (AR) display is a significant development direction for new display technology and one of the hardware entrances to the metaverse. Glasses-free AR-3D display has a broad range of applications in vehicles, education, medicine, and other fields, and has therefore attracted close attention from scholars and industry experts. This study reviews the development of glasses-free AR-3D display technology, primarily including AR-3D displays based on geometric optical elements, holographic optical elements, and pixelated diffractive optical elements. It expounds the basic principles of these technologies, analyzes the challenges they currently face, and anticipates their subsequent advancement. Glasses-free AR-3D display will gradually change the way people acquire information.

Augmented reality near-eye display devices have attracted significant attention in the field of information display technology. Traditional near-eye display systems face a structural bottleneck: they cannot balance field of view against volume. Metasurface optical elements, which can modulate multidimensional physical quantities of the optical field and offer the benefits of planar integration, provide a new approach to developing thin, compact near-eye displays with a large field of view. This paper introduces the principle of metasurface optical field modulation and then focuses on the design of various metasurface-based optical systems for augmented reality near-eye displays, including systems that combine a metasurface with a freeform surface, retinal projection displays, optical waveguides, and holographic display devices. The challenges faced by metasurfaces in augmented reality near-eye displays are discussed, and relevant future prospects are presented.

Augmented reality is an important human-machine interface platform for the metaverse, and the optical imaging units and microdisplay screens in a system are the key to its display performance. At present, there are five popular microdisplay devices, namely liquid crystal on silicon, organic light-emitting diode (OLED) on silicon, micro light-emitting diode (micro LED) on silicon, digital light processing (DLP), and laser scanning galvanometer, all of which have their own special features. This study introduces the construction, manufacturing process, silicon driving circuit, status quo, and challenges of the different microdisplay screens. For the silicon-driving part, it analyzes the pixel-driving circuits and their pros and cons, as well as the challenges related to the design and performance indicators of the different display technologies. The indicators achievable by the different technologies are summarized and compared, and the design focus and development trend of silicon-based backplanes are discussed. Finally, the application scenarios and development of the different microdisplay chips are discussed.

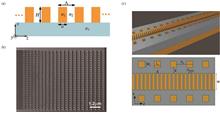

Silicon-based microdisplays are built on single-crystal silicon, with a CMOS drive circuit integrated into their backplanes. Recently, these microdisplays have been widely used in near-to-eye display, projection, and augmented reality/virtual reality (AR/VR) fields due to their small size, high pixel density, fast switching speed, and low power consumption. Because of their emerging importance, this study reviews four silicon-based microdisplays: digital micromirror device (DMD), liquid crystal on silicon (LCoS), organic light-emitting diode on silicon (OLED on silicon), and silicon-based micro-light-emitting diode (micro-LED on silicon). We then discuss the key technologies and research progress of OLED on silicon and micro-LED on silicon in detail. We conclude that their active luminescence, high resolution, high refresh rate, high contrast, and low power consumption give them great application potential in near-to-eye display fields.

The diffractive waveguide is a mainstream technical solution for realizing augmented reality near-to-eye displays, with the advantages of a large eye-box, compact volume, and good mass producibility. However, unified definitions for the evaluation indexes of some key parameters of diffractive waveguides are still lacking. This work provides a detailed introduction to the main parameters of the diffractive waveguide scheme and their measurement methods. By clarifying these key parameters, the advantages and disadvantages of diffractive waveguides are examined, with the aim of maximizing their technical potential and improving product performance.

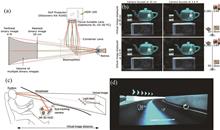

The holographic retinal projection display (RPD) technology based on wavefront modulation can converge an image beam without a lens and realize the free adjustment of the image depth of field; position, number, and spacing of viewpoints; and other parameters. At present, the amplitude-type hologram is mostly used to explore the lensless wavefront modulation hologram RPD; however, using this type of hologram involves the problems of low diffraction efficiency and conjugate image crosstalk. Hence, a calculation method of the phase-only hologram of the lensless holographic RPD is proposed. The required hologram can be obtained in two steps. First, the target image is multiplied by the uniform phase as the input. Upon performing the Gerchberg-Saxton iteration based on angular spectrum diffraction between the input surface and the hologram surface, the phase distribution is obtained on the hologram surface. Further, the phase distribution after iteration is multiplied by the phase of the converged spherical wave and encoded to obtain the final hologram. In the experiment, the color RPD was realized by the time division multiplexing technology, and the exit pupil expansion was realized by the multi spherical wave phase; thus, the effectiveness of the proposed method was verified.
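The two-step calculation described in this abstract can be sketched in NumPy as follows. This is only an illustrative sketch under stated assumptions, not the authors' implementation: the function names, the sign convention of the spherical phase, the propagation geometry, and all parameter values are hypothetical, and the final encoding for a particular spatial light modulator is omitted.

```python
import numpy as np

def angular_spectrum(field, wavelength, pitch, distance):
    """Propagate a complex field by `distance` using the angular spectrum method."""
    ny, nx = field.shape
    fx = np.fft.fftfreq(nx, d=pitch)
    fy = np.fft.fftfreq(ny, d=pitch)
    fxx, fyy = np.meshgrid(fx, fy)
    arg = 1.0 / wavelength**2 - fxx**2 - fyy**2
    kz = 2.0 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    # Evanescent components (arg <= 0) are simply discarded.
    transfer = np.where(arg > 0.0, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

def lensless_rpd_hologram(target, wavelength, pitch, distance, focus, iterations=20):
    """Return a phase-only hologram in [0, 2*pi]; `iterations` must be >= 1.

    target   : 2D array of target image intensity (assumed non-negative)
    distance : assumed spacing between input surface and hologram surface
    focus    : assumed distance at which the spherical wave converges
    """
    amplitude = np.sqrt(np.asarray(target, dtype=float))
    # Step 1: target amplitude with a uniform (zero) phase as the input,
    # then Gerchberg-Saxton iterations between input and hologram surfaces.
    image_field = amplitude.astype(complex)
    for _ in range(iterations):
        holo_field = angular_spectrum(image_field, wavelength, pitch, distance)
        holo_phase = np.angle(holo_field)  # phase-only constraint
        back = angular_spectrum(np.exp(1j * holo_phase), wavelength, pitch, -distance)
        image_field = amplitude * np.exp(1j * np.angle(back))  # amplitude constraint
    # Step 2: multiply by the phase of a converging spherical wave,
    # which is equivalent to adding the phases.
    ny, nx = amplitude.shape
    y = (np.arange(ny) - ny / 2) * pitch
    x = (np.arange(nx) - nx / 2) * pitch
    xx, yy = np.meshgrid(x, y)
    k = 2.0 * np.pi / wavelength
    spherical_phase = -k * np.sqrt(xx**2 + yy**2 + focus**2)
    return np.mod(holo_phase + spherical_phase, 2.0 * np.pi)
```

In a sketch like this, the exit pupil expansion mentioned in the abstract would correspond to combining several spherical phases with different convergence points, which is not shown here.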

Traditional methods for improving the quality of low-illumination imaging, such as external supplementary lighting and aperture enlargement, enhance the source information by increasing the physical light input. However, these methods cause problems such as light source pollution and a shortened depth of field. Therefore, this paper proposes an end-to-end multispectral fusion scheme to achieve high-quality computational imaging, effectively restoring the color and details of objects in low-illumination scenes while making up for the shortcomings of traditional methods. First, the multi-channel spectral information is integrated through a custom-designed deep learning network, after which scene noise is effectively eliminated. The proposed method has a high degree of freedom: the number of channels and the network parameters can be adjusted according to the needs of specific conditions, the traditional camera module is replaced, and the image signal processing pipeline is optimized. Next, detailed ablation experiments are conducted. The results show that after spectral fusion, the mean square error (MSE) and perceptual loss of the images obtained by the proposed method are reduced by 54.43% and 35.12%, respectively, compared with those obtained by traditional methods based on RGB data. The method may thus serve as a basis for new high-quality imaging solutions in complex application scenarios such as augmented reality/virtual reality (AR/VR), medical imaging, and autonomous driving.

To suppress the banding patterns that appear in images displayed by a laser beam scanning system after two-dimensional exit pupil expansion, and thereby improve the display quality, the causes of the banding pattern and the optical system parameters related to the banding contrast were studied. A method based on dual-layered planar waveguide superposition was then used to expand the exit pupil and suppress the banding contrast. According to the simulation, when the thicknesses of the two layers of the planar waveguide are 0.64 mm and 0.6 mm, respectively, the banding contrast of a pure color image displayed by the system decreases to 23.2% compared with a single-layered waveguide system with a thickness of 0.6 mm. This method can suppress the banding contrast and improve the display quality without significantly increasing the system volume or requiring complicated processing. It offers a solution for applying laser beam scanning displays in augmented reality systems.

Augmented reality near-eye display technologies based on holographic waveguides can directly provide the user's eyes with fused virtual and real image information in a relatively compact form factor, and they have developed rapidly in recent years. However, most reported near-eye holographic waveguide displays adopt a flat waveguide structure, which requires additional curved goggles and thus makes the system volume relatively large. Therefore, this paper proposes an augmented reality near-eye display method based on a cylindrical holographic waveguide, extending the near-eye display from the traditional flat holographic waveguide to a curved shape. A holographic exposure method for preparing this cylindrical waveguide is also proposed, and the cylindrical waveguide is fabricated. Finally, a bench-top experimental near-eye display system is set up using the cylindrical holographic waveguide. The experiments show that the waveguide achieves an augmented reality display effect, with an exit pupil size of approximately 10 mm and a monocular field of view of approximately 24°. This holographic waveguide is expected to provide a technical basis for combining curved waveguides with curved goggles.

Holographic display technology is considered one of the most promising display technologies. However, existing spatial light modulators (SLMs) are insufficient for large-scale holographic display. In this research, a large-scale holographic 3D display system is proposed, which consists of a laser, a beam expander, three lenses, a beam splitter, two SLMs, and an aperture stop. First, a large-scale hologram is generated using the error diffusion method. Then, the large-scale hologram is divided into two holograms with the same resolution, which are loaded onto the two SLMs, respectively. Finally, the diffracted light fields of the two SLMs are seamlessly spliced together in space to realize a large-scale holographic 3D display. The experimental results verify the effectiveness of the system.
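The first two steps above (error diffusion, then division into two holograms of equal resolution) can be illustrated with a short NumPy sketch. The abstract does not specify which error diffusion variant is used, so the Floyd-Steinberg weights, the raster scan order, and the left/right split below are assumptions, and the function names are hypothetical.

```python
import numpy as np

def error_diffusion_phase_only(hologram):
    """Convert a complex hologram to a phase-only one by error diffusion.

    Each pixel is replaced by a unit-amplitude value with the same phase, and
    the complex quantization error is pushed to unvisited neighbours using
    Floyd-Steinberg weights (an assumed choice).
    """
    work = np.array(hologram, dtype=np.complex128)
    peak = np.abs(work).max()
    if peak > 0:
        work /= peak  # normalize so amplitudes lie in [0, 1]
    rows, cols = work.shape
    phase = np.zeros((rows, cols))
    for y in range(rows):
        for x in range(cols):
            value = work[y, x]
            phase[y, x] = np.angle(value)
            error = value - np.exp(1j * phase[y, x])
            if x + 1 < cols:
                work[y, x + 1] += error * 7 / 16
            if y + 1 < rows:
                if x > 0:
                    work[y + 1, x - 1] += error * 3 / 16
                work[y + 1, x] += error * 5 / 16
                if x + 1 < cols:
                    work[y + 1, x + 1] += error * 1 / 16
    return phase

def split_for_two_slms(phase):
    """Divide a hologram into left and right halves of equal resolution."""
    half = phase.shape[1] // 2
    return phase[:, :half], phase[:, half:2 * half]
```

For example, a 1080 x 3840 hologram would yield two 1080 x 1920 sub-holograms, one per SLM; the optical splicing of the two diffracted fields is a hardware step that code like this does not model.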

Surgical guidance based on spectral imaging can effectively identify various tissue types by analyzing the spectrum differences of different tissues, which has a significant application value. However, the current sampling speed of spectral imaging and the presentation of spectral images severely limit its clinical applications. This paper proposes an augmented reality (AR) computational spectral imaging system for facilitating surgical guidance. High-quality spectral reconstruction is achieved in a single detection using RGB imaging devices and multispectral single-pixel detectors, which greatly improves the imaging speed and reconstruction efficiency. Principal component analysis and spectral angle mapping are adopted to extract the effective information of the spectral images, which are then used to highlight the characteristic tissue area. The image enhancement effect based on the fusion of the spectral image and actual surgical area is demonstrated in the head-mounted AR display device.
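As a rough illustration of the two analysis tools named above, the following NumPy sketch computes principal component scores and a spectral angle map for a spectral image cube. The function names, the angle threshold, and the way a reference spectrum is chosen are hypothetical; the abstract does not describe how the authors combine the two steps.

```python
import numpy as np

def pca_scores(cube, n_components):
    """Project each pixel spectrum of an (H, W, B) cube onto its leading principal components."""
    h, w, bands = cube.shape
    pixels = cube.reshape(-1, bands).astype(float)
    centered = pixels - pixels.mean(axis=0)
    # Rows of vt are the principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return (centered @ vt[:n_components].T).reshape(h, w, n_components)

def spectral_angle_map(cube, reference):
    """Angle in radians between each pixel spectrum and a reference spectrum."""
    reference = np.asarray(reference, dtype=float)
    dots = cube @ reference
    norms = np.linalg.norm(cube, axis=-1) * np.linalg.norm(reference)
    cosines = np.clip(dots / np.maximum(norms, 1e-12), -1.0, 1.0)
    return np.arccos(cosines)

def highlight_mask(cube, reference, max_angle_rad=0.1):
    """Boolean mask of pixels whose spectrum is close to the reference (threshold is an assumption)."""
    return spectral_angle_map(cube, reference) <= max_angle_rad
```

Because the spectral angle ignores overall brightness, a mask of this kind is insensitive to uniform illumination changes, which is one common reason for choosing it to mark a tissue region before overlaying it on the AR view.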

A near-eye aperture can be used to realize a time-multiplexed super multi-view display because it effectively limits the divergence angle of the light beam emitted from the display screen, so that a large depth of field can be achieved. However, its small size also severely limits the field of view obtainable with a single near-eye aperture. This limitation can be overcome by designing multiple groups of time-sequentially gated near-eye apertures and splicing together the display regions corresponding to the different apertures in the same group. However, the light emanating from each display region may pass through non-corresponding apertures, creating a new problem of crosstalk noise. To address this problem, mutually orthogonal characteristics are assigned to adjacent near-eye apertures in this work, so that the light emitted from each display region, which carries one orthogonal characteristic, is blocked by the apertures adjacent to its corresponding aperture, forming a noise-free region of a certain range in the observation plane. This allows a super multi-view display with a large field of view to be realized with near-eye apertures. In this study, a proof-of-concept head-mounted super multi-view display system is constructed in which M one-dimensional strip-type apertures form a group, and any two adjacent apertures pass only light with mutually perpendicular polarizations. Based on persistence of vision, T groups of time-sequentially gated near-eye apertures are used to realize a super multi-view display with T viewpoints for each eye and a field of view M times larger than in the case where only one aperture opens at each time point. This work takes only M=3 for verification and obtains an expanded field of view of 19.7°, limited by the optical performance of the conventional projection lenses used.

Augmented reality (AR) technology integrates computer-generated virtual information into the real world, providing users with an immersive experience. AR is considered a next-generation display technology; however, several issues remain. In this paper, an optical waveguide design based on achromatic metagratings is proposed to address the problems of chromatic aberration, color uniformity, and light field uniformity in AR display systems. The coupling response of the metagratings is simulated. With a single-layer metagrating, light at three wavelengths (473, 532, and 620 nm) is incident and emitted at the same angle, eliminating chromatic aberration. Based on this, a double-layer metagrating is designed. With the double-layer metagrating, the intensity ratio and coupling efficiency of light with different wavelengths can be adjusted and color uniformity can be improved, which is expected to be useful for pupil expansion. The proposed design offers a new approach for AR head-mounted displays.
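To see why achromatic behavior matters, it helps to compute what a conventional single-period grating does at the three wavelengths mentioned above. The sketch below uses the standard grating equation for light coupled from air into a medium of refractive index n, n·sin(θ_d) = sin(θ_i) + m·λ/Λ. The grating period of 400 nm and the index of 1.7 are hypothetical values chosen for illustration and do not come from the paper.

```python
import math

def diffraction_angle_deg(wavelength_nm, period_nm, index, incidence_deg=0.0, order=1):
    """Diffraction angle inside the waveguide from the grating equation.

    Returns None when the requested order is evanescent (no propagating solution).
    """
    sine = (math.sin(math.radians(incidence_deg)) + order * wavelength_nm / period_nm) / index
    if abs(sine) > 1.0:
        return None
    return math.degrees(math.asin(sine))

# Hypothetical conventional grating: 400 nm period, waveguide index 1.7.
PERIOD_NM = 400.0
INDEX = 1.7
angles = {wl: diffraction_angle_deg(wl, PERIOD_NM, INDEX) for wl in (473.0, 532.0, 620.0)}
for wl, angle in angles.items():
    print(f"{wl:.0f} nm -> {angle:.2f} deg")
```

With these assumed values the three colors propagate at clearly different angles inside the waveguide, which is the chromatic dispersion that an achromatic metagrating is designed to remove.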

Due to their subjective nature, conventional methods for measuring the virtual image viewing distance (VID) in AR optical systems are easily disturbed by the depth of field of the photography system. To mitigate this issue, this study proposes a method that, based on the measurement principle of VID, uses the depth of field property to match the object distances of virtual images and actual reference objects. In the experimental analysis, the edge-based spatial frequency response is used to measure the relative change of image sharpness when virtual images and reference objects are photographed by the same photography system. During this process, the VID is converted into the viewing distance at the corresponding focus position, and a corresponding quantitative measurement is returned. Experimental results show that the VID measured in this study (1397 mm) matches the theoretical design value of the AR waveguide lens sample (1400 mm), which demonstrates the validity of the measurement system. Furthermore, a measurement error of 10-40 mm is observed, which is 1.6%-6.45% of that generated by conventional measurement methods. Because this method enables VID measurement in common experimental optical systems, it is highly applicable across AR-equipped devices, which in turn should help advance the design and production of these devices.

Transverse electric (TE) polarized light and transverse magnetic (TM) polarized light cannot be used for the display in the general grating waveguide head-mounted display system owing to polarization sensitivity. To solve this problem, a design method for polarization-insensitive grating is proposed, based on a genetic algorithm and rigorous coupled-wave analysis. The method's optical design is based on a wavelength of 532 nm. The average diffraction efficiencies of the in-coupling grating in TE polarized, TM polarized, and non-polarized light increase from 6.1% to 21.0%, 13.7% to 40.5%, and 9.9% to 30.7%, respectively. The average diffraction efficiencies of the out-coupling grating in TE polarized, TM polarized, and non-polarized light increase from 3.1% to 12.1%, 0.8% to 10.7%, and 1.9% to 11.4%, respectively. A prototype of the display system is built, and the test shows that the display is clear and bright. A large field of view of 30°×22° is achieved, revealing the usability of the polarization-insensitive grating waveguide design method and the non-polarization image source in the field of augmented reality. The proposed method can be useful in the research and development of waveguide augmented reality systems.
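The reported efficiencies can be cross-checked with simple arithmetic, assuming that non-polarized light is an equal, incoherent mix of TE and TM components so that its diffraction efficiency is the mean of the two. The sketch below applies that assumption to the figures quoted above; the dictionary labels are hypothetical, and small differences of about 0.05 percentage points are expected because the published values are rounded.

```python
def unpolarized_efficiency(te_percent, tm_percent):
    """Efficiency for non-polarized light, assumed to be the mean of TE and TM."""
    return 0.5 * (te_percent + tm_percent)

# (TE, TM, reported non-polarized) average diffraction efficiencies in percent.
reported = {
    "in-coupling, before": (6.1, 13.7, 9.9),
    "in-coupling, after": (21.0, 40.5, 30.7),
    "out-coupling, before": (3.1, 0.8, 1.9),
    "out-coupling, after": (12.1, 10.7, 11.4),
}

for label, (te, tm, quoted) in reported.items():
    estimate = unpolarized_efficiency(te, tm)
    print(f"{label}: mean of TE and TM = {estimate:.2f}%, reported = {quoted}%")
```

The agreement suggests the non-polarized figures in the abstract follow directly from the TE and TM results.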

The virtual field of view (VFOV) is an important design parameter of augmented reality (AR) optical systems. The measurement method and the standard used to assess virtual image edges are not clearly established. A measurement method for determining the VFOV of an AR optical system is investigated in this study. First, the method is designed to calculate the VFOV of the AR optical system based on image acquisition by the camera system, and the maximum virtual image displayed by the AR lens sample at different virtual image view distances (VIDs) is acquired. Then, the effects of different pixel luminance statistical parameters as luminance thresholds in the determination of the virtual image edge and different VIDs on the VFOV measurement results are analyzed. The results show that there is a difference of 11° (V)×7° (H) in the VFOV measurements obtained for the six types of statistical parameters. Further, the maximum fluctuation range in VFOV for different VIDs at the same luminance threshold is found to be ±2.630° (V)×±1.685° (H) and the minimum is ±0.228° (V)×±0.360° (H). It is concluded that the luminance threshold of edge pixels is highly correlated with the VFOV results. The influence of the VID on the VFOV measurement is found to be significantly less than that of the luminance threshold. A threshold value of 64% of the arithmetic mean of the pixel luminance is used in this study as the VFOV results obtained with this value are the most stable, with a relative fluctuation of only 0.91%. The proposed measurement method and the process can be considered to be highly significant for AR optical systems.
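The threshold-based edge determination described above can be sketched as follows. This is a simplified illustration rather than the procedure used in the study: it assumes the arithmetic mean is taken over the whole captured frame, models the camera as an ideal pinhole with a hypothetical focal length expressed in pixels, and places the optical axis at the image center.

```python
import numpy as np

def virtual_image_bounds(luminance, threshold_ratio=0.64):
    """Bounding rows and columns of pixels at or above threshold_ratio * mean luminance."""
    threshold = threshold_ratio * luminance.mean()
    mask = luminance >= threshold
    if not mask.any():
        raise ValueError("no pixel reaches the luminance threshold")
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    return int(rows[0]), int(rows[-1]), int(cols[0]), int(cols[-1])

def vfov_degrees(luminance, focal_length_px, threshold_ratio=0.64):
    """Vertical and horizontal VFOV in degrees under a pinhole camera model (assumption)."""
    top, bottom, left, right = virtual_image_bounds(luminance, threshold_ratio)
    height, width = luminance.shape
    cy, cx = (height - 1) / 2.0, (width - 1) / 2.0
    vertical = np.arctan((bottom - cy) / focal_length_px) - np.arctan((top - cy) / focal_length_px)
    horizontal = np.arctan((right - cx) / focal_length_px) - np.arctan((left - cx) / focal_length_px)
    return float(np.degrees(vertical)), float(np.degrees(horizontal))
```

Changing `threshold_ratio` in a sketch like this shifts the detected edges and therefore the computed angles, which mirrors the abstract's finding that the luminance threshold strongly affects the VFOV result.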