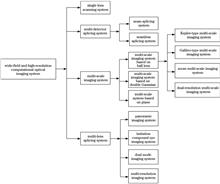

Wide-field, high-resolution computational optical imaging emerged to overcome the mutual restriction between field of view and resolution in photoelectric imaging systems, so that more detailed information can be obtained over a larger imaging field of view. Such systems have already produced a large body of research results and are widely applied in many research fields. This paper systematically describes the single-lens scanning, multi-detector splicing, multi-scale imaging, and multi-lens splicing systems among the wide-field, high-resolution computational optical imaging systems reported domestically and internationally, and analyzes and summarizes their advantages and disadvantages. Finally, the future development of wide-field, high-resolution computational optical imaging systems is discussed.

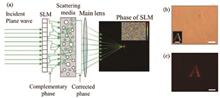

Scattering media are ubiquitous in daily life. Imaging through scattering media is of great scientific significance and application value in medicine, autonomous driving, national security, and other fields. Here, we briefly review current progress in scattering imaging and analyze the scattering characteristics of media by distinguishing between ballistic light and scattered light. Existing scattering imaging techniques are divided into two categories: methods that utilize the scattered light and approaches that separate the scattered light from the ballistic light. The methods that exploit scattered light are further summarized in two groups, which respectively analyze the spatial characteristics of the scattering media and model light propagation within them. The spatial or temporal separability of scattered and ballistic light, on which the second category relies, is also introduced. The scattering imaging techniques are compared in terms of illumination, imaging-device complexity, dependence on prior information, type and strength of the scattering media, and field of view. Finally, future development trends are forecast.

Coherence is an important physical property of an optical field: it determines whether pronounced interference can occur, and it therefore needs to be quantified. Although lasers with high spatial and temporal coherence have become an essential tool in traditional interferometry and holography, in many emerging computational imaging fields (such as computational photography and computational microscopy) reducing the coherence of the light source, i.e., using a partially coherent source, offers unique advantages for obtaining imaging information with a high signal-to-noise ratio and high resolution. As a result, the “characterization” and “reconstruction” of partially coherent optical fields have become increasingly important. It is therefore necessary to introduce optical coherence theory and develop coherence measurement techniques to answer the questions of “what light should be” and “what light is” in computational imaging. This paper provides a systematic review in this context. Using classical correlation function theory and phase-space optics, it describes the basic principles and typical optical path configurations of interferometric coherence measurement and non-interferometric coherence retrieval methods. Several new computational imaging regimes derived from coherence measurement and their typical applications, such as light-field imaging, non-interferometric phase retrieval, incoherent holography, incoherent synthetic aperture imaging, and incoherent cone-beam tomography, are discussed. Finally, the challenges faced by current coherence measurement technology are discussed and its future development trends are predicted.
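The interferometric route can be illustrated with the textbook two-beam relation $I = I_1 + I_2 + 2\sqrt{I_1 I_2}\,|\gamma_{12}|\cos\phi$: the measured fringe visibility $V = (I_{\max}-I_{\min})/(I_{\max}+I_{\min})$ yields the modulus of the complex degree of coherence $|\gamma_{12}|$. The Python sketch below assumes ideal, noise-free fringes and known beam intensities; the function names are hypothetical and are not taken from the reviewed works.

```python
import math


def two_beam_fringe(i1, i2, gamma, n=256):
    """Synthetic fringe I = I1 + I2 + 2*sqrt(I1*I2)*|gamma|*cos(phi) sampled over one period."""
    return [i1 + i2 + 2.0 * math.sqrt(i1 * i2) * gamma * math.cos(2.0 * math.pi * k / n)
            for k in range(n)]


def fringe_visibility(intensities):
    """Michelson visibility V = (Imax - Imin) / (Imax + Imin)."""
    i_max, i_min = max(intensities), min(intensities)
    return (i_max - i_min) / (i_max + i_min)


def coherence_modulus(visibility, i1, i2):
    """Invert V = 2*sqrt(I1*I2) / (I1 + I2) * |gamma_12| for |gamma_12|."""
    return visibility * (i1 + i2) / (2.0 * math.sqrt(i1 * i2))


if __name__ == "__main__":
    fringe = two_beam_fringe(1.0, 0.5, gamma=0.6)
    print(coherence_modulus(fringe_visibility(fringe), 1.0, 0.5))
```

For equal beam intensities the correction factor is 1, so the visibility equals $|\gamma_{12}|$ directly.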

According to D. Gabor’s proposal, holography is a coherent imaging technique in which the object’s three-dimensional information is first recorded and then reconstructed from a hologram obtained through the interference of object and reference beams. Holography requires light originating from any two points on the object to be mutually coherent. However, this spatial coherence requirement prevents holography from being used in incoherent-light applications. The development of incoherent holography is therefore important, because incoherent light sources are widespread in nature and easier to obtain than lasers. Mertz and Young first proposed Fresnel-zone-plate coded imaging under incoherent illumination and explained their method in terms of holographic theory. Lohmann further proposed wavefront retrieval of incoherent objects based on beam splitting and the self-interference of an object beam with a reference beam originating from the same source, thereby establishing the concept of incoherent holography. Incoherent holography no longer requires spatial coherence, because dedicated beam-splitting schemes form the hologram of incoherently illuminated or self-luminous objects from the interference of two beams originating from the same object point. In the past few decades, enormous efforts have been devoted to developing the basic principles and reconstruction algorithms of incoherent holography and to improving its imaging performance and applications. This review focuses on the basic concepts and applications of various incoherent coded aperture correlation digital holography techniques, with or without two-beam interference, to provide a clear picture of recent progress in incoherent holography. We also discuss the main challenges and limitations of existing methods and potential directions for advancing incoherent holography research.
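The core idea of coded aperture correlation holography (record the object response as an incoherent sum of shifted point-response holograms, then recover the object by cross-correlating with a PSF hologram) can be sketched in one dimension. This is a minimal, hypothetical model. Intensities add linearly and shifts are circular. The coded PSF is a binary quadratic-residue sequence, which is our assumption: we chose it because its periodic autocorrelation is two-valued, so the correlation is exact. Real systems use a pseudo-random coded phase mask and more elaborate reconstruction filters.

```python
def coded_psf(p):
    """Binary pseudo-random PSF: indicator of the quadratic residues modulo a prime p (p % 4 == 3)."""
    residues = {(k * k) % p for k in range(1, p)}
    return [1.0 if k in residues else 0.0 for k in range(p)]


def record_object_hologram(obj, psf):
    """Incoherent recording: each object point adds a circularly shifted, weighted copy of the PSF."""
    n = len(psf)
    return [sum(obj[j] * psf[(m - j) % n] for j in range(n)) for m in range(n)]


def correlate_reconstruct(hologram, psf):
    """Cross-correlate the object hologram with the zero-mean PSF hologram."""
    n = len(psf)
    mean = sum(psf) / n
    return [sum(hologram[m] * (psf[(m - k) % n] - mean) for m in range(n)) for k in range(n)]


if __name__ == "__main__":
    p = 127
    obj = [0.0] * p
    obj[20], obj[90] = 1.0, 0.5  # two incoherent point sources
    psf = coded_psf(p)
    recon = correlate_reconstruct(record_object_hologram(obj, psf), psf)
    print(recon.index(max(recon)))
```

Because the quadratic residues form a cyclic difference set, the reconstruction equals a constant background plus $(p+1)/4$ times the object. Each point therefore reappears at its original position.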

Traditional digital holographic microscopy (DHM) usually uses a highly coherent laser as the illumination source. Although the resulting hologram has high contrast, the coherent noise induced by the laser reduces the signal-to-noise ratio of the reconstructed image. Partially coherent illumination-based DHM (PCI-DHM) can effectively reduce coherent noise while maintaining high phase-measurement accuracy. PCI-DHM can be divided into on-axis and off-axis configurations depending on the interference angle between the object and reference waves. This study reviews the architectures of several typical PCI-DHMs and compares the advantages and disadvantages of the different schemes. Specifically, on-axis phase-shifting PCI-DHM uses an on-axis optical configuration in which the object and reference waves have the same optical path length, and the amplitude and phase maps of the sample are reconstructed using the phase-shifting technique. Moreover, owing to the short coherence length of the light source, a three-dimensional tomographic image of the internal microstructure of the sample can be obtained. Off-axis PCI-DHM usually uses a grating to introduce an angle between the object and reference waves, forming an achromatic interferometer, so that the amplitude and phase maps of the sample can be measured in real time from a single off-axis hologram. Finally, practical applications of PCI-DHM in quantitative phase imaging of biological cells, biological tissue, microstructures, and nanostructures are introduced.
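For the on-axis phase-shifting scheme, the widely used four-step algorithm shows how amplitude and phase maps follow from several holograms. The sketch assumes reference phase steps of exactly $\pi/2$ and frames modelled as $I_k = a + b\cos(\varphi + k\pi/2)$, $k = 0,\dots,3$. The number of steps and the exact algorithm used in a given PCI-DHM may differ, and the function name is hypothetical.

```python
import math


def four_step_phase(i0, i1, i2, i3):
    """Per-pixel wrapped phase and fringe modulation from four pi/2-shifted frames.

    With I_k = a + b*cos(phi + k*pi/2): I3 - I1 = 2b*sin(phi) and I0 - I2 = 2b*cos(phi).
    """
    phase, modulation = [], []
    for f0, f1, f2, f3 in zip(i0, i1, i2, i3):
        phase.append(math.atan2(f3 - f1, f0 - f2))             # wrapped to (-pi, pi]
        modulation.append(0.5 * math.hypot(f3 - f1, f0 - f2))  # b, proportional to |O||R|
    return phase, modulation


if __name__ == "__main__":
    true_phase = [-1.0, 0.3, 2.5]
    frames = [[2.0 + math.cos(p + k * math.pi / 2) for p in true_phase] for k in range(4)]
    print(four_step_phase(*frames))
```

With partially coherent light, the fringe modulation $b$ decays away from zero optical path difference, which underlies the sectioning ability mentioned above. The phase formula itself is unchanged.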

Digital holographic microscopy (DHM) has attracted attention in biological imaging and materials science because of its high-precision quantitative phase imaging. However, conjugate images, phase wrapping, and limited resolution have long hindered its wide application. In recent years, deep learning, a branch of machine learning specialized in extracting features from data, has been widely used in optical imaging. Beyond improving imaging efficiency, its potential for solving imaging inverse problems has been continuously explored, opening up a new path for optical imaging. Starting from the working principle of deep learning as applied to DHM, this paper introduces the ideas and key mathematical concepts it uses to solve inverse problems in optical imaging and summarizes the complete implementation workflow of deep learning. Recent research progress of deep learning in holographic reconstruction, autofocusing and phase recovery, and holographic denoising and super-resolution is then briefly summarized. Finally, the existing problems in this field are summarized and future research trends are discussed.

In recent years, fluorescence microscopy has been widely applied in scientific research fields such as biophysics, neuroscience, cell biology, and molecular biology, owing to its specificity, high contrast, and high signal-to-noise ratio. However, traditional fluorescence microscopes are limited in spatial resolution, imaging speed, and field of view and suffer from phototoxicity and photobleaching; these limitations compromise their applications in subcellular observation, in vivo imaging, and molecular structure profiling. To mitigate these limitations, researchers have adopted data-driven deep learning methods, which can enrich existing fluorescence microscopy technologies and push the performance limits of traditional fluorescence microscopy. This article focuses on the technologies and applications of deep learning-based fluorescence microscopy. First, we briefly summarize the basic principles and development of deep learning; then, we introduce the latest domestic and international progress in deep learning-based fluorescence microscopy. By comparison with traditional microscopic imaging systems, we show the advantages of deep learning in solving fluorescence microscopy problems. Finally, the future potential of deep learning-based microscopy is highlighted.

The point spread function (PSF) is a key parameter for evaluating the performance of microscopes. In conventional microscopes, the closer the PSF is to the ideal Airy disk, the better the imaging performance of the system. With the development of computational microscopy, microscope performance has been greatly improved in many respects. In particular, actively engineering the PSF of a microscope can significantly improve its imaging resolution, speed, and other performance metrics. In this review, research progress in computational microscopy based on PSF engineering is summarized in terms of principles and methods. The remaining problems and challenges are also analyzed, and future prospects are outlined.
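For reference, the ideal Airy disk is the diffraction-limited PSF of an aberration-free system with a uniform circular pupil. Under the usual scalar, paraxial assumptions, its intensity as a function of the lateral distance $r$ from the focus is

$$
I(r) = I_0\left[\frac{2J_1(v)}{v}\right]^2, \qquad v = \frac{2\pi\,\mathrm{NA}\,r}{\lambda},
$$

where $J_1$ is the first-order Bessel function of the first kind, $\mathrm{NA}$ the numerical aperture, and $\lambda$ the wavelength. The first zero, at $v \approx 3.832$, gives the Airy disk radius $r_0 \approx 0.61\,\lambda/\mathrm{NA}$. PSF engineering deliberately departs from this shape.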

With the rise of micro-/nano-photonics, Fourier microscopy has become an important technique for characterizing micro-/nano-structured samples. However, traditional Fourier microscopy, also known as back-focal-plane Fourier microscopy, can obtain only the Fourier spectral distribution, not the spatial structure of the sample corresponding to that spectrum, which makes it inconvenient for characterizing micro-/nano-structured samples. We have therefore developed computational Fourier microscopy based on the computational imaging paradigm. It obtains not only the Fourier spectral distribution but also a series of spatial structure images corresponding to different Fourier spectral components, as well as a three-dimensional spatial structure image of the sample. This helps characterize the sample comprehensively and broadens the application range of Fourier microscopy. This paper introduces the basic concepts, principles, research progress, and application prospects of computational Fourier microscopy.

Recently, photon counting imaging has become a research frontier in LiDAR technology owing to its high sensitivity, high time resolution, and high photon efficiency. Efficient image reconstruction algorithms can improve reconstructed image quality at lower cost on a given hardware system, or overcome the bottleneck of relying solely on hardware, and have therefore become a hot topic in photon counting imaging. Focusing on existing photon counting imaging algorithms, this study systematically reviews the principles and characteristics of photon counting imaging, investigates and discusses several common issues in depth, and looks ahead to future development trends in this field.
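As a baseline for the reconstruction algorithms discussed, the per-pixel processing of a time-correlated photon counting LiDAR can be sketched in three steps. First, histogram the photon arrival times. Second, locate the signal peak above the background. Third, convert the time of flight $t$ into range $d = ct/2$. This minimal Python sketch uses a simple peak-plus-centroid estimator with a median background estimate. The bin width, window size, and function names are illustrative assumptions. The statistical and regularized algorithms reviewed here go well beyond this estimator, particularly at low photon counts.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def build_histogram(timestamps_s, bin_width_s, n_bins):
    """Time-correlated counting: accumulate photon arrival times into fixed-width bins."""
    hist = [0] * n_bins
    for t in timestamps_s:
        k = int(t // bin_width_s)
        if 0 <= k < n_bins:
            hist[k] += 1
    return hist


def estimate_range(hist, bin_width_s, half_window=2):
    """Range from the background-subtracted centroid around the histogram peak."""
    background = sorted(hist)[len(hist) // 2]  # median count per bin
    peak = max(range(len(hist)), key=hist.__getitem__)
    lo, hi = max(0, peak - half_window), min(len(hist), peak + half_window + 1)
    weights = [max(hist[k] - background, 0) for k in range(lo, hi)]
    if sum(weights) == 0:
        return None  # no signal above background
    centroid_bin = sum(w * (lo + i + 0.5) for i, w in enumerate(weights)) / sum(weights)
    return C * centroid_bin * bin_width_s / 2.0  # d = c * t / 2


if __name__ == "__main__":
    bw, n = 100e-12, 1000  # 100 ps bins, 100 ns gate
    signal = [2 * 10.0 / C] * 50  # 50 returns from a target at 10 m
    noise = [(k + 0.5) * bw for k in range(n)]  # one background count per bin
    print(estimate_range(build_histogram(signal + noise, bw, n), bw))
```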

Computational imaging is an imaging technology that relies not only on physical optical devices but also on optical modulation and reconstruction algorithms. It provides new ideas for breaking through the limitations of traditional imaging systems in temporal and spatial resolution and detection sensitivity. Computational ghost imaging (CGI) is one of the fastest-growing branches of computational imaging and in recent years has been widely used in single-pixel imaging, super-resolution imaging, biomedicine, information encryption, lidar, and information transmission through turbulence. In this paper, we summarize progress in the construction of illumination patterns and in image reconstruction algorithms, the two key technologies that determine the quality of CGI. We mainly introduce the construction of random matrices, ordered Hadamard matrices, and orthogonal transformation matrices, and discuss the imaging performance of these illumination patterns under traditional correlation reconstruction algorithms and novel deep learning reconstruction algorithms. Finally, the construction methods of illumination patterns and reconstruction algorithms are summarized, and the development prospects of CGI are discussed.
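The correlation reconstruction used to evaluate illumination patterns can be sketched with ordered (Sylvester) Hadamard patterns and a single-pixel bucket detector. This minimal Python sketch uses ±1 patterns, which in practice are usually realized as the difference of complementary 0/1 pattern pairs. The object, pattern size, and function names are illustrative assumptions. With a complete Hadamard set, $\langle B\,I_j\rangle$ already equals pixel $j$ exactly. A flag provides the mean-subtracted form $\langle B I_j\rangle - \langle B\rangle\langle I_j\rangle$ used with random patterns.

```python
def sylvester_hadamard(n):
    """n x n Hadamard matrix (n a power of two): H_2n = [[H, H], [H, -H]]."""
    h = [[1]]
    while len(h) < n:
        h = [row + row for row in h] + [row + [-x for x in row] for row in h]
    return h


def bucket_signals(patterns, obj):
    """Single-pixel detector: total light transmitted by the object under each pattern."""
    return [sum(p * o for p, o in zip(pattern, obj)) for pattern in patterns]


def correlation_reconstruct(patterns, buckets, subtract_mean=False):
    """G_j = <B I_j>, or <B I_j> - <B><I_j> when subtract_mean is True."""
    m, n = len(patterns), len(patterns[0])
    b_mean = sum(buckets) / m
    image = []
    for j in range(n):
        column = [pattern[j] for pattern in patterns]
        g = sum(b * c for b, c in zip(buckets, column)) / m
        if subtract_mean:
            g -= b_mean * sum(column) / m
        image.append(g)
    return image


if __name__ == "__main__":
    obj = [0.4, 1.0, 0.5, 0.0, 0.2, 0.9, 0.0, 0.3]  # 8-pixel test scene
    H = sylvester_hadamard(8)
    print(correlation_reconstruct(H, bucket_signals(H, obj)))
```

For Hadamard patterns the mean term should be dropped. The first pattern is uniform, so subtracting the mean zeroes out pixel 0. With random speckle patterns, by contrast, the subtraction is needed to remove the large background term.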

Light field imaging and processing is one of the most promising directions in computational photography. Constructing concise and efficient light field representations is the theoretical basis and the key to promoting light field applications. Based on the depth, texture, geometry, scale, and spectrum of light fields, this paper analyzes the characteristics of typical light field representations, including sub-aperture images, epipolar plane images, focal stacks, super-pixels, multiplane images, hypergraphs, Fourier slices, Fourier disparity layers, and epipolar focus spectra. This paper also summarizes the impact of the different representations on light field applications such as reconstruction, depth estimation, and editing. Finally, the advantages, disadvantages, and potential applications of the various representations are summarized.
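Two of the representations listed, the sub-aperture image and the epipolar plane image (EPI), are simple slices of the 4D light field. The Python sketch below assumes a two-plane parameterization stored as nested lists `lf[u][v][s][t]`, with angular indices `u, v` and spatial indices `s, t`. The indexing convention and the function names are assumptions. A scene point with disparity $d$ traces a straight line of slope $d$ in the EPI, which is why EPIs are widely used for depth estimation.

```python
def sub_aperture_image(lf, u, v):
    """Fix the angular coordinates (u, v): an ordinary S x T view of the scene."""
    return [row[:] for row in lf[u][v]]


def horizontal_epi(lf, v, t):
    """Fix v and t: a U x S slice in which each row comes from one horizontal viewpoint."""
    return [[lf[u][v][s][t] for s in range(len(lf[u][v]))] for u in range(len(lf))]


def point_light_field(n_u, n_s, s0, disparity):
    """Synthetic light field (one v, one t) of a single point with the given disparity."""
    uc = (n_u - 1) // 2
    return [[[[1.0 if s == s0 + disparity * (u - uc) else 0.0] for s in range(n_s)]]
            for u in range(n_u)]


if __name__ == "__main__":
    lf = point_light_field(n_u=5, n_s=16, s0=8, disparity=2)
    epi = horizontal_epi(lf, v=0, t=0)
    print([row.index(1.0) for row in epi])  # point position in each view
```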

A camera mimics the biological vision system to acquire natural scenes. Humans and most other animals are equipped with binocular stereo or multi-eye vision systems for high-fidelity scene acquisition, which motivates the development of multi-camera systems to enhance imaging capacity. Multi-dimensional, multi-scale image and video acquisition can be realized through the heterogeneous sensors and shooting conditions of different cameras. Cross-camera matching then enables computational multiscale feature fusion, producing a final reconstruction enhanced in scale or dimensionality. In this article, we illustrate multi-camera systems with applications in wide-field ultrahigh-definition imaging, high spatiotemporal resolution video acquisition, and high-dynamic-range and low-light imaging enhancement. These examples demonstrate their advantages in improving imaging capacity, e.g., increasing spatiotemporal resolution, expanding the field of view, and extending the dynamic range.

With the development of stereo imaging technology, stereo image super-resolution has attracted increasing attention in recent years. Unlike single-image super-resolution, stereo image super-resolution can further improve reconstruction quality by exploiting the complementary information between the left and right views. In this paper, we present a comprehensive survey of recent advances in stereo image super-resolution. First, we introduce the fundamental theory of stereo imaging. Then, we review existing stereo image super-resolution algorithms and stereo image datasets. Afterwards, we comprehensively evaluate several mainstream deep learning-based stereo image super-resolution algorithms on benchmark datasets and investigate the influence of different training sets on their performance. Finally, we summarize the challenges and discuss future research directions in stereo image super-resolution.
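The basic stereo geometry behind this complementary information can be sketched for a rectified pinhole camera pair. A scene point at column $x$ in the left view appears at $x - d$ in the right view, and its depth is $Z = fB/d$ for focal length $f$ (in pixels) and baseline $B$. The block-matching search below uses a sum-of-absolute-differences (SAD) cost. It is a classical illustration under these assumptions, not one of the deep-learning methods surveyed, and all function names and numbers are hypothetical.

```python
def match_disparity(left_row, right_row, x, max_disp, half_win=2):
    """Disparity d minimizing the SAD between left[x+k] and right[x-d+k], |k| <= half_win."""
    best_d, best_cost = None, float("inf")
    for d in range(max_disp + 1):
        if x - d - half_win < 0 or x + half_win >= len(left_row):
            break  # window would leave the image
        cost = sum(abs(left_row[x + k] - right_row[x - d + k])
                   for k in range(-half_win, half_win + 1))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Triangulated depth Z = f * B / d for a rectified stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    left = [(7 * i) % 11 for i in range(40)]  # textured test scanline
    right = left[3:] + [0, 0, 0]              # same scene shifted by 3 pixels
    d = match_disparity(left, right, x=20, max_disp=8)
    print(d, depth_from_disparity(d, focal_px=1200.0, baseline_m=0.1))
```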

Polarization properties have attracted much attention in photoelectric detection because they can be used to retrieve the material characteristics and the three-dimensional surface morphology of objects. In particular, when the polarization of diffusely reflected light is used to recover three-dimensional morphology, the zenith angle of the surface normal corresponds one-to-one to the degree of polarization, which makes the approach widely applicable in complex lighting scenes. In this paper, we systematically analyze the principle of diffuse-reflection polarization three-dimensional imaging in terms of the types of reflected light waves and their polarization characteristic models. We also describe in detail the current research progress, the existing technical foundation, and the future development directions of this technology.
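Under the commonly used diffuse-reflection model for a dielectric surface with refractive index $n$, the degree of linear polarization $\rho$ grows monotonically with the zenith angle $\theta$ of the surface normal:

$$
\rho = \frac{(n - 1/n)^2 \sin^2\theta}{2 + 2n^2 - (n + 1/n)^2\sin^2\theta + 4\cos\theta\sqrt{n^2 - \sin^2\theta}}.
$$

Because this mapping is one-to-one on $[0, \pi/2]$, $\theta$ can be recovered numerically from a measured $\rho$. The Python sketch below computes $\rho$ from four polarizer-angle images (0°, 45°, 90°, 135°) and inverts the model by bisection. The refractive index $n = 1.5$ is an assumed default, the azimuth ambiguity of the surface normal is not treated, and the function names are hypothetical.

```python
import math


def dolp_from_four(i0, i45, i90, i135):
    """Degree of linear polarization from intensities behind a polarizer at 0/45/90/135 deg."""
    s0 = 0.5 * (i0 + i45 + i90 + i135)
    s1 = i0 - i90
    s2 = i45 - i135
    return math.hypot(s1, s2) / s0


def diffuse_dop(theta, n=1.5):
    """Diffuse-reflection degree of polarization as a function of the zenith angle."""
    s2 = math.sin(theta) ** 2
    num = (n - 1.0 / n) ** 2 * s2
    den = (2.0 + 2.0 * n * n - (n + 1.0 / n) ** 2 * s2
           + 4.0 * math.cos(theta) * math.sqrt(n * n - s2))
    return num / den


def zenith_from_dop(rho, n=1.5, tol=1e-10):
    """Invert the monotonic diffuse model by bisection on [0, pi/2]."""
    lo, hi = 0.0, math.pi / 2
    if not 0.0 <= rho <= diffuse_dop(hi, n):
        raise ValueError("degree of polarization outside the diffuse-model range")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if diffuse_dop(mid, n) < rho:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    frames = [1.1, 1.0, 0.9, 1.0]  # hypothetical I0, I45, I90, I135
    rho = dolp_from_four(*frames)
    print(rho, math.degrees(zenith_from_dop(rho)))
```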

Angle measurement based on centroid positioning in traditional optical imaging systems generally has an accuracy limit of about 1/100 of a pixel. Improving angle measurement accuracy therefore generally requires cameras with a longer focal length and a larger detector array. However, this increases size, weight, and power consumption. That hinders equipment miniaturization and use on platforms with limited size, weight, and power, such as satellites and aircraft. The high-accuracy angle measurement technique for long-distance targets based on computational interferometry uses optical interference to convert the angular change of the target light into a phase change of the interference fringes. Because mature interpolation methods can resolve phase changes down to about 1/1000 of a fringe period, interferometry greatly improves angle accuracy compared with traditional optical methods. This paper mainly introduces the basic principle, main characteristics, research progress, and existing difficulties of the high-accuracy angle measurement technique for long-distance targets based on computational interferometry. We hope that this paper will stimulate the research interest of fellow researchers and promote the rapid development and engineering application of this technique.
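The accuracy argument can be made concrete with an assumed two-aperture (Young-type) interferometer with baseline $B$. The fringe phase is $\phi = 2\pi B\sin\theta/\lambda$. A phase resolution of a fraction $\epsilon$ of a period therefore gives an angle resolution $\delta\theta = \epsilon\lambda/(B\cos\theta)$. By comparison, centroiding to a fraction $\eta$ of a pixel of pitch $p$ behind a lens of focal length $f$ gives $\delta\theta = \eta p/f$. The numbers in the Python sketch below (5 µm pixels, 0.5 m focal length, 1 µm wavelength, 0.1 m baseline) are hypothetical and only illustrate the scaling. The reviewed systems may use different interferometer geometries.

```python
import math


def centroid_angle_resolution(pixel_m, focal_m, frac_pixel=0.01):
    """Centroid positioning: angle step = (fraction of a pixel) * pixel pitch / focal length."""
    return frac_pixel * pixel_m / focal_m


def interferometric_angle_resolution(wavelength_m, baseline_m, frac_period=0.001, theta=0.0):
    """From phi = 2*pi*B*sin(theta)/lambda: d(theta) = frac_period * lambda / (B * cos(theta))."""
    return frac_period * wavelength_m / (baseline_m * math.cos(theta))


def angle_from_phase(phase_rad, wavelength_m, baseline_m):
    """Invert an unwrapped fringe phase to the target angle."""
    return math.asin(phase_rad * wavelength_m / (2.0 * math.pi * baseline_m))


if __name__ == "__main__":
    print(centroid_angle_resolution(5e-6, 0.5))        # about 1e-7 rad
    print(interferometric_angle_resolution(1e-6, 0.1))  # about 1e-8 rad
```

With these assumed values the interferometric estimate is about ten times finer. Note that `angle_from_phase` expects a phase that has already been unwrapped.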

Synthetic aperture ladar is an important means of realizing computational imaging. This paper first introduces a multi-channel inverse synthetic aperture ladar (ISAL) prototype, the imaging detection experiments, and the signal processing method. Then, the composition of the prototype system and its key technical solutions are described. Moreover, experimental results of imaging detection of moving ground vehicles are presented. They were obtained with a coherent ladar prototype based on a one-transmit, multiple-receive pulsed system and an all-fiber optical path. Finally, under wide field-of-view conditions achieved by expanding the transmitting and receiving beams, the high-resolution imaging capability of multi-channel ISAL and the effectiveness of the along-track interferometry motion compensation imaging method are verified.

To make a reconstructed three-dimensional surface realistic, the color texture of the object is mapped onto it. To obtain texture data, an additional color camera is added to a binocular-camera three-dimensional measurement setup. The subsequent mapping requires the intrinsic and extrinsic parameters of the binocular system and the texture camera; that is, the system composed of the binocular cameras and the texture camera must be stereo-calibrated. When the texture camera is moved to capture texture information from different angles, it must be recalibrated. To address this problem, a method based on added marker points is proposed: after a single initial calibration of the system, the texture camera can be used freely, and texture mapping can be completed from images taken at any position. Experiments verify the feasibility of the method. It avoids frequent recalibration of the texture camera and provides a convenient, easily implemented approach to texture mapping of point cloud data.
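The mapping step itself can be sketched as a pinhole projection $x \sim K(RX + t)$ of each 3D point into the texture image, followed by a colour lookup. In the Python sketch below, the rotation $R$ and translation $t$ are assumed to express the texture camera pose relative to the binocular reference frame. With the marker-point method they would be re-estimated for each shot; that step is not shown. Lens distortion and occlusion are ignored, and all names are hypothetical.

```python
def project_point(point, K, R, t):
    """Pinhole projection x ~ K (R X + t) of a 3D point into the texture camera."""
    xc = [sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3)]
    if xc[2] <= 0:
        raise ValueError("point lies behind the texture camera")
    x, y = xc[0] / xc[2], xc[1] / xc[2]
    return K[0][0] * x + K[0][1] * y + K[0][2], K[1][1] * y + K[1][2]


def sample_color(image, u, v):
    """Nearest-neighbour lookup; image[row][col] holds an (r, g, b) tuple."""
    col, row = int(round(u)), int(round(v))
    if 0 <= row < len(image) and 0 <= col < len(image[0]):
        return image[row][col]
    return None  # point falls outside the texture image


def texture_map(points, image, K, R, t):
    """Attach a colour to every point of the cloud (no occlusion handling)."""
    return [sample_color(image, *project_point(p, K, R, t)) for p in points]


if __name__ == "__main__":
    K = [[100.0, 0.0, 2.0], [0.0, 100.0, 2.0], [0.0, 0.0, 1.0]]
    R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    t = [0.0, 0.0, 0.0]
    image = [[(r, c, 0) for c in range(5)] for r in range(5)]
    print(texture_map([(0.0, 0.0, 1.0), (0.01, -0.01, 1.0)], image, K, R, t))
```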

In holographic reconstruction, the second-order term and the twin-image noise degrade the accuracy and quality of the reconstructed image. The twin image is produced by the phase-conjugate wave, which arises from the conjugate symmetry of the complex field. Obtaining the focus distance, which identifies the best focal plane of the object, is also key to holographic imaging. In this work, a numerical focusing imaging algorithm based on compressive sensing is proposed. A stack of images is reconstructed along the axial direction at a fixed interval, and each image is processed with total variation regularization. These images are used to construct the focus curve of the digital hologram with focus metrics such as the Tamura coefficient and the absolute gradient operator, from which the best focal plane is determined. The proposed method suppresses noise in in-line digital holographic reconstruction, improves the axial accuracy of reconstruction, and achieves high-resolution imaging over a larger range around the focal plane.
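The focus-curve step can be sketched as follows. Each reconstructed amplitude image in the axial stack is scored with a focus metric, here either the Tamura coefficient $T = \sqrt{\sigma/\langle I\rangle}$ or the sum of absolute gradients. The distance with the extreme score is taken as the best focal plane. This Python sketch assumes the images are already reconstructed; the TV-regularized reconstruction and the propagation are not shown. It also assumes that the best focus maximizes the metric. That typically holds for amplitude-contrast objects but may become a minimum for pure phase objects. The function names are hypothetical.

```python
import math


def tamura_coefficient(img):
    """Tamura coefficient sqrt(std / mean) of an amplitude image."""
    vals = [v for row in img for v in row]
    mean = sum(vals) / len(vals)
    std = math.sqrt(sum((v - mean) ** 2 for v in vals) / len(vals))
    return math.sqrt(std / mean)


def absolute_gradient(img):
    """Sum of absolute differences between horizontally and vertically adjacent pixels."""
    rows, cols = len(img), len(img[0])
    total = 0.0
    for r in range(rows):
        for c in range(cols):
            if c + 1 < cols:
                total += abs(img[r][c + 1] - img[r][c])
            if r + 1 < rows:
                total += abs(img[r + 1][c] - img[r][c])
    return total


def best_focus(stack, distances, metric=absolute_gradient):
    """Focus curve over the axial stack and the distance where the metric peaks."""
    curve = [metric(img) for img in stack]
    k = max(range(len(curve)), key=curve.__getitem__)
    return distances[k], curve


if __name__ == "__main__":
    # Stripe images whose contrast stands in for defocus blur (hypothetical data).
    stack = [[[0.5 + 0.5 * c * (-1) ** col for col in range(8)] for _ in range(4)]
             for c in (0.2, 1.0, 0.5)]
    print(best_focus(stack, [10.0, 11.0, 12.0])[0])
```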

Fourier ptychography (FP) can reconstruct the amplitude and phase distributions of objects with a wide field of view and high resolution. With the continuous development of deep learning, neural networks have become one of the important tools for solving nonlinear inverse problems in computational imaging. FP systems are characterized by strong data specificity and small data volumes. To suit these characteristics, this paper proposes an algorithm that combines computational imaging prior knowledge with deep learning to build a physics-model-based neural network framework, and verifies it on simulated samples. Furthermore, a far-field transmission system is constructed to verify FP reconstruction from image sequences of macroscopic objects. Experimental results show that the system can reconstruct high-resolution complex amplitude distributions of samples from limited simulated and real datasets, with high robustness to optical aberrations and background noise.
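A physics-model-based network typically embeds the FP forward model, which maps a complex object to the low-resolution intensity images recorded under each LED. Such a model is typically used to compare predicted and measured intensities during training. The Python sketch below is a one-dimensional version of that forward model. It assumes a thin object, an ideal binary pupil, circular spectrum shifts, and no camera downsampling. These are our simplifications, not details from the paper, and the function names are hypothetical.

```python
import cmath
import math


def dft(x, inverse=False):
    """Direct O(N^2) discrete Fourier transform (the inverse includes the 1/N factor)."""
    n = len(x)
    sign = 1.0 if inverse else -1.0
    out = [sum(x[k] * cmath.exp(sign * 2j * math.pi * k * m / n) for k in range(n))
           for m in range(n)]
    return [v / n for v in out] if inverse else out


def fp_measurement(obj, shift, pupil_halfwidth):
    """One FP low-resolution intensity: |IDFT(P(k) * O(k + shift))|^2.

    The tilted LED illumination selects the object spectrum band centred at index `shift`;
    the binary pupil P keeps |k| <= pupil_halfwidth. No camera downsampling is modelled.
    """
    n = len(obj)
    spectrum = dft(obj)
    band = [0j] * n
    for k in range(n):
        signed_k = k if k <= n // 2 else k - n
        if abs(signed_k) <= pupil_halfwidth:
            band[k] = spectrum[(k + shift) % n]
    return [abs(v) ** 2 for v in dft(band, inverse=True)]


if __name__ == "__main__":
    obj = [(1.0 + 0.1 * k) * cmath.exp(0.3j * k) for k in range(8)]  # hypothetical object
    for shift in (-2, 0, 2):  # three LED positions
        print(shift, [round(v, 4) for v in fp_measurement(obj, shift, pupil_halfwidth=1)])
```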

Free-space optical communication based on orbital angular momentum (OAM) is considered a promising next-generation communication technology. To realize OAM multiplexing communication, one of the key technologies is detecting the OAM of vortex beams at the receiving end. A theoretical scheme for accurately identifying the OAM of dual-mode vortex beams using a convolutional neural network is studied. Such beams provide two controllable degrees of freedom, namely the topological charge l and the proportional coefficient n. The relationship between model accuracy and the resolution or number of training samples is presented. The recognition accuracy is closely related to the OAM quantum number l and the proportional coefficient n. The results show that when l ranges from 1 to 10 and n varies from 0.01 to 0.99, the OAM recognition accuracy is 100%.

Scattering imaging is a frontier and hotspot in optical computational imaging. Speckle autocorrelation imaging has attracted increasing attention because it is efficient, fast, and noninvasive. Its physical basis is the optical memory effect, and its mathematical basis is the incoherent speckle autocorrelation imaging model. Reconstruction quality depends directly on extracting a high-quality speckle autocorrelation and on effectively suppressing the background. Optical transmission noise, strong external background interference, and detection noise seriously reduce the contrast of the speckle autocorrelation. Its fine structure is then submerged in background and interference noise, which degrades reconstruction quality or even causes reconstruction to fail. To broaden the applicability of speckle autocorrelation imaging and improve reconstruction quality under strong background noise, we propose a strategy that combines background subtraction and bilateral filtering pretreatment with multi-frame stacking averaging. Field experiments in a natural environment were carried out, and the results verify that the proposed method achieves high-quality reconstruction under strong background disturbance. The effectiveness of the method is further verified in experiments with targets at different distances and with illumination of different spectral bandwidths, and the reconstruction quality is significantly improved. This study provides a reference for non-darkroom applications of scattering imaging and for related imaging applications under weak-light conditions.
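A one-dimensional Python sketch of such a preprocessing chain follows. It subtracts a background estimate from each speckle frame, smooths the result with an edge-preserving bilateral filter, computes the mean-removed circular autocorrelation, and averages the autocorrelations over frames. The order of operations, the filter parameters, and the use of a separately recorded background frame are our assumptions, since the abstract does not specify them. Real implementations work on 2D images, use FFT-based autocorrelation, and follow this step with phase retrieval to recover the object. The function names are hypothetical.

```python
import math


def bilateral_1d(signal, radius=2, sigma_s=1.0, sigma_r=0.1):
    """Edge-preserving smoothing: weights fall off with both distance and intensity difference."""
    n, out = len(signal), []
    for i in range(n):
        w_sum = v_sum = 0.0
        for k in range(max(0, i - radius), min(n, i + radius + 1)):
            w = math.exp(-((k - i) ** 2) / (2.0 * sigma_s ** 2)
                         - ((signal[k] - signal[i]) ** 2) / (2.0 * sigma_r ** 2))
            w_sum += w
            v_sum += w * signal[k]
        out.append(v_sum / w_sum)
    return out


def autocorrelation(signal):
    """Mean-removed circular autocorrelation R(d) = sum_i x_i * x_{i+d}."""
    n = len(signal)
    mean = sum(signal) / n
    x = [v - mean for v in signal]
    return [sum(x[i] * x[(i + d) % n] for i in range(n)) for d in range(n)]


def averaged_autocorrelation(frames, background, **bilateral_kw):
    """Background subtraction -> bilateral filter -> autocorrelation, averaged over frames."""
    acc = [0.0] * len(background)
    for frame in frames:
        clean = bilateral_1d([f - b for f, b in zip(frame, background)], **bilateral_kw)
        for d, value in enumerate(autocorrelation(clean)):
            acc[d] += value / len(frames)
    return acc


if __name__ == "__main__":
    bg = [0.5 + 0.1 * (k % 3) for k in range(32)]  # hypothetical background frame
    speckle = [[((7 * k + f) % 5) * 0.2 for k in range(32)] for f in range(3)]
    frames = [[b + s for b, s in zip(bg, sp)] for sp in speckle]
    print([round(v, 3) for v in averaged_autocorrelation(frames, bg)[:5]])
```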

Compared with conventional microscopy, Mueller matrix polarization microscopy can provide more information about the microstructural characteristics of a medium. This paper presents the design of a microscopy imaging system based on dual rotating waveplates, which achieves fast Mueller matrix computational imaging by selecting special uncorrelated incident polarization states. The system is then used to image onion inner epidermal cells immersed in glucose solutions of different mass fractions and human laryngeal carcinoma cells in different active states. Experimental results show that the measurement error of the system is less than 4.35%. Compared with traditional intensity imaging, which characterizes the surface structure of the sample, polarization imaging detects changes in the sample more effectively, thereby providing a new research method for the biomedical field.
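Whatever states the rotating waveplates generate, the Mueller matrix follows from linear algebra once two sets of vectors are known: four linearly independent input Stokes vectors and the corresponding output Stokes vectors. Arranged as the columns of $S_{\mathrm{in}}$ and $S_{\mathrm{out}}$, they give $M = S_{\mathrm{out}}S_{\mathrm{in}}^{-1}$. The Python sketch below assumes ideal, noise-free Stokes measurements. It uses horizontal, vertical, +45° and circular inputs purely for illustration; these are not the states selected in the paper. The function names are hypothetical.

```python
def mat_mul(a, b):
    """Plain matrix product of nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def mat_inv(a):
    """Gauss-Jordan inversion with partial pivoting."""
    n = len(a)
    aug = [list(map(float, row)) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[piv][col]) < 1e-12:
            raise ValueError("input polarization states are not linearly independent")
        aug[col], aug[piv] = aug[piv], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def mueller_from_measurements(s_in_list, s_out_list):
    """M = S_out * inv(S_in), where each Stokes vector becomes one column."""
    s_in = [list(r) for r in zip(*s_in_list)]
    s_out = [list(r) for r in zip(*s_out_list)]
    return mat_mul(s_out, mat_inv(s_in))


if __name__ == "__main__":
    inputs = [(1, 1, 0, 0), (1, -1, 0, 0), (1, 0, 1, 0), (1, 0, 0, 1)]
    m_true = [[0.5, 0.5, 0, 0], [0.5, 0.5, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]  # polarizer
    outputs = [[sum(m_true[i][j] * s[j] for j in range(4)) for i in range(4)] for s in inputs]
    print(mueller_from_measurements(inputs, outputs))
```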

Fingerprints are widely used in criminal investigation and identification because of their uniqueness and lifelong invariance. Because traditional fingerprint extraction methods destroy the original fingerprint, forensic cameras have recently been commonly used to photograph fingerprints instead. A clear image of a fingerprint on a curved surface cannot be captured in a single shot, so this study introduces computational light-field imaging to extract fingerprints from curved objects. First, a fingerprint light-field camera is designed for the fingerprint-shooting scenario, and a digital refocusing algorithm is used to obtain a fingerprint image stack. Then, an image fusion algorithm based on image sharpness evaluation produces a clear fingerprint image of the curved surface. Experimental results show that the designed fingerprint light-field camera and processing algorithm can effectively extract fingerprints from the surfaces of curved objects.
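The two processing steps, digital refocusing and sharpness-based fusion, can be sketched on a one-dimensional light field `lf[u][s]` with angular index `u` and spatial index `s`. Shift-and-add refocusing averages the views after shifting each by $\alpha(u - u_c)$ pixels. The fusion step keeps, for every pixel, the value from the refocused image that is sharpest there. This Python sketch uses integer shifts and a three-pixel Laplacian as the sharpness measure. These choices and all names are our assumptions; practical fusion uses windowed sharpness measures on 2D images.

```python
def refocus(lf, alpha):
    """Shift-and-add refocusing of a 1-D light field lf[u][s] with integer shifts."""
    n_u, n_s = len(lf), len(lf[0])
    uc = (n_u - 1) / 2.0
    image = []
    for s in range(n_s):
        vals = [lf[u][s + int(round(alpha * (u - uc)))] for u in range(n_u)
                if 0 <= s + int(round(alpha * (u - uc))) < n_s]
        image.append(sum(vals) / len(vals) if vals else 0.0)
    return image


def sharpness(image, s):
    """Absolute discrete Laplacian at pixel s (edge pixels replicated)."""
    left, right = image[max(s - 1, 0)], image[min(s + 1, len(image) - 1)]
    return abs(2.0 * image[s] - left - right)


def fuse(stack):
    """All-in-focus result: per pixel, take the refocused image that is sharpest there."""
    return [max(stack, key=lambda img: sharpness(img, s))[s] for s in range(len(stack[0]))]


if __name__ == "__main__":
    # Three views of one point that shifts by one pixel per view (hypothetical data).
    lf = [[1.0 if s == 8 + (u - 1) else 0.0 for s in range(16)] for u in range(3)]
    stack = [refocus(lf, alpha) for alpha in (0.0, 1.0)]
    print(fuse(stack))
```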

High-resolution, large field-of-view imaging allows aerospace remote sensing to perform finer perception over a wider range. Based on the basic principles of computational imaging, this paper proposes a suboptimal computational imaging design method for a large field of view. The imaging process is divided into two components: hardware imaging and software restoration. Combining software and hardware design fully exploits the advantages of both, reducing the difficulty of hardware design and improving the overall imaging performance of the system. For the hardware, a suboptimal optical design method is proposed that seeks a consistent, suboptimal point spread function over a larger field of view rather than spending the design degrees of freedom on a small field of view. Combined with image restoration, this enlarges the imaging field of view attainable with limited design degrees of freedom. An off-axis three-mirror optical system designed with the suboptimal method reaches a design field of view of 5°, more than twice that of the conventional design method. Combined with a deep learning-based nonlinear image restoration method, the structural similarity (SSIM) exceeds 85% for similar targets and 80% for targets of different types. This effectively realizes the design of a high-resolution, large field-of-view imaging system and provides a new method for wide-area fine observation in aerospace remote sensing.
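For reference, SSIM between a restored image $x$ and a reference $y$ combines luminance, contrast, and structure terms:

$$
\mathrm{SSIM}(x,y)=\frac{(2\mu_x\mu_y + C_1)(2\sigma_{xy}+C_2)}{(\mu_x^2+\mu_y^2+C_1)(\sigma_x^2+\sigma_y^2+C_2)},\qquad C_1=(0.01L)^2,\; C_2=(0.03L)^2,
$$

where $L$ is the dynamic range of the pixel values. The Python sketch below evaluates this expression once over the whole image. The standard definition averages it over local, typically Gaussian-weighted, windows. The paper does not state which variant it used, so this single-window form is an assumption, and the function name is hypothetical.

```python
def global_ssim(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """Single-window SSIM between two flattened images x and y."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx * mx + my * my + c1) * (vx + vy + c2))


if __name__ == "__main__":
    reference = [0.1, 0.4, 0.8, 0.3, 0.9, 0.2]  # hypothetical flattened image
    restored = [0.12, 0.38, 0.75, 0.33, 0.85, 0.22]
    print(global_ssim(reference, restored))
```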

Distributed optoelectronic systems resolve the conflict between a large field of view and high resolution and are widely used in military reconnaissance, security monitoring, astronomical observation, and other fields. A distributed optoelectronic system improves image quality through image stitching and super-resolution reconstruction, thereby enhancing its detection and recognition capability. However, systematic evaluation methods for distributed optoelectronic systems are still lacking. This article establishes a quantitative evaluation method for such systems. First, we study the theory of distributed optoelectronic systems and their image processing algorithms. Then, image quality is evaluated by analyzing spatial resolution, signal-to-noise ratio, information entropy, and other parameters. Finally, the detection and recognition capability is analyzed theoretically from the perspectives of the optoelectronic system and image quality. This paper provides important theoretical support for the development of distributed optoelectronic technology.
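Two of the image-quality parameters mentioned, information entropy and signal-to-noise ratio, can be computed as in the Python sketch below. Entropy is the Shannon entropy $H = -\sum_i p_i\log_2 p_i$ of the grey-level histogram. SNR has several definitions in the literature. The form used here, the target-to-background contrast divided by the standard deviation of a uniform background region, is our assumption and is not necessarily the one adopted in the article. The function names are hypothetical.

```python
import math
from collections import Counter


def information_entropy(pixels):
    """Shannon entropy (bits) of the grey-level histogram of a flattened image."""
    n = len(pixels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(pixels).values())


def snr_db(signal_pixels, background_pixels):
    """SNR = 20*log10((mean_signal - mean_background) / std_background), in dB."""
    mean_s = sum(signal_pixels) / len(signal_pixels)
    mean_b = sum(background_pixels) / len(background_pixels)
    std_b = math.sqrt(sum((v - mean_b) ** 2 for v in background_pixels) / len(background_pixels))
    return 20.0 * math.log10((mean_s - mean_b) / std_b)


if __name__ == "__main__":
    print(information_entropy(list(range(256))))          # uniform 8-bit histogram
    print(snr_db([110.0] * 4, [9.0, 11.0, 9.0, 11.0]))
```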

To enable small-aperture cameras to achieve the same resolving power as large-aperture cameras, a high-resolution imaging technology based on pixel coding is proposed. To reduce image aliasing at high and low frequencies, a pixel coding algorithm with the system point spread function as its computational core is proposed. First, the encoding matrix of the system is constructed directly in the spatial domain. Then, the output image is decoded by an inversion method to enhance image quality. Finally, an imaging system with a focal length of 100 mm and a pixel size of 9 μm is built, and F-numbers of 11 (F11) and 25 (F25) are used for indoor experimental verification. The pixel coding algorithm is used to correct the image of a resolution test chart obtained with the F25 system. The corrected modulation transfer function value (0.18) is comparable to that obtained with the F11 system (0.24), and high image contrast is obtained. The experimental results verify the feasibility and effectiveness of the pixel coding algorithm, allowing small-aperture cameras to achieve high-resolution imaging.
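The encode/decode idea can be sketched in one dimension. The system PSF, sampled on the pixel grid, defines an encoding matrix $A$ with $y = Ax$, and decoding inverts it. The Python sketch below solves the Tikhonov-regularized normal equations $(A^{\mathsf T}A + \lambda I)\,x = A^{\mathsf T}y$ by Gaussian elimination. The regularization, the 1D model, the PSF values, and the function names are assumptions, since the abstract states only that an inversion method is used.

```python
def encoding_matrix(psf, n):
    """A[i][j] = psf[i - j + c]: 1-D blur by a centred, odd-length PSF."""
    c = len(psf) // 2
    return [[psf[i - j + c] if 0 <= i - j + c < len(psf) else 0.0 for j in range(n)]
            for i in range(n)]


def solve(a, b):
    """Gaussian elimination with partial pivoting for a square system a x = b."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= f * m[col][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][k] * x[k] for k in range(i + 1, n))) / m[i][i]
    return x


def decode(a, y, lam=1e-3):
    """Tikhonov-regularized inversion: solve (A^T A + lam I) x = A^T y."""
    n = len(a)
    ata = [[sum(a[k][i] * a[k][j] for k in range(n)) + (lam if i == j else 0.0)
            for j in range(n)] for i in range(n)]
    aty = [sum(a[k][i] * y[k] for k in range(n)) for i in range(n)]
    return solve(ata, aty)


if __name__ == "__main__":
    psf = [0.2, 0.6, 0.2]  # hypothetical sampled PSF
    x = [0.0, 0.0, 1.0, 0.0, 0.5, 0.5, 0.0, 0.8, 0.1, 0.0, 0.0, 0.3, 0.0, 0.9, 0.0, 0.0]
    A = encoding_matrix(psf, len(x))
    y = [sum(a * b for a, b in zip(row, x)) for row in A]  # blurred, encoded image
    print([round(v, 3) for v in decode(A, y, lam=1e-9)])
```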