Objective

With the development of the new generation of mobile communication technology, passive optical networks (PONs) face growing demands for higher bandwidth, higher speed, and lower latency. Wavelength-division multiplexing PON (WDM-PON), which utilizes frequency resources for bandwidth allocation, can assign different channels to different optical network units (ONUs) simultaneously. This eliminates time slot competition among ONUs, reduces system latency, and holds promise for addressing high-latency issues. An embedded communication channel called the auxiliary management and control channel (AMCC) has been proposed and successfully implemented in WDM-PON to enable the transmission of management and control information at a lower cost without altering the frame structure. In recent years, increasing attention has been paid to frequency-division multiplexing coherent PON (FDM-CPON), which also supports bandwidth allocation in the frequency domain. To realize AMCC transmission in FDM-CPON, we put forward two simple and cost-effective mechanisms for transmitting management and control signals: adding the AMCC to the data channel and multiplying the AMCC with the data channel, both at the digital end. We then compare the performance of these two transmission mechanisms in a 200 Gbit/s FDM-CPON system based on 16QAM transmission over 20-km fiber. The results provide a reference for AMCC transmission and system design of future high-speed FDM-CPON.

Methods

To implement the two transmission mechanisms and compare their performance in a 200 Gbit/s FDM-CPON system based on 16QAM transmission over a 20-km fiber, we generate 16QAM and on-off keying (OOK) signals at the digital end for the data channel and the AMCC, respectively.
After mapping the low level in OOK to 1 and the high level to a real number greater than 1, the OOK signal is up-sampled to the same length as the data channel signal. Multiplying the two signals element-wise then combines the multiplication-based AMCC with the data channel. For addition-based AMCC, the low level in OOK is mapped to 0, while the high level is mapped to a complex number with both real and imaginary parts greater than 0. This mapped signal is then added element-wise to the data channel signal. After the AMCC and the data channel are combined, the signal is transmitted over a 20-km fiber and received by an integrated coherent receiver (ICR). At the receiver, the amplitude of the received signal is extracted, and the amplitude variations of the signal are obtained by smoothing filtering. After energy detection and inverse mapping, the decoding of the OOK signal is completed. Simultaneously, the received signal undergoes classical coherent digital signal processing (DSP) for decoding. Additionally, we vary the modulation index (MI) and bandwidth of AMCC at the transmitter to study the performance of the two transmission mechanisms in different conditions.

Results and Discussions

We test the sensitivity curves of data channel signals overlaid with both multiplication-based AMCC and addition-based AMCC under different MIs, as well as the Q curves of OOK signals transmitted by AMCC. Under the same received optical power (ROP) and MI, the influence of multiplication-based AMCC on the sensitivity of the data channel signal is smaller. Simultaneously, the Q value of OOK transmitted by multiplication-based AMCC is greater than that of addition-based AMCC. We also experimentally verify the effect of the MI and bandwidth of AMCC on the sensitivity of the data channel signal and the Q value of AMCC.
Under the same MI and bandwidth, the data channel signal combined with multiplication-based AMCC exhibits better sensitivity and a higher power budget than the data channel signal combined with addition-based AMCC. Meanwhile, a larger MI and bandwidth lead to a greater influence of AMCC on the performance of the data channel signal. When the MI of AMCC is set at 26.1% with a corresponding bandwidth of 24.4 MHz, the impact of multiplication-based AMCC on signal sensitivity is 3 dB smaller than that of addition-based AMCC.

Conclusions

We verify and compare the effects of multiplication-based AMCC and addition-based AMCC on the performance of the data channel signal and the OOK signal transmitted by AMCC in a high-speed FDM-CPON. Experimental results from a 200 Gbit/s FDM-CPON system based on 16QAM transmission over 20-km fiber indicate that multiplication-based AMCC has a smaller influence on the sensitivity and power budget of the data channel signal, with a higher Q value of the AMCC-transmitted signal. When the MI of AMCC is set at 26.1% with a corresponding bandwidth of 24.4 MHz, the impact of multiplication-based AMCC on signal sensitivity is 3 dB smaller than that of addition-based AMCC. Additionally, experiments are conducted to assess the effect of different MIs and bandwidths of AMCC on the sensitivity of the data channel signal, with results consistent with the conclusions drawn from theoretical analysis. The results provide a useful reference for AMCC transmission and system design of future high-speed FDM-CPON.
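The two overlay mechanisms and the energy-detection receiver described in the Methods can be sketched numerically as follows. This is a minimal, noise-free illustration and not the authors' DSP chain: the function names, the gain `1.3`, the offset `1+1j`, the samples-per-bit value, and the use of a per-bit block average in place of the smoothing filter are all assumptions made for the example.

```python
import numpy as np

def qam16(n, rng):
    """Random, unnormalized 16QAM symbols with levels {-3, -1, 1, 3}."""
    levels = np.array([-3.0, -1.0, 1.0, 3.0])
    return rng.choice(levels, n) + 1j * rng.choice(levels, n)

def overlay_amcc(data, ook_bits, sps, mode, high):
    """Combine an OOK AMCC with the data channel (hypothetical helper).

    mode="mul": low -> 1, high -> real number > 1, element-wise product.
    mode="add": low -> 0, high -> complex number with positive real and
                imaginary parts, element-wise sum.
    """
    ook = np.repeat(np.asarray(ook_bits), sps)  # up-sample to data length
    if ook.size != data.size:
        raise ValueError("data length must equal len(ook_bits) * sps")
    if mode == "mul":
        return data * np.where(ook == 1, high, 1.0)
    if mode == "add":
        return data + np.where(ook == 1, high, 0.0)
    raise ValueError(f"unknown mode: {mode}")

def detect_ook(rx, sps):
    """Energy detection: average |rx|^2 over each OOK bit, then threshold.

    The block average stands in for the smoothing filter (assumption).
    """
    per_bit = (np.abs(rx) ** 2).reshape(-1, sps).mean(axis=1)
    threshold = 0.5 * (per_bit.min() + per_bit.max())
    return (per_bit > threshold).astype(int)

rng = np.random.default_rng(0)
bits = np.array([0, 1, 1, 0, 1, 0, 0, 1])
sps = 4096  # data samples per AMCC bit (assumed)
data = qam16(bits.size * sps, rng)

tx_mul = overlay_amcc(data, bits, sps, "mul", 1.3)        # assumed gain
tx_add = overlay_amcc(data, bits, sps, "add", 1.0 + 1.0j)  # assumed offset
bits_mul = detect_ook(tx_mul, sps)
bits_add = detect_ook(tx_add, sps)
```

With these assumed values the multiplicative overlay scales the mean symbol energy by 1.3² = 1.69, whereas the additive overlay only raises it by |1+1j|² = 2 on a mean of 10, so the additive case has a much narrower decision margin for a comparable overlay strength. This toy comparison is only suggestive; the measured Q values and sensitivity penalties are those reported in the text.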

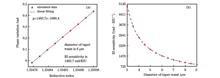

Objective

Optical fiber refractive index (RI) sensors have attracted widespread attention in biochemical sensing and environmental monitoring due to their high precision, high sensitivity, resistance to electromagnetic interference, corrosion resistance, low cost, and easy preparation. Commonly employed optical fiber RI sensors currently include surface plasmon resonance, localized surface plasmon resonance, fiber Bragg gratings, long-period fiber gratings, fiber-optic whispering gallery mode, fiber Fabry-Perot sensors, photonic crystal fibers, D-type fibers, and tapered fibers. However, most optical fiber RI sensors are currently single-point sensors and cannot achieve multi-point detection or distributed sensing. Based on the detection of Rayleigh backscattering spectra (RBS) in optical fiber, optical frequency domain reflectometry (OFDR) features high measurement accuracy, high sensing spatial resolution, and long measurement distance, which makes it very suitable for distributed RI sensing. Distributed RI sensing can not only obtain the RI magnitude of the solution but also locally detect the diffusion process of the solution and test the distribution of fluids. Neither single-point sensors nor quasi-distributed sensors can achieve this.

Methods

Traditional distributed RI sensing based on OFDR adopts a cross-correlation demodulation algorithm, which has good noise suppression ability and stability. However, it is difficult to achieve distributed RI measurements with a micron-level spatial resolution. Therefore, distributed RI sensing based on cross-correlation demodulation is not sufficient for distributed biological analysis, drug design, and other fields. Unlike cross-correlation demodulation methods, OFDR based on differential relative phase demodulation realizes sensing through the relative phase change of RBS.
Since the differential phase demodulation method directly measures the relative phase change caused by external RI changes, this method is more sensitive than traditional cross-correlation demodulation methods. Therefore, the differential relative phase demodulation method is expected to achieve distributed RI sensing with a micron-level spatial resolution.

Results and Discussions

We first theoretically analyze the principle of differential relative phase demodulation and the RI sensitivity characteristics. To characterize the theoretical sensitivity of the differential phase demodulation method and compare it with experimental results, we simulate the relationship between phase variation and external RI change at a taper waist of 6 μm. The simulation results are shown in Fig. 1(a), and the slope of 1483.7 rad/RIU is the theoretical sensitivity. Meanwhile, in Eq. (11), Δf is related to taper waist radius r. Therefore, the relationship between theoretical sensitivity and the diameter of the taper waist can be simulated, with the results shown in Fig. 1(b). In the experiment, the phase variations along distance in the sensing area of the tapered fiber are compared when only average denoising or only wavelet denoising is adopted. This reveals that average denoising alone cannot achieve distributed RI sensing at the micron level. Meanwhile, with only wavelet denoising, the phase variations caused by the RI changes in the sensing region can be distinguished with a spatial resolution of 68 μm. However, due to the excessive phase noise in the subfigure of Fig. 5(b), there are still significant fluctuations in the demodulation signal of the sensing region. After average denoising (H=5) and wavelet denoising, phase fluctuation noise is well suppressed with a sensing spatial resolution of 340 μm. The phase variations along the fiber distance under different RI values can be clearly distinguished. The results are shown in Fig. 6(c).
A linear fitting curve between phase variations and the external RI change at the effective sensing region is shown in Fig. 6(d) with a linear fit of 0.997. The maximum standard deviation at each RI is 0.0067 rad, and the smoothed measurement sensitivity is 1328.6 rad/RIU, which is close to the simulation results in Fig. 1(b). To compare the proposed differential phase demodulation method with the traditional cross-correlation demodulation method, we apply cross-correlation demodulation to the data in Fig. 6. The linear fit of the proposed differential phase demodulation method is better than that of the cross-correlation algorithm. Meanwhile, the standard error of the smoothed differential phase demodulation method is lower than that of the cross-correlation demodulation algorithm. More importantly, compared to the cross-correlation demodulation method, the differential phase demodulation method improves the sensing spatial resolution by a factor of 10, reaching the level of hundreds of micrometers.

Conclusions

We present distributed RI sensing by tapered fiber based on differential relative phase OFDR. The principle of the proposed method is theoretically analyzed and the sensitivity of phase variations to external RI changes is simulated. In the experiment, we achieve distributed RI sensing with a spatial resolution of 340 μm after average denoising and wavelet smoothing. The effective sensing area is 45 mm. The linear fit between phase variations and external RI change is 0.997 and the maximum standard deviation at each RI is 0.0067 rad. The experimental RI sensitivity is 1328.6 rad/RIU, close to the simulation result of 1483.7 rad/RIU. The linear fit and standard deviation of the differential phase method are better than those of the cross-correlation algorithm. More importantly, the sensing spatial resolution is improved by a factor of 10.
The proposed differential relative phase method based on OFDR provides a foundation for achieving micrometer-level distributed biosensing.
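The relationship between the averaging window and the reported resolutions, and the way a sensitivity in rad/RIU is read from a linear fit, can be sketched as below. This is a sketch under stated assumptions: "average denoising (H=5)" is taken to mean averaging H adjacent sampling points, which is consistent with 68 μm × 5 = 340 μm but is not spelled out in the text, and the helper names are hypothetical. Wavelet denoising is not reproduced.

```python
import numpy as np

def average_adjacent(phase, h):
    """Average every h adjacent points (assumed form of 'average denoising')."""
    phase = np.asarray(phase, dtype=float)
    n = (phase.size // h) * h  # drop an incomplete trailing block
    return phase[:n].reshape(-1, h).mean(axis=1)

def fit_sensitivity(ri_change, phase_change):
    """Slope of a first-order least-squares fit, in rad/RIU."""
    slope, _intercept = np.polyfit(ri_change, phase_change, 1)
    return slope

raw_resolution_um = 68.0  # resolution with wavelet denoising only
H = 5                     # averaging window quoted in the text
smoothed_resolution_um = raw_resolution_um * H

# Relative gap between simulated and measured sensitivity from the text.
simulated, measured = 1483.7, 1328.6
relative_gap = (simulated - measured) / simulated

# Synthetic, noise-free line used only to exercise fit_sensitivity.
ri = np.linspace(0.0, 0.01, 6)
demo_slope = fit_sensitivity(ri, measured * ri)
```

Under this reading, the measured sensitivity is about 10% below the simulated value, which quantifies the "close to" statement in the text.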

Objective

Rocks have both mechanical and acoustic properties, and there exist inherent relations among them. The characteristics of ultrasonic waves (UWs) change when passing through rocks, and the UWs carry the structural information of rocks. Thus, the interior properties of rocks can be obtained by analyzing the received UWs. Nowadays, the hydraulic properties of rocks have become a new focus in the engineering field. For example, in oil and gas exploration, the water content affects the density and strength of reservoir rocks. The analysis results of the reservoir structure are directly affected by the varied amplitude and velocity of exploration waves. In rock engineering, such as solution mining, long-distance tunnels, and reservoir bank slopes, the pore water affects the stability of rocks and even threatens the safety of engineering projects. Therefore, it is significant to study the ultrasonic propagation characteristics of rocks during water absorption and softening.

At present, a common method to detect the water content is to employ the piezoelectric transducer (PZT). However, the PZT has some inherent drawbacks, such as large size, narrow bandwidth, and low resistance to electromagnetic disturbance, which decrease the detection resolution and cause large deviations. Optical fiber sensors feature compact size, high sensitivity, broadband response, and good resistance to electromagnetic interference. The most commonly employed optical fiber sensors in ultrasonic detection are the Fabry-Perot interferometer (FPI) and the fiber Bragg grating (FBG). FPI sensors usually suffer from low-reflectivity reflectors, and FBGs encounter difficulties when utilized with high-frequency UWs.
Fortunately, the optical fiber FPI constructed with two FBGs combines the advantages of both FPI and FBG and becomes the preferred solution in ultrasonic rock water content detection.

Methods

We propose a new ultrasonic method based on an FBG-FPI optical fiber sensor for water-content detection in rocks. In experiments, red sandstone is employed as the detection object (cylinder, 80 mm×100 mm). The 1 MHz longitudinal pulsed wave emitted by a PZT is adopted as the ultrasonic source. The transmitted UWs are detected by a pair of fiber gratings inscribed into a thin core fiber (TCF). The UW velocity is calculated from the transmission distance and the flight time inside the rock. The fast Fourier transform (FFT) is used to convert time-domain signals into frequency-domain ones. From the frequency-domain results, the main frequency and the normalized amplitude are extracted. By employing the fitted curve between the measured UW velocity and the rock water variation, the water content is reconstructed, and an average detection deviation is obtained. Additionally, the results measured by PZT are recorded for comparison in identical conditions.

Results and Discussions

The experimental results show that for longitudinal waves, the wave velocity of the red sandstone first decreases and then increases with rising water content, while the main frequency and corresponding amplitude both decrease with increasing water content. When the water content increases from 0 to 0.16%, the wave velocity measured by the optical fiber sensor (or PZT) decreases from 3440.86 m/s (or 3691.74 m/s) to 3389.83 m/s (or 3681.55 m/s). When the water content rises from 0.16% to 2.33%, the wave velocity measured by the optical fiber sensor (or PZT) increases from 3389.83 m/s (or 3681.55 m/s) to 4020.10 m/s (or 3980.10 m/s) (Fig. 5).
When the water content increases from 0 to 2.33%, the main frequency measured by the optical fiber sensor (or PZT) decreases from 1.000 MHz (or 0.987 MHz) to 0.933 MHz (or 0.887 MHz), and the normalized amplitude reduces from 1.000 (or 1.000) to 0.058 (or 0.040) (Fig. 6). The optical fiber sensor and PZT exhibit a similar response tendency with changing water content. After water content reconstruction, the average absolute deviation between the optical fiber sensor (or PZT) measurement results and the actual values is approximately 0.055 (or 0.069) (Fig. 7). The deviation of the FBG-FPI optical fiber sensor is smaller, which demonstrates the feasibility of optical fiber ultrasonic detection of rock water content.

Conclusions

A new optical fiber method is proposed for the ultrasonic detection of water content in rock mass. The time-domain and frequency-domain results are obtained using an FBG-FPI optical fiber sensor by the ultrasonic transmission method. In the comparative experiments, the FBG-FPI optical fiber sensor presents a similar response tendency to PZT with increasing water content. Additionally, the FBG-FPI optical fiber sensor has a smaller detection deviation than PZT. Furthermore, laser ultrasound can be employed as a broadband source to replace piezoelectric excitation, which, together with the broadband response of optical fiber sensors, helps to improve the detection resolution.
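The two quantities extracted in the Methods, wave velocity from distance and flight time and main frequency from the FFT, can be sketched as follows. The sampling rate, the synthetic Gaussian tone burst, the example distance and flight time, and the function names are illustrative assumptions and are not measured data from the study.

```python
import numpy as np

def wave_velocity(distance_m, flight_time_s):
    """Ultrasonic velocity from transmission distance and time of flight."""
    return distance_m / flight_time_s

def main_frequency(signal, fs):
    """Return (frequency, amplitude) of the strongest non-DC FFT bin."""
    spectrum = np.abs(np.fft.rfft(signal))
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    k = int(np.argmax(spectrum[1:])) + 1  # skip the DC bin
    return freqs[k], spectrum[k]

# Synthetic 1 MHz tone burst standing in for a received waveform.
fs = 50e6                      # assumed sampling rate, Hz
t = np.arange(5000) / fs       # 100 us record, 10 kHz bin spacing
burst = np.exp(-((t - 50e-6) / 5e-6) ** 2) * np.sin(2 * np.pi * 1e6 * t)

f_main, a_main = main_frequency(burst, fs)

# A normalized amplitude is the peak amplitude divided by a reference,
# e.g. the dry-rock measurement; here the burst is its own reference.
normalized_amplitude = a_main / a_main

# Illustrative numbers only: 0.08 m path and 20 us flight time.
v_demo = wave_velocity(0.08, 20e-6)
```

In practice the frequency resolution is `fs / N`, so the record length limits how finely shifts such as 1.000 MHz to 0.933 MHz can be resolved.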

Objective

The phase-only hologram (POH) is favored by many researchers in holographic display technology due to its high diffraction efficiency and the absence of a twin image. Common POH generation algorithms can be divided into iterative and non-iterative methods. Iterative methods need a large number of iterations to obtain the required POH and are therefore time-consuming. The error diffusion algorithm does not require iteration and greatly improves the computational speed of POHs. In the traditional error diffusion method, the amplitude of all pixels on the complex amplitude hologram (CAH) is set to 1, and this hologram and its CAH are adopted to compute the error, which is diffused on the CAH to generate the POH. However, since different target images have various amplitude distributions, directly setting the CAH amplitude to 1 is not suitable for all images. As a result, the quality of the generated POH is not high and the reconstructed image of the hologram cannot achieve a satisfactory display effect. A new error diffusion algorithm is therefore needed to improve the reconstructed image quality.

Methods

To improve the quality of the hologram reconstructed image generated by the error diffusion algorithm, we build a hologram error compensation model based on the bidirectional error diffusion algorithm by analyzing the relationship between the amplitude distribution of the target image and the generated hologram, and propose a new POH generation method. Firstly, the CAH of the target image is computed and its amplitude is set to 1. Secondly, the error between the POH and the original CAH is calculated by the error compensation model. Thirdly, the new error is adopted to generate a new POH by bidirectional error diffusion. Finally, a new error between this new POH and the original CAH is computed and the second error diffusion is carried out to obtain the final POH.
Numerical simulations are conducted to compare the hologram reconstruction effect of the two methods. Additionally, the normalized correlation (NC) coefficient and the structural similarity index measure (SSIM) are employed to quantitatively compare and analyze the hologram reconstruction results. Meanwhile, the experimental schematic diagram is drawn and the optical imaging system is built, with the proposed method verified by optical experiments.

Results and Discussions

Numerical simulations and optical experiments are carried out to verify the quality of hologram reconstructed images generated by different error diffusion methods. The simulation results of the two error diffusion methods are shown in Fig. 6. The images in the first column of Fig. 6 are reconstructed by the traditional method and contain obvious speckle noise. The images in the second and third columns of Fig. 6 are the reconstructed images of the holograms generated by the first improved error diffusion and the second error diffusion, respectively. Compared with the first column, the definition of the reconstructed images in the latter two columns is higher. The detail sections of the images in the third column contain more information than those in the second column. For example, the detail part of Fig. 6(c) shows more information on the pepper stalk than that of Fig. 6(b). Additionally, for the detail part of the pirate, the hair of the man in Fig. 6(l) is clearer than that in Fig. 6(k), and the lines of the hair are more obvious. The NC coefficient and the SSIM are adopted in Tables 2 and 3, respectively, to quantitatively evaluate the quality of the numerical simulation results of hologram reconstructed images. After the first error diffusion, the NC coefficient and the SSIM increase by 0.05-0.14 and 0.036-0.09 respectively. After the second error diffusion, the NC coefficient and the SSIM increase by 0.01-0.026 and 0.025-0.036 respectively.
Simulation results reveal that the reconstructed image quality of the proposed method is better than that of the original method. The reconstructed images of the proposed method are more similar to the original image and are more in line with the visual quality requirements of human eyes. The comparison results of optical experiments on hologram reconstructed images by the traditional error diffusion method and the proposed error diffusion method are shown in Fig. 8. Fig. 8 indicates that for different target images, the hologram reconstructed images of the proposed algorithm are displayed more clearly, whereas those of the traditional error diffusion algorithm are obviously noisy and blurred. In the detail comparison of the two methods, the sailboat and its reflection on the surface of the lake are clearly displayed in Fig. 8(e), while the sailboat reconstructed by the traditional method is blurred. The pattern on the long spike behind the man’s hat in Fig. 8(g) is clear, while the pattern on the long spike in Fig. 8(h) is not clearly seen. The optical experiment results are consistent with those of simulations. The simulation and experimental results show that the proposed error diffusion algorithm is effective in improving the quality of hologram reconstructed images, with the feasibility and superiority of the proposed method verified.

Conclusions

A bidirectional error diffusion compensation model is built by calculating the new error between CAH and POH. The hologram reconstructed images generated by the model contain more object light wave information. Additionally, error diffusion is applied twice to further improve the holographic display quality. Simulation results show that the reconstructed images generated by the improved method have higher resolution and more detailed information. The NC coefficient and SSIM serve as quantitative evaluation criteria for the simulation results.
In Tables 2 and 3, the mean NC and SSIM values of the proposed method are 0.9743 and 0.8630 respectively, 0.0927 and 0.0848 higher than those of the traditional error diffusion method. The optical experiment results show that the reconstructed images generated by the improved algorithm have higher image quality and resolution in detail. Simulations and experimental results prove the effectiveness and feasibility of the improved algorithm, and this algorithm has application significance for computational holographic display.
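For orientation, the conventional bidirectional error diffusion baseline that the Objective describes (amplitude forced to 1, error pushed onto unvisited neighbours) can be sketched as below. This is not the proposed compensation model, whose details are not given in this text. The Floyd-Steinberg weights 7/16, 3/16, 5/16, 1/16 and the serpentine scan order are assumptions commonly used for this technique, and the function name is hypothetical.

```python
import numpy as np

def bidirectional_error_diffusion(cah):
    """Convert a complex amplitude hologram (CAH) to a phase-only hologram.

    Rows are scanned alternately left-to-right and right-to-left. Each
    pixel is replaced by a unit-amplitude value with the same phase, and
    the complex error is diffused to neighbours not yet visited.
    """
    work = np.array(cah, dtype=complex)  # working copy, updated in place
    rows, cols = work.shape
    poh = np.zeros_like(work)
    for r in range(rows):
        step = 1 if r % 2 == 0 else -1   # scan direction for this row
        col_order = range(cols) if step == 1 else range(cols - 1, -1, -1)
        for c in col_order:
            value = work[r, c]
            poh[r, c] = np.exp(1j * np.angle(value))  # amplitude set to 1
            err = value - poh[r, c]
            # (row offset, column offset, weight), mirrored with the scan
            neighbours = (
                (0, step, 7 / 16),
                (1, -step, 3 / 16),
                (1, 0, 5 / 16),
                (1, step, 1 / 16),
            )
            for dr, dc, w in neighbours:
                rr, cc = r + dr, c + dc
                if 0 <= rr < rows and 0 <= cc < cols:
                    work[rr, cc] += w * err
    return poh

# Small random complex field used only to exercise the function.
rng = np.random.default_rng(1)
demo_cah = rng.normal(size=(6, 7)) + 1j * rng.normal(size=(6, 7))
demo_poh = bidirectional_error_diffusion(demo_cah)

# A field that is already unit-amplitude produces no error to diffuse.
flat = np.exp(1j * 0.3) * np.ones((4, 4))
flat_poh = bidirectional_error_diffusion(flat)
```

The pure Python loops keep the scan order explicit; a hologram-sized array would need a compiled or vectorized variant.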

Objective

X-ray computed tomography (CT) imaging technology, with nondestructive testing capabilities, has been widely used in industry, medicine, and other fields. When X-ray CT imaging is performed on samples containing high-absorption materials such as metals, the reconstructed images often contain metal artifacts due to beam hardening, scattering, and other factors, which severely degrade the quality of CT imaging. More recently, dual/multi-spectral CT has been proposed as an effective means of reducing beam-hardening and metal artifacts. However, it needs multiple scans of the object or specialized multi-spectral CT equipment. In this paper, we study the multi-material decomposition reconstruction technique with traditional CT scanned data to reduce beam-hardening and metal artifacts.

Methods

The problem of multi-material decomposition reconstruction in traditional single-spectral CT is inherently highly underdetermined, leading to non-unique solutions. To obtain physically meaningful solutions, it is necessary to incorporate additional constraints. In one type of scenario, the constituent materials of the scanned object are known and immiscible. The reconstructed image vectors are orthogonal if these materials are selected as the basis materials for multi-material decomposition reconstruction. Based on this finding, an orthogonal multi-material decomposition reconstruction technique (OMDRT) combined with the X-ray energy spectrum is proposed. In the proposed OMDRT method, the basis materials are sorted in decreasing order of their attenuation coefficients.
With triple-material decomposition reconstruction as an example, the proposed OMDRT method includes the following steps: 1) triple-material decomposition reconstruction; 2) generation of the first material’s mark images from the reconstructed image; 3) triple-material decomposition reconstruction with the first material’s mark images; 4) generation of the first and second materials’ mark images from the reconstructed images; 5) triple-material decomposition reconstruction with the first and second materials’ mark images. Steps 4) and 5) are performed iteratively. In steps 3) and 5), the weights for the decomposition reconstruction of basis materials from the projection data are adjusted based on the materials’ regional locations marked in the materials’ mark images.

Results and Discussions

The numerical phantom used in the simulation is shown in Fig. 2(c), and it includes three materials: water, bone (simulating the teeth), and AgHg (simulating the dental filling) with standard densities of 1 g/cm³, 1.92 g/cm³, and 12 g/cm³, respectively. If the mass attenuation coefficients of these three materials are used as basis functions, the density of the material region in the corresponding image is the standard density. We select AgHg as the first basis material, bone as the second basis material, and water as the third basis material. Using the simulated projections of the phantom without and with noise, density images are reconstructed with the proposed OMDRT. From the last rows in Fig. 4 and Fig. 11, we can see that the three materials are mostly separated after three iterations, and metal artifacts are largely corrected. Figs. 8 and 13 show that there are no obvious artifacts in either the density images or the virtual monochromatic image.
To quantitatively analyze the image quality, we calculate the peak signal-to-noise ratio (PSNR) and normalized mean absolute deviation (NMAD) between the resulting virtual monochromatic images and the actual virtual monochromatic images. From Fig. 7 and Fig. 12, we can observe that the proposed OMDRT method converges within several iterations. In summary, the experimental results show that the proposed method is effective in reducing metal artifacts.

Conclusions

For metal artifact correction in CT images of scanned objects with known and non-mixing materials, we propose an iterative OMDRT for traditional CT. The proposed method chooses known materials as the basis materials and adjusts the weights for the decomposition reconstruction of basis materials based on their regional location. We choose a dental phantom with dental fillings to verify the validity of the proposed method. The basis materials are separated correctly with our method for both simulated noise-free data and Poisson noise data. In addition, artifacts caused by metal implants are effectively reduced in both the triple-basis density images and the virtual monochromatic images synthesized from them. Moreover, the proposed method converges within a small number of iterations, which favors practical application. We verify the multi-material decomposition reconstruction technique of traditional CT. The experimental part does not utilize actual data and does not consider the effect of scattered photons, which are issues that require further research. During the experimental process, it is found that the accuracy of the spectrum significantly affects the effectiveness of the proposed method. How to acquire the spectrum quickly and accurately is also a challenge that needs to be addressed in practical experiments. Future work will cover the OMDRT of dual/multi-spectral CT and explore its effectiveness in other applications.
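The orthogonality property used by OMDRT and the two image-quality metrics can be illustrated on a toy phantom. This is a sketch under stated assumptions: the 8×8 layout, the mass attenuation coefficients, and the helper names are hypothetical, PSNR uses the reference maximum as peak value, and NMAD is taken as the sum of absolute differences divided by the sum of absolute reference values, a common definition that the text does not spell out.

```python
import numpy as np

def psnr(img, ref):
    """Peak signal-to-noise ratio in dB, peak taken from the reference."""
    mse = np.mean((img - ref) ** 2)
    if mse == 0:
        return float("inf")
    return 10.0 * np.log10(ref.max() ** 2 / mse)

def nmad(img, ref):
    """Normalized mean absolute deviation (assumed definition)."""
    return np.sum(np.abs(img - ref)) / np.sum(np.abs(ref))

def virtual_mono(density_images, mass_atten):
    """Virtual monochromatic image: sum of density times mass attenuation."""
    return sum(m * d for d, m in zip(density_images, mass_atten))

# Toy phantom with immiscible materials, so each pixel holds one material.
aghg_mask = np.zeros((8, 8), dtype=bool)
aghg_mask[3:5, 3:5] = True
bone_mask = np.zeros((8, 8), dtype=bool)
bone_mask[2:6, 2:6] = True
bone_mask &= ~aghg_mask
water_mask = ~(aghg_mask | bone_mask)

# Basis order follows the text: AgHg, bone, water (densities in g/cm^3).
densities = [12.0 * aghg_mask, 1.92 * bone_mask, 1.0 * water_mask]

# Disjoint supports make the density image vectors mutually orthogonal.
inner_products = [
    float(np.vdot(densities[i], densities[j]))
    for i in range(3) for j in range(i + 1, 3)
]

# Placeholder mass attenuation coefficients at one energy (cm^2/g).
mono_ref = virtual_mono(densities, (5.0, 0.3, 0.2))
mono_noisy = mono_ref + 0.01
```

Calling `psnr(mono_noisy, mono_ref)` and `nmad(mono_noisy, mono_ref)` then gives the two figures of merit for a perturbed image against the reference.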

Objective

Static volumetric 3D display technology displays 3D objects by volume pixels in 3D space, presenting real stereoscopic images. It can provide physiological and psychological depth cues for the human visual system to perceive 3D objects and can meet all-around observation needs. Additionally, it is the 3D display technology most likely to achieve high spatial resolution, multi-angle and simultaneous observation by multiple people, real-time interaction, and large size. Among such technologies, static volumetric 3D display based on dual-beam addressing has attracted much attention due to its unique advantages such as fine voxels, high spatial resolution, and easy realization of full-color display. In addition, the image does not shake and can be viewed without auxiliary equipment (such as glasses). By employing the energy of two infrared photons to pump a material into an excited energy level, the electrons in the excited energy level transition to a lower energy level and produce visible light, which is an effective way to achieve dual-beam addressing. The material that can implement this luminescence process is known as the two-step two-frequency (TSTF) up-conversion luminescence (UCL) material, and it has great potential for static volumetric 3D display technology due to its rapid response, high contrast, and high color purity. Despite this, the material has received few reports in volumetric 3D display applications because of its low UCL efficiency and small display volume. Additionally, the existing literature focuses on the properties of materials, with less introduction of 3D display systems. These two points greatly limit the application of, and research interest in, 3D volumetric display with TSTF UCL materials.
Thus, we develop a 3D imaging system based on the TSTF UCL mechanism of rare earth ions, and build a projection imaging optical path based on digital light processing (DLP) and a line laser shaping optical path based on a scanning galvanometer and cylindrical mirror. The display system is based on the TSTF UCL technology, which employs dual infrared laser excitation, and adopts a digital micromirror device (DMD) and scanning galvanometer to achieve rapid scanning of the image volume at high resolution. It has lower material performance requirements, lower cost, and a simpler implementation than the dual DLP imaging mode. This system is very suitable for the preliminary study of the stereoscopic display effect of TSTF UCL materials and also provides an effective idea for the imaging schemes of other addressing media materials. Additionally, the TSTF UCL material utilized for the display is a cyclohexane solution of core-shell NaYF4∶0.5%Er@NaGdF4∶2%Yb@NaYF4∶1%Er (NYF@NGF@NYF) nanocrystals, which has great potential for large-scale imaging.

Methods

We present a static volumetric 3D display system with wide wavelength and fast response, which includes three parts: display medium, control system, and laser system. In the experiment, NaYF4∶Er@NaGdF4∶Yb@NaYF4∶Er nanocrystals with two-step two-frequency up-conversion ability are selected as the imaging medium. The control system employs a 1024×768 DMD and a scanning galvanometer to project the infrared laser. With appropriately designed imaging optical software, the two-dimensional slices of the stereoscopic image are converted into the control signals of the DMD and the scanning galvanometer. The laser system adopts 1550 nm and 850 nm infrared lasers as the addressing and imaging light sources and adjusts the beam and optical path with appropriate parameters.

Results and Discussions

The upconversion emission spectra of NYF@NGF@NYF are measured [Fig. 3(b)].
After integrating the emission spectrum in the visible range (500-700 nm), the contrast is found to be I1550+850/(I1550+I850)=28.69, where I1550+850, I1550, and I850 are the emission intensities under co-excitation of the 1550 nm and 850 nm lasers, under excitation of the 1550 nm laser only, and under excitation of the 850 nm laser only, respectively. With self-made display materials and the self-built static volumetric display system, a variety of 3D images can be demonstrated at a refresh rate of 40 Hz, and the images are clear and bright (Fig. 7). The maximum luminous power of a single point measured by the power meter can reach 0.5 mW, the theoretical maximum resolution can be 30×1024×768, and the number of voxels is close to 23 million.

Conclusions

We report a two-beam scanning 3D imaging system based on the dual-frequency upconversion luminescence mechanism of rare earth ions. The DLP and scanning galvanometer in the optical path are controlled by the computer to build a 3D dynamic model in the liquid medium. The images presented by the system feature stability, high resolution, fast scanning speed, and a maximum of 23 million voxels, without observation angle limitations. The various parts of the system such as the light source, the light path, and the display medium are independent and can be quickly replaced and flexibly adjusted to adapt to the excitation properties of different materials. The material adopted for the display is a cyclohexane solution of the core-shell structure NYF@NGF@NYF nanocrystals, which has great potential for large-scale imaging. The system has reference significance for the development of volumetric 3D display and provides support for the preliminary research on the 3D display capability of display media such as TSTF materials. Meanwhile, this display system is easy to build and produces an obvious display effect, without requiring high material properties.
It supports preliminary research on upconversion materials for 3D display and serves as a reference for exploring large-size volumetric 3D display technology.
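The voxel count quoted above follows from multiplying the three resolution terms. The sketch below is only a quick arithmetic check. It assumes that the factor 30 is the number of depth slices and that 1024×768 is the DMD resolution per slice; the slice-rate estimate further assumes that every slice is drawn once per volume refresh, which the abstract does not state. All function names are illustrative.

```python
# Figures quoted in the abstract.
DEPTH_SLICES = 30                  # assumed: depth slices per displayed volume
DMD_WIDTH, DMD_HEIGHT = 1024, 768  # DMD resolution
REFRESH_HZ = 40                    # volume refresh rate


def voxel_count(slices, width, height):
    """Total number of addressable voxels in one volume."""
    return slices * width * height


def slice_rate(slices, refresh_hz):
    """Slices per second, assuming each slice is drawn once per volume refresh."""
    return slices * refresh_hz


def co_excitation_contrast(i_both, i_1550, i_850):
    """Contrast as defined in the text: I_1550+850 / (I_1550 + I_850)."""
    return i_both / (i_1550 + i_850)


print(voxel_count(DEPTH_SLICES, DMD_WIDTH, DMD_HEIGHT))  # 23592960, about 23 million
print(slice_rate(DEPTH_SLICES, REFRESH_HZ))              # 1200
```

Under these assumptions the pattern source would have to deliver on the order of 1200 slices per second to sustain the 40 Hz refresh rate.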

Objective: Defects such as debonding, bulges, pores, pits, delaminations, and inclusions are common in composites during manufacture and service. They not only reduce strength and stiffness but can also lead to structural failure. Reliable non-destructive testing methods are therefore required to assess the quality of composite materials. Long pulse thermography (LPT) is a full-field, non-contact, non-destructive testing method based on image visualization that provides an efficient way to assess defects. However, the defect visibility of LPT can be compromised by various factors such as experimental conditions, heat intensity, inherent material properties, and noise, and its effectiveness is limited by fuzzy edges and low-contrast defects. Consequently, enhancing defect visibility via signal processing is crucial for inspecting defects in composite materials using LPT. Thus, we propose an infrared image sequence processing method that utilizes Fourier transform, phase integration, and edge-preserving filters to enhance the quality of LPT detection results for composite materials. Meanwhile, a few latent variables that better reflect the defect information inside the specimen are obtained by transforming the surface temperature information during the cooling period. These variables can eliminate the influence of uneven heating and improve defect visualization. This method enables clear delineation of defect edges and accurate measurement of defect sizes. Our approach and findings are expected to contribute to qualitative and quantitative measurements in the non-destructive testing of composite structures. Methods: We propose a novel infrared image sequence processing algorithm to enhance the defect visibility of LPT. This approach comprises four steps: background uniformity processing, phase extraction, frequency domain integration, and image quantization.
First, thermal data are acquired after a square-pulse heating period and pre-processed to eliminate the inhomogeneity of the initial temperature distribution. Next, Fourier phase analysis is conducted to extract the phase information related to defects of varying depths and sizes. Then, the phase difference between defect and sound regions is integrated pixel-wise along the frequency axis to merge the defect information into a single image. Lastly, the integrated phase image is converted into an 8-bit visual image by applying edge-preserving filters and local adaptive Gamma correction. Results and Discussions: To evaluate the effectiveness of the proposed method, we conduct an experiment using a glass fiber reinforced polymer (GFRP) panel and compare the proposed method with various thermal signal processing methods. The efficacy of the proposed method is substantiated via qualitative and quantitative analysis, and the influence of acquisition parameters is also discussed. Figure 7 illustrates the raw infrared images captured at different instants. Deep defects have low contrast and fuzzy edges. The phase images obtained after background uniformity processing and Fourier transform are depicted in Figs. 9(a)-9(c). The visibility of defects in these phase images is improved compared to the raw images. However, deeper defects are more visible in low-frequency phase images, whereas shallower defects are more visible at higher frequencies. It is therefore challenging to identify all defects at various depths using only the phase image at a single frequency. To this end, the frequency domain integration method is utilized to combine the phase information of all defects, and the resulting phase integration image is then enhanced and quantized. The processed results are presented in Fig. 9(d), where all 20 defects of various depths and sizes are distinguishable.
The edges of the defects are visible, which facilitates subsequent image segmentation and edge extraction for accurate defect size measurement. Additionally, three traditional thermal signal processing algorithms, namely absolute thermal contrast (ATC), thermographic signal reconstruction (TSR), and principal component analysis (PCA), are used for comparison. Figures 11 and 12 highlight the superiority of the proposed method from qualitative and quantitative perspectives respectively. Analyzing the variation of the temperature difference over time and the signal-to-noise ratio across various sampling frequencies (Fig. 13) yields an optimal acquisition time of 30 s and a sampling frequency of 30 Hz, which balance computational efficiency and detection effectiveness. Conclusions: We employ a homemade infrared non-destructive testing system utilizing LPT for the experiments. A method for processing infrared image sequences based on Fourier transform, phase integration, and edge-preserving filters is developed to mitigate the influence of uneven heating and enhance the contrast of defects. The inspection results of the GFRP panel demonstrate that phase signals offer more information about defects, and that integrating phase information across all frequencies significantly enhances detection performance compared to a phase image at a single fixed frequency. Meanwhile, the accurate defect size measurement in segmented images further validates the reliability of the proposed method. An important advantage of this method is that only a few parameters need to be determined, specifically the optimum sampling time and frame rate. Other techniques such as ATC, TSR, or PCA can yield multiple output images (for example, principal component images) that require human visual interpretation. In contrast, the proposed method generates a single optimal detection image, which significantly improves the automation of detection.
Finally, our study provides guidance for practical non-destructive inspection of composite structures.
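The phase-extraction and frequency-integration steps can be illustrated for a single pixel. The following is a minimal sketch, not the authors' implementation: the function names, the choice to sum over the first `max_bin` non-zero frequency bins, the phase wrapping, and the synthetic exponential cooling curves are all assumptions made for illustration.

```python
import cmath
import math


def dft_phase(signal, k):
    """Phase (radians) of the k-th DFT bin of a real-valued temperature curve."""
    n = len(signal)
    coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                for i, x in enumerate(signal))
    return cmath.phase(coeff)


def integrated_phase_contrast(pixel_curve, sound_curve, max_bin):
    """Sum over bins 1..max_bin of the wrapped phase difference between a pixel
    and a reference (sound-region) curve. Illustrative definition only."""
    total = 0.0
    for k in range(1, max_bin + 1):
        diff = dft_phase(pixel_curve, k) - dft_phase(sound_curve, k)
        # Wrap the difference into (-pi, pi] before accumulating.
        total += math.atan2(math.sin(diff), math.cos(diff))
    return total


# Synthetic cooling curves (hypothetical): a defect region cools more slowly.
sound = [math.exp(-0.05 * i) for i in range(64)]
defect = [math.exp(-0.02 * i) for i in range(64)]

print(integrated_phase_contrast(sound, sound, 8))   # 0.0 for a sound pixel
print(integrated_phase_contrast(defect, sound, 8))  # non-zero for the defect pixel
```

Applying the same function to every pixel of the image sequence would give one integrated phase image, which is the input to the enhancement and quantization step described in the text.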

Objective: The rapid advancement of modern information technology has led to the increasing maturity of three-dimensional (3D) shape measurement technologies, which have been applied in biomedicine, cultural relic protection, human-machine interaction, and other fields. Structured light measurement is a prominent 3D measurement technology, distinguished by non-contact operation, high precision, and high speed, and it is one of the most extensively utilized and reliable 3D measurement technologies. The de Bruijn sequence, in which every subsequence of a fixed length is unique within the entire sequence, is widely employed in structured light coding. In discrete sequence coding, only one projection pattern coded by a de Bruijn sequence is required to measure the 3D information of an object, ensuring high measurement efficiency. In continuous phase-shifting coding, the de Bruijn sequence is applied to code the phase order to assist in the phase unwrapping process. However, the presence of identical consecutive codes in a de Bruijn sequence makes it challenging to precisely determine fringe numbers and positions within uniform color areas in captured images. To solve this problem, we propose a new type of de Bruijn sequence named the adjacency-hopping de Bruijn sequence. Such sequences guarantee that all neighboring codes are different while retaining the uniqueness of the subsequences. These two properties lay the foundation for accurate decoding and efficient matching. Meanwhile, an efficient and complete structured light coding and decoding process is devised by combining the adjacency-hopping de Bruijn sequence with the phase-shifting method to complete the 3D measurement task. Methods: According to graph theory, a de Bruijn sequence can be generated by traversing an Eulerian tour on a de Bruijn graph.
In this paper, we redefine the vertex and edge sets of the de Bruijn graph to construct a specialized oriented graph. This oriented graph ensures that adjacent codes of each vertex are different. As a result, a special type of de Bruijn sequence called an adjacency-hopping sequence, in which all neighboring codes are guaranteed to be different, can be generated by traversing an Eulerian tour on the oriented graph. This sequence is then employed to encode the phase orders of the phase-shifting fringes. Specifically, the phase-shifting images are embedded into the red channel, while the phase-order images encoded by the proposed adjacency-hopping sequence are embedded into the green and blue channels. In the decoding process, the color images captured by the camera are separated into channels to calculate the wrapped phase and decode the phase order respectively. Subsequently, a hash lookup algorithm is utilized for sequence matching, which determines the phase order. Finally, the 3D information is obtained. Results and Discussions: First, a comparative experiment is devised to compare classic de Bruijn sequence-based coding approaches (e.g., the original de Bruijn sequence, the multi-slit de Bruijn sequence, and the recursive binary XOR sequence) with the proposed adjacency-hopping de Bruijn sequence coding method, showcasing the advantages of the proposed sequence in discrete coding. The experimental results illustrate that, like the improved de Bruijn sequence-based approaches (i.e., the multi-slit de Bruijn sequence and the recursive binary XOR sequence), the proposed method effectively addresses the fringe separation problem encountered with the original de Bruijn sequence. Furthermore, compared with these improved methods, the proposed adjacency-hopping de Bruijn sequence coding method demonstrates higher matching efficiency and is more suitable for integration with phase-shifting measurements.
Subsequently, a series of practical measurement experiments are designed to further illustrate the processing flow of the proposed method and evaluate its performance in terms of stability, measurement efficiency, and accuracy. The experimental results demonstrate that the coding and decoding method presented in this paper exhibits good robustness in scenarios involving optical path occlusions. Hence, it can be applied to measure objects with complex surface structures. Moreover, the proposed coding and decoding method achieves measurement accuracy comparable to the selected comparative phase-shifting approaches while significantly reducing the number of projected patterns, resulting in improved measurement efficiency. Conclusions: We introduce a special de Bruijn sequence named the adjacency-hopping de Bruijn sequence. We theoretically prove the existence of such sequences and elucidate their generation method. The proposed sequence guarantees that all neighboring codes are different while preserving the uniqueness of subsequences. The proposed sequence is then employed to encode phase orders, and a novel phase-shifting-based coding method is introduced. On the projection side, the proposed method leads to a significant reduction in the number of projected patterns, thereby improving the projection efficiency. On the decoding side, each phase order-coded fringe can be separated accurately while guaranteeing efficient matching. The experimental results demonstrate that compared with the classical complementary Gray-code plus phase-shifting method and the multi-frequency heterodyne method, the proposed method achieves comparable measurement accuracy while reducing the number of projection patterns from 11 or 12 to 4.
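The graph construction described in the Methods can be sketched as follows. This is an illustrative reading of the abstract rather than the authors' algorithm: vertices are taken to be words of length n-1 with no equal neighboring symbols, edges are the corresponding words of length n, the Eulerian tour is found with Hierholzer's algorithm, and a dictionary stands in for the hash lookup. The function names and the alphabet encoding (integers 0..k-1) are assumptions.

```python
from itertools import product


def adjacency_hopping_sequence(k, n):
    """Cyclic sequence over symbols 0..k-1 in which neighboring symbols always
    differ and every admissible length-n window occurs exactly once."""
    if k < 3 or n < 2:
        raise ValueError("this sketch needs k >= 3 symbols and window length n >= 2")

    def no_repeat(word):
        return all(a != b for a, b in zip(word, word[1:]))

    # Unused outgoing edges per vertex: symbols that differ from the last one.
    remaining = {v: [s for s in range(k) if s != v[-1]]
                 for v in product(range(k), repeat=n - 1) if no_repeat(v)}

    # Hierholzer's algorithm (iterative) for an Eulerian circuit.
    start = next(iter(remaining))
    stack, tour = [start], []
    while stack:
        v = stack[-1]
        if remaining[v]:
            s = remaining[v].pop()
            stack.append(v[1:] + (s,))
        else:
            tour.append(stack.pop())
    tour.reverse()
    # Each traversed edge contributes the last symbol of its end vertex.
    return [v[-1] for v in tour[1:]]


def window_table(seq, n):
    """Hash table from each cyclic length-n window to its start index."""
    m = len(seq)
    return {tuple(seq[(i + j) % m] for j in range(n)): i for i in range(m)}


seq = adjacency_hopping_sequence(3, 3)
table = window_table(seq, 3)
print(len(seq), len(table))  # 12 12: every window is unique
```

With k symbols and window length n the sequence has k(k-1)^(n-1) elements, so three codes and a window of three give 12 uniquely identifiable positions; looking up an observed window in `table` returns its position in constant expected time.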

Objective: Digital image correlation (DIC) is a processing method commonly employed for image matching, and after nearly forty years of development its accuracy, efficiency, and practicality have improved significantly. As science and technology progress, DIC technology for three-dimensional (3D) measurement should also be economical and practical, providing a full range of functions with relatively simple devices. With the assistance of cameras and external equipment, DIC measurement systems can also realize multi-viewpoint and multi-directional measurements, a topic on which many scholars have carried out thorough research. Among these systems, the single-camera system has a flexibly adjustable field of view and a simple optical path, but suffers from poor stability and low accuracy. The multi-camera system requires multiple cameras, and its calibration process is complicated. Although the multi-camera measurement system can improve the range and accuracy of 3D measurement, it is difficult to apply widely in 3D full-field measurement because of its demanding environmental requirements and expensive cameras. Given the shortcomings of the existing single-camera and multi-camera systems, we propose a dual-camera 3D reconstruction method assisted by dual plane mirrors. Methods: We put forward a visual 3D measurement method assisted by dual plane mirrors, which analyzes the correspondences between the real corner points and their virtual images in the mirror to obtain the reflection transformation matrix. The virtual-to-real transformation of the object surface is then completed with the reflection transformation matrix, and 3D full-field measurement is finally realized.
Additionally, this method avoids spraying diffuse spots on the mirrors, which would take up part of the camera's spatial resolution, and makes the solution of the reflection transformation matrix easy and efficient. Firstly, the image information of the front and back surfaces of the object is acquired simultaneously by the dual-camera DIC measurement system (Fig. 1). Secondly, the calibration plate is placed in front of the plane mirror so that the dual cameras can observe the real calibration plate and its virtual image in the mirror at the same time (Fig. 4). The midpoint method based on the common perpendicular is adopted to determine the 3D coordinates of the corner points in space (Fig. 5), and the specific positions of the dual plane mirrors are determined by changing the position of the calibration plate several times. Finally, the reflection transformation matrix is solved from the mirror position equation, and the 3D reconstruction of the object is completed. Results and Discussions: To verify the accuracy of the proposed method, we conduct static and dynamic experiments on the measured parts. In the static experiments, the 3D contour of a game coin is reconstructed, and in the dynamic experiments, the thermal deformation of a five-sided aluminum column is investigated (Fig. 6). By employing the proposed method, the dual-mirror equation and reflection transformation matrix are obtained for a mirror angle of 108° (Table 1). The front and back contours of an ordinary game coin are reconstructed in three dimensions; the theoretical thickness of the game coin is 1.80 mm, and the measured thickness is around 1.75 mm (Fig. 9). The proposed method is compared with the reconstruction method of spraying diffuse spots on the mirror surface to verify its 3D reconstruction accuracy (Fig. 9), and its 3D reconstruction of the game coin is found to be better than that obtained by spraying diffuse spots on the mirror surface.
Because the proposed method does not spray diffuse spots on the mirror surface, it avoids taking up the spatial resolution of the camera and achieves higher accuracy. The 3D reconstruction and thermal deformation measurement results of the aluminum column are then presented. Firstly, the surface profile of the five-sided aluminum column is reconstructed by the proposed method (Fig. 10); the real height of the aluminum column is 70.00 mm±0.01 mm, and the average measured height is 70.0035 mm, which indicates good measurement accuracy. Secondly, the average height change of the five outer surfaces of the aluminum column during thermal deformation is obtained (Table 2). The thermal deformation displacement contour map of the outer surfaces is shown in Fig. 11, and the height change of the different surfaces during the cooling process is illustrated in Fig. 12. To demonstrate the accuracy of the proposed real-virtual transformation method more intuitively, we compare the deviations of the height change obtained by the two methods at each node (Fig. 13), which shows that the proposed method has higher measurement accuracy. Conclusions: We propose a dual plane mirror-assisted visual DIC 3D full-field measurement method. The static experiment results indicate that the proposed method reconstructs the game coin in 3D with higher accuracy than the method of spraying diffuse spots on mirrors. The dynamic thermal deformation experiments indicate that when the temperature of the five-sided aluminum column is reduced from 330 to 20 ℃, the height change of the outer surfaces of the column is basically consistent with the finite element simulation results, and the deviations of the height change measured by the proposed method are smaller than those of the method of spraying diffuse spots on mirrors.
Since the proposed method avoids spraying diffuse spots on the mirrors, which would take up the spatial resolution of the camera, it features simple operation, high measurement accuracy, and sound application prospects.
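Once a mirror plane is known, the virtual-to-real mapping is a reflection about that plane, which can be written as a 4×4 homogeneous matrix. The sketch below shows the standard form for a plane n·x + d = 0. It assumes this is what the abstract calls the reflection transformation matrix; the plane parameters and the function names are hypothetical, not values from the paper.

```python
import math


def reflection_matrix(normal, d):
    """4x4 homogeneous reflection about the plane normal . x + d = 0.
    The normal does not need to be unit length; it is normalized here."""
    norm = math.sqrt(sum(c * c for c in normal))
    n = [c / norm for c in normal]
    d = d / norm
    rows = [[(1.0 if i == j else 0.0) - 2.0 * n[i] * n[j] for j in range(3)]
            + [-2.0 * d * n[i]] for i in range(3)]
    rows.append([0.0, 0.0, 0.0, 1.0])
    return rows


def apply_transform(matrix, point):
    """Apply a 4x4 homogeneous transform to a 3D point."""
    h = (point[0], point[1], point[2], 1.0)
    return tuple(sum(matrix[i][j] * h[j] for j in range(4)) for i in range(3))


# Hypothetical mirror plane z = 1, i.e. normal (0, 0, 1) and d = -1.
mirror = reflection_matrix((0.0, 0.0, 1.0), -1.0)
virtual_point = (2.0, 3.0, 4.0)
real_point = apply_transform(mirror, virtual_point)
print(real_point)  # (2.0, 3.0, -2.0)
```

Because a reflection is its own inverse, the same matrix maps virtual points to real points and real points back to their mirror images, so one matrix per mirror is enough to bring the surfaces seen in both mirrors into the real coordinate frame.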

Objective: Monocular stereo vision offers lower cost and greater compactness than binocular stereo vision and has broader application prospects in space-constrained environments. Stereo vision systems based on a dual-biprism are widely employed in engineering measurement due to their adjustable field of view (FoV). Compared to other types of monocular vision systems, this configuration is compact and easy to adjust. Topography reconstruction and deformation measurement are the main applications of monocular vision systems. The error factors in the imaging system should be considered and evaluated to obtain high-precision measurement results. The acquisition and reconstruction of depth information are crucial for accuracy. The depth equation derived from the optical geometry can be adopted to analyze the factors affecting the reconstruction accuracy. Analyzing the influence of object distance and included angle on depth information and disparity through the depth equation can provide references for system layout and optimization. Additionally, the manually placed dual-biprism has offset and rotation, and the errors caused by these postures change the imaging model, which is derived for the ideal state. Therefore, model correction considering posture errors is important for high-precision imaging. Meanwhile, the dual-biprism posture also changes the FoV. A quantitative study of the FoV change caused by the posture helps arrange the system layout and object positions reasonably. Building on previous studies, and to make the monocular stereo system composed of a dual-biprism more applicable to high-precision topographic reconstruction and deformation measurement, we conduct an in-depth study of systematic errors and of the influence of prism postures on the FoV. Methods: The depth equation of the monocular vision system is derived using geometrical optics and the ray tracing method.
By making a small-angle assumption and ignoring the distance between the dual-biprism and the camera, a depth equation in terms of disparity, included angle, and object distance is obtained, as given in Eq. (8). By taking the partial derivatives of the depth equation, the relationships among object distance, included angle, and disparity are obtained, as given in Eqs. (9)-(10). The prism postures are classified into rotation around the base point and offset along the x- or z-direction, as shown in Fig. 3. According to the systematic error introduced by the prism postures, the imaging model is further modified. The modified model is then utilized to analyze the influence of prism postures on the FoV, as described in Eqs. (12)-(14). The experiments include verifying the derivation of the depth equation by taking the DIC results as true values, proving the correctness of the model by calculating the coordinates of the corner points, and investigating the FoV changes caused by the prism postures by matching the coordinates of corresponding points. First, the object distance is varied. Then, with the object distance kept unchanged, the included angle of the prism is varied, so that the influences of the object distance and the included angle on the disparity are evaluated separately. The DIC results are compared with the results of Eq. (8) to verify the correctness of the derivation. The dual-biprism is then moved according to the posture classifications, images are collected before and after each posture change, and the pixel coordinates of the corners over the whole field are extracted by the corner recognition method. The corner coordinates before the posture change and the offset distance are substituted into Eqs. (12)-(14). The calculated pixel coordinates are compared with the pixel coordinates identified above to verify the correctness of the equations.
Finally, the influence of postures on the FoV is determined by tracking the pixel coordinates of specific corners on the calibration plate before and after the prism posture changes. Results and Discussions: The depth equation for the monocular stereo vision system is given by Eq. (8). The influence of the parameters on the disparity is obtained by differentiating the depth equation with respect to the object distance and the included angle, which yields Eqs. (9) and (10). The depth equation shows that the disparity decreases nonlinearly with increasing object distance. As shown in Fig. 3, all three posture classifications change the standard virtual point model. The camera is calibrated after the device is placed to verify the validity of the derivation. As shown in Fig. 8, the FoV changes introduced by the postures are obtained by tracking the corner points extracted from the calibration board before and after the posture variations. When the prism group rotates 1° clockwise around the base point, the FoV in each channel shifts anticlockwise, which also causes the overall overlapping FoV to move in this direction. If only the right prism rotates 1° clockwise, the pixel coordinates of the virtual point in the right channel shift 57 pixels to the right, and this single-channel FoV offset reduces the overlapping FoV of the system. When the prism group is offset to the right by 1 mm along the x-direction, the same trend is observed. If only the right prism is offset, the virtual point shifts 49 pixels to the right. Meanwhile, when the prism group moves 1.4 mm along the positive z-direction, there is no significant FoV change in Fig. 8(c). The speckle images before and after the object distance and angle changes are captured, and the disparity map is obtained. Then, the depth map can be computed using Eq.
(8), from which the depth information of each point in the overlapping FoV can be obtained. The profile of the measured object is then obtained using the coordinate transformation method. The correctness of the derivation is verified by selecting three cross-sections on the object and comparing the profiles obtained by the two methods, as shown in Figs. 9-11. The corrected models considering the prism postures are given in Eqs. (12)-(14). The pixel coordinates of the corner points obtained before the posture change are substituted into Eqs. (12)-(14) to obtain the offset coordinates, which are compared with the pixel coordinates of the corner points extracted after the offset to verify the correctness of the corrected model, as shown in Fig. 12. Conclusions: The relationship between the depth equation and disparity in prism-splitting monocular stereo vision systems is studied, with the system error introduced by the dual-biprism postures taken into account. The depth equation of the system is derived by combining the virtual point model and the ray tracing method. By differentiating the depth equation, the influence of object distance and included angle on disparity is studied. The results show that the disparity of the image increases as the object distance decreases and the included angle increases. The imaging model is modified to address the system errors introduced by postures. The experimental results show that the pixel coordinates of virtual points can be accurately calculated using the modified model with known offset distances of the dual-biprism and world coordinates of spatial points, which determines the mapping relationship of spatial points for different prism postures.
Finally, rotation of the dual-biprism or an offset perpendicular to the optical axis of the camera changes the FoV of the system, while a posture change along the optical axis of the camera only reduces the imaging range. These results provide references for high-precision reconstruction and deformation measurement with monocular stereo vision systems composed of optical elements.

Objective: Position and pose are two basic parameters describing the position and attitude of an object in space, and they are extensively researched in robot grasping, automatic driving, and industrial inspection. Traditional attitude measurement systems, such as mechanical systems, laser trackers, and inertial units, have drawbacks including the need for contact measurement, susceptibility to interference from ambient light, and complex optical paths. As an optical measurement method, the digital image correlation (DIC) method features strong anti-interference ability, a simple optical path, and non-contact operation. It has been widely employed in the measurement of displacement, strain, and mechanical properties, but has received less attention in attitude measurement. At present, there is a position measurement system based on the DIC method that adopts the space vector method. This method requires the inverse tangent function when calculating rotation angles, which leads to large errors and requires more calculation points. To deal with the shortcomings of the traditional position measurement system, we propose a position estimation system based on the three-dimensional digital image correlation (3D-DIC) method to measure multiple position parameters of a rigid body in space. Meanwhile, a new position solution method is put forward to address the weaknesses of the existing space vector method, and a new matching calculation method is proposed to solve the problem of DIC in measuring large rotation angles. Methods: The mathematical model of the position solution based on singular value decomposition (SVD) is first derived, and then the position measurement system is built for experiments. The specimen, which has been sprayed with a speckle pattern, is fixed on a moving platform and moves together with the platform.
After the binocular camera is calibrated, it captures the image sequences before and after the specimen movement, and 3D-DIC is employed to match these image sequences and thus obtain the spatial three-dimensional coordinates of the calculation points. After a set of 3D coordinates of the calculation points before and after the movement is obtained, the SVD method is adopted to solve for the rotation matrix and translation matrix, from which the motion parameters of the specimen are obtained. To address the large errors of 3D-DIC in measuring large rotational deformation, we propose a matching calculation method that adds intermediate images. The feasibility and accuracy of the proposed method are verified by translational and rotational degree-of-freedom experiments. Finally, a set of accuracy comparison experiments with the space vector method is conducted to assess whether the proposed method performs better. Results and Discussions: The experiments confirm that the position estimation system based on the proposed 3D digital image correlation method can measure multiple position parameters of a rigid body in space. The absolute errors of the three translational degrees of freedom in the transverse, longitudinal, and vertical directions are less than 0.07 mm (Fig. 6), and the absolute errors of the yaw and roll angles are less than 0.02° when the rotation angle is less than 10° (Figs. 7 and 9). Meanwhile, the proposed matching calculation method of adding intermediate images also reduces the error of large-angle measurement (Fig. 10).
The accuracy comparison experiments with the existing space vector method show that the proposed method has smaller errors in rotation angle measurement and requires fewer calculation points (Table 2). Conclusions: We establish a position estimation system based on the 3D digital image correlation method, and propose a position solution method based on singular value decomposition. The 3D coordinates of the calculation points are obtained from image sequences captured before and after the motion of the measured object and are used for the position solution, so that multiple position parameters of the spatial rigid body are measured. The results of the three translational degree-of-freedom experiments validate that the proposed 3D-DIC-based position measurement system is suitable for measuring the spatial translational degrees of freedom of the rigid body. Additionally, the large-angle measurement experiments verify that the improved matching calculation method, which adds intermediate images, clearly improves large-angle measurements, and the yaw angle and roll angle results show that the measurement system is applicable to rotational degree-of-freedom measurements at both small and large angles. Compared with the traditional position estimation system, our method features high accuracy, a simple optical path, and non-contact operation. Compared with the existing space vector method, our method has smaller measurement errors in both yaw and roll angles, and the required number of calculation points is greatly reduced. In summary, the position and pose measurement system based on our 3D digital image correlation method is suitable for spatial rigid body position measurement, and its high measurement accuracy meets the measurement requirements.
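For reference, the SVD-based rigid-body solution has a well-known closed form, commonly called the Kabsch algorithm. The abstract does not give the authors' derivation, so the block below is the standard formulation under assumed notation: $p_i$ and $q_i$ are the 3D coordinates of the $N$ calculation points before and after the motion, $R$ is the rotation matrix, and $t$ is the translation (the "translation matrix" of the text).

```latex
\begin{aligned}
\bar{p} &= \frac{1}{N}\sum_{i=1}^{N} p_i, \qquad
\bar{q} = \frac{1}{N}\sum_{i=1}^{N} q_i, \\
H &= \sum_{i=1}^{N} (p_i - \bar{p})\,(q_i - \bar{q})^{\mathsf{T}} = U \Sigma V^{\mathsf{T}}, \\
R &= V \,\operatorname{diag}\!\bigl(1,\; 1,\; \det(V U^{\mathsf{T}})\bigr)\, U^{\mathsf{T}}, \\
t &= \bar{q} - R\,\bar{p}.
\end{aligned}
```

The determinant term keeps $R$ a proper rotation rather than a reflection when the point set is noisy or nearly planar. The yaw and roll angles then follow from the entries of $R$ once a rotation-order convention is fixed, which the abstract does not specify.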

Objective: In recent years, research on silicon-based thermo-optic (TO) devices has deepened and the devices have become more complex, and realizing higher-performance silicon-based TO devices is the main purpose of our research. Many kinds of silicon-based optical switches have been developed so far. We design a Mach-Zehnder interferometer (MZI)-type silicon-based TO switch with large bandwidth, simple structure, and high robustness. Both N×N TO-integrated switches and electro-optic (EO)-integrated switches have been widely studied. The EO switch has a fast switching speed (nanosecond level), but its crosstalk and insertion loss are high due to the free carrier absorption effect. In contrast, TO switches excel in maintaining low loss and low crosstalk, but their switching response time is intrinsically limited, typically to the microsecond scale. Optical switches in hybrid network systems are typically used to handle high-capacity and high-bandwidth optical communication services, making TO switches the preferred choice due to their low loss and low crosstalk. Methods: The MZI-type 1×8 silicon-based TO switch proposed and fabricated in this paper is composed of one 2×2 MZI and six 1×2 MZI switching units connected in a binary tree structure. The switch has two input ports and eight output ports: the first stage comprises one 2×2 MZI switching unit, the second stage comprises two 1×2 MZI switching units, and the third stage comprises four 1×2 MZI switching units. The optical signals are controlled by the phase shift of the TO-tuned phase shifter, which directs the light to the destination branch waveguide and thus realizes the optical switching function. The coupler and phase shifter in the optical switching unit are optimized by using the finite-difference time-domain method and the particle swarm optimization algorithm to improve the switching performance and reduce the chip size.
The long connecting waveguide is designed as a wide waveguide of 2 to reduce the waveguide transmission loss. For packaging, the optical switch chip is end-face coupled to a 14-channel optical fiber array and fixed with an ultraviolet curing adhesive. In addition, a multi-channel voltage source is designed, which mainly consists of a CPU, an LM324 op-amp, analogue switches, and four DAC modules. This multi-channel voltage source has 32 selectable ports, and the synchronous switching of the optical switching ports is achieved by simultaneously regulating the voltages of the thermal phase shifters at all stages through the host computer. The results show that the optical switch achieves low on-chip insertion loss, low crosstalk, and a short response time.Results and DiscussionsThe experiments demonstrate that the designed and fabricated 1×8 TO switch performs well in all aspects. Its average on-chip insertion loss is about 1.1 dB (Fig. 8). The fiber-to-fiber loss varies between paths because the connecting waveguide lengths differ between paths and the coupling efficiencies to the fiber differ between ports. The crosstalk of its eight output ports is less than -23.6 dB (Fig. 9), and the crosstalk is the leading cause of switching signal degradation. The 2×2 MMI coupler can reduce crosstalk. The response time of the switch is less than 60 μs (Fig. 11) because the silica cladding layer at the bottom is thicker than the buffer layer. The thermal conductivity of silica is about 1/100 of that of silicon, so after heating, the heat diffuses more slowly from the core layer to the substrate, and the falling-edge time is longer than the rising-edge time. 
When the frequency of the input electrical signal is high, the response of the TO phase shifter can no longer follow the electrical signal due to its limited response bandwidth, and the response is no longer square-wave in character. However, the rise and fall times become shorter. In addition, the response time of the switch differs between phase shifters due to manufacturing process errors.ConclusionsOur proposed 1×8 optical switch chip is based on a tree structure consisting of one 2×2 MZI and six 1×2 MZIs, with a TiN heater on the upper/lower arm of each thermal phase shifter. The switch chip is fabricated on an SOI platform by using a CMOS-compatible process and has a size of 1.75 mm×3 mm. The chip exhibits an on-chip insertion loss of about 1.1 dB at the operating wavelength of 1550 nm, a crosstalk of less than -23.6 dB, a switch response time of less than 60 μs, and an average switch power dissipation of about 34.09 mW. The experimental results show that the 1×8 TO switch has the advantages of compactness, low loss, and low crosstalk.
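
The three-stage binary tree described above can be sketched as a small routing helper. This is an illustration only: the port numbering (0 to 7), the unit indices, and the function names are hypothetical, and `mzi_split` assumes an idealized lossless MZI with two 50:50 couplers, which is simpler than the fabricated 1×2 and 2×2 units.

```python
import math

N_STAGES = 3  # 1 -> 2 -> 4 switching units, giving eight output ports

def route_to_port(port):
    """Return the (stage, unit, branch) settings that steer light to `port`.

    Hypothetical numbering: output ports are 0..7, units are counted from 0
    within each stage, and branch 0/1 selects the upper/lower output of a unit.
    Only three of the seven units lie on the active path.
    """
    if not 0 <= port < 2 ** N_STAGES:
        raise ValueError("port must be in the range 0..7")
    path = []
    for stage in range(1, N_STAGES + 1):
        shift = N_STAGES - stage
        unit = port >> (shift + 1)      # which unit of this stage is on the path
        branch = (port >> shift) & 1    # which output of that unit is selected
        path.append((stage, unit, branch))
    return path

def mzi_split(delta_phi):
    """(bar, cross) power fractions of an ideal MZI with two 50:50 couplers."""
    bar = math.sin(delta_phi / 2.0) ** 2
    cross = math.cos(delta_phi / 2.0) ** 2
    return bar, cross

def crosstalk_db(leak_power, signal_power):
    """Crosstalk in dB as the ratio of leaked power to signal power."""
    return 10.0 * math.log10(leak_power / signal_power)
```

For example, `route_to_port(5)` selects unit 0 in the first stage, unit 1 in the second, and unit 2 in the third; in this idealized model a phase shift of π moves the light from the cross output to the bar output of a unit.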

ObjectiveBased on the light-matter interaction, optical tweezers exert strong forces on micro- and nano-sized particles by momentum transfer in a non-contact and non-damaging manner. In bioscience, optical tweezers have been applied to capture bacteria and non-invasively manipulate organelles within a single living cell, and they have become an effective way to detect and control micro- and nano-scale objects. However, optically capturing and manipulating individual particles smaller than the light wavelength remains a major challenge. To overcome the light diffraction limit, the localized surface plasmons (LSPs) in metal nanostructures can effectively focus and confine the propagating light within the nanometer scale, with better spatial locality and higher local field intensity. A plasmonic well is generated by the coupling of electromagnetic waves at the metal-dielectric interface through surface plasmon excitation. This unique electromagnetic pattern can confine light beyond the diffraction limit and causes the electromagnetic field to decay exponentially from the metal-dielectric interface. These two properties are crucial for optical capture applications: the former significantly reduces the volume of the captured object, and the latter enhances the optical forces through the field intensity gradient. Meanwhile, we study the distribution of the electric field and the Poynting vector diagram under different polarization modes, as well as the distribution of the optical force and potential well generated by the interaction between nanoparticles and the scattered near field of the coaxial structure. Finally, our study provides a new way for capturing and manipulating micro- and nano-particles and living cells in a low-concentration solution.MethodsThe coaxial structure consists of a silicon concentric ring and a silver layer. 
The laser source illuminates the coaxial structure vertically from the bottom, and the electric field distributions and Poynting vector diagrams under linearly polarized and circularly polarized light sources and for different coaxial apertures are calculated by the finite-difference time-domain (FDTD) method. Additionally, the Maxwell stress tensor method is adopted to calculate the optical force generated by the interaction of dielectric particles with a 10 nm radius with the structure in free space. The optical trapping performance of the structure in two light modes is studied. The optical trapping force and potential well distribution of particles in the x-y and y-z planes under different light source modes are calculated. The force analysis of the nanoparticles shows that the positive force Fx and negative force F-x in both the x-y and y-z planes cause the coaxial structure to trap the particles at the center of the potential well.Results and DiscussionsThe coaxial structure is coupled with the optical field to enhance the transmission and the local electromagnetic field (Fig. 1). The transmission characteristic curve shifts to the right as the height of the coaxial structure h increases. When h=150 nm, the transmission spectrum has two peaks at the wavelengths of 540 nm and 750 nm. These peaks arise when light waves enter the coaxial aperture of finite thickness and are fully reflected, which produces the Fabry-Perot resonance mode. Under the action of the circularly polarized light field, the coaxial plasmonic structure generates a vortex light field of spin energy flow (Fig. 6). The resulting vortex field affects the spin angular momentum carried by the circularly polarized light as it passes through the coaxial aperture, and the orbital angular momentum is transmitted in the near field through the spin-orbit interaction of the electromagnetic field. 
The optical trapping force and potential well distribution of particles in the x-y and y-z planes under different light source modes are calculated (Figs. 7 and 8). The dual trapping potential well is generated to expand the trapping region, overcome the Brownian diffusion of nanoparticles in a low-concentration solution, and improve the trapping efficiency, which provides a new way for capturing and manipulating micro- and nano-particles and living cells in a low-concentration solution.ConclusionsThe distributions of the electric field and the Poynting vector diagrams under linearly polarized and circularly polarized light sources are calculated by the FDTD method, and the optical trapping performance for nanoparticles under the two light modes is studied. The results show that the transmission reaches its maximum at 750 nm, and the depth of the potential well reaches 17kBT under the incident light intensity of 1 μW/μm². Meanwhile, the circularly polarized light forms a vortex field with a potential well depth of 8kBT above the structure, which overcomes the Brownian diffusion of nanoparticles in a low-concentration solution and improves the capture efficiency. The results can be employed both as optical tweezers for manipulating nanoparticles and as semiconductor structures for laser emission.
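
The link between a calculated force profile and a potential well depth quoted in units of kBT can be sketched as follows. This is a minimal illustration under stated assumptions, not the workflow of the study: the function names are hypothetical, the force is assumed to be sampled along a single line, the temperature is assumed to be 300 K, and the potential is obtained by trapezoidal integration of U(x) = -∫F dx with the first sample taken as the zero reference.

```python
import numpy as np

K_B = 1.380649e-23  # Boltzmann constant in J/K

def potential_from_force(x, force):
    """Potential energy U(x) = -integral of F dx, with U(x[0]) = 0 (trapezoid rule)."""
    x = np.asarray(x, dtype=float)
    force = np.asarray(force, dtype=float)
    steps = 0.5 * (force[1:] + force[:-1]) * np.diff(x)
    return np.concatenate(([0.0], -np.cumsum(steps)))

def well_depth_in_kbt(x, force, temperature=300.0):
    """Depth of the potential minimum below the reference point x[0], in units of kB*T."""
    u = potential_from_force(x, force)
    return float((u[0] - u.min()) / (K_B * temperature))
```

A depth of roughly 10 kBT is often quoted as a rule of thumb for stable trapping against Brownian motion, which gives a sense of scale for the 17kBT and 8kBT values reported above.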

ObjectiveAs the main pumping light source of solid-state lasers, fiber lasers, and fiber amplifiers, the 976 nm diode laser has been widely used in industrial processing, medical treatment, communication, and other fields. As an important pumping light source of the erbium-doped fiber amplifier, a 976 nm fundamental transverse mode diode laser can achieve high-efficiency coupling with fiber, improve the output performance of the fiber amplifier, and effectively reduce its cost. It plays a very important role in promoting the application of erbium-doped fiber amplifiers in fiber communication and other fields. However, since the ridge waveguide in the ridge diode laser uses a weak refractive index guiding mechanism to suppress higher-order transverse modes, it is greatly affected by the lateral diffusion of carriers and the self-heating effect, which eventually weakens the mode guiding of the ridge waveguide and increases the far-field angle. To further improve the coupling efficiency of diode lasers in fiber laser pumping applications and reduce the application cost of fiber lasers, it is still important to realize fundamental transverse mode ridge diode lasers with a low far-field divergence angle and low power consumption.MethodsUsing InGaAs/GaAsP as the strain-compensated quantum well structure, with wide-bandgap GaAsP as the barrier material, can effectively reduce the carrier leakage of the quantum well, provide strain compensation for the InGaAs compressive strain quantum well, and improve the epitaxial growth quality. To achieve low loss, high output optical power, and a low far-field angle, we optimize the thickness of the waveguide layers by using an asymmetric large optical cavity epitaxial structure. The doping concentrations of the epitaxial layer materials are optimized to reduce the series resistance of the device and thus achieve high power, high conversion efficiency, and low far-field output of the ridge diode laser. 
To achieve fundamental transverse mode output, we use the effective refractive index method to design and study the width and depth of the ridge waveguide and map the optical field distribution inside the device. Finally, according to the technological conditions, a ridge waveguide structure with a width of 5 μm and a depth of 0.85 μm is selected.Results and DiscussionsAfter the laser chip is designed and fabricated, the output performance of the device is tested at 25 ℃. The device threshold current is about 51.2 mA, and a maximum continuous output power of 422 mW can be obtained at 550 mA injection current, with a maximum electro-optic conversion efficiency of 53.6% (Fig. 3). The peak wavelength is 973.3 nm at 550 mA injection current, and the corresponding spectral line width (FWHM) is 1.4 nm. When the injection current is 500 mA, the vertical and horizontal far-field distributions of the device are measured (Fig. 5), and the corresponding vertical and horizontal far-field divergence angles (FWHM) are 24.15° and 3.9°, respectively. This indicates that the fabricated ridge diode laser has a good fundamental transverse mode property, which is conducive to improving the coupling efficiency between the diode laser and the fiber. Subsequently, we analyze the temperature characteristics of the device at the operating temperature of 15-35 ℃ and obtain a relatively high characteristic temperature of about 194 K. This is because wide-bandgap GaAsP is added to both sides of the InGaAs quantum well as the barrier layers, and the larger band offset between the two materials can better suppress carrier leakage in the quantum well. As a result, the injection current utilization, the luminous efficiency, and the temperature stability of the laser device are improved. 
Similarly, the horizontal far-field changes little at 15 ℃, 25 ℃, and 35 ℃, and the corresponding horizontal far-field divergence angles are 3.45°, 3.90°, and 3.90°, respectively, which is conducive to increasing the coupling efficiency in optical pumping applications (Fig. 7).ConclusionsWe design and fabricate a 976 nm fundamental transverse mode ridge diode laser. To improve the conversion efficiency of the device, we use high-bandgap GaAsP materials on both sides of the InGaAs compressive strain quantum well as tensile strain barriers to improve the internal gain of the device, inhibit the carrier leakage in the quantum well, and improve the current utilization rate. In addition, by using an asymmetric large optical cavity epitaxial structure design, we optimize the waveguide layer thickness and doping concentration in the device epitaxial structure to reduce the far-field divergence angle and achieve high-efficiency output. A 976 nm strain-compensated low far-field fundamental transverse mode ridge diode laser with a ridge width of 5 μm and a cavity length of 1500 μm is fabricated. At the operating temperature of 25 ℃, a maximum continuous output power of 422 mW can be obtained, the peak wavelength is 973.3 nm, and the spectral line width (FWHM) is 1.4 nm. When the injection current is 500 mA, the vertical and horizontal far-field divergence angles (FWHM) are 24.15° and 3.90°, respectively. In the operating temperature range of 15-35 ℃, the far-field divergence angle of the ridge diode laser is tested and analyzed. It is found that the far-field distribution of the device changes little with the increase in the test temperature, and the far-field divergence angle can be kept small.
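
The characteristic temperature quoted above is commonly extracted from the empirical relation I_th(T) = I_0·exp(T/T_0), where a larger T_0 means a threshold current that is less sensitive to temperature. The following is a minimal sketch of such a fit, assuming this exponential model; the function name is hypothetical, and apart from the 51.2 mA threshold at 25 ℃, any currents passed to it in the example are synthetic values rather than measured data.

```python
import numpy as np

def characteristic_temperature(temperatures_k, threshold_currents):
    """Fit ln(I_th) = ln(I_0) + T / T_0 by least squares and return T_0 in kelvin.

    Assumes the empirical exponential model I_th(T) = I_0 * exp(T / T_0).
    Temperatures are in kelvin; currents may be in any consistent unit.
    """
    t = np.asarray(temperatures_k, dtype=float)
    ln_i = np.log(np.asarray(threshold_currents, dtype=float))
    slope, _intercept = np.polyfit(t, ln_i, 1)
    return float(1.0 / slope)
```

As a usage example, threshold currents generated from the model at 15 ℃, 25 ℃, and 35 ℃ with T_0 = 194 K and 51.2 mA at 25 ℃ differ by only about 5% per 10 K, which illustrates how weak the temperature dependence is for a characteristic temperature of this size.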